Upload 7 files

- .gitattributes +1 -0
- ISIC_0000060_downsampled.jpg +0 -0
- ISIC_0000146.jpg +3 -0
- ISIC_0068279.jpg +0 -0
- age_approx_scaler.joblib +3 -0
- app.py +140 -0
- best_epoch_weights.pth +3 -0
- requirements.txt +7 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+ISIC_0000146.jpg filter=lfs diff=lfs merge=lfs -text

ISIC_0000060_downsampled.jpg

ADDED

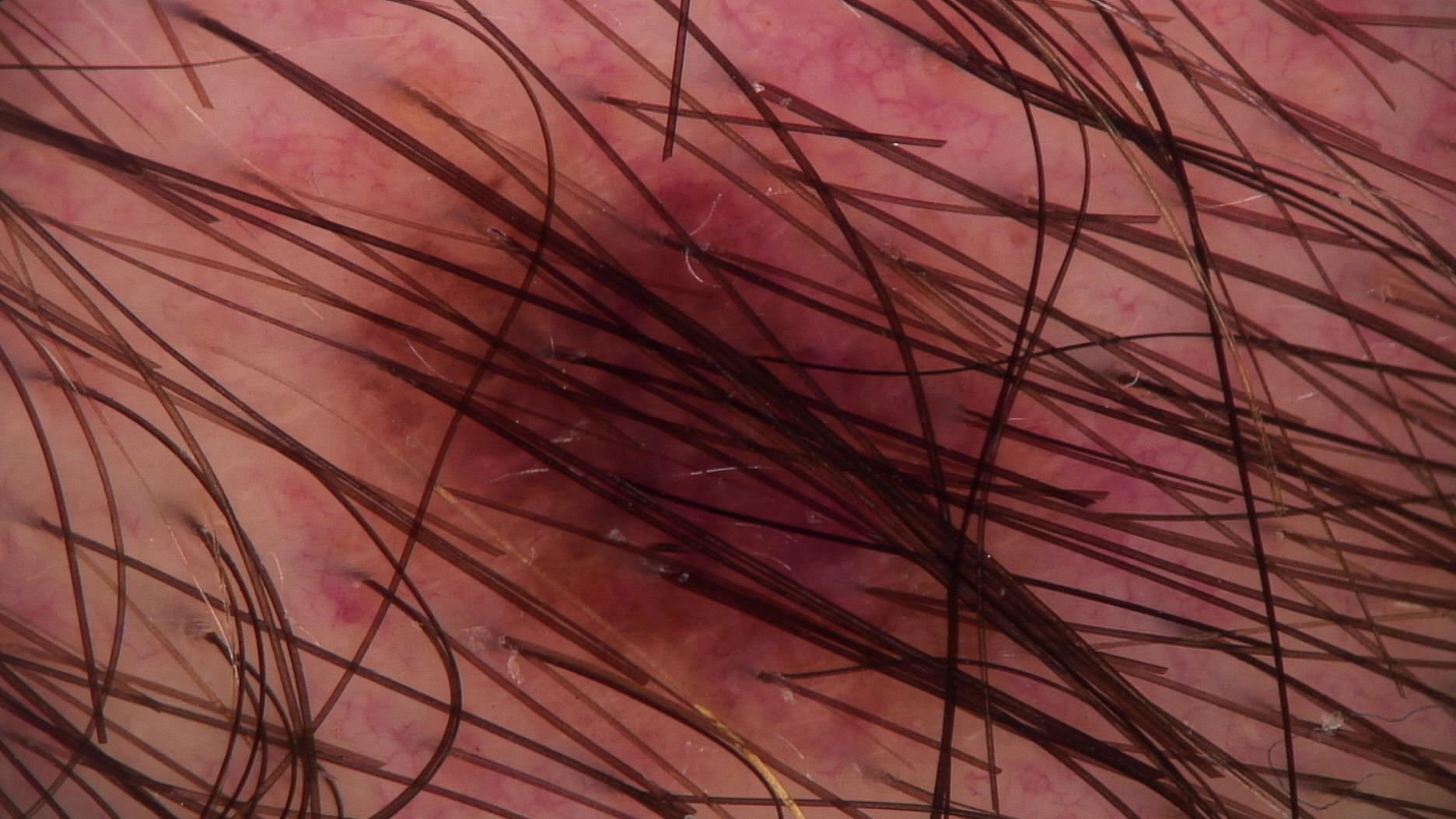

ISIC_0000146.jpg

ADDED

Stored with Git LFS.

ISIC_0068279.jpg

ADDED

age_approx_scaler.joblib

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b3f5634fa2508e531c4196c06207c60c40de6f224e6ef73afc9b935242b18c2c
+size 623
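The three added lines above are a Git LFS pointer, not the scaler itself: each line is a key, a single space, and a value. As a minimal sketch of reading such a pointer (the helper name `parse_lfs_pointer` and the returned dictionary keys are hypothetical, not part of this commit), the fields could be extracted like this:

```python
def parse_lfs_pointer(text):
    """Parse the text of a Git LFS pointer file into its fields.

    Hypothetical helper for illustration; it assumes the three keys
    shown in the diff above (version, oid, size) are present.
    """
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", split on the first space.
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid value has the form "<hash algorithm>:<hex digest>".
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algorithm": algorithm,
        "oid": digest,
        "size": int(fields["size"]),  # size of the real file, in bytes
    }


# Pointer text copied from the age_approx_scaler.joblib diff above.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:b3f5634fa2508e531c4196c06207c60c40de6f224e6ef73afc9b935242b18c2c
size 623
"""

pointer = parse_lfs_pointer(POINTER)
print(pointer["oid_algorithm"], pointer["size"])
```

The `size` field describes the real file that LFS downloads on checkout, so it can serve as a quick check that the scaler was fetched rather than left as a pointer.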

app.py

ADDED

|

@@ -0,0 +1,140 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from PIL import Image

|

| 3 |

+

from joblib import load

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

import torch.nn as nn

|

| 7 |

+

import torch.nn.functional as F

|

| 8 |

+

from torchvision.models import efficientnet_b0

|

| 9 |

+

import torchvision.transforms as transforms

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class MultiModalClassifier(nn.Module):

|

| 13 |

+

def __init__(self, num_classes, num_features):

|

| 14 |

+

super(MultiModalClassifier, self).__init__()

|

| 15 |

+

# Load pre-trained EfficientNet model

|

| 16 |

+

efficientnet = efficientnet_b0(pretrained=True)

|

| 17 |

+

|

| 18 |

+

# Remove the last classifier layer

|

| 19 |

+

self.efficientnet_features = nn.Sequential(*list(efficientnet.children())[:-1])

|

| 20 |

+

|

| 21 |

+

# Define additional feature dimensions

|

| 22 |

+

self.age_dim = 1 # assuming age is a single scalar value

|

| 23 |

+

self.anatom_site_dim = 1 # assuming anatomical site is a single scalar value

|

| 24 |

+

self.sex_dim = 1 # assuming sex is a single scalar value

|

| 25 |

+

|

| 26 |

+

# Fully connected layers for classification

|

| 27 |

+

self.fc1 = nn.Linear(num_features + self.age_dim + self.anatom_site_dim + self.sex_dim, 256)

|

| 28 |

+

self.fc2 = nn.Linear(256, num_classes)

|

| 29 |

+

|

| 30 |

+

# Dropout layer

|

| 31 |

+

self.dropout = nn.Dropout(p=0.5)

|

| 32 |

+

|

| 33 |

+

def forward(self, image, age, anatom_site, sex):

|

| 34 |

+

# Forward pass through the pre-trained EfficientNet model

|

| 35 |

+

image_features = self.efficientnet_features(image)

|

| 36 |

+

image_features = F.avg_pool2d(image_features, image_features.size()[2:]).view(image.size(0), -1) # Flatten

|

| 37 |

+

|

| 38 |

+

# Reshape age, anatom_site, and sex tensors

|

| 39 |

+

age = age.view(-1, 1) # Reshape to [batch_size, 1]

|

| 40 |

+

anatom_site = anatom_site.view(-1, 1) # Reshape to [batch_size, 1]

|

| 41 |

+

sex = sex.view(-1, 1) # Reshape to [batch_size, 1]

|

| 42 |

+

# Concatenate image features with additional features

|

| 43 |

+

additional_features = torch.cat((age, anatom_site, sex), dim=1)

|

| 44 |

+

combined_features = torch.cat((image_features, additional_features), dim=1)

|

| 45 |

+

|

| 46 |

+

# Fully connected layers for classification

|

| 47 |

+

combined_features = F.relu(self.fc1(combined_features))

|

| 48 |

+

combined_features = self.dropout(combined_features)

|

| 49 |

+

output = self.fc2(combined_features)

|

| 50 |

+

|

| 51 |

+

return output

|

| 52 |

+

|

| 53 |

+

# Initialize the model

|

| 54 |

+

num_classes = 1 # Assuming binary classification

|

| 55 |

+

num_features = 1280 # Number of features extracted by EfficientNet-B0

|

| 56 |

+

model = MultiModalClassifier(num_classes, num_features)

|

| 57 |

+

|

| 58 |

+

# Load the saved model state dictionary

|

| 59 |

+

model.load_state_dict(torch.load(r'best_epoch_weights.pth',map_location=torch.device('cpu')))

|

| 60 |

+

|

| 61 |

+

# Set the model to evaluation mode

|

| 62 |

+

model.eval()

|

| 63 |

+

|

| 64 |

+

# Load the age scaler

|

| 65 |

+

age_scaler = load(r'age_approx_scaler.joblib')

|

| 66 |

+

|

| 67 |

+

# Define transforms for the data (adjust as necessary)

|

| 68 |

+

test_transform = transforms.Compose([

|

| 69 |

+

transforms.Resize((224, 224)),

|

| 70 |

+

transforms.ToTensor(),

|

| 71 |

+

])

|

| 72 |

+

|

| 73 |

+

diagnosis_map = {0: 'benign', 1: 'malignant'}

|

| 74 |

+

|

| 75 |

+

# Define mapping dictionaries for sexes and anatom_sites

|

| 76 |

+

sexes_mapping = {'male': 0, 'female': 1}

|

| 77 |

+

|

| 78 |

+

# Define mapping dictionary for anatom_site_general

|

| 79 |

+

anatom_site_mapping = {

|

| 80 |

+

'torso': 0,

|

| 81 |

+

'lower extremity': 1,

|

| 82 |

+

'head/neck': 2,

|

| 83 |

+

'upper extremity': 3,

|

| 84 |

+

'palms/soles': 4,

|

| 85 |

+

'oral/genital': 5,

|

| 86 |

+

}

|

| 87 |

+

|

| 88 |

+

def predict(image, age, gender, anatom_site):

|

| 89 |

+

|

| 90 |

+

image = Image.fromarray(image)

|

| 91 |

+

# Apply transformations to the image

|

| 92 |

+

image = test_transform(image)

|

| 93 |

+

image = image.float()

|

| 94 |

+

image = image.unsqueeze(0) # Add batch dimension

|

| 95 |

+

|

| 96 |

+

sex = torch.tensor([[sexes_mapping[gender.lower()]]], dtype=torch.float32)

|

| 97 |

+

anatom_site = torch.tensor([[anatom_site_mapping[anatom_site]]], dtype=torch.float32)

|

| 98 |

+

|

| 99 |

+

# Scale the age using the loaded scaler

|

| 100 |

+

scaled_age = age_scaler.transform([[age]])

|

| 101 |

+

# Convert scaled age to a tensor

|

| 102 |

+

age_tensor = torch.tensor(np.array(scaled_age), dtype=torch.float32)

|

| 103 |

+

|

| 104 |

+

# Forward pass

|

| 105 |

+

output = model(image, age_tensor, anatom_site, sex)

|

| 106 |

+

|

| 107 |

+

# Apply sigmoid to the output (since it's a binary classification)

|

| 108 |

+

output_sigmoid = torch.sigmoid(output)

|

| 109 |

+

# Get the predicted class (0 or 1)

|

| 110 |

+

predicted_class = (output_sigmoid > 0.5).float()

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

return f"The predicted_class is a {diagnosis_map[int(predicted_class)]}."

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

description_html = """

|

| 117 |

+

Fill in the required parameters and click 'classify'.

|

| 118 |

+

"""

|

| 119 |

+

|

| 120 |

+

example_data = [

|

| 121 |

+

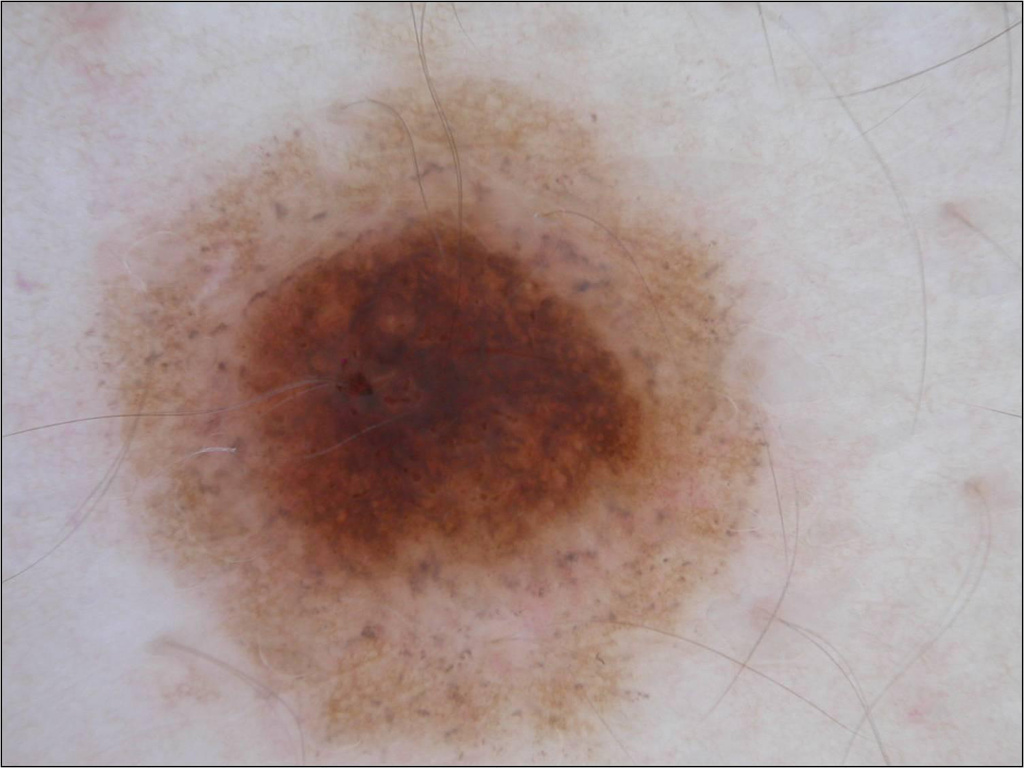

["ISIC_0000060_downsampled.jpg", 35, "Female", "torso"],

|

| 122 |

+

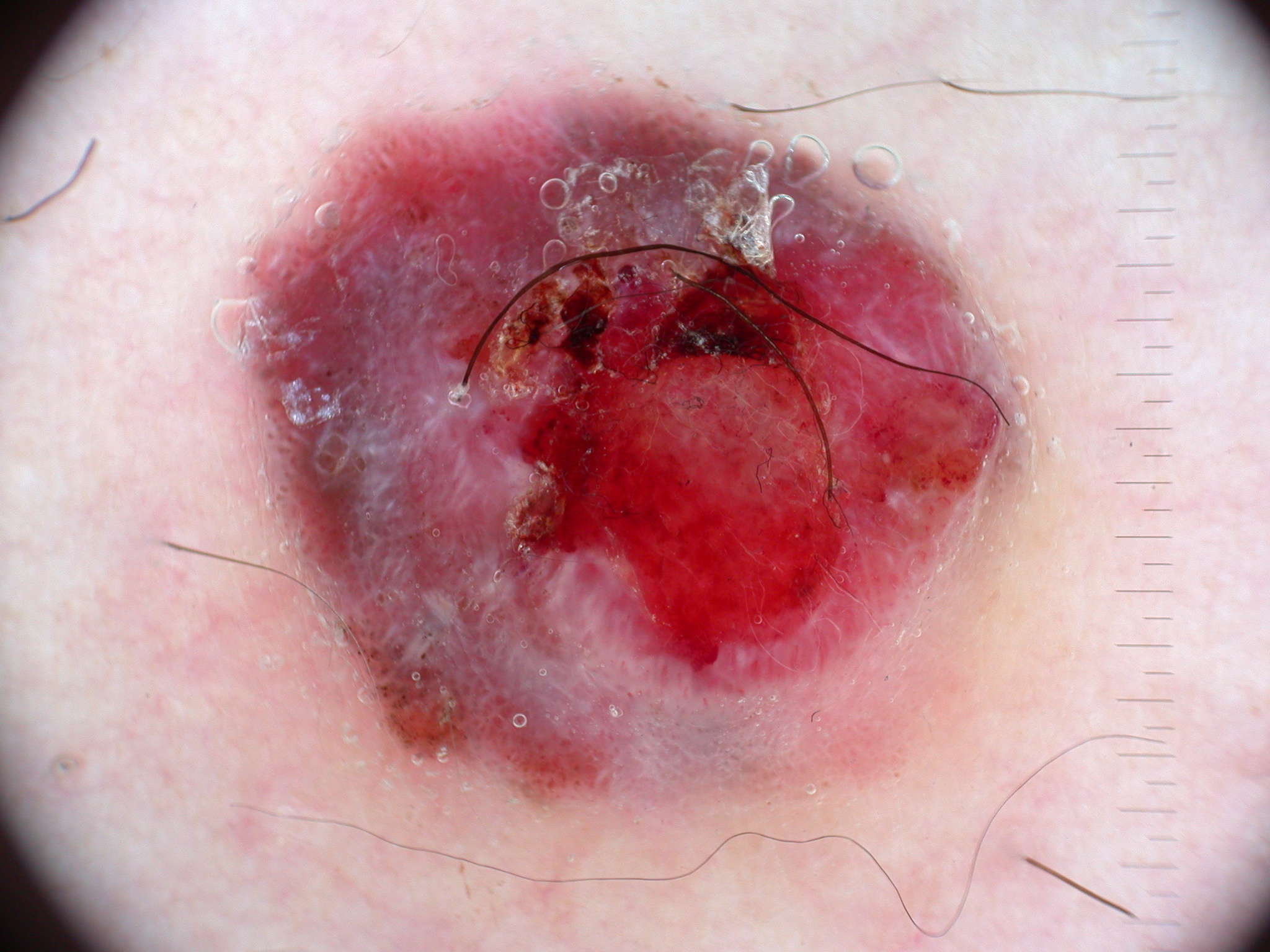

["ISIC_0068279.jpg", 45.0, "Female", "head/neck"]

|

| 123 |

+

]

|

| 124 |

+

|

| 125 |

+

inputs = [

|

| 126 |

+

"image",

|

| 127 |

+

gr.Number(label="Age", minimum=0, maximum=120),

|

| 128 |

+

gr.Dropdown(['Male', 'Female'], label="Gender"),

|

| 129 |

+

gr.Dropdown(['torso', 'lower extremity', 'head/neck', 'upper extremity', 'palms/soles', 'oral/genital'], label="Anatomical Site")

|

| 130 |

+

]

|

| 131 |

+

|

| 132 |

+

gr.Interface(

|

| 133 |

+

predict,

|

| 134 |

+

inputs,

|

| 135 |

+

outputs = gr.Textbox(label="Output", type="text"),

|

| 136 |

+

title="Skin Cancer Diagnosis",

|

| 137 |

+

description=description_html,

|

| 138 |

+

allow_flagging='never',

|

| 139 |

+

examples=example_data

|

| 140 |

+

).launch()

|
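The metadata encoding and the final decision step in `predict` can be exercised without the model weights. The following is a dependency-free sketch, not the committed code: the helper names `encode_metadata` and `classify_logit` are hypothetical, the three mappings are copied from `app.py`, and `math.exp` stands in for `torch.sigmoid` on a single logit.

```python
import math

# Mappings copied from app.py.
diagnosis_map = {0: "benign", 1: "malignant"}
sexes_mapping = {"male": 0, "female": 1}
anatom_site_mapping = {
    "torso": 0,
    "lower extremity": 1,
    "head/neck": 2,
    "upper extremity": 3,
    "palms/soles": 4,
    "oral/genital": 5,
}


def encode_metadata(gender, anatom_site):
    """Hypothetical helper: turn the two dropdown values into floats.

    Mirrors predict(): gender is lower-cased before lookup, while the
    anatomical site is looked up exactly as given.
    """
    sex_code = float(sexes_mapping[gender.lower()])
    site_code = float(anatom_site_mapping[anatom_site])
    return sex_code, site_code


def classify_logit(logit, threshold=0.5):
    """Hypothetical helper: sigmoid plus threshold on one raw logit.

    Mirrors the end of predict(): the class is 1 only when the sigmoid
    output is strictly greater than the threshold.
    """
    probability = 1.0 / (1.0 + math.exp(-logit))
    return diagnosis_map[int(probability > threshold)]


print(encode_metadata("Female", "head/neck"))
print(classify_logit(2.0), classify_logit(-2.0))
```

Because the comparison is strict, a logit of exactly 0 (probability 0.5) maps to `benign`, which matches the `output_sigmoid > 0.5` test in `app.py`.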

best_epoch_weights.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4ab0618a9c77f2d85403b2c29988d3273ad991519678ceadc7ee16242bb5bb5f
+size 17659032

requirements.txt

ADDED

@@ -0,0 +1,7 @@
+gradio==3.39.0
+Pillow==9.5.0
+torch==2.2.1
+torchvision==0.17.1
+joblib==1.3.2
+numpy==1.26.2
+scikit-learn==1.2.2