Spaces:

Runtime error

Runtime error

Commit

·

1a1ee1f

1

Parent(s):

799a750

app

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE +674 -0

- README.md +224 -1

- app.py +104 -0

- cfg/yolor_csp.cfg +1376 -0

- cfg/yolor_csp_x.cfg +1576 -0

- cfg/yolor_p6.cfg +1760 -0

- cfg/yolor_w6.cfg +1760 -0

- cfg/yolov4_csp.cfg +1334 -0

- cfg/yolov4_csp_x.cfg +1534 -0

- cfg/yolov4_p6.cfg +2260 -0

- cfg/yolov4_p7.cfg +2714 -0

- darknet/README.md +63 -0

- darknet/cfg/yolov4-csp-x.cfg +1555 -0

- darknet/cfg/yolov4-csp.cfg +1354 -0

- darknet/new_layers.md +329 -0

- data/coco.names +80 -0

- data/coco.yaml +18 -0

- data/hyp.finetune.1280.yaml +28 -0

- data/hyp.scratch.1280.yaml +28 -0

- data/hyp.scratch.640.yaml +28 -0

- figure/implicit_modeling.png +0 -0

- figure/performance.png +0 -0

- figure/schedule.png +0 -0

- figure/unifued_network.png +0 -0

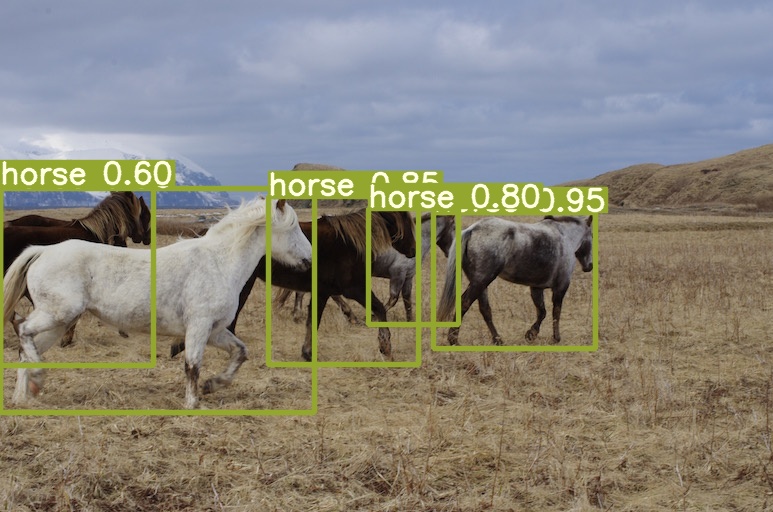

- inference/images/horses.jpg +0 -0

- inference/output/horses.jpg +0 -0

- models/__init__.py +1 -0

- models/__pycache__/__init__.cpython-37.pyc +0 -0

- models/__pycache__/models.cpython-37.pyc +0 -0

- models/export.py +68 -0

- models/models.py +761 -0

- requirements.txt +33 -0

- scripts/get_coco.sh +27 -0

- scripts/get_pretrain.sh +7 -0

- test.py +344 -0

- train.py +619 -0

- tune.py +619 -0

- utils/__init__.py +1 -0

- utils/__pycache__/__init__.cpython-37.pyc +0 -0

- utils/__pycache__/__init__.cpython-38.pyc +0 -0

- utils/__pycache__/datasets.cpython-37.pyc +0 -0

- utils/__pycache__/datasets.cpython-38.pyc +0 -0

- utils/__pycache__/general.cpython-37.pyc +0 -0

- utils/__pycache__/google_utils.cpython-37.pyc +0 -0

- utils/__pycache__/google_utils.cpython-38.pyc +0 -0

- utils/__pycache__/layers.cpython-37.pyc +0 -0

- utils/__pycache__/metrics.cpython-37.pyc +0 -0

- utils/__pycache__/parse_config.cpython-37.pyc +0 -0

- utils/__pycache__/plots.cpython-37.pyc +0 -0

- utils/__pycache__/torch_utils.cpython-37.pyc +0 -0

LICENSE

ADDED

|

@@ -0,0 +1,674 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

GNU GENERAL PUBLIC LICENSE

|

| 2 |

+

Version 3, 29 June 2007

|

| 3 |

+

|

| 4 |

+

Copyright (C) 2007 Free Software Foundation, Inc. <https://fsf.org/>

|

| 5 |

+

Everyone is permitted to copy and distribute verbatim copies

|

| 6 |

+

of this license document, but changing it is not allowed.

|

| 7 |

+

|

| 8 |

+

Preamble

|

| 9 |

+

|

| 10 |

+

The GNU General Public License is a free, copyleft license for

|

| 11 |

+

software and other kinds of works.

|

| 12 |

+

|

| 13 |

+

The licenses for most software and other practical works are designed

|

| 14 |

+

to take away your freedom to share and change the works. By contrast,

|

| 15 |

+

the GNU General Public License is intended to guarantee your freedom to

|

| 16 |

+

share and change all versions of a program--to make sure it remains free

|

| 17 |

+

software for all its users. We, the Free Software Foundation, use the

|

| 18 |

+

GNU General Public License for most of our software; it applies also to

|

| 19 |

+

any other work released this way by its authors. You can apply it to

|

| 20 |

+

your programs, too.

|

| 21 |

+

|

| 22 |

+

When we speak of free software, we are referring to freedom, not

|

| 23 |

+

price. Our General Public Licenses are designed to make sure that you

|

| 24 |

+

have the freedom to distribute copies of free software (and charge for

|

| 25 |

+

them if you wish), that you receive source code or can get it if you

|

| 26 |

+

want it, that you can change the software or use pieces of it in new

|

| 27 |

+

free programs, and that you know you can do these things.

|

| 28 |

+

|

| 29 |

+

To protect your rights, we need to prevent others from denying you

|

| 30 |

+

these rights or asking you to surrender the rights. Therefore, you have

|

| 31 |

+

certain responsibilities if you distribute copies of the software, or if

|

| 32 |

+

you modify it: responsibilities to respect the freedom of others.

|

| 33 |

+

|

| 34 |

+

For example, if you distribute copies of such a program, whether

|

| 35 |

+

gratis or for a fee, you must pass on to the recipients the same

|

| 36 |

+

freedoms that you received. You must make sure that they, too, receive

|

| 37 |

+

or can get the source code. And you must show them these terms so they

|

| 38 |

+

know their rights.

|

| 39 |

+

|

| 40 |

+

Developers that use the GNU GPL protect your rights with two steps:

|

| 41 |

+

(1) assert copyright on the software, and (2) offer you this License

|

| 42 |

+

giving you legal permission to copy, distribute and/or modify it.

|

| 43 |

+

|

| 44 |

+

For the developers' and authors' protection, the GPL clearly explains

|

| 45 |

+

that there is no warranty for this free software. For both users' and

|

| 46 |

+

authors' sake, the GPL requires that modified versions be marked as

|

| 47 |

+

changed, so that their problems will not be attributed erroneously to

|

| 48 |

+

authors of previous versions.

|

| 49 |

+

|

| 50 |

+

Some devices are designed to deny users access to install or run

|

| 51 |

+

modified versions of the software inside them, although the manufacturer

|

| 52 |

+

can do so. This is fundamentally incompatible with the aim of

|

| 53 |

+

protecting users' freedom to change the software. The systematic

|

| 54 |

+

pattern of such abuse occurs in the area of products for individuals to

|

| 55 |

+

use, which is precisely where it is most unacceptable. Therefore, we

|

| 56 |

+

have designed this version of the GPL to prohibit the practice for those

|

| 57 |

+

products. If such problems arise substantially in other domains, we

|

| 58 |

+

stand ready to extend this provision to those domains in future versions

|

| 59 |

+

of the GPL, as needed to protect the freedom of users.

|

| 60 |

+

|

| 61 |

+

Finally, every program is threatened constantly by software patents.

|

| 62 |

+

States should not allow patents to restrict development and use of

|

| 63 |

+

software on general-purpose computers, but in those that do, we wish to

|

| 64 |

+

avoid the special danger that patents applied to a free program could

|

| 65 |

+

make it effectively proprietary. To prevent this, the GPL assures that

|

| 66 |

+

patents cannot be used to render the program non-free.

|

| 67 |

+

|

| 68 |

+

The precise terms and conditions for copying, distribution and

|

| 69 |

+

modification follow.

|

| 70 |

+

|

| 71 |

+

TERMS AND CONDITIONS

|

| 72 |

+

|

| 73 |

+

0. Definitions.

|

| 74 |

+

|

| 75 |

+

"This License" refers to version 3 of the GNU General Public License.

|

| 76 |

+

|

| 77 |

+

"Copyright" also means copyright-like laws that apply to other kinds of

|

| 78 |

+

works, such as semiconductor masks.

|

| 79 |

+

|

| 80 |

+

"The Program" refers to any copyrightable work licensed under this

|

| 81 |

+

License. Each licensee is addressed as "you". "Licensees" and

|

| 82 |

+

"recipients" may be individuals or organizations.

|

| 83 |

+

|

| 84 |

+

To "modify" a work means to copy from or adapt all or part of the work

|

| 85 |

+

in a fashion requiring copyright permission, other than the making of an

|

| 86 |

+

exact copy. The resulting work is called a "modified version" of the

|

| 87 |

+

earlier work or a work "based on" the earlier work.

|

| 88 |

+

|

| 89 |

+

A "covered work" means either the unmodified Program or a work based

|

| 90 |

+

on the Program.

|

| 91 |

+

|

| 92 |

+

To "propagate" a work means to do anything with it that, without

|

| 93 |

+

permission, would make you directly or secondarily liable for

|

| 94 |

+

infringement under applicable copyright law, except executing it on a

|

| 95 |

+

computer or modifying a private copy. Propagation includes copying,

|

| 96 |

+

distribution (with or without modification), making available to the

|

| 97 |

+

public, and in some countries other activities as well.

|

| 98 |

+

|

| 99 |

+

To "convey" a work means any kind of propagation that enables other

|

| 100 |

+

parties to make or receive copies. Mere interaction with a user through

|

| 101 |

+

a computer network, with no transfer of a copy, is not conveying.

|

| 102 |

+

|

| 103 |

+

An interactive user interface displays "Appropriate Legal Notices"

|

| 104 |

+

to the extent that it includes a convenient and prominently visible

|

| 105 |

+

feature that (1) displays an appropriate copyright notice, and (2)

|

| 106 |

+

tells the user that there is no warranty for the work (except to the

|

| 107 |

+

extent that warranties are provided), that licensees may convey the

|

| 108 |

+

work under this License, and how to view a copy of this License. If

|

| 109 |

+

the interface presents a list of user commands or options, such as a

|

| 110 |

+

menu, a prominent item in the list meets this criterion.

|

| 111 |

+

|

| 112 |

+

1. Source Code.

|

| 113 |

+

|

| 114 |

+

The "source code" for a work means the preferred form of the work

|

| 115 |

+

for making modifications to it. "Object code" means any non-source

|

| 116 |

+

form of a work.

|

| 117 |

+

|

| 118 |

+

A "Standard Interface" means an interface that either is an official

|

| 119 |

+

standard defined by a recognized standards body, or, in the case of

|

| 120 |

+

interfaces specified for a particular programming language, one that

|

| 121 |

+

is widely used among developers working in that language.

|

| 122 |

+

|

| 123 |

+

The "System Libraries" of an executable work include anything, other

|

| 124 |

+

than the work as a whole, that (a) is included in the normal form of

|

| 125 |

+

packaging a Major Component, but which is not part of that Major

|

| 126 |

+

Component, and (b) serves only to enable use of the work with that

|

| 127 |

+

Major Component, or to implement a Standard Interface for which an

|

| 128 |

+

implementation is available to the public in source code form. A

|

| 129 |

+

"Major Component", in this context, means a major essential component

|

| 130 |

+

(kernel, window system, and so on) of the specific operating system

|

| 131 |

+

(if any) on which the executable work runs, or a compiler used to

|

| 132 |

+

produce the work, or an object code interpreter used to run it.

|

| 133 |

+

|

| 134 |

+

The "Corresponding Source" for a work in object code form means all

|

| 135 |

+

the source code needed to generate, install, and (for an executable

|

| 136 |

+

work) run the object code and to modify the work, including scripts to

|

| 137 |

+

control those activities. However, it does not include the work's

|

| 138 |

+

System Libraries, or general-purpose tools or generally available free

|

| 139 |

+

programs which are used unmodified in performing those activities but

|

| 140 |

+

which are not part of the work. For example, Corresponding Source

|

| 141 |

+

includes interface definition files associated with source files for

|

| 142 |

+

the work, and the source code for shared libraries and dynamically

|

| 143 |

+

linked subprograms that the work is specifically designed to require,

|

| 144 |

+

such as by intimate data communication or control flow between those

|

| 145 |

+

subprograms and other parts of the work.

|

| 146 |

+

|

| 147 |

+

The Corresponding Source need not include anything that users

|

| 148 |

+

can regenerate automatically from other parts of the Corresponding

|

| 149 |

+

Source.

|

| 150 |

+

|

| 151 |

+

The Corresponding Source for a work in source code form is that

|

| 152 |

+

same work.

|

| 153 |

+

|

| 154 |

+

2. Basic Permissions.

|

| 155 |

+

|

| 156 |

+

All rights granted under this License are granted for the term of

|

| 157 |

+

copyright on the Program, and are irrevocable provided the stated

|

| 158 |

+

conditions are met. This License explicitly affirms your unlimited

|

| 159 |

+

permission to run the unmodified Program. The output from running a

|

| 160 |

+

covered work is covered by this License only if the output, given its

|

| 161 |

+

content, constitutes a covered work. This License acknowledges your

|

| 162 |

+

rights of fair use or other equivalent, as provided by copyright law.

|

| 163 |

+

|

| 164 |

+

You may make, run and propagate covered works that you do not

|

| 165 |

+

convey, without conditions so long as your license otherwise remains

|

| 166 |

+

in force. You may convey covered works to others for the sole purpose

|

| 167 |

+

of having them make modifications exclusively for you, or provide you

|

| 168 |

+

with facilities for running those works, provided that you comply with

|

| 169 |

+

the terms of this License in conveying all material for which you do

|

| 170 |

+

not control copyright. Those thus making or running the covered works

|

| 171 |

+

for you must do so exclusively on your behalf, under your direction

|

| 172 |

+

and control, on terms that prohibit them from making any copies of

|

| 173 |

+

your copyrighted material outside their relationship with you.

|

| 174 |

+

|

| 175 |

+

Conveying under any other circumstances is permitted solely under

|

| 176 |

+

the conditions stated below. Sublicensing is not allowed; section 10

|

| 177 |

+

makes it unnecessary.

|

| 178 |

+

|

| 179 |

+

3. Protecting Users' Legal Rights From Anti-Circumvention Law.

|

| 180 |

+

|

| 181 |

+

No covered work shall be deemed part of an effective technological

|

| 182 |

+

measure under any applicable law fulfilling obligations under article

|

| 183 |

+

11 of the WIPO copyright treaty adopted on 20 December 1996, or

|

| 184 |

+

similar laws prohibiting or restricting circumvention of such

|

| 185 |

+

measures.

|

| 186 |

+

|

| 187 |

+

When you convey a covered work, you waive any legal power to forbid

|

| 188 |

+

circumvention of technological measures to the extent such circumvention

|

| 189 |

+

is effected by exercising rights under this License with respect to

|

| 190 |

+

the covered work, and you disclaim any intention to limit operation or

|

| 191 |

+

modification of the work as a means of enforcing, against the work's

|

| 192 |

+

users, your or third parties' legal rights to forbid circumvention of

|

| 193 |

+

technological measures.

|

| 194 |

+

|

| 195 |

+

4. Conveying Verbatim Copies.

|

| 196 |

+

|

| 197 |

+

You may convey verbatim copies of the Program's source code as you

|

| 198 |

+

receive it, in any medium, provided that you conspicuously and

|

| 199 |

+

appropriately publish on each copy an appropriate copyright notice;

|

| 200 |

+

keep intact all notices stating that this License and any

|

| 201 |

+

non-permissive terms added in accord with section 7 apply to the code;

|

| 202 |

+

keep intact all notices of the absence of any warranty; and give all

|

| 203 |

+

recipients a copy of this License along with the Program.

|

| 204 |

+

|

| 205 |

+

You may charge any price or no price for each copy that you convey,

|

| 206 |

+

and you may offer support or warranty protection for a fee.

|

| 207 |

+

|

| 208 |

+

5. Conveying Modified Source Versions.

|

| 209 |

+

|

| 210 |

+

You may convey a work based on the Program, or the modifications to

|

| 211 |

+

produce it from the Program, in the form of source code under the

|

| 212 |

+

terms of section 4, provided that you also meet all of these conditions:

|

| 213 |

+

|

| 214 |

+

a) The work must carry prominent notices stating that you modified

|

| 215 |

+

it, and giving a relevant date.

|

| 216 |

+

|

| 217 |

+

b) The work must carry prominent notices stating that it is

|

| 218 |

+

released under this License and any conditions added under section

|

| 219 |

+

7. This requirement modifies the requirement in section 4 to

|

| 220 |

+

"keep intact all notices".

|

| 221 |

+

|

| 222 |

+

c) You must license the entire work, as a whole, under this

|

| 223 |

+

License to anyone who comes into possession of a copy. This

|

| 224 |

+

License will therefore apply, along with any applicable section 7

|

| 225 |

+

additional terms, to the whole of the work, and all its parts,

|

| 226 |

+

regardless of how they are packaged. This License gives no

|

| 227 |

+

permission to license the work in any other way, but it does not

|

| 228 |

+

invalidate such permission if you have separately received it.

|

| 229 |

+

|

| 230 |

+

d) If the work has interactive user interfaces, each must display

|

| 231 |

+

Appropriate Legal Notices; however, if the Program has interactive

|

| 232 |

+

interfaces that do not display Appropriate Legal Notices, your

|

| 233 |

+

work need not make them do so.

|

| 234 |

+

|

| 235 |

+

A compilation of a covered work with other separate and independent

|

| 236 |

+

works, which are not by their nature extensions of the covered work,

|

| 237 |

+

and which are not combined with it such as to form a larger program,

|

| 238 |

+

in or on a volume of a storage or distribution medium, is called an

|

| 239 |

+

"aggregate" if the compilation and its resulting copyright are not

|

| 240 |

+

used to limit the access or legal rights of the compilation's users

|

| 241 |

+

beyond what the individual works permit. Inclusion of a covered work

|

| 242 |

+

in an aggregate does not cause this License to apply to the other

|

| 243 |

+

parts of the aggregate.

|

| 244 |

+

|

| 245 |

+

6. Conveying Non-Source Forms.

|

| 246 |

+

|

| 247 |

+

You may convey a covered work in object code form under the terms

|

| 248 |

+

of sections 4 and 5, provided that you also convey the

|

| 249 |

+

machine-readable Corresponding Source under the terms of this License,

|

| 250 |

+

in one of these ways:

|

| 251 |

+

|

| 252 |

+

a) Convey the object code in, or embodied in, a physical product

|

| 253 |

+

(including a physical distribution medium), accompanied by the

|

| 254 |

+

Corresponding Source fixed on a durable physical medium

|

| 255 |

+

customarily used for software interchange.

|

| 256 |

+

|

| 257 |

+

b) Convey the object code in, or embodied in, a physical product

|

| 258 |

+

(including a physical distribution medium), accompanied by a

|

| 259 |

+

written offer, valid for at least three years and valid for as

|

| 260 |

+

long as you offer spare parts or customer support for that product

|

| 261 |

+

model, to give anyone who possesses the object code either (1) a

|

| 262 |

+

copy of the Corresponding Source for all the software in the

|

| 263 |

+

product that is covered by this License, on a durable physical

|

| 264 |

+

medium customarily used for software interchange, for a price no

|

| 265 |

+

more than your reasonable cost of physically performing this

|

| 266 |

+

conveying of source, or (2) access to copy the

|

| 267 |

+

Corresponding Source from a network server at no charge.

|

| 268 |

+

|

| 269 |

+

c) Convey individual copies of the object code with a copy of the

|

| 270 |

+

written offer to provide the Corresponding Source. This

|

| 271 |

+

alternative is allowed only occasionally and noncommercially, and

|

| 272 |

+

only if you received the object code with such an offer, in accord

|

| 273 |

+

with subsection 6b.

|

| 274 |

+

|

| 275 |

+

d) Convey the object code by offering access from a designated

|

| 276 |

+

place (gratis or for a charge), and offer equivalent access to the

|

| 277 |

+

Corresponding Source in the same way through the same place at no

|

| 278 |

+

further charge. You need not require recipients to copy the

|

| 279 |

+

Corresponding Source along with the object code. If the place to

|

| 280 |

+

copy the object code is a network server, the Corresponding Source

|

| 281 |

+

may be on a different server (operated by you or a third party)

|

| 282 |

+

that supports equivalent copying facilities, provided you maintain

|

| 283 |

+

clear directions next to the object code saying where to find the

|

| 284 |

+

Corresponding Source. Regardless of what server hosts the

|

| 285 |

+

Corresponding Source, you remain obligated to ensure that it is

|

| 286 |

+

available for as long as needed to satisfy these requirements.

|

| 287 |

+

|

| 288 |

+

e) Convey the object code using peer-to-peer transmission, provided

|

| 289 |

+

you inform other peers where the object code and Corresponding

|

| 290 |

+

Source of the work are being offered to the general public at no

|

| 291 |

+

charge under subsection 6d.

|

| 292 |

+

|

| 293 |

+

A separable portion of the object code, whose source code is excluded

|

| 294 |

+

from the Corresponding Source as a System Library, need not be

|

| 295 |

+

included in conveying the object code work.

|

| 296 |

+

|

| 297 |

+

A "User Product" is either (1) a "consumer product", which means any

|

| 298 |

+

tangible personal property which is normally used for personal, family,

|

| 299 |

+

or household purposes, or (2) anything designed or sold for incorporation

|

| 300 |

+

into a dwelling. In determining whether a product is a consumer product,

|

| 301 |

+

doubtful cases shall be resolved in favor of coverage. For a particular

|

| 302 |

+

product received by a particular user, "normally used" refers to a

|

| 303 |

+

typical or common use of that class of product, regardless of the status

|

| 304 |

+

of the particular user or of the way in which the particular user

|

| 305 |

+

actually uses, or expects or is expected to use, the product. A product

|

| 306 |

+

is a consumer product regardless of whether the product has substantial

|

| 307 |

+

commercial, industrial or non-consumer uses, unless such uses represent

|

| 308 |

+

the only significant mode of use of the product.

|

| 309 |

+

|

| 310 |

+

"Installation Information" for a User Product means any methods,

|

| 311 |

+

procedures, authorization keys, or other information required to install

|

| 312 |

+

and execute modified versions of a covered work in that User Product from

|

| 313 |

+

a modified version of its Corresponding Source. The information must

|

| 314 |

+

suffice to ensure that the continued functioning of the modified object

|

| 315 |

+

code is in no case prevented or interfered with solely because

|

| 316 |

+

modification has been made.

|

| 317 |

+

|

| 318 |

+

If you convey an object code work under this section in, or with, or

|

| 319 |

+

specifically for use in, a User Product, and the conveying occurs as

|

| 320 |

+

part of a transaction in which the right of possession and use of the

|

| 321 |

+

User Product is transferred to the recipient in perpetuity or for a

|

| 322 |

+

fixed term (regardless of how the transaction is characterized), the

|

| 323 |

+

Corresponding Source conveyed under this section must be accompanied

|

| 324 |

+

by the Installation Information. But this requirement does not apply

|

| 325 |

+

if neither you nor any third party retains the ability to install

|

| 326 |

+

modified object code on the User Product (for example, the work has

|

| 327 |

+

been installed in ROM).

|

| 328 |

+

|

| 329 |

+

The requirement to provide Installation Information does not include a

|

| 330 |

+

requirement to continue to provide support service, warranty, or updates

|

| 331 |

+

for a work that has been modified or installed by the recipient, or for

|

| 332 |

+

the User Product in which it has been modified or installed. Access to a

|

| 333 |

+

network may be denied when the modification itself materially and

|

| 334 |

+

adversely affects the operation of the network or violates the rules and

|

| 335 |

+

protocols for communication across the network.

|

| 336 |

+

|

| 337 |

+

Corresponding Source conveyed, and Installation Information provided,

|

| 338 |

+

in accord with this section must be in a format that is publicly

|

| 339 |

+

documented (and with an implementation available to the public in

|

| 340 |

+

source code form), and must require no special password or key for

|

| 341 |

+

unpacking, reading or copying.

|

| 342 |

+

|

| 343 |

+

7. Additional Terms.

|

| 344 |

+

|

| 345 |

+

"Additional permissions" are terms that supplement the terms of this

|

| 346 |

+

License by making exceptions from one or more of its conditions.

|

| 347 |

+

Additional permissions that are applicable to the entire Program shall

|

| 348 |

+

be treated as though they were included in this License, to the extent

|

| 349 |

+

that they are valid under applicable law. If additional permissions

|

| 350 |

+

apply only to part of the Program, that part may be used separately

|

| 351 |

+

under those permissions, but the entire Program remains governed by

|

| 352 |

+

this License without regard to the additional permissions.

|

| 353 |

+

|

| 354 |

+

When you convey a copy of a covered work, you may at your option

|

| 355 |

+

remove any additional permissions from that copy, or from any part of

|

| 356 |

+

it. (Additional permissions may be written to require their own

|

| 357 |

+

removal in certain cases when you modify the work.) You may place

|

| 358 |

+

additional permissions on material, added by you to a covered work,

|

| 359 |

+

for which you have or can give appropriate copyright permission.

|

| 360 |

+

|

| 361 |

+

Notwithstanding any other provision of this License, for material you

|

| 362 |

+

add to a covered work, you may (if authorized by the copyright holders of

|

| 363 |

+

that material) supplement the terms of this License with terms:

|

| 364 |

+

|

| 365 |

+

a) Disclaiming warranty or limiting liability differently from the

|

| 366 |

+

terms of sections 15 and 16 of this License; or

|

| 367 |

+

|

| 368 |

+

b) Requiring preservation of specified reasonable legal notices or

|

| 369 |

+

author attributions in that material or in the Appropriate Legal

|

| 370 |

+

Notices displayed by works containing it; or

|

| 371 |

+

|

| 372 |

+

c) Prohibiting misrepresentation of the origin of that material, or

|

| 373 |

+

requiring that modified versions of such material be marked in

|

| 374 |

+

reasonable ways as different from the original version; or

|

| 375 |

+

|

| 376 |

+

d) Limiting the use for publicity purposes of names of licensors or

|

| 377 |

+

authors of the material; or

|

| 378 |

+

|

| 379 |

+

e) Declining to grant rights under trademark law for use of some

|

| 380 |

+

trade names, trademarks, or service marks; or

|

| 381 |

+

|

| 382 |

+

f) Requiring indemnification of licensors and authors of that

|

| 383 |

+

material by anyone who conveys the material (or modified versions of

|

| 384 |

+

it) with contractual assumptions of liability to the recipient, for

|

| 385 |

+

any liability that these contractual assumptions directly impose on

|

| 386 |

+

those licensors and authors.

|

| 387 |

+

|

| 388 |

+

All other non-permissive additional terms are considered "further

|

| 389 |

+

restrictions" within the meaning of section 10. If the Program as you

|

| 390 |

+

received it, or any part of it, contains a notice stating that it is

|

| 391 |

+

governed by this License along with a term that is a further

|

| 392 |

+

restriction, you may remove that term. If a license document contains

|

| 393 |

+

a further restriction but permits relicensing or conveying under this

|

| 394 |

+

License, you may add to a covered work material governed by the terms

|

| 395 |

+

of that license document, provided that the further restriction does

|

| 396 |

+

not survive such relicensing or conveying.

|

| 397 |

+

|

| 398 |

+

If you add terms to a covered work in accord with this section, you

|

| 399 |

+

must place, in the relevant source files, a statement of the

|

| 400 |

+

additional terms that apply to those files, or a notice indicating

|

| 401 |

+

where to find the applicable terms.

|

| 402 |

+

|

| 403 |

+

Additional terms, permissive or non-permissive, may be stated in the

|

| 404 |

+

form of a separately written license, or stated as exceptions;

|

| 405 |

+

the above requirements apply either way.

|

| 406 |

+

|

| 407 |

+

8. Termination.

|

| 408 |

+

|

| 409 |

+

You may not propagate or modify a covered work except as expressly

|

| 410 |

+

provided under this License. Any attempt otherwise to propagate or

|

| 411 |

+

modify it is void, and will automatically terminate your rights under

|

| 412 |

+

this License (including any patent licenses granted under the third

|

| 413 |

+

paragraph of section 11).

|

| 414 |

+

|

| 415 |

+

However, if you cease all violation of this License, then your

|

| 416 |

+

license from a particular copyright holder is reinstated (a)

|

| 417 |

+

provisionally, unless and until the copyright holder explicitly and

|

| 418 |

+

finally terminates your license, and (b) permanently, if the copyright

|

| 419 |

+

holder fails to notify you of the violation by some reasonable means

|

| 420 |

+

prior to 60 days after the cessation.

|

| 421 |

+

|

| 422 |

+

Moreover, your license from a particular copyright holder is

|

| 423 |

+

reinstated permanently if the copyright holder notifies you of the

|

| 424 |

+

violation by some reasonable means, this is the first time you have

|

| 425 |

+

received notice of violation of this License (for any work) from that

|

| 426 |

+

copyright holder, and you cure the violation prior to 30 days after

|

| 427 |

+

your receipt of the notice.

|

| 428 |

+

|

| 429 |

+

Termination of your rights under this section does not terminate the

|

| 430 |

+

licenses of parties who have received copies or rights from you under

|

| 431 |

+

this License. If your rights have been terminated and not permanently

|

| 432 |

+

reinstated, you do not qualify to receive new licenses for the same

|

| 433 |

+

material under section 10.

|

| 434 |

+

|

| 435 |

+

9. Acceptance Not Required for Having Copies.

|

| 436 |

+

|

| 437 |

+

You are not required to accept this License in order to receive or

|

| 438 |

+

run a copy of the Program. Ancillary propagation of a covered work

|

| 439 |

+

occurring solely as a consequence of using peer-to-peer transmission

|

| 440 |

+

to receive a copy likewise does not require acceptance. However,

|

| 441 |

+

nothing other than this License grants you permission to propagate or

|

| 442 |

+

modify any covered work. These actions infringe copyright if you do

|

| 443 |

+

not accept this License. Therefore, by modifying or propagating a

|

| 444 |

+

covered work, you indicate your acceptance of this License to do so.

|

| 445 |

+

|

| 446 |

+

10. Automatic Licensing of Downstream Recipients.

|

| 447 |

+

|

| 448 |

+

Each time you convey a covered work, the recipient automatically

|

| 449 |

+

receives a license from the original licensors, to run, modify and

|

| 450 |

+

propagate that work, subject to this License. You are not responsible

|

| 451 |

+

for enforcing compliance by third parties with this License.

|

| 452 |

+

|

| 453 |

+

An "entity transaction" is a transaction transferring control of an

|

| 454 |

+

organization, or substantially all assets of one, or subdividing an

|

| 455 |

+

organization, or merging organizations. If propagation of a covered

|

| 456 |

+

work results from an entity transaction, each party to that

|

| 457 |

+

transaction who receives a copy of the work also receives whatever

|

| 458 |

+

licenses to the work the party's predecessor in interest had or could

|

| 459 |

+

give under the previous paragraph, plus a right to possession of the

|

| 460 |

+

Corresponding Source of the work from the predecessor in interest, if

|

| 461 |

+

the predecessor has it or can get it with reasonable efforts.

|

| 462 |

+

|

| 463 |

+

You may not impose any further restrictions on the exercise of the

|

| 464 |

+

rights granted or affirmed under this License. For example, you may

|

| 465 |

+

not impose a license fee, royalty, or other charge for exercise of

|

| 466 |

+

rights granted under this License, and you may not initiate litigation

|

| 467 |

+

(including a cross-claim or counterclaim in a lawsuit) alleging that

|

| 468 |

+

any patent claim is infringed by making, using, selling, offering for

|

| 469 |

+

sale, or importing the Program or any portion of it.

|

| 470 |

+

|

| 471 |

+

11. Patents.

|

| 472 |

+

|

| 473 |

+

A "contributor" is a copyright holder who authorizes use under this

|

| 474 |

+

License of the Program or a work on which the Program is based. The

|

| 475 |

+

work thus licensed is called the contributor's "contributor version".

|

| 476 |

+

|

| 477 |

+

A contributor's "essential patent claims" are all patent claims

|

| 478 |

+

owned or controlled by the contributor, whether already acquired or

|

| 479 |

+

hereafter acquired, that would be infringed by some manner, permitted

|

| 480 |

+

by this License, of making, using, or selling its contributor version,

|

| 481 |

+

but do not include claims that would be infringed only as a

|

| 482 |

+

consequence of further modification of the contributor version. For

|

| 483 |

+

purposes of this definition, "control" includes the right to grant

|

| 484 |

+

patent sublicenses in a manner consistent with the requirements of

|

| 485 |

+

this License.

|

| 486 |

+

|

| 487 |

+

Each contributor grants you a non-exclusive, worldwide, royalty-free

|

| 488 |

+

patent license under the contributor's essential patent claims, to

|

| 489 |

+

make, use, sell, offer for sale, import and otherwise run, modify and

|

| 490 |

+

propagate the contents of its contributor version.

|

| 491 |

+

|

| 492 |

+

In the following three paragraphs, a "patent license" is any express

|

| 493 |

+

agreement or commitment, however denominated, not to enforce a patent

|

| 494 |

+

(such as an express permission to practice a patent or covenant not to

|

| 495 |

+

sue for patent infringement). To "grant" such a patent license to a

|

| 496 |

+

party means to make such an agreement or commitment not to enforce a

|

| 497 |

+

patent against the party.

|

| 498 |

+

|

| 499 |

+

If you convey a covered work, knowingly relying on a patent license,

|

| 500 |

+

and the Corresponding Source of the work is not available for anyone

|

| 501 |

+

to copy, free of charge and under the terms of this License, through a

|

| 502 |

+

publicly available network server or other readily accessible means,

|

| 503 |

+

then you must either (1) cause the Corresponding Source to be so

|

| 504 |

+

available, or (2) arrange to deprive yourself of the benefit of the

|

| 505 |

+

patent license for this particular work, or (3) arrange, in a manner

|

| 506 |

+

consistent with the requirements of this License, to extend the patent

|

| 507 |

+

license to downstream recipients. "Knowingly relying" means you have

|

| 508 |

+

actual knowledge that, but for the patent license, your conveying the

|

| 509 |

+

covered work in a country, or your recipient's use of the covered work

|

| 510 |

+

in a country, would infringe one or more identifiable patents in that

|

| 511 |

+

country that you have reason to believe are valid.

|

| 512 |

+

|

| 513 |

+

If, pursuant to or in connection with a single transaction or

|

| 514 |

+

arrangement, you convey, or propagate by procuring conveyance of, a

|

| 515 |

+

covered work, and grant a patent license to some of the parties

|

| 516 |

+

receiving the covered work authorizing them to use, propagate, modify

|

| 517 |

+

or convey a specific copy of the covered work, then the patent license

|

| 518 |

+

you grant is automatically extended to all recipients of the covered

|

| 519 |

+

work and works based on it.

|

| 520 |

+

|

| 521 |

+

A patent license is "discriminatory" if it does not include within

|

| 522 |

+

the scope of its coverage, prohibits the exercise of, or is

|

| 523 |

+

conditioned on the non-exercise of one or more of the rights that are

|

| 524 |

+

specifically granted under this License. You may not convey a covered

|

| 525 |

+

work if you are a party to an arrangement with a third party that is

|

| 526 |

+

in the business of distributing software, under which you make payment

|

| 527 |

+

to the third party based on the extent of your activity of conveying

|

| 528 |

+

the work, and under which the third party grants, to any of the

|

| 529 |

+

parties who would receive the covered work from you, a discriminatory

|

| 530 |

+

patent license (a) in connection with copies of the covered work

|

| 531 |

+

conveyed by you (or copies made from those copies), or (b) primarily

|

| 532 |

+

for and in connection with specific products or compilations that

|

| 533 |

+

contain the covered work, unless you entered into that arrangement,

|

| 534 |

+

or that patent license was granted, prior to 28 March 2007.

|

| 535 |

+

|

| 536 |

+

Nothing in this License shall be construed as excluding or limiting

|

| 537 |

+

any implied license or other defenses to infringement that may

|

| 538 |

+

otherwise be available to you under applicable patent law.

|

| 539 |

+

|

| 540 |

+

12. No Surrender of Others' Freedom.

|

| 541 |

+

|

| 542 |

+

If conditions are imposed on you (whether by court order, agreement or

|

| 543 |

+

otherwise) that contradict the conditions of this License, they do not

|

| 544 |

+

excuse you from the conditions of this License. If you cannot convey a

|

| 545 |

+

covered work so as to satisfy simultaneously your obligations under this

|

| 546 |

+

License and any other pertinent obligations, then as a consequence you may

|

| 547 |

+

not convey it at all. For example, if you agree to terms that obligate you

|

| 548 |

+

to collect a royalty for further conveying from those to whom you convey

|

| 549 |

+

the Program, the only way you could satisfy both those terms and this

|

| 550 |

+

License would be to refrain entirely from conveying the Program.

|

| 551 |

+

|

| 552 |

+

13. Use with the GNU Affero General Public License.

|

| 553 |

+

|

| 554 |

+

Notwithstanding any other provision of this License, you have

|

| 555 |

+

permission to link or combine any covered work with a work licensed

|

| 556 |

+

under version 3 of the GNU Affero General Public License into a single

|

| 557 |

+

combined work, and to convey the resulting work. The terms of this

|

| 558 |

+

License will continue to apply to the part which is the covered work,

|

| 559 |

+

but the special requirements of the GNU Affero General Public License,

|

| 560 |

+

section 13, concerning interaction through a network will apply to the

|

| 561 |

+

combination as such.

|

| 562 |

+

|

| 563 |

+

14. Revised Versions of this License.

|

| 564 |

+

|

| 565 |

+

The Free Software Foundation may publish revised and/or new versions of

|

| 566 |

+

the GNU General Public License from time to time. Such new versions will

|

| 567 |

+

be similar in spirit to the present version, but may differ in detail to

|

| 568 |

+

address new problems or concerns.

|

| 569 |

+

|

| 570 |

+

Each version is given a distinguishing version number. If the

|

| 571 |

+

Program specifies that a certain numbered version of the GNU General

|

| 572 |

+

Public License "or any later version" applies to it, you have the

|

| 573 |

+

option of following the terms and conditions either of that numbered

|

| 574 |

+

version or of any later version published by the Free Software

|

| 575 |

+

Foundation. If the Program does not specify a version number of the

|

| 576 |

+

GNU General Public License, you may choose any version ever published

|

| 577 |

+

by the Free Software Foundation.

|

| 578 |

+

|

| 579 |

+

If the Program specifies that a proxy can decide which future

|

| 580 |

+

versions of the GNU General Public License can be used, that proxy's

|

| 581 |

+

public statement of acceptance of a version permanently authorizes you

|

| 582 |

+

to choose that version for the Program.

|

| 583 |

+

|

| 584 |

+

Later license versions may give you additional or different

|

| 585 |

+

permissions. However, no additional obligations are imposed on any

|

| 586 |

+

author or copyright holder as a result of your choosing to follow a

|

| 587 |

+

later version.

|

| 588 |

+

|

| 589 |

+

15. Disclaimer of Warranty.

|

| 590 |

+

|

| 591 |

+

THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

|

| 592 |

+

APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

|

| 593 |

+

HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

|

| 594 |

+

OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

|

| 595 |

+

THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

|

| 596 |

+

PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

|

| 597 |

+

IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

|

| 598 |

+

ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

|

| 599 |

+

|

| 600 |

+

16. Limitation of Liability.

|

| 601 |

+

|

| 602 |

+

IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

|

| 603 |

+

WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

|

| 604 |

+

THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

|

| 605 |

+

GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

|

| 606 |

+

USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

|

| 607 |

+

DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

|

| 608 |

+

PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

|

| 609 |

+

EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

|

| 610 |

+

SUCH DAMAGES.

|

| 611 |

+

|

| 612 |

+

17. Interpretation of Sections 15 and 16.

|

| 613 |

+

|

| 614 |

+

If the disclaimer of warranty and limitation of liability provided

|

| 615 |

+

above cannot be given local legal effect according to their terms,

|

| 616 |

+

reviewing courts shall apply local law that most closely approximates

|

| 617 |

+

an absolute waiver of all civil liability in connection with the

|

| 618 |

+

Program, unless a warranty or assumption of liability accompanies a

|

| 619 |

+

copy of the Program in return for a fee.

|

| 620 |

+

|

| 621 |

+

END OF TERMS AND CONDITIONS

|

| 622 |

+

|

| 623 |

+

How to Apply These Terms to Your New Programs

|

| 624 |

+

|

| 625 |

+

If you develop a new program, and you want it to be of the greatest

|

| 626 |

+

possible use to the public, the best way to achieve this is to make it

|

| 627 |

+

free software which everyone can redistribute and change under these terms.

|

| 628 |

+

|

| 629 |

+

To do so, attach the following notices to the program. It is safest

|

| 630 |

+

to attach them to the start of each source file to most effectively

|

| 631 |

+

state the exclusion of warranty; and each file should have at least

|

| 632 |

+

the "copyright" line and a pointer to where the full notice is found.

|

| 633 |

+

|

| 634 |

+

<one line to give the program's name and a brief idea of what it does.>

|

| 635 |

+

Copyright (C) <year> <name of author>

|

| 636 |

+

|

| 637 |

+

This program is free software: you can redistribute it and/or modify

|

| 638 |

+

it under the terms of the GNU General Public License as published by

|

| 639 |

+

the Free Software Foundation, either version 3 of the License, or

|

| 640 |

+

(at your option) any later version.

|

| 641 |

+

|

| 642 |

+

This program is distributed in the hope that it will be useful,

|

| 643 |

+

but WITHOUT ANY WARRANTY; without even the implied warranty of

|

| 644 |

+

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

|

| 645 |

+

GNU General Public License for more details.

|

| 646 |

+

|

| 647 |

+

You should have received a copy of the GNU General Public License

|

| 648 |

+

along with this program. If not, see <https://www.gnu.org/licenses/>.

|

| 649 |

+

|

| 650 |

+

Also add information on how to contact you by electronic and paper mail.

|

| 651 |

+

|

| 652 |

+

If the program does terminal interaction, make it output a short

|

| 653 |

+

notice like this when it starts in an interactive mode:

|

| 654 |

+

|

| 655 |

+

<program> Copyright (C) <year> <name of author>

|

| 656 |

+

This program comes with ABSOLUTELY NO WARRANTY; for details type `show w'.

|

| 657 |

+

This is free software, and you are welcome to redistribute it

|

| 658 |

+

under certain conditions; type `show c' for details.

|

| 659 |

+

|

| 660 |

+

The hypothetical commands `show w' and `show c' should show the appropriate

|

| 661 |

+

parts of the General Public License. Of course, your program's commands

|

| 662 |

+

might be different; for a GUI interface, you would use an "about box".

|

| 663 |

+

|

| 664 |

+

You should also get your employer (if you work as a programmer) or school,

|

| 665 |

+

if any, to sign a "copyright disclaimer" for the program, if necessary.

|

| 666 |

+

For more information on this, and how to apply and follow the GNU GPL, see

|

| 667 |

+

<https://www.gnu.org/licenses/>.

|

| 668 |

+

|

| 669 |

+

The GNU General Public License does not permit incorporating your program

|

| 670 |

+

into proprietary programs. If your program is a subroutine library, you

|

| 671 |

+

may consider it more useful to permit linking proprietary applications with

|

| 672 |

+

the library. If this is what you want to do, use the GNU Lesser General

|

| 673 |

+

Public License instead of this License. But first, please read

|

| 674 |

+

<https://www.gnu.org/licenses/why-not-lgpl.html>.

|

README.md

CHANGED

|

@@ -1,6 +1,6 @@

|

|

| 1 |

---

|

| 2 |

title: YOLOR

|

| 3 |