UI changes, add examples

- .gitattributes +4 -0

- app.py +24 -8

- examples/beaver.png +3 -0

- examples/couch.png +3 -0

- examples/sparrow.png +3 -0

.gitattributes

CHANGED

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
 cc3m_embeddings.pkl filter=lfs diff=lfs merge=lfs -text
+examples filter=lfs diff=lfs merge=lfs -text
+examples/beaver.png filter=lfs diff=lfs merge=lfs -text
+examples/couch.png filter=lfs diff=lfs merge=lfs -text
+examples/sparrow.png filter=lfs diff=lfs merge=lfs -text
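The rules added to .gitattributes route the example images through Git LFS. As a rough illustration of which paths those rules cover, here is a minimal sketch that approximates gitattributes matching with Python's `fnmatch`. The helper name `is_lfs_tracked` and the matching logic are assumptions made for illustration; Git's real matcher has additional rules (for example `**`) that are not modelled here.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns copied from the .gitattributes hunk; each one carries
# "filter=lfs diff=lfs merge=lfs -text" in the real file.
LFS_PATTERNS = [
    "*.zst",
    "*tfevents*",
    "cc3m_embeddings.pkl",
    "examples",
    "examples/beaver.png",
    "examples/couch.png",
    "examples/sparrow.png",
]


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate gitattributes matching (hypothetical helper, not Git's code).

    Patterns without a slash are compared against the file name only;
    patterns with a slash are compared against the whole relative path.
    """
    name = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatchcase(target, pattern):
            return True
    return False


if __name__ == "__main__":
    for p in ["examples/sparrow.png", "examples/other.png", "model.zst"]:
        print(p, is_lfs_tracked(p))
```

In this simplified matcher the bare `examples` rule does not cover files inside the directory. Git's documentation describes directory-matching patterns as non-recursive as well, so the three per-file rules are likely the ones that take effect.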

app.py

CHANGED

|

@@ -48,6 +48,9 @@ css = """

|

|

| 48 |

"""

|

| 49 |

|

| 50 |

examples = [

|

|

|

|

|

|

|

|

|

|

| 51 |

]

|

| 52 |

|

| 53 |

# Download model from HF Hub.

|

|

@@ -142,12 +145,25 @@ def generate_for_prompt(input_text, state, ret_scale_factor, max_num_rets, num_w

|

|

| 142 |

|

| 143 |

|

| 144 |

with gr.Blocks(css=css) as demo:

|

| 145 |

-

gr.Markdown(

|

| 146 |

-

'### Grounding Language Models to Images for Multimodal Generation'

|

| 147 |

-

)

|

| 148 |

-

|

| 149 |

gr.HTML("""

|

| 150 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 151 |

""")

|

| 152 |

|

| 153 |

gr_state = gr.State([[], []]) # conversation, chat_history

|

|

@@ -183,9 +199,9 @@ with gr.Blocks(css=css) as demo:

|

|

| 183 |

gr_temperature = gr.Slider(

|

| 184 |

minimum=0.0, maximum=1.0, value=0.0, interactive=True, label="Temperature (0 for deterministic, higher for more randomness)")

|

| 185 |

|

| 186 |

-

|

| 187 |

-

|

| 188 |

-

|

| 189 |

|

| 190 |

text_input.submit(generate_for_prompt, [text_input, gr_state, ret_scale_factor,

|

| 191 |

max_ret_images, gr_max_len, gr_temperature], [gr_state, chatbot, share_group, save_group])

|

|

|

|

| 48 |

"""

|

| 49 |

|

| 50 |

examples = [

|

| 51 |

+

'examples/sparrow.png',

|

| 52 |

+

'examples/beaver.png',

|

| 53 |

+

'examples/couch.png',

|

| 54 |

]

|

| 55 |

|

| 56 |

# Download model from HF Hub.

|

|

|

|

| 145 |

|

| 146 |

|

| 147 |

with gr.Blocks(css=css) as demo:

|

|

|

|

|

|

|

|

|

|

|

|

|

| 148 |

gr.HTML("""

|

| 149 |

+

<h1>🧀 FROMAGe</h1>

|

| 150 |

+

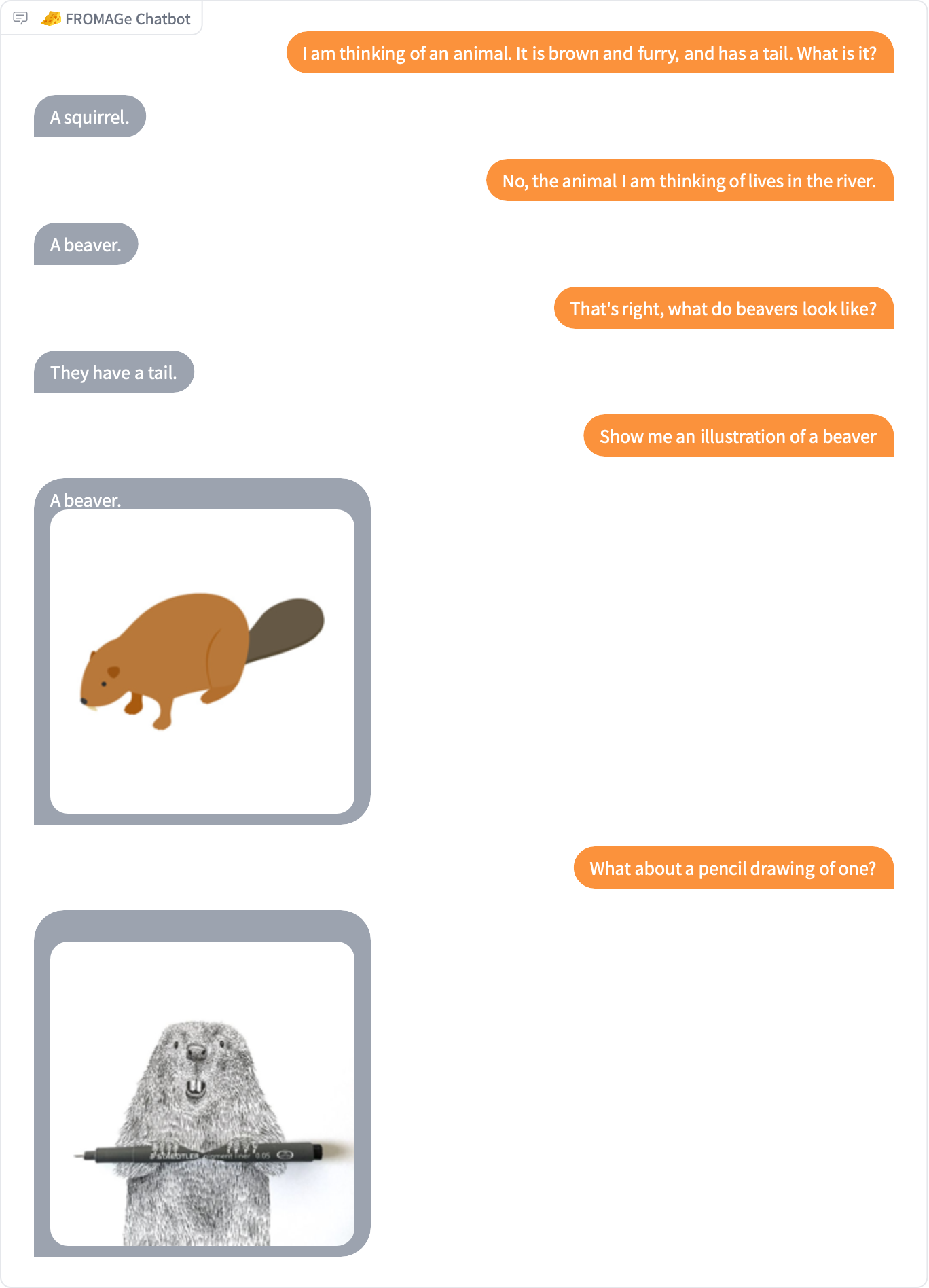

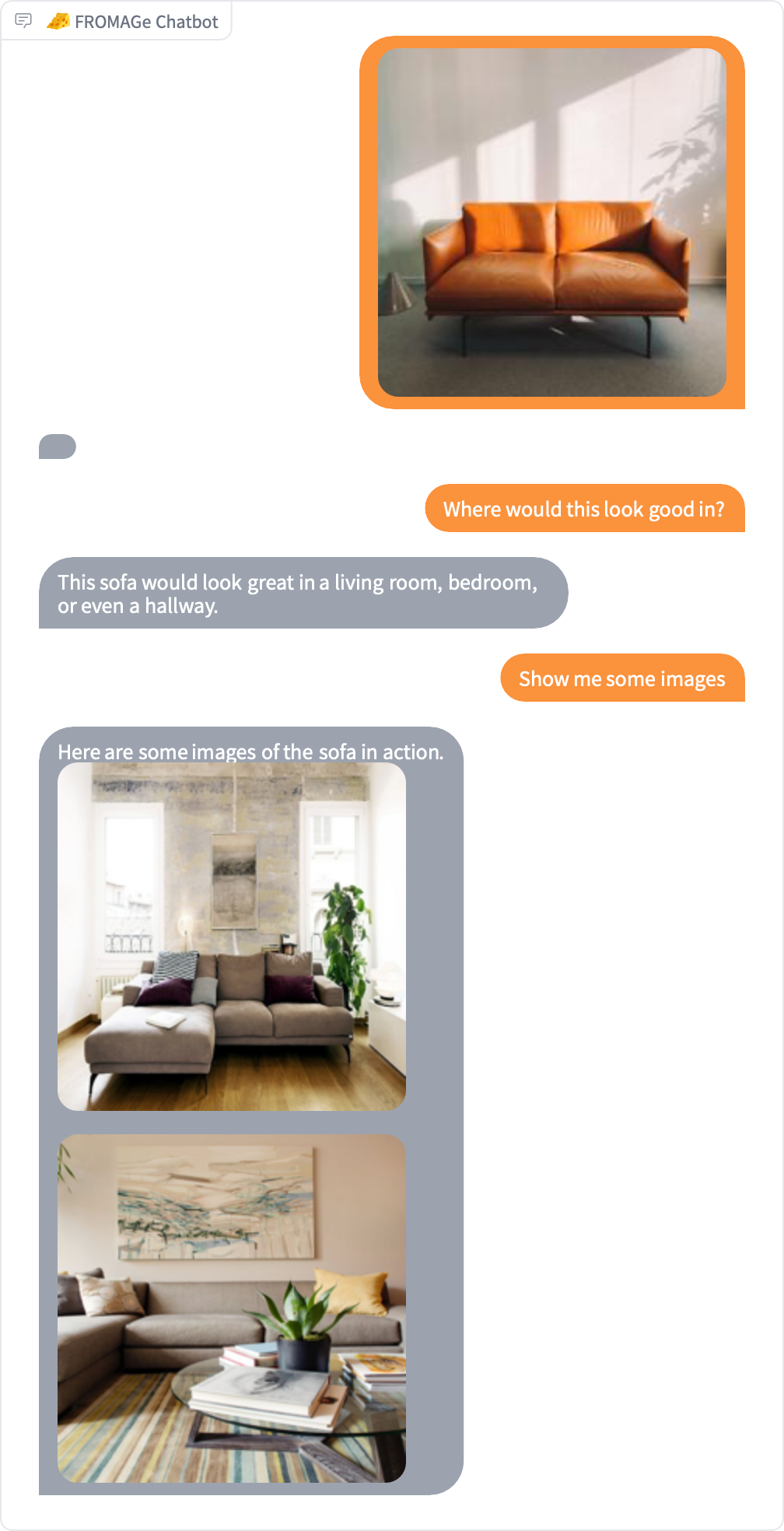

<p>This is the official Gradio demo for the FROMAGe model, a model that can process arbitrarily interleaved image and text inputs, and produce image and text outputs.</p>

|

| 151 |

+

|

| 152 |

+

<strong>Paper:</strong> <a href="https://arxiv.org/abs/2301.13823" target="_blank">Grounding Language Models to Images for Multimodal Generation</a>

|

| 153 |

+

<br/>

|

| 154 |

+

<strong>Project Website:</strong> <a href="https://jykoh.com/fromage" target="_blank">FROMAGe Website</a>

|

| 155 |

+

<br/>

|

| 156 |

+

<strong>Code and Models:</strong> <a href="https://github.com/kohjingyu/fromage" target="_blank">GitHub</a>

|

| 157 |

+

<br/>

|

| 158 |

+

<br/>

|

| 159 |

+

|

| 160 |

+

<strong>Tips:</strong>

|

| 161 |

+

<ul>

|

| 162 |

+

<li>Start by inputting either image or text prompts (or both) and chat with FROMAGe to get image-and-text replies.</li>

|

| 163 |

+

<li>Tweak the level of sensitivity to images and text using the parameters on the right.</li>

|

| 164 |

+

<li>Check out cool conversations in the examples or community tab for inspiration and share your own!</li>

|

| 165 |

+

<li>For faster inference without waiting in queue, you may duplicate the space and use your own GPU: <a href="https://huggingface.co/spaces/jykoh/fromage?duplicate=true"><img style="display: inline-block; margin-top: 0em; margin-bottom: 0em" src="https://img.shields.io/badge/-Duplicate%20Space-blue?labelColor=white&style=flat&logo=data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAABAAAAAQCAYAAAAf8/9hAAAAAXNSR0IArs4c6QAAAP5JREFUOE+lk7FqAkEURY+ltunEgFXS2sZGIbXfEPdLlnxJyDdYB62sbbUKpLbVNhyYFzbrrA74YJlh9r079973psed0cvUD4A+4HoCjsA85X0Dfn/RBLBgBDxnQPfAEJgBY+A9gALA4tcbamSzS4xq4FOQAJgCDwV2CPKV8tZAJcAjMMkUe1vX+U+SMhfAJEHasQIWmXNN3abzDwHUrgcRGmYcgKe0bxrblHEB4E/pndMazNpSZGcsZdBlYJcEL9Afo75molJyM2FxmPgmgPqlWNLGfwZGG6UiyEvLzHYDmoPkDDiNm9JR9uboiONcBXrpY1qmgs21x1QwyZcpvxt9NS09PlsPAAAAAElFTkSuQmCC&logoWidth=14" alt="Duplicate Space"></a></li>

|

| 166 |

+

</ul>

|

| 167 |

""")

|

| 168 |

|

| 169 |

gr_state = gr.State([[], []]) # conversation, chat_history

|

|

|

|

| 199 |

gr_temperature = gr.Slider(

|

| 200 |

minimum=0.0, maximum=1.0, value=0.0, interactive=True, label="Temperature (0 for deterministic, higher for more randomness)")

|

| 201 |

|

| 202 |

+

gallery = gr.Gallery(

|

| 203 |

+

value=[Image.open(e) for e in examples], label="Example Conversations", show_label=True, elem_id="gallery",

|

| 204 |

+

).style(grid=[2], height="auto")

|

| 205 |

|

| 206 |

text_input.submit(generate_for_prompt, [text_input, gr_state, ret_scale_factor,

|

| 207 |

max_ret_images, gr_max_len, gr_temperature], [gr_state, chatbot, share_group, save_group])

|
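The new gallery opens every path in `examples` with `Image.open` when the app starts, so a missing file or an un-fetched Git LFS pointer stub would raise at that point. Below is a minimal sketch of a pre-check that could run before the gallery is built. The helper `usable_examples` and the `root` parameter are hypothetical and not part of this commit; the pointer prefix is the first line of a Git LFS pointer file.

```python
from pathlib import Path

# Same relative paths as the `examples` list in app.py.
EXAMPLES = [
    "examples/sparrow.png",
    "examples/beaver.png",
    "examples/couch.png",
]

# First line of a Git LFS pointer file.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def usable_examples(paths, root="."):
    """Return the paths that exist and hold real data (hypothetical helper).

    Missing files and Git LFS pointer stubs are skipped, so a later
    Image.open(...) call is less likely to fail at startup.
    """
    usable = []
    for rel in paths:
        candidate = Path(root) / rel
        if not candidate.is_file():
            continue  # file was never checked out
        with candidate.open("rb") as fh:
            head = fh.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            continue  # LFS content was not fetched, only the pointer
        usable.append(rel)
    return usable


if __name__ == "__main__":
    print(usable_examples(EXAMPLES))
```

One way to use it, under the same assumptions, would be to pass `usable_examples(examples)` to the list comprehension that feeds `gr.Gallery`, so the demo still starts when an image is unavailable.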

examples/beaver.png

ADDED (Git LFS)

examples/couch.png

ADDED (Git LFS)

examples/sparrow.png

ADDED (Git LFS)