init project

This view is limited to 50 files because it contains too many changes.

- .dockerignore +7 -0

- .github/ISSUE_TEMPLATE/bug_report.yml +50 -0

- .github/ISSUE_TEMPLATE/config.yml +5 -0

- .github/ISSUE_TEMPLATE/feature_request.yml +40 -0

- .github/pull_request_template.md +7 -0

- .github/workflows/build-docker-image.yml +70 -0

- .github/workflows/docs.yml +33 -0

- .github/workflows/stale.yml +25 -0

- .gitignore +31 -0

- .pre-commit-config.yaml +25 -0

- .project-root +0 -0

- .readthedocs.yaml +19 -0

- API_FLAGS.txt +6 -0

- Dockerfile +44 -0

- LICENSE +437 -0

- docker-compose.dev.yml +16 -0

- dockerfile.dev +33 -0

- docs/CNAME +1 -0

- docs/assets/figs/VS_1.jpg +0 -0

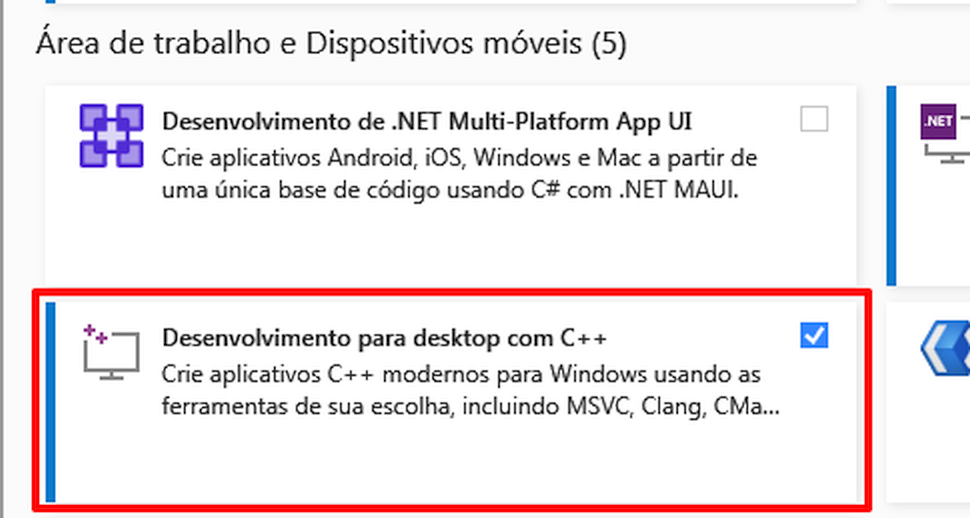

- docs/assets/figs/VS_1_pt-BR.png +0 -0

- docs/assets/figs/diagram.png +0 -0

- docs/assets/figs/diagrama.png +0 -0

- docs/en/finetune.md +125 -0

- docs/en/index.md +133 -0

- docs/en/inference.md +124 -0

- docs/en/samples.md +223 -0

- docs/ja/finetune.md +125 -0

- docs/ja/index.md +128 -0

- docs/ja/inference.md +157 -0

- docs/ja/samples.md +223 -0

- docs/pt/finetune.md +125 -0

- docs/pt/index.md +131 -0

- docs/pt/inference.md +153 -0

- docs/pt/samples.md +223 -0

- docs/requirements.txt +3 -0

- docs/stylesheets/extra.css +3 -0

- docs/zh/finetune.md +136 -0

- docs/zh/index.md +191 -0

- docs/zh/inference.md +134 -0

- docs/zh/samples.md +223 -0

- entrypoint.sh +10 -0

- fish_speech/callbacks/__init__.py +3 -0

- fish_speech/callbacks/grad_norm.py +113 -0

- fish_speech/configs/base.yaml +87 -0

- fish_speech/configs/firefly_gan_vq.yaml +33 -0

- fish_speech/configs/lora/r_8_alpha_16.yaml +4 -0

- fish_speech/configs/text2semantic_finetune.yaml +83 -0

- fish_speech/conversation.py +2 -0

- fish_speech/datasets/concat_repeat.py +53 -0

- fish_speech/datasets/protos/text-data.proto +24 -0

.dockerignore

ADDED

@@ -0,0 +1,7 @@
+.git
+.github
+results
+data
+*.filelist
+/data_server/target
+checkpoints

.github/ISSUE_TEMPLATE/bug_report.yml

ADDED

@@ -0,0 +1,50 @@
+name: "🕷️ Bug report"
+description: Report errors or unexpected behavior
+labels:
+  - bug
+body:
+  - type: checkboxes
+    attributes:
+      label: Self Checks
+      description: "To make sure we get to you in time, please check the following :)"
+      options:
+        - label: This is only for bug report, if you would like to ask a question, please head to [Discussions](https://github.com/fishaudio/fish-speech/discussions).
+          required: true
+        - label: I have searched for existing issues [search for existing issues](https://github.com/fishaudio/fish-speech/issues), including closed ones.
+          required: true
+        - label: I confirm that I am using English to submit this report (我已阅读并同意 [Language Policy](https://github.com/fishaudio/fish-speech/issues/515)).
+          required: true
+        - label: "[FOR CHINESE USERS] 请务必使用英文提交 Issue,否则会被关闭。谢谢!:)"
+          required: true
+        - label: "Please do not modify this template :) and fill in all the required fields."
+          required: true
+  - type: dropdown
+    attributes:
+      label: Cloud or Self Hosted
+      multiple: true
+      options:
+        - Cloud
+        - Self Hosted (Docker)
+        - Self Hosted (Source)
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: Steps to reproduce
+      description: We highly suggest including screenshots and a bug report log. Please use the right markdown syntax for code blocks.
+      placeholder: Having detailed steps helps us reproduce the bug.
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: ✔️ Expected Behavior
+      placeholder: What were you expecting?
+    validations:
+      required: false
+
+  - type: textarea
+    attributes:
+      label: ❌ Actual Behavior
+      placeholder: What happened instead?
+    validations:
+      required: false

.github/ISSUE_TEMPLATE/config.yml

ADDED

@@ -0,0 +1,5 @@
+blank_issues_enabled: false
+contact_links:
+  - name: "\U0001F4E7 Discussions"
+    url: https://github.com/fishaudio/fish-speech/discussions
+    about: General discussions and request help from the community

.github/ISSUE_TEMPLATE/feature_request.yml

ADDED

@@ -0,0 +1,40 @@
+name: "⭐ Feature or enhancement request"
+description: Propose something new.
+labels:
+  - enhancement
+body:
+  - type: checkboxes
+    attributes:
+      label: Self Checks
+      description: "To make sure we get to you in time, please check the following :)"
+      options:
+        - label: I have searched for existing issues [search for existing issues]([https://github.com/langgenius/dify/issues](https://github.com/fishaudio/fish-speech/issues)), including closed ones.
+          required: true
+        - label: I confirm that I am using English to submit this report (我已阅读并同意 [Language Policy](https://github.com/fishaudio/fish-speech/issues/515)).
+          required: true
+        - label: "[FOR CHINESE USERS] 请务必使用英文提交 Issue,否则会被关闭。谢谢!:)"
+          required: true
+        - label: "Please do not modify this template :) and fill in all the required fields."
+          required: true
+  - type: textarea
+    attributes:
+      label: 1. Is this request related to a challenge you're experiencing? Tell me about your story.
+      placeholder: Please describe the specific scenario or problem you're facing as clearly as possible. For instance "I was trying to use [feature] for [specific task], and [what happened]... It was frustrating because...."
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: 2. Additional context or comments
+      placeholder: (Any other information, comments, documentations, links, or screenshots that would provide more clarity. This is the place to add anything else not covered above.)
+    validations:
+      required: false
+  - type: checkboxes
+    attributes:
+      label: 3. Can you help us with this feature?
+      description: Let us know! This is not a commitment, but a starting point for collaboration.
+      options:
+        - label: I am interested in contributing to this feature.
+          required: false
+  - type: markdown
+    attributes:
+      value: Please limit one request per issue.

.github/pull_request_template.md

ADDED

@@ -0,0 +1,7 @@
+**Is this PR adding new feature or fix a BUG?**
+
+Add feature / Fix BUG.
+
+**Is this pull request related to any issue? If yes, please link the issue.**
+
+#xxx

.github/workflows/build-docker-image.yml

ADDED

@@ -0,0 +1,70 @@
+name: Build Image
+
+on:
+  push:
+    branches:
+      - main
+    tags:
+      - 'v*'
+
+jobs:
+  build:
+    runs-on: ubuntu-latest-16c64g
+    steps:
+      - uses: actions/checkout@v4
+      - name: Set up Docker Buildx
+        uses: docker/setup-buildx-action@v3
+      - name: Get Version
+        run: |
+          if [[ $GITHUB_REF == refs/tags/v* ]]; then
+            version=$(basename ${GITHUB_REF})
+          else
+            version=nightly
+          fi
+
+          echo "version=${version}" >> $GITHUB_ENV
+          echo "Current version: ${version}"
+
+      - name: Login to Docker Hub
+        uses: docker/login-action@v3
+        with:
+          username: ${{ secrets.DOCKER_USER }}
+          password: ${{ secrets.DOCKER_PAT }}
+
+      - name: Build and Push Image
+        uses: docker/build-push-action@v6
+        with:
+          context: .
+          file: dockerfile
+          platforms: linux/amd64
+          push: true
+          tags: |
+            fishaudio/fish-speech:${{ env.version }}
+            fishaudio/fish-speech:latest
+          outputs: type=image,oci-mediatypes=true,compression=zstd,compression-level=3,force-compression=true
+          cache-from: type=registry,ref=fishaudio/fish-speech:latest
+          cache-to: type=inline
+
+      - name: Build and Push Dev Image
+        uses: docker/build-push-action@v6
+        with:
+          context: .
+          file: dockerfile.dev
+          platforms: linux/amd64
+          push: true
+          build-args: |
+            VERSION=${{ env.version }}
+            BASE_IMAGE=fishaudio/fish-speech:${{ env.version }}
+          tags: |
+            fishaudio/fish-speech:${{ env.version }}-dev
+            fishaudio/fish-speech:latest-dev
+          outputs: type=image,oci-mediatypes=true,compression=zstd,compression-level=3,force-compression=true
+          cache-from: type=registry,ref=fishaudio/fish-speech:latest-dev
+          cache-to: type=inline
+
+      - name: Push README to Dockerhub
+        uses: peter-evans/dockerhub-description@v4
+        with:
+          username: ${{ secrets.DOCKER_USER }}
+          password: ${{ secrets.DOCKER_PAT }}
+          repository: fishaudio/fish-speech
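Review note: the `Get Version` step in this workflow picks the image tag from the pushed ref. A ref matching `refs/tags/v*` keeps its tag name, and anything else becomes `nightly`. The Python sketch below restates that rule so it can be checked outside CI. The helper names `image_version` and `image_tags` are hypothetical and not part of the commit.

```python
import posixpath


def image_version(github_ref: str) -> str:
    """Mirror the workflow's shell test: v* tags keep their name, all else is nightly."""
    if github_ref.startswith("refs/tags/v"):
        # basename of "refs/tags/v1.4.0" is "v1.4.0", as with the shell `basename`
        return posixpath.basename(github_ref)
    return "nightly"


def image_tags(github_ref: str, repo: str = "fishaudio/fish-speech") -> list[str]:
    """List the four tags the two build steps push for a given ref."""
    version = image_version(github_ref)
    return [
        f"{repo}:{version}",
        f"{repo}:latest",
        f"{repo}:{version}-dev",
        f"{repo}:latest-dev",
    ]
```

As the workflow is written, `latest` and `latest-dev` are pushed on every run, so a push to `main` would appear to move `latest` to a nightly build as well.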

.github/workflows/docs.yml

ADDED

@@ -0,0 +1,33 @@
+name: docs
+on:
+  push:
+    branches:
+      - main
+    paths:
+      - 'docs/**'
+      - 'mkdocs.yml'
+
+permissions:
+  contents: write
+
+jobs:
+  deploy:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+      - name: Configure Git Credentials
+        run: |
+          git config user.name github-actions[bot]
+          git config user.email 41898282+github-actions[bot]@users.noreply.github.com
+      - uses: actions/setup-python@v5
+        with:
+          python-version: 3.x
+      - run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV
+      - uses: actions/cache@v4
+        with:
+          key: mkdocs-material-${{ env.cache_id }}
+          path: .cache
+          restore-keys: |
+            mkdocs-material-
+      - run: pip install -r docs/requirements.txt
+      - run: mkdocs gh-deploy --force
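Review note: the docs workflow derives `cache_id` from `date --utc '+%V'`, the ISO 8601 week number, so the `mkdocs-material-` cache key rolls over once a week. The sketch below reproduces that key in Python. The function name `mkdocs_cache_key` is hypothetical and used only for illustration.

```python
from datetime import datetime, timezone


def mkdocs_cache_key(now: datetime) -> str:
    """Build the cache key as the workflow does: fixed prefix plus ISO week number."""
    # isocalendar()[1] is the ISO 8601 week (1..53), the value `date '+%V'` prints
    week = now.astimezone(timezone.utc).isocalendar()[1]
    return f"mkdocs-material-{week:02d}"


if __name__ == "__main__":
    print(mkdocs_cache_key(datetime.now(timezone.utc)))
```

Because the key carries no year, week numbers repeat annually. The `restore-keys` prefix fallback in the workflow still restores the most recent cache when the exact key misses.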

.github/workflows/stale.yml

ADDED

@@ -0,0 +1,25 @@
+name: Close inactive issues
+on:
+  schedule:
+    - cron: "0 0 * * *"
+
+jobs:
+  close-issues:
+    runs-on: ubuntu-latest
+    permissions:
+      issues: write
+      pull-requests: write
+    steps:
+      - uses: actions/stale@v9
+        with:
+          days-before-issue-stale: 30
+          days-before-issue-close: 14
+          stale-issue-label: "stale"
+          stale-issue-message: "This issue is stale because it has been open for 30 days with no activity."
+          close-issue-message: "This issue was closed because it has been inactive for 14 days since being marked as stale."
+          days-before-pr-stale: 30
+          days-before-pr-close: 30
+          stale-pr-label: "stale"
+          stale-pr-message: "This PR is stale because it has been open for 30 days with no activity."
+          close-pr-message: "This PR was closed because it has been inactive for 30 days since being marked as stale."
+          repo-token: ${{ secrets.GITHUB_TOKEN }}
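Review note: the thresholds in this workflow give issues 30 days until they are marked stale plus 14 days until they are closed, and pull requests 30 plus 30. The sketch below turns those numbers into calendar dates. It is an approximation under stated assumptions: the function name `stale_timeline` is hypothetical, and since the job runs once a day at 00:00 UTC the label or closure would land on the first run after each threshold.

```python
from datetime import date, timedelta

# Thresholds copied from the workflow's `with:` block
ISSUE_STALE_DAYS, ISSUE_CLOSE_DAYS = 30, 14
PR_STALE_DAYS, PR_CLOSE_DAYS = 30, 30


def stale_timeline(last_activity: date, is_pr: bool = False) -> tuple[date, date]:
    """Return (earliest stale date, earliest close date) assuming no further activity."""
    stale_after = PR_STALE_DAYS if is_pr else ISSUE_STALE_DAYS
    close_after = PR_CLOSE_DAYS if is_pr else ISSUE_CLOSE_DAYS
    stale_on = last_activity + timedelta(days=stale_after)
    return stale_on, stale_on + timedelta(days=close_after)
```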

.gitignore

ADDED

@@ -0,0 +1,31 @@
+.DS_Store
+.pgx.*
+.pdm-python
+/fish_speech.egg-info
+__pycache__
+/results
+/data
+/*.test.sh
+*.filelist
+filelists
+/fish_speech/text/cmudict_cache.pickle
+/checkpoints
+/.vscode
+/data_server/target
+/*.npy
+/*.wav
+/*.mp3
+/*.lab
+/results
+/data
+/.idea
+ffmpeg.exe
+ffprobe.exe
+asr-label*
+/.cache
+/fishenv
+/.locale
+/demo-audios
+/references
+/example
+/faster_whisper

.pre-commit-config.yaml

ADDED

@@ -0,0 +1,25 @@
+ci:
+  autoupdate_schedule: monthly
+
+repos:
+  - repo: https://github.com/pycqa/isort
+    rev: 5.13.2
+    hooks:
+      - id: isort
+        args: [--profile=black]
+
+  - repo: https://github.com/psf/black
+    rev: 24.8.0
+    hooks:
+      - id: black
+
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v4.6.0
+    hooks:
+      - id: end-of-file-fixer
+      - id: check-yaml
+      - id: check-json
+      - id: mixed-line-ending
+        args: ['--fix=lf']
+      - id: check-added-large-files
+        args: ['--maxkb=5000']

.project-root

ADDED

File without changes

.readthedocs.yaml

ADDED

@@ -0,0 +1,19 @@
+# Read the Docs configuration file for MkDocs projects
+# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details
+
+# Required
+version: 2
+
+# Set the version of Python and other tools you might need
+build:
+  os: ubuntu-22.04
+  tools:
+    python: "3.12"
+
+mkdocs:
+  configuration: mkdocs.yml
+
+# Optionally declare the Python requirements required to build your docs
+python:
+  install:
+    - requirements: docs/requirements.txt

API_FLAGS.txt

ADDED

@@ -0,0 +1,6 @@
+# --infer
+# --api
+--listen 0.0.0.0:8080 \
+--llama-checkpoint-path "checkpoints/fish-speech-1.4" \
+--decoder-checkpoint-path "checkpoints/fish-speech-1.4/firefly-gan-vq-fsq-8x1024-21hz-generator.pth" \
+--decoder-config-name firefly_gan_vq
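Review note: `API_FLAGS.txt` holds command-line flags in a shell-like layout, with `#` comment lines and trailing backslashes as line continuations. The diff shown here does not say how the project reads this file, so the sketch below is only one plausible way to turn such a file into an argument list. The function `parse_flags` is hypothetical and not taken from the repository.

```python
import shlex


def parse_flags(text: str) -> list[str]:
    """Turn a flags file into an argv-style list (assumed format, see note above)."""
    args: list[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and whole-line comments such as "# --api"
        if not line or line.startswith("#"):
            continue
        # Drop a trailing backslash used as a line continuation
        if line.endswith("\\"):
            line = line[:-1].rstrip()
        # shlex handles the double-quoted checkpoint paths
        args.extend(shlex.split(line))
    return args
```

Applied to the six lines added in this commit, this would yield the `--listen`, `--llama-checkpoint-path`, `--decoder-checkpoint-path` and `--decoder-config-name` flags, each followed by its value with the quotes removed.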

Dockerfile

ADDED

@@ -0,0 +1,44 @@
+FROM python:3.12-slim-bookworm AS stage-1
+ARG TARGETARCH
+
+ARG HUGGINGFACE_MODEL=fish-speech-1.4
+ARG HF_ENDPOINT=https://huggingface.co
+
+WORKDIR /opt/fish-speech
+
+RUN set -ex \
+    && pip install huggingface_hub \
+    && HF_ENDPOINT=${HF_ENDPOINT} huggingface-cli download --resume-download fishaudio/${HUGGINGFACE_MODEL} --local-dir checkpoints/${HUGGINGFACE_MODEL}
+
+FROM python:3.12-slim-bookworm
+ARG TARGETARCH
+
+ARG DEPENDENCIES=" \
+    ca-certificates \
+    libsox-dev \
+    ffmpeg"
+
+RUN --mount=type=cache,target=/var/cache/apt,sharing=locked \
+    --mount=type=cache,target=/var/lib/apt,sharing=locked \
+    set -ex \
+    && rm -f /etc/apt/apt.conf.d/docker-clean \
+    && echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' >/etc/apt/apt.conf.d/keep-cache \
+    && apt-get update \
+    && apt-get -y install --no-install-recommends ${DEPENDENCIES} \
+    && echo "no" | dpkg-reconfigure dash
+
+WORKDIR /opt/fish-speech
+
+COPY . .
+
+RUN --mount=type=cache,target=/root/.cache,sharing=locked \
+    set -ex \
+    && pip install -e .[stable]
+
+COPY --from=stage-1 /opt/fish-speech/checkpoints /opt/fish-speech/checkpoints
+
+ENV GRADIO_SERVER_NAME="0.0.0.0"
+
+EXPOSE 7860
+
+CMD ["./entrypoint.sh"]

LICENSE

ADDED

@@ -0,0 +1,437 @@
+Attribution-NonCommercial-ShareAlike 4.0 International
+
+=======================================================================
+
+Creative Commons Corporation ("Creative Commons") is not a law firm and
+does not provide legal services or legal advice. Distribution of
+Creative Commons public licenses does not create a lawyer-client or
+other relationship. Creative Commons makes its licenses and related
+information available on an "as-is" basis. Creative Commons gives no
+warranties regarding its licenses, any material licensed under their
+terms and conditions, or any related information. Creative Commons
+disclaims all liability for damages resulting from their use to the
+fullest extent possible.
+
+Using Creative Commons Public Licenses
+
+Creative Commons public licenses provide a standard set of terms and
+conditions that creators and other rights holders may use to share
+original works of authorship and other material subject to copyright
+and certain other rights specified in the public license below. The
+following considerations are for informational purposes only, are not
+exhaustive, and do not form part of our licenses.
+
+     Considerations for licensors: Our public licenses are
+     intended for use by those authorized to give the public
+     permission to use material in ways otherwise restricted by
+     copyright and certain other rights. Our licenses are
+     irrevocable. Licensors should read and understand the terms
+     and conditions of the license they choose before applying it.
+     Licensors should also secure all rights necessary before
+     applying our licenses so that the public can reuse the
+     material as expected. Licensors should clearly mark any
+     material not subject to the license. This includes other CC-
+     licensed material, or material used under an exception or
+     limitation to copyright. More considerations for licensors:
+     wiki.creativecommons.org/Considerations_for_licensors
+
+     Considerations for the public: By using one of our public
+     licenses, a licensor grants the public permission to use the
+     licensed material under specified terms and conditions. If
+     the licensor's permission is not necessary for any reason--for
+     example, because of any applicable exception or limitation to
+     copyright--then that use is not regulated by the license. Our
+     licenses grant only permissions under copyright and certain
+     other rights that a licensor has authority to grant. Use of
+     the licensed material may still be restricted for other
+     reasons, including because others have copyright or other
+     rights in the material. A licensor may make special requests,
+     such as asking that all changes be marked or described.
+     Although not required by our licenses, you are encouraged to
+     respect those requests where reasonable. More considerations
+     for the public:
+     wiki.creativecommons.org/Considerations_for_licensees
+
+=======================================================================
+
+Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International
+Public License
+
+By exercising the Licensed Rights (defined below), You accept and agree
+to be bound by the terms and conditions of this Creative Commons
+Attribution-NonCommercial-ShareAlike 4.0 International Public License
+("Public License"). To the extent this Public License may be
+interpreted as a contract, You are granted the Licensed Rights in
+consideration of Your acceptance of these terms and conditions, and the
+Licensor grants You such rights in consideration of benefits the
+Licensor receives from making the Licensed Material available under
+these terms and conditions.
+
+
+Section 1 -- Definitions.
+
+  a. Adapted Material means material subject to Copyright and Similar
+     Rights that is derived from or based upon the Licensed Material
+     and in which the Licensed Material is translated, altered,
+     arranged, transformed, or otherwise modified in a manner requiring
+     permission under the Copyright and Similar Rights held by the
+     Licensor. For purposes of this Public License, where the Licensed
+     Material is a musical work, performance, or sound recording,
+     Adapted Material is always produced where the Licensed Material is
+     synched in timed relation with a moving image.
+
+  b. Adapter's License means the license You apply to Your Copyright
+     and Similar Rights in Your contributions to Adapted Material in
+     accordance with the terms and conditions of this Public License.
+
+  c. BY-NC-SA Compatible License means a license listed at
+     creativecommons.org/compatiblelicenses, approved by Creative
+     Commons as essentially the equivalent of this Public License.
+
+  d. Copyright and Similar Rights means copyright and/or similar rights
+     closely related to copyright including, without limitation,
+     performance, broadcast, sound recording, and Sui Generis Database
+     Rights, without regard to how the rights are labeled or
+     categorized. For purposes of this Public License, the rights
+     specified in Section 2(b)(1)-(2) are not Copyright and Similar
+     Rights.
+
+  e. Effective Technological Measures means those measures that, in the
+     absence of proper authority, may not be circumvented under laws
+     fulfilling obligations under Article 11 of the WIPO Copyright
+     Treaty adopted on December 20, 1996, and/or similar international
+     agreements.
+
+  f. Exceptions and Limitations means fair use, fair dealing, and/or
+     any other exception or limitation to Copyright and Similar Rights
+     that applies to Your use of the Licensed Material.
+
+  g. License Elements means the license attributes listed in the name
+     of a Creative Commons Public License. The License Elements of this
+     Public License are Attribution, NonCommercial, and ShareAlike.
+
+  h. Licensed Material means the artistic or literary work, database,
+     or other material to which the Licensor applied this Public
+     License.
+
+  i. Licensed Rights means the rights granted to You subject to the
+     terms and conditions of this Public License, which are limited to
+     all Copyright and Similar Rights that apply to Your use of the
+     Licensed Material and that the Licensor has authority to license.
+
+  j. Licensor means the individual(s) or entity(ies) granting rights
+     under this Public License.
+
+  k. NonCommercial means not primarily intended for or directed towards
+     commercial advantage or monetary compensation. For purposes of
+     this Public License, the exchange of the Licensed Material for
+     other material subject to Copyright and Similar Rights by digital
+     file-sharing or similar means is NonCommercial provided there is
+     no payment of monetary compensation in connection with the
+     exchange.
+
+  l. Share means to provide material to the public by any means or
+     process that requires permission under the Licensed Rights, such
+     as reproduction, public display, public performance, distribution,
+     dissemination, communication, or importation, and to make material
+     available to the public including in ways that members of the
+     public may access the material from a place and at a time
+     individually chosen by them.
+
+  m. Sui Generis Database Rights means rights other than copyright
+     resulting from Directive 96/9/EC of the European Parliament and of
+     the Council of 11 March 1996 on the legal protection of databases,
+     as amended and/or succeeded, as well as other essentially
+     equivalent rights anywhere in the world.
+
+  n. You means the individual or entity exercising the Licensed Rights

|

| 148 |

+

under this Public License. Your has a corresponding meaning.

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

Section 2 -- Scope.

  a. License grant.

       1. Subject to the terms and conditions of this Public License,
          the Licensor hereby grants You a worldwide, royalty-free,
          non-sublicensable, non-exclusive, irrevocable license to
          exercise the Licensed Rights in the Licensed Material to:

            a. reproduce and Share the Licensed Material, in whole or
               in part, for NonCommercial purposes only; and

            b. produce, reproduce, and Share Adapted Material for
               NonCommercial purposes only.

       2. Exceptions and Limitations. For the avoidance of doubt, where
          Exceptions and Limitations apply to Your use, this Public
          License does not apply, and You do not need to comply with
          its terms and conditions.

       3. Term. The term of this Public License is specified in Section
          6(a).

       4. Media and formats; technical modifications allowed. The
          Licensor authorizes You to exercise the Licensed Rights in
          all media and formats whether now known or hereafter created,
          and to make technical modifications necessary to do so. The
          Licensor waives and/or agrees not to assert any right or
          authority to forbid You from making technical modifications
          necessary to exercise the Licensed Rights, including
          technical modifications necessary to circumvent Effective
          Technological Measures. For purposes of this Public License,
          simply making modifications authorized by this Section 2(a)
          (4) never produces Adapted Material.

       5. Downstream recipients.

            a. Offer from the Licensor -- Licensed Material. Every
               recipient of the Licensed Material automatically
               receives an offer from the Licensor to exercise the
               Licensed Rights under the terms and conditions of this
               Public License.

            b. Additional offer from the Licensor -- Adapted Material.
               Every recipient of Adapted Material from You
               automatically receives an offer from the Licensor to
               exercise the Licensed Rights in the Adapted Material
               under the conditions of the Adapter's License You apply.

            c. No downstream restrictions. You may not offer or impose
               any additional or different terms or conditions on, or
               apply any Effective Technological Measures to, the
               Licensed Material if doing so restricts exercise of the
               Licensed Rights by any recipient of the Licensed
               Material.

       6. No endorsement. Nothing in this Public License constitutes or
          may be construed as permission to assert or imply that You
          are, or that Your use of the Licensed Material is, connected
          with, or sponsored, endorsed, or granted official status by,
          the Licensor or others designated to receive attribution as
          provided in Section 3(a)(1)(A)(i).

  b. Other rights.

       1. Moral rights, such as the right of integrity, are not
          licensed under this Public License, nor are publicity,
          privacy, and/or other similar personality rights; however, to
          the extent possible, the Licensor waives and/or agrees not to
          assert any such rights held by the Licensor to the limited
          extent necessary to allow You to exercise the Licensed
          Rights, but not otherwise.

       2. Patent and trademark rights are not licensed under this
          Public License.

       3. To the extent possible, the Licensor waives any right to
          collect royalties from You for the exercise of the Licensed
          Rights, whether directly or through a collecting society
          under any voluntary or waivable statutory or compulsory
          licensing scheme. In all other cases the Licensor expressly
          reserves any right to collect such royalties, including when
          the Licensed Material is used other than for NonCommercial
          purposes.

Section 3 -- License Conditions.

Your exercise of the Licensed Rights is expressly made subject to the
following conditions.

  a. Attribution.

       1. If You Share the Licensed Material (including in modified
          form), You must:

            a. retain the following if it is supplied by the Licensor
               with the Licensed Material:

                 i. identification of the creator(s) of the Licensed
                    Material and any others designated to receive
                    attribution, in any reasonable manner requested by
                    the Licensor (including by pseudonym if
                    designated);

                ii. a copyright notice;

               iii. a notice that refers to this Public License;

                iv. a notice that refers to the disclaimer of
                    warranties;

                 v. a URI or hyperlink to the Licensed Material to the
                    extent reasonably practicable;

            b. indicate if You modified the Licensed Material and
               retain an indication of any previous modifications; and

            c. indicate the Licensed Material is licensed under this
               Public License, and include the text of, or the URI or
               hyperlink to, this Public License.

       2. You may satisfy the conditions in Section 3(a)(1) in any
          reasonable manner based on the medium, means, and context in
          which You Share the Licensed Material. For example, it may be
          reasonable to satisfy the conditions by providing a URI or
          hyperlink to a resource that includes the required
          information.
       3. If requested by the Licensor, You must remove any of the
          information required by Section 3(a)(1)(A) to the extent
          reasonably practicable.

  b. ShareAlike.

     In addition to the conditions in Section 3(a), if You Share
     Adapted Material You produce, the following conditions also apply.

       1. The Adapter's License You apply must be a Creative Commons
          license with the same License Elements, this version or
          later, or a BY-NC-SA Compatible License.

       2. You must include the text of, or the URI or hyperlink to, the
          Adapter's License You apply. You may satisfy this condition
          in any reasonable manner based on the medium, means, and
          context in which You Share Adapted Material.

       3. You may not offer or impose any additional or different terms
          or conditions on, or apply any Effective Technological
          Measures to, Adapted Material that restrict exercise of the
          rights granted under the Adapter's License You apply.

Section 4 -- Sui Generis Database Rights.

Where the Licensed Rights include Sui Generis Database Rights that
apply to Your use of the Licensed Material:

  a. for the avoidance of doubt, Section 2(a)(1) grants You the right
     to extract, reuse, reproduce, and Share all or a substantial
     portion of the contents of the database for NonCommercial purposes
     only;

  b. if You include all or a substantial portion of the database
     contents in a database in which You have Sui Generis Database
     Rights, then the database in which You have Sui Generis Database
     Rights (but not its individual contents) is Adapted Material,
     including for purposes of Section 3(b); and

  c. You must comply with the conditions in Section 3(a) if You Share
     all or a substantial portion of the contents of the database.

For the avoidance of doubt, this Section 4 supplements and does not
replace Your obligations under this Public License where the Licensed
Rights include other Copyright and Similar Rights.

Section 5 -- Disclaimer of Warranties and Limitation of Liability.

  a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE
     EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS
     AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF
     ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,
     IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,
     WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR
     PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,
     ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT
     KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT
     ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.

  b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE
     TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,
     NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,
     INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,
     COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR
     USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN
     ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR
     DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR
     IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.

  c. The disclaimer of warranties and limitation of liability provided
     above shall be interpreted in a manner that, to the extent
     possible, most closely approximates an absolute disclaimer and
     waiver of all liability.

Section 6 -- Term and Termination.

  a. This Public License applies for the term of the Copyright and
     Similar Rights licensed here. However, if You fail to comply with
     this Public License, then Your rights under this Public License
     terminate automatically.

  b. Where Your right to use the Licensed Material has terminated under
     Section 6(a), it reinstates:

       1. automatically as of the date the violation is cured, provided
          it is cured within 30 days of Your discovery of the
          violation; or

       2. upon express reinstatement by the Licensor.

     For the avoidance of doubt, this Section 6(b) does not affect any
     right the Licensor may have to seek remedies for Your violations
     of this Public License.

  c. For the avoidance of doubt, the Licensor may also offer the
     Licensed Material under separate terms or conditions or stop
     distributing the Licensed Material at any time; however, doing so
     will not terminate this Public License.

  d. Sections 1, 5, 6, 7, and 8 survive termination of this Public
     License.

Section 7 -- Other Terms and Conditions.

  a. The Licensor shall not be bound by any additional or different
     terms or conditions communicated by You unless expressly agreed.

  b. Any arrangements, understandings, or agreements regarding the
     Licensed Material not stated herein are separate from and
     independent of the terms and conditions of this Public License.

Section 8 -- Interpretation.

  a. For the avoidance of doubt, this Public License does not, and
     shall not be interpreted to, reduce, limit, restrict, or impose
     conditions on any use of the Licensed Material that could lawfully
     be made without permission under this Public License.

  b. To the extent possible, if any provision of this Public License is
     deemed unenforceable, it shall be automatically reformed to the
     minimum extent necessary to make it enforceable. If the provision