Commit: 1353ea1

Parent(s): 763d1d7

Improve UI

Files changed:

- app.py +4 -13

- assets/azimuth.jpg +0 -0

app.py

CHANGED

|

@@ -26,10 +26,11 @@ _DESCRIPTION = '''

|

|

| 26 |

Given a single-view image and select a target azimuth, ControlNet + SyncDreamer is able to generate the target view

|

| 27 |

|

| 28 |

This HF app is modified from [SyncDreamer HF app](https://huggingface.co/spaces/liuyuan-pal/SyncDreamer). The difference is that I added ControlNet on top of SyncDreamer.

|

|

|

|

| 29 |

'''

|

| 30 |

_USER_GUIDE0 = "Step1: Please upload an image in the block above (or choose an example shown in the left)."

|

| 31 |

-

_USER_GUIDE2 = "Step2: Please choose a **

|

| 32 |

-

_USER_GUIDE3 = "Generated

|

| 33 |

|

| 34 |

others = '''**Step 1**. Select "Crop size" and click "Crop it". ==> The foreground object is centered and resized. </br>'''

|

| 35 |

|

|

@@ -58,7 +59,6 @@ class BackgroundRemoval:

|

|

| 58 |

|

| 59 |

@torch.no_grad()

|

| 60 |

def __call__(self, image):

|

| 61 |

-

# image: [H, W, 3] array in [0, 255].

|

| 62 |

image = self.interface([image])[0]

|

| 63 |

return image

|

| 64 |

|

|

@@ -93,19 +93,16 @@ def sam_predict(predictor, removal, raw_im):

|

|

| 93 |

y_min = int(y_nonzero[0].min())

|

| 94 |

x_max = int(x_nonzero[0].max())

|

| 95 |

y_max = int(y_nonzero[0].max())

|

| 96 |

-

# image_nobg.save('./nobg.png')

|

| 97 |

|

| 98 |

image_nobg.thumbnail([512, 512], Image.Resampling.LANCZOS)

|

| 99 |

image_sam = sam_out_nosave(predictor, image_nobg.convert("RGB"), (x_min, y_min, x_max, y_max))

|

| 100 |

|

| 101 |

-

# imsave('./mask.png', np.asarray(image_sam)[:,:,3]*255)

|

| 102 |

image_sam = np.asarray(image_sam, np.float32) / 255

|

| 103 |

out_mask = image_sam[:, :, 3:]

|

| 104 |

out_rgb = image_sam[:, :, :3] * out_mask + 1 - out_mask

|

| 105 |

out_img = (np.concatenate([out_rgb, out_mask], 2) * 255).astype(np.uint8)

|

| 106 |

|

| 107 |

image_sam = Image.fromarray(out_img, mode='RGBA')

|

| 108 |

-

# image_sam.save('./output.png')

|

| 109 |

torch.cuda.empty_cache()

|

| 110 |

return image_sam

|

| 111 |

else:

|

|

@@ -186,16 +183,12 @@ def run_demo():

|

|

| 186 |

with gr.Column(scale=0.8):

|

| 187 |

sam_block = gr.Image(type='pil', image_mode='RGBA', label="SAM output", height=256, interactive=False)

|

| 188 |

crop_size.render()

|

| 189 |

-

fig1 = gr.Image(value=Image.open('assets/

|

| 190 |

|

| 191 |

with gr.Column(scale=0.8):

|

| 192 |

input_block = gr.Image(type='pil', image_mode='RGBA', label="Input to SyncDreamer", height=256, interactive=False)

|

| 193 |

azimuth.render()

|

| 194 |

with gr.Accordion('Advanced options', open=False):

|

| 195 |

-

cfg_scale = gr.Slider(1.0, 5.0, 2.0, step=0.1, label='Classifier free guidance', interactive=True)

|

| 196 |

-

sample_num = gr.Slider(1, 2, 1, step=1, label='Sample num', interactive=False, info='How many instance (16 images per instance)')

|

| 197 |

-

sample_steps = gr.Slider(10, 300, 50, step=10, label='Sample steps', interactive=False)

|

| 198 |

-

batch_view_num = gr.Slider(1, 16, 16, step=1, label='Batch num', interactive=True)

|

| 199 |

seed = gr.Number(6033, label='Random seed', interactive=True)

|

| 200 |

run_btn = gr.Button('Run generation', variant='primary', interactive=True)

|

| 201 |

|

|

@@ -216,8 +209,6 @@ def run_demo():

|

|

| 216 |

|

| 217 |

crop_size.change(fn=resize_inputs, inputs=[sam_block, crop_size], outputs=[input_block], queue=True)\

|

| 218 |

.success(fn=partial(update_guide, _USER_GUIDE2), outputs=[guide_text], queue=False)

|

| 219 |

-

# crop_btn.click(fn=resize_inputs, inputs=[sam_block, crop_size], outputs=[input_block], queue=False)\

|

| 220 |

-

# .success(fn=partial(update_guide, _USER_GUIDE2), outputs=[guide_text], queue=False)

|

| 221 |

|

| 222 |

run_btn.click(partial(generate, pipe), inputs=[input_block, azimuth], outputs=[output_block], queue=True)\

|

| 223 |

.success(fn=partial(update_guide, _USER_GUIDE3), outputs=[guide_text], queue=False)

|

|

|

|

| 26 |

Given a single-view image and select a target azimuth, ControlNet + SyncDreamer is able to generate the target view

|

| 27 |

|

| 28 |

This HF app is modified from [SyncDreamer HF app](https://huggingface.co/spaces/liuyuan-pal/SyncDreamer). The difference is that I added ControlNet on top of SyncDreamer.

|

| 29 |

+

In addition, the azimuths of both input and output images are both assumed to be 30 degrees.

|

| 30 |

'''

|

| 31 |

_USER_GUIDE0 = "Step1: Please upload an image in the block above (or choose an example shown in the left)."

|

| 32 |

+

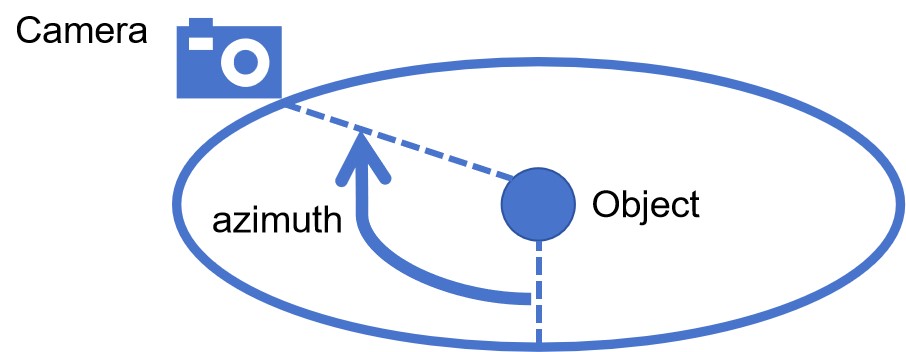

_USER_GUIDE2 = "Step2: Please choose a **Target azimuth** and click **Run Generation**. The **Target azimuth** is the azimuth of the output image relative to the input image in clockwise. This costs about 30s."

|

| 33 |

+

_USER_GUIDE3 = "Generated output image of the target view is shown below! (You may adjust the **Crop size** and **Target azimuth** to get another result!)"

|

| 34 |

|

| 35 |

others = '''**Step 1**. Select "Crop size" and click "Crop it". ==> The foreground object is centered and resized. </br>'''

|

| 36 |

|

|

|

|

| 59 |

|

| 60 |

@torch.no_grad()

|

| 61 |

def __call__(self, image):

|

|

|

|

| 62 |

image = self.interface([image])[0]

|

| 63 |

return image

|

| 64 |

|

|

|

|

| 93 |

y_min = int(y_nonzero[0].min())

|

| 94 |

x_max = int(x_nonzero[0].max())

|

| 95 |

y_max = int(y_nonzero[0].max())

|

|

|

|

| 96 |

|

| 97 |

image_nobg.thumbnail([512, 512], Image.Resampling.LANCZOS)

|

| 98 |

image_sam = sam_out_nosave(predictor, image_nobg.convert("RGB"), (x_min, y_min, x_max, y_max))

|

| 99 |

|

|

|

|

| 100 |

image_sam = np.asarray(image_sam, np.float32) / 255

|

| 101 |

out_mask = image_sam[:, :, 3:]

|

| 102 |

out_rgb = image_sam[:, :, :3] * out_mask + 1 - out_mask

|

| 103 |

out_img = (np.concatenate([out_rgb, out_mask], 2) * 255).astype(np.uint8)

|

| 104 |

|

| 105 |

image_sam = Image.fromarray(out_img, mode='RGBA')

|

|

|

|

| 106 |

torch.cuda.empty_cache()

|

| 107 |

return image_sam

|

| 108 |

else:

|

|

|

|

| 183 |

with gr.Column(scale=0.8):

|

| 184 |

sam_block = gr.Image(type='pil', image_mode='RGBA', label="SAM output", height=256, interactive=False)

|

| 185 |

crop_size.render()

|

| 186 |

+

fig1 = gr.Image(value=Image.open('assets/azimuth.jpg'), type='pil', image_mode='RGB', height=256, show_label=False, tool=None, interactive=False)

|

| 187 |

|

| 188 |

with gr.Column(scale=0.8):

|

| 189 |

input_block = gr.Image(type='pil', image_mode='RGBA', label="Input to SyncDreamer", height=256, interactive=False)

|

| 190 |

azimuth.render()

|

| 191 |

with gr.Accordion('Advanced options', open=False):

|

|

|

|

|

|

|

|

|

|

|

|

|

| 192 |

seed = gr.Number(6033, label='Random seed', interactive=True)

|

| 193 |

run_btn = gr.Button('Run generation', variant='primary', interactive=True)

|

| 194 |

|

|

|

|

| 209 |

|

| 210 |

crop_size.change(fn=resize_inputs, inputs=[sam_block, crop_size], outputs=[input_block], queue=True)\

|

| 211 |

.success(fn=partial(update_guide, _USER_GUIDE2), outputs=[guide_text], queue=False)

|

|

|

|

|

|

|

| 212 |

|

| 213 |

run_btn.click(partial(generate, pipe), inputs=[input_block, azimuth], outputs=[output_block], queue=True)\

|

| 214 |

.success(fn=partial(update_guide, _USER_GUIDE3), outputs=[guide_text], queue=False)

|
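The lines kept in `sam_predict` blend the segmented RGBA image onto a white background before returning it. As a rough standalone sketch of that step, assuming an `[H, W, 4]` uint8 input: the function name `composite_on_white` and the two sample pixels are illustrative only and do not appear in app.py.

```python
import numpy as np


def composite_on_white(rgba_u8: np.ndarray) -> np.ndarray:
    """Blend an [H, W, 4] uint8 RGBA image onto white, keeping the alpha channel."""
    rgba = np.asarray(rgba_u8, np.float32) / 255   # scale all channels to [0, 1]
    mask = rgba[:, :, 3:]                          # [H, W, 1]; slicing keeps the axis for broadcasting
    rgb = rgba[:, :, :3] * mask + 1 - mask         # alpha * color + (1 - alpha) * white
    return (np.concatenate([rgb, mask], 2) * 255).astype(np.uint8)


# Hypothetical 1x2 image: one opaque red pixel and one fully transparent pixel.
demo = np.array([[[255, 0, 0, 255], [10, 20, 30, 0]]], dtype=np.uint8)
result = composite_on_white(demo)
```

The opaque pixel passes through unchanged, while the transparent pixel's color is replaced by white and its alpha stays 0. Slicing with `3:` rather than indexing with `3` is what lets the mask broadcast against the three color channels.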

assets/azimuth.jpg ADDED
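The event wiring in `run_demo` passes `partial(update_guide, _USER_GUIDE2)` to `.success(...)` without an `inputs` list, so Gradio calls the handler with no arguments and the guide text must already be bound. The sketch below shows only that binding with the standard library. The body of `update_guide` and the string `GUIDE_STEP2` are stand-ins assumed for illustration, since the diff does not show the real definitions.

```python
from functools import partial


def update_guide(text):
    # Assumed behavior: return the new value for the guide text component.
    return text


# Placeholder text, not the actual _USER_GUIDE2 string from app.py.
GUIDE_STEP2 = "Step2: choose a target azimuth and run generation."

# partial() fixes the first positional argument, producing a zero-argument
# callable that can be used as an event handler without declared inputs.
show_step2 = partial(update_guide, GUIDE_STEP2)
message = show_step2()
```

In Gradio, a handler chained with `.success(...)` runs only when the preceding event finishes without raising, so the guide advances only after the crop or generation step actually succeeds.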