gradio app: support chatting with llama-2

Browse files- .env.example +2 -2

- Makefile +5 -2

- README.md +120 -1

- app.py +33 -19

- app_modules/llm_loader.py +34 -14

- assets/Open Source LLMs.png +0 -0

- assets/Workflow-Overview.png +0 -0

- data/questions.txt +1 -0

- test.py +67 -147

- unit_test.py +183 -0

.env.example

CHANGED

|

@@ -25,7 +25,7 @@ HF_PIPELINE_DEVICE_TYPE=

|

|

| 25 |

# LOAD_QUANTIZED_MODEL=4bit

|

| 26 |

# LOAD_QUANTIZED_MODEL=8bit

|

| 27 |

|

| 28 |

-

USE_LLAMA_2_PROMPT_TEMPLATE=true

|

| 29 |

DISABLE_MODEL_PRELOADING=true

|

| 30 |

CHAT_HISTORY_ENABLED=true

|

| 31 |

SHOW_PARAM_SETTINGS=false

|

|

@@ -84,7 +84,7 @@ TOKENIZERS_PARALLELISM=true

|

|

| 84 |

|

| 85 |

# env variables for ingesting source PDF files

|

| 86 |

SOURCE_PDFS_PATH="./data/pdfs/"

|

| 87 |

-

SOURCE_URLS=

|

| 88 |

CHUNCK_SIZE=1024

|

| 89 |

CHUNK_OVERLAP=512

|

| 90 |

|

|

|

|

| 25 |

# LOAD_QUANTIZED_MODEL=4bit

|

| 26 |

# LOAD_QUANTIZED_MODEL=8bit

|

| 27 |

|

| 28 |

+

# USE_LLAMA_2_PROMPT_TEMPLATE=true

|

| 29 |

DISABLE_MODEL_PRELOADING=true

|

| 30 |

CHAT_HISTORY_ENABLED=true

|

| 31 |

SHOW_PARAM_SETTINGS=false

|

|

|

|

| 84 |

|

| 85 |

# env variables for ingesting source PDF files

|

| 86 |

SOURCE_PDFS_PATH="./data/pdfs/"

|

| 87 |

+

SOURCE_URLS=

|

| 88 |

CHUNCK_SIZE=1024

|

| 89 |

CHUNK_OVERLAP=512

|

| 90 |

|

Makefile

CHANGED

|

@@ -1,7 +1,7 @@

|

|

| 1 |

.PHONY: start

|

| 2 |

start:

|

| 3 |

python app.py

|

| 4 |

-

|

| 5 |

serve:

|

| 6 |

ifeq ("$(PORT)", "")

|

| 7 |

JINA_HIDE_SURVEY=1 TRANSFORMERS_OFFLINE=1 python -m lcserve deploy local server

|

|

@@ -10,11 +10,14 @@ else

|

|

| 10 |

endif

|

| 11 |

|

| 12 |

test:

|

| 13 |

-

python test.py

|

| 14 |

|

| 15 |

chat:

|

| 16 |

python test.py chat

|

| 17 |

|

|

|

|

|

|

|

|

|

|

| 18 |

tele:

|

| 19 |

python telegram_bot.py

|

| 20 |

|

|

|

|

| 1 |

.PHONY: start

|

| 2 |

start:

|

| 3 |

python app.py

|

| 4 |

+

|

| 5 |

serve:

|

| 6 |

ifeq ("$(PORT)", "")

|

| 7 |

JINA_HIDE_SURVEY=1 TRANSFORMERS_OFFLINE=1 python -m lcserve deploy local server

|

|

|

|

| 10 |

endif

|

| 11 |

|

| 12 |

test:

|

| 13 |

+

python test.py

|

| 14 |

|

| 15 |

chat:

|

| 16 |

python test.py chat

|

| 17 |

|

| 18 |

+

unittest:

|

| 19 |

+

python unit_test.py $(TEST)

|

| 20 |

+

|

| 21 |

tele:

|

| 22 |

python telegram_bot.py

|

| 23 |

|

README.md

CHANGED

|

@@ -8,7 +8,126 @@ sdk_version: 3.36.1

|

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

| 10 |

license: apache-2.0

|

| 11 |

-

duplicated_from: inflaton/chat-with-pci-dss-v4

|

| 12 |

---

|

| 13 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 14 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

| 10 |

license: apache-2.0

|

|

|

|

| 11 |

---

|

| 12 |

|

| 13 |

+

# ChatPDF - Talk to Your PDF Files

|

| 14 |

+

|

| 15 |

+

This project uses Open AI and open-source large language models (LLMs) to enable you to talk to your own PDF files.

|

| 16 |

+

|

| 17 |

+

## How it works

|

| 18 |

+

|

| 19 |

+

We're using an AI design pattern, namely "in-context learning" which uses LLMs off the shelf (i.e., without any fine-tuning), then controls their behavior through clever prompting and conditioning on private “contextual” data, e.g., texts extracted from your PDF files.

|

| 20 |

+

|

| 21 |

+

At a very high level, the workflow can be divided into three stages:

|

| 22 |

+

|

| 23 |

+

1. Data preprocessing / embedding: This stage involves storing private data (your PDF files) to be retrieved later. Typically, the documents are broken into chunks, passed through an embedding model, then stored the created embeddings in a vectorstore.

|

| 24 |

+

|

| 25 |

+

2. Prompt construction / retrieval: When a user submits a query, the application constructs a series of prompts to submit to the language model. A compiled prompt typically combines a prompt template and a set of relevant documents retrieved from the vectorstore.

|

| 26 |

+

|

| 27 |

+

3. Prompt execution / inference: Once the prompts have been compiled, they are submitted to a pre-trained LLM for inference—including both proprietary model APIs and open-source or self-trained models.

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

Tech stack used includes LangChain, Gradio, Chroma and FAISS.

|

| 32 |

+

- LangChain is an open-source framework that makes it easier to build scalable AI/LLM apps and chatbots.

|

| 33 |

+

- Gradio is an open-source Python library that is used to build machine learning and data science demos and web applications.

|

| 34 |

+

- Chroma and FAISS are open-source vectorstores for storing embeddings for your files.

|

| 35 |

+

|

| 36 |

+

## Running Locally

|

| 37 |

+

|

| 38 |

+

1. Check pre-conditions:

|

| 39 |

+

|

| 40 |

+

- [Git Large File Storage (LFS)](https://git-lfs.com/) must have been installed.

|

| 41 |

+

- Run `python --version` to make sure you're running Python version 3.10 or above.

|

| 42 |

+

- The latest PyTorch with GPU support must have been installed. Here is a sample `conda` command:

|

| 43 |

+

```

|

| 44 |

+

conda install -y pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

|

| 45 |

+

```

|

| 46 |

+

- [CMake](https://cmake.org/) must have been installed. Here is a sample command to install `CMake` on `ubuntu`:

|

| 47 |

+

```

|

| 48 |

+

sudo apt install cmake

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

2. Clone the repo

|

| 52 |

+

|

| 53 |

+

```

|

| 54 |

+

git lfs install

|

| 55 |

+

git clone https://huggingface.co/spaces/inflaton/learn-ai

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

3. Install packages

|

| 60 |

+

|

| 61 |

+

```

|

| 62 |

+

pip install -U -r requirements.txt

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

4. Set up your environment variables

|

| 66 |

+

|

| 67 |

+

- By default, environment variables are loaded `.env.example` file

|

| 68 |

+

- If you don't want to use the default settings, copy `.env.example` into `.env`. Your can then update it for your local runs.

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

5. Start the local server at `http://localhost:7860`:

|

| 72 |

+

|

| 73 |

+

```

|

| 74 |

+

python app.py

|

| 75 |

+

```

|

| 76 |

+

|

| 77 |

+

## Duplicate This Space

|

| 78 |

+

|

| 79 |

+

Duplicate this HuggingFace Space from the UI or click the following link:

|

| 80 |

+

|

| 81 |

+

- [Duplicate this space](https://huggingface.co/spaces/inflaton/learn-ai?duplicate=true)

|

| 82 |

+

|

| 83 |

+

Once duplicated, you can set up environment variables from the space settings. The values there will take precedence of those in `.env.example`.

|

| 84 |

+

|

| 85 |

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

| 86 |

+

|

| 87 |

+

## Talk to Your Own PDF Files

|

| 88 |

+

|

| 89 |

+

- The sample PDF books & documents are downloaded from the internet (for AI Books) and [PCI DSS official website](https://www.pcisecuritystandards.org/document_library/?category=pcidss) and the corresponding embeddings are stored in folders `data/ai_books` and `data/pci_dss_v4` respectively, which allows you to run locally without any additional effort.

|

| 90 |

+

|

| 91 |

+

- You can also put your own PDF files into any folder specified in `SOURCE_PDFS_PATH` and run the command below to generate embeddings which will be stored in folder `FAISS_INDEX_PATH` or `CHROMADB_INDEX_PATH`. If both `*_INDEX_PATH` env vars are set, `FAISS_INDEX_PATH` takes precedence. Make sure the folder specified by `*_INDEX_PATH` doesn't exist; other wise the command will simply try to load index from the folder and do a simple similarity search, as a way to verify if embeddings are generated and stored properly. Please note the HuggingFace Embedding model specified by `HF_EMBEDDINGS_MODEL_NAME` will be used to generate the embeddings.

|

| 92 |

+

|

| 93 |

+

```

|

| 94 |

+

python ingest.py

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

- Once embeddings are generated, you can test them out locally, or check them into your duplicated space. Please note HF Spaces git server does not allow PDF files to be checked in.

|

| 98 |

+

|

| 99 |

+

## Play with Different Large Language Models

|

| 100 |

+

|

| 101 |

+

The source code supports different LLM types - as shown at the top of `.env.example`

|

| 102 |

+

|

| 103 |

+

```

|

| 104 |

+

# LLM_MODEL_TYPE=openai

|

| 105 |

+

# LLM_MODEL_TYPE=gpt4all-j

|

| 106 |

+

# LLM_MODEL_TYPE=gpt4all

|

| 107 |

+

# LLM_MODEL_TYPE=llamacpp

|

| 108 |

+

LLM_MODEL_TYPE=huggingface

|

| 109 |

+

# LLM_MODEL_TYPE=mosaicml

|

| 110 |

+

# LLM_MODEL_TYPE=stablelm

|

| 111 |

+

# LLM_MODEL_TYPE=openllm

|

| 112 |

+

# LLM_MODEL_TYPE=hftgi

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

- By default, the app runs `lmsys/fastchat-t5-3b-v1.0` model with HF Transformers, which works well with most PCs/laptops with 32GB or more RAM, without any GPU. It also works on HF Spaces with their free-tier: 2 vCPU, 16GB RAM and 500GB hard disk, though the inference speed is very slow.

|

| 116 |

+

|

| 117 |

+

- Uncomment/comment the above to play with different LLM types. You may also want to update other related env vars. E.g., here's the list of HF models which have been tested with the code:

|

| 118 |

+

|

| 119 |

+

```

|

| 120 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="databricks/dolly-v2-3b"

|

| 121 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="databricks/dolly-v2-7b"

|

| 122 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="databricks/dolly-v2-12b"

|

| 123 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="TheBloke/wizardLM-7B-HF"

|

| 124 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="TheBloke/vicuna-7B-1.1-HF"

|

| 125 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="nomic-ai/gpt4all-j"

|

| 126 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="nomic-ai/gpt4all-falcon"

|

| 127 |

+

HUGGINGFACE_MODEL_NAME_OR_PATH="lmsys/fastchat-t5-3b-v1.0"

|

| 128 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="meta-llama/Llama-2-7b-chat-hf"

|

| 129 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="meta-llama/Llama-2-13b-chat-hf"

|

| 130 |

+

# HUGGINGFACE_MODEL_NAME_OR_PATH="meta-llama/Llama-2-70b-chat-hf"

|

| 131 |

+

```

|

| 132 |

+

|

| 133 |

+

The script `test.sh` automates running different LLMs and records the outputs in `data/logs` folder which currently contains a few log files created by previous test runs on Nvidia GeForce RTX 4090, A40 and L40 GPUs.

|

app.py

CHANGED

|

@@ -8,15 +8,21 @@ import gradio as gr

|

|

| 8 |

from anyio.from_thread import start_blocking_portal

|

| 9 |

|

| 10 |

from app_modules.init import app_init

|

|

|

|

| 11 |

from app_modules.utils import print_llm_response, remove_extra_spaces

|

| 12 |

|

| 13 |

llm_loader, qa_chain = app_init()

|

| 14 |

|

| 15 |

-

chat_history_enabled = os.environ.get("CHAT_HISTORY_ENABLED") == "true"

|

| 16 |

show_param_settings = os.environ.get("SHOW_PARAM_SETTINGS") == "true"

|

| 17 |

share_gradio_app = os.environ.get("SHARE_GRADIO_APP") == "true"

|

| 18 |

-

|

| 19 |

using_openai = os.environ.get("LLM_MODEL_TYPE") == "openai"

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 20 |

model = (

|

| 21 |

"OpenAI GPT-3.5"

|

| 22 |

if using_openai

|

|

@@ -28,7 +34,13 @@ href = (

|

|

| 28 |

else f"https://huggingface.co/{model}"

|

| 29 |

)

|

| 30 |

|

| 31 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

|

| 33 |

description_top = f"""\

|

| 34 |

<div align="left">

|

|

@@ -42,7 +54,7 @@ The demo is built on <a href="https://github.com/hwchase17/langchain">LangChain<

|

|

| 42 |

</div>

|

| 43 |

"""

|

| 44 |

|

| 45 |

-

CONCURRENT_COUNT =

|

| 46 |

|

| 47 |

|

| 48 |

def qa(chatbot):

|

|

@@ -53,9 +65,10 @@ def qa(chatbot):

|

|

| 53 |

|

| 54 |

def task(question, chat_history):

|

| 55 |

start = timer()

|

| 56 |

-

|

| 57 |

-

|

| 58 |

-

|

|

|

|

| 59 |

end = timer()

|

| 60 |

|

| 61 |

print(f"Completed in {end - start:.3f}s")

|

|

@@ -93,17 +106,18 @@ def qa(chatbot):

|

|

| 93 |

|

| 94 |

count -= 1

|

| 95 |

|

| 96 |

-

|

| 97 |

-

|

| 98 |

-

|

| 99 |

-

|

| 100 |

-

|

| 101 |

-

|

| 102 |

-

|

| 103 |

-

|

| 104 |

-

|

| 105 |

-

titles

|

| 106 |

-

|

|

|

|

| 107 |

|

| 108 |

yield chatbot

|

| 109 |

|

|

@@ -195,5 +209,5 @@ with gr.Blocks(css=customCSS) as demo:

|

|

| 195 |

api_name="reset",

|

| 196 |

)

|

| 197 |

|

| 198 |

-

demo.title = "Chat with AI Books"

|

| 199 |

demo.queue(concurrency_count=CONCURRENT_COUNT).launch(share=share_gradio_app)

|

|

|

|

| 8 |

from anyio.from_thread import start_blocking_portal

|

| 9 |

|

| 10 |

from app_modules.init import app_init

|

| 11 |

+

from app_modules.llm_chat_chain import ChatChain

|

| 12 |

from app_modules.utils import print_llm_response, remove_extra_spaces

|

| 13 |

|

| 14 |

llm_loader, qa_chain = app_init()

|

| 15 |

|

|

|

|

| 16 |

show_param_settings = os.environ.get("SHOW_PARAM_SETTINGS") == "true"

|

| 17 |

share_gradio_app = os.environ.get("SHARE_GRADIO_APP") == "true"

|

|

|

|

| 18 |

using_openai = os.environ.get("LLM_MODEL_TYPE") == "openai"

|

| 19 |

+

chat_with_llama_2 = (

|

| 20 |

+

not using_openai and os.environ.get("USE_LLAMA_2_PROMPT_TEMPLATE") == "true"

|

| 21 |

+

)

|

| 22 |

+

chat_history_enabled = (

|

| 23 |

+

not chat_with_llama_2 and os.environ.get("CHAT_HISTORY_ENABLED") == "true"

|

| 24 |

+

)

|

| 25 |

+

|

| 26 |

model = (

|

| 27 |

"OpenAI GPT-3.5"

|

| 28 |

if using_openai

|

|

|

|

| 34 |

else f"https://huggingface.co/{model}"

|

| 35 |

)

|

| 36 |

|

| 37 |

+

if chat_with_llama_2:

|

| 38 |

+

qa_chain = ChatChain(llm_loader)

|

| 39 |

+

name = "Llama-2"

|

| 40 |

+

else:

|

| 41 |

+

name = "AI Books"

|

| 42 |

+

|

| 43 |

+

title = f"""<h1 align="left" style="min-width:200px; margin-top:0;"> Chat with {name} </h1>"""

|

| 44 |

|

| 45 |

description_top = f"""\

|

| 46 |

<div align="left">

|

|

|

|

| 54 |

</div>

|

| 55 |

"""

|

| 56 |

|

| 57 |

+

CONCURRENT_COUNT = 1

|

| 58 |

|

| 59 |

|

| 60 |

def qa(chatbot):

|

|

|

|

| 65 |

|

| 66 |

def task(question, chat_history):

|

| 67 |

start = timer()

|

| 68 |

+

inputs = {"question": question}

|

| 69 |

+

if not chat_with_llama_2:

|

| 70 |

+

inputs["chat_history"] = chat_history

|

| 71 |

+

ret = qa_chain.call_chain(inputs, None, q)

|

| 72 |

end = timer()

|

| 73 |

|

| 74 |

print(f"Completed in {end - start:.3f}s")

|

|

|

|

| 106 |

|

| 107 |

count -= 1

|

| 108 |

|

| 109 |

+

if not chat_with_llama_2:

|

| 110 |

+

chatbot[-1][1] += "\n\nSources:\n"

|

| 111 |

+

ret = result.get()

|

| 112 |

+

titles = []

|

| 113 |

+

for doc in ret["source_documents"]:

|

| 114 |

+

page = doc.metadata["page"] + 1

|

| 115 |

+

url = f"{doc.metadata['url']}#page={page}"

|

| 116 |

+

file_name = doc.metadata["source"].split("/")[-1]

|

| 117 |

+

title = f"{file_name} Page: {page}"

|

| 118 |

+

if title not in titles:

|

| 119 |

+

titles.append(title)

|

| 120 |

+

chatbot[-1][1] += f"1. [{title}]({url})\n"

|

| 121 |

|

| 122 |

yield chatbot

|

| 123 |

|

|

|

|

| 209 |

api_name="reset",

|

| 210 |

)

|

| 211 |

|

| 212 |

+

demo.title = "Chat with AI Books" if chat_with_llama_2 else "Chat with Llama-2"

|

| 213 |

demo.queue(concurrency_count=CONCURRENT_COUNT).launch(share=share_gradio_app)

|

app_modules/llm_loader.py

CHANGED

|

@@ -421,20 +421,40 @@ class LLMLoader:

|

|

| 421 |

else:

|

| 422 |

model = MODEL_NAME_OR_PATH

|

| 423 |

|

| 424 |

-

pipe =

|

| 425 |

-

|

| 426 |

-

|

| 427 |

-

|

| 428 |

-

|

| 429 |

-

|

| 430 |

-

|

| 431 |

-

|

| 432 |

-

|

| 433 |

-

|

| 434 |

-

|

| 435 |

-

|

| 436 |

-

|

| 437 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 438 |

)

|

| 439 |

|

| 440 |

self.llm = HuggingFacePipeline(pipeline=pipe, callbacks=callbacks)

|

|

|

|

| 421 |

else:

|

| 422 |

model = MODEL_NAME_OR_PATH

|

| 423 |

|

| 424 |

+

pipe = (

|

| 425 |

+

pipeline(

|

| 426 |

+

task,

|

| 427 |

+

model=model,

|

| 428 |

+

tokenizer=tokenizer,

|

| 429 |

+

streamer=self.streamer,

|

| 430 |

+

return_full_text=return_full_text, # langchain expects the full text

|

| 431 |

+

device=hf_pipeline_device_type,

|

| 432 |

+

torch_dtype=torch_dtype,

|

| 433 |

+

max_new_tokens=2048,

|

| 434 |

+

trust_remote_code=True,

|

| 435 |

+

temperature=temperature,

|

| 436 |

+

top_p=0.95,

|

| 437 |

+

top_k=0, # select from top 0 tokens (because zero, relies on top_p)

|

| 438 |

+

repetition_penalty=1.115,

|

| 439 |

+

)

|

| 440 |

+

if token is None

|

| 441 |

+

else pipeline(

|

| 442 |

+

task,

|

| 443 |

+

model=model,

|

| 444 |

+

tokenizer=tokenizer,

|

| 445 |

+

streamer=self.streamer,

|

| 446 |

+

return_full_text=return_full_text, # langchain expects the full text

|

| 447 |

+

device=hf_pipeline_device_type,

|

| 448 |

+

torch_dtype=torch_dtype,

|

| 449 |

+

max_new_tokens=2048,

|

| 450 |

+

trust_remote_code=True,

|

| 451 |

+

temperature=temperature,

|

| 452 |

+

top_p=0.95,

|

| 453 |

+

top_k=0, # select from top 0 tokens (because zero, relies on top_p)

|

| 454 |

+

repetition_penalty=1.115,

|

| 455 |

+

use_auth_token=token,

|

| 456 |

+

token=token,

|

| 457 |

+

)

|

| 458 |

)

|

| 459 |

|

| 460 |

self.llm = HuggingFacePipeline(pipeline=pipe, callbacks=callbacks)

|

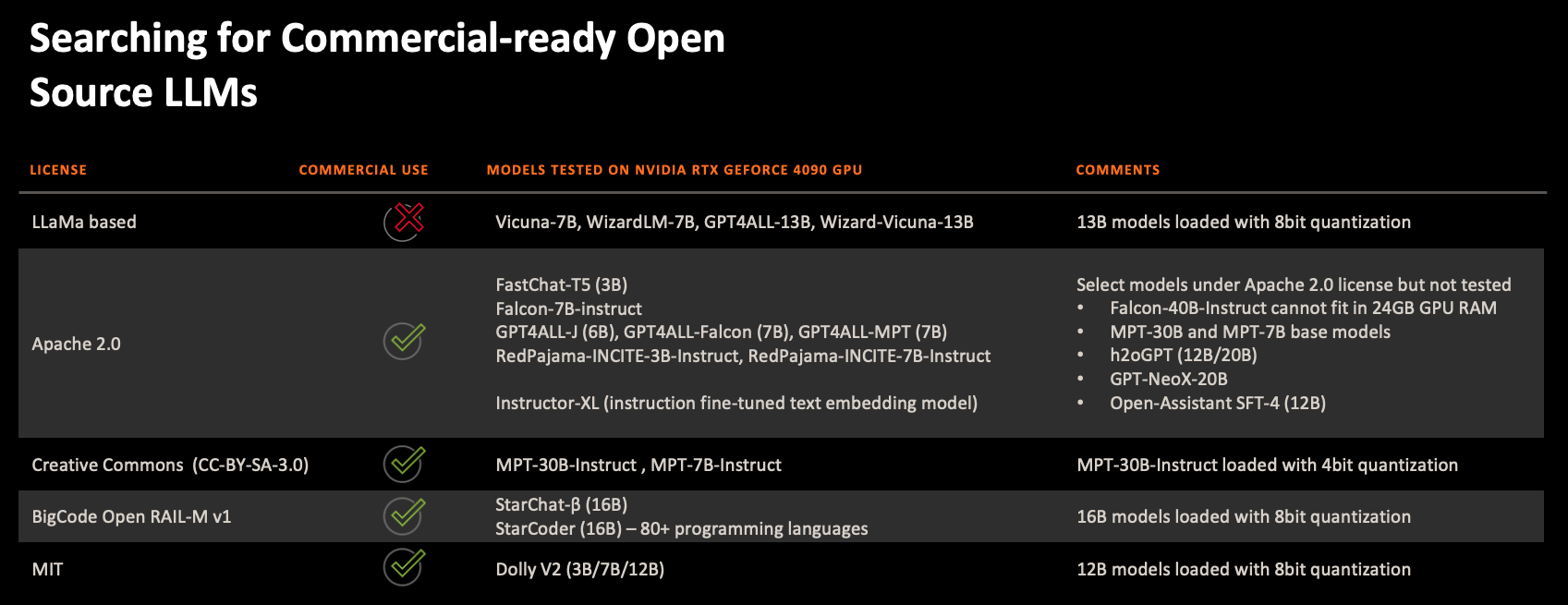

assets/Open Source LLMs.png

ADDED

|

assets/Workflow-Overview.png

ADDED

|

data/questions.txt

CHANGED

|

@@ -2,3 +2,4 @@ What's AI?

|

|

| 2 |

life in AI era

|

| 3 |

machine learning

|

| 4 |

generative model

|

|

|

|

|

|

| 2 |

life in AI era

|

| 3 |

machine learning

|

| 4 |

generative model

|

| 5 |

+

graph attention network

|

test.py

CHANGED

|

@@ -1,183 +1,103 @@

|

|

| 1 |

-

# project/test.py

|

| 2 |

-

|

| 3 |

import os

|

| 4 |

import sys

|

| 5 |

-

import

|

| 6 |

from timeit import default_timer as timer

|

| 7 |

|

| 8 |

from langchain.callbacks.base import BaseCallbackHandler

|

| 9 |

-

from langchain.schema import

|

| 10 |

|

| 11 |

from app_modules.init import app_init

|

| 12 |

-

from app_modules.

|

| 13 |

-

from app_modules.llm_loader import LLMLoader

|

| 14 |

-

from app_modules.utils import get_device_types, print_llm_response

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

class TestLLMLoader(unittest.TestCase):

|

| 18 |

-

question = os.environ.get("CHAT_QUESTION")

|

| 19 |

-

|

| 20 |

-

def run_test_case(self, llm_model_type, query):

|

| 21 |

-

n_threds = int(os.environ.get("NUMBER_OF_CPU_CORES") or "4")

|

| 22 |

-

|

| 23 |

-

hf_embeddings_device_type, hf_pipeline_device_type = get_device_types()

|

| 24 |

-

print(f"hf_embeddings_device_type: {hf_embeddings_device_type}")

|

| 25 |

-

print(f"hf_pipeline_device_type: {hf_pipeline_device_type}")

|

| 26 |

-

|

| 27 |

-

llm_loader = LLMLoader(llm_model_type)

|

| 28 |

-

start = timer()

|

| 29 |

-

llm_loader.init(

|

| 30 |

-

n_threds=n_threds, hf_pipeline_device_type=hf_pipeline_device_type

|

| 31 |

-

)

|

| 32 |

-

end = timer()

|

| 33 |

-

print(f"Model loaded in {end - start:.3f}s")

|

| 34 |

-

|

| 35 |

-

result = llm_loader.llm(

|

| 36 |

-

[HumanMessage(content=query)] if llm_model_type == "openai" else query

|

| 37 |

-

)

|

| 38 |

-

end2 = timer()

|

| 39 |

-

print(f"Inference completed in {end2 - end:.3f}s")

|

| 40 |

-

print(result)

|

| 41 |

-

|

| 42 |

-

def test_openai(self):

|

| 43 |

-

self.run_test_case("openai", self.question)

|

| 44 |

-

|

| 45 |

-

def test_llamacpp(self):

|

| 46 |

-

self.run_test_case("llamacpp", self.question)

|

| 47 |

-

|

| 48 |

-

def test_gpt4all_j(self):

|

| 49 |

-

self.run_test_case("gpt4all-j", self.question)

|

| 50 |

-

|

| 51 |

-

def test_huggingface(self):

|

| 52 |

-

self.run_test_case("huggingface", self.question)

|

| 53 |

-

|

| 54 |

-

def test_hftgi(self):

|

| 55 |

-

self.run_test_case("hftgi", self.question)

|

| 56 |

-

|

| 57 |

-

|

| 58 |

-

class TestChatChain(unittest.TestCase):

|

| 59 |

-

question = os.environ.get("CHAT_QUESTION")

|

| 60 |

-

|

| 61 |

-

def run_test_case(self, llm_model_type, query):

|

| 62 |

-

n_threds = int(os.environ.get("NUMBER_OF_CPU_CORES") or "4")

|

| 63 |

-

|

| 64 |

-

hf_embeddings_device_type, hf_pipeline_device_type = get_device_types()

|

| 65 |

-

print(f"hf_embeddings_device_type: {hf_embeddings_device_type}")

|

| 66 |

-

print(f"hf_pipeline_device_type: {hf_pipeline_device_type}")

|

| 67 |

|

| 68 |

-

|

| 69 |

-

start = timer()

|

| 70 |

-

llm_loader.init(

|

| 71 |

-

n_threds=n_threds, hf_pipeline_device_type=hf_pipeline_device_type

|

| 72 |

-

)

|

| 73 |

-

chat = ChatChain(llm_loader)

|

| 74 |

-

end = timer()

|

| 75 |

-

print(f"Model loaded in {end - start:.3f}s")

|

| 76 |

|

| 77 |

-

inputs = {"question": query}

|

| 78 |

-

result = chat.call_chain(inputs, None)

|

| 79 |

-

end2 = timer()

|

| 80 |

-

print(f"Inference completed in {end2 - end:.3f}s")

|

| 81 |

-

print(result)

|

| 82 |

|

| 83 |

-

|

| 84 |

-

|

| 85 |

-

|

| 86 |

-

print(f"Inference completed in {end3 - end2:.3f}s")

|

| 87 |

-

print(result)

|

| 88 |

|

| 89 |

-

def

|

| 90 |

-

self.

|

| 91 |

|

| 92 |

-

def

|

| 93 |

-

self.

|

| 94 |

|

| 95 |

-

def

|

| 96 |

-

|

|

|

|

|

|

|

|

|

|

| 97 |

|

| 98 |

-

def test_huggingface(self):

|

| 99 |

-

self.run_test_case("huggingface", self.question)

|

| 100 |

|

| 101 |

-

|

| 102 |

-

|

|

|

|

| 103 |

|

|

|

|

| 104 |

|

| 105 |

-

|

| 106 |

-

|

| 107 |

-

|

| 108 |

|

| 109 |

-

|

| 110 |

-

|

| 111 |

-

os.environ["LLM_MODEL_TYPE"] = llm_model_type

|

| 112 |

-

qa_chain = app_init()[1]

|

| 113 |

-

end = timer()

|

| 114 |

-

print(f"App initialized in {end - start:.3f}s")

|

| 115 |

|

| 116 |

-

|

| 117 |

-

|

| 118 |

-

|

| 119 |

-

|

| 120 |

-

print(f"Inference completed in {end2 - end:.3f}s")

|

| 121 |

-

print_llm_response(result)

|

| 122 |

|

| 123 |

-

|

|

|

|

| 124 |

|

| 125 |

-

|

| 126 |

-

result = qa_chain.call_chain(inputs, None)

|

| 127 |

-

end3 = timer()

|

| 128 |

-

print(f"Inference completed in {end3 - end2:.3f}s")

|

| 129 |

-

print_llm_response(result)

|

| 130 |

|

| 131 |

-

|

| 132 |

-

self.run_test_case("openai", self.question)

|

| 133 |

|

| 134 |

-

|

| 135 |

-

|

| 136 |

-

|

| 137 |

-

|

| 138 |

-

|

| 139 |

-

|

| 140 |

-

def test_huggingface(self):

|

| 141 |

-

self.run_test_case("huggingface", self.question)

|

| 142 |

|

| 143 |

-

|

| 144 |

-

|

|

|

|

| 145 |

|

|

|

|

|

|

|

| 146 |

|

| 147 |

-

def chat():

|

| 148 |

start = timer()

|

| 149 |

-

|

|

|

|

|

|

|

| 150 |

end = timer()

|

| 151 |

-

print(f"

|

| 152 |

|

| 153 |

-

|

| 154 |

-

chat_history = []

|

| 155 |

|

| 156 |

-

|

| 157 |

-

|

| 158 |

-

|

| 159 |

-

|

| 160 |

-

query = query.strip()

|

| 161 |

-

if query.lower() == "exit":

|

| 162 |

-

break

|

| 163 |

-

|

| 164 |

-

print("\nQuestion: " + query)

|

| 165 |

|

|

|

|

|

|

|

| 166 |

start = timer()

|

| 167 |

-

|

| 168 |

-

|

| 169 |

-

)

|

| 170 |

end = timer()

|

| 171 |

-

print(f"Completed in {end - start:.3f}s")

|

| 172 |

|

| 173 |

-

|

| 174 |

-

|

| 175 |

-

chat_end = timer()

|

| 176 |

-

print(f"Total time used: {chat_end - chat_start:.3f}s")

|

| 177 |

|

|

|

|

|

|

|

| 178 |

|

| 179 |

-

|

| 180 |

-

|

| 181 |

-

|

| 182 |

-

|

| 183 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

import os

|

| 2 |

import sys

|

| 3 |

+

from queue import Queue

|

| 4 |

from timeit import default_timer as timer

|

| 5 |

|

| 6 |

from langchain.callbacks.base import BaseCallbackHandler

|

| 7 |

+

from langchain.schema import LLMResult

|

| 8 |

|

| 9 |

from app_modules.init import app_init

|

| 10 |

+

from app_modules.utils import print_llm_response

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

+

llm_loader, qa_chain = app_init()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 13 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 14 |

|

| 15 |

+

class MyCustomHandler(BaseCallbackHandler):

|

| 16 |

+

def __init__(self):

|

| 17 |

+

self.reset()

|

|

|

|

|

|

|

| 18 |

|

| 19 |

+

def reset(self):

|

| 20 |

+

self.texts = []

|

| 21 |

|

| 22 |

+

def get_standalone_question(self) -> str:

|

| 23 |

+

return self.texts[0].strip() if len(self.texts) > 0 else None

|

| 24 |

|

| 25 |

+

def on_llm_end(self, response: LLMResult, **kwargs) -> None:

|

| 26 |

+

"""Run when chain ends running."""

|

| 27 |

+

print("\non_llm_end - response:")

|

| 28 |

+

print(response)

|

| 29 |

+

self.texts.append(response.generations[0][0].text)

|

| 30 |

|

|

|

|

|

|

|

| 31 |

|

| 32 |

+

chatting = len(sys.argv) > 1 and sys.argv[1] == "chat"

|

| 33 |

+

questions_file_path = os.environ.get("QUESTIONS_FILE_PATH")

|

| 34 |

+

chat_history_enabled = os.environ.get("CHAT_HISTORY_ENABLED") or "true"

|

| 35 |

|

| 36 |

+

custom_handler = MyCustomHandler()

|

| 37 |

|

| 38 |

+

# Chatbot loop

|

| 39 |

+

chat_history = []

|

| 40 |

+

print("Welcome to the ChatPDF! Type 'exit' to stop.")

|

| 41 |

|

| 42 |

+

# Open the file for reading

|

| 43 |

+

file = open(questions_file_path, "r")

|

|

|

|

|

|

|

|

|

|

|

|

|

| 44 |

|

| 45 |

+

# Read the contents of the file into a list of strings

|

| 46 |

+

queue = file.readlines()

|

| 47 |

+

for i in range(len(queue)):

|

| 48 |

+

queue[i] = queue[i].strip()

|

|

|

|

|

|

|

| 49 |

|

| 50 |

+

# Close the file

|

| 51 |

+

file.close()

|

| 52 |

|

| 53 |

+

queue.append("exit")

|

|

|

|

|

|

|

|

|

|

|

|

|

| 54 |

|

| 55 |

+

chat_start = timer()

|

|

|

|

| 56 |

|

| 57 |

+

while True:

|

| 58 |

+

if chatting:

|

| 59 |

+

query = input("Please enter your question: ")

|

| 60 |

+

else:

|

| 61 |

+

query = queue.pop(0)

|

|

|

|

|

|

|

|

|

|

| 62 |

|

| 63 |

+

query = query.strip()

|

| 64 |

+

if query.lower() == "exit":

|

| 65 |

+

break

|

| 66 |

|

| 67 |

+

print("\nQuestion: " + query)

|

| 68 |

+

custom_handler.reset()

|

| 69 |

|

|

|

|

| 70 |

start = timer()

|

| 71 |

+

result = qa_chain.call_chain(

|

| 72 |

+

{"question": query, "chat_history": chat_history}, custom_handler

|

| 73 |

+

)

|

| 74 |

end = timer()

|

| 75 |

+

print(f"Completed in {end - start:.3f}s")

|

| 76 |

|

| 77 |

+

print_llm_response(result)

|

|

|

|

| 78 |

|

| 79 |

+

if len(chat_history) == 0:

|

| 80 |

+

standalone_question = query

|

| 81 |

+

else:

|

| 82 |

+

standalone_question = custom_handler.get_standalone_question()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 83 |

|

| 84 |

+

if standalone_question is not None:

|

| 85 |

+

print(f"Load relevant documents for standalone question: {standalone_question}")

|

| 86 |

start = timer()

|

| 87 |

+

qa = qa_chain.get_chain()

|

| 88 |

+

docs = qa.retriever.get_relevant_documents(standalone_question)

|

|

|

|

| 89 |

end = timer()

|

|

|

|

| 90 |

|

| 91 |

+

# print(docs)

|

| 92 |

+

print(f"Completed in {end - start:.3f}s")

|

|

|

|

|

|

|

| 93 |

|

| 94 |

+

if chat_history_enabled == "true":

|

| 95 |

+

chat_history.append((query, result["answer"]))

|

| 96 |

|

| 97 |

+

chat_end = timer()

|

| 98 |

+

total_time = chat_end - chat_start

|

| 99 |

+

print(f"Total time used: {total_time:.3f} s")

|

| 100 |

+

print(f"Number of tokens generated: {llm_loader.streamer.total_tokens}")

|

| 101 |

+

print(

|

| 102 |

+

f"Average generation speed: {llm_loader.streamer.total_tokens / total_time:.3f} tokens/s"

|

| 103 |

+

)

|

unit_test.py

ADDED

|

@@ -0,0 +1,183 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# project/test.py

|

| 2 |

+

|

| 3 |

+

import os

|

| 4 |

+

import sys

|

| 5 |

+

import unittest

|

| 6 |

+

from timeit import default_timer as timer

|

| 7 |

+

|

| 8 |

+

from langchain.callbacks.base import BaseCallbackHandler

|

| 9 |

+

from langchain.schema import HumanMessage

|

| 10 |

+

|

| 11 |

+

from app_modules.init import app_init

|

| 12 |

+

from app_modules.llm_chat_chain import ChatChain

|

| 13 |

+

from app_modules.llm_loader import LLMLoader

|

| 14 |

+

from app_modules.utils import get_device_types, print_llm_response

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

class TestLLMLoader(unittest.TestCase):

|

| 18 |

+

question = os.environ.get("CHAT_QUESTION")

|

| 19 |

+

|

| 20 |

+

def run_test_case(self, llm_model_type, query):

|

| 21 |

+

n_threds = int(os.environ.get("NUMBER_OF_CPU_CORES") or "4")

|

| 22 |

+

|

| 23 |

+

hf_embeddings_device_type, hf_pipeline_device_type = get_device_types()

|

| 24 |

+

print(f"hf_embeddings_device_type: {hf_embeddings_device_type}")

|

| 25 |

+

print(f"hf_pipeline_device_type: {hf_pipeline_device_type}")

|

| 26 |

+

|

| 27 |

+

llm_loader = LLMLoader(llm_model_type)

|

| 28 |

+

start = timer()

|

| 29 |

+

llm_loader.init(

|

| 30 |

+

n_threds=n_threds, hf_pipeline_device_type=hf_pipeline_device_type

|

| 31 |

+

)

|

| 32 |

+

end = timer()

|

| 33 |

+

print(f"Model loaded in {end - start:.3f}s")

|

| 34 |

+

|

| 35 |

+

result = llm_loader.llm(

|

| 36 |

+

[HumanMessage(content=query)] if llm_model_type == "openai" else query

|

| 37 |

+

)

|

| 38 |

+

end2 = timer()

|

| 39 |

+

print(f"Inference completed in {end2 - end:.3f}s")

|

| 40 |

+

print(result)

|

| 41 |

+

|

| 42 |

+

def test_openai(self):

|

| 43 |

+

self.run_test_case("openai", self.question)

|

| 44 |

+

|

| 45 |

+

def test_llamacpp(self):

|

| 46 |

+

self.run_test_case("llamacpp", self.question)

|

| 47 |

+

|

| 48 |

+

def test_gpt4all_j(self):

|

| 49 |

+

self.run_test_case("gpt4all-j", self.question)

|

| 50 |

+

|

| 51 |

+

def test_huggingface(self):

|

| 52 |

+

self.run_test_case("huggingface", self.question)

|

| 53 |

+

|

| 54 |

+

def test_hftgi(self):

|

| 55 |

+

self.run_test_case("hftgi", self.question)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

class TestChatChain(unittest.TestCase):

|

| 59 |

+

question = os.environ.get("CHAT_QUESTION")

|

| 60 |

+

|

| 61 |

+

def run_test_case(self, llm_model_type, query):

|

| 62 |

+

n_threds = int(os.environ.get("NUMBER_OF_CPU_CORES") or "4")

|

| 63 |

+

|

| 64 |

+

hf_embeddings_device_type, hf_pipeline_device_type = get_device_types()

|

| 65 |

+

print(f"hf_embeddings_device_type: {hf_embeddings_device_type}")

|

| 66 |

+

print(f"hf_pipeline_device_type: {hf_pipeline_device_type}")

|

| 67 |

+

|

| 68 |

+

llm_loader = LLMLoader(llm_model_type)

|

| 69 |

+

start = timer()

|

| 70 |

+

llm_loader.init(

|

| 71 |

+

n_threds=n_threds, hf_pipeline_device_type=hf_pipeline_device_type

|

| 72 |

+

)

|

| 73 |

+

chat = ChatChain(llm_loader)

|

| 74 |

+

end = timer()

|

| 75 |

+

print(f"Model loaded in {end - start:.3f}s")

|

| 76 |

+

|

| 77 |

+

inputs = {"question": query}

|

| 78 |

+

result = chat.call_chain(inputs, None)

|

| 79 |

+

end2 = timer()

|

| 80 |

+

print(f"Inference completed in {end2 - end:.3f}s")

|

| 81 |

+

print(result)

|

| 82 |

+

|

| 83 |

+

inputs = {"question": "how many people?"}

|

| 84 |

+

result = chat.call_chain(inputs, None)

|

| 85 |

+

end3 = timer()

|

| 86 |

+

print(f"Inference completed in {end3 - end2:.3f}s")

|

| 87 |

+

print(result)

|

| 88 |

+

|

| 89 |

+

def test_openai(self):

|

| 90 |

+

self.run_test_case("openai", self.question)

|

| 91 |

+

|

| 92 |

+

def test_llamacpp(self):

|

| 93 |

+

self.run_test_case("llamacpp", self.question)

|

| 94 |

+

|

| 95 |

+

def test_gpt4all_j(self):

|

| 96 |

+

self.run_test_case("gpt4all-j", self.question)

|

| 97 |

+

|

| 98 |

+

def test_huggingface(self):

|

| 99 |

+

self.run_test_case("huggingface", self.question)

|

| 100 |

+

|

| 101 |

+

def test_hftgi(self):

|

| 102 |

+

self.run_test_case("hftgi", self.question)

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

class TestQAChain(unittest.TestCase):

|

| 106 |

+

qa_chain: any

|

| 107 |

+

question = os.environ.get("QA_QUESTION")

|

| 108 |

+

|

| 109 |

+

def run_test_case(self, llm_model_type, query):

|

| 110 |

+

start = timer()

|

| 111 |

+

os.environ["LLM_MODEL_TYPE"] = llm_model_type

|

| 112 |

+

qa_chain = app_init()[1]

|

| 113 |

+

end = timer()

|

| 114 |

+

print(f"App initialized in {end - start:.3f}s")

|

| 115 |

+

|

| 116 |

+

chat_history = []

|

| 117 |

+

inputs = {"question": query, "chat_history": chat_history}

|

| 118 |

+

result = qa_chain.call_chain(inputs, None)

|

| 119 |

+

end2 = timer()

|

| 120 |

+

print(f"Inference completed in {end2 - end:.3f}s")

|

| 121 |

+

print_llm_response(result)

|

| 122 |

+

|

| 123 |

+

chat_history.append((query, result["answer"]))

|

| 124 |

+

|

| 125 |

+

inputs = {"question": "tell me more", "chat_history": chat_history}

|

| 126 |

+

result = qa_chain.call_chain(inputs, None)

|

| 127 |

+

end3 = timer()

|

| 128 |

+

print(f"Inference completed in {end3 - end2:.3f}s")

|

| 129 |

+

print_llm_response(result)

|

| 130 |

+

|

| 131 |

+

def test_openai(self):

|

| 132 |

+

self.run_test_case("openai", self.question)

|

| 133 |

+

|

| 134 |

+

def test_llamacpp(self):

|

| 135 |

+

self.run_test_case("llamacpp", self.question)

|

| 136 |

+

|

| 137 |

+

def test_gpt4all_j(self):

|

| 138 |

+

self.run_test_case("gpt4all-j", self.question)

|

| 139 |

+

|

| 140 |

+

def test_huggingface(self):

|

| 141 |

+

self.run_test_case("huggingface", self.question)

|

| 142 |

+

|

| 143 |

+

def test_hftgi(self):

|

| 144 |

+

self.run_test_case("hftgi", self.question)

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

def chat():

|

| 148 |

+

start = timer()

|

| 149 |

+

llm_loader = app_init()[0]

|

| 150 |

+

end = timer()

|

| 151 |

+

print(f"Model loaded in {end - start:.3f}s")

|

| 152 |

+

|

| 153 |

+

chat_chain = ChatChain(llm_loader)

|

| 154 |

+

chat_history = []

|

| 155 |

+

|

| 156 |

+

chat_start = timer()

|

| 157 |

+

|

| 158 |

+

while True:

|

| 159 |

+

query = input("Please enter your question: ")

|

| 160 |

+

query = query.strip()

|

| 161 |

+

if query.lower() == "exit":

|

| 162 |

+

break

|

| 163 |

+

|

| 164 |

+

print("\nQuestion: " + query)

|

| 165 |

+

|

| 166 |

+

start = timer()

|

| 167 |

+

result = chat_chain.call_chain(

|

| 168 |

+

{"question": query, "chat_history": chat_history}, None

|

| 169 |

+

)

|

| 170 |

+

end = timer()

|

| 171 |

+

print(f"Completed in {end - start:.3f}s")

|

| 172 |

+

|

| 173 |

+

chat_history.append((query, result["text"]))

|

| 174 |

+

|

| 175 |

+

chat_end = timer()

|

| 176 |

+

print(f"Total time used: {chat_end - chat_start:.3f}s")

|

| 177 |

+

|

| 178 |

+

|

| 179 |

+

if __name__ == "__main__":

|

| 180 |

+

if len(sys.argv) > 1 and sys.argv[1] == "chat":

|

| 181 |

+

chat()

|

| 182 |

+

else:

|

| 183 |

+

unittest.main()

|