Spaces:

Running

on

CPU Upgrade

Running

on

CPU Upgrade

Commit

·

b2ecf7d

1

Parent(s):

cac61e8

🍱 Copy folders from huggingface.js

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +2 -0

- .npmrc +2 -0

- Dockerfile +13 -0

- README.md +2 -1

- package.json +30 -0

- packages/tasks/.prettierignore +4 -0

- packages/tasks/README.md +20 -0

- packages/tasks/package.json +46 -0

- packages/tasks/pnpm-lock.yaml +14 -0

- packages/tasks/src/Types.ts +64 -0

- packages/tasks/src/audio-classification/about.md +85 -0

- packages/tasks/src/audio-classification/data.ts +77 -0

- packages/tasks/src/audio-to-audio/about.md +56 -0

- packages/tasks/src/audio-to-audio/data.ts +66 -0

- packages/tasks/src/automatic-speech-recognition/about.md +87 -0

- packages/tasks/src/automatic-speech-recognition/data.ts +78 -0

- packages/tasks/src/const.ts +59 -0

- packages/tasks/src/conversational/about.md +50 -0

- packages/tasks/src/conversational/data.ts +66 -0

- packages/tasks/src/depth-estimation/about.md +36 -0

- packages/tasks/src/depth-estimation/data.ts +52 -0

- packages/tasks/src/document-question-answering/about.md +53 -0

- packages/tasks/src/document-question-answering/data.ts +70 -0

- packages/tasks/src/feature-extraction/about.md +34 -0

- packages/tasks/src/feature-extraction/data.ts +54 -0

- packages/tasks/src/fill-mask/about.md +51 -0

- packages/tasks/src/fill-mask/data.ts +79 -0

- packages/tasks/src/image-classification/about.md +50 -0

- packages/tasks/src/image-classification/data.ts +88 -0

- packages/tasks/src/image-segmentation/about.md +63 -0

- packages/tasks/src/image-segmentation/data.ts +99 -0

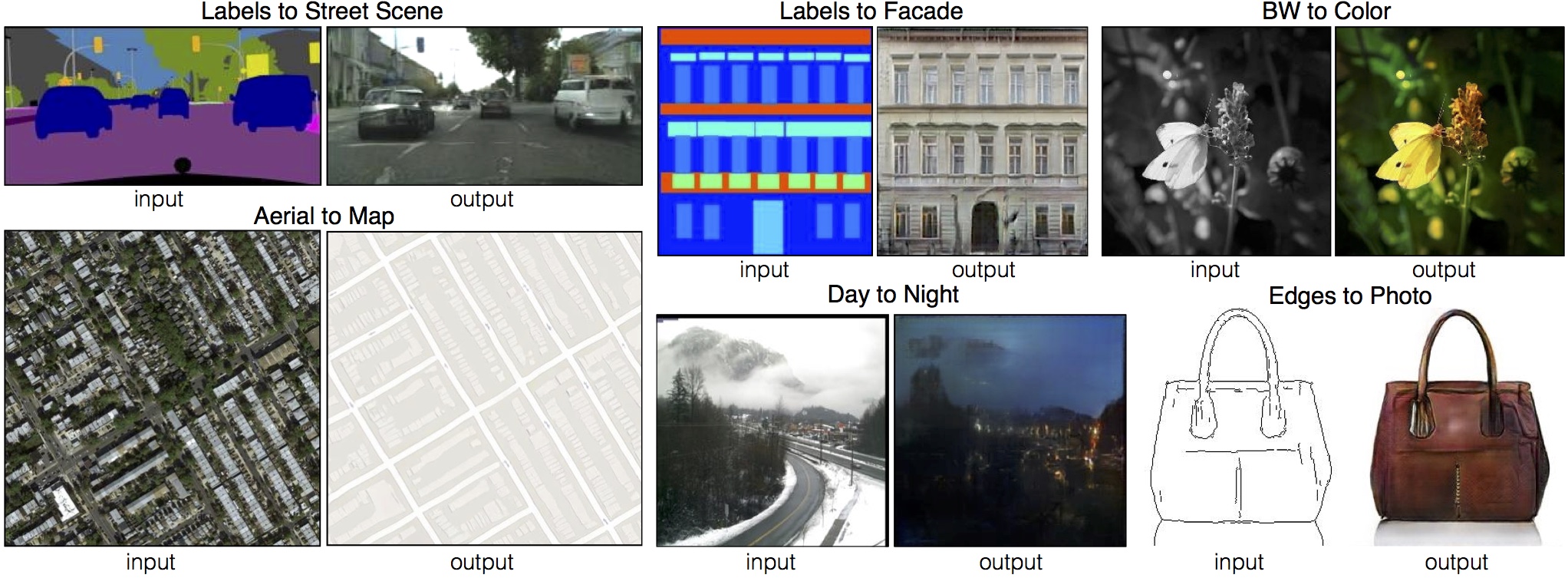

- packages/tasks/src/image-to-image/about.md +79 -0

- packages/tasks/src/image-to-image/data.ts +101 -0

- packages/tasks/src/image-to-text/about.md +65 -0

- packages/tasks/src/image-to-text/data.ts +86 -0

- packages/tasks/src/index.ts +13 -0

- packages/tasks/src/modelLibraries.ts +43 -0

- packages/tasks/src/object-detection/about.md +37 -0

- packages/tasks/src/object-detection/data.ts +76 -0

- packages/tasks/src/pipelines.ts +619 -0

- packages/tasks/src/placeholder/about.md +15 -0

- packages/tasks/src/placeholder/data.ts +18 -0

- packages/tasks/src/question-answering/about.md +56 -0

- packages/tasks/src/question-answering/data.ts +71 -0

- packages/tasks/src/reinforcement-learning/about.md +167 -0

- packages/tasks/src/reinforcement-learning/data.ts +75 -0

- packages/tasks/src/sentence-similarity/about.md +97 -0

- packages/tasks/src/sentence-similarity/data.ts +101 -0

- packages/tasks/src/summarization/about.md +58 -0

- packages/tasks/src/summarization/data.ts +75 -0

.gitignore

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

node_modules

|

| 2 |

+

dist

|

.npmrc

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

shared-workspace-lockfile = false

|

| 2 |

+

include-workspace-root = true

|

Dockerfile

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# syntax=docker/dockerfile:1

|

| 2 |

+

# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker

|

| 3 |

+

# you will also find guides on how best to write your Dockerfile

|

| 4 |

+

FROM node:20

|

| 5 |

+

|

| 6 |

+

WORKDIR /app

|

| 7 |

+

|

| 8 |

+

RUN corepack enable

|

| 9 |

+

|

| 10 |

+

COPY --link --chown=1000 . .

|

| 11 |

+

|

| 12 |

+

RUN pnpm install

|

| 13 |

+

RUN pnpm --filter widgets dev

|

README.md

CHANGED

|

@@ -5,6 +5,7 @@ colorFrom: pink

|

|

| 5 |

colorTo: red

|

| 6 |

sdk: docker

|

| 7 |

pinned: false

|

|

|

|

| 8 |

---

|

| 9 |

|

| 10 |

-

|

|

|

|

| 5 |

colorTo: red

|

| 6 |

sdk: docker

|

| 7 |

pinned: false

|

| 8 |

+

app_port: 5173

|

| 9 |

---

|

| 10 |

|

| 11 |

+

Demo app for [Inference Widgets](https://github.com/huggingface/huggingface.js/tree/main/packages/widgets).

|

package.json

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"license": "MIT",

|

| 3 |

+

"packageManager": "pnpm@8.10.5",

|

| 4 |

+

"dependencies": {

|

| 5 |

+

"@typescript-eslint/eslint-plugin": "^5.51.0",

|

| 6 |

+

"@typescript-eslint/parser": "^5.51.0",

|

| 7 |

+

"eslint": "^8.35.0",

|

| 8 |

+

"eslint-config-prettier": "^9.0.0",

|

| 9 |

+

"eslint-plugin-prettier": "^4.2.1",

|

| 10 |

+

"eslint-plugin-svelte": "^2.30.0",

|

| 11 |

+

"prettier": "^3.0.0",

|

| 12 |

+

"prettier-plugin-svelte": "^3.0.0",

|

| 13 |

+

"typescript": "^5.0.0",

|

| 14 |

+

"vite": "4.1.4"

|

| 15 |

+

},

|

| 16 |

+

"scripts": {

|

| 17 |

+

"lint": "eslint --quiet --fix --ext .cjs,.ts .eslintrc.cjs",

|

| 18 |

+

"lint:check": "eslint --ext .cjs,.ts .eslintrc.cjs",

|

| 19 |

+

"format": "prettier --write package.json .prettierrc .vscode .eslintrc.cjs e2e .github *.md",

|

| 20 |

+

"format:check": "prettier --check package.json .prettierrc .vscode .eslintrc.cjs .github *.md"

|

| 21 |

+

},

|

| 22 |

+

"devDependencies": {

|

| 23 |

+

"@vitest/browser": "^0.29.7",

|

| 24 |

+

"semver": "^7.5.0",

|

| 25 |

+

"ts-node": "^10.9.1",

|

| 26 |

+

"tsup": "^6.7.0",

|

| 27 |

+

"vitest": "^0.29.4",

|

| 28 |

+

"webdriverio": "^8.6.7"

|

| 29 |

+

}

|

| 30 |

+

}

|

packages/tasks/.prettierignore

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

pnpm-lock.yaml

|

| 2 |

+

# In order to avoid code samples to have tabs, they don't display well on npm

|

| 3 |

+

README.md

|

| 4 |

+

dist

|

packages/tasks/README.md

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Tasks

|

| 2 |

+

|

| 3 |

+

This package contains data used for https://huggingface.co/tasks.

|

| 4 |

+

|

| 5 |

+

## Philosophy behind Tasks

|

| 6 |

+

|

| 7 |

+

The Task pages are made to lower the barrier of entry to understand a task that can be solved with machine learning and use or train a model to accomplish it. It's a collaborative documentation effort made to help out software developers, social scientists, or anyone with no background in machine learning that is interested in understanding how machine learning models can be used to solve a problem.

|

| 8 |

+

|

| 9 |

+

The task pages avoid jargon to let everyone understand the documentation, and if specific terminology is needed, it is explained on the most basic level possible. This is important to understand before contributing to Tasks: at the end of every task page, the user is expected to be able to find and pull a model from the Hub and use it on their data and see if it works for their use case to come up with a proof of concept.

|

| 10 |

+

|

| 11 |

+

## How to Contribute

|

| 12 |

+

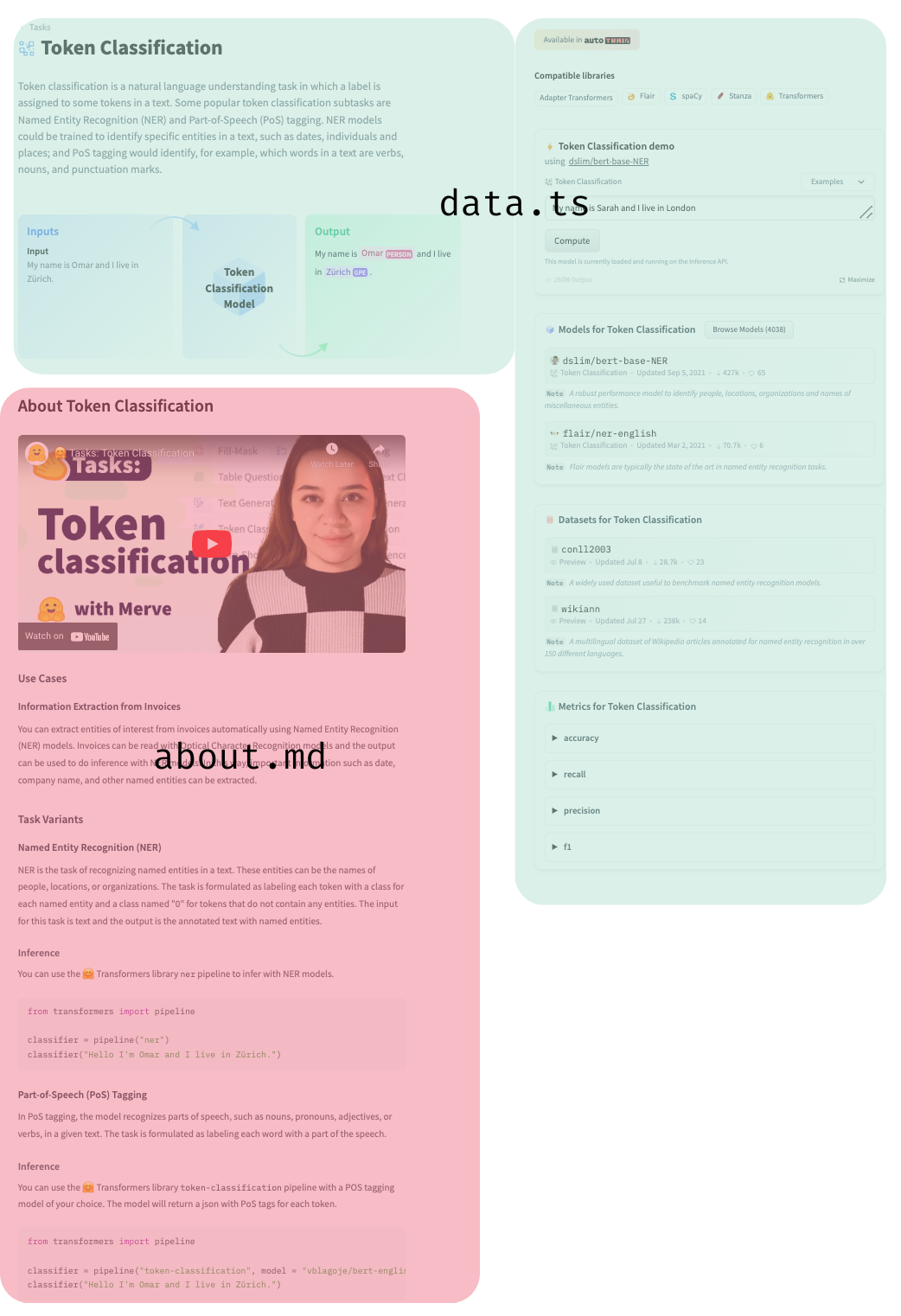

You can open a pull request to contribute a new documentation about a new task. Under `src` we have a folder for every task that contains two files, `about.md` and `data.ts`. `about.md` contains the markdown part of the page, use cases, resources and minimal code block to infer a model that belongs to the task. `data.ts` contains redirections to canonical models and datasets, metrics, the schema of the task and the information the inference widget needs.

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

We have a [`dataset`](https://huggingface.co/datasets/huggingfacejs/tasks) that contains data used in the inference widget. The last file is `const.ts`, which has the task to library mapping (e.g. spacy to token-classification) where you can add a library. They will look in the top right corner like below.

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

This might seem overwhelming, but you don't necessarily need to add all of these in one pull request or on your own, you can simply contribute one section. Feel free to ask for help whenever you need.

|

packages/tasks/package.json

ADDED

|

@@ -0,0 +1,46 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"name": "@huggingface/tasks",

|

| 3 |

+

"packageManager": "pnpm@8.10.5",

|

| 4 |

+

"version": "0.0.5",

|

| 5 |

+

"description": "List of ML tasks for huggingface.co/tasks",

|

| 6 |

+

"repository": "https://github.com/huggingface/huggingface.js.git",

|

| 7 |

+

"publishConfig": {

|

| 8 |

+

"access": "public"

|

| 9 |

+

},

|

| 10 |

+

"main": "./dist/index.js",

|

| 11 |

+

"module": "./dist/index.mjs",

|

| 12 |

+

"types": "./dist/index.d.ts",

|

| 13 |

+

"exports": {

|

| 14 |

+

".": {

|

| 15 |

+

"types": "./dist/index.d.ts",

|

| 16 |

+

"require": "./dist/index.js",

|

| 17 |

+

"import": "./dist/index.mjs"

|

| 18 |

+

}

|

| 19 |

+

},

|

| 20 |

+

"source": "src/index.ts",

|

| 21 |

+

"scripts": {

|

| 22 |

+

"lint": "eslint --quiet --fix --ext .cjs,.ts .",

|

| 23 |

+

"lint:check": "eslint --ext .cjs,.ts .",

|

| 24 |

+

"format": "prettier --write .",

|

| 25 |

+

"format:check": "prettier --check .",

|

| 26 |

+

"prepublishOnly": "pnpm run build",

|

| 27 |

+

"build": "tsup src/index.ts --format cjs,esm --clean --dts",

|

| 28 |

+

"prepare": "pnpm run build",

|

| 29 |

+

"check": "tsc"

|

| 30 |

+

},

|

| 31 |

+

"files": [

|

| 32 |

+

"dist",

|

| 33 |

+

"src",

|

| 34 |

+

"tsconfig.json"

|

| 35 |

+

],

|

| 36 |

+

"keywords": [

|

| 37 |

+

"huggingface",

|

| 38 |

+

"hub",

|

| 39 |

+

"languages"

|

| 40 |

+

],

|

| 41 |

+

"author": "Hugging Face",

|

| 42 |

+

"license": "MIT",

|

| 43 |

+

"devDependencies": {

|

| 44 |

+

"typescript": "^5.0.4"

|

| 45 |

+

}

|

| 46 |

+

}

|

packages/tasks/pnpm-lock.yaml

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

lockfileVersion: '6.0'

|

| 2 |

+

|

| 3 |

+

devDependencies:

|

| 4 |

+

typescript:

|

| 5 |

+

specifier: ^5.0.4

|

| 6 |

+

version: 5.0.4

|

| 7 |

+

|

| 8 |

+

packages:

|

| 9 |

+

|

| 10 |

+

/typescript@5.0.4:

|

| 11 |

+

resolution: {integrity: sha512-cW9T5W9xY37cc+jfEnaUvX91foxtHkza3Nw3wkoF4sSlKn0MONdkdEndig/qPBWXNkmplh3NzayQzCiHM4/hqw==}

|

| 12 |

+

engines: {node: '>=12.20'}

|

| 13 |

+

hasBin: true

|

| 14 |

+

dev: true

|

packages/tasks/src/Types.ts

ADDED

|

@@ -0,0 +1,64 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import type { ModelLibraryKey } from "./modelLibraries";

|

| 2 |

+

import type { PipelineType } from "./pipelines";

|

| 3 |

+

|

| 4 |

+

export interface ExampleRepo {

|

| 5 |

+

description: string;

|

| 6 |

+

id: string;

|

| 7 |

+

}

|

| 8 |

+

|

| 9 |

+

export type TaskDemoEntry =

|

| 10 |

+

| {

|

| 11 |

+

filename: string;

|

| 12 |

+

type: "audio";

|

| 13 |

+

}

|

| 14 |

+

| {

|

| 15 |

+

data: Array<{

|

| 16 |

+

label: string;

|

| 17 |

+

score: number;

|

| 18 |

+

}>;

|

| 19 |

+

type: "chart";

|

| 20 |

+

}

|

| 21 |

+

| {

|

| 22 |

+

filename: string;

|

| 23 |

+

type: "img";

|

| 24 |

+

}

|

| 25 |

+

| {

|

| 26 |

+

table: string[][];

|

| 27 |

+

type: "tabular";

|

| 28 |

+

}

|

| 29 |

+

| {

|

| 30 |

+

content: string;

|

| 31 |

+

label: string;

|

| 32 |

+

type: "text";

|

| 33 |

+

}

|

| 34 |

+

| {

|

| 35 |

+

text: string;

|

| 36 |

+

tokens: Array<{

|

| 37 |

+

end: number;

|

| 38 |

+

start: number;

|

| 39 |

+

type: string;

|

| 40 |

+

}>;

|

| 41 |

+

type: "text-with-tokens";

|

| 42 |

+

};

|

| 43 |

+

|

| 44 |

+

export interface TaskDemo {

|

| 45 |

+

inputs: TaskDemoEntry[];

|

| 46 |

+

outputs: TaskDemoEntry[];

|

| 47 |

+

}

|

| 48 |

+

|

| 49 |

+

export interface TaskData {

|

| 50 |

+

datasets: ExampleRepo[];

|

| 51 |

+

demo: TaskDemo;

|

| 52 |

+

id: PipelineType;

|

| 53 |

+

isPlaceholder?: boolean;

|

| 54 |

+

label: string;

|

| 55 |

+

libraries: ModelLibraryKey[];

|

| 56 |

+

metrics: ExampleRepo[];

|

| 57 |

+

models: ExampleRepo[];

|

| 58 |

+

spaces: ExampleRepo[];

|

| 59 |

+

summary: string;

|

| 60 |

+

widgetModels: string[];

|

| 61 |

+

youtubeId?: string;

|

| 62 |

+

}

|

| 63 |

+

|

| 64 |

+

export type TaskDataCustom = Omit<TaskData, "id" | "label" | "libraries">;

|

packages/tasks/src/audio-classification/about.md

ADDED

|

@@ -0,0 +1,85 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Use Cases

|

| 2 |

+

|

| 3 |

+

### Command Recognition

|

| 4 |

+

|

| 5 |

+

Command recognition or keyword spotting classifies utterances into a predefined set of commands. This is often done on-device for fast response time.

|

| 6 |

+

|

| 7 |

+

As an example, using the Google Speech Commands dataset, given an input, a model can classify which of the following commands the user is typing:

|

| 8 |

+

|

| 9 |

+

```

|

| 10 |

+

'yes', 'no', 'up', 'down', 'left', 'right', 'on', 'off', 'stop', 'go', 'unknown', 'silence'

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

Speechbrain models can easily perform this task with just a couple of lines of code!

|

| 14 |

+

|

| 15 |

+

```python

|

| 16 |

+

from speechbrain.pretrained import EncoderClassifier

|

| 17 |

+

model = EncoderClassifier.from_hparams(

|

| 18 |

+

"speechbrain/google_speech_command_xvector"

|

| 19 |

+

)

|

| 20 |

+

model.classify_file("file.wav")

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

### Language Identification

|

| 24 |

+

|

| 25 |

+

Datasets such as VoxLingua107 allow anyone to train language identification models for up to 107 languages! This can be extremely useful as a preprocessing step for other systems. Here's an example [model](https://huggingface.co/TalTechNLP/voxlingua107-epaca-tdnn)trained on VoxLingua107.

|

| 26 |

+

|

| 27 |

+

### Emotion recognition

|

| 28 |

+

|

| 29 |

+

Emotion recognition is self explanatory. In addition to trying the widgets, you can use the Inference API to perform audio classification. Here is a simple example that uses a [HuBERT](https://huggingface.co/superb/hubert-large-superb-er) model fine-tuned for this task.

|

| 30 |

+

|

| 31 |

+

```python

|

| 32 |

+

import json

|

| 33 |

+

import requests

|

| 34 |

+

|

| 35 |

+

headers = {"Authorization": f"Bearer {API_TOKEN}"}

|

| 36 |

+

API_URL = "https://api-inference.huggingface.co/models/superb/hubert-large-superb-er"

|

| 37 |

+

|

| 38 |

+

def query(filename):

|

| 39 |

+

with open(filename, "rb") as f:

|

| 40 |

+

data = f.read()

|

| 41 |

+

response = requests.request("POST", API_URL, headers=headers, data=data)

|

| 42 |

+

return json.loads(response.content.decode("utf-8"))

|

| 43 |

+

|

| 44 |

+

data = query("sample1.flac")

|

| 45 |

+

# [{'label': 'neu', 'score': 0.60},

|

| 46 |

+

# {'label': 'hap', 'score': 0.20},

|

| 47 |

+

# {'label': 'ang', 'score': 0.13},

|

| 48 |

+

# {'label': 'sad', 'score': 0.07}]

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with audio classification models on Hugging Face Hub.

|

| 52 |

+

|

| 53 |

+

```javascript

|

| 54 |

+

import { HfInference } from "@huggingface/inference";

|

| 55 |

+

|

| 56 |

+

const inference = new HfInference(HF_ACCESS_TOKEN);

|

| 57 |

+

await inference.audioClassification({

|

| 58 |

+

data: await (await fetch("sample.flac")).blob(),

|

| 59 |

+

model: "facebook/mms-lid-126",

|

| 60 |

+

});

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

### Speaker Identification

|

| 64 |

+

|

| 65 |

+

Speaker Identification is classifying the audio of the person speaking. Speakers are usually predefined. You can try out this task with [this model](https://huggingface.co/superb/wav2vec2-base-superb-sid). A useful dataset for this task is VoxCeleb1.

|

| 66 |

+

|

| 67 |

+

## Solving audio classification for your own data

|

| 68 |

+

|

| 69 |

+

We have some great news! You can do fine-tuning (transfer learning) to train a well-performing model without requiring as much data. Pretrained models such as Wav2Vec2 and HuBERT exist. [Facebook's Wav2Vec2 XLS-R model](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/) is a large multilingual model trained on 128 languages and with 436K hours of speech.

|

| 70 |

+

|

| 71 |

+

## Useful Resources

|

| 72 |

+

|

| 73 |

+

Would you like to learn more about the topic? Awesome! Here you can find some curated resources that you may find helpful!

|

| 74 |

+

|

| 75 |

+

### Notebooks

|

| 76 |

+

|

| 77 |

+

- [PyTorch](https://colab.research.google.com/github/huggingface/notebooks/blob/master/examples/audio_classification.ipynb)

|

| 78 |

+

|

| 79 |

+

### Scripts for training

|

| 80 |

+

|

| 81 |

+

- [PyTorch](https://github.com/huggingface/transformers/tree/main/examples/pytorch/audio-classification)

|

| 82 |

+

|

| 83 |

+

### Documentation

|

| 84 |

+

|

| 85 |

+

- [Audio classification task guide](https://huggingface.co/docs/transformers/tasks/audio_classification)

|

packages/tasks/src/audio-classification/data.ts

ADDED

|

@@ -0,0 +1,77 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import type { TaskDataCustom } from "../Types";

|

| 2 |

+

|

| 3 |

+

const taskData: TaskDataCustom = {

|

| 4 |

+

datasets: [

|

| 5 |

+

{

|

| 6 |

+

description: "A benchmark of 10 different audio tasks.",

|

| 7 |

+

id: "superb",

|

| 8 |

+

},

|

| 9 |

+

],

|

| 10 |

+

demo: {

|

| 11 |

+

inputs: [

|

| 12 |

+

{

|

| 13 |

+

filename: "audio.wav",

|

| 14 |

+

type: "audio",

|

| 15 |

+

},

|

| 16 |

+

],

|

| 17 |

+

outputs: [

|

| 18 |

+

{

|

| 19 |

+

data: [

|

| 20 |

+

{

|

| 21 |

+

label: "Up",

|

| 22 |

+

score: 0.2,

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

label: "Down",

|

| 26 |

+

score: 0.8,

|

| 27 |

+

},

|

| 28 |

+

],

|

| 29 |

+

type: "chart",

|

| 30 |

+

},

|

| 31 |

+

],

|

| 32 |

+

},

|

| 33 |

+

metrics: [

|

| 34 |

+

{

|

| 35 |

+

description: "",

|

| 36 |

+

id: "accuracy",

|

| 37 |

+

},

|

| 38 |

+

{

|

| 39 |

+

description: "",

|

| 40 |

+

id: "recall",

|

| 41 |

+

},

|

| 42 |

+

{

|

| 43 |

+

description: "",

|

| 44 |

+

id: "precision",

|

| 45 |

+

},

|

| 46 |

+

{

|

| 47 |

+

description: "",

|

| 48 |

+

id: "f1",

|

| 49 |

+

},

|

| 50 |

+

],

|

| 51 |

+

models: [

|

| 52 |

+

{

|

| 53 |

+

description: "An easy-to-use model for Command Recognition.",

|

| 54 |

+

id: "speechbrain/google_speech_command_xvector",

|

| 55 |

+

},

|

| 56 |

+

{

|

| 57 |

+

description: "An Emotion Recognition model.",

|

| 58 |

+

id: "ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition",

|

| 59 |

+

},

|

| 60 |

+

{

|

| 61 |

+

description: "A language identification model.",

|

| 62 |

+

id: "facebook/mms-lid-126",

|

| 63 |

+

},

|

| 64 |

+

],

|

| 65 |

+

spaces: [

|

| 66 |

+

{

|

| 67 |

+

description: "An application that can predict the language spoken in a given audio.",

|

| 68 |

+

id: "akhaliq/Speechbrain-audio-classification",

|

| 69 |

+

},

|

| 70 |

+

],

|

| 71 |

+

summary:

|

| 72 |

+

"Audio classification is the task of assigning a label or class to a given audio. It can be used for recognizing which command a user is giving or the emotion of a statement, as well as identifying a speaker.",

|

| 73 |

+

widgetModels: ["facebook/mms-lid-126"],

|

| 74 |

+

youtubeId: "KWwzcmG98Ds",

|

| 75 |

+

};

|

| 76 |

+

|

| 77 |

+

export default taskData;

|

packages/tasks/src/audio-to-audio/about.md

ADDED

|

@@ -0,0 +1,56 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Use Cases

|

| 2 |

+

|

| 3 |

+

### Speech Enhancement (Noise removal)

|

| 4 |

+

|

| 5 |

+

Speech Enhancement is a bit self explanatory. It improves (or enhances) the quality of an audio by removing noise. There are multiple libraries to solve this task, such as Speechbrain, Asteroid and ESPNet. Here is a simple example using Speechbrain

|

| 6 |

+

|

| 7 |

+

```python

|

| 8 |

+

from speechbrain.pretrained import SpectralMaskEnhancement

|

| 9 |

+

model = SpectralMaskEnhancement.from_hparams(

|

| 10 |

+

"speechbrain/mtl-mimic-voicebank"

|

| 11 |

+

)

|

| 12 |

+

model.enhance_file("file.wav")

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

Alternatively, you can use the [Inference API](https://huggingface.co/inference-api) to solve this task

|

| 16 |

+

|

| 17 |

+

```python

|

| 18 |

+

import json

|

| 19 |

+

import requests

|

| 20 |

+

|

| 21 |

+

headers = {"Authorization": f"Bearer {API_TOKEN}"}

|

| 22 |

+

API_URL = "https://api-inference.huggingface.co/models/speechbrain/mtl-mimic-voicebank"

|

| 23 |

+

|

| 24 |

+

def query(filename):

|

| 25 |

+

with open(filename, "rb") as f:

|

| 26 |

+

data = f.read()

|

| 27 |

+

response = requests.request("POST", API_URL, headers=headers, data=data)

|

| 28 |

+

return json.loads(response.content.decode("utf-8"))

|

| 29 |

+

|

| 30 |

+

data = query("sample1.flac")

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with audio-to-audio models on Hugging Face Hub.

|

| 34 |

+

|

| 35 |

+

```javascript

|

| 36 |

+

import { HfInference } from "@huggingface/inference";

|

| 37 |

+

|

| 38 |

+

const inference = new HfInference(HF_ACCESS_TOKEN);

|

| 39 |

+

await inference.audioToAudio({

|

| 40 |

+

data: await (await fetch("sample.flac")).blob(),

|

| 41 |

+

model: "speechbrain/sepformer-wham",

|

| 42 |

+

});

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

### Audio Source Separation

|

| 46 |

+

|

| 47 |

+

Audio Source Separation allows you to isolate different sounds from individual sources. For example, if you have an audio file with multiple people speaking, you can get an audio file for each of them. You can then use an Automatic Speech Recognition system to extract the text from each of these sources as an initial step for your system!

|

| 48 |

+

|

| 49 |

+

Audio-to-Audio can also be used to remove noise from audio files: you get one audio for the person speaking and another audio for the noise. This can also be useful when you have multi-person audio with some noise: yyou can get one audio for each person and then one audio for the noise.

|

| 50 |

+

|

| 51 |

+

## Training a model for your own data

|

| 52 |

+

|

| 53 |

+

If you want to learn how to train models for the Audio-to-Audio task, we recommend the following tutorials:

|

| 54 |

+

|

| 55 |

+

- [Speech Enhancement](https://speechbrain.github.io/tutorial_enhancement.html)

|

| 56 |

+

- [Source Separation](https://speechbrain.github.io/tutorial_separation.html)

|

packages/tasks/src/audio-to-audio/data.ts

ADDED

|

@@ -0,0 +1,66 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import type { TaskDataCustom } from "../Types";

|

| 2 |

+

|

| 3 |

+

const taskData: TaskDataCustom = {

|

| 4 |

+

datasets: [

|

| 5 |

+

{

|

| 6 |

+

description: "512-element X-vector embeddings of speakers from CMU ARCTIC dataset.",

|

| 7 |

+

id: "Matthijs/cmu-arctic-xvectors",

|

| 8 |

+

},

|

| 9 |

+

],

|

| 10 |

+

demo: {

|

| 11 |

+

inputs: [

|

| 12 |

+

{

|

| 13 |

+

filename: "input.wav",

|

| 14 |

+

type: "audio",

|

| 15 |

+

},

|

| 16 |

+

],

|

| 17 |

+

outputs: [

|

| 18 |

+

{

|

| 19 |

+

filename: "label-0.wav",

|

| 20 |

+

type: "audio",

|

| 21 |

+

},

|

| 22 |

+

{

|

| 23 |

+

filename: "label-1.wav",

|

| 24 |

+

type: "audio",

|

| 25 |

+

},

|

| 26 |

+

],

|

| 27 |

+

},

|

| 28 |

+

metrics: [

|

| 29 |

+

{

|

| 30 |

+

description:

|

| 31 |

+

"The Signal-to-Noise ratio is the relationship between the target signal level and the background noise level. It is calculated as the logarithm of the target signal divided by the background noise, in decibels.",

|

| 32 |

+

id: "snri",

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

description:

|

| 36 |

+

"The Signal-to-Distortion ratio is the relationship between the target signal and the sum of noise, interference, and artifact errors",

|

| 37 |

+

id: "sdri",

|

| 38 |

+

},

|

| 39 |

+

],

|

| 40 |

+

models: [

|

| 41 |

+

{

|

| 42 |

+

description: "A solid model of audio source separation.",

|

| 43 |

+

id: "speechbrain/sepformer-wham",

|

| 44 |

+

},

|

| 45 |

+

{

|

| 46 |

+

description: "A speech enhancement model.",

|

| 47 |

+

id: "speechbrain/metricgan-plus-voicebank",

|

| 48 |

+

},

|

| 49 |

+

],

|

| 50 |

+

spaces: [

|

| 51 |

+

{

|

| 52 |

+

description: "An application for speech separation.",

|

| 53 |

+

id: "younver/speechbrain-speech-separation",

|

| 54 |

+

},

|

| 55 |

+

{

|

| 56 |

+

description: "An application for audio style transfer.",

|

| 57 |

+

id: "nakas/audio-diffusion_style_transfer",

|

| 58 |

+

},

|

| 59 |

+

],

|

| 60 |

+

summary:

|

| 61 |

+

"Audio-to-Audio is a family of tasks in which the input is an audio and the output is one or multiple generated audios. Some example tasks are speech enhancement and source separation.",

|

| 62 |

+

widgetModels: ["speechbrain/sepformer-wham"],

|

| 63 |

+

youtubeId: "iohj7nCCYoM",

|

| 64 |

+

};

|

| 65 |

+

|

| 66 |

+

export default taskData;

|

packages/tasks/src/automatic-speech-recognition/about.md

ADDED

|

@@ -0,0 +1,87 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Use Cases

|

| 2 |

+

|

| 3 |

+

### Virtual Speech Assistants

|

| 4 |

+

|

| 5 |

+

Many edge devices have an embedded virtual assistant to interact with the end users better. These assistances rely on ASR models to recognize different voice commands to perform various tasks. For instance, you can ask your phone for dialing a phone number, ask a general question, or schedule a meeting.

|

| 6 |

+

|

| 7 |

+

### Caption Generation

|

| 8 |

+

|

| 9 |

+

A caption generation model takes audio as input from sources to generate automatic captions through transcription, for live-streamed or recorded videos. This can help with content accessibility. For example, an audience watching a video that includes a non-native language, can rely on captions to interpret the content. It can also help with information retention at online-classes environments improving knowledge assimilation while reading and taking notes faster.

|

| 10 |

+

|

| 11 |

+

## Task Variants

|

| 12 |

+

|

| 13 |

+

### Multilingual ASR

|

| 14 |

+

|

| 15 |

+

Multilingual ASR models can convert audio inputs with multiple languages into transcripts. Some multilingual ASR models include [language identification](https://huggingface.co/tasks/audio-classification) blocks to improve the performance.

|

| 16 |

+

|

| 17 |

+

The use of Multilingual ASR has become popular, the idea of maintaining just a single model for all language can simplify the production pipeline. Take a look at [Whisper](https://huggingface.co/openai/whisper-large-v2) to get an idea on how 100+ languages can be processed by a single model.

|

| 18 |

+

|

| 19 |

+

## Inference

|

| 20 |

+

|

| 21 |

+

The Hub contains over [~9,000 ASR models](https://huggingface.co/models?pipeline_tag=automatic-speech-recognition&sort=downloads) that you can use right away by trying out the widgets directly in the browser or calling the models as a service using the Inference API. Here is a simple code snippet to do exactly this:

|

| 22 |

+

|

| 23 |

+

```python

|

| 24 |

+

import json

|

| 25 |

+

import requests

|

| 26 |

+

|

| 27 |

+

headers = {"Authorization": f"Bearer {API_TOKEN}"}

|

| 28 |

+

API_URL = "https://api-inference.huggingface.co/models/openai/whisper-large-v2"

|

| 29 |

+

|

| 30 |

+

def query(filename):

|

| 31 |

+

with open(filename, "rb") as f:

|

| 32 |

+

data = f.read()

|

| 33 |

+

response = requests.request("POST", API_URL, headers=headers, data=data)

|

| 34 |

+

return json.loads(response.content.decode("utf-8"))

|

| 35 |

+

|

| 36 |

+

data = query("sample1.flac")

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

You can also use libraries such as [transformers](https://huggingface.co/models?library=transformers&pipeline_tag=automatic-speech-recognition&sort=downloads), [speechbrain](https://huggingface.co/models?library=speechbrain&pipeline_tag=automatic-speech-recognition&sort=downloads), [NeMo](https://huggingface.co/models?pipeline_tag=automatic-speech-recognition&library=nemo&sort=downloads) and [espnet](https://huggingface.co/models?library=espnet&pipeline_tag=automatic-speech-recognition&sort=downloads) if you want one-click managed Inference without any hassle.

|

| 40 |

+

|

| 41 |

+

```python

|

| 42 |

+

from transformers import pipeline

|

| 43 |

+

|

| 44 |

+

with open("sample.flac", "rb") as f:

|

| 45 |

+

data = f.read()

|

| 46 |

+

|

| 47 |

+

pipe = pipeline("automatic-speech-recognition", "openai/whisper-large-v2")

|

| 48 |

+

pipe("sample.flac")

|

| 49 |

+

# {'text': "GOING ALONG SLUSHY COUNTRY ROADS AND SPEAKING TO DAMP AUDIENCES IN DRAUGHTY SCHOOL ROOMS DAY AFTER DAY FOR A FORTNIGHT HE'LL HAVE TO PUT IN AN APPEARANCE AT SOME PLACE OF WORSHIP ON SUNDAY MORNING AND HE CAN COME TO US IMMEDIATELY AFTERWARDS"}

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to transcribe text with javascript using models on Hugging Face Hub.

|

| 53 |

+

|

| 54 |

+

```javascript

|

| 55 |

+

import { HfInference } from "@huggingface/inference";

|

| 56 |

+

|

| 57 |

+

const inference = new HfInference(HF_ACCESS_TOKEN);

|

| 58 |

+

await inference.automaticSpeechRecognition({

|

| 59 |

+

data: await (await fetch("sample.flac")).blob(),

|

| 60 |

+

model: "openai/whisper-large-v2",

|

| 61 |

+

});

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

## Solving ASR for your own data

|

| 65 |

+

|

| 66 |

+

We have some great news! You can fine-tune (transfer learning) a foundational speech model on a specific language without tonnes of data. Pretrained models such as Whisper, Wav2Vec2-MMS and HuBERT exist. [OpenAI's Whisper model](https://huggingface.co/openai/whisper-large-v2) is a large multilingual model trained on 100+ languages and with 680K hours of speech.

|

| 67 |

+

|

| 68 |

+

The following detailed [blog post](https://huggingface.co/blog/fine-tune-whisper) shows how to fine-tune a pre-trained Whisper checkpoint on labeled data for ASR. With the right data and strategy you can fine-tune a high-performant model on a free Google Colab instance too. We suggest to read the blog post for more info!

|

| 69 |

+

|

| 70 |

+

## Hugging Face Whisper Event

|

| 71 |

+

|

| 72 |

+

On December 2022, over 450 participants collaborated, fine-tuned and shared 600+ ASR Whisper models in 100+ different languages. You can compare these models on the event's speech recognition [leaderboard](https://huggingface.co/spaces/whisper-event/leaderboard?dataset=mozilla-foundation%2Fcommon_voice_11_0&config=ar&split=test).

|

| 73 |

+

|

| 74 |

+

These events help democratize ASR for all languages, including low-resource languages. In addition to the trained models, the [event](https://github.com/huggingface/community-events/tree/main/whisper-fine-tuning-event) helps to build practical collaborative knowledge.

|

| 75 |

+

|

| 76 |

+

## Useful Resources

|

| 77 |

+

|

| 78 |

+

- [Fine-tuning MetaAI's MMS Adapter Models for Multi-Lingual ASR](https://huggingface.co/blog/mms_adapters)

|

| 79 |

+

- [Making automatic speech recognition work on large files with Wav2Vec2 in 🤗 Transformers](https://huggingface.co/blog/asr-chunking)

|

| 80 |

+

- [Boosting Wav2Vec2 with n-grams in 🤗 Transformers](https://huggingface.co/blog/wav2vec2-with-ngram)

|

| 81 |

+

- [ML for Audio Study Group - Intro to Audio and ASR Deep Dive](https://www.youtube.com/watch?v=D-MH6YjuIlE)

|

| 82 |

+

- [Massively Multilingual ASR: 50 Languages, 1 Model, 1 Billion Parameters](https://arxiv.org/pdf/2007.03001.pdf)

|

| 83 |

+

- An ASR toolkit made by [NVIDIA: NeMo](https://github.com/NVIDIA/NeMo) with code and pretrained models useful for new ASR models. Watch the [introductory video](https://www.youtube.com/embed/wBgpMf_KQVw) for an overview.

|

| 84 |

+

- [An introduction to SpeechT5, a multi-purpose speech recognition and synthesis model](https://huggingface.co/blog/speecht5)

|

| 85 |

+

- [A guide on Fine-tuning Whisper For Multilingual ASR with 🤗Transformers](https://huggingface.co/blog/fine-tune-whisper)

|

| 86 |

+

- [Automatic speech recognition task guide](https://huggingface.co/docs/transformers/tasks/asr)

|

| 87 |

+

- [Speech Synthesis, Recognition, and More With SpeechT5](https://huggingface.co/blog/speecht5)

|

packages/tasks/src/automatic-speech-recognition/data.ts

ADDED

|

@@ -0,0 +1,78 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import type { TaskDataCustom } from "../Types";

|

| 2 |

+

|

| 3 |

+

const taskData: TaskDataCustom = {

|

| 4 |

+

datasets: [

|

| 5 |

+

{

|

| 6 |

+

description: "18,000 hours of multilingual audio-text dataset in 108 languages.",

|

| 7 |

+

id: "mozilla-foundation/common_voice_13_0",

|

| 8 |

+

},

|

| 9 |

+

{

|

| 10 |

+

description: "An English dataset with 1,000 hours of data.",

|

| 11 |

+

id: "librispeech_asr",

|

| 12 |

+

},

|

| 13 |

+

{

|

| 14 |

+

description: "High quality, multi-speaker audio data and their transcriptions in various languages.",

|

| 15 |

+

id: "openslr",

|

| 16 |

+

},

|

| 17 |

+

],

|

| 18 |

+

demo: {

|

| 19 |

+

inputs: [

|

| 20 |

+

{

|

| 21 |

+

filename: "input.flac",

|

| 22 |

+

type: "audio",

|

| 23 |

+

},

|

| 24 |

+

],

|

| 25 |

+

outputs: [

|

| 26 |

+

{

|

| 27 |

+

/// GOING ALONG SLUSHY COUNTRY ROADS AND SPEAKING TO DAMP AUDIENCES I

|

| 28 |

+

label: "Transcript",

|

| 29 |

+

content: "Going along slushy country roads and speaking to damp audiences in...",

|

| 30 |

+

type: "text",

|

| 31 |

+

},

|

| 32 |

+

],

|

| 33 |

+

},

|

| 34 |

+

metrics: [

|

| 35 |

+

{

|

| 36 |

+

description: "",

|

| 37 |

+

id: "wer",

|

| 38 |

+

},

|

| 39 |

+

{

|

| 40 |

+

description: "",

|

| 41 |

+

id: "cer",

|

| 42 |

+

},

|

| 43 |

+

],

|

| 44 |

+

models: [

|

| 45 |

+

{

|

| 46 |

+

description: "A powerful ASR model by OpenAI.",

|

| 47 |

+

id: "openai/whisper-large-v2",

|

| 48 |

+

},

|

| 49 |

+

{

|

| 50 |

+

description: "A good generic ASR model by MetaAI.",

|

| 51 |

+

id: "facebook/wav2vec2-base-960h",

|

| 52 |

+

},

|

| 53 |

+

{

|

| 54 |

+

description: "An end-to-end model that performs ASR and Speech Translation by MetaAI.",

|

| 55 |

+

id: "facebook/s2t-small-mustc-en-fr-st",

|

| 56 |

+

},

|

| 57 |

+

],

|

| 58 |

+

spaces: [

|

| 59 |

+

{

|

| 60 |

+

description: "A powerful general-purpose speech recognition application.",

|

| 61 |

+

id: "openai/whisper",

|

| 62 |

+

},

|

| 63 |

+

{

|

| 64 |

+

description: "Fastest speech recognition application.",

|

| 65 |

+

id: "sanchit-gandhi/whisper-jax",

|

| 66 |

+

},

|

| 67 |

+

{

|

| 68 |

+

description: "An application that transcribes speeches in YouTube videos.",

|

| 69 |

+

id: "jeffistyping/Youtube-Whisperer",

|

| 70 |

+

},

|

| 71 |

+

],

|

| 72 |

+

summary:

|

| 73 |

+

"Automatic Speech Recognition (ASR), also known as Speech to Text (STT), is the task of transcribing a given audio to text. It has many applications, such as voice user interfaces.",

|

| 74 |

+

widgetModels: ["openai/whisper-large-v2"],

|

| 75 |

+

youtubeId: "TksaY_FDgnk",

|

| 76 |

+

};

|

| 77 |

+

|

| 78 |

+

export default taskData;

|

packages/tasks/src/const.ts

ADDED

|

@@ -0,0 +1,59 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import type { ModelLibraryKey } from "./modelLibraries";

|

| 2 |

+

import type { PipelineType } from "./pipelines";

|

| 3 |

+

|

| 4 |

+

/**

|

| 5 |

+

* Model libraries compatible with each ML task

|

| 6 |

+

*/

|

| 7 |

+

export const TASKS_MODEL_LIBRARIES: Record<PipelineType, ModelLibraryKey[]> = {

|

| 8 |

+

"audio-classification": ["speechbrain", "transformers"],

|

| 9 |

+

"audio-to-audio": ["asteroid", "speechbrain"],

|

| 10 |

+

"automatic-speech-recognition": ["espnet", "nemo", "speechbrain", "transformers", "transformers.js"],

|

| 11 |

+

conversational: ["transformers"],

|

| 12 |

+

"depth-estimation": ["transformers"],

|

| 13 |

+

"document-question-answering": ["transformers"],

|

| 14 |

+

"feature-extraction": ["sentence-transformers", "transformers", "transformers.js"],

|

| 15 |

+

"fill-mask": ["transformers", "transformers.js"],

|

| 16 |

+

"graph-ml": ["transformers"],

|

| 17 |

+

"image-classification": ["keras", "timm", "transformers", "transformers.js"],

|

| 18 |

+

"image-segmentation": ["transformers", "transformers.js"],

|

| 19 |

+

"image-to-image": [],

|

| 20 |

+

"image-to-text": ["transformers.js"],

|

| 21 |

+

"video-classification": [],

|

| 22 |

+

"multiple-choice": ["transformers"],

|

| 23 |

+

"object-detection": ["transformers", "transformers.js"],

|

| 24 |

+

other: [],

|

| 25 |

+

"question-answering": ["adapter-transformers", "allennlp", "transformers", "transformers.js"],

|

| 26 |

+

robotics: [],

|

| 27 |

+

"reinforcement-learning": ["transformers", "stable-baselines3", "ml-agents", "sample-factory"],

|

| 28 |

+

"sentence-similarity": ["sentence-transformers", "spacy", "transformers.js"],

|

| 29 |

+

summarization: ["transformers", "transformers.js"],

|

| 30 |

+

"table-question-answering": ["transformers"],

|

| 31 |

+

"table-to-text": ["transformers"],

|

| 32 |

+

"tabular-classification": ["sklearn"],

|

| 33 |

+

"tabular-regression": ["sklearn"],

|

| 34 |

+

"tabular-to-text": ["transformers"],

|

| 35 |

+

"text-classification": ["adapter-transformers", "spacy", "transformers", "transformers.js"],

|

| 36 |

+

"text-generation": ["transformers", "transformers.js"],

|

| 37 |

+

"text-retrieval": [],

|

| 38 |

+

"text-to-image": [],

|

| 39 |

+

"text-to-speech": ["espnet", "tensorflowtts", "transformers"],

|

| 40 |

+

"text-to-audio": ["transformers"],

|

| 41 |

+

"text-to-video": [],

|

| 42 |

+

"text2text-generation": ["transformers", "transformers.js"],

|

| 43 |

+

"time-series-forecasting": [],

|

| 44 |

+

"token-classification": [

|

| 45 |

+

"adapter-transformers",

|

| 46 |

+

"flair",

|

| 47 |

+

"spacy",

|

| 48 |

+

"span-marker",

|

| 49 |

+

"stanza",

|

| 50 |

+

"transformers",

|

| 51 |

+

"transformers.js",

|

| 52 |

+

],

|

| 53 |

+

translation: ["transformers", "transformers.js"],

|

| 54 |

+

"unconditional-image-generation": [],

|

| 55 |

+

"visual-question-answering": [],

|

| 56 |

+

"voice-activity-detection": [],

|

| 57 |

+

"zero-shot-classification": ["transformers", "transformers.js"],

|

| 58 |

+

"zero-shot-image-classification": ["transformers.js"],

|

| 59 |

+

};

|

packages/tasks/src/conversational/about.md

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Use Cases

|

| 2 |

+

|

| 3 |

+

### Chatbot 💬

|

| 4 |

+

|

| 5 |

+

Chatbots are used to have conversations instead of providing direct contact with a live human. They are used to provide customer service, sales, and can even be used to play games (see [ELIZA](https://en.wikipedia.org/wiki/ELIZA) from 1966 for one of the earliest examples).

|

| 6 |

+

|

| 7 |

+

## Voice Assistants 🎙️

|

| 8 |

+

|

| 9 |

+

Conversational response models are used as part of voice assistants to provide appropriate responses to voice based queries.

|

| 10 |

+

|

| 11 |

+

## Inference

|

| 12 |

+

|

| 13 |

+

You can infer with Conversational models with the 🤗 Transformers library using the `conversational` pipeline. This pipeline takes a conversation prompt or a list of conversations and generates responses for each prompt. The models that this pipeline can use are models that have been fine-tuned on a multi-turn conversational task (see https://huggingface.co/models?filter=conversational for a list of updated Conversational models).

|

| 14 |

+

|

| 15 |

+

```python

|

| 16 |

+

from transformers import pipeline, Conversation

|

| 17 |

+

converse = pipeline("conversational")

|

| 18 |

+

|

| 19 |

+

conversation_1 = Conversation("Going to the movies tonight - any suggestions?")

|

| 20 |

+

conversation_2 = Conversation("What's the last book you have read?")

|

| 21 |

+

converse([conversation_1, conversation_2])

|

| 22 |

+

|

| 23 |

+

## Output:

|

| 24 |

+

## Conversation 1

|

| 25 |

+

## user >> Going to the movies tonight - any suggestions?

|

| 26 |

+

## bot >> The Big Lebowski ,

|

| 27 |

+

## Conversation 2

|

| 28 |

+

## user >> What's the last book you have read?

|

| 29 |

+

## bot >> The Last Question

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with conversational models on Hugging Face Hub.

|

| 33 |

+

|

| 34 |

+

```javascript

|

| 35 |

+

import { HfInference } from "@huggingface/inference";

|

| 36 |

+

|

| 37 |

+

const inference = new HfInference(HF_ACCESS_TOKEN);

|

| 38 |

+

await inference.conversational({

|

| 39 |

+

model: "facebook/blenderbot-400M-distill",

|

| 40 |

+

inputs: "Going to the movies tonight - any suggestions?",

|

| 41 |

+

});

|

| 42 |

+

```

|

| 43 |

+

|

| 44 |

+

## Useful Resources

|

| 45 |

+

|

| 46 |

+

- Learn how ChatGPT and InstructGPT work in this blog: [Illustrating Reinforcement Learning from Human Feedback (RLHF)](https://huggingface.co/blog/rlhf)

|

| 47 |

+

- [Reinforcement Learning from Human Feedback From Zero to ChatGPT](https://www.youtube.com/watch?v=EAd4oQtEJOM)

|

| 48 |