
Ceyda Cinarel committed 21feb87 (1 parent: 47cfe13)

almost final

This view is limited to 50 files because the commit contains too many changes.

- app.py +65 -22

- assets/code_snippets/latent_walk.py +15 -0

- assets/code_snippets/latent_walk_music.py +55 -0

- assets/gen_mosaic_lowres.jpg +0 -0

- assets/outputs/0_fake.jpg +0 -0

- assets/outputs/100_fake.jpg +0 -0

- assets/outputs/101_fake.jpg +0 -0

- assets/outputs/102_fake.jpg +0 -0

- assets/outputs/103_fake.jpg +0 -0

- assets/outputs/104_fake.jpg +0 -0

- assets/outputs/105_fake.jpg +0 -0

- assets/outputs/106_fake.jpg +0 -0

- assets/outputs/107_fake.jpg +0 -0

- assets/outputs/108_fake.jpg +0 -0

- assets/outputs/109_fake.jpg +0 -0

- assets/outputs/10_fake.jpg +0 -0

- assets/outputs/110_fake.jpg +0 -0

- assets/outputs/111_fake.jpg +0 -0

- assets/outputs/112_fake.jpg +0 -0

- assets/outputs/113_fake.jpg +0 -0

- assets/outputs/114_fake.jpg +0 -0

- assets/outputs/115_fake.jpg +0 -0

- assets/outputs/116_fake.jpg +0 -0

- assets/outputs/117_fake.jpg +0 -0

- assets/outputs/118_fake.jpg +0 -0

- assets/outputs/119_fake.jpg +0 -0

- assets/outputs/11_fake.jpg +0 -0

- assets/outputs/120_fake.jpg +0 -0

- assets/outputs/121_fake.jpg +0 -0

- assets/outputs/122_fake.jpg +0 -0

- assets/outputs/123_fake.jpg +0 -0

- assets/outputs/124_fake.jpg +0 -0

- assets/outputs/125_fake.jpg +0 -0

- assets/outputs/126_fake.jpg +0 -0

- assets/outputs/127_fake.jpg +0 -0

- assets/outputs/128_fake.jpg +0 -0

- assets/outputs/129_fake.jpg +0 -0

- assets/outputs/12_fake.jpg +0 -0

- assets/outputs/130_fake.jpg +0 -0

- assets/outputs/131_fake.jpg +0 -0

- assets/outputs/132_fake.jpg +0 -0

- assets/outputs/133_fake.jpg +0 -0

- assets/outputs/134_fake.jpg +0 -0

- assets/outputs/135_fake.jpg +0 -0

- assets/outputs/136_fake.jpg +0 -0

- assets/outputs/137_fake.jpg +0 -0

- assets/outputs/138_fake.jpg +0 -0

- assets/outputs/139_fake.jpg +0 -0

- assets/outputs/13_fake.jpg +0 -0

- assets/outputs/140_fake.jpg +0 -0

app.py

CHANGED

|

@@ -1,20 +1,17 @@

|

|

| 1 |

-

from pydoc import ModuleScanner

|

| 2 |

-

import re

|

| 3 |

import streamlit as st # HF spaces at v1.2.0

|

| 4 |

from demo import load_model,generate,get_dataset,embed,make_meme

|

| 5 |

from PIL import Image

|

| 6 |

import numpy as np

|

|

|

|

| 7 |

# TODOs

|

| 8 |

# Add markdown short readme project intro

|

|

|

|

| 9 |

|

| 10 |

|

| 11 |

st.sidebar.subheader("This butterfly does not exist! ")

|

| 12 |

st.sidebar.image("assets/logo.png", width=200)

|

| 13 |

|

| 14 |

-

st.

|

| 15 |

-

|

| 16 |

-

st.write("Demo prep still in progress!! Come back later")

|

| 17 |

-

|

| 18 |

|

| 19 |

@st.experimental_singleton

|

| 20 |

def load_model_intocache(model_name,model_version):

|

|

@@ -27,16 +24,29 @@ def load_dataset():

|

|

| 27 |

dataset=get_dataset()

|

| 28 |

return dataset

|

| 29 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 30 |

model_name='ceyda/butterfly_cropped_uniq1K_512'

|

| 31 |

# model_version='0edac54b81958b82ce9fd5c1f688c33ac8e4f223'

|

| 32 |

model_version=None ##TBD

|

| 33 |

model=load_model_intocache(model_name,model_version)

|

| 34 |

dataset=load_dataset()

|

|

|

|

| 35 |

|

| 36 |

generate_menu="🦋 Make butterflies"

|

| 37 |

latent_walk_menu="🎧 Take a latent walk"

|

| 38 |

make_meme_menu="🐦 Make a meme"

|

| 39 |

mosaic_menu="👀 See the mosaic"

|

|

|

|

| 40 |

|

| 41 |

screen = st.sidebar.radio("Pick a destination",[generate_menu,latent_walk_menu,make_meme_menu,mosaic_menu])

|

| 42 |

|

|

@@ -52,8 +62,10 @@ if screen == generate_menu:

|

|

| 52 |

st.session_state['ims'] = None

|

| 53 |

run()

|

| 54 |

ims=st.session_state["ims"]

|

| 55 |

-

|

| 56 |

-

|

|

|

|

|

|

|

| 57 |

if ims is not None:

|

| 58 |

cols=st.columns(col_num)

|

| 59 |

picks=[False]*batch_size

|

|

@@ -79,34 +91,65 @@ if screen == generate_menu:

|

|

| 79 |

scores, retrieved_examples=dataset.get_nearest_examples('beit_embeddings', embed(ims[i]), k=5)

|

| 80 |

for r in retrieved_examples["image"]:

|

| 81 |

cols[i].image(r)

|

| 82 |

-

|

| 83 |

-

st.write(f"Latent dimension: {model.latent_dim},

|

| 84 |

|

| 85 |

elif screen == latent_walk_menu:

|

| 86 |

-

|

|

|

|

|

|

|

|

|

|

| 87 |

|

| 88 |

cols=st.columns(3)

|

| 89 |

|

|

|

|

| 90 |

cols[0].video("assets/latent_walks/regular_walk.mp4")

|

| 91 |

-

|

|

|

|

| 92 |

cols[1].video("assets/latent_walks/walk_happyrock.mp4")

|

| 93 |

-

cols[

|

| 94 |

cols[2].video("assets/latent_walks/walk_cute.mp4")

|

| 95 |

-

|

| 96 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 97 |

|

| 98 |

|

| 99 |

elif screen == make_meme_menu:

|

| 100 |

-

|

| 101 |

-

|

| 102 |

-

|

| 103 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 104 |

st.image(meme)

|

|

|

|

|

|

|

|

|

|

| 105 |

|

| 106 |

|

| 107 |

elif screen == mosaic_menu:

|

| 108 |

-

st.

|

| 109 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 110 |

|

| 111 |

|

| 112 |

# footer stuff

|

|

@@ -116,6 +159,6 @@ st.sidebar.caption(f"[Model](https://huggingface.co/ceyda/butterfly_cropped_uniq

|

|

| 116 |

# Credits

|

| 117 |

st.sidebar.caption(f"Made during the [huggan](https://github.com/huggingface/community-events) hackathon")

|

| 118 |

st.sidebar.caption(f"Contributors:")

|

| 119 |

-

st.sidebar.caption(f"[Ceyda Cinarel](https://

|

| 120 |

|

| 121 |

## Feel free to add more & change stuff ^

|

|

|

|

|

|

|

|

|

|

| 1 |

import streamlit as st # HF spaces at v1.2.0

|

| 2 |

from demo import load_model,generate,get_dataset,embed,make_meme

|

| 3 |

from PIL import Image

|

| 4 |

import numpy as np

|

| 5 |

+

import io

|

| 6 |

# TODOs

|

| 7 |

# Add markdown short readme project intro

|

| 8 |

+

# Add link to wandb logs

|

| 9 |

|

| 10 |

|

| 11 |

st.sidebar.subheader("This butterfly does not exist! ")

|

| 12 |

st.sidebar.image("assets/logo.png", width=200)

|

| 13 |

|

| 14 |

+

st.title("ButterflyGAN")

|

|

|

|

|

|

|

|

|

|

| 15 |

|

| 16 |

@st.experimental_singleton

|

| 17 |

def load_model_intocache(model_name,model_version):

|

|

|

|

| 24 |

dataset=get_dataset()

|

| 25 |

return dataset

|

| 26 |

|

| 27 |

+

@st.experimental_singleton

|

| 28 |

+

def load_variables():# Don't want to open read files over and over. not sure if it makes a diff

|

| 29 |

+

st.session_state['latent_walk_code']=open("assets/code_snippets/latent_walk.py").read()

|

| 30 |

+

st.session_state['latent_walk_code_music']=open("assets/code_snippets/latent_walk_music.py").read()

|

| 31 |

+

|

| 32 |

+

def img2download(image):

|

| 33 |

+

imgByteArr = io.BytesIO()

|

| 34 |

+

image.save(imgByteArr, format="JPEG")

|

| 35 |

+

imgByteArr = imgByteArr.getvalue()

|

| 36 |

+

return imgByteArr

|

| 37 |

+

|

| 38 |

model_name='ceyda/butterfly_cropped_uniq1K_512'

|

| 39 |

# model_version='0edac54b81958b82ce9fd5c1f688c33ac8e4f223'

|

| 40 |

model_version=None ##TBD

|

| 41 |

model=load_model_intocache(model_name,model_version)

|

| 42 |

dataset=load_dataset()

|

| 43 |

+

load_variables()

|

| 44 |

|

| 45 |

generate_menu="🦋 Make butterflies"

|

| 46 |

latent_walk_menu="🎧 Take a latent walk"

|

| 47 |

make_meme_menu="🐦 Make a meme"

|

| 48 |

mosaic_menu="👀 See the mosaic"

|

| 49 |

+

fun_menu="Release the butterflies"

|

| 50 |

|

| 51 |

screen = st.sidebar.radio("Pick a destination",[generate_menu,latent_walk_menu,make_meme_menu,mosaic_menu])

|

| 52 |

|

|

|

|

| 62 |

st.session_state['ims'] = None

|

| 63 |

run()

|

| 64 |

ims=st.session_state["ims"]

|

| 65 |

+

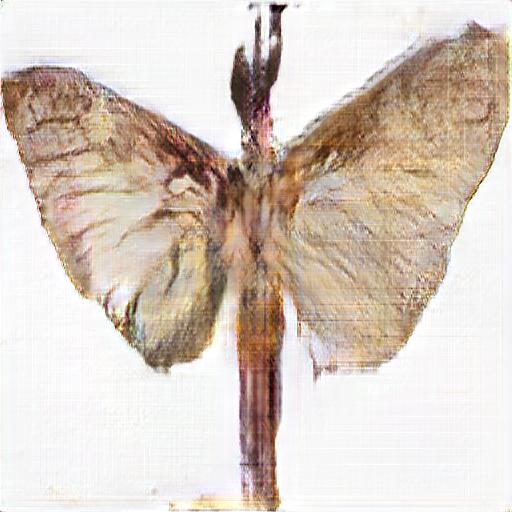

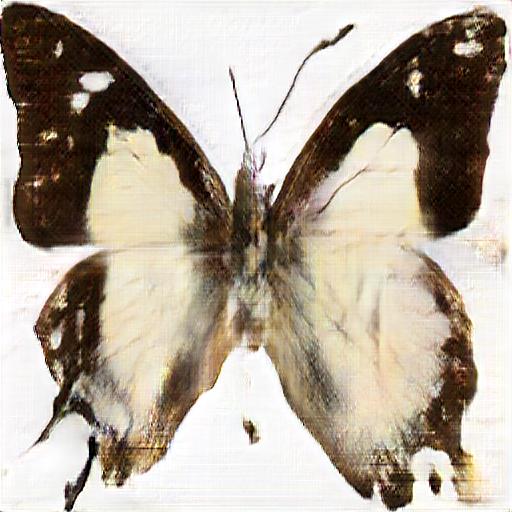

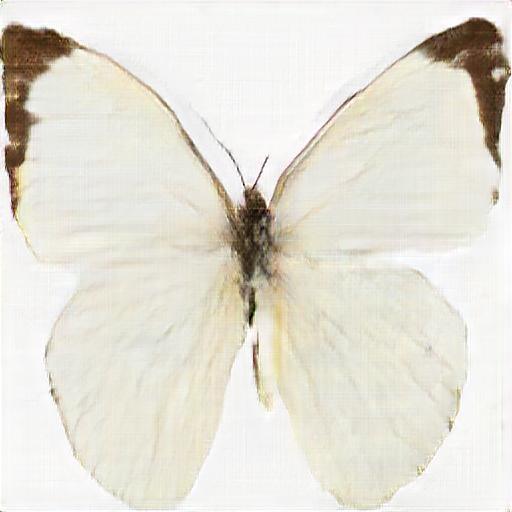

st.write("Light-GAN model trained on 1000 butterfly images taken from the Smithsonian Museum collection. \n \

|

| 66 |

+

Based on [paper:](https://openreview.net/forum?id=1Fqg133qRaI) *Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis*")

|

| 67 |

+

|

| 68 |

+

runb=st.button("Generate", on_click=run ,help="generated on the fly maybe slow")

|

| 69 |

if ims is not None:

|

| 70 |

cols=st.columns(col_num)

|

| 71 |

picks=[False]*batch_size

|

|

|

|

| 91 |

scores, retrieved_examples=dataset.get_nearest_examples('beit_embeddings', embed(ims[i]), k=5)

|

| 92 |

for r in retrieved_examples["image"]:

|

| 93 |

cols[i].image(r)

|

| 94 |

+

st.write("Nearest neighbors found in the training set according to L2 distance on 'microsoft/beit-base-patch16-224' embeddings")

|

| 95 |

+

st.write(f"Latent dimension: {model.latent_dim}, image size:{model.image_size}")

|

| 96 |

|

| 97 |

elif screen == latent_walk_menu:

|

| 98 |

+

|

| 99 |

+

latent_walk_code=open("assets/code_snippets/latent_walk.py").read()

|

| 100 |

+

latent_walk_music_code=open("assets/code_snippets/latent_walk_music.py").read()

|

| 101 |

+

st.write("Take a latent walk :musical_note: with cute butterflies")

|

| 102 |

|

| 103 |

cols=st.columns(3)

|

| 104 |

|

| 105 |

+

cols[0].caption("A regular walk (no music)")

|

| 106 |

cols[0].video("assets/latent_walks/regular_walk.mp4")

|

| 107 |

+

|

| 108 |

+

cols[1].caption("Walk with music :butterfly:")

|

| 109 |

cols[1].video("assets/latent_walks/walk_happyrock.mp4")

|

| 110 |

+

cols[2].caption("Walk with music :butterfly:")

|

| 111 |

cols[2].video("assets/latent_walks/walk_cute.mp4")

|

| 112 |

+

|

| 113 |

+

st.caption("Royalty Free Music from Bensound")

|

| 114 |

+

st.write("🎧Did those butterflies seem to be dancing to the music?!Here is the secret:")

|

| 115 |

+

with st.expander("See the Code Snippets"):

|

| 116 |

+

st.write("A regular latent walk:")

|

| 117 |

+

st.code(st.session_state['latent_walk_code'], language='python')

|

| 118 |

+

st.write(":musical_note: latent walk with music:")

|

| 119 |

+

st.code(st.session_state['latent_walk_code_music'], language='python')

|

| 120 |

|

| 121 |

|

| 122 |

elif screen == make_meme_menu:

|

| 123 |

+

if "pigeon" not in st.session_state:

|

| 124 |

+

st.session_state['pigeon'] = generate(model,1)[0]

|

| 125 |

+

|

| 126 |

+

def get_pigeon():

|

| 127 |

+

st.session_state['pigeon'] = generate(model,1)[0]

|

| 128 |

+

|

| 129 |

+

cols= st.columns(2)

|

| 130 |

+

cols[0].button("change pigeon",on_click=get_pigeon)

|

| 131 |

+

no_bg=cols[1].checkbox("Remove background?",True,help="Remove the background from pigeon")

|

| 132 |

+

show_text=cols[1].checkbox("Show text?",True)

|

| 133 |

+

|

| 134 |

+

meme_text=st.text_input("Enter text","Is this a pigeon?")

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

meme=make_meme(st.session_state['pigeon'],text=meme_text,show_text=show_text,remove_background=no_bg)

|

| 138 |

st.image(meme)

|

| 139 |

+

coly=st.columns(2)

|

| 140 |

+

coly[0].download_button("Download", img2download(meme),mime="image/jpeg")

|

| 141 |

+

coly[1].write("Made a cool one? [Share](https://twitter.com/intent/tweet?text=Check%20out%20the%20demo%20for%20Butterfly%20GAN%20%F0%9F%A6%8Bhttps%3A//huggingface.co/spaces/huggan/butterfly-gan%0Amade%20by%20%40ceyda_cinarel%20%26%20%40johnowhitaker%20) on Twitter")

|

| 142 |

|

| 143 |

|

| 144 |

elif screen == mosaic_menu:

|

| 145 |

+

cols=st.columns(2)

|

| 146 |

+

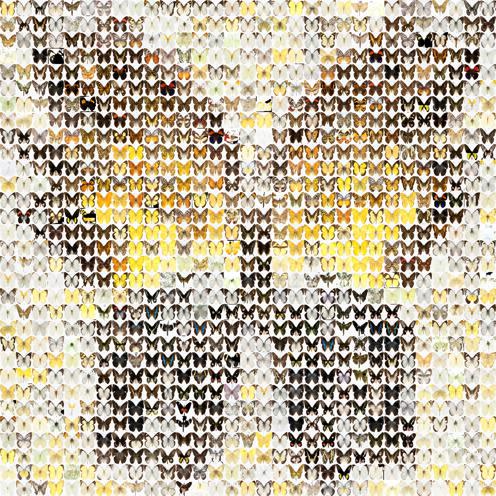

cols[0].markdown("These are all the butterflies in our [training set](https://huggingface.co/huggan/smithsonian_butterflies_subset)")

|

| 147 |

+

cols[0].image("assets/train_data_mosaic_lowres.jpg")

|

| 148 |

+

cols[0].write("🔎 view the high-res version [here](https://www.easyzoom.com/imageaccess/0c77e0e716f14ea7bc235447e5a4c397)")

|

| 149 |

+

|

| 150 |

+

cols[1].markdown("These are the butterflies our model generated.")

|

| 151 |

+

cols[1].image("assets/gen_mosaic_lowres.jpg")

|

| 152 |

+

cols[1].write("🔎 view the high-res version [here](https://www.easyzoom.com/imageaccess/cbb04e81106c4c54a9d9f9dbfb236eab)")

|

| 153 |

|

| 154 |

|

| 155 |

# footer stuff

|

|

|

|

| 159 |

# Credits

|

| 160 |

st.sidebar.caption(f"Made during the [huggan](https://github.com/huggingface/community-events) hackathon")

|

| 161 |

st.sidebar.caption(f"Contributors:")

|

| 162 |

+

st.sidebar.caption(f"[Ceyda Cinarel](https://github.com/cceyda) & [Jonathan Whitaker](https://datasciencecastnet.home.blog/)")

|

| 163 |

|

| 164 |

## Feel free to add more & change stuff ^
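The generate screen looks up the five training images closest to a generated butterfly with `dataset.get_nearest_examples('beit_embeddings', embed(ims[i]), k=5)`, and the new caption describes this as L2 distance on `microsoft/beit-base-patch16-224` embeddings. The brute-force search below is only a sketch of what such a lookup computes: the 3-D vectors are made-up stand-ins for BEiT embeddings, `nearest_examples` is a hypothetical helper, and the actual `datasets` index (typically FAISS-backed) does not necessarily score results the same way (FAISS's flat L2 index, for example, reports squared distances).

```python
import math
from typing import List, Sequence, Tuple


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_examples(query: Sequence[float],
                     embeddings: List[Sequence[float]],
                     k: int = 5) -> Tuple[List[float], List[int]]:
    """Brute-force k-NN: return (distances, row indices), closest first."""
    scored = sorted((l2_distance(query, e), idx) for idx, e in enumerate(embeddings))
    top = scored[:k]
    return [d for d, _ in top], [idx for _, idx in top]


# Hypothetical toy "embeddings" standing in for BEiT image embeddings.
train_embeddings = [
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [5.0, 5.0, 5.0],
]
scores, indices = nearest_examples([0.9, 0.1, 0.0], train_embeddings, k=2)
print(indices)  # [1, 0]
```

A linear scan like this costs one distance per training image per query, which is fine for a 1,000-image set but is the reason a dedicated index is normally used for larger collections.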

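The meme screen's share link is a hard-coded, percent-encoded `text` parameter for Twitter's tweet-intent URL. If the message changes, building the URL may be less error-prone than hand-encoding it. A minimal sketch using the standard library's `urllib.parse.quote`, assuming the same message text as the committed link; `build_share_url` is a hypothetical helper, not part of the app.

```python
from urllib.parse import quote

TWEET_INTENT = "https://twitter.com/intent/tweet?text="


def build_share_url(message: str) -> str:
    """Percent-encode `message` (as UTF-8) for the tweet-intent `text` parameter.

    quote() leaves '/' unescaped by default, which matches the committed link
    ("https%3A//huggingface.co/...").
    """
    return TWEET_INTENT + quote(message)


# "\U0001F98B" is the butterfly emoji used in the committed link.
message = ("Check out the demo for Butterfly GAN \U0001F98B"
           "https://huggingface.co/spaces/huggan/butterfly-gan\n"
           "made by @ceyda_cinarel & @johnowhitaker ")
url = build_share_url(message)
print(url)
```

With this message the function should reproduce the committed link exactly, so the hard-coded string and the readable message can be kept in sync.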

assets/code_snippets/latent_walk.py ADDED

```diff
@@ -0,0 +1,15 @@
+# Some parameters
+n_points = 6 #@param
+n_steps = 300 #@param
+latents = torch.randn(n_points, 256)
+
+# Loop through generating the frames
+frames = []
+for i in tqdm(range(n_steps)):
+    p1 = max(0, int(n_points*i/n_steps))
+    p2 = min(n_points, int(n_points*i/n_steps)+1)%n_points # so it wraps back to 0
+    frac = (i-(p1*(n_steps/n_points))) / (n_steps/n_points)
+    l = latents[p1]*(1-frac) + latents[p2]*frac
+    im = model.G(l.unsqueeze(0)).clamp_(0., 1.)
+    frame=(im[0].permute(1, 2, 0).detach().cpu().numpy()*255).astype(np.uint8)
+    frames.append(frame)
```
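The committed snippet is a notebook excerpt: it relies on `torch`, `tqdm`, `np` and a trained `model` being defined elsewhere, and `#@param` marks Google Colab form fields. Its core is the step schedule: for frame `i` it picks the segment start `p1`, the next point `p2` (wrapping to 0 after the last one) and a blend weight `frac`, then linearly interpolates between the two latents. Below is a minimal plain-Python sketch of just that schedule and interpolation, assuming toy 2-D latents in place of the 256-D `torch.randn` vectors and leaving out the `model.G(...)` call; `walk_weights`, `lerp` and `latent_walk` are hypothetical names.

```python
from typing import List, Tuple


def walk_weights(i: int, n_points: int, n_steps: int) -> Tuple[int, int, float]:
    """Return (p1, p2, frac) for step i, mirroring the snippet's arithmetic."""
    seg_len = n_steps / n_points               # steps spent on each segment
    p1 = max(0, int(n_points * i / n_steps))   # index of the segment's start latent
    p2 = min(n_points, p1 + 1) % n_points      # next latent; wraps to 0 at the end
    frac = (i - p1 * seg_len) / seg_len        # 0 at p1, approaching 1 near p2
    return p1, p2, frac


def lerp(a: List[float], b: List[float], t: float) -> List[float]:
    """Element-wise linear interpolation a*(1-t) + b*t."""
    return [x * (1 - t) + y * t for x, y in zip(a, b)]


def latent_walk(latents: List[List[float]], n_steps: int) -> List[List[float]]:
    """One interpolated latent per video frame."""
    n_points = len(latents)
    path = []
    for i in range(n_steps):
        p1, p2, frac = walk_weights(i, n_points, n_steps)
        path.append(lerp(latents[p1], latents[p2], frac))
    return path


# Hypothetical 2-D latents; the committed snippet uses torch.randn(n_points, 256).
toy_latents = [[0.0, 0.0], [4.0, 0.0], [4.0, 4.0]]
path = latent_walk(toy_latents, n_steps=6)
print(path)  # [[0.0, 0.0], [2.0, 0.0], [4.0, 0.0], [4.0, 2.0], [4.0, 4.0], [2.0, 2.0]]
```

Because `p2` wraps to 0, the final segment heads back toward the first latent, which is what should let the rendered video loop without a visible jump.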

assets/code_snippets/latent_walk_music.py ADDED

```diff
@@ -0,0 +1,55 @@
+#Code Author: Jonathan Whitaker 😎
+
+import librosa
+import soundfile as sf
+from scipy.signal import savgol_filter
+
+# The driving audio file
+audio_file = './sounds/bensound-cute.wav' #@param
+
+# How many points in the base latent walk loop
+n_points = 6 #@param
+
+# Smooths the animation effect, smaller=jerkier, must be odd
+filter_window_size=301 #@param
+
+# How much should we scale position based on music vs the base path?
+chr_scale = 0.5 #@param
+base_scale = 0.3 #@param
+
+# Load the file
+X, sample_rate = sf.read(audio_file, dtype='float32')
+
+X= X[:int(len(X)*0.5)]
+
+# Remove percussive elements
+harmonic = librosa.effects.harmonic(X[:,0])
+
+# Get chroma_stft (power in different notes)
+chroma = librosa.feature.chroma_stft(harmonic) # Just one channel
+
+# Smooth these out
+chroma = savgol_filter(chroma, filter_window_size, 3)
+
+# Calculate how many frames we want
+fps = 25
+duration = X.shape[0] / sample_rate
+print('Duration:', duration)
+n_steps = int(fps * duration)
+print('N frames:', n_steps, fps * duration)
+
+latents = torch.randn(n_points, 256)*base_scale
+chroma_latents = torch.randn(12, 256)*chr_scale
+
+frames=[]
+for i in tqdm(range(n_steps)):
+    p1 = max(0, int(n_points*i/n_steps))
+    p2 = min(n_points, int(n_points*i/n_steps)+1)%n_points # so it wraps back to 0
+    frac = (i-(p1*(n_steps/n_points))) / (n_steps/n_points)
+    l = latents[p1]*(1-frac) + latents[p2]*frac
+    for c in range(12): # HERE adding the music influence to the latent
+        scale_factor = chroma[c, int(i*chroma.shape[1]/n_steps)]
+        l += chroma_latents[c]*chr_scale*scale_factor
+    im = model.G(l.unsqueeze(0)).clamp_(0., 1.)
+    frame=(im[0].permute(1, 2, 0).detach().cpu().numpy()*255).astype(np.uint8)
+    frames.append(frame)
```
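The music version adds a pitch-driven term to each interpolated latent: for video frame `i` it reads column `int(i*chroma.shape[1]/n_steps)` of the smoothed 12-row chroma matrix and adds each of the 12 `chroma_latents` directions, weighted by how strong that pitch class is at that moment. Below is a plain-Python sketch of that offset, assuming tiny made-up chroma values, 2-D directions and only two pitch classes instead of 12 so the arithmetic can be checked by hand; `music_offset` is a hypothetical helper. Two observations about the committed code: `chroma_latents` is multiplied by `chr_scale` when it is created and again inside the loop, so the music term is effectively scaled by `chr_scale**2` (the sketch applies it once); and recent librosa releases (0.10 and later) make `chroma_stft`'s arguments keyword-only, so the call may need to be written as `librosa.feature.chroma_stft(y=harmonic, sr=sample_rate)`. As committed, no `sr` is passed, so librosa's default of 22050 Hz is assumed.

```python
from typing import List, Sequence


def music_offset(chroma: Sequence[Sequence[float]],
                 chroma_latents: Sequence[Sequence[float]],
                 i: int, n_steps: int, chr_scale: float) -> List[float]:
    """Sum of pitch-class directions weighted by the chroma value at video frame i.

    chroma: one row per pitch class, one column per audio frame.
    chroma_latents: one (unscaled) direction vector per pitch class.
    """
    n_cols = len(chroma[0])
    col = int(i * n_cols / n_steps)          # audio column aligned with frame i
    dim = len(chroma_latents[0])
    offset = [0.0] * dim
    for c, direction in enumerate(chroma_latents):
        weight = chroma[c][col] * chr_scale  # chr_scale applied once here
        for d in range(dim):
            offset[d] += direction[d] * weight
    return offset


# Made-up values: 2 pitch classes x 4 audio frames, 2-D directions.
chroma = [[0.0, 0.2, 0.5, 0.1],
          [1.0, 0.0, 1.0, 0.3]]
chroma_latents = [[1.0, 0.0],
                  [0.0, 2.0]]
base_latent = [0.1, -0.1]  # would come from the base latent walk

offset = music_offset(chroma, chroma_latents, i=5, n_steps=8, chr_scale=0.5)
frame_latent = [b + o for b, o in zip(base_latent, offset)]
print(offset)  # [0.25, 1.0]
print(frame_latent)
```

Louder pitch classes push the latent further along their own random direction, so when the harmony changes, the butterfly drifts in a different direction; the Savitzky-Golay smoothing in the committed code keeps those pushes from flickering frame to frame.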

- assets/gen_mosaic_lowres.jpg ADDED
- assets/outputs/0_fake.jpg ADDED
- assets/outputs/100_fake.jpg ADDED
- assets/outputs/101_fake.jpg ADDED
- assets/outputs/102_fake.jpg ADDED
- assets/outputs/103_fake.jpg ADDED
- assets/outputs/104_fake.jpg ADDED
- assets/outputs/105_fake.jpg ADDED
- assets/outputs/106_fake.jpg ADDED
- assets/outputs/107_fake.jpg ADDED
- assets/outputs/108_fake.jpg ADDED
- assets/outputs/109_fake.jpg ADDED
- assets/outputs/10_fake.jpg ADDED
- assets/outputs/110_fake.jpg ADDED
- assets/outputs/111_fake.jpg ADDED
- assets/outputs/112_fake.jpg ADDED
- assets/outputs/113_fake.jpg ADDED
- assets/outputs/114_fake.jpg ADDED
- assets/outputs/115_fake.jpg ADDED
- assets/outputs/116_fake.jpg ADDED
- assets/outputs/117_fake.jpg ADDED
- assets/outputs/118_fake.jpg ADDED
- assets/outputs/119_fake.jpg ADDED
- assets/outputs/11_fake.jpg ADDED
- assets/outputs/120_fake.jpg ADDED
- assets/outputs/121_fake.jpg ADDED
- assets/outputs/122_fake.jpg ADDED
- assets/outputs/123_fake.jpg ADDED
- assets/outputs/124_fake.jpg ADDED
- assets/outputs/125_fake.jpg ADDED
- assets/outputs/126_fake.jpg ADDED
- assets/outputs/127_fake.jpg ADDED
- assets/outputs/128_fake.jpg ADDED
- assets/outputs/129_fake.jpg ADDED
- assets/outputs/12_fake.jpg ADDED
- assets/outputs/130_fake.jpg ADDED
- assets/outputs/131_fake.jpg ADDED
- assets/outputs/132_fake.jpg ADDED
- assets/outputs/133_fake.jpg ADDED
- assets/outputs/134_fake.jpg ADDED
- assets/outputs/135_fake.jpg ADDED
- assets/outputs/136_fake.jpg ADDED
- assets/outputs/137_fake.jpg ADDED
- assets/outputs/138_fake.jpg ADDED
- assets/outputs/139_fake.jpg ADDED
- assets/outputs/13_fake.jpg ADDED
- assets/outputs/140_fake.jpg ADDED