Space status: Runtime error

Commit 8eeee11 by Geraldine J: "Add application files"

Parent: f86e484

- OceanApp_YOLOv5_Results.png +0 -0
- app.py +102 -0
- best.pt +3 -0
- requirements.txt +26 -0

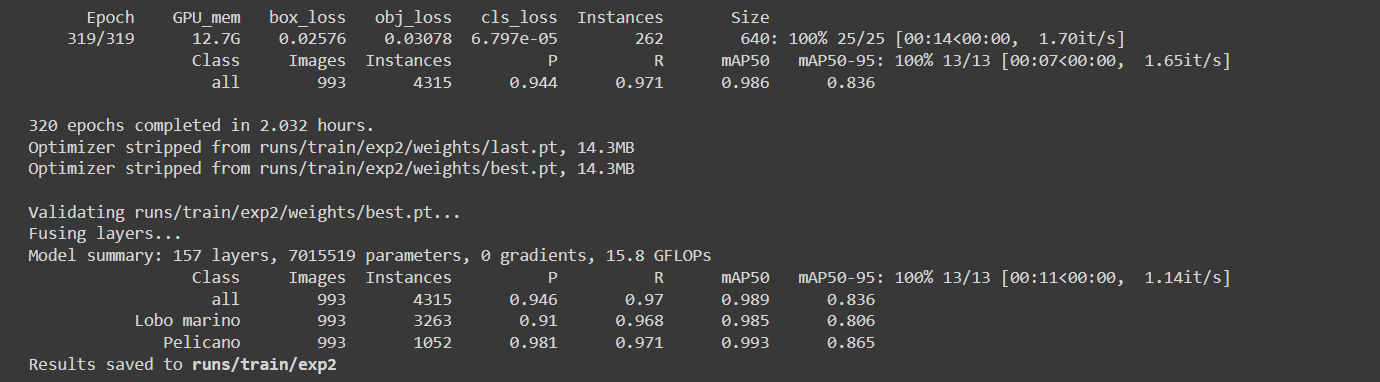

OceanApp_YOLOv5_Results.png

ADDED

|

app.py

ADDED

|

@@ -0,0 +1,102 @@


```python
# -*- coding: utf-8 -*-
"""Deploy OceanApp demo.ipynb

Automatically generated by Colaboratory.

Original file is located at
    https://colab.research.google.com/drive/1j0T8gdLIa0X8fzkIgFpXDoU27BF49RUz?usp=sharing

# Model

YOLO is a family of compound-scaled object detection models trained on the COCO dataset. It includes simple functionality for Test Time Augmentation (TTA), model ensembling, hyperparameter evolution, and export to ONNX, CoreML and TFLite.

## Gradio Inference

This notebook can optionally be accelerated with a GPU runtime.

----------------------------------------------------------------------

YOLOv5 Gradio demo

*Author: Ultralytics LLC and Gradio*

# Code
"""

#!pip install -qr https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt gradio  # install dependencies

import logging

import gradio as gr
import torch
from PIL import Image

# Example images
torch.hub.download_url_to_file('https://i.pinimg.com/564x/18/0b/00/180b00e454362ff5caabe87d9a763a6f.jpg', 'ejemplo1.jpg')
torch.hub.download_url_to_file('https://i.pinimg.com/564x/3b/2f/d4/3b2fd4b6881b64429f208c5f32e5e4be.jpg', 'ejemplo2.jpg')

# Model
#model = torch.hub.load('ultralytics/yolov5', 'yolov5s')  # force_reload=True to update

#model = torch.hub.load('ultralytics/yolov5', 'custom', path='best.pt')  # local model or Google Colab
model = torch.hub.load('ultralytics/yolov5', 'custom', path='best.pt', force_reload=True, autoshape=True)  # local model or Google Colab
#model = torch.hub.load('path/to/yolov5', 'custom', path='/content/yolov56.pt', source='local')  # local repo


def yolo(size, iou, conf, im):
    """Wrapper fn for Gradio: resize the image, run inference, return the annotated image."""
    try:
        g = int(size) / max(im.size)  # gain
        # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter.
        im = im.resize(tuple(int(x * g) for x in im.size), Image.LANCZOS)  # resize

        model.iou = iou  # NMS IoU threshold
        model.conf = conf  # confidence threshold

        results2 = model(im)  # inference
        results2.render()  # updates results2.ims with boxes and labels
        return Image.fromarray(results2.ims[0])
    except Exception as e:
        logging.error(e, exc_info=True)
        return None  # no output image on failure


#------------ Interface -------------

# gr.inputs / gr.outputs and the default= argument were removed in newer Gradio releases.
in1 = gr.Radio(['640', '1280'], label="Image size", value='640', type='value')
in2 = gr.Slider(minimum=0, maximum=1, step=0.05, value=0.45, label='NMS IoU threshold')
in3 = gr.Slider(minimum=0, maximum=1, step=0.05, value=0.50, label='Confidence threshold')
in4 = gr.Image(type='pil', label="Original Image")

out2 = gr.Image(type="pil", label="YOLOv5")

#-------------- Text -----
title = 'OceanApp'
description = """
<p>
<center>
System for recognizing species in purse-seine bycatch using convolutional neural networks, built for a fishing company operating in the ports of Callao and Paracas.
<img src="https://i.pinimg.com/564x/3e/b8/f7/3eb8f7c348dffd7b3dffcafe81fbf2a6.jpg" alt="logo" width="250"/>
</center>
</p>
"""
article = "<p style='text-align: center'><a href='https://docs.google.com/presentation/d/1T5CdcLSzgRe8cQpoi_sPB4U170551NGOrZNykcJD0xU/edit?usp=sharing' target='_blank'>For more info, click to open the white paper</a></p><p style='text-align: center'><a href='https://drive.google.com/drive/folders/1owACN3HGIMo4zm2GQ_jf-OhGNeBVRS7l?usp=sharing' target='_blank'>Google Colab Demo</a></p><p style='text-align: center'><a href='https://github.com/Municipalidad-de-Vicente-Lopez/Trampa_Barcelo' target='_blank'>Repo Github</a></p>"

examples = [['640', 0.45, 0.75, 'ejemplo1.jpg'], ['640', 0.45, 0.75, 'ejemplo2.jpg']]

iface = gr.Interface(yolo, inputs=[in1, in2, in3, in4], outputs=out2, title=title, description=description,
                     article=article, examples=examples, analytics_enabled=False)

# Launch once. Chaining .launch() onto gr.Interface(...) would bind launch()'s
# return value, not the Interface, to iface, so a second iface.launch() fails.
iface.launch(debug=True)

"""For YOLOv5 PyTorch Hub inference with **PIL**, **OpenCV**, **Numpy** or **PyTorch** inputs, please see the full [YOLOv5 PyTorch Hub Tutorial](https://github.com/ultralytics/yolov5/issues/36).

## Citation

[](https://zenodo.org/badge/latestdoi/264818686)
"""
```

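The two sliders in app.py set `model.iou` and `model.conf`. Roughly, YOLOv5 first discards candidate boxes whose confidence does not exceed `conf`. It then runs non-maximum suppression (NMS), dropping any box whose intersection-over-union (IoU) with a higher-scoring kept box exceeds `iou`.

The sketch below illustrates those two steps in pure Python. It is class-agnostic, and the names `iou` and `filter_detections` are hypothetical. YOLOv5 itself works on batched tensors, uses `torchvision.ops.nms`, and by default suppresses per class, so treat this as a conceptual model only.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: List[Box], scores: List[float],
                      conf_thres: float, iou_thres: float) -> List[int]:
    """Return indices of kept boxes: confidence cut, then greedy NMS."""
    # Step 1: drop low-confidence candidates (the role of model.conf).
    candidates = [i for i, s in enumerate(scores) if s > conf_thres]
    # Step 2: visit the survivors from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # Keep a box only if it overlaps no already-kept box by more than
        # iou_thres (the role of model.iou).
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 10, 10)]
    scores = [0.9, 0.8, 0.7, 0.3]
    print(filter_detections(boxes, scores, 0.5, 0.45))  # [0, 2]
```

Boxes 0 and 1 overlap with an IoU of about 0.68, so with the app's default IoU threshold of 0.45 the weaker one is suppressed. Raising the threshold above 0.68 would keep both. Box 3 never reaches NMS because its score of 0.3 fails the confidence cut.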

best.pt (added)

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:388ea86a83bda4541be7e4185195588615482df9a9cd64bc0144d01ea01bb9c9
size 14339573
```
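`best.pt` is committed as a Git LFS pointer rather than as the weights themselves. The pointer is three `key value` lines giving the spec version, the SHA-256 `oid` of the real object, and its `size` in bytes (14339573, about 14.3 MB). If the repository is fetched without LFS, `best.pt` on disk is this small text file, and loading it as a model would fail.

Below is a stdlib-only sketch that parses the pointer and checks whether a downloaded file actually matches it. The helper names `parse_lfs_pointer` and `matches_pointer` are hypothetical.

```python
import hashlib
from typing import Dict, Union

LFS_SPEC_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text: str) -> Dict[str, Union[str, int]]:
    """Parse a Git LFS v1 pointer ("key value" per line) into a dict."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value.strip()
    if fields.get("version") != LFS_SPEC_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algorithm, _, digest = fields["oid"].partition(":")
    return {"algorithm": algorithm, "oid": digest, "size": int(fields["size"])}


def matches_pointer(data: bytes, pointer: Dict[str, Union[str, int]]) -> bool:
    """True if `data` has the size and SHA-256 digest the pointer promises."""
    if pointer["algorithm"] != "sha256":
        raise ValueError(f"unsupported oid algorithm: {pointer['algorithm']}")
    return len(data) == pointer["size"] and hashlib.sha256(data).hexdigest() == pointer["oid"]


POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:388ea86a83bda4541be7e4185195588615482df9a9cd64bc0144d01ea01bb9c9
size 14339573
"""

if __name__ == "__main__":
    info = parse_lfs_pointer(POINTER)
    print(info["algorithm"], info["size"])  # sha256 14339573
    # A file that still holds the pointer text (LFS object not fetched) does not match:
    print(matches_pointer(POINTER.encode(), info))  # False
```

To check a local copy, read it with `open("best.pt", "rb")` and pass the bytes to `matches_pointer`. Reading the whole file is fine at this size; for much larger objects, hashing in chunks would be preferable.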

requirements.txt (added)

@@ -0,0 +1,26 @@

```text
# pip install -r requirements.txt
# base ----------------------------------------
matplotlib>=3.2.2
numpy>=1.18.5
opencv-python-headless
Pillow
PyYAML>=5.3.1
scipy>=1.4.1
torch>=1.7.0
torchvision>=0.8.1
tqdm>=4.41.0
# logging -------------------------------------
tensorboard>=2.4.1
# wandb
# plotting ------------------------------------
seaborn>=0.11.0
pandas
# export --------------------------------------
# coremltools>=4.1
# onnx>=1.9.0
# scikit-learn==0.19.2  # for coreml quantization
# extras --------------------------------------
# Cython  # for pycocotools https://github.com/cocodataset/cocoapi/issues/172
# pycocotools>=2.0  # COCO mAP
# albumentations>=1.0.3
thop  # FLOPs computation
```
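Several entries in this file (`opencv-python-headless`, `Pillow`, `pandas`, `thop`) carry no version bound, so a fresh build installs whatever release is current. This matters here: Pillow 10 removed the `Image.ANTIALIAS` constant.

Below is a small sketch that maps each requirement to its specifier and lists the unpinned ones. `parse_requirements` is a hypothetical helper and a deliberate simplification, not a PEP 508 parser. It does not handle extras, environment markers, `-r`/`-e` options, or URLs that contain `#`.

```python
import re
from typing import Dict

# Package name, then whatever version specifier follows it (may be empty).
REQ_LINE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)\s*(.*)$")


def parse_requirements(text: str) -> Dict[str, str]:
    """Map each requirement name to its version specifier ('' if unpinned)."""
    reqs: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop full-line and inline comments
        if not line:
            continue
        match = REQ_LINE.match(line)
        if match:
            reqs[match.group(1)] = match.group(2).strip()
    return reqs


if __name__ == "__main__":
    sample = """\
matplotlib>=3.2.2
Pillow
torch>=1.7.0
# wandb
thop  # FLOPs computation
"""
    reqs = parse_requirements(sample)
    print([name for name, spec in reqs.items() if not spec])  # ['Pillow', 'thop']
```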