Spaces:

Runtime error

Runtime error

Commit

•

4a285f6

1

Parent(s):

f3d8775

added files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +133 -0

- README.md +1 -1

- Self-Correction-Human-Parsing-for-ACGPN/datasets/__init__.py +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/datasets/datasets.py +201 -0

- Self-Correction-Human-Parsing-for-ACGPN/datasets/simple_extractor_dataset.py +80 -0

- Self-Correction-Human-Parsing-for-ACGPN/datasets/target_generation.py +40 -0

- Self-Correction-Human-Parsing-for-ACGPN/evaluate.py +209 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/README.md +38 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/coco_style_annotation_creator/human_to_coco.py +166 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/coco_style_annotation_creator/pycococreatortools.py +114 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/coco_style_annotation_creator/test_human2coco_format.py +74 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/data/DemoDataset/global_pic/demo.jpg +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/demo.ipynb +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/demo/demo.jpg +0 -0

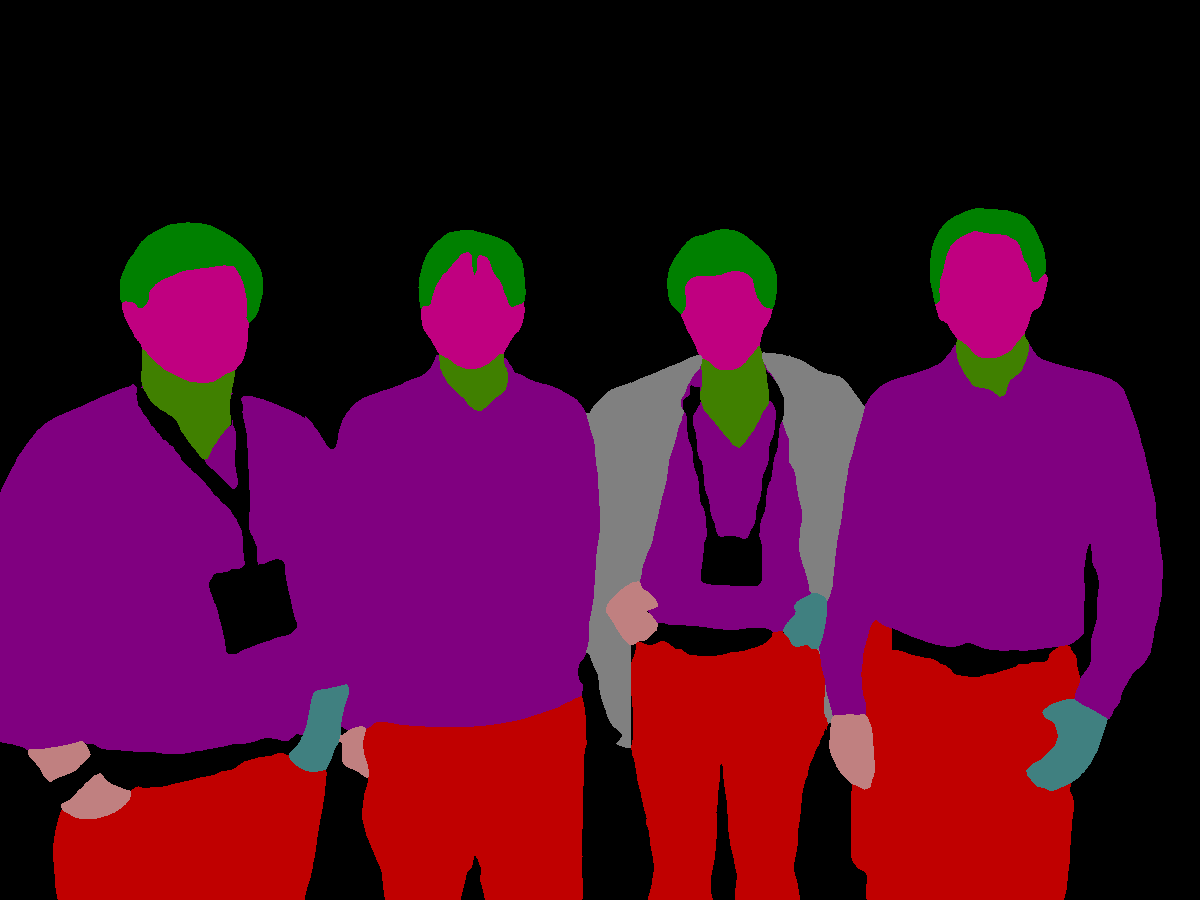

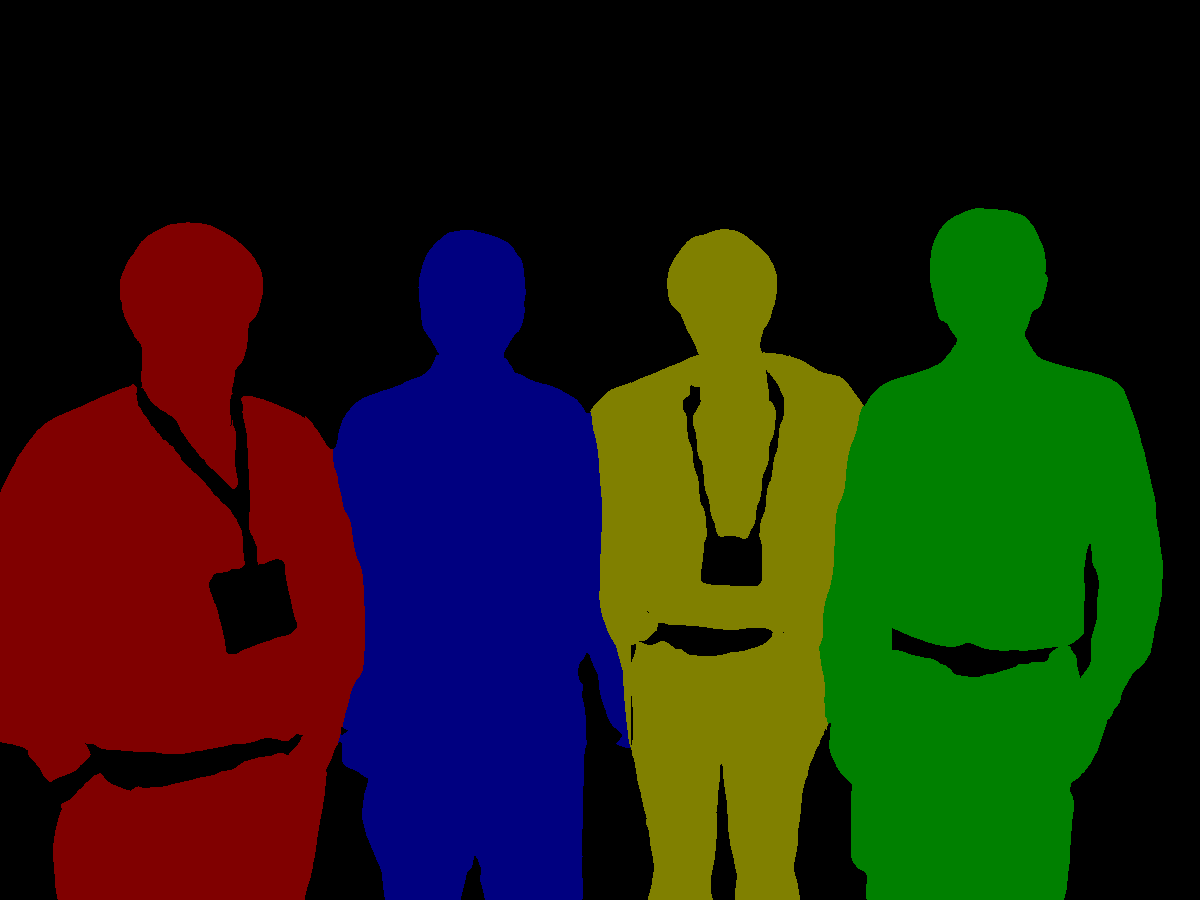

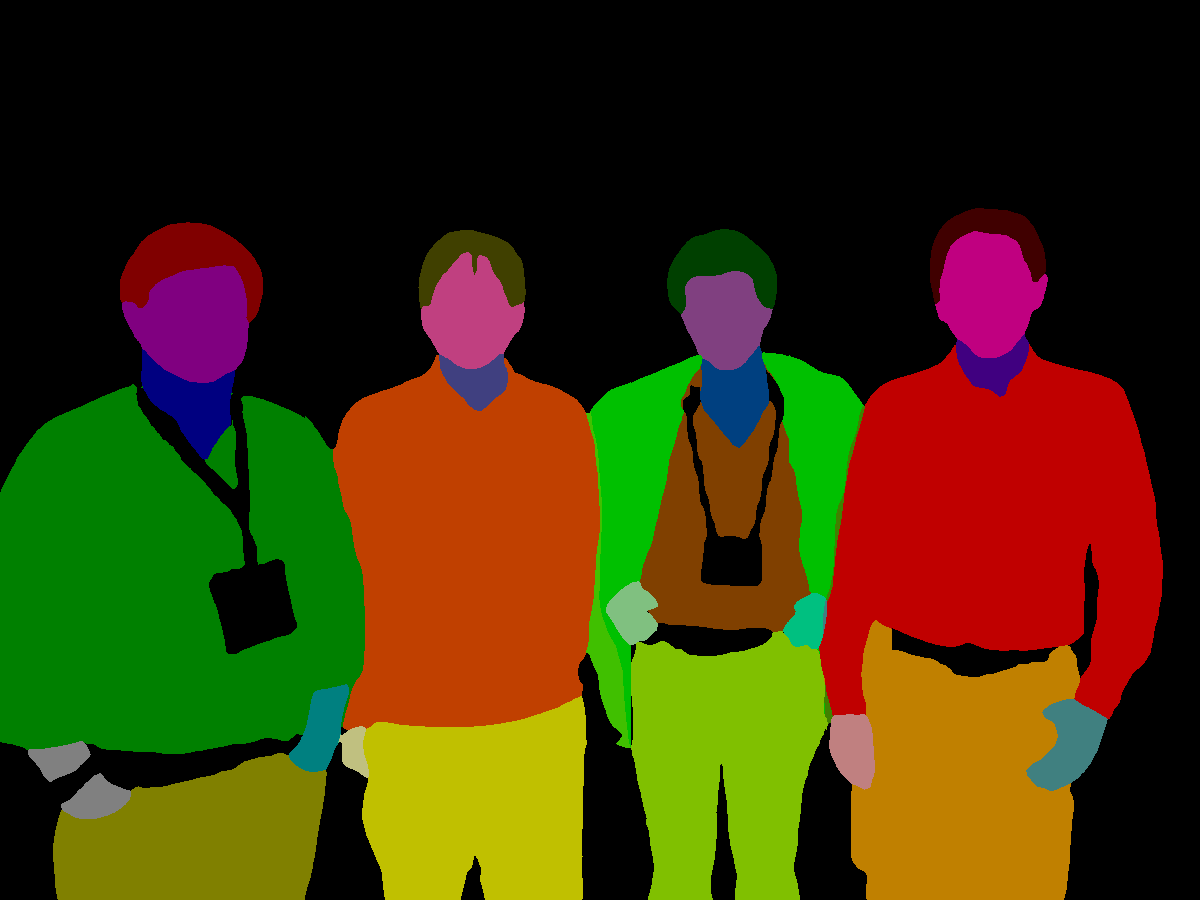

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/demo/demo_global_human_parsing.png +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/demo/demo_instance_human_mask.png +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/demo/demo_multiple_human_parsing.png +0 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.circleci/config.yml +179 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.clang-format +85 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.flake8 +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/CODE_OF_CONDUCT.md +5 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/CONTRIBUTING.md +49 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/Detectron2-Logo-Horz.svg +1 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE.md +5 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/bugs.md +36 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/config.yml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/feature-request.md +31 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/questions-help-support.md +26 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/unexpected-problems-bugs.md +45 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.github/pull_request_template.md +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/.gitignore +46 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/GETTING_STARTED.md +79 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/INSTALL.md +184 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/LICENSE +201 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/MODEL_ZOO.md +903 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/README.md +56 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/Base-RCNN-C4.yaml +18 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/Base-RCNN-DilatedC5.yaml +31 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/Base-RCNN-FPN.yaml +42 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/Base-RetinaNet.yaml +24 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/fast_rcnn_R_50_FPN_1x.yaml +17 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_101_C4_3x.yaml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_101_DC5_3x.yaml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_101_FPN_3x.yaml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_C4_1x.yaml +6 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_C4_3x.yaml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_DC5_1x.yaml +6 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_DC5_3x.yaml +9 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_FPN_1x.yaml +6 -0

- Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/detectron2/configs/COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml +9 -0

.gitignore

ADDED

|

@@ -0,0 +1,133 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Custom

|

| 2 |

+

.DS_Store

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

# Byte-compiled / optimized / DLL files

|

| 6 |

+

__pycache__/

|

| 7 |

+

*.py[cod]

|

| 8 |

+

*$py.class

|

| 9 |

+

|

| 10 |

+

# C extensions

|

| 11 |

+

*.so

|

| 12 |

+

|

| 13 |

+

# Distribution / packaging

|

| 14 |

+

.Python

|

| 15 |

+

build/

|

| 16 |

+

develop-eggs/

|

| 17 |

+

dist/

|

| 18 |

+

downloads/

|

| 19 |

+

eggs/

|

| 20 |

+

.eggs/

|

| 21 |

+

lib/

|

| 22 |

+

lib64/

|

| 23 |

+

parts/

|

| 24 |

+

sdist/

|

| 25 |

+

var/

|

| 26 |

+

wheels/

|

| 27 |

+

pip-wheel-metadata/

|

| 28 |

+

share/python-wheels/

|

| 29 |

+

*.egg-info/

|

| 30 |

+

.installed.cfg

|

| 31 |

+

*.egg

|

| 32 |

+

MANIFEST

|

| 33 |

+

|

| 34 |

+

# PyInstaller

|

| 35 |

+

# Usually these files are written by a python script from a template

|

| 36 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 37 |

+

*.manifest

|

| 38 |

+

*.spec

|

| 39 |

+

|

| 40 |

+

# Installer logs

|

| 41 |

+

pip-log.txt

|

| 42 |

+

pip-delete-this-directory.txt

|

| 43 |

+

|

| 44 |

+

# Unit test / coverage reports

|

| 45 |

+

htmlcov/

|

| 46 |

+

.tox/

|

| 47 |

+

.nox/

|

| 48 |

+

.coverage

|

| 49 |

+

.coverage.*

|

| 50 |

+

.cache

|

| 51 |

+

nosetests.xml

|

| 52 |

+

coverage.xml

|

| 53 |

+

*.cover

|

| 54 |

+

*.py,cover

|

| 55 |

+

.hypothesis/

|

| 56 |

+

.pytest_cache/

|

| 57 |

+

|

| 58 |

+

# Translations

|

| 59 |

+

*.mo

|

| 60 |

+

*.pot

|

| 61 |

+

|

| 62 |

+

# Django stuff:

|

| 63 |

+

*.log

|

| 64 |

+

local_settings.py

|

| 65 |

+

db.sqlite3

|

| 66 |

+

db.sqlite3-journal

|

| 67 |

+

|

| 68 |

+

# Flask stuff:

|

| 69 |

+

instance/

|

| 70 |

+

.webassets-cache

|

| 71 |

+

|

| 72 |

+

# Scrapy stuff:

|

| 73 |

+

.scrapy

|

| 74 |

+

|

| 75 |

+

# Sphinx documentation

|

| 76 |

+

docs/_build/

|

| 77 |

+

|

| 78 |

+

# PyBuilder

|

| 79 |

+

target/

|

| 80 |

+

|

| 81 |

+

# Jupyter Notebook

|

| 82 |

+

.ipynb_checkpoints

|

| 83 |

+

|

| 84 |

+

# IPython

|

| 85 |

+

profile_default/

|

| 86 |

+

ipython_config.py

|

| 87 |

+

|

| 88 |

+

# pyenv

|

| 89 |

+

.python-version

|

| 90 |

+

|

| 91 |

+

# pipenv

|

| 92 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 93 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 94 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 95 |

+

# install all needed dependencies.

|

| 96 |

+

#Pipfile.lock

|

| 97 |

+

|

| 98 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 99 |

+

__pypackages__/

|

| 100 |

+

|

| 101 |

+

# Celery stuff

|

| 102 |

+

celerybeat-schedule

|

| 103 |

+

celerybeat.pid

|

| 104 |

+

|

| 105 |

+

# SageMath parsed files

|

| 106 |

+

*.sage.py

|

| 107 |

+

|

| 108 |

+

# Environments

|

| 109 |

+

.env

|

| 110 |

+

.venv

|

| 111 |

+

env/

|

| 112 |

+

venv/

|

| 113 |

+

ENV/

|

| 114 |

+

env.bak/

|

| 115 |

+

venv.bak/

|

| 116 |

+

|

| 117 |

+

# Spyder project settings

|

| 118 |

+

.spyderproject

|

| 119 |

+

.spyproject

|

| 120 |

+

|

| 121 |

+

# Rope project settings

|

| 122 |

+

.ropeproject

|

| 123 |

+

|

| 124 |

+

# mkdocs documentation

|

| 125 |

+

/site

|

| 126 |

+

|

| 127 |

+

# mypy

|

| 128 |

+

.mypy_cache/

|

| 129 |

+

.dmypy.json

|

| 130 |

+

dmypy.json

|

| 131 |

+

|

| 132 |

+

# Pyre type checker

|

| 133 |

+

.pyre/

|

README.md

CHANGED

|

@@ -1,6 +1,6 @@

|

|

| 1 |

---

|

| 2 |

title: Fifa Tryon Demo

|

| 3 |

-

emoji:

|

| 4 |

colorFrom: yellow

|

| 5 |

colorTo: red

|

| 6 |

sdk: gradio

|

|

|

|

| 1 |

---

|

| 2 |

title: Fifa Tryon Demo

|

| 3 |

+

emoji: 🧥👚👕👔

|

| 4 |

colorFrom: yellow

|

| 5 |

colorTo: red

|

| 6 |

sdk: gradio

|

Self-Correction-Human-Parsing-for-ACGPN/datasets/__init__.py

ADDED

|

File without changes

|

Self-Correction-Human-Parsing-for-ACGPN/datasets/datasets.py

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

# -*- encoding: utf-8 -*-

|

| 3 |

+

|

| 4 |

+

"""

|

| 5 |

+

@Author : Peike Li

|

| 6 |

+

@Contact : peike.li@yahoo.com

|

| 7 |

+

@File : datasets.py

|

| 8 |

+

@Time : 8/4/19 3:35 PM

|

| 9 |

+

@Desc :

|

| 10 |

+

@License : This source code is licensed under the license found in the

|

| 11 |

+

LICENSE file in the root directory of this source tree.

|

| 12 |

+

"""

|

| 13 |

+

|

| 14 |

+

import os

|

| 15 |

+

import numpy as np

|

| 16 |

+

import random

|

| 17 |

+

import torch

|

| 18 |

+

import cv2

|

| 19 |

+

from torch.utils import data

|

| 20 |

+

from utils.transforms import get_affine_transform

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

class LIPDataSet(data.Dataset):

|

| 24 |

+

def __init__(self, root, dataset, crop_size=[473, 473], scale_factor=0.25,

|

| 25 |

+

rotation_factor=30, ignore_label=255, transform=None):

|

| 26 |

+

self.root = root

|

| 27 |

+

self.aspect_ratio = crop_size[1] * 1.0 / crop_size[0]

|

| 28 |

+

self.crop_size = np.asarray(crop_size)

|

| 29 |

+

self.ignore_label = ignore_label

|

| 30 |

+

self.scale_factor = scale_factor

|

| 31 |

+

self.rotation_factor = rotation_factor

|

| 32 |

+

self.flip_prob = 0.5

|

| 33 |

+

self.transform = transform

|

| 34 |

+

self.dataset = dataset

|

| 35 |

+

|

| 36 |

+

list_path = os.path.join(self.root, self.dataset + '_id.txt')

|

| 37 |

+

train_list = [i_id.strip() for i_id in open(list_path)]

|

| 38 |

+

|

| 39 |

+

self.train_list = train_list

|

| 40 |

+

self.number_samples = len(self.train_list)

|

| 41 |

+

|

| 42 |

+

def __len__(self):

|

| 43 |

+

return self.number_samples

|

| 44 |

+

|

| 45 |

+

def _box2cs(self, box):

|

| 46 |

+

x, y, w, h = box[:4]

|

| 47 |

+

return self._xywh2cs(x, y, w, h)

|

| 48 |

+

|

| 49 |

+

def _xywh2cs(self, x, y, w, h):

|

| 50 |

+

center = np.zeros((2), dtype=np.float32)

|

| 51 |

+

center[0] = x + w * 0.5

|

| 52 |

+

center[1] = y + h * 0.5

|

| 53 |

+

if w > self.aspect_ratio * h:

|

| 54 |

+

h = w * 1.0 / self.aspect_ratio

|

| 55 |

+

elif w < self.aspect_ratio * h:

|

| 56 |

+

w = h * self.aspect_ratio

|

| 57 |

+

scale = np.array([w * 1.0, h * 1.0], dtype=np.float32)

|

| 58 |

+

return center, scale

|

| 59 |

+

|

| 60 |

+

def __getitem__(self, index):

|

| 61 |

+

train_item = self.train_list[index]

|

| 62 |

+

|

| 63 |

+

im_path = os.path.join(self.root, self.dataset + '_images', train_item + '.jpg')

|

| 64 |

+

parsing_anno_path = os.path.join(self.root, self.dataset + '_segmentations', train_item + '.png')

|

| 65 |

+

|

| 66 |

+

im = cv2.imread(im_path, cv2.IMREAD_COLOR)

|

| 67 |

+

h, w, _ = im.shape

|

| 68 |

+

parsing_anno = np.zeros((h, w), dtype=np.long)

|

| 69 |

+

|

| 70 |

+

# Get person center and scale

|

| 71 |

+

person_center, s = self._box2cs([0, 0, w - 1, h - 1])

|

| 72 |

+

r = 0

|

| 73 |

+

|

| 74 |

+

if self.dataset != 'test':

|

| 75 |

+

# Get pose annotation

|

| 76 |

+

parsing_anno = cv2.imread(parsing_anno_path, cv2.IMREAD_GRAYSCALE)

|

| 77 |

+

if self.dataset == 'train' or self.dataset == 'trainval':

|

| 78 |

+

sf = self.scale_factor

|

| 79 |

+

rf = self.rotation_factor

|

| 80 |

+

s = s * np.clip(np.random.randn() * sf + 1, 1 - sf, 1 + sf)

|

| 81 |

+

r = np.clip(np.random.randn() * rf, -rf * 2, rf * 2) if random.random() <= 0.6 else 0

|

| 82 |

+

|

| 83 |

+

if random.random() <= self.flip_prob:

|

| 84 |

+

im = im[:, ::-1, :]

|

| 85 |

+

parsing_anno = parsing_anno[:, ::-1]

|

| 86 |

+

person_center[0] = im.shape[1] - person_center[0] - 1

|

| 87 |

+

right_idx = [15, 17, 19]

|

| 88 |

+

left_idx = [14, 16, 18]

|

| 89 |

+

for i in range(0, 3):

|

| 90 |

+

right_pos = np.where(parsing_anno == right_idx[i])

|

| 91 |

+

left_pos = np.where(parsing_anno == left_idx[i])

|

| 92 |

+

parsing_anno[right_pos[0], right_pos[1]] = left_idx[i]

|

| 93 |

+

parsing_anno[left_pos[0], left_pos[1]] = right_idx[i]

|

| 94 |

+

|

| 95 |

+

trans = get_affine_transform(person_center, s, r, self.crop_size)

|

| 96 |

+

input = cv2.warpAffine(

|

| 97 |

+

im,

|

| 98 |

+

trans,

|

| 99 |

+

(int(self.crop_size[1]), int(self.crop_size[0])),

|

| 100 |

+

flags=cv2.INTER_LINEAR,

|

| 101 |

+

borderMode=cv2.BORDER_CONSTANT,

|

| 102 |

+

borderValue=(0, 0, 0))

|

| 103 |

+

|

| 104 |

+

if self.transform:

|

| 105 |

+

input = self.transform(input)

|

| 106 |

+

|

| 107 |

+

meta = {

|

| 108 |

+

'name': train_item,

|

| 109 |

+

'center': person_center,

|

| 110 |

+

'height': h,

|

| 111 |

+

'width': w,

|

| 112 |

+

'scale': s,

|

| 113 |

+

'rotation': r

|

| 114 |

+

}

|

| 115 |

+

|

| 116 |

+

if self.dataset == 'val' or self.dataset == 'test':

|

| 117 |

+

return input, meta

|

| 118 |

+

else:

|

| 119 |

+

label_parsing = cv2.warpAffine(

|

| 120 |

+

parsing_anno,

|

| 121 |

+

trans,

|

| 122 |

+

(int(self.crop_size[1]), int(self.crop_size[0])),

|

| 123 |

+

flags=cv2.INTER_NEAREST,

|

| 124 |

+

borderMode=cv2.BORDER_CONSTANT,

|

| 125 |

+

borderValue=(255))

|

| 126 |

+

|

| 127 |

+

label_parsing = torch.from_numpy(label_parsing)

|

| 128 |

+

|

| 129 |

+

return input, label_parsing, meta

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

class LIPDataValSet(data.Dataset):

|

| 133 |

+

def __init__(self, root, dataset='val', crop_size=[473, 473], transform=None, flip=False):

|

| 134 |

+

self.root = root

|

| 135 |

+

self.crop_size = crop_size

|

| 136 |

+

self.transform = transform

|

| 137 |

+

self.flip = flip

|

| 138 |

+

self.dataset = dataset

|

| 139 |

+

self.root = root

|

| 140 |

+

self.aspect_ratio = crop_size[1] * 1.0 / crop_size[0]

|

| 141 |

+

self.crop_size = np.asarray(crop_size)

|

| 142 |

+

|

| 143 |

+

list_path = os.path.join(self.root, self.dataset + '_id.txt')

|

| 144 |

+

val_list = [i_id.strip() for i_id in open(list_path)]

|

| 145 |

+

|

| 146 |

+

self.val_list = val_list

|

| 147 |

+

self.number_samples = len(self.val_list)

|

| 148 |

+

|

| 149 |

+

def __len__(self):

|

| 150 |

+

return len(self.val_list)

|

| 151 |

+

|

| 152 |

+

def _box2cs(self, box):

|

| 153 |

+

x, y, w, h = box[:4]

|

| 154 |

+

return self._xywh2cs(x, y, w, h)

|

| 155 |

+

|

| 156 |

+

def _xywh2cs(self, x, y, w, h):

|

| 157 |

+

center = np.zeros((2), dtype=np.float32)

|

| 158 |

+

center[0] = x + w * 0.5

|

| 159 |

+

center[1] = y + h * 0.5

|

| 160 |

+

if w > self.aspect_ratio * h:

|

| 161 |

+

h = w * 1.0 / self.aspect_ratio

|

| 162 |

+

elif w < self.aspect_ratio * h:

|

| 163 |

+

w = h * self.aspect_ratio

|

| 164 |

+

scale = np.array([w * 1.0, h * 1.0], dtype=np.float32)

|

| 165 |

+

|

| 166 |

+

return center, scale

|

| 167 |

+

|

| 168 |

+

def __getitem__(self, index):

|

| 169 |

+

val_item = self.val_list[index]

|

| 170 |

+

# Load training image

|

| 171 |

+

im_path = os.path.join(self.root, self.dataset + '_images', val_item + '.jpg')

|

| 172 |

+

im = cv2.imread(im_path, cv2.IMREAD_COLOR)

|

| 173 |

+

h, w, _ = im.shape

|

| 174 |

+

# Get person center and scale

|

| 175 |

+

person_center, s = self._box2cs([0, 0, w - 1, h - 1])

|

| 176 |

+

r = 0

|

| 177 |

+

trans = get_affine_transform(person_center, s, r, self.crop_size)

|

| 178 |

+

input = cv2.warpAffine(

|

| 179 |

+

im,

|

| 180 |

+

trans,

|

| 181 |

+

(int(self.crop_size[1]), int(self.crop_size[0])),

|

| 182 |

+

flags=cv2.INTER_LINEAR,

|

| 183 |

+

borderMode=cv2.BORDER_CONSTANT,

|

| 184 |

+

borderValue=(0, 0, 0))

|

| 185 |

+

input = self.transform(input)

|

| 186 |

+

flip_input = input.flip(dims=[-1])

|

| 187 |

+

if self.flip:

|

| 188 |

+

batch_input_im = torch.stack([input, flip_input])

|

| 189 |

+

else:

|

| 190 |

+

batch_input_im = input

|

| 191 |

+

|

| 192 |

+

meta = {

|

| 193 |

+

'name': val_item,

|

| 194 |

+

'center': person_center,

|

| 195 |

+

'height': h,

|

| 196 |

+

'width': w,

|

| 197 |

+

'scale': s,

|

| 198 |

+

'rotation': r

|

| 199 |

+

}

|

| 200 |

+

|

| 201 |

+

return batch_input_im, meta

|

Self-Correction-Human-Parsing-for-ACGPN/datasets/simple_extractor_dataset.py

ADDED

|

@@ -0,0 +1,80 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

# -*- encoding: utf-8 -*-

|

| 3 |

+

|

| 4 |

+

"""

|

| 5 |

+

@Author : Peike Li

|

| 6 |

+

@Contact : peike.li@yahoo.com

|

| 7 |

+

@File : dataset.py

|

| 8 |

+

@Time : 8/30/19 9:12 PM

|

| 9 |

+

@Desc : Dataset Definition

|

| 10 |

+

@License : This source code is licensed under the license found in the

|

| 11 |

+

LICENSE file in the root directory of this source tree.

|

| 12 |

+

"""

|

| 13 |

+

|

| 14 |

+

import os

|

| 15 |

+

import cv2

|

| 16 |

+

import numpy as np

|

| 17 |

+

|

| 18 |

+

from torch.utils import data

|

| 19 |

+

from utils.transforms import get_affine_transform

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

class SimpleFolderDataset(data.Dataset):

|

| 23 |

+

def __init__(self, root, input_size=[512, 512], transform=None):

|

| 24 |

+

self.root = root

|

| 25 |

+

self.input_size = input_size

|

| 26 |

+

self.transform = transform

|

| 27 |

+

self.aspect_ratio = input_size[1] * 1.0 / input_size[0]

|

| 28 |

+

self.input_size = np.asarray(input_size)

|

| 29 |

+

self.file_list = []

|

| 30 |

+

for file in os.listdir(self.root):

|

| 31 |

+

if file.endswith('.jpg') or file.endswith('.png'):

|

| 32 |

+

self.file_list.append(file)

|

| 33 |

+

|

| 34 |

+

def __len__(self):

|

| 35 |

+

return len(self.file_list)

|

| 36 |

+

|

| 37 |

+

def _box2cs(self, box):

|

| 38 |

+

x, y, w, h = box[:4]

|

| 39 |

+

return self._xywh2cs(x, y, w, h)

|

| 40 |

+

|

| 41 |

+

def _xywh2cs(self, x, y, w, h):

|

| 42 |

+

center = np.zeros((2), dtype=np.float32)

|

| 43 |

+

center[0] = x + w * 0.5

|

| 44 |

+

center[1] = y + h * 0.5

|

| 45 |

+

if w > self.aspect_ratio * h:

|

| 46 |

+

h = w * 1.0 / self.aspect_ratio

|

| 47 |

+

elif w < self.aspect_ratio * h:

|

| 48 |

+

w = h * self.aspect_ratio

|

| 49 |

+

scale = np.array([w, h], dtype=np.float32)

|

| 50 |

+

return center, scale

|

| 51 |

+

|

| 52 |

+

def __getitem__(self, index):

|

| 53 |

+

img_name = self.file_list[index]

|

| 54 |

+

img_path = os.path.join(self.root, img_name)

|

| 55 |

+

img = cv2.imread(img_path, cv2.IMREAD_COLOR)

|

| 56 |

+

h, w, _ = img.shape

|

| 57 |

+

|

| 58 |

+

# Get person center and scale

|

| 59 |

+

person_center, s = self._box2cs([0, 0, w - 1, h - 1])

|

| 60 |

+

r = 0

|

| 61 |

+

trans = get_affine_transform(person_center, s, r, self.input_size)

|

| 62 |

+

input = cv2.warpAffine(

|

| 63 |

+

img,

|

| 64 |

+

trans,

|

| 65 |

+

(int(self.input_size[1]), int(self.input_size[0])),

|

| 66 |

+

flags=cv2.INTER_LINEAR,

|

| 67 |

+

borderMode=cv2.BORDER_CONSTANT,

|

| 68 |

+

borderValue=(0, 0, 0))

|

| 69 |

+

|

| 70 |

+

input = self.transform(input)

|

| 71 |

+

meta = {

|

| 72 |

+

'name': img_name,

|

| 73 |

+

'center': person_center,

|

| 74 |

+

'height': h,

|

| 75 |

+

'width': w,

|

| 76 |

+

'scale': s,

|

| 77 |

+

'rotation': r

|

| 78 |

+

}

|

| 79 |

+

|

| 80 |

+

return input, meta

|

Self-Correction-Human-Parsing-for-ACGPN/datasets/target_generation.py

ADDED

|

@@ -0,0 +1,40 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

from torch.nn import functional as F

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

def generate_edge_tensor(label, edge_width=3):

|

| 6 |

+

label = label.type(torch.cuda.FloatTensor)

|

| 7 |

+

if len(label.shape) == 2:

|

| 8 |

+

label = label.unsqueeze(0)

|

| 9 |

+

n, h, w = label.shape

|

| 10 |

+

edge = torch.zeros(label.shape, dtype=torch.float).cuda()

|

| 11 |

+

# right

|

| 12 |

+

edge_right = edge[:, 1:h, :]

|

| 13 |

+

edge_right[(label[:, 1:h, :] != label[:, :h - 1, :]) & (label[:, 1:h, :] != 255)

|

| 14 |

+

& (label[:, :h - 1, :] != 255)] = 1

|

| 15 |

+

|

| 16 |

+

# up

|

| 17 |

+

edge_up = edge[:, :, :w - 1]

|

| 18 |

+

edge_up[(label[:, :, :w - 1] != label[:, :, 1:w])

|

| 19 |

+

& (label[:, :, :w - 1] != 255)

|

| 20 |

+

& (label[:, :, 1:w] != 255)] = 1

|

| 21 |

+

|

| 22 |

+

# upright

|

| 23 |

+

edge_upright = edge[:, :h - 1, :w - 1]

|

| 24 |

+

edge_upright[(label[:, :h - 1, :w - 1] != label[:, 1:h, 1:w])

|

| 25 |

+

& (label[:, :h - 1, :w - 1] != 255)

|

| 26 |

+

& (label[:, 1:h, 1:w] != 255)] = 1

|

| 27 |

+

|

| 28 |

+

# bottomright

|

| 29 |

+

edge_bottomright = edge[:, :h - 1, 1:w]

|

| 30 |

+

edge_bottomright[(label[:, :h - 1, 1:w] != label[:, 1:h, :w - 1])

|

| 31 |

+

& (label[:, :h - 1, 1:w] != 255)

|

| 32 |

+

& (label[:, 1:h, :w - 1] != 255)] = 1

|

| 33 |

+

|

| 34 |

+

kernel = torch.ones((1, 1, edge_width, edge_width), dtype=torch.float).cuda()

|

| 35 |

+

with torch.no_grad():

|

| 36 |

+

edge = edge.unsqueeze(1)

|

| 37 |

+

edge = F.conv2d(edge, kernel, stride=1, padding=1)

|

| 38 |

+

edge[edge!=0] = 1

|

| 39 |

+

edge = edge.squeeze()

|

| 40 |

+

return edge

|

Self-Correction-Human-Parsing-for-ACGPN/evaluate.py

ADDED

|

@@ -0,0 +1,209 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

# -*- encoding: utf-8 -*-

|

| 3 |

+

|

| 4 |

+

"""

|

| 5 |

+

@Author : Peike Li

|

| 6 |

+

@Contact : peike.li@yahoo.com

|

| 7 |

+

@File : evaluate.py

|

| 8 |

+

@Time : 8/4/19 3:36 PM

|

| 9 |

+

@Desc :

|

| 10 |

+

@License : This source code is licensed under the license found in the

|

| 11 |

+

LICENSE file in the root directory of this source tree.

|

| 12 |

+

"""

|

| 13 |

+

|

| 14 |

+

import os

|

| 15 |

+

import argparse

|

| 16 |

+

import numpy as np

|

| 17 |

+

import torch

|

| 18 |

+

|

| 19 |

+

from torch.utils import data

|

| 20 |

+

from tqdm import tqdm

|

| 21 |

+

from PIL import Image as PILImage

|

| 22 |

+

import torchvision.transforms as transforms

|

| 23 |

+

import torch.backends.cudnn as cudnn

|

| 24 |

+

|

| 25 |

+

import networks

|

| 26 |

+

from datasets.datasets import LIPDataValSet

|

| 27 |

+

from utils.miou import compute_mean_ioU

|

| 28 |

+

from utils.transforms import BGR2RGB_transform

|

| 29 |

+

from utils.transforms import transform_parsing

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def get_arguments():

|

| 33 |

+

"""Parse all the arguments provided from the CLI.

|

| 34 |

+

|

| 35 |

+

Returns:

|

| 36 |

+

A list of parsed arguments.

|

| 37 |

+

"""

|

| 38 |

+

parser = argparse.ArgumentParser(description="Self Correction for Human Parsing")

|

| 39 |

+

|

| 40 |

+

# Network Structure

|

| 41 |

+

parser.add_argument("--arch", type=str, default='resnet101')

|

| 42 |

+

# Data Preference

|

| 43 |

+

parser.add_argument("--data-dir", type=str, default='./data/LIP')

|

| 44 |

+

parser.add_argument("--batch-size", type=int, default=1)

|

| 45 |

+

parser.add_argument("--input-size", type=str, default='473,473')

|

| 46 |

+

parser.add_argument("--num-classes", type=int, default=20)

|

| 47 |

+

parser.add_argument("--ignore-label", type=int, default=255)

|

| 48 |

+

parser.add_argument("--random-mirror", action="store_true")

|

| 49 |

+

parser.add_argument("--random-scale", action="store_true")

|

| 50 |

+

# Evaluation Preference

|

| 51 |

+

parser.add_argument("--log-dir", type=str, default='./log')

|

| 52 |

+

parser.add_argument("--model-restore", type=str, default='./log/checkpoint.pth.tar')

|

| 53 |

+

parser.add_argument("--gpu", type=str, default='0', help="choose gpu device.")

|

| 54 |

+

parser.add_argument("--save-results", action="store_true", help="whether to save the results.")

|

| 55 |

+

parser.add_argument("--flip", action="store_true", help="random flip during the test.")

|

| 56 |

+

parser.add_argument("--multi-scales", type=str, default='1', help="multiple scales during the test")

|

| 57 |

+

return parser.parse_args()

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

def get_palette(num_cls):

|

| 61 |

+

""" Returns the color map for visualizing the segmentation mask.

|

| 62 |

+

Args:

|

| 63 |

+

num_cls: Number of classes

|

| 64 |

+

Returns:

|

| 65 |

+

The color map

|

| 66 |

+

"""

|

| 67 |

+

n = num_cls

|

| 68 |

+

palette = [0] * (n * 3)

|

| 69 |

+

for j in range(0, n):

|

| 70 |

+

lab = j

|

| 71 |

+

palette[j * 3 + 0] = 0

|

| 72 |

+

palette[j * 3 + 1] = 0

|

| 73 |

+

palette[j * 3 + 2] = 0

|

| 74 |

+

i = 0

|

| 75 |

+

while lab:

|

| 76 |

+

palette[j * 3 + 0] |= (((lab >> 0) & 1) << (7 - i))

|

| 77 |

+

palette[j * 3 + 1] |= (((lab >> 1) & 1) << (7 - i))

|

| 78 |

+

palette[j * 3 + 2] |= (((lab >> 2) & 1) << (7 - i))

|

| 79 |

+

i += 1

|

| 80 |

+

lab >>= 3

|

| 81 |

+

return palette

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

def multi_scale_testing(model, batch_input_im, crop_size=[473, 473], flip=True, multi_scales=[1]):

|

| 85 |

+

flipped_idx = (15, 14, 17, 16, 19, 18)

|

| 86 |

+

if len(batch_input_im.shape) > 4:

|

| 87 |

+

batch_input_im = batch_input_im.squeeze()

|

| 88 |

+

if len(batch_input_im.shape) == 3:

|

| 89 |

+

batch_input_im = batch_input_im.unsqueeze(0)

|

| 90 |

+

|

| 91 |

+

interp = torch.nn.Upsample(size=crop_size, mode='bilinear', align_corners=True)

|

| 92 |

+

ms_outputs = []

|

| 93 |

+

for s in multi_scales:

|

| 94 |

+

interp_im = torch.nn.Upsample(scale_factor=s, mode='bilinear', align_corners=True)

|

| 95 |

+

scaled_im = interp_im(batch_input_im)

|

| 96 |

+

parsing_output = model(scaled_im)

|

| 97 |

+

parsing_output = parsing_output[0][-1]

|

| 98 |

+

output = parsing_output[0]

|

| 99 |

+

if flip:

|

| 100 |

+

flipped_output = parsing_output[1]

|

| 101 |

+

flipped_output[14:20, :, :] = flipped_output[flipped_idx, :, :]

|

| 102 |

+

output += flipped_output.flip(dims=[-1])

|

| 103 |

+

output *= 0.5

|

| 104 |

+

output = interp(output.unsqueeze(0))

|

| 105 |

+

ms_outputs.append(output[0])

|

| 106 |

+

ms_fused_parsing_output = torch.stack(ms_outputs)

|

| 107 |

+

ms_fused_parsing_output = ms_fused_parsing_output.mean(0)

|

| 108 |

+

ms_fused_parsing_output = ms_fused_parsing_output.permute(1, 2, 0) # HWC

|

| 109 |

+

parsing = torch.argmax(ms_fused_parsing_output, dim=2)

|

| 110 |

+

parsing = parsing.data.cpu().numpy()

|

| 111 |

+

ms_fused_parsing_output = ms_fused_parsing_output.data.cpu().numpy()

|

| 112 |

+

return parsing, ms_fused_parsing_output

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

def main():

|

| 116 |

+

"""Create the model and start the evaluation process."""

|

| 117 |

+

args = get_arguments()

|

| 118 |

+

multi_scales = [float(i) for i in args.multi_scales.split(',')]

|

| 119 |

+

gpus = [int(i) for i in args.gpu.split(',')]

|

| 120 |

+

assert len(gpus) == 1

|

| 121 |

+

if not args.gpu == 'None':

|

| 122 |

+

os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu

|

| 123 |

+

|

| 124 |

+

cudnn.benchmark = True

|

| 125 |

+

cudnn.enabled = True

|

| 126 |

+

|

| 127 |

+

h, w = map(int, args.input_size.split(','))

|

| 128 |

+

input_size = [h, w]

|

| 129 |

+

|

| 130 |

+

model = networks.init_model(args.arch, num_classes=args.num_classes, pretrained=None)

|

| 131 |

+

|

| 132 |

+

IMAGE_MEAN = model.mean

|

| 133 |

+

IMAGE_STD = model.std

|

| 134 |

+

INPUT_SPACE = model.input_space

|

| 135 |

+

print('image mean: {}'.format(IMAGE_MEAN))

|

| 136 |

+

print('image std: {}'.format(IMAGE_STD))

|

| 137 |

+

print('input space:{}'.format(INPUT_SPACE))

|

| 138 |

+

if INPUT_SPACE == 'BGR':

|

| 139 |

+

print('BGR Transformation')

|

| 140 |

+

transform = transforms.Compose([

|

| 141 |

+

transforms.ToTensor(),

|

| 142 |

+

transforms.Normalize(mean=IMAGE_MEAN,

|

| 143 |

+

std=IMAGE_STD),

|

| 144 |

+

|

| 145 |

+

])

|

| 146 |

+

if INPUT_SPACE == 'RGB':

|

| 147 |

+

print('RGB Transformation')

|

| 148 |

+

transform = transforms.Compose([

|

| 149 |

+

transforms.ToTensor(),

|

| 150 |

+

BGR2RGB_transform(),

|

| 151 |

+

transforms.Normalize(mean=IMAGE_MEAN,

|

| 152 |

+

std=IMAGE_STD),

|

| 153 |

+

])

|

| 154 |

+

|

| 155 |

+

# Data loader

|

| 156 |

+

lip_test_dataset = LIPDataValSet(args.data_dir, 'val', crop_size=input_size, transform=transform, flip=args.flip)

|

| 157 |

+

num_samples = len(lip_test_dataset)

|

| 158 |

+

print('Totoal testing sample numbers: {}'.format(num_samples))

|

| 159 |

+

testloader = data.DataLoader(lip_test_dataset, batch_size=args.batch_size, shuffle=False, pin_memory=True)

|

| 160 |

+

|

| 161 |

+

# Load model weight

|

| 162 |

+

state_dict = torch.load(args.model_restore)['state_dict']

|

| 163 |

+

from collections import OrderedDict

|

| 164 |

+

new_state_dict = OrderedDict()

|

| 165 |

+

for k, v in state_dict.items():

|

| 166 |

+

name = k[7:] # remove `module.`

|

| 167 |

+

new_state_dict[name] = v

|

| 168 |

+

model.load_state_dict(new_state_dict)

|

| 169 |

+

model.cuda()

|

| 170 |

+

model.eval()

|

| 171 |

+

|

| 172 |

+

sp_results_dir = os.path.join(args.log_dir, 'sp_results')

|

| 173 |

+

if not os.path.exists(sp_results_dir):

|

| 174 |

+

os.makedirs(sp_results_dir)

|

| 175 |

+

|

| 176 |

+

palette = get_palette(20)

|

| 177 |

+

parsing_preds = []

|

| 178 |

+

scales = np.zeros((num_samples, 2), dtype=np.float32)

|

| 179 |

+

centers = np.zeros((num_samples, 2), dtype=np.int32)

|

| 180 |

+

with torch.no_grad():

|

| 181 |

+

for idx, batch in enumerate(tqdm(testloader)):

|

| 182 |

+

image, meta = batch

|

| 183 |

+

if (len(image.shape) > 4):

|

| 184 |

+

image = image.squeeze()

|

| 185 |

+

im_name = meta['name'][0]

|

| 186 |

+

c = meta['center'].numpy()[0]

|

| 187 |

+

s = meta['scale'].numpy()[0]

|

| 188 |

+

w = meta['width'].numpy()[0]

|

| 189 |

+

h = meta['height'].numpy()[0]

|

| 190 |

+

scales[idx, :] = s

|

| 191 |

+

centers[idx, :] = c

|

| 192 |

+

parsing, logits = multi_scale_testing(model, image.cuda(), crop_size=input_size, flip=args.flip,

|

| 193 |

+

multi_scales=multi_scales)

|

| 194 |

+

if args.save_results:

|

| 195 |

+

parsing_result = transform_parsing(parsing, c, s, w, h, input_size)

|

| 196 |

+

parsing_result_path = os.path.join(sp_results_dir, im_name + '.png')

|

| 197 |

+

output_im = PILImage.fromarray(np.asarray(parsing_result, dtype=np.uint8))

|

| 198 |

+

output_im.putpalette(palette)

|

| 199 |

+

output_im.save(parsing_result_path)

|

| 200 |

+

|

| 201 |

+

parsing_preds.append(parsing)

|

| 202 |

+

assert len(parsing_preds) == num_samples

|

| 203 |

+

mIoU = compute_mean_ioU(parsing_preds, scales, centers, args.num_classes, args.data_dir, input_size)

|

| 204 |

+

print(mIoU)

|

| 205 |

+

return

|

| 206 |

+

|

| 207 |

+

|

| 208 |

+

if __name__ == '__main__':

|

| 209 |

+

main()

|

Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/README.md

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Self Correction for Human Parsing

|

| 2 |

+

|

| 3 |

+

We propose a simple yet effective multiple human parsing framework by extending our self-correction network.

|

| 4 |

+

|

| 5 |

+

Here we show an example usage jupyter notebook in [demo.ipynb](./demo.ipynb).

|

| 6 |

+

|

| 7 |

+

## Requirements

|

| 8 |

+

|

| 9 |

+

Please see [INSTALL.md](https://github.com/facebookresearch/detectron2/blob/master/INSTALL.md) for further requirements.

|

| 10 |

+

|

| 11 |

+

## Citation

|

| 12 |

+

|

| 13 |

+

Please cite our work if you find this repo useful in your research.

|

| 14 |

+

|

| 15 |

+

```latex

|

| 16 |

+

@article{li2019self,

|

| 17 |

+

title={Self-Correction for Human Parsing},

|

| 18 |

+

author={Li, Peike and Xu, Yunqiu and Wei, Yunchao and Yang, Yi},

|

| 19 |

+

journal={arXiv preprint arXiv:1910.09777},

|

| 20 |

+

year={2019}

|

| 21 |

+

}

|

| 22 |

+

```

|

| 23 |

+

|

| 24 |

+

## Visualization

|

| 25 |

+

|

| 26 |

+

* Source Image.

|

| 27 |

+

|

| 28 |

+

* Instance Human Mask.

|

| 29 |

+

|

| 30 |

+

* Global Human Parsing Result.

|

| 31 |

+

|

| 32 |

+

* Multiple Human Parsing Result.

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

## Related

|

| 36 |

+

|

| 37 |

+

Our implementation is based on the [Detectron2](https://github.com/facebookresearch/detectron2).

|

| 38 |

+

|

Self-Correction-Human-Parsing-for-ACGPN/mhp_extension/coco_style_annotation_creator/human_to_coco.py

ADDED

|

@@ -0,0 +1,166 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import datetime

|

| 3 |

+

import json

|

| 4 |

+

import os

|

| 5 |

+

from PIL import Image

|

| 6 |

+

import numpy as np

|

| 7 |

+

|

| 8 |

+

import pycococreatortools

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

def get_arguments():

|

| 12 |

+

parser = argparse.ArgumentParser(description="transform mask annotation to coco annotation")

|

| 13 |

+

parser.add_argument("--dataset", type=str, default='CIHP', help="name of dataset (CIHP, MHPv2 or VIP)")

|

| 14 |

+

parser.add_argument("--json_save_dir", type=str, default='../data/msrcnn_finetune_annotations',

|

| 15 |

+

help="path to save coco-style annotation json file")

|

| 16 |

+

parser.add_argument("--use_val", type=bool, default=False,

|

| 17 |

+

help="use train+val set for finetuning or not")

|

| 18 |

+

parser.add_argument("--train_img_dir", type=str, default='../data/instance-level_human_parsing/Training/Images',

|

| 19 |

+

help="train image path")

|

| 20 |

+

parser.add_argument("--train_anno_dir", type=str,

|

| 21 |

+

default='../data/instance-level_human_parsing/Training/Human_ids',

|

| 22 |

+

help="train human mask path")

|

| 23 |

+

parser.add_argument("--val_img_dir", type=str, default='../data/instance-level_human_parsing/Validation/Images',

|

| 24 |

+

help="val image path")

|

| 25 |

+

parser.add_argument("--val_anno_dir", type=str,

|

| 26 |

+

default='../data/instance-level_human_parsing/Validation/Human_ids',

|

| 27 |

+

help="val human mask path")

|

| 28 |

+

return parser.parse_args()

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

def main(args):

|

| 32 |

+

INFO = {

|

| 33 |

+

"description": args.split_name + " Dataset",

|

| 34 |

+

"url": "",

|

| 35 |

+

"version": "",

|

| 36 |

+

"year": 2019,

|

| 37 |

+

"contributor": "xyq",

|

| 38 |

+

"date_created": datetime.datetime.utcnow().isoformat(' ')

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

LICENSES = [

|

| 42 |

+

{

|

| 43 |

+

"id": 1,

|

| 44 |

+

"name": "",

|

| 45 |

+

"url": ""

|

| 46 |

+

}

|

| 47 |

+

]

|

| 48 |

+

|

| 49 |

+

CATEGORIES = [

|

| 50 |

+

{

|

| 51 |

+

'id': 1,

|

| 52 |

+

'name': 'person',

|

| 53 |

+

'supercategory': 'person',

|

| 54 |

+

},

|

| 55 |

+

]

|

| 56 |

+

|

| 57 |

+

coco_output = {

|

| 58 |

+

"info": INFO,

|

| 59 |

+

"licenses": LICENSES,

|

| 60 |

+

"categories": CATEGORIES,

|

| 61 |

+

"images": [],

|

| 62 |

+

"annotations": []

|

| 63 |

+

}

|

| 64 |

+

|

| 65 |

+

image_id = 1

|

| 66 |

+

segmentation_id = 1

|

| 67 |

+

|

| 68 |

+

for image_name in os.listdir(args.train_img_dir):

|

| 69 |

+

image = Image.open(os.path.join(args.train_img_dir, image_name))

|

| 70 |

+

image_info = pycococreatortools.create_image_info(

|

| 71 |

+

image_id, image_name, image.size

|

| 72 |

+

)

|

| 73 |

+

coco_output["images"].append(image_info)

|

| 74 |

+

|

| 75 |

+

human_mask_name = os.path.splitext(image_name)[0] + '.png'

|

| 76 |

+

human_mask = np.asarray(Image.open(os.path.join(args.train_anno_dir, human_mask_name)))

|

| 77 |

+

human_gt_labels = np.unique(human_mask)

|

| 78 |

+

|

| 79 |

+

for i in range(1, len(human_gt_labels)):

|

| 80 |

+

category_info = {'id': 1, 'is_crowd': 0}

|

| 81 |

+

binary_mask = np.uint8(human_mask == i)

|

| 82 |

+

annotation_info = pycococreatortools.create_annotation_info(

|

| 83 |

+

segmentation_id, image_id, category_info, binary_mask,

|

| 84 |

+

image.size, tolerance=10

|

| 85 |

+

)

|

| 86 |

+

if annotation_info is not None:

|

| 87 |

+

coco_output["annotations"].append(annotation_info)

|

| 88 |

+

|

| 89 |

+

segmentation_id += 1

|

| 90 |

+

image_id += 1

|

| 91 |

+

|

| 92 |

+

if not os.path.exists(args.json_save_dir):

|

| 93 |