Commit 49bceed

Parent(s): e7eede8

First commit

This view is limited to 50 files because it contains too many changes.

- .gitattributes +34 -34

- .gitignore +163 -0

- 01-🚀 Homepage.py +149 -0

- README.md +77 -13

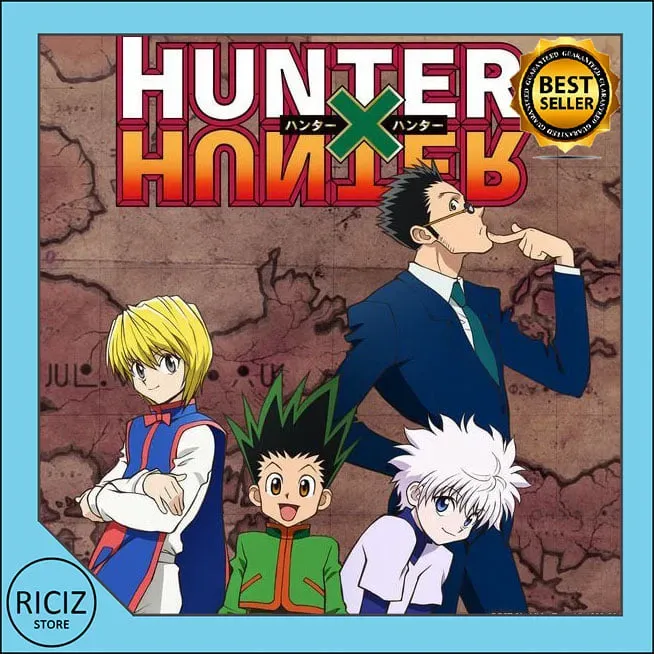

- assets/example_images/gon/306e5d35-b301-4299-8022-0c89dc0b7690.png +0 -0

- assets/example_images/gon/3509df87-a9cd-4500-a07a-0373cbe36715.png +0 -0

- assets/example_images/gon/620c33e9-59fe-418d-8953-1444e3cfa599.png +0 -0

- assets/example_images/gon/ab07531a-ab8f-445f-8e9d-d478dd67a73b.png +0 -0

- assets/example_images/gon/df746603-8dd9-4397-92d8-bf49f8df20d2.png +0 -0

- assets/example_images/hisoka/04af934d-ffb5-4cc9-83ad-88c585678e55.png +0 -0

- assets/example_images/hisoka/13d7867a-28e0-45f0-b141-8b8624d0e1e5.png +0 -0

- assets/example_images/hisoka/41954fdc-d740-49ec-a7ba-15cac7c22c11.png +0 -0

- assets/example_images/hisoka/422e9625-c523-4532-aa5b-dd4e21b209fc.png +0 -0

- assets/example_images/hisoka/80f95e87-2f7a-4808-9d01-4383feab90e2.png +0 -0

- assets/example_images/killua/0d2a44c4-c11e-474e-ac8b-7c0e84c7f879.png +0 -0

- assets/example_images/killua/2817e633-3239-41f1-a2bf-1be874bddf5e.png +0 -0

- assets/example_images/killua/4501242f-9bda-49b6-a3c5-23f97c8353c3.png +0 -0

- assets/example_images/killua/8aca13ab-a5b2-4192-ae4b-3b73e8c663f3.png +0 -0

- assets/example_images/killua/8b7e1854-8ca7-4ef1-8887-2c64b0309712.png +0 -0

- assets/example_images/kurapika/02265b41-9833-41eb-ad60-e043753f74b9.png +0 -0

- assets/example_images/kurapika/0650e968-d61b-4c4a-98bd-7ecdd2b991de.png +0 -0

- assets/example_images/kurapika/2728dfb5-788b-4be7-ad1b-e6d23297ecf3.png +0 -0

- assets/example_images/kurapika/3613a920-3efe-49d8-a39a-227bddefa86a.png +0 -0

- assets/example_images/kurapika/405b19b0-d982-44aa-b4c8-18e3a5e373b3.png +0 -0

- assets/example_images/leorio/00beabbf-063e-42b3-85e2-ce51c586195f.png +0 -0

- assets/example_images/leorio/613e8ffb-7534-481d-b780-6d23ecd31de4.png +0 -0

- assets/example_images/leorio/af2a59f2-fcf2-4621-bb4f-6540687b390a.png +0 -0

- assets/example_images/leorio/b134831a-5ee0-40c8-9a25-1a11329741d3.png +0 -0

- assets/example_images/leorio/ccc511a0-8a98-481c-97a1-c564a874bb60.png +0 -0

- assets/example_images/others/Presiden_Sukarno.jpg +0 -0

- assets/example_images/others/Tipe-Nen-yang-ada-di-Anime-Hunter-x-Hunter.jpg +0 -0

- assets/example_images/others/d29492bbe7604505a6f1b5394f62b393.png +0 -0

- assets/example_images/others/f575c3a5f23146b59bac51267db0ddb3.png +0 -0

- assets/example_images/others/fa4548a8f57041edb7fa19f8bf302326.png +0 -0

- assets/example_images/others/fb7c8048d54f48a29ab6aaf7f8383712.png +0 -0

- assets/example_images/others/fe96e8fce17b474195f8add2632b758e.png +0 -0

- assets/images/author.jpg +0 -0

- models/anime_face_detection_model/__init__.py +1 -0

- models/anime_face_detection_model/ssd_model.py +454 -0

- models/base_model/__init__.py +4 -0

- models/base_model/grad_cam.py +126 -0

- models/base_model/image_embeddings.py +67 -0

- models/base_model/image_similarity.py +86 -0

- models/base_model/main_model.py +52 -0

- models/deep_learning/__init__.py +4 -0

- models/deep_learning/backbone_model.py +109 -0

- models/deep_learning/deep_learning.py +90 -0

- models/deep_learning/grad_cam.py +59 -0

- models/deep_learning/image_embeddings.py +58 -0

- models/deep_learning/image_similarity.py +63 -0

.gitattributes

CHANGED

@@ -1,34 +1,34 @@
-*.7z filter=lfs diff=lfs merge=lfs -text
-*.arrow filter=lfs diff=lfs merge=lfs -text
-*.bin filter=lfs diff=lfs merge=lfs -text
-*.bz2 filter=lfs diff=lfs merge=lfs -text
-*.ckpt filter=lfs diff=lfs merge=lfs -text
-*.ftz filter=lfs diff=lfs merge=lfs -text
-*.gz filter=lfs diff=lfs merge=lfs -text
-*.h5 filter=lfs diff=lfs merge=lfs -text
-*.joblib filter=lfs diff=lfs merge=lfs -text
-*.lfs.* filter=lfs diff=lfs merge=lfs -text
-*.mlmodel filter=lfs diff=lfs merge=lfs -text
-*.model filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
-*.npy filter=lfs diff=lfs merge=lfs -text
-*.npz filter=lfs diff=lfs merge=lfs -text
-*.onnx filter=lfs diff=lfs merge=lfs -text
-*.ot filter=lfs diff=lfs merge=lfs -text
-*.parquet filter=lfs diff=lfs merge=lfs -text
-*.pb filter=lfs diff=lfs merge=lfs -text
-*.pickle filter=lfs diff=lfs merge=lfs -text
-*.pkl filter=lfs diff=lfs merge=lfs -text
-*.pt filter=lfs diff=lfs merge=lfs -text
-*.pth filter=lfs diff=lfs merge=lfs -text
-*.rar filter=lfs diff=lfs merge=lfs -text
-*.safetensors filter=lfs diff=lfs merge=lfs -text
-saved_model/**/* filter=lfs diff=lfs merge=lfs -text
-*.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tflite filter=lfs diff=lfs merge=lfs -text
-*.tgz filter=lfs diff=lfs merge=lfs -text
-*.wasm filter=lfs diff=lfs merge=lfs -text
-*.xz filter=lfs diff=lfs merge=lfs -text
-*.zip filter=lfs diff=lfs merge=lfs -text
-*.zst filter=lfs diff=lfs merge=lfs -text
-*tfevents* filter=lfs diff=lfs merge=lfs -text
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
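The `.gitattributes` rules route matching files through Git LFS. As a rough illustration of which paths those patterns catch, here is a minimal Python sketch. It is an approximation under stated assumptions: it uses `fnmatch` on the file's base name, which is not identical to Git's own pattern matching, it covers only a handful of the patterns, and the helper name `is_lfs_tracked` is hypothetical.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# A subset of the patterns listed in .gitattributes (not the full list).
LFS_PATTERNS = ["*.bin", "*.h5", "*.onnx", "*.pt", "*.pth", "*.safetensors", "*tfevents*"]


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: match the file's base name against each pattern.

    Assumption: base-name matching with fnmatch is close enough for these
    simple extension patterns; Git's real matching rules are richer.
    """
    name = PurePosixPath(path).name
    return any(fnmatch(name, pattern) for pattern in patterns)


if __name__ == "__main__":
    for candidate in ["models/weights/model.pth", "01-Homepage.py"]:
        print(candidate, is_lfs_tracked(candidate))
```

For an authoritative answer on a real checkout, `git check-attr filter -- <path>` reports the attribute Git actually applies.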

.gitignore

ADDED

@@ -0,0 +1,163 @@
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+.python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/#use-with-ide
+.pdm.toml
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+#.idea/
+
+# Custom gitignore
+run_app.sh

01-🚀 Homepage.py

ADDED

@@ -0,0 +1,149 @@
+import streamlit as st
+from streamlit_extras.switch_page_button import switch_page
+
+from utils.functional import generate_empty_space, set_page_config
+
+# Set page config
+set_page_config("Homepage", "🚀")
+
+# First Header
+st.markdown("# 😊 About Me")
+st.write(
+    """
+    👋 Hello everyone! My name is Hafidh Soekma Ardiansyah and I'm a student at Surabaya State University, majoring in Management Information Vocational Programs. 🎓
+
+    I am excited to share with you all about my final project for the semester. 📚 My project is about classifying anime characters from the popular Hunter X Hunter anime series using various machine learning algorithms. 🤖
+
+    To start the project, I collected a dataset of images featuring the characters from the series. 📷 Then, I preprocessed the data to ensure that the algorithms could efficiently process it. 💻
+
+    After the data preparation, I used various algorithms such as Deep Learning, Prototypical Networks, and many more to classify the characters. 🧠
+
+    Through this project, I hope to showcase my skills in machine learning and contribute to the community of anime fans who are interested in image classification. 🙌
+
+    Thank you for your attention, and please feel free to ask me any questions about the project! 🤗
+
+    """
+)
+
+st.markdown("# 🕵️ About the Project")
+
+st.markdown("### 🦸 HxH Character Anime Classification with Prototypical Networks")
+st.write(
+    "Classify your favorite Hunter x Hunter characters with our cutting-edge Prototypical Networks! 🦸♂️🦸♀️"
+)
+go_to_page_0 = st.button(
+    "Go to page 0",
+)
+generate_empty_space(2)
+if go_to_page_0:
+    switch_page("hxh character anime classification with prototypical networks")
+
+st.markdown("### 🔎 HxH Character Anime Detection with Prototypical Networks")
+st.write(
+    "Detect the presence of your beloved Hunter x Hunter characters using Prototypical Networks! 🔎🕵️♂️🕵️♀️"
+)
+go_to_page_1 = st.button(
+    "Go to page 1",
+)
+generate_empty_space(2)
+if go_to_page_1:
+    switch_page("hxh character anime detection with prototypical networks")
+
+st.markdown("### 📊 Image Similarity with Prototypical Networks")
+st.write(
+    "Discover how similar your Images are to one another with our Prototypical Networks! 📊🤔"
+)
+go_to_page_2 = st.button(
+    "Go to page 2",
+)
+generate_empty_space(2)
+if go_to_page_2:
+    switch_page("image similarity with prototypical networks")
+
+st.markdown("### 🌌 Image Embeddings with Prototypical Networks")
+st.write(
+    "Unleash the power of image embeddings to represent Images in a whole new way with our Prototypical Networks! 🌌🤯"
+)
+go_to_page_3 = st.button(
+    "Go to page 3",
+)
+generate_empty_space(2)
+if go_to_page_3:
+    switch_page("image embeddings with prototypical networks")
+
+st.markdown("### 🤖 HxH Character Anime Classification with Deep Learning")
+st.write(
+    "Experience the next level of character classification with our Deep Learning models trained on Hunter x Hunter anime characters! 🤖📈"
+)
+go_to_page_4 = st.button(
+    "Go to page 4",
+)
+generate_empty_space(2)
+if go_to_page_4:
+    switch_page("hxh character anime classification with deep learning")
+
+st.markdown("### 📷 HxH Character Anime Detection with Deep Learning")
+st.write(
+    "Detect your favorite Hunter x Hunter characters with our Deep Learning models! 📷🕵️♂️🕵️♀️"
+)
+go_to_page_5 = st.button(
+    "Go to page 5",
+)
+generate_empty_space(2)
+if go_to_page_5:
+    switch_page("hxh character anime detection with deep learning")
+
+st.markdown("### 🖼️ Image Similarity with Deep Learning")
+st.write(
+    "Discover the similarities and differences between your Images with our Deep Learning models! 🖼️🧐"
+)
+go_to_page_6 = st.button(
+    "Go to page 6",
+)
+generate_empty_space(2)
+if go_to_page_6:
+    switch_page("image similarity with deep learning")
+
+st.markdown("### 📈 Image Embeddings with Deep Learning")
+st.write(
+    "Explore a new dimension of Images representations with our Deep Learning-based image embeddings! 📈🔍"
+)
+go_to_page_7 = st.button(
+    "Go to page 7",
+)
+generate_empty_space(2)
+if go_to_page_7:
+    switch_page("image embeddings with deep learning")
+
+st.markdown("### 🎯 Zero-Shot Image Classification with CLIP")
+st.write(
+    "Classify Images with zero training using CLIP, a state-of-the-art language-image model! 🎯🤯"
+)
+go_to_page_8 = st.button(
+    "Go to page 8",
+)
+generate_empty_space(2)
+if go_to_page_8:
+    switch_page("zero-shot image classification with clip")
+
+st.markdown("### 😊 More About Me")
+st.write(
+    "Curious to learn more about the person behind these amazing projects? Check out my bio and get to know me better! 😊🧑💼"
+)
+go_to_page_9 = st.button(
+    "Go to page 9",
+)
+generate_empty_space(2)
+if go_to_page_9:
+    switch_page("more about me")
+
+st.markdown("### 📚 Glossary")
+st.write(
+    "Not sure what some of the terms used in this project mean? Check out our glossary to learn more! 📚🤓"
+)
+go_to_page_10 = st.button(
+    "Go to page 10",
+)
+generate_empty_space(2)
+if go_to_page_10:
+    switch_page("glossary")
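The homepage repeats the same heading, button, and `switch_page` pattern eleven times. One possible way to express that pattern as a loop is sketched below. This is not the committed code: `page_key` and `render_navigation` are hypothetical names, the `st` and `switch_page` objects are passed in as parameters so the sketch runs without Streamlit installed, and the per-page description text and the project's `generate_empty_space` helper are left out.

```python
# Three of the section headings from 01-🚀 Homepage.py, in page order.
HEADINGS = [
    "🦸 HxH Character Anime Classification with Prototypical Networks",
    "🔎 HxH Character Anime Detection with Prototypical Networks",
    "🎯 Zero-Shot Image Classification with CLIP",
]


def page_key(heading: str) -> str:
    """Drop the leading emoji token and lowercase the rest.

    This reproduces the strings passed to switch_page in the diff,
    e.g. "zero-shot image classification with clip".
    """
    return heading.split(" ", 1)[1].lower()


def render_navigation(st, switch_page, headings=HEADINGS) -> None:
    """Render one heading and one button per page; switch when clicked.

    Assumption: `st` offers markdown() and button() like the streamlit
    module, and `switch_page` accepts the lowercase page name.
    """
    for index, heading in enumerate(headings):
        st.markdown(f"### {heading}")
        if st.button(f"Go to page {index}"):
            switch_page(page_key(heading))
```

In the app itself this would be called as `render_navigation(st, switch_page)` after the imports shown in the diff. The explicit, repeated version in the commit is easier to tweak page by page; the loop trades that for less duplication.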

README.md

CHANGED

@@ -1,13 +1,77 @@
----
-title: Hunter X Hunter Anime Classification
-emoji: 🔥
-colorFrom:
-colorTo: green
-sdk: streamlit
-sdk_version: 1.19.0
-app_file:
-pinned: false
-license: mit
-
-
-
+---
+title: Hunter X Hunter Anime Classification
+emoji: 🔥
+colorFrom: white
+colorTo: green
+sdk: streamlit
+sdk_version: 1.19.0
+app_file: "01-🚀 Homepage.py"
+pinned: false
+license: mit
+python_version: 3.9.13
+---
+
+# Hunter X Hunter Anime Classification
+
+Welcome to the Hunter X Hunter Anime Classification application! This project focuses on classifying anime characters from the popular Hunter X Hunter anime series using various machine learning algorithms.
+
+## About Me
+
+👋 Hello everyone! My name is Hafidh Soekma Ardiansyah, and I'm a student at Surabaya State University, majoring in Management Information Vocational Programs.
+
+I am excited to share with you all about my final project for the semester. My project is about classifying anime characters from the Hunter X Hunter series using various machine learning algorithms. To accomplish this, I collected a dataset of images featuring the characters from the series and preprocessed the data to ensure efficient processing by the algorithms.
+
+## About the Project
+
+### HxH Character Anime Classification with Prototypical Networks
+
+Classify your favorite Hunter x Hunter characters with our cutting-edge Prototypical Networks! 🦸♂️🦸♀️
+
+### HxH Character Anime Detection with Prototypical Networks
+
+Detect the presence of your beloved Hunter x Hunter characters using Prototypical Networks! 🔎🕵️♂️🕵️♀️
+
+### Image Similarity with Prototypical Networks
+
+Discover how similar your images are to one another with our Prototypical Networks! 📊🤔
+
+### Image Embeddings with Prototypical Networks
+
+Unleash the power of image embeddings to represent images in a whole new way with our Prototypical Networks! 🌌🤯
+
+### HxH Character Anime Classification with Deep Learning
+
+Experience the next level of character classification with our Deep Learning models trained on Hunter x Hunter anime characters! 🤖📈
+
+### HxH Character Anime Detection with Deep Learning
+
+Detect your favorite Hunter x Hunter characters with our Deep Learning models! 📷🕵️♂️🕵️♀️
+
+### Image Similarity with Deep Learning
+
+Discover the similarities and differences between your images with our Deep Learning models! 🖼️🧐
+
+### Image Embeddings with Deep Learning
+
+Explore a new dimension of image representations with our Deep Learning-based image embeddings! 📈🔍
+
+### Zero-Shot Image Classification with CLIP
+
+Classify images with zero training using CLIP, a state-of-the-art language-image model! 🎯🤯
+
+### More About Me
+
+Curious to learn more about the person behind these amazing projects? Check out my bio and get to know me better! 😊🧑💼
+
+### Glossary
+
+Not sure what some of the terms used in this project mean? Check out our glossary to learn more! 📚🤓
+
+## How to Run the Application
+
+1. Clone the repository: `git clone https://huggingface.co/spaces/hafidhsoekma/Hunter-X-Hunter-Anime-Classification`
+2. Install the required dependencies: `pip install -r requirements.txt`
+3. Run the application: `streamlit run "01-🚀 Homepage.py"`
+4. Open your web browser and navigate to the provided URL to access the application.
+
+Feel free to reach out to me if you have any questions or feedback. Enjoy exploring the Hunter X Hunter Anime Classification application!
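The README front matter is what tells the Space which SDK, Python version, and entry file to use. As a small illustration of how those fields can be read back, here is a hedged Python sketch. It is a plain line splitter rather than a YAML parser, so it only handles flat `key: value` pairs like the ones in this README, and the function name `read_front_matter` is hypothetical.

```python
def read_front_matter(text: str) -> dict:
    """Return the flat key/value pairs between the leading '---' markers.

    Assumption: every field sits on one line as `key: value`; nested YAML
    is not supported by this simplified reader.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front matter block
        key, separator, value = line.partition(":")
        if separator:
            fields[key.strip()] = value.strip().strip('"')
    return fields


if __name__ == "__main__":
    sample = '---\nsdk: streamlit\napp_file: "01-🚀 Homepage.py"\n---\n# Title\n'
    print(read_front_matter(sample))
```

A check like this can confirm that `app_file` names the same script that step 3 of the run instructions passes to `streamlit run`.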

assets/example_images/gon/306e5d35-b301-4299-8022-0c89dc0b7690.png

ADDED

assets/example_images/gon/3509df87-a9cd-4500-a07a-0373cbe36715.png

ADDED

assets/example_images/gon/620c33e9-59fe-418d-8953-1444e3cfa599.png

ADDED

assets/example_images/gon/ab07531a-ab8f-445f-8e9d-d478dd67a73b.png

ADDED

assets/example_images/gon/df746603-8dd9-4397-92d8-bf49f8df20d2.png

ADDED

assets/example_images/hisoka/04af934d-ffb5-4cc9-83ad-88c585678e55.png

ADDED

assets/example_images/hisoka/13d7867a-28e0-45f0-b141-8b8624d0e1e5.png

ADDED

assets/example_images/hisoka/41954fdc-d740-49ec-a7ba-15cac7c22c11.png

ADDED

assets/example_images/hisoka/422e9625-c523-4532-aa5b-dd4e21b209fc.png

ADDED

assets/example_images/hisoka/80f95e87-2f7a-4808-9d01-4383feab90e2.png

ADDED

assets/example_images/killua/0d2a44c4-c11e-474e-ac8b-7c0e84c7f879.png

ADDED

assets/example_images/killua/2817e633-3239-41f1-a2bf-1be874bddf5e.png

ADDED

assets/example_images/killua/4501242f-9bda-49b6-a3c5-23f97c8353c3.png

ADDED

assets/example_images/killua/8aca13ab-a5b2-4192-ae4b-3b73e8c663f3.png

ADDED

assets/example_images/killua/8b7e1854-8ca7-4ef1-8887-2c64b0309712.png

ADDED

assets/example_images/kurapika/02265b41-9833-41eb-ad60-e043753f74b9.png

ADDED

assets/example_images/kurapika/0650e968-d61b-4c4a-98bd-7ecdd2b991de.png

ADDED

assets/example_images/kurapika/2728dfb5-788b-4be7-ad1b-e6d23297ecf3.png

ADDED

assets/example_images/kurapika/3613a920-3efe-49d8-a39a-227bddefa86a.png

ADDED

assets/example_images/kurapika/405b19b0-d982-44aa-b4c8-18e3a5e373b3.png

ADDED

assets/example_images/leorio/00beabbf-063e-42b3-85e2-ce51c586195f.png

ADDED

assets/example_images/leorio/613e8ffb-7534-481d-b780-6d23ecd31de4.png

ADDED

assets/example_images/leorio/af2a59f2-fcf2-4621-bb4f-6540687b390a.png

ADDED

assets/example_images/leorio/b134831a-5ee0-40c8-9a25-1a11329741d3.png

ADDED

assets/example_images/leorio/ccc511a0-8a98-481c-97a1-c564a874bb60.png

ADDED

assets/example_images/others/Presiden_Sukarno.jpg

ADDED

assets/example_images/others/Tipe-Nen-yang-ada-di-Anime-Hunter-x-Hunter.jpg

ADDED

assets/example_images/others/d29492bbe7604505a6f1b5394f62b393.png

ADDED

assets/example_images/others/f575c3a5f23146b59bac51267db0ddb3.png

ADDED

assets/example_images/others/fa4548a8f57041edb7fa19f8bf302326.png

ADDED

assets/example_images/others/fb7c8048d54f48a29ab6aaf7f8383712.png

ADDED

assets/example_images/others/fe96e8fce17b474195f8add2632b758e.png

ADDED

assets/images/author.jpg

ADDED

models/anime_face_detection_model/__init__.py

ADDED

@@ -0,0 +1 @@
+from .ssd_model import SingleShotDetectorModel

models/anime_face_detection_model/ssd_model.py

ADDED

|

@@ -0,0 +1,454 @@


+import os
+import sys
+
+sys.path.append(os.path.join(os.path.dirname(__file__), "..", ".."))
+
+import time
+from itertools import product as product
+from math import ceil
+
+import cv2
+import numpy as np
+import torch
+import torch.nn as nn
+import torch.nn.functional as F
+
+

+class BasicConv2d(nn.Module):
+    def __init__(self, in_channels, out_channels, **kwargs):
+        super(BasicConv2d, self).__init__()
+        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
+        self.bn = nn.BatchNorm2d(out_channels, eps=1e-5)
+
+    def forward(self, x):
+        x = self.conv(x)
+        x = self.bn(x)
+        return F.relu(x, inplace=True)

+
+
+class Inception(nn.Module):
+    def __init__(self):
+        super(Inception, self).__init__()
+        self.branch1x1 = BasicConv2d(128, 32, kernel_size=1, padding=0)
+        self.branch1x1_2 = BasicConv2d(128, 32, kernel_size=1, padding=0)
+        self.branch3x3_reduce = BasicConv2d(128, 24, kernel_size=1, padding=0)
+        self.branch3x3 = BasicConv2d(24, 32, kernel_size=3, padding=1)
+        self.branch3x3_reduce_2 = BasicConv2d(128, 24, kernel_size=1, padding=0)
+        self.branch3x3_2 = BasicConv2d(24, 32, kernel_size=3, padding=1)
+        self.branch3x3_3 = BasicConv2d(32, 32, kernel_size=3, padding=1)
+
+    def forward(self, x):
+        branch1x1 = self.branch1x1(x)
+
+        branch1x1_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
+        branch1x1_2 = self.branch1x1_2(branch1x1_pool)
+
+        branch3x3_reduce = self.branch3x3_reduce(x)
+        branch3x3 = self.branch3x3(branch3x3_reduce)
+
+        branch3x3_reduce_2 = self.branch3x3_reduce_2(x)
+        branch3x3_2 = self.branch3x3_2(branch3x3_reduce_2)
+        branch3x3_3 = self.branch3x3_3(branch3x3_2)
+
+        outputs = (branch1x1, branch1x1_2, branch3x3, branch3x3_3)
+        return torch.cat(outputs, 1)

+
+
+class CRelu(nn.Module):
+    def __init__(self, in_channels, out_channels, **kwargs):
+        super(CRelu, self).__init__()
+        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
+        self.bn = nn.BatchNorm2d(out_channels, eps=1e-5)
+
+    def forward(self, x):
+        x = self.conv(x)
+        x = self.bn(x)
+        x = torch.cat((x, -x), 1)
+        x = F.relu(x, inplace=True)
+        return x
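A minimal sketch of what `CRelu.forward` does to the channel dimension, written with plain Python lists so it runs without PyTorch. The helper name `crelu_channels` is hypothetical and not part of the commit; it only illustrates why a layer built as `CRelu(3, 24, ...)` produces 48 channels, which matches the 48 input channels that `conv2` expects in `FaceBoxes`.

```python
def crelu_channels(values):
    """Concatenate the values with their negation, then apply ReLU element-wise."""
    # Mirrors torch.cat((x, -x), 1): the channel count doubles here.
    doubled = list(values) + [-v for v in values]
    # Mirrors F.relu: negative entries are clamped to zero.
    return [max(0.0, v) for v in doubled]


# Illustrative input only: three "channels" become six.
out = crelu_channels([1.5, -2.0, 0.0])
print(out)
```

The positive part of each value survives in the first half and the magnitude of each negative value survives in the second half, so no sign information is discarded by the ReLU.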

+
+
+class FaceBoxes(nn.Module):
+    def __init__(self, phase, size, num_classes):
+        super(FaceBoxes, self).__init__()
+        self.phase = phase
+        self.num_classes = num_classes
+        self.size = size
+
+        self.conv1 = CRelu(3, 24, kernel_size=7, stride=4, padding=3)
+        self.conv2 = CRelu(48, 64, kernel_size=5, stride=2, padding=2)
+
+        self.inception1 = Inception()
+        self.inception2 = Inception()
+        self.inception3 = Inception()
+
+        self.conv3_1 = BasicConv2d(128, 128, kernel_size=1, stride=1, padding=0)
+        self.conv3_2 = BasicConv2d(128, 256, kernel_size=3, stride=2, padding=1)
+
+        self.conv4_1 = BasicConv2d(256, 128, kernel_size=1, stride=1, padding=0)
+        self.conv4_2 = BasicConv2d(128, 256, kernel_size=3, stride=2, padding=1)
+
+        self.loc, self.conf = self.multibox(self.num_classes)
+
+        if self.phase == "test":
+            self.softmax = nn.Softmax(dim=-1)
+
+        if self.phase == "train":
+            for m in self.modules():
+                if isinstance(m, nn.Conv2d):
+                    if m.bias is not None:
+                        nn.init.xavier_normal_(m.weight.data)
+                        m.bias.data.fill_(0.02)
+                    else:
+                        m.weight.data.normal_(0, 0.01)
+                elif isinstance(m, nn.BatchNorm2d):
+                    m.weight.data.fill_(1)
+                    m.bias.data.zero_()
+
+    def multibox(self, num_classes):
+        loc_layers = []
+        conf_layers = []
+        loc_layers += [nn.Conv2d(128, 21 * 4, kernel_size=3, padding=1)]
+        conf_layers += [nn.Conv2d(128, 21 * num_classes, kernel_size=3, padding=1)]
+        loc_layers += [nn.Conv2d(256, 1 * 4, kernel_size=3, padding=1)]
+        conf_layers += [nn.Conv2d(256, 1 * num_classes, kernel_size=3, padding=1)]
+        loc_layers += [nn.Conv2d(256, 1 * 4, kernel_size=3, padding=1)]
+        conf_layers += [nn.Conv2d(256, 1 * num_classes, kernel_size=3, padding=1)]
+        return nn.Sequential(*loc_layers), nn.Sequential(*conf_layers)
+
+    def forward(self, x):
+        detection_sources = list()
+        loc = list()
+        conf = list()
+
+        x = self.conv1(x)
+        x = F.max_pool2d(x, kernel_size=3, stride=2, padding=1)
+        x = self.conv2(x)
+        x = F.max_pool2d(x, kernel_size=3, stride=2, padding=1)
+        x = self.inception1(x)
+        x = self.inception2(x)
+        x = self.inception3(x)
+        detection_sources.append(x)
+
+        x = self.conv3_1(x)
+        x = self.conv3_2(x)
+        detection_sources.append(x)
+
+        x = self.conv4_1(x)
+        x = self.conv4_2(x)
+        detection_sources.append(x)
+
+        for x, l, c in zip(detection_sources, self.loc, self.conf):
+            loc.append(l(x).permute(0, 2, 3, 1).contiguous())
+            conf.append(c(x).permute(0, 2, 3, 1).contiguous())
+
+        loc = torch.cat([o.view(o.size(0), -1) for o in loc], 1)
+        conf = torch.cat([o.view(o.size(0), -1) for o in conf], 1)
+
+        if self.phase == "test":
+            output = (
+                loc.view(loc.size(0), -1, 4),
+                self.softmax(conf.view(-1, self.num_classes)),
+            )
+        else:
+            output = (
+                loc.view(loc.size(0), -1, 4),
+                conf.view(conf.size(0), -1, self.num_classes),
+            )
+
+        return output

+
+
+class PriorBox(object):
+    def __init__(self, cfg, image_size=None, phase="train"):
+        super(PriorBox, self).__init__()
+        # self.aspect_ratios = cfg['aspect_ratios']
+        self.min_sizes = cfg["min_sizes"]
+        self.steps = cfg["steps"]
+        self.clip = cfg["clip"]
+        self.image_size = image_size
+        self.feature_maps = [
+            (ceil(self.image_size[0] / step), ceil(self.image_size[1] / step))
+            for step in self.steps
+        ]
+        self.feature_maps = tuple(self.feature_maps)
+
+    def forward(self):
+        anchors = []
+        for k, f in enumerate(self.feature_maps):
+            min_sizes = self.min_sizes[k]
+            for i, j in product(range(f[0]), range(f[1])):
+                for min_size in min_sizes:
+                    s_kx = min_size / self.image_size[1]
+                    s_ky = min_size / self.image_size[0]
+                    if min_size == 32:
+                        dense_cx = [
+                            x * self.steps[k] / self.image_size[1]
+                            for x in [j + 0, j + 0.25, j + 0.5, j + 0.75]
+                        ]
+                        dense_cy = [
+                            y * self.steps[k] / self.image_size[0]
+                            for y in [i + 0, i + 0.25, i + 0.5, i + 0.75]
+                        ]
+                        for cy, cx in product(dense_cy, dense_cx):
+                            anchors += [cx, cy, s_kx, s_ky]
+                    elif min_size == 64:
+                        dense_cx = [
+                            x * self.steps[k] / self.image_size[1]
+                            for x in [j + 0, j + 0.5]
+                        ]
+                        dense_cy = [
+                            y * self.steps[k] / self.image_size[0]
+                            for y in [i + 0, i + 0.5]
+                        ]
+                        for cy, cx in product(dense_cy, dense_cx):
+                            anchors += [cx, cy, s_kx, s_ky]
+                    else:
+                        cx = (j + 0.5) * self.steps[k] / self.image_size[1]
+                        cy = (i + 0.5) * self.steps[k] / self.image_size[0]
+                        anchors += [cx, cy, s_kx, s_ky]
+        # back to torch land
+        output = torch.Tensor(anchors).view(-1, 4)
+        if self.clip:
+            output.clamp_(max=1, min=0)
+        return output
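The densification branches in `PriorBox.forward` appear to account for the `21` used by the first pair of layers in `multibox`. The sketch below counts anchors per feature-map cell by following those same branches. The helper `anchors_per_cell` and the size list `[32, 64, 128]` are assumptions for illustration; the real values come from `cfg["min_sizes"]`, which is not shown in this part of the diff.

```python
def anchors_per_cell(min_sizes):
    """Count anchors emitted for one cell, following the branches in PriorBox.forward."""
    total = 0
    for min_size in min_sizes:
        if min_size == 32:
            total += 4 * 4  # four x offsets times four y offsets
        elif min_size == 64:
            total += 2 * 2  # two x offsets times two y offsets
        else:
            total += 1      # a single anchor at the cell centre
    return total


# Hypothetical first-level sizes, chosen so that each branch is exercised once.
first_level = anchors_per_cell([32, 64, 128])
print(first_level)
```

Under that assumption the first detection source carries 16 + 4 + 1 anchors per cell, while a level with a single larger size carries one, which is consistent with the `1 * 4` and `1 * num_classes` output channels of the remaining layers.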

+
+
+def mymax(a, b):
+    if a >= b:
+        return a
+    else:
+        return b
+
+
+def mymin(a, b):
+    if a >= b:
+        return b
+    else:
+        return a

+
+
+def cpu_nms(dets, thresh):
+    x1 = dets[:, 0]
+    y1 = dets[:, 1]
+    x2 = dets[:, 2]
+    y2 = dets[:, 3]
+    scores = dets[:, 4]
+    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
+    order = scores.argsort()[::-1]
+    ndets = dets.shape[0]
+    suppressed = np.zeros((ndets), dtype=int)
+    keep = []
+    for _i in range(ndets):
+        i = order[_i]
+        if suppressed[i] == 1:
+            continue
+        keep.append(i)
+        ix1 = x1[i]
+        iy1 = y1[i]
+        ix2 = x2[i]
+        iy2 = y2[i]
+        iarea = areas[i]
+        for _j in range(_i + 1, ndets):
+            j = order[_j]
+            if suppressed[j] == 1:
+                continue
+            xx1 = mymax(ix1, x1[j])
+            yy1 = mymax(iy1, y1[j])
+            xx2 = mymin(ix2, x2[j])
+            yy2 = mymin(iy2, y2[j])
+            w = mymax(0.0, xx2 - xx1 + 1)
+            h = mymax(0.0, yy2 - yy1 + 1)
+            inter = w * h
+            ovr = inter / (iarea + areas[j] - inter)
+            if ovr >= thresh:
+                suppressed[j] = 1
+    return tuple(keep)
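A compact sketch of the greedy suppression logic in `cpu_nms`, written in plain Python so it can be run without NumPy. The names `iou_inclusive` and `greedy_nms` and the sample boxes are hypothetical and not part of the commit. The sketch keeps the same `+ 1` inclusive-pixel convention for widths, heights and areas, and suppresses a box when its overlap with an already kept box reaches the threshold. Ordering among boxes with equal scores may differ from the `argsort`-based original.

```python
def iou_inclusive(a, b):
    """IoU of two (x1, y1, x2, y2, ...) boxes using the +1 pixel convention of cpu_nms."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]) + 1)
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]) + 1)
    inter = w * h
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def greedy_nms(dets, thresh):
    """dets: list of (x1, y1, x2, y2, score). Returns kept indices, highest score first."""
    order = sorted(range(len(dets)), key=lambda i: dets[i][4], reverse=True)
    keep = []
    for i in order:
        # A box survives only if it overlaps every kept box by less than thresh.
        if all(iou_inclusive(dets[i], dets[k]) < thresh for k in keep):
            keep.append(i)
    return keep


# Illustrative detections: the second box heavily overlaps the first.
sample = [(0, 0, 9, 9, 0.9), (1, 1, 10, 10, 0.8), (50, 50, 59, 59, 0.7)]
kept = greedy_nms(sample, 0.5)
print(kept)
```

In this sample the first two boxes share an 81-pixel intersection out of a 119-pixel union, so the lower-scoring one is dropped, while the distant third box is kept.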

+
+
+def nms(dets, thresh, force_cpu=False):
+    """Dispatch to either CPU or GPU NMS implementations."""
+
+    if dets.shape[0] == 0:
+        return ()
+    if force_cpu:
+        # return cpu_soft_nms(dets, thresh, method = 0)
+        return cpu_nms(dets, thresh)
+    return cpu_nms(dets, thresh)

+
+
+# Adapted from https://github.com/Hakuyume/chainer-ssd
+def decode(loc, priors, variances):
+    """Decode locations from predictions using priors to undo
+    the encoding we did for offset regression at train time.
+    Args:
+        loc (tensor): location predictions for loc layers,
+            Shape: [num_priors,4]
+        priors (tensor): Prior boxes in center-offset form.
+            Shape: [num_priors,4].
+        variances: (list[float]) Variances of priorboxes
+    Return:
+        decoded bounding box predictions
+    """
+
+    boxes = torch.cat(
+        (
+            priors[:, :2] + loc[:, :2] * variances[0] * priors[:, 2:],

| 297 |