This view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +7 -9

- datid3d_gradio_app.py +357 -0

- datid3d_test.py +251 -0

- datid3d_train.py +105 -0

- eg3d/LICENSE.txt +99 -0

- eg3d/README.md +216 -0

- eg3d/calc_metrics.py +190 -0

- eg3d/camera_utils.py +149 -0

- eg3d/dataset_tool.py +458 -0

- eg3d/datid3d_data_gen.py +204 -0

- eg3d/dnnlib/__init__.py +11 -0

- eg3d/dnnlib/util.py +493 -0

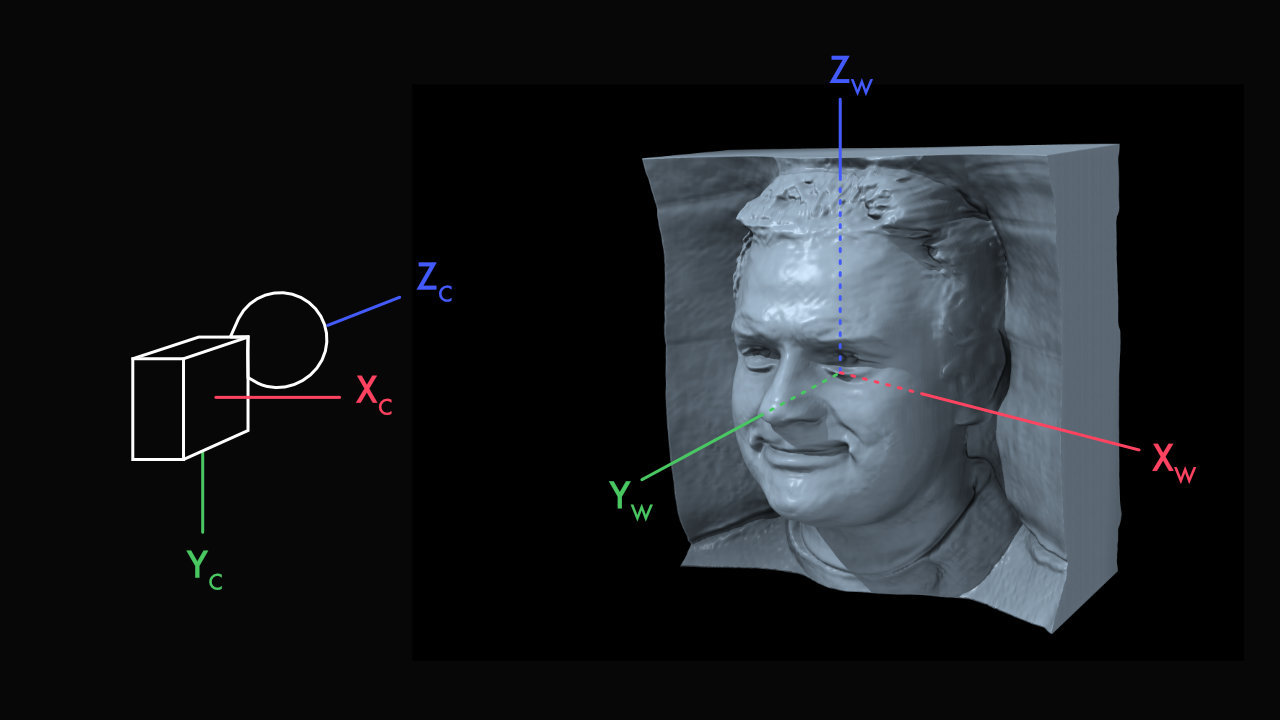

- eg3d/docs/camera_conventions.md +2 -0

- eg3d/docs/camera_coordinate_conventions.jpg +0 -0

- eg3d/docs/models.md +71 -0

- eg3d/docs/teaser.jpeg +0 -0

- eg3d/docs/training_guide.md +165 -0

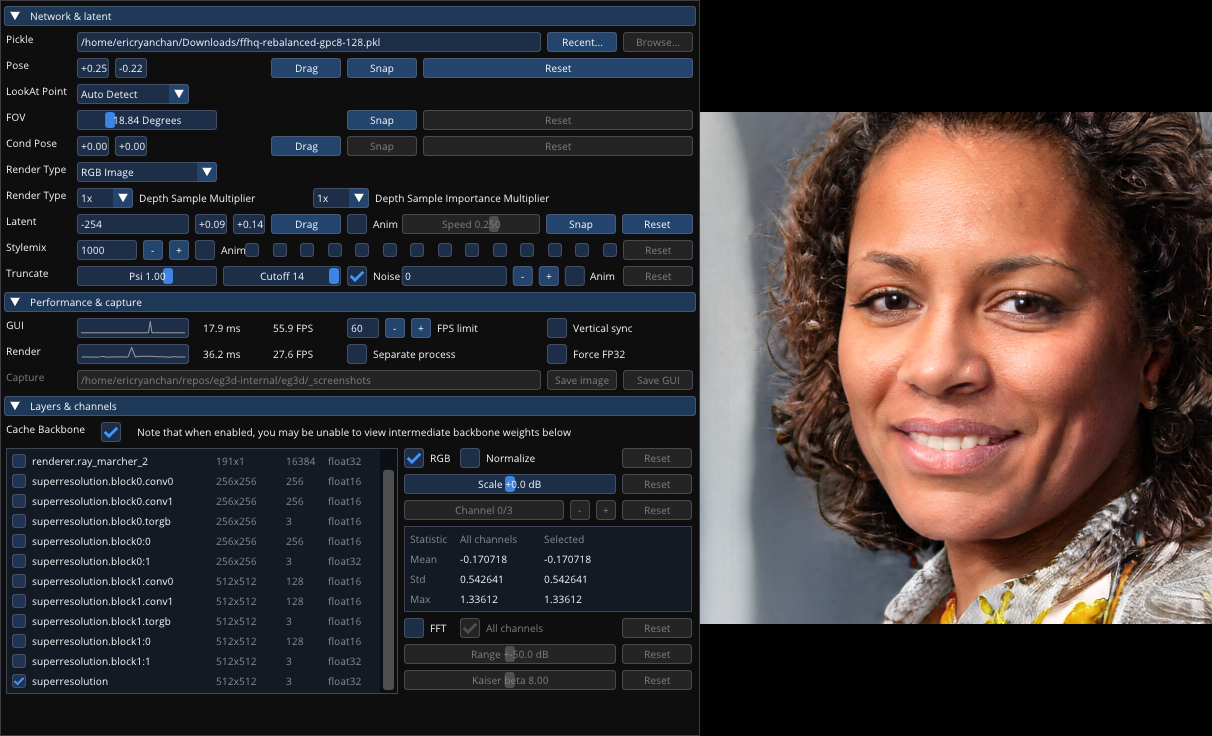

- eg3d/docs/visualizer.png +0 -0

- eg3d/docs/visualizer_guide.md +66 -0

- eg3d/gen_samples.py +280 -0

- eg3d/gen_videos.py +371 -0

- eg3d/gui_utils/__init__.py +11 -0

- eg3d/gui_utils/gl_utils.py +376 -0

- eg3d/gui_utils/glfw_window.py +231 -0

- eg3d/gui_utils/imgui_utils.py +171 -0

- eg3d/gui_utils/imgui_window.py +105 -0

- eg3d/gui_utils/text_utils.py +125 -0

- eg3d/legacy.py +325 -0

- eg3d/metrics/__init__.py +11 -0

- eg3d/metrics/equivariance.py +269 -0

- eg3d/metrics/frechet_inception_distance.py +43 -0

- eg3d/metrics/inception_score.py +40 -0

- eg3d/metrics/kernel_inception_distance.py +48 -0

- eg3d/metrics/metric_main.py +155 -0

- eg3d/metrics/metric_utils.py +281 -0

- eg3d/metrics/perceptual_path_length.py +127 -0

- eg3d/metrics/precision_recall.py +64 -0

- eg3d/projector/w_plus_projector.py +182 -0

- eg3d/projector/w_projector.py +177 -0

- eg3d/run_inversion.py +106 -0

- eg3d/shape_utils.py +124 -0

- eg3d/torch_utils/__init__.py +11 -0

- eg3d/torch_utils/custom_ops.py +159 -0

- eg3d/torch_utils/misc.py +268 -0

- eg3d/torch_utils/ops/__init__.py +11 -0

- eg3d/torch_utils/ops/bias_act.cpp +103 -0

- eg3d/torch_utils/ops/bias_act.cu +177 -0

- eg3d/torch_utils/ops/bias_act.h +42 -0

- eg3d/torch_utils/ops/bias_act.py +211 -0

- eg3d/torch_utils/ops/conv2d_gradfix.py +199 -0

README.md

CHANGED

|

@@ -1,13 +1,11 @@

|

|

| 1 |

---

|

| 2 |

-

title: DATID

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 3.

|

| 8 |

-

app_file:

|

| 9 |

pinned: false

|

| 10 |

-

license:

|

| 11 |

---

|

| 12 |

-

|

| 13 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

+

title: DATID-3D

|

| 3 |

+

emoji: 🛋

|

| 4 |

+

colorFrom: gray

|

| 5 |

+

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

+

sdk_version: 3.28.3

|

| 8 |

+

app_file: datid3d_gradio_app.py

|

| 9 |

pinned: false

|

| 10 |

+

license: mit

|

| 11 |

---

|

|

|

|

|

|

datid3d_gradio_app.py

ADDED

|

@@ -0,0 +1,357 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import gradio as gr

|

| 3 |

+

import os

|

| 4 |

+

import shutil

|

| 5 |

+

from glob import glob

|

| 6 |

+

from PIL import Image

|

| 7 |

+

import numpy as np

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

from torchvision.utils import make_grid, save_image

|

| 10 |

+

from torchvision.io import read_image

|

| 11 |

+

import torchvision.transforms.functional as F

|

| 12 |

+

from functools import partial

|

| 13 |

+

from datetime import datetime

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

plt.rcParams["savefig.bbox"] = 'tight'

|

| 18 |

+

|

| 19 |

+

def show(imgs):

|

| 20 |

+

if not isinstance(imgs, list):

|

| 21 |

+

imgs = [imgs]

|

| 22 |

+

fig, axs = plt.subplots(ncols=len(imgs), squeeze=False)

|

| 23 |

+

for i, img in enumerate(imgs):

|

| 24 |

+

img = F.to_pil_image(img.detach())

|

| 25 |

+

axs[0, i].imshow(np.asarray(img))

|

| 26 |

+

axs[0, i].set(xticklabels=[], yticklabels=[], xticks=[], yticks=[])

|

| 27 |

+

|

| 28 |

+

class Intermediate:

|

| 29 |

+

def __init__(self):

|

| 30 |

+

self.input_img = None

|

| 31 |

+

self.input_img_time = 0

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

model_ckpts = {"elf": "ffhq-elf.pkl",

|

| 35 |

+

"greek_statue": "ffhq-greek_statue.pkl",

|

| 36 |

+

"hobbit": "ffhq-hobbit.pkl",

|

| 37 |

+

"lego": "ffhq-lego.pkl",

|

| 38 |

+

"masquerade": "ffhq-masquerade.pkl",

|

| 39 |

+

"neanderthal": "ffhq-neanderthal.pkl",

|

| 40 |

+

"orc": "ffhq-orc.pkl",

|

| 41 |

+

"pixar": "ffhq-pixar.pkl",

|

| 42 |

+

"skeleton": "ffhq-skeleton.pkl",

|

| 43 |

+

"stone_golem": "ffhq-stone_golem.pkl",

|

| 44 |

+

"super_mario": "ffhq-super_mario.pkl",

|

| 45 |

+

"tekken": "ffhq-tekken.pkl",

|

| 46 |

+

"yoda": "ffhq-yoda.pkl",

|

| 47 |

+

"zombie": "ffhq-zombie.pkl",

|

| 48 |

+

"cat_in_Zootopia": "cat-cat_in_Zootopia.pkl",

|

| 49 |

+

"fox_in_Zootopia": "cat-fox_in_Zootopia.pkl",

|

| 50 |

+

"golden_aluminum_animal": "cat-golden_aluminum_animal.pkl",

|

| 51 |

+

}

|

| 52 |

+

|

| 53 |

+

manip_model_ckpts = {"super_mario": "ffhq-super_mario.pkl",

|

| 54 |

+

"lego": "ffhq-lego.pkl",

|

| 55 |

+

"neanderthal": "ffhq-neanderthal.pkl",

|

| 56 |

+

"orc": "ffhq-orc.pkl",

|

| 57 |

+

"pixar": "ffhq-pixar.pkl",

|

| 58 |

+

"skeleton": "ffhq-skeleton.pkl",

|

| 59 |

+

"stone_golem": "ffhq-stone_golem.pkl",

|

| 60 |

+

"tekken": "ffhq-tekken.pkl",

|

| 61 |

+

"greek_statue": "ffhq-greek_statue.pkl",

|

| 62 |

+

"yoda": "ffhq-yoda.pkl",

|

| 63 |

+

"zombie": "ffhq-zombie.pkl",

|

| 64 |

+

"elf": "ffhq-elf.pkl",

|

| 65 |

+

}

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

def TextGuidedImageTo3D(intermediate, img, model_name, num_inversion_steps, truncation):

|

| 69 |

+

if img != intermediate.input_img:

|

| 70 |

+

if os.path.exists('input_imgs_gradio'):

|

| 71 |

+

shutil.rmtree('input_imgs_gradio')

|

| 72 |

+

os.makedirs('input_imgs_gradio', exist_ok=True)

|

| 73 |

+

img.save('input_imgs_gradio/input.png')

|

| 74 |

+

intermediate.input_img = img

|

| 75 |

+

now = datetime.now()

|

| 76 |

+

intermediate.input_img_time = now.strftime('%Y-%m-%d_%H:%M:%S')

|

| 77 |

+

|

| 78 |

+

all_model_names = manip_model_ckpts.keys()

|

| 79 |

+

generator_type = 'ffhq'

|

| 80 |

+

|

| 81 |

+

if model_name == 'all':

|

| 82 |

+

_no_video_models = []

|

| 83 |

+

for _model_name in all_model_names:

|

| 84 |

+

if not os.path.exists(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/finetuned___{model_ckpts[_model_name]}__input_inv.mp4'):

|

| 85 |

+

print()

|

| 86 |

+

_no_video_models.append(_model_name)

|

| 87 |

+

|

| 88 |

+

model_names_command = ''

|

| 89 |

+

for _model_name in _no_video_models:

|

| 90 |

+

if not os.path.exists(f'finetuned/{model_ckpts[_model_name]}'):

|

| 91 |

+

command = f"""wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/{model_ckpts[_model_name]} -O finetuned/{model_ckpts[_model_name]}

|

| 92 |

+

"""

|

| 93 |

+

os.system(command)

|

| 94 |

+

|

| 95 |

+

model_names_command += f"finetuned/{model_ckpts[_model_name]} "

|

| 96 |

+

|

| 97 |

+

w_pths = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/3_inversion_result/*.pt'))

|

| 98 |

+

if len(w_pths) == 0:

|

| 99 |

+

mode = 'manip'

|

| 100 |

+

else:

|

| 101 |

+

mode = 'manip_from_inv'

|

| 102 |

+

|

| 103 |

+

if len(_no_video_models) > 0:

|

| 104 |

+

command = f"""python datid3d_test.py --mode {mode} \

|

| 105 |

+

--indir='input_imgs_gradio' \

|

| 106 |

+

--generator_type={generator_type} \

|

| 107 |

+

--outdir='test_runs' \

|

| 108 |

+

--trunc={truncation} \

|

| 109 |

+

--network {model_names_command} \

|

| 110 |

+

--num_inv_steps={num_inversion_steps} \

|

| 111 |

+

--down_src_eg3d_from_nvidia=False \

|

| 112 |

+

--name_tag='_gradio_{intermediate.input_img_time}' \

|

| 113 |

+

--shape=False \

|

| 114 |

+

--w_frames 60

|

| 115 |

+

"""

|

| 116 |

+

print(command)

|

| 117 |

+

os.system(command)

|

| 118 |

+

|

| 119 |

+

aligned_img_pth = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/2_pose_result/*.png'))[0]

|

| 120 |

+

aligned_img = Image.open(aligned_img_pth)

|

| 121 |

+

|

| 122 |

+

result_imgs = []

|

| 123 |

+

for _model_name in all_model_names:

|

| 124 |

+

img_pth = f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/finetuned___{model_ckpts[_model_name]}__input_inv.png'

|

| 125 |

+

result_imgs.append(read_image(img_pth))

|

| 126 |

+

|

| 127 |

+

result_grid_pt = make_grid(result_imgs, nrow=1)

|

| 128 |

+

result_img = F.to_pil_image(result_grid_pt)

|

| 129 |

+

else:

|

| 130 |

+

if not os.path.exists(f'finetuned/{model_ckpts[model_name]}'):

|

| 131 |

+

command = f"""wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/{model_ckpts[model_name]} -O finetuned/{model_ckpts[model_name]}

|

| 132 |

+

"""

|

| 133 |

+

os.system(command)

|

| 134 |

+

|

| 135 |

+

if not os.path.exists(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/finetuned___{model_ckpts[model_name]}__input_inv.mp4'):

|

| 136 |

+

w_pths = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/3_inversion_result/*.pt'))

|

| 137 |

+

if len(w_pths) == 0:

|

| 138 |

+

mode = 'manip'

|

| 139 |

+

else:

|

| 140 |

+

mode = 'manip_from_inv'

|

| 141 |

+

|

| 142 |

+

command = f"""python datid3d_test.py --mode {mode} \

|

| 143 |

+

--indir='input_imgs_gradio' \

|

| 144 |

+

--generator_type={generator_type} \

|

| 145 |

+

--outdir='test_runs' \

|

| 146 |

+

--trunc={truncation} \

|

| 147 |

+

--network finetuned/{model_ckpts[model_name]} \

|

| 148 |

+

--num_inv_steps={num_inversion_steps} \

|

| 149 |

+

--down_src_eg3d_from_nvidia=0 \

|

| 150 |

+

--name_tag='_gradio_{intermediate.input_img_time}' \

|

| 151 |

+

--shape=False

|

| 152 |

+

--w_frames 60"""

|

| 153 |

+

print(command)

|

| 154 |

+

os.system(command)

|

| 155 |

+

|

| 156 |

+

aligned_img_pth = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/2_pose_result/*.png'))[0]

|

| 157 |

+

aligned_img = Image.open(aligned_img_pth)

|

| 158 |

+

|

| 159 |

+

result_img_pth = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/*{model_ckpts[model_name]}*.png'))[0]

|

| 160 |

+

result_img = Image.open(result_img_pth)

|

| 161 |

+

|

| 162 |

+

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

if model_name=='all':

|

| 166 |

+

result_video_pth = f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/finetuned___ffhq-all__input_inv.mp4'

|

| 167 |

+

if os.path.exists(result_video_pth):

|

| 168 |

+

os.remove(result_video_pth)

|

| 169 |

+

command = 'ffmpeg '

|

| 170 |

+

for _model_name in all_model_names:

|

| 171 |

+

command += f'-i test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/finetuned___ffhq-{_model_name}.pkl__input_inv.mp4 '

|

| 172 |

+

command += '-filter_complex "[0:v]scale=2*iw:-1[v0];[1:v]scale=2*iw:-1[v1];[2:v]scale=2*iw:-1[v2];[3:v]scale=2*iw:-1[v3];[4:v]scale=2*iw:-1[v4];[5:v]scale=2*iw:-1[v5];[6:v]scale=2*iw:-1[v6];[7:v]scale=2*iw:-1[v7];[8:v]scale=2*iw:-1[v8];[9:v]scale=2*iw:-1[v9];[10:v]scale=2*iw:-1[v10];[11:v]scale=2*iw:-1[v11];[v0][v1][v2][v3][v4][v5][v6][v7][v8][v9][v10][v11]xstack=inputs=12:layout=0_0|w0_0|w0+w1_0|w0+w1+w2_0|0_h0|w4_h0|w4+w5_h0|w4+w5+w6_h0|0_h0+h4|w8_h0+h4|w8+w9_h0+h4|w8+w9+w10_h0+h4" '

|

| 173 |

+

command += f" -vcodec libx264 {result_video_pth}"

|

| 174 |

+

print()

|

| 175 |

+

print(command)

|

| 176 |

+

os.system(command)

|

| 177 |

+

|

| 178 |

+

else:

|

| 179 |

+

result_video_pth = sorted(glob(f'test_runs/manip_3D_recon_gradio_{intermediate.input_img_time}/4_manip_result/*{model_ckpts[model_name]}*.mp4'))[0]

|

| 180 |

+

|

| 181 |

+

return aligned_img, result_img, result_video_pth

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

def SampleImage(model_name, num_samples, truncation, seed):

|

| 185 |

+

seed_list = np.random.RandomState(seed).choice(np.arange(10000), num_samples).tolist()

|

| 186 |

+

seeds = ''

|

| 187 |

+

for seed in seed_list:

|

| 188 |

+

seeds += f'{seed},'

|

| 189 |

+

seeds = seeds[:-1]

|

| 190 |

+

|

| 191 |

+

if model_name in ["fox_in_Zootopia", "cat_in_Zootopia", "golden_aluminum_animal"]:

|

| 192 |

+

generator_type = 'cat'

|

| 193 |

+

else:

|

| 194 |

+

generator_type = 'ffhq'

|

| 195 |

+

|

| 196 |

+

if not os.path.exists(f'finetuned/{model_ckpts[model_name]}'):

|

| 197 |

+

command = f"""wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/{model_ckpts[model_name]} -O finetuned/{model_ckpts[model_name]}

|

| 198 |

+

"""

|

| 199 |

+

os.system(command)

|

| 200 |

+

|

| 201 |

+

command = f"""python datid3d_test.py --mode image \

|

| 202 |

+

--generator_type={generator_type} \

|

| 203 |

+

--outdir='test_runs' \

|

| 204 |

+

--seeds={seeds} \

|

| 205 |

+

--trunc={truncation} \

|

| 206 |

+

--network=finetuned/{model_ckpts[model_name]} \

|

| 207 |

+

--shape=False"""

|

| 208 |

+

print(command)

|

| 209 |

+

os.system(command)

|

| 210 |

+

|

| 211 |

+

result_img_pths = sorted(glob(f'test_runs/image/*{model_ckpts[model_name]}*.png'))

|

| 212 |

+

result_imgs = []

|

| 213 |

+

for img_pth in result_img_pths:

|

| 214 |

+

result_imgs.append(read_image(img_pth))

|

| 215 |

+

|

| 216 |

+

result_grid_pt = make_grid(result_imgs, nrow=1)

|

| 217 |

+

result_grid_pil = F.to_pil_image(result_grid_pt)

|

| 218 |

+

return result_grid_pil

|

| 219 |

+

|

| 220 |

+

|

| 221 |

+

|

| 222 |

+

|

| 223 |

+

def SampleVideo(model_name, grid_height, truncation, seed):

|

| 224 |

+

seed_list = np.random.RandomState(seed).choice(np.arange(10000), grid_height**2).tolist()

|

| 225 |

+

seeds = ''

|

| 226 |

+

for seed in seed_list:

|

| 227 |

+

seeds += f'{seed},'

|

| 228 |

+

seeds = seeds[:-1]

|

| 229 |

+

|

| 230 |

+

if model_name in ["fox_in_Zootopia", "cat_in_Zootopia", "golden_aluminum_animal"]:

|

| 231 |

+

generator_type = 'cat'

|

| 232 |

+

else:

|

| 233 |

+

generator_type = 'ffhq'

|

| 234 |

+

|

| 235 |

+

if not os.path.exists(f'finetuned/{model_ckpts[model_name]}'):

|

| 236 |

+

command = f"""wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/{model_ckpts[model_name]} -O finetuned/{model_ckpts[model_name]}

|

| 237 |

+

"""

|

| 238 |

+

os.system(command)

|

| 239 |

+

|

| 240 |

+

command = f"""python datid3d_test.py --mode video \

|

| 241 |

+

--generator_type={generator_type} \

|

| 242 |

+

--outdir='test_runs' \

|

| 243 |

+

--seeds={seeds} \

|

| 244 |

+

--trunc={truncation} \

|

| 245 |

+

--grid={grid_height}x{grid_height} \

|

| 246 |

+

--network=finetuned/{model_ckpts[model_name]} \

|

| 247 |

+

--shape=False"""

|

| 248 |

+

print(command)

|

| 249 |

+

os.system(command)

|

| 250 |

+

|

| 251 |

+

result_video_pth = sorted(glob(f'test_runs/video/*{model_ckpts[model_name]}*.mp4'))[0]

|

| 252 |

+

|

| 253 |

+

return result_video_pth

|

| 254 |

+

|

| 255 |

+

|

| 256 |

+

if __name__ == '__main__':

|

| 257 |

+

parser = argparse.ArgumentParser()

|

| 258 |

+

parser.add_argument('--share', action='store_true', help="public url")

|

| 259 |

+

args = parser.parse_args()

|

| 260 |

+

|

| 261 |

+

demo = gr.Blocks(title="DATID-3D Interactive Demo")

|

| 262 |

+

os.makedirs('finetuned', exist_ok=True)

|

| 263 |

+

intermediate = Intermediate()

|

| 264 |

+

with demo:

|

| 265 |

+

gr.Markdown("# DATID-3D Interactive Demo")

|

| 266 |

+

gr.Markdown(

|

| 267 |

+

"### Demo of the CVPR 2023 paper \"DATID-3D: Diversity-Preserved Domain Adaptation Using Text-to-Image Diffusion for 3D Generative Model\"")

|

| 268 |

+

|

| 269 |

+

with gr.Tab("Text-guided Manipulated 3D reconstruction"):

|

| 270 |

+

gr.Markdown("Text-guided Image-to-3D Translation")

|

| 271 |

+

with gr.Row():

|

| 272 |

+

with gr.Column(scale=1, variant='panel'):

|

| 273 |

+

t_image_input = gr.Image(source='upload', type="pil", interactive=True)

|

| 274 |

+

|

| 275 |

+

t_model_name = gr.Radio(["super_mario", "lego", "neanderthal", "orc",

|

| 276 |

+

"pixar", "skeleton", "stone_golem","tekken",

|

| 277 |

+

"greek_statue", "yoda", "zombie", "elf", "all"],

|

| 278 |

+

label="Model fine-tuned through DATID-3D",

|

| 279 |

+

value="super_mario", interactive=True)

|

| 280 |

+

with gr.Accordion("Advanced Options", open=False):

|

| 281 |

+

t_truncation = gr.Slider(label="Truncation psi", minimum=0, maximum=1.0, step=0.01, randomize=False, value=0.8)

|

| 282 |

+

t_num_inversion_steps = gr.Slider(200, 1000, value=200, step=1, label='Number of steps for the invresion')

|

| 283 |

+

with gr.Row():

|

| 284 |

+

t_button_gen_result = gr.Button("Generate Result", variant='primary')

|

| 285 |

+

# t_button_gen_video = gr.Button("Generate Video", variant='primary')

|

| 286 |

+

# t_button_gen_image = gr.Button("Generate Image", variant='secondary')

|

| 287 |

+

with gr.Row():

|

| 288 |

+

t_align_image_result = gr.Image(label="Alignment result", interactive=False)

|

| 289 |

+

with gr.Column(scale=1, variant='panel'):

|

| 290 |

+

with gr.Row():

|

| 291 |

+

t_video_result = gr.Video(label="Video result", interactive=False)

|

| 292 |

+

|

| 293 |

+

with gr.Row():

|

| 294 |

+

t_image_result = gr.Image(label="Image result", interactive=False)

|

| 295 |

+

|

| 296 |

+

|

| 297 |

+

with gr.Tab("Sample Images"):

|

| 298 |

+

with gr.Row():

|

| 299 |

+

with gr.Column(scale=1, variant='panel'):

|

| 300 |

+

i_model_name = gr.Radio(

|

| 301 |

+

["elf", "greek_statue", "hobbit", "lego", "masquerade", "neanderthal", "orc", "pixar",

|

| 302 |

+

"skeleton", "stone_golem", "super_mario", "tekken", "yoda", "zombie", "fox_in_Zootopia",

|

| 303 |

+

"cat_in_Zootopia", "golden_aluminum_animal"],

|

| 304 |

+

label="Model fine-tuned through DATID-3D",

|

| 305 |

+

value="super_mario", interactive=True)

|

| 306 |

+

i_num_samples = gr.Slider(0, 20, value=4, step=1, label='Number of samples')

|

| 307 |

+

i_seed = gr.Slider(label="Seed", minimum=0, maximum=1000000000, step=1, value=1235)

|

| 308 |

+

with gr.Accordion("Advanced Options", open=False):

|

| 309 |

+

i_truncation = gr.Slider(label="Truncation psi", minimum=0, maximum=1.0, step=0.01, randomize=False, value=0.8)

|

| 310 |

+

with gr.Row():

|

| 311 |

+

i_button_gen_image = gr.Button("Generate Image", variant='primary')

|

| 312 |

+

with gr.Column(scale=1, variant='panel'):

|

| 313 |

+

with gr.Row():

|

| 314 |

+

i_image_result = gr.Image(label="Image result", interactive=False)

|

| 315 |

+

|

| 316 |

+

|

| 317 |

+

with gr.Tab("Sample Videos"):

|

| 318 |

+

with gr.Row():

|

| 319 |

+

with gr.Column(scale=1, variant='panel'):

|

| 320 |

+

v_model_name = gr.Radio(

|

| 321 |

+

["elf", "greek_statue", "hobbit", "lego", "masquerade", "neanderthal", "orc", "pixar",

|

| 322 |

+

"skeleton", "stone_golem", "super_mario", "tekken", "yoda", "zombie", "fox_in_Zootopia",

|

| 323 |

+

"cat_in_Zootopia", "golden_aluminum_animal"],

|

| 324 |

+

label="Model fine-tuned through DATID-3D",

|

| 325 |

+

value="super_mario", interactive=True)

|

| 326 |

+

v_grid_height = gr.Slider(0, 5, value=2, step=1,label='Height of the grid')

|

| 327 |

+

v_seed = gr.Slider(label="Seed", minimum=0, maximum=1000000000, step=1, value=1235)

|

| 328 |

+

with gr.Accordion("Advanced Options", open=False):

|

| 329 |

+

v_truncation = gr.Slider(label="Truncation psi", minimum=0, maximum=1.0, step=0.01, randomize=False,

|

| 330 |

+

value=0.8)

|

| 331 |

+

|

| 332 |

+

with gr.Row():

|

| 333 |

+

v_button_gen_video = gr.Button("Generate Video", variant='primary')

|

| 334 |

+

|

| 335 |

+

with gr.Column(scale=1, variant='panel'):

|

| 336 |

+

|

| 337 |

+

with gr.Row():

|

| 338 |

+

v_video_result = gr.Video(label="Video result", interactive=False)

|

| 339 |

+

|

| 340 |

+

|

| 341 |

+

|

| 342 |

+

|

| 343 |

+

|

| 344 |

+

# functions

|

| 345 |

+

t_button_gen_result.click(fn=partial(TextGuidedImageTo3D, intermediate),

|

| 346 |

+

inputs=[t_image_input, t_model_name, t_num_inversion_steps, t_truncation],

|

| 347 |

+

outputs=[t_align_image_result, t_image_result, t_video_result])

|

| 348 |

+

i_button_gen_image.click(fn=SampleImage,

|

| 349 |

+

inputs=[i_model_name, i_num_samples, i_truncation, i_seed],

|

| 350 |

+

outputs=[i_image_result])

|

| 351 |

+

v_button_gen_video.click(fn=SampleVideo,

|

| 352 |

+

inputs=[i_model_name, v_grid_height, v_truncation, v_seed],

|

| 353 |

+

outputs=[v_video_result])

|

| 354 |

+

|

| 355 |

+

demo.queue(concurrency_count=1)

|

| 356 |

+

demo.launch(share=args.share)

|

| 357 |

+

|

datid3d_test.py

ADDED

|

@@ -0,0 +1,251 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from os.path import join as opj

|

| 3 |

+

import argparse

|

| 4 |

+

from glob import glob

|

| 5 |

+

|

| 6 |

+

### Parameters

|

| 7 |

+

parser = argparse.ArgumentParser()

|

| 8 |

+

|

| 9 |

+

# For all

|

| 10 |

+

parser.add_argument('--mode', type=str, required=True, choices=['image', 'video', 'manip', 'manip_from_inv'],

|

| 11 |

+

help="image: Sample images and shapes, "

|

| 12 |

+

"video: Sample pose-controlled videos, "

|

| 13 |

+

"manip: Manipulated 3D reconstruction from images, "

|

| 14 |

+

"manip_from_inv: Manipulated 3D reconstruction from inverted latent")

|

| 15 |

+

parser.add_argument('--network', type=str, nargs='+', required=True)

|

| 16 |

+

parser.add_argument('--generator_type', default='ffhq', type=str, choices=['ffhq', 'cat']) # ffhq, cat

|

| 17 |

+

parser.add_argument('--outdir', type=str, default='test_runs')

|

| 18 |

+

parser.add_argument('--trunc', type=float, default=0.7)

|

| 19 |

+

parser.add_argument('--seeds', type=str, default='100-200')

|

| 20 |

+

parser.add_argument('--down_src_eg3d_from_nvidia', default=True)

|

| 21 |

+

parser.add_argument('--num_inv_steps', default=300, type=int)

|

| 22 |

+

# Manipulated 3D reconstruction

|

| 23 |

+

parser.add_argument('--indir', type=str, default='input_imgs')

|

| 24 |

+

parser.add_argument('--name_tag', type=str, default='')

|

| 25 |

+

# Sample images

|

| 26 |

+

parser.add_argument('--shape', default=True)

|

| 27 |

+

parser.add_argument('--shape_format', type=str, choices=['.mrc', '.ply'], default='.mrc')

|

| 28 |

+

parser.add_argument('--shape_only_first', type=bool, default=False)

|

| 29 |

+

# Sample pose-controlled videos

|

| 30 |

+

parser.add_argument('--grid', default='1x1')

|

| 31 |

+

parser.add_argument('--w_frames', type=int, default=120)

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

args = parser.parse_args()

|

| 36 |

+

os.makedirs(args.outdir, exist_ok=True)

|

| 37 |

+

print()

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

network_command = ''

|

| 41 |

+

for network_path in args.network:

|

| 42 |

+

network_command += f"--network {opj('..', network_path)} "

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

### Sample images

|

| 47 |

+

if args.mode == 'image':

|

| 48 |

+

image_path = opj(args.outdir, f'image{args.name_tag}')

|

| 49 |

+

os.makedirs(image_path, exist_ok=True)

|

| 50 |

+

|

| 51 |

+

os.chdir('eg3d')

|

| 52 |

+

command = f"""python gen_samples.py \

|

| 53 |

+

{network_command} \

|

| 54 |

+

--seeds={args.seeds} \

|

| 55 |

+

--generator_type={args.generator_type} \

|

| 56 |

+

--outdir={opj('..', image_path)} \

|

| 57 |

+

--shapes={args.shape} \

|

| 58 |

+

--shape_format={args.shape_format} \

|

| 59 |

+

--shape_only_first={args.shape_only_first} \

|

| 60 |

+

--trunc={args.trunc} \

|

| 61 |

+

"""

|

| 62 |

+

print(f"{command} \n")

|

| 63 |

+

os.system(command)

|

| 64 |

+

os.chdir('..')

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

### Sample pose-controlled videos

|

| 71 |

+

if args.mode == 'video':

|

| 72 |

+

video_path = opj(args.outdir, f'video{args.name_tag}')

|

| 73 |

+

os.makedirs(video_path, exist_ok=True)

|

| 74 |

+

|

| 75 |

+

os.chdir('eg3d')

|

| 76 |

+

command = f"""python gen_videos.py \

|

| 77 |

+

{network_command} \

|

| 78 |

+

--seeds={args.seeds} \

|

| 79 |

+

--generator_type={args.generator_type} \

|

| 80 |

+

--outdir={opj('..', video_path)} \

|

| 81 |

+

--shapes=False \

|

| 82 |

+

--trunc={args.trunc} \

|

| 83 |

+

--grid={args.grid} \

|

| 84 |

+

--w-frames={args.w_frames}

|

| 85 |

+

"""

|

| 86 |

+

print(f"{command} \n")

|

| 87 |

+

os.system(command)

|

| 88 |

+

os.chdir('..')

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

### Manipulated 3D reconstruction from images

|

| 92 |

+

if args.mode == 'manip':

|

| 93 |

+

input_path = opj(args.indir)

|

| 94 |

+

align_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '1_align_result')

|

| 95 |

+

pose_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '2_pose_result')

|

| 96 |

+

inversion_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '3_inversion_result')

|

| 97 |

+

manip_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '4_manip_result')

|

| 98 |

+

|

| 99 |

+

os.makedirs(opj(args.outdir, f'manip_3D_recon{args.name_tag}'), exist_ok=True)

|

| 100 |

+

os.makedirs(align_path, exist_ok=True)

|

| 101 |

+

os.makedirs(pose_path, exist_ok=True)

|

| 102 |

+

os.makedirs(inversion_path, exist_ok=True)

|

| 103 |

+

os.makedirs(manip_path, exist_ok=True)

|

| 104 |

+

|

| 105 |

+

os.chdir('eg3d')

|

| 106 |

+

if args.generator_type == 'cat':

|

| 107 |

+

generator_id = 'afhqcats512-128.pkl'

|

| 108 |

+

else:

|

| 109 |

+

generator_id = 'ffhqrebalanced512-128.pkl'

|

| 110 |

+

generator_path = f'pretrained/{generator_id}'

|

| 111 |

+

if not os.path.exists(generator_path):

|

| 112 |

+

os.makedirs(f'pretrained', exist_ok=True)

|

| 113 |

+

print("Pretrained EG3D model cannot be found. Downloading the pretrained EG3D models.")

|

| 114 |

+

if args.down_src_eg3d_from_nvidia == True:

|

| 115 |

+

os.system(f'wget -c https://api.ngc.nvidia.com/v2/models/nvidia/research/eg3d/versions/1/files/{generator_id} -O {generator_path}')

|

| 116 |

+

else:

|

| 117 |

+

os.system(f'wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/nvidia_{generator_id} -O {generator_path}')

|

| 118 |

+

os.chdir('..')

|

| 119 |

+

|

| 120 |

+

## Align images and Pose extraction

|

| 121 |

+

os.chdir('pose_estimation')

|

| 122 |

+

if not os.path.exists('checkpoints/pretrained/epoch_20.pth') or not os.path.exists('BFM'):

|

| 123 |

+

print(f"BFM and pretrained DeepFaceRecon3D model cannot be found. Downloading the pretrained pose estimation model and BFM files, put epoch_20.pth in ./pose_estimation/checkpoints/pretrained/ and put unzip BFM.zip in ./pose_estimation/.")

|

| 124 |

+

|

| 125 |

+

try:

|

| 126 |

+

from gdown import download as drive_download

|

| 127 |

+

drive_download(f'https://drive.google.com/uc?id=1mdqkEUepHZROeOj99pXogAPJPqzBDN2G', './BFM.zip', quiet=False)

|

| 128 |

+

os.system('unzip BFM.zip')

|

| 129 |

+

drive_download(f'https://drive.google.com/uc?id=1zawY7jYDJlUGnSAXn1pgIHgIvJpiSmj5', './checkpoints/pretrained/epoch_20.pth', quiet=False)

|

| 130 |

+

except:

|

| 131 |

+

os.system("pip install -U --no-cache-dir gdown --pre")

|

| 132 |

+

from gdown import download as drive_download

|

| 133 |

+

drive_download(f'https://drive.google.com/uc?id=1mdqkEUepHZROeOj99pXogAPJPqzBDN2G', './BFM.zip', quiet=False)

|

| 134 |

+

os.system('unzip BFM.zip')

|

| 135 |

+

drive_download(f'https://drive.google.com/uc?id=1zawY7jYDJlUGnSAXn1pgIHgIvJpiSmj5', './checkpoints/pretrained/epoch_20.pth', quiet=False)

|

| 136 |

+

|

| 137 |

+

print()

|

| 138 |

+

command = f"""python extract_pose.py 0 \

|

| 139 |

+

{opj('..', input_path)} {opj('..', align_path)} {opj('..', pose_path)}

|

| 140 |

+

"""

|

| 141 |

+

print(f"{command} \n")

|

| 142 |

+

os.system(command)

|

| 143 |

+

os.chdir('..')

|

| 144 |

+

|

| 145 |

+

## Invert images to the latent space of 3D GANs

|

| 146 |

+

os.chdir('eg3d')

|

| 147 |

+

command = f"""python run_inversion.py \

|

| 148 |

+

--outdir={opj('..', inversion_path)} \

|

| 149 |

+

--latent_space_type=w_plus \

|

| 150 |

+

--network={generator_path} \

|

| 151 |

+

--image_path={opj('..', pose_path)} \

|

| 152 |

+

--num_steps={args.num_inv_steps}

|

| 153 |

+

"""

|

| 154 |

+

print(f"{command} \n")

|

| 155 |

+

os.system(command)

|

| 156 |

+

os.chdir('..')

|

| 157 |

+

|

| 158 |

+

## Generate videos, images and mesh

|

| 159 |

+

os.chdir('eg3d')

|

| 160 |

+

w_pths = sorted(glob(opj('..', inversion_path, '*.pt')))

|

| 161 |

+

if len(w_pths) == 0:

|

| 162 |

+

print("No inverted latent")

|

| 163 |

+

exit()

|

| 164 |

+

for w_pth in w_pths:

|

| 165 |

+

print(f"{w_pth} \n")

|

| 166 |

+

|

| 167 |

+

command = f"""python gen_samples.py \

|

| 168 |

+

{network_command} \

|

| 169 |

+

--w_pth={w_pth} \

|

| 170 |

+

--seeds='100-200' \

|

| 171 |

+

--generator_type={args.generator_type} \

|

| 172 |

+

--outdir={opj('..', manip_path)} \

|

| 173 |

+

--shapes={args.shape} \

|

| 174 |

+

--shape_format={args.shape_format} \

|

| 175 |

+

--shape_only_first={args.shape_only_first} \

|

| 176 |

+

--trunc={args.trunc} \

|

| 177 |

+

"""

|

| 178 |

+

print(f"{command} \n")

|

| 179 |

+

os.system(command)

|

| 180 |

+

|

| 181 |

+

command = f"""python gen_videos.py \

|

| 182 |

+

{network_command} \

|

| 183 |

+

--w_pth={w_pth} \

|

| 184 |

+

--seeds='100-200' \

|

| 185 |

+

--generator_type={args.generator_type} \

|

| 186 |

+

--outdir={opj('..', manip_path)} \

|

| 187 |

+

--shapes=False \

|

| 188 |

+

--trunc={args.trunc} \

|

| 189 |

+

--grid=1x1 \

|

| 190 |

+

--w-frames={args.w_frames}

|

| 191 |

+

"""

|

| 192 |

+

print(f"{command} \n")

|

| 193 |

+

os.system(command)

|

| 194 |

+

os.chdir('..')

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

|

| 198 |

+

|

| 199 |

+

|

| 200 |

+

### Manipulated 3D reconstruction from inverted latent

|

| 201 |

+

if args.mode == 'manip_from_inv':

|

| 202 |

+

input_path = opj(args.indir)

|

| 203 |

+

align_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '1_align_result')

|

| 204 |

+

pose_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '2_pose_result')

|

| 205 |

+

inversion_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '3_inversion_result')

|

| 206 |

+

manip_path = opj(args.outdir, f'manip_3D_recon{args.name_tag}', '4_manip_result')

|

| 207 |

+

|

| 208 |

+

os.makedirs(opj(args.outdir, f'manip_3D_recon{args.name_tag}'), exist_ok=True)

|

| 209 |

+

os.makedirs(align_path, exist_ok=True)

|

| 210 |

+

os.makedirs(pose_path, exist_ok=True)

|

| 211 |

+

os.makedirs(inversion_path, exist_ok=True)

|

| 212 |

+

os.makedirs(manip_path, exist_ok=True)

|

| 213 |

+

|

| 214 |

+

## Generate videos, images and mesh

|

| 215 |

+

os.chdir('eg3d')

|

| 216 |

+

w_pths = sorted(glob(opj('..', inversion_path, '*.pt')))

|

| 217 |

+

if len(w_pths) == 0:

|

| 218 |

+

print("No inverted latent")

|

| 219 |

+

exit()

|

| 220 |

+

for w_pth in w_pths:

|

| 221 |

+

print(f"{w_pth} \n")

|

| 222 |

+

|

| 223 |

+

command = f"""python gen_samples.py \

|

| 224 |

+

{network_command} \

|

| 225 |

+

--w_pth={w_pth} \

|

| 226 |

+

--seeds='100-200' \

|

| 227 |

+

--generator_type={args.generator_type} \

|

| 228 |

+

--outdir={opj('..', manip_path)} \

|

| 229 |

+

--shapes={args.shape} \

|

| 230 |

+

--shape_format={args.shape_format} \

|

| 231 |

+

--shape_only_first={args.shape_only_first} \

|

| 232 |

+

--trunc={args.trunc} \

|

| 233 |

+

"""

|

| 234 |

+

print(f"{command} \n")

|

| 235 |

+

os.system(command)

|

| 236 |

+

|

| 237 |

+

command = f"""python gen_videos.py \

|

| 238 |

+

{network_command} \

|

| 239 |

+

--w_pth={w_pth} \

|

| 240 |

+

--seeds='100-200' \

|

| 241 |

+

--generator_type={args.generator_type} \

|

| 242 |

+

--outdir={opj('..', manip_path)} \

|

| 243 |

+

--shapes=False \

|

| 244 |

+

--trunc={args.trunc} \

|

| 245 |

+

--grid=1x1

|

| 246 |

+

"""

|

| 247 |

+

print(f"{command} \n")

|

| 248 |

+

os.system(command)

|

| 249 |

+

os.chdir('..')

|

| 250 |

+

|

| 251 |

+

|

datid3d_train.py

ADDED

|

@@ -0,0 +1,105 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import argparse

|

| 3 |

+

|

| 4 |

+

### Parameters

|

| 5 |

+

parser = argparse.ArgumentParser()

|

| 6 |

+

|

| 7 |

+

# For all

|

| 8 |

+

parser.add_argument('--mode', type=str, required=True, choices=['pdg', 'ft', 'both'],

|

| 9 |

+

help="pdg: Pose-aware dataset generation, ft: Fine-tuning 3D generative models, both: Doing both")

|

| 10 |

+

parser.add_argument('--down_src_eg3d_from_nvidia', default=True)

|

| 11 |

+

# Pose-aware dataset generation

|

| 12 |

+

parser.add_argument('--pdg_prompt', type=str, required=True)

|

| 13 |

+

parser.add_argument('--pdg_generator_type', default='ffhq', type=str, choices=['ffhq', 'cat']) # ffhq, cat

|

| 14 |

+

parser.add_argument('--pdg_strength', default=0.7, type=float)

|

| 15 |

+

parser.add_argument('--pdg_guidance_scale', default=8, type=float)

|

| 16 |

+

parser.add_argument('--pdg_num_images', default=1000, type=int)

|

| 17 |

+

parser.add_argument('--pdg_sd_model_id', default='stabilityai/stable-diffusion-2-1-base', type=str)

|

| 18 |

+

parser.add_argument('--pdg_num_inference_steps', default=50, type=int)

|

| 19 |

+

parser.add_argument('--pdg_name_tag', default='', type=str)

|

| 20 |

+

parser.add_argument('--down_src_eg3d_from_nvidia', default=True)

|

| 21 |

+

# Fine-tuning 3D generative models

|

| 22 |

+

parser.add_argument('--ft_generator_type', default='same', help="None: The same type as pdg_generator_type", type=str, choices=['ffhq', 'cat', 'same'])

|

| 23 |

+

parser.add_argument('--ft_kimg', default=200, type=int)

|

| 24 |

+

parser.add_argument('--ft_batch', default=20, type=int)

|

| 25 |

+

parser.add_argument('--ft_tick', default=1, type=int)

|

| 26 |

+

parser.add_argument('--ft_snap', default=50, type=int)

|

| 27 |

+

parser.add_argument('--ft_outdir', default='../training_runs', type=str) #

|

| 28 |

+

parser.add_argument('--ft_gpus', default=1, type=str) #

|

| 29 |

+

parser.add_argument('--ft_workers', default=8, type=int) #

|

| 30 |

+

parser.add_argument('--ft_data_max_size', default=500000000, type=int) #

|

| 31 |

+

parser.add_argument('--ft_freeze_dec_sr', default=True, type=bool) #

|

| 32 |

+

|

| 33 |

+

args = parser.parse_args()

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

### Pose-aware target generation

|

| 37 |

+

if args.mode in ['pdg', 'both']:

|

| 38 |

+

os.chdir('eg3d')

|

| 39 |

+

if args.pdg_generator_type == 'cat':

|

| 40 |

+

pdg_generator_id = 'afhqcats512-128.pkl'

|

| 41 |

+

else:

|

| 42 |

+

pdg_generator_id = 'ffhqrebalanced512-128.pkl'

|

| 43 |

+

|

| 44 |

+

pdg_generator_path = f'pretrained/{pdg_generator_id}'

|

| 45 |

+

if not os.path.exists(pdg_generator_path):

|

| 46 |

+

os.makedirs(f'pretrained', exist_ok=True)

|

| 47 |

+

print("Pretrained EG3D model cannot be found. Downloading the pretrained EG3D models.")

|

| 48 |

+

if args.down_src_eg3d_from_nvidia == True:

|

| 49 |

+

os.system(f'wget -c https://api.ngc.nvidia.com/v2/models/nvidia/research/eg3d/versions/1/files/{pdg_generator_id} -O {pdg_generator_path}')

|

| 50 |

+

else:

|

| 51 |

+

os.system(f'wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/nvidia_{pdg_generator_id} -O {pdg_generator_path}')

|

| 52 |

+

command = f"""python datid3d_data_gen.py \

|

| 53 |

+

--prompt="{args.pdg_prompt}" \

|

| 54 |

+

--data_type={args.pdg_generator_type} \

|

| 55 |

+

--strength={args.pdg_strength} \

|

| 56 |

+

--guidance_scale={args.pdg_guidance_scale} \

|

| 57 |

+

--num_images={args.pdg_num_images} \

|

| 58 |

+

--sd_model_id="{args.pdg_sd_model_id}" \

|

| 59 |

+

--num_inference_steps={args.pdg_num_inference_steps} \

|

| 60 |

+

--name_tag={args.pdg_name_tag}

|

| 61 |

+

"""

|

| 62 |

+

print(f"{command} \n")

|

| 63 |

+

os.system(command)

|

| 64 |

+

os.chdir('..')

|

| 65 |

+

|

| 66 |

+

### Filtering process

|

| 67 |

+

# TODO

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

### Fine-tuning 3D generative models

|

| 71 |

+

if args.mode in ['ft', 'both']:

|

| 72 |

+

os.chdir('eg3d')

|

| 73 |

+

if args.ft_generator_type == 'same':

|

| 74 |

+

args.ft_generator_type = args.pdg_generator_type

|

| 75 |

+

|

| 76 |

+

if args.ft_generator_type == 'cat':

|

| 77 |

+

ft_generator_id = 'afhqcats512-128.pkl'

|

| 78 |

+

else:

|

| 79 |

+

ft_generator_id = 'ffhqrebalanced512-128.pkl'

|

| 80 |

+

|

| 81 |

+

ft_generator_path = f'pretrained/{ft_generator_id}'

|

| 82 |

+

if not os.path.exists(ft_generator_path):

|

| 83 |

+

os.makedirs(f'pretrained', exist_ok=True)

|

| 84 |

+

print("Pretrained EG3D model cannot be found. Downloading the pretrained EG3D models.")

|

| 85 |

+

if args.down_src_eg3d_from_nvidia == True:

|

| 86 |

+

os.system(f'wget -c https://api.ngc.nvidia.com/v2/models/nvidia/research/eg3d/versions/1/files/{ft_generator_id} -O {ft_generator_path}')

|

| 87 |

+

else:

|

| 88 |

+

os.system(f'wget https://huggingface.co/gwang-kim/datid3d-finetuned-eg3d-models/resolve/main/finetuned_models/nvidia_{ft_generator_id} -O {ft_generator_path}')

|

| 89 |

+

|

| 90 |

+

dataset_id = f'data_{args.pdg_generator_type}_{args.pdg_prompt.replace(" ", "_")}{args.pdg_name_tag}'

|

| 91 |

+

dataset_path = f'./exp_data/{dataset_id}/{dataset_id}.zip'

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

command = f"""python train.py \

|

| 95 |

+

--outdir={args.ft_outdir} \

|

| 96 |

+

--cfg={args.ft_generator_type} \

|

| 97 |

+

--data="{dataset_path}" \

|

| 98 |

+

--resume={ft_generator_path} --freeze_dec_sr={args.ft_freeze_dec_sr} \

|

| 99 |

+

--batch={args.ft_batch} --workers={args.ft_workers} --gpus={args.ft_gpus} \

|

| 100 |

+

--tick={args.ft_tick} --snap={args.ft_snap} --data_max_size={args.ft_data_max_size} --kimg={args.ft_kimg} \

|

| 101 |

+

--gamma=5 --aug=ada --neural_rendering_resolution_final=128 --gen_pose_cond=True --gpc_reg_prob=0.8 --metrics=None

|

| 102 |

+

"""

|

| 103 |

+

print(f"{command} \n")

|

| 104 |

+

os.system(command)

|

| 105 |

+

os.chdir('..')

|

eg3d/LICENSE.txt

ADDED

|

@@ -0,0 +1,99 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright (c) 2021-2022, NVIDIA Corporation & affiliates. All rights

|

| 2 |

+

reserved.

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

NVIDIA Source Code License for EG3D

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

=======================================================================

|

| 9 |

+

|

| 10 |

+

1. Definitions

|

| 11 |

+

|

| 12 |

+

"Licensor" means any person or entity that distributes its Work.

|

| 13 |

+

|

| 14 |

+

"Software" means the original work of authorship made available under

|

| 15 |

+

this License.

|

| 16 |

+

|

| 17 |

+

"Work" means the Software and any additions to or derivative works of

|

| 18 |

+

the Software that are made available under this License.

|

| 19 |

+

|

| 20 |

+

The terms "reproduce," "reproduction," "derivative works," and

|

| 21 |

+

"distribution" have the meaning as provided under U.S. copyright law;

|

| 22 |

+

provided, however, that for the purposes of this License, derivative

|

| 23 |

+

works shall not include works that remain separable from, or merely

|

| 24 |

+

link (or bind by name) to the interfaces of, the Work.

|

| 25 |

+

|

| 26 |

+

Works, including the Software, are "made available" under this License

|

| 27 |

+

by including in or with the Work either (a) a copyright notice

|

| 28 |

+

referencing the applicability of this License to the Work, or (b) a

|

| 29 |

+

copy of this License.

|

| 30 |

+

|

| 31 |

+

2. License Grants

|

| 32 |

+