init

- .gitattributes +1 -0

- app.py +48 -0

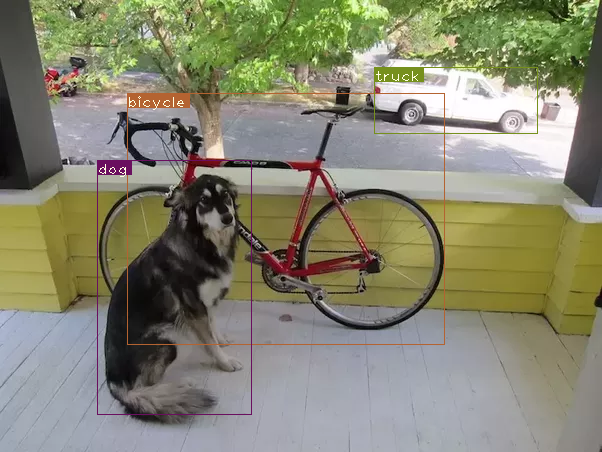

- det_dog-cycle-car.png +0 -0

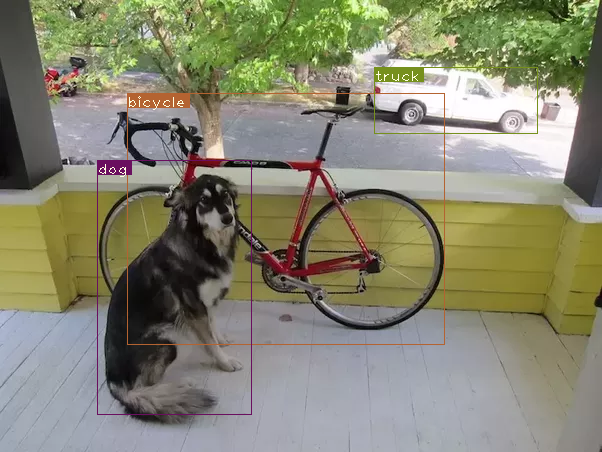

- dog-cycle-car.png +0 -0

- requirements.txt +8 -0

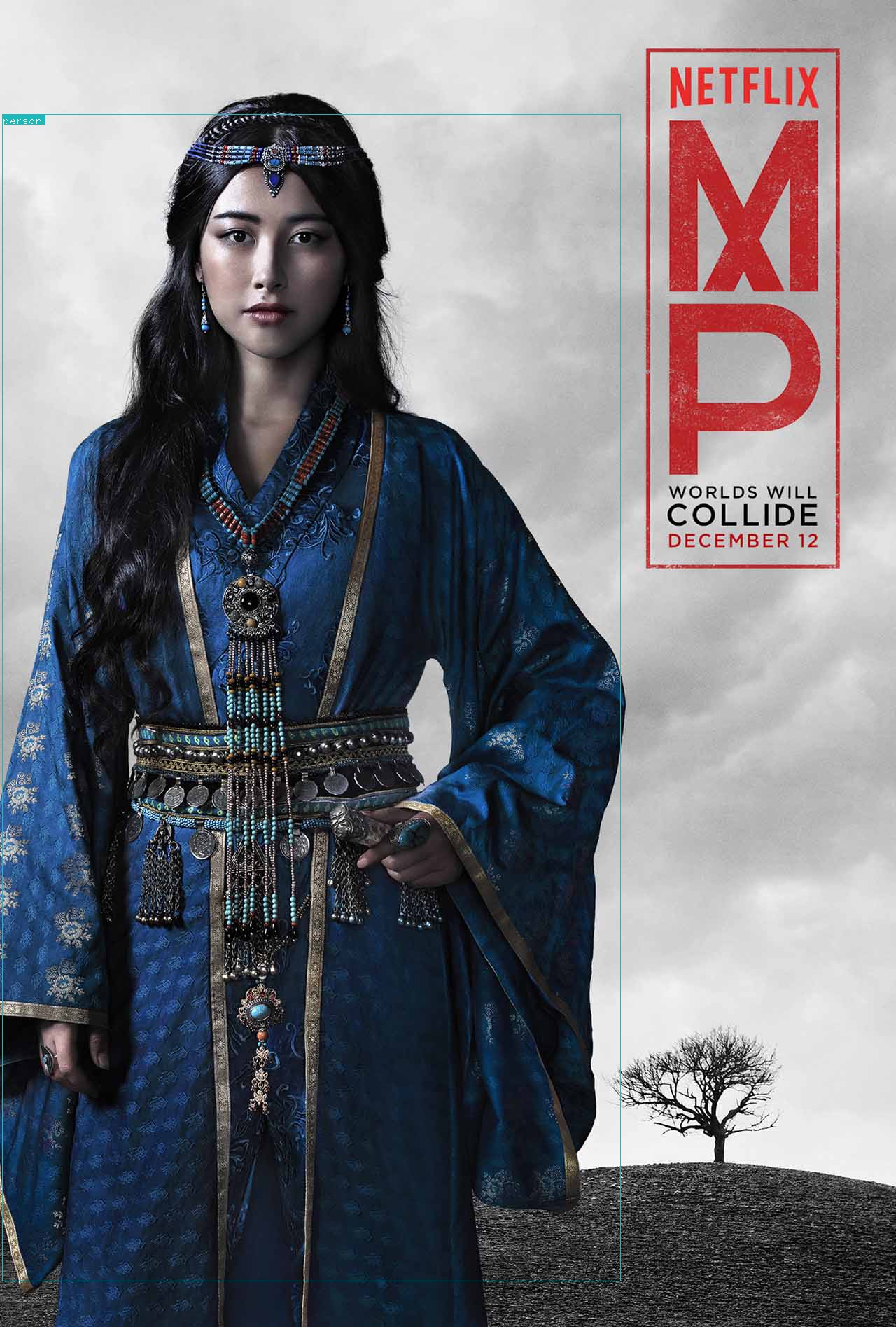

- yolo/Poster3.jpg +0 -0

- yolo/README.md +12 -0

- yolo/__pycache__/darknet.cpython-37.pyc +0 -0

- yolo/__pycache__/model.cpython-37.pyc +0 -0

- yolo/__pycache__/utils.cpython-37.pyc +0 -0

- yolo/cfg/yolov3.cfg +788 -0

- yolo/darknet.py +586 -0

- yolo/data/coco.names +80 -0

- yolo/det/det_Poster3.jpg +0 -0

- yolo/det/det_dog-cycle-car.png +0 -0

- yolo/det/det_sample.jpeg +0 -0

- yolo/det/det_victoria.jpg +0 -0

- yolo/detector.py +321 -0

- yolo/dog-cycle-car.png +0 -0

- yolo/model.py +189 -0

- yolo/pallete +0 -0

- yolo/sample.jpeg +0 -0

- yolo/sample.py +77 -0

- yolo/test.py +166 -0

- yolo/utils.py +324 -0

- yolo/victoria.jpg +0 -0

- yolo/yolov3-tiny.cfg +182 -0

- yolo/yolov3-tiny.weights +3 -0

- yolo/yolov3.cfg +788 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*.weights filter=lfs diff=lfs merge=lfs -text
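The new `*.weights` rule routes weight files through Git LFS, so the repository stores a small text pointer instead of the binary. That would be consistent with the `+3 -0` shown for `yolo/yolov3-tiny.weights` in the file list, since an LFS pointer is three lines long. A minimal sketch of that pointer format follows; `lfs_pointer` is a hypothetical helper written for illustration, not part of this commit or of the Git LFS tooling.

```python
import hashlib


def lfs_pointer(data: bytes) -> str:
    """Build the three-line Git LFS pointer text for a blob (illustrative helper)."""
    oid = hashlib.sha256(data).hexdigest()  # LFS identifies content by its SHA-256
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(data)}\n"
    )


if __name__ == "__main__":
    # Stand-in bytes; a real .weights file would be read from disk instead.
    print(lfs_pointer(b"abc"), end="")
```

The pointer records only the hash and the byte size, which is why the diff for a weights file stays tiny regardless of how large the binary is.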

app.py

ADDED

@@ -0,0 +1,48 @@
+import numpy as np
+import gradio as gr
+from model import api
+from PIL import Image
+from yolo.model import *
+
+model = yolo_model()
+
+def predict(input_img):
+    input_img = Image.fromarray(input_img)
+    _, payload = model.predict(input_img)
+    # print('prediction',prediction)
+    return payload
+
+css = ''
+
+# with gr.Blocks(css=css) as demo:
+# gr.HTML("<h1><center>Signsapp: Classify the signs based on the hands sign images<center><h1>")
+# gr.Interface(sign,inputs=gr.Image(shape=(200, 200)), outputs=gr.Label())
+
+title = r"yolov3"
+
+description = r"""
+<center>
+Recognize common objects using the model
+<img src="file/det_dog-cycle-car.png" width=350px>
+</center>
+"""
+article = r"""
+### Credits
+- [Coursera](https://www.coursera.org/learn/convolutional-neural-networks/)
+"""
+
+demo = gr.Interface(
+    title = title,
+    description = description,
+    article = article,
+    fn=predict,
+    inputs = gr.Image(shape=(200, 200)),
+    outputs = gr.Image(shape=(200, 200)),
+    examples=["dog-cycle-car.png"]
+    # allow_flagging = "manual",
+    # flagging_options = ['recule', 'tournedroite', 'arretetoi', 'tournegauche', 'gauche', 'avance', 'droite'],
+    # flagging_dir = "./flag/men"
+)
+
+# demo.queue()
+demo.launch(debug=True)
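The `predict` function in app.py converts the incoming array to an image, calls `model.predict`, and returns only the second element of the result. A minimal sketch of that contract is below, so the wrapper can be exercised without Gradio or the YOLO weights. It assumes a hypothetical `StubModel` in place of `yolo_model()` and a pluggable `to_image` converter (the role `Image.fromarray` plays in the app); both names are illustrative and not part of the commit.

```python
class StubModel:
    """Hypothetical stand-in for yolo_model(): returns (detections, annotated_image)."""

    def predict(self, image):
        # A real model would run detection and draw boxes; the stub echoes its input.
        return [], image


def make_predict(model, to_image=lambda x: x):
    """Build a Gradio-style callback around any object exposing predict(image)."""

    def predict(input_img):
        image = to_image(input_img)        # in app.py this step is Image.fromarray
        _, payload = model.predict(image)  # keep only the annotated image
        return payload

    return predict


if __name__ == "__main__":
    predict = make_predict(StubModel())
    print(predict("fake-image"))
```

Passing the model in as an argument keeps the callback testable: the same `make_predict` could wrap the real model once the weights are available.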

det_dog-cycle-car.png

ADDED

dog-cycle-car.png

ADDED

requirements.txt

ADDED

@@ -0,0 +1,8 @@
+Flask-Cors
+Flask
+Werkzeug
+pillow
+numpy
+boto3
+pytorch==1.7.1
+opencv==3.4.2

yolo/Poster3.jpg

ADDED

yolo/README.md

ADDED

@@ -0,0 +1,12 @@
+## Yolo
+
+[Part 1 : Understanding How YOLO works](https://blog.paperspace.com/how-to-implement-a-yolo-object-detector-in-pytorch/)
+
+[Part 2 : Creating the layers of the network architecture](https://blog.paperspace.com/how-to-implement-a-yolo-v3-object-detector-from-scratch-in-pytorch-part-2/)
+
+[Part 3 : How to implement a YOLO (v3) object detector from scratch in PyTorch](https://blog.paperspace.com/how-to-implement-a-yolo-v3-object-detector-from-scratch-in-pytorch-part-3/)
+
+[Part 4 : Objectness Confidence Thresholding and Non-maximum Suppression](https://blog.paperspace.com/how-to-implement-a-yolo-v3-object-detector-from-scratch-in-pytorch-part-4/)
+
+[Part 5 : Designing the input and the output pipelines](https://blog.paperspace.com/how-to-implement-a-yolo-v3-object-detector-from-scratch-in-pytorch-part-5/)
+
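Part 4 of the README covers objectness thresholding and non-maximum suppression. A minimal pure-Python sketch of those two steps is below, as a generic illustration rather than the code in `yolo/utils.py`, which this page does not show. The box format `(x1, y1, x2, y2)`, the tuple layout `(box, objectness, class_id)`, and the default thresholds `0.5` and `0.4` are assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thresh=0.5, nms_thresh=0.4):
    """Keep confident detections, then suppress same-class overlaps greedily.

    dets is a list of (box, objectness, class_id) tuples.
    """
    # Step 1: objectness thresholding drops low-confidence boxes.
    candidates = [d for d in dets if d[1] >= conf_thresh]
    # Step 2: visit boxes from highest to lowest score; keep a box only if it
    # does not overlap an already kept box of the same class too strongly.
    candidates.sort(key=lambda d: d[1], reverse=True)
    kept = []
    for det in candidates:
        if all(det[2] != k[2] or iou(det[0], k[0]) <= nms_thresh for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    sample = [
        ((0, 0, 10, 10), 0.9, 0),
        ((1, 1, 10, 10), 0.8, 0),    # overlaps the first box, same class
        ((20, 20, 30, 30), 0.7, 1),
        ((0, 0, 10, 10), 0.3, 0),    # below the objectness threshold
    ]
    for det in filter_detections(sample):
        print(det)
```

Suppression is applied per class here, so two overlapping boxes with different class ids both survive.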

yolo/__pycache__/darknet.cpython-37.pyc

ADDED

Binary file (11.9 kB)


yolo/__pycache__/model.cpython-37.pyc

ADDED

Binary file (4.87 kB)


yolo/__pycache__/utils.cpython-37.pyc

ADDED

Binary file (7.7 kB)


yolo/cfg/yolov3.cfg

ADDED

|

@@ -0,0 +1,788 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[net]

|

| 2 |

+

# Testing

|

| 3 |

+

# batch=1

|

| 4 |

+

# subdivisions=1

|

| 5 |

+

# Training

|

| 6 |

+

batch=64

|

| 7 |

+

subdivisions=16

|

| 8 |

+

width=624

|

| 9 |

+

height=624

|

| 10 |

+

channels=3

|

| 11 |

+

momentum=0.9

|

| 12 |

+

decay=0.0005

|

| 13 |

+

angle=0

|

| 14 |

+

saturation = 1.5

|

| 15 |

+

exposure = 1.5

|

| 16 |

+

hue=.1

|

| 17 |

+

|

| 18 |

+

learning_rate=0.001

|

| 19 |

+

burn_in=1000

|

| 20 |

+

max_batches = 500200

|

| 21 |

+

policy=steps

|

| 22 |

+

steps=400000,450000

|

| 23 |

+

scales=.1,.1

|

| 24 |

+

|

| 25 |

+

[convolutional]

|

| 26 |

+

batch_normalize=1

|

| 27 |

+

filters=32

|

| 28 |

+

size=3

|

| 29 |

+

stride=1

|

| 30 |

+

pad=1

|

| 31 |

+

activation=leaky

|

| 32 |

+

|

| 33 |

+

# Downsample

|

| 34 |

+

|

| 35 |

+

[convolutional]

|

| 36 |

+

batch_normalize=1

|

| 37 |

+

filters=64

|

| 38 |

+

size=3

|

| 39 |

+

stride=2

|

| 40 |

+

pad=1

|

| 41 |

+

activation=leaky

|

| 42 |

+

|

| 43 |

+

[convolutional]

|

| 44 |

+

batch_normalize=1

|

| 45 |

+

filters=32

|

| 46 |

+

size=1

|

| 47 |

+

stride=1

|

| 48 |

+

pad=1

|

| 49 |

+

activation=leaky

|

| 50 |

+

|

| 51 |

+

[convolutional]

|

| 52 |

+

batch_normalize=1

|

| 53 |

+

filters=64

|

| 54 |

+

size=3

|

| 55 |

+

stride=1

|

| 56 |

+

pad=1

|

| 57 |

+

activation=leaky

|

| 58 |

+

|

| 59 |

+

[shortcut]

|

| 60 |

+

from=-3

|

| 61 |

+

activation=linear

|

| 62 |

+

|

| 63 |

+

# Downsample

|

| 64 |

+

|

| 65 |

+

[convolutional]

|

| 66 |

+

batch_normalize=1

|

| 67 |

+

filters=128

|

| 68 |

+

size=3

|

| 69 |

+

stride=2

|

| 70 |

+

pad=1

|

| 71 |

+

activation=leaky

|

| 72 |

+

|

| 73 |

+

[convolutional]

|

| 74 |

+

batch_normalize=1

|

| 75 |

+

filters=64

|

| 76 |

+

size=1

|

| 77 |

+

stride=1

|

| 78 |

+

pad=1

|

| 79 |

+

activation=leaky

|

| 80 |

+

|

| 81 |

+

[convolutional]

|

| 82 |

+

batch_normalize=1

|

| 83 |

+

filters=128

|

| 84 |

+

size=3

|

| 85 |

+

stride=1

|

| 86 |

+

pad=1

|

| 87 |

+

activation=leaky

|

| 88 |

+

|

| 89 |

+

[shortcut]

|

| 90 |

+

from=-3

|

| 91 |

+

activation=linear

|

| 92 |

+

|

| 93 |

+

[convolutional]

|

| 94 |

+

batch_normalize=1

|

| 95 |

+

filters=64

|

| 96 |

+

size=1

|

| 97 |

+

stride=1

|

| 98 |

+

pad=1

|

| 99 |

+

activation=leaky

|

| 100 |

+

|

| 101 |

+

[convolutional]

|

| 102 |

+

batch_normalize=1

|

| 103 |

+

filters=128

|

| 104 |

+

size=3

|

| 105 |

+

stride=1

|

| 106 |

+

pad=1

|

| 107 |

+

activation=leaky

|

| 108 |

+

|

| 109 |

+

[shortcut]

|

| 110 |

+

from=-3

|

| 111 |

+

activation=linear

|

| 112 |

+

|

| 113 |

+

# Downsample

|

| 114 |

+

|

| 115 |

+

[convolutional]

|

| 116 |

+

batch_normalize=1

|

| 117 |

+

filters=256

|

| 118 |

+

size=3

|

| 119 |

+

stride=2

|

| 120 |

+

pad=1

|

| 121 |

+

activation=leaky

|

| 122 |

+

|

| 123 |

+

[convolutional]

|

| 124 |

+

batch_normalize=1

|

| 125 |

+

filters=128

|

| 126 |

+

size=1

|

| 127 |

+

stride=1

|

| 128 |

+

pad=1

|

| 129 |

+

activation=leaky

|

| 130 |

+

|

| 131 |

+

[convolutional]

|

| 132 |

+

batch_normalize=1

|

| 133 |

+

filters=256

|

| 134 |

+

size=3

|

| 135 |

+

stride=1

|

| 136 |

+

pad=1

|

| 137 |

+

activation=leaky

|

| 138 |

+

|

| 139 |

+

[shortcut]

|

| 140 |

+

from=-3

|

| 141 |

+

activation=linear

|

| 142 |

+

|

| 143 |

+

[convolutional]

|

| 144 |

+

batch_normalize=1

|

| 145 |

+

filters=128

|

| 146 |

+

size=1

|

| 147 |

+

stride=1

|

| 148 |

+

pad=1

|

| 149 |

+

activation=leaky

|

| 150 |

+

|

| 151 |

+

[convolutional]

|

| 152 |

+

batch_normalize=1

|

| 153 |

+

filters=256

|

| 154 |

+

size=3

|

| 155 |

+

stride=1

|

| 156 |

+

pad=1

|

| 157 |

+

activation=leaky

|

| 158 |

+

|

| 159 |

+

[shortcut]

|

| 160 |

+

from=-3

|

| 161 |

+

activation=linear

|

| 162 |

+

|

| 163 |

+

[convolutional]

|

| 164 |

+

batch_normalize=1

|

| 165 |

+

filters=128

|

| 166 |

+

size=1

|

| 167 |

+

stride=1

|

| 168 |

+

pad=1

|

| 169 |

+

activation=leaky

|

| 170 |

+

|

| 171 |

+

[convolutional]

|

| 172 |

+

batch_normalize=1

|

| 173 |

+

filters=256

|

| 174 |

+

size=3

|

| 175 |

+

stride=1

|

| 176 |

+

pad=1

|

| 177 |

+

activation=leaky

|

| 178 |

+

|

| 179 |

+

[shortcut]

|

| 180 |

+

from=-3

|

| 181 |

+

activation=linear

|

| 182 |

+

|

| 183 |

+

[convolutional]

|

| 184 |

+

batch_normalize=1

|

| 185 |

+

filters=128

|

| 186 |

+

size=1

|

| 187 |

+

stride=1

|

| 188 |

+

pad=1

|

| 189 |

+

activation=leaky

|

| 190 |

+

|

| 191 |

+

[convolutional]

|

| 192 |

+

batch_normalize=1

|

| 193 |

+

filters=256

|

| 194 |

+

size=3

|

| 195 |

+

stride=1

|

| 196 |

+

pad=1

|

| 197 |

+

activation=leaky

|

| 198 |

+

|

| 199 |

+

[shortcut]

|

| 200 |

+

from=-3

|

| 201 |

+

activation=linear

|

| 202 |

+

|

| 203 |

+

|

| 204 |

+

[convolutional]

|

| 205 |

+

batch_normalize=1

|

| 206 |

+

filters=128

|

| 207 |

+

size=1

|

| 208 |

+

stride=1

|

| 209 |

+

pad=1

|

| 210 |

+

activation=leaky

|

| 211 |

+

|

| 212 |

+

[convolutional]

|

| 213 |

+

batch_normalize=1

|

| 214 |

+

filters=256

|

| 215 |

+

size=3

|

| 216 |

+

stride=1

|

| 217 |

+

pad=1

|

| 218 |

+

activation=leaky

|

| 219 |

+

|

| 220 |

+

[shortcut]

|

| 221 |

+

from=-3

|

| 222 |

+

activation=linear

|

| 223 |

+

|

| 224 |

+

[convolutional]

|

| 225 |

+

batch_normalize=1

|

| 226 |

+

filters=128

|

| 227 |

+

size=1

|

| 228 |

+

stride=1

|

| 229 |

+

pad=1

|

| 230 |

+

activation=leaky

|

| 231 |

+

|

| 232 |

+

[convolutional]

|

| 233 |

+

batch_normalize=1

|

| 234 |

+

filters=256

|

| 235 |

+

size=3

|

| 236 |

+

stride=1

|

| 237 |

+

pad=1

|

| 238 |

+

activation=leaky

|

| 239 |

+

|

| 240 |

+

[shortcut]

|

| 241 |

+

from=-3

|

| 242 |

+

activation=linear

|

| 243 |

+

|

| 244 |

+

[convolutional]

|

| 245 |

+

batch_normalize=1

|

| 246 |

+

filters=128

|

| 247 |

+

size=1

|

| 248 |

+

stride=1

|

| 249 |

+

pad=1

|

| 250 |

+

activation=leaky

|

| 251 |

+

|

| 252 |

+

[convolutional]

|

| 253 |

+

batch_normalize=1

|

| 254 |

+

filters=256

|

| 255 |

+

size=3

|

| 256 |

+

stride=1

|

| 257 |

+

pad=1

|

| 258 |

+

activation=leaky

|

| 259 |

+

|

| 260 |

+

[shortcut]

|

| 261 |

+

from=-3

|

| 262 |

+

activation=linear

|

| 263 |

+

|

| 264 |

+

[convolutional]

|

| 265 |

+

batch_normalize=1

|

| 266 |

+

filters=128

|

| 267 |

+

size=1

|

| 268 |

+

stride=1

|

| 269 |

+

pad=1

|

| 270 |

+

activation=leaky

|

| 271 |

+

|

| 272 |

+

[convolutional]

|

| 273 |

+

batch_normalize=1

|

| 274 |

+

filters=256

|

| 275 |

+

size=3

|

| 276 |

+

stride=1

|

| 277 |

+

pad=1

|

| 278 |

+

activation=leaky

|

| 279 |

+

|

| 280 |

+

[shortcut]

|

| 281 |

+

from=-3

|

| 282 |

+

activation=linear

|

| 283 |

+

|

| 284 |

+

# Downsample

|

| 285 |

+

|

| 286 |

+

[convolutional]

|

| 287 |

+

batch_normalize=1

|

| 288 |

+

filters=512

|

| 289 |

+

size=3

|

| 290 |

+

stride=2

|

| 291 |

+

pad=1

|

| 292 |

+

activation=leaky

|

| 293 |

+

|

| 294 |

+

[convolutional]

|

| 295 |

+

batch_normalize=1

|

| 296 |

+

filters=256

|

| 297 |

+

size=1

|

| 298 |

+

stride=1

|

| 299 |

+

pad=1

|

| 300 |

+

activation=leaky

|

| 301 |

+

|

| 302 |

+

[convolutional]

|

| 303 |

+

batch_normalize=1

|

| 304 |

+

filters=512

|

| 305 |

+

size=3

|

| 306 |

+

stride=1

|

| 307 |

+

pad=1

|

| 308 |

+

activation=leaky

|

| 309 |

+

|

| 310 |

+

[shortcut]

|

| 311 |

+

from=-3

|

| 312 |

+

activation=linear

|

| 313 |

+

|

| 314 |

+

|

| 315 |

+

[convolutional]

|

| 316 |

+

batch_normalize=1

|

| 317 |

+

filters=256

|

| 318 |

+

size=1

|

| 319 |

+

stride=1

|

| 320 |

+

pad=1

|

| 321 |

+

activation=leaky

|

| 322 |

+

|

| 323 |

+

[convolutional]

|

| 324 |

+

batch_normalize=1

|

| 325 |

+

filters=512

|

| 326 |

+

size=3

|

| 327 |

+

stride=1

|

| 328 |

+

pad=1

|

| 329 |

+

activation=leaky

|

| 330 |

+

|

| 331 |

+

[shortcut]

|

| 332 |

+

from=-3

|

| 333 |

+

activation=linear

|

| 334 |

+

|

| 335 |

+

|

| 336 |

+

[convolutional]

|

| 337 |

+

batch_normalize=1

|

| 338 |

+

filters=256

|

| 339 |

+

size=1

|

| 340 |

+

stride=1

|

| 341 |

+

pad=1

|

| 342 |

+

activation=leaky

|

| 343 |

+

|

| 344 |

+

[convolutional]

|

| 345 |

+

batch_normalize=1

|

| 346 |

+

filters=512

|

| 347 |

+

size=3

|

| 348 |

+

stride=1

|

| 349 |

+

pad=1

|

| 350 |

+

activation=leaky

|

| 351 |

+

|

| 352 |

+

[shortcut]

|

| 353 |

+

from=-3

|

| 354 |

+

activation=linear

|

| 355 |

+

|

| 356 |

+

|

| 357 |

+

[convolutional]

|

| 358 |

+

batch_normalize=1

|

| 359 |

+

filters=256

|

| 360 |

+

size=1

|

| 361 |

+

stride=1

|

| 362 |

+

pad=1

|

| 363 |

+

activation=leaky

|

| 364 |

+

|

| 365 |

+

[convolutional]

|

| 366 |

+

batch_normalize=1

|

| 367 |

+

filters=512

|

| 368 |

+

size=3

|

| 369 |

+

stride=1

|

| 370 |

+

pad=1

|

| 371 |

+

activation=leaky

|

| 372 |

+

|

| 373 |

+

[shortcut]

|

| 374 |

+

from=-3

|

| 375 |

+

activation=linear

|

| 376 |

+

|

| 377 |

+

[convolutional]

|

| 378 |

+

batch_normalize=1

|

| 379 |

+

filters=256

|

| 380 |

+

size=1

|

| 381 |

+

stride=1

|

| 382 |

+

pad=1

|

| 383 |

+

activation=leaky

|

| 384 |

+

|

| 385 |

+

[convolutional]

|

| 386 |

+

batch_normalize=1

|

| 387 |

+

filters=512

|

| 388 |

+

size=3

|

| 389 |

+

stride=1

|

| 390 |

+

pad=1

|

| 391 |

+

activation=leaky

|

| 392 |

+

|

| 393 |

+

[shortcut]

|

| 394 |

+

from=-3

|

| 395 |

+

activation=linear

|

| 396 |

+

|

| 397 |

+

|

| 398 |

+

[convolutional]

|

| 399 |

+

batch_normalize=1

|

| 400 |

+

filters=256

|

| 401 |

+

size=1

|

| 402 |

+

stride=1

|

| 403 |

+

pad=1

|

| 404 |

+

activation=leaky

|

| 405 |

+

|

| 406 |

+

[convolutional]

|

| 407 |

+

batch_normalize=1

|

| 408 |

+

filters=512

|

| 409 |

+

size=3

|

| 410 |

+

stride=1

|

| 411 |

+

pad=1

|

| 412 |

+

activation=leaky

|

| 413 |

+

|

| 414 |

+

[shortcut]

|

| 415 |

+

from=-3

|

| 416 |

+

activation=linear

|

| 417 |

+

|

| 418 |

+

|

| 419 |

+

[convolutional]

|

| 420 |

+

batch_normalize=1

|

| 421 |

+

filters=256

|

| 422 |

+

size=1

|

| 423 |

+

stride=1

|

| 424 |

+

pad=1

|

| 425 |

+

activation=leaky

|

| 426 |

+

|

| 427 |

+

[convolutional]

|

| 428 |

+

batch_normalize=1

|

| 429 |

+

filters=512

|

| 430 |

+

size=3

|

| 431 |

+

stride=1

|

| 432 |

+

pad=1

|

| 433 |

+

activation=leaky

|

| 434 |

+

|

| 435 |

+

[shortcut]

|

| 436 |

+

from=-3

|

| 437 |

+

activation=linear

|

| 438 |

+

|

| 439 |

+

[convolutional]

|

| 440 |

+

batch_normalize=1

|

| 441 |

+

filters=256

|

| 442 |

+

size=1

|

| 443 |

+

stride=1

|

| 444 |

+

pad=1

|

| 445 |

+

activation=leaky

|

| 446 |

+

|

| 447 |

+

[convolutional]

|

| 448 |

+

batch_normalize=1

|

| 449 |

+

filters=512

|

| 450 |

+

size=3

|

| 451 |

+

stride=1

|

| 452 |

+

pad=1

|

| 453 |

+

activation=leaky

|

| 454 |

+

|

| 455 |

+

[shortcut]

|

| 456 |

+

from=-3

|

| 457 |

+

activation=linear

|

| 458 |

+

|

| 459 |

+

# Downsample

|

| 460 |

+

|

| 461 |

+

[convolutional]

|

| 462 |

+

batch_normalize=1

|

| 463 |

+

filters=1024

|

| 464 |

+

size=3

|

| 465 |

+

stride=2

|

| 466 |

+

pad=1

|

| 467 |

+

activation=leaky

|

| 468 |

+

|

| 469 |

+

[convolutional]

|

| 470 |

+

batch_normalize=1

|

| 471 |

+

filters=512

|

| 472 |

+

size=1

|

| 473 |

+

stride=1

|

| 474 |

+

pad=1

|

| 475 |

+

activation=leaky

|

| 476 |

+

|

| 477 |

+

[convolutional]

|

| 478 |

+

batch_normalize=1

|

| 479 |

+

filters=1024

|

| 480 |

+

size=3

|

| 481 |

+

stride=1

|

| 482 |

+

pad=1

|

| 483 |

+

activation=leaky

|

| 484 |

+

|

| 485 |

+

[shortcut]

|

| 486 |

+

from=-3

|

| 487 |

+

activation=linear

|

| 488 |

+

|

| 489 |

+

[convolutional]

|

| 490 |

+

batch_normalize=1

|

| 491 |

+

filters=512

|

| 492 |

+

size=1

|

| 493 |

+

stride=1

|

| 494 |

+

pad=1

|

| 495 |

+

activation=leaky

|

| 496 |

+

|

| 497 |

+

[convolutional]

|

| 498 |

+

batch_normalize=1

|

| 499 |

+

filters=1024

|

| 500 |

+

size=3

|

| 501 |

+

stride=1

|

| 502 |

+

pad=1

|

| 503 |

+

activation=leaky

|

| 504 |

+

|

| 505 |

+

[shortcut]

|

| 506 |

+

from=-3

|

| 507 |

+

activation=linear

|

| 508 |

+

|

| 509 |

+

[convolutional]

|

| 510 |

+

batch_normalize=1

|

| 511 |

+

filters=512

|

| 512 |

+

size=1

|

| 513 |

+

stride=1

|

| 514 |

+

pad=1

|

| 515 |

+

activation=leaky

|

| 516 |

+

|

| 517 |

+

[convolutional]

|

| 518 |

+

batch_normalize=1

|

| 519 |

+

filters=1024

|

| 520 |

+

size=3

|

| 521 |

+

stride=1

|

| 522 |

+

pad=1

|

| 523 |

+

activation=leaky

|

| 524 |

+

|

| 525 |

+

[shortcut]

|

| 526 |

+

from=-3

|

| 527 |

+

activation=linear

|

| 528 |

+

|

| 529 |

+

[convolutional]

|

| 530 |

+

batch_normalize=1

|

| 531 |

+

filters=512

|

| 532 |

+

size=1

|

| 533 |

+

stride=1

|

| 534 |

+

pad=1

|

| 535 |

+

activation=leaky

|

| 536 |

+

|

| 537 |

+

[convolutional]

|

| 538 |

+

batch_normalize=1

|

| 539 |

+

filters=1024

|

| 540 |

+

size=3

|

| 541 |

+

stride=1

|

| 542 |

+

pad=1

|

| 543 |

+

activation=leaky

|

| 544 |

+

|

| 545 |

+

[shortcut]

|

| 546 |

+

from=-3

|

| 547 |

+

activation=linear

|

| 548 |

+

|

| 549 |

+

######################

|

| 550 |

+

|

| 551 |

+

[convolutional]

|

| 552 |

+

batch_normalize=1

|

| 553 |

+

filters=512

|

| 554 |

+

size=1

|

| 555 |

+

stride=1

|

| 556 |

+

pad=1

|

| 557 |

+

activation=leaky

|

| 558 |

+

|

| 559 |

+

[convolutional]

|

| 560 |

+

batch_normalize=1

|

| 561 |

+

size=3

|

| 562 |

+

stride=1

|

| 563 |

+

pad=1

|

| 564 |

+

filters=1024

|

| 565 |

+

activation=leaky

|

| 566 |

+

|

| 567 |

+

[convolutional]

|

| 568 |

+

batch_normalize=1

|

| 569 |

+

filters=512

|

| 570 |

+

size=1

|

| 571 |

+

stride=1

|

| 572 |

+

pad=1

|

| 573 |

+

activation=leaky

|

| 574 |

+

|

| 575 |

+

[convolutional]

|

| 576 |

+

batch_normalize=1

|

| 577 |

+

size=3

|

| 578 |

+

stride=1

|

| 579 |

+

pad=1

|

| 580 |

+

filters=1024

|

| 581 |

+

activation=leaky

|

| 582 |

+

|

| 583 |

+

[convolutional]

|

| 584 |

+

batch_normalize=1

|

| 585 |

+

filters=512

|

| 586 |

+

size=1

|

| 587 |

+

stride=1

|

| 588 |

+

pad=1

|

| 589 |

+

activation=leaky

|

| 590 |

+

|

| 591 |

+

[convolutional]

|

| 592 |

+

batch_normalize=1

|

| 593 |

+

size=3

|

| 594 |

+

stride=1

|

| 595 |

+

pad=1

|

| 596 |

+

filters=1024

|

| 597 |

+

activation=leaky

|

| 598 |

+

|

| 599 |

+

[convolutional]

|

| 600 |

+

size=1

|

| 601 |

+

stride=1

|

| 602 |

+

pad=1

|

| 603 |

+

filters=255

|

| 604 |

+

activation=linear

|

| 605 |

+

|

| 606 |

+

|

| 607 |

+

[yolo]

|

| 608 |

+

mask = 6,7,8

|

| 609 |

+

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

| 610 |

+

classes=80

|

| 611 |

+

num=9

|

| 612 |

+

jitter=.3

|

| 613 |

+

ignore_thresh = .7

|

| 614 |

+

truth_thresh = 1

|

| 615 |

+

random=1

|

| 616 |

+

|

| 617 |

+

|

| 618 |

+

[route]

|

| 619 |

+

layers = -4

|

| 620 |

+

|

| 621 |

+

[convolutional]

|

| 622 |

+

batch_normalize=1

|

| 623 |

+

filters=256

|

| 624 |

+

size=1

|

| 625 |

+

stride=1

|

| 626 |

+

pad=1

|

| 627 |

+

activation=leaky

|

| 628 |

+

|

| 629 |

+

[upsample]

|

| 630 |

+

stride=2

|

| 631 |

+

|

| 632 |

+

[route]

|

| 633 |

+

layers = -1, 61

|

| 634 |

+

|

| 635 |

+

|

| 636 |

+

|

| 637 |

+

[convolutional]

|

| 638 |

+

batch_normalize=1

|

| 639 |

+

filters=256

|

| 640 |

+

size=1

|

| 641 |

+

stride=1

|

| 642 |

+

pad=1

|

| 643 |

+

activation=leaky

|

| 644 |

+

|

| 645 |

+

[convolutional]

|

| 646 |

+

batch_normalize=1

|

| 647 |

+

size=3

|

| 648 |

+

stride=1

|

| 649 |

+

pad=1

|

| 650 |

+

filters=512

|

| 651 |

+

activation=leaky

|

| 652 |

+

|

| 653 |

+

[convolutional]

|

| 654 |

+

batch_normalize=1

|

| 655 |

+

filters=256

|

| 656 |

+

size=1

|

| 657 |

+

stride=1

|

| 658 |

+

pad=1

|

| 659 |

+

activation=leaky

|

| 660 |

+

|

| 661 |

+

[convolutional]

|

| 662 |

+

batch_normalize=1

|

| 663 |

+

size=3

|

| 664 |

+

stride=1

|

| 665 |

+

pad=1

|

| 666 |

+

filters=512

|

| 667 |

+

activation=leaky

|

| 668 |

+

|

| 669 |

+

[convolutional]

|

| 670 |

+

batch_normalize=1

|

| 671 |

+

filters=256

|

| 672 |

+

size=1

|

| 673 |

+

stride=1

|

| 674 |

+

pad=1

|

| 675 |

+

activation=leaky

|

| 676 |

+

|

| 677 |

+

[convolutional]

|

| 678 |

+

batch_normalize=1

|

| 679 |

+

size=3

|

| 680 |

+

stride=1

|

| 681 |

+

pad=1

|

| 682 |

+

filters=512

|

| 683 |

+

activation=leaky

|

| 684 |

+

|

| 685 |

+

[convolutional]

|

| 686 |

+

size=1

|

| 687 |

+

stride=1

|

| 688 |

+

pad=1

|

| 689 |

+

filters=255

|

| 690 |

+

activation=linear

|

| 691 |

+

|

| 692 |

+

|

| 693 |

+

[yolo]

|

| 694 |

+

mask = 3,4,5

|

| 695 |

+

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

| 696 |

+

classes=80

|

| 697 |

+

num=9

|

| 698 |

+

jitter=.3

|

| 699 |

+

ignore_thresh = .7

|

| 700 |

+

truth_thresh = 1

|

| 701 |

+

random=1

[route]
layers = -4

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[upsample]
stride=2

[route]
layers = -1, 36



[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
size=1
stride=1
pad=1
filters=255
activation=linear


[yolo]
mask = 0,1,2
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
classes=80
num=9
jitter=.3
ignore_thresh = .7
truth_thresh = 1
random=1
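The `[route]` and `[upsample]` sections in this config connect the detection heads. In Darknet's convention, a negative `layers` value is relative to the route layer's own index, a non-negative value is an absolute layer index, and multiple entries are concatenated along the channel dimension. The sketch below illustrates that resolution. The function names, layer indices, and channel counts are hypothetical values chosen for the example and are not read from this repository's `darknet.py`.

```python
def resolve_route(layers, current_index):
    """Convert Darknet-style route entries into absolute layer indices."""
    return [current_index + i if i < 0 else i for i in layers]


def route_out_channels(layers, current_index, channels_by_layer):
    """Channels produced by a route: the sum over the concatenated sources."""
    sources = resolve_route(layers, current_index)
    return sum(channels_by_layer[i] for i in sources)


def upsample_shape(height, width, stride):
    """Spatial size after an [upsample] section with the given stride."""
    return height * stride, width * stride


# Hypothetical network: the route sits at index 10, layer 9 is an upsampled
# 128-channel map, and layer 4 is an earlier 256-channel feature map.
channels_by_layer = {4: 256, 9: 128}

print(resolve_route([-1, 4], current_index=10))
print(route_out_channels([-1, 4], 10, channels_by_layer))
print(upsample_shape(26, 26, stride=2))
```

A single-entry route such as `layers = -4` resolves the same way and simply forwards one earlier feature map, which is how the config steps back past a `[yolo]` head before upsampling.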

yolo/darknet.py

ADDED

@@ -0,0 +1,586 @@
