Space status: Runtime error

custom vision imports

Browse files- .gitignore +2 -1

- app.py +156 -17

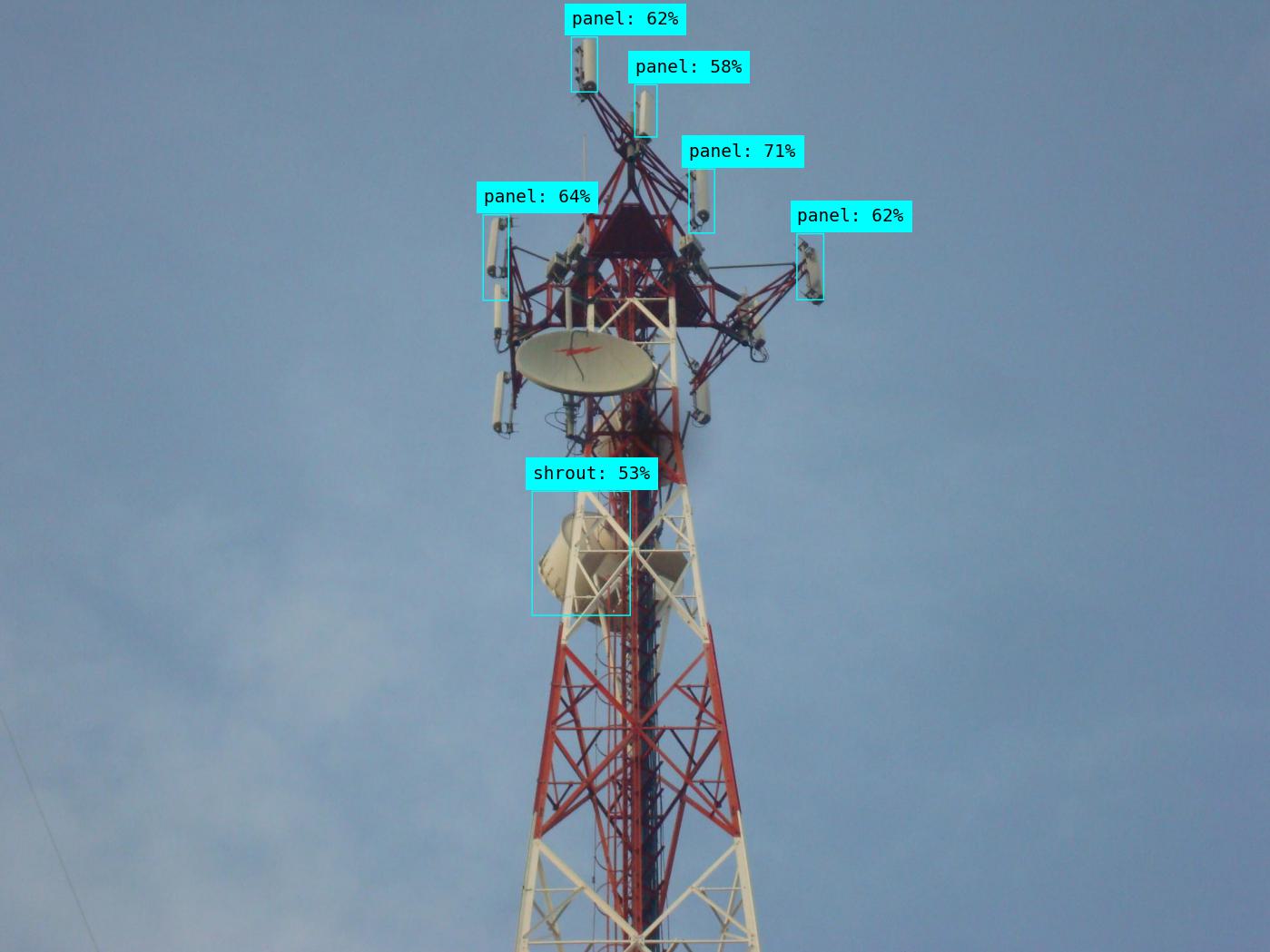

- input_object_detection.png +0 -0

- output.jpg +0 -0

- requirements.txt +0 -0

.gitignore

CHANGED

```diff
@@ -1,3 +1,4 @@
 .ipynb_checkpoints
 flagged
-telecom_object_detection.ipynb
+telecom_object_detection.ipynb
+.env
```
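The new `.gitignore` keeps `.env` out of the repository, and the new `app.py` calls `load_dotenv()` and reads four settings with `os.getenv`. A minimal sketch of such a `.env` file, assuming the `KEY=value` format that `python-dotenv` parses; every value in angle brackets is a hypothetical placeholder for your own Custom Vision prediction resource:

```text
PredictionEndpoint=https://<your-resource-name>.cognitiveservices.azure.com/
PredictionKey=<your-prediction-key>
ProjectID=<your-project-id>
ModelName=<your-published-iteration-name>
```

`ModelName` is passed to `detect_image` as the published iteration name. Because the file is ignored, a deployed Space never receives it; on Hugging Face Spaces the same names would presumably have to be set as repository secrets, which are exposed to the app as environment variables.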

app.py

CHANGED

Previous version (left side of the split diff):

```diff
@@ -1,13 +1,117 @@
 # AUTOGENERATED! DO NOT EDIT! File to edit: telecom_object_detection.ipynb.
 
 # %% auto 0
-__all__ = ['title', 'css', 'urls', '
 
 # %% telecom_object_detection.ipynb 2
 import gradio as gr
 from pathlib import Path
 
-# %% telecom_object_detection.ipynb
 title = """<h1 id="title">Telecom Object Detection with Azure Custom Vision</h1>"""
 
 css = '''
@@ -16,15 +120,49 @@ h1#title {
 }
 '''
 
-# %% telecom_object_detection.ipynb
-import numpy as np
-import gradio as gr
-
 urls = ["https://c8.alamy.com/comp/J2AB4K/the-new-york-stock-exchange-on-the-wall-street-in-new-york-J2AB4K.jpg"]
 
 def flip_text(): pass
 def flip_image(): pass
 
 with gr.Blocks(css=css) as demo:
 
     gr.Markdown(title)
@@ -32,18 +170,16 @@ with gr.Blocks(css=css) as demo:
     with gr.Tabs():
         with gr.TabItem("Image Upload"):
             with gr.Row():
-                image_input = gr.Image()
-                image_output = gr.Image()
             with gr.Row():
                 """example_images = gr.Dataset(components=[img_input],
                 samples=[[path.as_posix()] for path in sorted(Path('images').rglob('*.jpg'))]
                 )"""
-                example_images = gr.Examples(
-
-
-                )
-
-
             image_button = gr.Button("Detect")
 
         with gr.TabItem("Image URL"):
@@ -55,8 +191,11 @@ with gr.Blocks(css=css) as demo:
             example_url = gr.Dataset(components=[url_input], samples=[[str(url)] for url in urls])
             url_button = gr.Button("Detect")
 
-
-
-    image_button.click(
 
 demo.launch()
```

New version (right side of the split diff):

```diff
@@ -1,13 +1,117 @@
 # AUTOGENERATED! DO NOT EDIT! File to edit: telecom_object_detection.ipynb.
 
 # %% auto 0
+__all__ = ['prediction_endpoint', 'prediction_key', 'project_id', 'model_name', 'title', 'css', 'urls', 'imgs', 'img_samples',
+           'fig2img', 'custom_vision_detect_objects', 'flip_text', 'flip_image', 'set_example_url', 'set_example_image',
+           'detect_objects']
 
 # %% telecom_object_detection.ipynb 2
 import gradio as gr
+import numpy as np
+import os
+import io
+
+import requests
+
 from pathlib import Path
 
+# %% telecom_object_detection.ipynb 6
+from azure.cognitiveservices.vision.customvision.prediction import CustomVisionPredictionClient
+from msrest.authentication import ApiKeyCredentials
+from matplotlib import pyplot as plt
+from PIL import Image, ImageDraw, ImageFont
+from dotenv import load_dotenv
+
```

```diff
+# %% telecom_object_detection.ipynb 11
+def fig2img(fig):
+    buf = io.BytesIO()
+    fig.savefig(buf)
+    buf.seek(0)
+    img = Image.open(buf)
+    return img
+
+def custom_vision_detect_objects(image_file: Path):
+    dpi = 100
+
+    # Get Configuration Settings
+    load_dotenv()
+    prediction_endpoint = os.getenv('PredictionEndpoint')
+    prediction_key = os.getenv('PredictionKey')
+    project_id = os.getenv('ProjectID')
+    model_name = os.getenv('ModelName')
+
+    # Authenticate a client for the training API
+    credentials = ApiKeyCredentials(in_headers={"Prediction-key": prediction_key})
+    prediction_client = CustomVisionPredictionClient(endpoint=prediction_endpoint, credentials=credentials)
+
+    # Load image and get height, width and channels
+    #image_file = 'produce.jpg'
+    print('Detecting objects in', image_file)
+    image = Image.open(image_file)
+    h, w, ch = np.array(image).shape
+
+    # Detect objects in the test image
+    with open(image_file, mode="rb") as image_data:
+        results = prediction_client.detect_image(project_id, model_name, image_data)
+
+    # Create a figure for the results
+    fig = plt.figure(figsize=(w/dpi, h/dpi))
+    plt.axis('off')
+
+    # Display the image with boxes around each detected object
+    draw = ImageDraw.Draw(image)
+    lineWidth = int(w/800)
+    color = 'cyan'
+
+    for prediction in results.predictions:
+        # Only show objects with a > 50% probability
+        if (prediction.probability*100) > 50:
+            # Box coordinates and dimensions are proportional - convert to absolutes
+            left = prediction.bounding_box.left * w
+            top = prediction.bounding_box.top * h
+            height = prediction.bounding_box.height * h
+            width = prediction.bounding_box.width * w
+
+            # Draw the box
+            points = ((left,top), (left+width,top), (left+width,top+height), (left,top+height), (left,top))
+            draw.line(points, fill=color, width=lineWidth)
+
+            # Add the tag name and probability
+            #plt.annotate(prediction.tag_name + ": {0:.2f}%".format(prediction.probability * 100),(left,top), backgroundcolor=color)
+            plt.annotate(
+                prediction.tag_name + ": {0:.0f}%".format(prediction.probability * 100),
+                (left, top-1.372*h/dpi),
+                backgroundcolor=color,
+                fontsize=max(w/dpi, h/dpi),
+                fontfamily='monospace'
+            )
+
+    plt.imshow(image)
+    plt.tight_layout(pad=0)
+
+    return fig2img(fig)
+
+    outputfile = 'output.jpg'
+    fig.savefig(outputfile)
+    print('Resulabsts saved in ', outputfile)
+
```
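Custom Vision reports each bounding box as fractions of the image width and height, which is why `custom_vision_detect_objects` multiplies `left`/`width` by `w` and `top`/`height` by `h` before drawing a closed five-point polyline. That conversion and the 50% filter can be isolated and checked without Azure credentials. This is a sketch: `BoundingBox`, `Prediction` and `boxes_to_pixels` are hypothetical stand-ins that model only the attributes the function reads, not the SDK's own classes.

```python
from dataclasses import dataclass


# Hypothetical stand-ins for the objects in results.predictions; only the
# attributes read by custom_vision_detect_objects are modelled.
@dataclass
class BoundingBox:
    left: float    # all four values are fractions (0..1) of the image size
    top: float
    width: float
    height: float


@dataclass
class Prediction:
    tag_name: str
    probability: float  # 0..1
    bounding_box: BoundingBox


def boxes_to_pixels(predictions, w, h, threshold=0.5):
    """Return (label, points) pairs for predictions above `threshold`.

    `points` is a closed polyline in pixel coordinates, suitable for
    PIL's ImageDraw.line(points, ...).
    """
    boxes = []
    for p in predictions:
        # Mirrors the committed `(prediction.probability*100) > 50` check.
        if p.probability <= threshold:
            continue
        bb = p.bounding_box
        left, top = bb.left * w, bb.top * h
        width, height = bb.width * w, bb.height * h
        # Four corners plus the first corner again, so the outline closes.
        points = ((left, top), (left + width, top), (left + width, top + height),
                  (left, top + height), (left, top))
        label = f"{p.tag_name}: {p.probability * 100:.0f}%"
        boxes.append((label, points))
    return boxes


preds = [
    Prediction("antenna", 0.75, BoundingBox(0.25, 0.5, 0.125, 0.25)),
    Prediction("cable", 0.30, BoundingBox(0.0, 0.0, 1.0, 1.0)),  # below threshold
]
print(boxes_to_pixels(preds, w=800, h=600))
```

Two behaviours of the committed function are worth knowing: the three statements after `return fig2img(fig)` never run, so this code path does not write `output.jpg`; and `h, w, ch = np.array(image).shape` raises `ValueError` for single-channel (mode `L`) images, whereas `w, h = image.size` works for any PIL mode.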

```diff
+# %% telecom_object_detection.ipynb 13
+load_dotenv()
+prediction_endpoint = os.getenv('PredictionEndpoint')
+prediction_key = os.getenv('PredictionKey')
+project_id = os.getenv('ProjectID')
+model_name = os.getenv('ModelName')
+print(prediction_endpoint)
+print(prediction_key)
+print(project_id)
+print(model_name)
+#print('/'*10)
+#print(credentials)
+#print(prediction_client)
+#print('/'*10)
+#print(h, w, ch)
+
```
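Cell 13 repeats the settings lookup at module level and prints all four values, including `PredictionKey`, to standard output. Because `os.getenv` returns `None` for a missing key, a misconfigured deployment only surfaces later, when the client is built or called. A minimal fail-fast sketch, assuming the same four variable names; `REQUIRED_SETTINGS` and `load_settings` are hypothetical names, and the function would be called right after `load_dotenv()`:

```python
import os

# Variable names read by app.py via os.getenv().
REQUIRED_SETTINGS = ("PredictionEndpoint", "PredictionKey", "ProjectID", "ModelName")


def load_settings(env=None):
    """Return the required settings as a dict, raising if any are unset or empty.

    `env` defaults to os.environ; a plain dict can be passed in tests.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        # Report only the names, never the values, so secrets stay out of logs.
        raise RuntimeError("Missing settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_SETTINGS}
```

In `app.py` this could replace both the module-level block and the copy inside `custom_vision_detect_objects`, e.g. `settings = load_settings()` followed by `settings["PredictionKey"]` where the key is needed.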

```diff
+# %% telecom_object_detection.ipynb 15
 title = """<h1 id="title">Telecom Object Detection with Azure Custom Vision</h1>"""
 
 css = '''
@@ -16,15 +120,49 @@ h1#title {
 }
 '''
 
+# %% telecom_object_detection.ipynb 16
 urls = ["https://c8.alamy.com/comp/J2AB4K/the-new-york-stock-exchange-on-the-wall-street-in-new-york-J2AB4K.jpg"]
+imgs = [path.as_posix() for path in sorted(Path('images').rglob('*.jpg'))]
+img_samples = [[path.as_posix()] for path in sorted(Path('images').rglob('*.jpg'))]
 
```
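`imgs` and `img_samples` scan `images/` twice and differ only in nesting: `gr.Dataset` takes `samples` as a list of rows with one value per component, so a single-component dataset needs `[[path], [path], ...]`, while `imgs` is the flat list passed to the commented-out `gr.Examples(examples=imgs, ...)` call. A small sketch that scans once and returns both shapes; `image_samples` is a hypothetical helper, not part of the commit:

```python
from pathlib import Path


def image_samples(folder="images", pattern="*.jpg"):
    """Scan `folder` recursively once and return (flat_paths, dataset_samples)."""
    paths = [p.as_posix() for p in sorted(Path(folder).rglob(pattern))]
    # One inner list per sample, with one entry per Dataset component.
    samples = [[p] for p in paths]
    return paths, samples
```

In `app.py` this would replace the two list comprehensions with `imgs, img_samples = image_samples()`.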

```diff
+# %% telecom_object_detection.ipynb 17
 def flip_text(): pass
 def flip_image(): pass
+def set_example_url(example: list) -> dict:
+    return gr.Textbox.update(value=example[0])
+
+def set_example_image(example: list) -> dict:
+    #print(example)
+    #print(gr.Image.update(value=example[0]))
+    return gr.Image.update(value=example[0])
+
+#def detect_objects(url_input, image_input):
+
+def detect_objects(image_input:Image):
+    #if validators.url(url_input):
+    # image = Image.open(requests.get(url_input, stream=True).raw)
+    #elif image_input:
+    # image = image_input
+    print(image_input)
+    print(image_input.size)
+    w, h = image_input.size
+
+    if max(w, h) > 1_200:
+        #factor = int(max(w, h) / 1_200)
+        #image_input = image_input.reduce(factor)
+        factor = 1_200 / max(w, h)
+        size = (int(w*factor), int(h*factor))
+        image_input = image_input.resize(size, resample=Image.Resampling.BILINEAR)
+
+    resized_image_path = "input_object_detection.png"
+    print(image_input.save(resized_image_path))
+
+    #return fig2img(fig)
+    return image_input
+    #return custom_vision_detect_objects(Path(filename[0]))
+    #return custom_vision_detect_objects(resized_image_path))
 
```
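In this commit `detect_objects` only downsizes the upload so its longer side is at most 1,200 px, saves it as `input_object_detection.png` and returns it; the calls to `custom_vision_detect_objects` are still commented out (and the last one has an unbalanced `)`). Also, `print(image_input.save(...))` always prints `None`, because `Image.save` returns nothing. The size arithmetic can be pulled out into a pure function and tested on its own. A sketch; `fit_within` is a hypothetical name, and `max_side` defaults to the commit's 1,200:

```python
def fit_within(w, h, max_side=1_200):
    """Return (new_w, new_h) so that max(new_w, new_h) <= max_side.

    Same arithmetic as detect_objects: images already small enough are
    returned unchanged; larger ones are scaled by max_side / longer side,
    preserving the aspect ratio, then truncated to whole pixels.
    """
    longer = max(w, h)
    if longer <= max_side:
        return w, h
    factor = max_side / longer
    return int(w * factor), int(h * factor)
```

Inside `detect_objects` this would read `image_input = image_input.resize(fit_within(*image_input.size), resample=Image.Resampling.BILINEAR)`. Pillow's `Image.thumbnail((1200, 1200))` performs a similar aspect-preserving downscale in place, though its rounding may differ from `int()` truncation by a pixel.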

```diff
+# %% telecom_object_detection.ipynb 18
 with gr.Blocks(css=css) as demo:
 
     gr.Markdown(title)
@@ -32,18 +170,16 @@ with gr.Blocks(css=css) as demo:
     with gr.Tabs():
         with gr.TabItem("Image Upload"):
             with gr.Row():
+                image_input = gr.Image(type='pil')
+                image_output = gr.Image(shape=(650,650))
+
             with gr.Row():
                 """example_images = gr.Dataset(components=[img_input],
                 samples=[[path.as_posix()] for path in sorted(Path('images').rglob('*.jpg'))]
                 )"""
+                #example_images = gr.Examples(examples=imgs, inputs=image_input)
+                example_images = gr.Dataset(components=[image_input], samples=img_samples)
+
             image_button = gr.Button("Detect")
 
         with gr.TabItem("Image URL"):
@@ -55,8 +191,11 @@ with gr.Blocks(css=css) as demo:
             example_url = gr.Dataset(components=[url_input], samples=[[str(url)] for url in urls])
             url_button = gr.Button("Detect")
 
+    url_button.click(detect_objects, inputs=[url_input], outputs=img_output_from_url)
+    image_button.click(detect_objects, inputs=[image_input], outputs=image_output)
+    #image_button.click(detect_objects, inputs=[example_images], outputs=image_output)
+
+    example_url.click(fn=set_example_url, inputs=[example_url], outputs=[url_input])
+    example_images.click(fn=set_example_image, inputs=[example_images], outputs=[image_input])
 
 demo.launch()
```
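The URL tab reuses `detect_objects`, but that function reads `image_input.size`, so it expects a PIL image. `url_input` is defined in lines this page does not show; since `set_example_url` updates it with `gr.Textbox.update`, it is presumably a text box, in which case the handler would receive a string and fail. (`img_output_from_url` is likewise not defined in the visible lines.) The commented-out branch in `detect_objects` hints at the intended fix: check for a URL with `validators.url`, then download it with `requests`. A sketch of that branch, assuming the standard-library `urllib.parse` as a swapped-in replacement for the third-party `validators` check; both function names are hypothetical:

```python
from urllib.parse import urlparse


def looks_like_url(text):
    """True for absolute http(s) URLs (a stdlib stand-in for validators.url)."""
    if not isinstance(text, str):
        return False
    parts = urlparse(text.strip())
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def image_from_url(url, timeout=10):
    """Download `url` and return it as an RGB PIL image."""
    # Imported lazily so looks_like_url() works without these packages.
    import io

    import requests
    from PIL import Image

    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # surface HTTP errors instead of decoding an error page
    return Image.open(io.BytesIO(response.content)).convert("RGB")
```

A URL-tab handler could then call `detect_objects(image_from_url(url))` when `looks_like_url(url)` is true, keeping `detect_objects` itself image-only.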

input_object_detection.png

ADDED

output.jpg

ADDED

requirements.txt

ADDED

File without changes
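The commit adds `requirements.txt` as an empty file, yet the new `app.py` imports several third-party packages, so on a fresh Space those imports would fail unless the packages are installed some other way. That is one plausible contributor to the "Runtime error" status above, though the page does not include the build log. A sketch of the file, assuming unpinned versions except where the code needs one; the names are the PyPI distributions that provide the imported modules:

```text
numpy
requests
matplotlib
Pillow>=9.1                 # Image.Resampling was added in Pillow 9.1
python-dotenv               # provides the dotenv module
msrest
azure-cognitiveservices-vision-customvision
```

`gradio` is left out on the assumption that the Space's Gradio SDK installs it. The code relies on Gradio 3.x APIs (`gr.Image(shape=...)`, `gr.Textbox.update`, `gr.Image.update`) that Gradio 4.0 removed, so the `sdk_version` in the Space's `README.md` would need to be a 3.x release.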