Commit 6e32a75

Parent(s): c168557

Upload 55 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +3 -0

- LICENSE +201 -0

- PromptNet.py +114 -0

- app.py +121 -0

- ckpts/few-shot.pth +3 -0

- data/annotation.json +3 -0

- decoder_config/decoder_config.pkl +3 -0

- example_figs/example_fig1.jpg.png +0 -0

- example_figs/example_fig2.jpg.jpg +0 -0

- example_figs/example_fig3.jpg.png +0 -0

- inference.py +110 -0

- models/models.py +125 -0

- models/r2gen.py +63 -0

- modules/att_model.py +319 -0

- modules/att_models.py +120 -0

- modules/caption_model.py +401 -0

- modules/config.pkl +3 -0

- modules/dataloader.py +59 -0

- modules/dataloaders.py +62 -0

- modules/dataset.py +68 -0

- modules/datasets.py +57 -0

- modules/decoder.py +50 -0

- modules/encoder_decoder.py +391 -0

- modules/loss.py +22 -0

- modules/metrics.py +33 -0

- modules/optimizers.py +18 -0

- modules/tester.py +144 -0

- modules/tokenizers.py +95 -0

- modules/trainer.py +255 -0

- modules/utils.py +55 -0

- modules/visual_extractor.py +53 -0

- prompt/prompt.pth +3 -0

- pycocoevalcap/README.md +23 -0

- pycocoevalcap/__init__.py +1 -0

- pycocoevalcap/bleu/LICENSE +19 -0

- pycocoevalcap/bleu/__init__.py +1 -0

- pycocoevalcap/bleu/bleu.py +57 -0

- pycocoevalcap/bleu/bleu_scorer.py +268 -0

- pycocoevalcap/cider/__init__.py +1 -0

- pycocoevalcap/cider/cider.py +55 -0

- pycocoevalcap/cider/cider_scorer.py +197 -0

- pycocoevalcap/eval.py +74 -0

- pycocoevalcap/license.txt +26 -0

- pycocoevalcap/meteor/__init__.py +1 -0

- pycocoevalcap/meteor/meteor-1.5.jar +3 -0

- pycocoevalcap/meteor/meteor.py +88 -0

- pycocoevalcap/rouge/__init__.py +1 -0

- pycocoevalcap/rouge/rouge.py +105 -0

- pycocoevalcap/tokenizer/__init__.py +1 -0

- pycocoevalcap/tokenizer/ptbtokenizer.py +76 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+data/annotation.json filter=lfs diff=lfs merge=lfs -text
+pycocoevalcap/meteor/meteor-1.5.jar filter=lfs diff=lfs merge=lfs -text
+pycocoevalcap/tokenizer/stanford-corenlp-3.4.1.jar filter=lfs diff=lfs merge=lfs -text
```
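The new patterns route the annotation file and the two Java jars through Git LFS. The `+3 -0` counts on large binaries in the file list (for example `ckpts/few-shot.pth`, `data/annotation.json`, `meteor-1.5.jar`) are consistent with that: the repository stores a three-line LFS pointer (`version`, `oid`, `size`) instead of the file content. A minimal sketch of reading such a pointer; the function name is hypothetical, and the sample hash and size are illustrative values, not taken from this commit.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file (one 'key value' pair per line) into its fields."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # Every pointer starts with a version line pointing at the LFS spec.
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Illustrative pointer; hash and size are placeholder values.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:4d7a214614ab2935c943f9e0ff69d22eadbb8f32b1258daaa5e2ca24d17e2393
size 12345
"""
print(parse_lfs_pointer(pointer))
```

If a checkout shows files like these instead of the real weights, the LFS objects were not fetched.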

LICENSE

ADDED

@@ -0,0 +1,201 @@

```text
                                 Apache License
                           Version 2.0, January 2004
                        http://www.apache.org/licenses/

   TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

   1. Definitions.

      "License" shall mean the terms and conditions for use, reproduction,
      and distribution as defined by Sections 1 through 9 of this document.

      "Licensor" shall mean the copyright owner or entity authorized by
      the copyright owner that is granting the License.

      "Legal Entity" shall mean the union of the acting entity and all
      other entities that control, are controlled by, or are under common
      control with that entity. For the purposes of this definition,
      "control" means (i) the power, direct or indirect, to cause the
      direction or management of such entity, whether by contract or
      otherwise, or (ii) ownership of fifty percent (50%) or more of the
      outstanding shares, or (iii) beneficial ownership of such entity.

      "You" (or "Your") shall mean an individual or Legal Entity
      exercising permissions granted by this License.

      "Source" form shall mean the preferred form for making modifications,
      including but not limited to software source code, documentation
      source, and configuration files.

      "Object" form shall mean any form resulting from mechanical
      transformation or translation of a Source form, including but
      not limited to compiled object code, generated documentation,
      and conversions to other media types.

      "Work" shall mean the work of authorship, whether in Source or
      Object form, made available under the License, as indicated by a
      copyright notice that is included in or attached to the work
      (an example is provided in the Appendix below).

      "Derivative Works" shall mean any work, whether in Source or Object
      form, that is based on (or derived from) the Work and for which the
      editorial revisions, annotations, elaborations, or other modifications
      represent, as a whole, an original work of authorship. For the purposes
      of this License, Derivative Works shall not include works that remain
      separable from, or merely link (or bind by name) to the interfaces of,
      the Work and Derivative Works thereof.

      "Contribution" shall mean any work of authorship, including
      the original version of the Work and any modifications or additions
      to that Work or Derivative Works thereof, that is intentionally
      submitted to Licensor for inclusion in the Work by the copyright owner
      or by an individual or Legal Entity authorized to submit on behalf of
      the copyright owner. For the purposes of this definition, "submitted"
      means any form of electronic, verbal, or written communication sent
      to the Licensor or its representatives, including but not limited to
      communication on electronic mailing lists, source code control systems,
      and issue tracking systems that are managed by, or on behalf of, the
      Licensor for the purpose of discussing and improving the Work, but
      excluding communication that is conspicuously marked or otherwise
      designated in writing by the copyright owner as "Not a Contribution."

      "Contributor" shall mean Licensor and any individual or Legal Entity
      on behalf of whom a Contribution has been received by Licensor and
      subsequently incorporated within the Work.

   2. Grant of Copyright License. Subject to the terms and conditions of
      this License, each Contributor hereby grants to You a perpetual,
      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
      copyright license to reproduce, prepare Derivative Works of,
      publicly display, publicly perform, sublicense, and distribute the
      Work and such Derivative Works in Source or Object form.

   3. Grant of Patent License. Subject to the terms and conditions of
      this License, each Contributor hereby grants to You a perpetual,
      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
      (except as stated in this section) patent license to make, have made,
      use, offer to sell, sell, import, and otherwise transfer the Work,
      where such license applies only to those patent claims licensable
      by such Contributor that are necessarily infringed by their
      Contribution(s) alone or by combination of their Contribution(s)
      with the Work to which such Contribution(s) was submitted. If You
      institute patent litigation against any entity (including a
      cross-claim or counterclaim in a lawsuit) alleging that the Work
      or a Contribution incorporated within the Work constitutes direct
      or contributory patent infringement, then any patent licenses
      granted to You under this License for that Work shall terminate
      as of the date such litigation is filed.

   4. Redistribution. You may reproduce and distribute copies of the
      Work or Derivative Works thereof in any medium, with or without
      modifications, and in Source or Object form, provided that You
      meet the following conditions:

      (a) You must give any other recipients of the Work or
          Derivative Works a copy of this License; and

      (b) You must cause any modified files to carry prominent notices
          stating that You changed the files; and

      (c) You must retain, in the Source form of any Derivative Works
          that You distribute, all copyright, patent, trademark, and
          attribution notices from the Source form of the Work,
          excluding those notices that do not pertain to any part of
          the Derivative Works; and

      (d) If the Work includes a "NOTICE" text file as part of its
          distribution, then any Derivative Works that You distribute must
          include a readable copy of the attribution notices contained
          within such NOTICE file, excluding those notices that do not
          pertain to any part of the Derivative Works, in at least one
          of the following places: within a NOTICE text file distributed
          as part of the Derivative Works; within the Source form or
          documentation, if provided along with the Derivative Works; or,
          within a display generated by the Derivative Works, if and
          wherever such third-party notices normally appear. The contents
          of the NOTICE file are for informational purposes only and
          do not modify the License. You may add Your own attribution
          notices within Derivative Works that You distribute, alongside
          or as an addendum to the NOTICE text from the Work, provided
          that such additional attribution notices cannot be construed
          as modifying the License.

      You may add Your own copyright statement to Your modifications and
      may provide additional or different license terms and conditions
      for use, reproduction, or distribution of Your modifications, or
      for any such Derivative Works as a whole, provided Your use,
      reproduction, and distribution of the Work otherwise complies with
      the conditions stated in this License.

   5. Submission of Contributions. Unless You explicitly state otherwise,
      any Contribution intentionally submitted for inclusion in the Work
      by You to the Licensor shall be under the terms and conditions of
      this License, without any additional terms or conditions.
      Notwithstanding the above, nothing herein shall supersede or modify
      the terms of any separate license agreement you may have executed
      with Licensor regarding such Contributions.

   6. Trademarks. This License does not grant permission to use the trade
      names, trademarks, service marks, or product names of the Licensor,
      except as required for reasonable and customary use in describing the
      origin of the Work and reproducing the content of the NOTICE file.

   7. Disclaimer of Warranty. Unless required by applicable law or
      agreed to in writing, Licensor provides the Work (and each
      Contributor provides its Contributions) on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
      implied, including, without limitation, any warranties or conditions
      of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
      PARTICULAR PURPOSE. You are solely responsible for determining the
      appropriateness of using or redistributing the Work and assume any
      risks associated with Your exercise of permissions under this License.

   8. Limitation of Liability. In no event and under no legal theory,
      whether in tort (including negligence), contract, or otherwise,
      unless required by applicable law (such as deliberate and grossly
      negligent acts) or agreed to in writing, shall any Contributor be
      liable to You for damages, including any direct, indirect, special,
      incidental, or consequential damages of any character arising as a
      result of this License or out of the use or inability to use the
      Work (including but not limited to damages for loss of goodwill,
      work stoppage, computer failure or malfunction, or any and all
      other commercial damages or losses), even if such Contributor
      has been advised of the possibility of such damages.

   9. Accepting Warranty or Additional Liability. While redistributing
      the Work or Derivative Works thereof, You may choose to offer,
      and charge a fee for, acceptance of support, warranty, indemnity,
      or other liability obligations and/or rights consistent with this
      License. However, in accepting such obligations, You may act only
      on Your own behalf and on Your sole responsibility, not on behalf
      of any other Contributor, and only if You agree to indemnify,
      defend, and hold each Contributor harmless for any liability
      incurred by, or claims asserted against, such Contributor by reason
      of your accepting any such warranty or additional liability.

   END OF TERMS AND CONDITIONS

   APPENDIX: How to apply the Apache License to your work.

      To apply the Apache License to your work, attach the following
      boilerplate notice, with the fields enclosed by brackets "[]"
      replaced with your own identifying information. (Don't include
      the brackets!)  The text should be enclosed in the appropriate
      comment syntax for the file format. We also recommend that a
      file or class name and description of purpose be included on the
      same "printed page" as the copyright notice for easier
      identification within third-party archives.

   Copyright [yyyy] [name of copyright owner]

   Licensed under the Apache License, Version 2.0 (the "License");
   you may not use this file except in compliance with the License.
   You may obtain a copy of the License at

       http://www.apache.org/licenses/LICENSE-2.0

   Unless required by applicable law or agreed to in writing, software
   distributed under the License is distributed on an "AS IS" BASIS,
   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
   See the License for the specific language governing permissions and
   limitations under the License.
```
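The appendix asks for the boilerplate notice to be filled in and wrapped in the target file's comment syntax. A minimal sketch for Python sources, which use `#` comments; the helper name, its parameters, and the sample owner string are hypothetical.

```python
NOTICE = """Copyright {year} {owner}

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License."""


def apache_header(year: int, owner: str, comment: str = "#") -> str:
    """Fill the [yyyy] and [name of copyright owner] fields, then comment out every line."""
    lines = NOTICE.format(year=year, owner=owner).splitlines()
    # Blank lines get only the comment marker, so no trailing whitespace is emitted.
    return "\n".join(f"{comment} {line}" if line else comment for line in lines)


print(apache_header(2024, "Example Author"))
```

Passing `comment="//"` produces the same notice for C-family sources.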

PromptNet.py

ADDED

@@ -0,0 +1,114 @@

```python
import torch
import argparse
from modules.dataloader import R2DataLoader
from modules.tokenizers import Tokenizer
from modules.loss import compute_loss
from modules.metrics import compute_scores
from modules.optimizers import build_optimizer, build_lr_scheduler
from models.models import MedCapModel
from modules.trainer import Trainer
import numpy as np

def main():
    parser = argparse.ArgumentParser()

    # Data input settings
    parser.add_argument('--json_path', default='data/mimic_cxr/annotation.json',
                        help='Path to the json file')
    parser.add_argument('--image_dir', default='data/mimic_cxr/images/',
                        help='Directory of images')

    # Dataloader settings
    parser.add_argument('--dataset', default='mimic_cxr', help='dataset for training MedCap')
    parser.add_argument('--bs', type=int, default=16)
    parser.add_argument('--threshold', type=int, default=10, help='the cut off frequency for the words.')
    parser.add_argument('--num_workers', type=int, default=2, help='the number of workers for dataloader.')
    parser.add_argument('--max_seq_length', type=int, default=1024, help='the maximum sequence length of the reports.')

    # Trainer settings
    parser.add_argument('--epochs', type=int, default=30)
    parser.add_argument('--n_gpu', type=int, default=1, help='the number of gpus to be used.')
    parser.add_argument('--save_dir', type=str, default='results/mimic_cxr/', help='the path to save the models.')
    parser.add_argument('--record_dir', type=str, default='./record_dir/',
                        help='the path to save the results of experiments.')
    parser.add_argument('--log_period', type=int, default=1000, help='the logging interval (in batches).')
    parser.add_argument('--save_period', type=int, default=1)
    parser.add_argument('--monitor_mode', type=str, default='max', choices=['min', 'max'], help='whether to max or min the metric.')
    parser.add_argument('--monitor_metric', type=str, default='BLEU_4', help='the metric to be monitored.')
    parser.add_argument('--early_stop', type=int, default=50, help='the patience of training.')

    # Training related
    parser.add_argument('--noise_inject', default='no', choices=['yes', 'no'])

    # Sample related
    parser.add_argument('--sample_method', type=str, default='greedy', help='the sample methods to sample a report.')
    # Relative path to prompt/prompt.pth, the prompt file uploaded in this commit.
    parser.add_argument('--prompt', default='prompt/prompt.pth')
    parser.add_argument('--prompt_load', default='no', choices=['yes', 'no'])

    # Optimization
    parser.add_argument('--optim', type=str, default='Adam', help='the type of the optimizer.')
    parser.add_argument('--lr_ve', type=float, default=1e-5, help='the learning rate for the visual extractor.')
    parser.add_argument('--lr_ed', type=float, default=5e-4, help='the learning rate for the remaining parameters.')
    parser.add_argument('--weight_decay', type=float, default=5e-5, help='the weight decay.')
    # nargs=2 parses two floats; type=tuple would split the string into single characters.
    parser.add_argument('--adam_betas', type=float, nargs=2, default=(0.9, 0.98), help='the betas of Adam.')
    parser.add_argument('--adam_eps', type=float, default=1e-9, help='the epsilon of Adam.')
    # Note: type=bool turns any non-empty string, including 'False', into True.
    parser.add_argument('--amsgrad', type=bool, default=True, help='whether to use the AMSGrad variant of Adam.')
    parser.add_argument('--noamopt_warmup', type=int, default=5000, help='the warmup steps of the Noam schedule.')
    parser.add_argument('--noamopt_factor', type=int, default=1, help='the scale factor of the Noam schedule.')

    # Learning rate scheduler
    parser.add_argument('--lr_scheduler', type=str, default='StepLR', help='the type of the learning rate scheduler.')
    parser.add_argument('--step_size', type=int, default=50, help='the step size of the learning rate scheduler.')
    parser.add_argument('--gamma', type=float, default=0.1, help='the gamma of the learning rate scheduler.')

    # Others
    parser.add_argument('--seed', type=int, default=9153, help='the random seed.')
    parser.add_argument('--resume', type=str, help='the path of a checkpoint to resume training from.')
    parser.add_argument('--train_mode', default='base', choices=['base', 'fine-tuning'],
                        help='Training mode: base (autoencoding) or fine-tuning (full supervised training or fine-tuning on downstream datasets)')
    parser.add_argument('--F_version', default='v1', choices=['v1', 'v2'])
    parser.add_argument('--clip_update', default='no', choices=['yes', 'no'])

    # Fine-tuning
    parser.add_argument('--random_init', default='yes', choices=['yes', 'no'],
                        help="'no' loads the pre-trained weights from --weight_path for fine-tuning; 'yes' keeps the random initialization.")
    parser.add_argument('--weight_path', default='path_to_default_weights', type=str,
                        help='Path to the pre-trained model weights.')
    args = parser.parse_args()

    # fix random seeds
    torch.manual_seed(args.seed)
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
    np.random.seed(args.seed)

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # create tokenizer
    tokenizer = Tokenizer(args)

    # create data loader
    train_dataloader = R2DataLoader(args, tokenizer, split='train', shuffle=True)
    val_dataloader = R2DataLoader(args, tokenizer, split='val', shuffle=False)
    test_dataloader = R2DataLoader(args, tokenizer, split='test', shuffle=False)

    # get function handles of loss and metrics
    criterion = compute_loss
    metrics = compute_scores
    model = MedCapModel(args, tokenizer)

    if args.train_mode == 'fine-tuning' and args.random_init == 'no':
        # Load weights from the specified path; map_location lets a GPU checkpoint load on a CPU-only machine
        checkpoint = torch.load(args.weight_path, map_location=device)
        model.load_state_dict(checkpoint)

    # build optimizer, learning rate scheduler
    optimizer = build_optimizer(args, model)
    lr_scheduler = build_lr_scheduler(args, optimizer)

    # build trainer and start to train
    trainer = Trainer(model, criterion, metrics, optimizer, args, lr_scheduler, train_dataloader, val_dataloader, test_dataloader)
    trainer.train()

if __name__ == '__main__':
    main()
```
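argparse applies the `type=` callable to the raw command-line string, so `type=bool` (used for `--amsgrad`) maps any non-empty string, including `False`, to `True`, and a `type=tuple` conversion would split a value such as `0.9,0.98` into single characters. A minimal sketch of safer alternatives; `str2bool` and the `--amsgrad_naive` flag are hypothetical names added only for the comparison.

```python
import argparse


def str2bool(value: str) -> bool:
    """Interpret common yes/no spellings and reject anything else."""
    lowered = value.strip().lower()
    if lowered in {"yes", "true", "t", "y", "1"}:
        return True
    if lowered in {"no", "false", "f", "n", "0"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser()
    # Pitfall: type=bool calls bool("False"), which is True.
    parser.add_argument('--amsgrad_naive', type=bool, default=True)
    # Safer: parse the string explicitly.
    parser.add_argument('--amsgrad', type=str2bool, default=True)
    # Two floats instead of type=tuple.
    parser.add_argument('--adam_betas', type=float, nargs=2, default=(0.9, 0.98))
    return parser


args = build_parser().parse_args(['--amsgrad_naive', 'False', '--amsgrad', 'False',
                                  '--adam_betas', '0.9', '0.999'])
print(args.amsgrad_naive, args.amsgrad, args.adam_betas)  # True False [0.9, 0.999]
```

Note that a non-string default such as `(0.9, 0.98)` is passed through unchanged when the flag is omitted, while values given on the command line arrive as a list.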

app.py

ADDED

@@ -0,0 +1,121 @@

```python
import gradio as gr
import torch
from PIL import Image
from models.r2gen import R2GenModel
from modules.tokenizers import Tokenizer
import argparse

# Assuming you have a predefined configuration function for model args
def get_model_args():
    parser = argparse.ArgumentParser()

    # Model loader settings
    parser.add_argument('--load', type=str, default='ckpts/few-shot.pth', help='the path to the model weights.')
    parser.add_argument('--prompt', type=str, default='prompt/prompt.pth', help='the path to the prompt weights.')

    # Data input settings
    # The example figure uploaded in this commit is named example_fig1.jpg.png.
    parser.add_argument('--image_path', type=str, default='example_figs/example_fig1.jpg.png', help='the path to the test image.')
    parser.add_argument('--image_dir', type=str, default='data/images/', help='the path to the directory containing the data.')
    parser.add_argument('--ann_path', type=str, default='data/annotation.json', help='the path to the annotation file.')

    # Data loader settings
    parser.add_argument('--dataset_name', type=str, default='mimic_cxr', help='the dataset to be used.')
    parser.add_argument('--max_seq_length', type=int, default=60, help='the maximum sequence length of the reports.')
    parser.add_argument('--threshold', type=int, default=3, help='the cut off frequency for the words.')
    parser.add_argument('--num_workers', type=int, default=2, help='the number of workers for dataloader.')
    parser.add_argument('--batch_size', type=int, default=16, help='the number of samples for a batch')

    # Model settings (for visual extractor)
    parser.add_argument('--visual_extractor', type=str, default='resnet101', help='the visual extractor to be used.')
    parser.add_argument('--visual_extractor_pretrained', type=bool, default=True, help='whether to load the pretrained visual extractor')

    # Model settings (for Transformer)
    parser.add_argument('--d_model', type=int, default=512, help='the dimension of Transformer.')
    parser.add_argument('--d_ff', type=int, default=512, help='the dimension of FFN.')
    parser.add_argument('--d_vf', type=int, default=2048, help='the dimension of the patch features.')
    parser.add_argument('--num_heads', type=int, default=8, help='the number of heads in Transformer.')
    parser.add_argument('--num_layers', type=int, default=3, help='the number of layers of Transformer.')
    parser.add_argument('--dropout', type=float, default=0.1, help='the dropout rate of Transformer.')
    parser.add_argument('--logit_layers', type=int, default=1, help='the number of the logit layer.')
    parser.add_argument('--bos_idx', type=int, default=0, help='the index of <bos>.')
    parser.add_argument('--eos_idx', type=int, default=0, help='the index of <eos>.')
    parser.add_argument('--pad_idx', type=int, default=0, help='the index of <pad>.')
    parser.add_argument('--use_bn', type=int, default=0, help='whether to use batch normalization.')
    parser.add_argument('--drop_prob_lm', type=float, default=0.5, help='the dropout rate of the output layer.')
    # for Relational Memory
    parser.add_argument('--rm_num_slots', type=int, default=3, help='the number of memory slots.')
    parser.add_argument('--rm_num_heads', type=int, default=8, help='the number of heads in rm.')
    parser.add_argument('--rm_d_model', type=int, default=512, help='the dimension of rm.')

    # Sample related
    parser.add_argument('--sample_method', type=str, default='beam_search', help='the sample methods to sample a report.')
    parser.add_argument('--beam_size', type=int, default=3, help='the beam size when beam searching.')
    parser.add_argument('--temperature', type=float, default=1.0, help='the temperature when sampling.')
    parser.add_argument('--sample_n', type=int, default=1, help='the sample number per image.')
    parser.add_argument('--group_size', type=int, default=1, help='the group size.')
    parser.add_argument('--output_logsoftmax', type=int, default=1, help='whether to output the probabilities.')
    parser.add_argument('--decoding_constraint', type=int, default=0, help='whether to apply the decoding constraint.')
    parser.add_argument('--block_trigrams', type=int, default=1, help='whether to use block trigrams.')

    # Trainer settings
    parser.add_argument('--n_gpu', type=int, default=1, help='the number of gpus to be used.')
    parser.add_argument('--epochs', type=int, default=100, help='the number of training epochs.')
    parser.add_argument('--save_dir', type=str, default='results/iu_xray', help='the path to save the models.')
    parser.add_argument('--record_dir', type=str, default='records/', help='the path to save the results of experiments.')
    parser.add_argument('--save_period', type=int, default=1, help='the saving period.')
    parser.add_argument('--monitor_mode', type=str, default='max', choices=['min', 'max'], help='whether to max or min the metric.')
    parser.add_argument('--monitor_metric', type=str, default='BLEU_4', help='the metric to be monitored.')
    parser.add_argument('--early_stop', type=int, default=50, help='the patience of training.')

    # Optimization
    parser.add_argument('--optim', type=str, default='Adam', help='the type of the optimizer.')
    parser.add_argument('--lr_ve', type=float, default=5e-5, help='the learning rate for the visual extractor.')
    parser.add_argument('--lr_ed', type=float, default=1e-4, help='the learning rate for the remaining parameters.')
    parser.add_argument('--weight_decay', type=float, default=5e-5, help='the weight decay.')
    parser.add_argument('--amsgrad', type=bool, default=True, help='whether to use the AMSGrad variant of Adam.')

    # Learning Rate Scheduler
    parser.add_argument('--lr_scheduler', type=str, default='StepLR', help='the type of the learning rate scheduler.')
    parser.add_argument('--step_size', type=int, default=50, help='the step size of the learning rate scheduler.')
```

|

| 80 |

+

parser.add_argument('--gamma', type=float, default=0.1, help='the gamma of the learning rate scheduler.')

|

| 81 |

+

|

| 82 |

+

# Others

|

| 83 |

+

parser.add_argument('--seed', type=int, default=9233, help='.')

|

| 84 |

+

parser.add_argument('--resume', type=str, help='whether to resume the training from existing checkpoints.')

|

| 85 |

+

|

| 86 |

+

args = parser.parse_args()

|

| 87 |

+

return args

|

| 88 |

+

|

| 89 |

+
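The `--amsgrad` flag is declared with `type=bool`. argparse hands the raw command-line string to `bool()`, and every non-empty string is truthy, so `--amsgrad False` still yields `True`. A common workaround is a small converter function; the helper name `str2bool` below is hypothetical and not part of this repository.

```python
import argparse


def str2bool(value):
    """Convert common textual spellings of a boolean into a real bool.

    Hypothetical helper: argparse calls it with the raw command-line string.
    """
    if isinstance(value, bool):
        return value
    lowered = value.strip().lower()
    if lowered in ('yes', 'true', 't', 'y', '1'):
        return True
    if lowered in ('no', 'false', 'f', 'n', '0'):
        return False
    raise argparse.ArgumentTypeError(f'expected a boolean value, got {value!r}')


parser = argparse.ArgumentParser()
parser.add_argument('--amsgrad', type=str2bool, default=True,
                    help='whether to use the AMSGrad variant of Adam.')

print(parser.parse_args(['--amsgrad', 'False']).amsgrad)  # False
print(parser.parse_args([]).amsgrad)  # True
```

Because argparse only applies `type` to string defaults, the non-string default `True` is kept as-is when the flag is omitted.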

```python
def load_model():
    args = get_model_args()
    tokenizer = Tokenizer(args)
    device = 'cuda' if torch.cuda.is_available() else 'cpu'  # Determine the device dynamically
    model = R2GenModel(args, tokenizer).to(device)
    checkpoint_path = args.load
    # Ensure the state dict is loaded onto the same device as the model
    state_dict = torch.load(checkpoint_path, map_location=device)
    # Accept either a training checkpoint ({'state_dict': ...}) or a bare state dict
    model_state_dict = state_dict['state_dict'] if 'state_dict' in state_dict else state_dict
    model.load_state_dict(model_state_dict)
    model.eval()
    return model, tokenizer


# Load the model once at import time so every request reuses it
model, tokenizer = load_model()
```
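`load_model()` accepts either a checkpoint dictionary that stores the weights under a `'state_dict'` key or a bare state dict. The same unwrapping can be written as a small pure function, shown here with plain numbers standing in for tensors. The extra step that strips a `module.` prefix (which `torch.nn.DataParallel` adds to parameter names when a wrapped model is saved) is an assumption about how some checkpoints might be produced, not something `app.py` does; the function name `extract_state_dict` is hypothetical.

```python
from collections import OrderedDict


def extract_state_dict(checkpoint, strip_prefix='module.'):
    """Return the model weights from a loaded checkpoint object.

    Mirrors the `'state_dict' in state_dict` check in load_model(); the
    prefix stripping is an extra, hypothetical step for checkpoints saved
    from a torch.nn.DataParallel wrapper. Pass strip_prefix=None to disable it.
    """
    weights = checkpoint['state_dict'] if 'state_dict' in checkpoint else checkpoint
    cleaned = OrderedDict()
    for key, value in weights.items():
        if strip_prefix and key.startswith(strip_prefix):
            key = key[len(strip_prefix):]
        cleaned[key] = value
    return cleaned


# Plain numbers stand in for tensors in this illustration.
wrapped = {'epoch': 12, 'state_dict': {'module.encoder.weight': 1.0}}
bare = {'encoder.weight': 2.0}
print(dict(extract_state_dict(wrapped)))  # {'encoder.weight': 1.0}
print(dict(extract_state_dict(bare)))     # {'encoder.weight': 2.0}
```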

```python
def generate_report(image):
    # Gradio's Image component passes the upload as a NumPy array by default
    image = Image.fromarray(image).convert('RGB')
    with torch.no_grad():
        output = model([image], mode='sample')
        reports = tokenizer.decode_batch(output.cpu().numpy())
    return reports[0]


# Define Gradio interface
iface = gr.Interface(
    fn=generate_report,
    inputs=gr.Image(),  # gr.inputs.Image was removed in Gradio 4.x; gr.Image works in 3.x and 4.x
    outputs="text",
    title="PromptNet",
    description="Upload a medical image for thorax disease reporting."
)

if __name__ == "__main__":
    iface.launch()
```

ckpts/few-shot.pth

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:fa4c3ef1a822fdca8895f6ad0c73b4f355b036d0d28a8523aaf51f58c7393f38
size 1660341639
```

data/annotation.json

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:5d9590de8db89b0c74343a7e2aecba61e8029e15801de10ec4e030be80b62adc
size 155745921
```

decoder_config/decoder_config.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:c454e6bddb15af52c82734f1796391bf3a10a6c5533ea095de06f661ebb858bb
size 1744
```

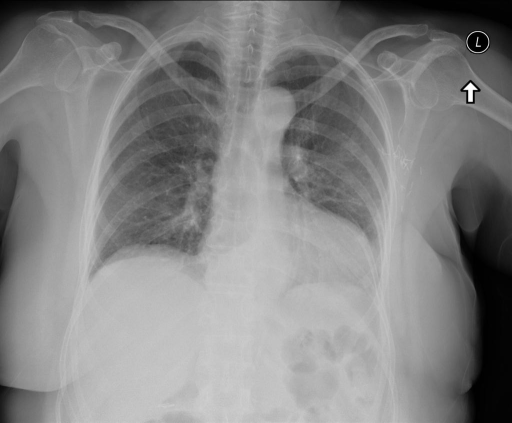

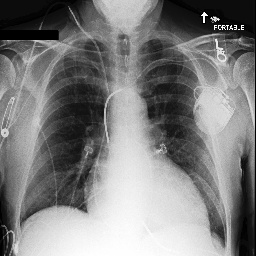

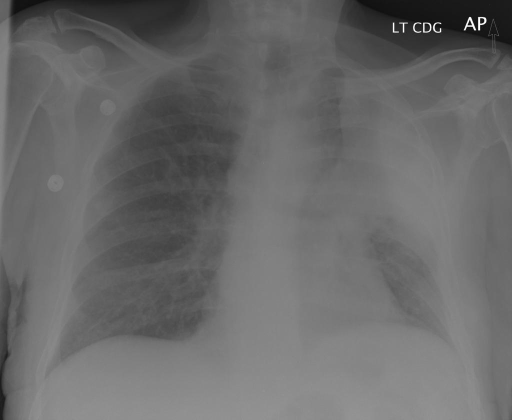

example_figs/example_fig1.jpg.png

ADDED


example_figs/example_fig2.jpg.jpg

ADDED


example_figs/example_fig3.jpg.png

ADDED


inference.py

ADDED

@@ -0,0 +1,110 @@

```python
import torch
from models.r2gen import R2GenModel
from PIL import Image
from modules.tokenizers import Tokenizer
import main
import argparse
import json
import re
from collections import Counter


def parse_agrs():
    parser = argparse.ArgumentParser()

    # Model loader settings
    parser.add_argument('--load', type=str, default='ckpt/checkpoint.pth', help='the path to the model weights.')
    parser.add_argument('--prompt', type=str, default='ckpt/prompt.pth', help='the path to the prompt weights.')

    # Data input settings
    parser.add_argument('--image_path', type=str, default='example_figs/fig1.jpg', help='the path to the test image.')
    parser.add_argument('--image_dir', type=str, default='data/images/', help='the path to the directory containing the images.')
    parser.add_argument('--ann_path', type=str, default='data/annotation.json', help='the path to the annotation file.')

    # Data loader settings
    parser.add_argument('--dataset_name', type=str, default='mimic_cxr', help='the dataset to be used.')
    parser.add_argument('--max_seq_length', type=int, default=60, help='the maximum sequence length of the reports.')
    parser.add_argument('--threshold', type=int, default=3, help='the cut off frequency for the words.')
    parser.add_argument('--num_workers', type=int, default=2, help='the number of workers for dataloader.')
    parser.add_argument('--batch_size', type=int, default=16, help='the number of samples for a batch.')

    # Model settings (for visual extractor)
    parser.add_argument('--visual_extractor', type=str, default='resnet101', help='the visual extractor to be used.')
    # Note: with type=bool, any non-empty string (including 'False') parses as True
    parser.add_argument('--visual_extractor_pretrained', type=bool, default=True, help='whether to load the pretrained visual extractor.')

    # Model settings (for Transformer)
    parser.add_argument('--d_model', type=int, default=512, help='the dimension of Transformer.')
    parser.add_argument('--d_ff', type=int, default=512, help='the dimension of FFN.')
    parser.add_argument('--d_vf', type=int, default=2048, help='the dimension of the patch features.')
    parser.add_argument('--num_heads', type=int, default=8, help='the number of heads in Transformer.')
    parser.add_argument('--num_layers', type=int, default=3, help='the number of layers of Transformer.')
    parser.add_argument('--dropout', type=float, default=0.1, help='the dropout rate of Transformer.')
    parser.add_argument('--logit_layers', type=int, default=1, help='the number of the logit layer.')
    parser.add_argument('--bos_idx', type=int, default=0, help='the index of <bos>.')
    parser.add_argument('--eos_idx', type=int, default=0, help='the index of <eos>.')
    parser.add_argument('--pad_idx', type=int, default=0, help='the index of <pad>.')
    parser.add_argument('--use_bn', type=int, default=0, help='whether to use batch normalization.')
    parser.add_argument('--drop_prob_lm', type=float, default=0.5, help='the dropout rate of the output layer.')
    # for Relational Memory
    parser.add_argument('--rm_num_slots', type=int, default=3, help='the number of memory slots.')
    parser.add_argument('--rm_num_heads', type=int, default=8, help='the number of heads in rm.')
    parser.add_argument('--rm_d_model', type=int, default=512, help='the dimension of rm.')

    # Sample related
    parser.add_argument('--sample_method', type=str, default='beam_search', help='the method used to sample a report.')
    parser.add_argument('--beam_size', type=int, default=3, help='the beam size when beam searching.')
    parser.add_argument('--temperature', type=float, default=1.0, help='the temperature when sampling.')
    parser.add_argument('--sample_n', type=int, default=1, help='the number of samples per image.')
    parser.add_argument('--group_size', type=int, default=1, help='the group size.')
    parser.add_argument('--output_logsoftmax', type=int, default=1, help='whether to output log-probabilities (log-softmax).')
    parser.add_argument('--decoding_constraint', type=int, default=0, help='whether to apply the decoding constraint.')
    parser.add_argument('--block_trigrams', type=int, default=1, help='whether to block repeated trigrams during decoding.')

    # Trainer settings
    parser.add_argument('--n_gpu', type=int, default=1, help='the number of gpus to be used.')
    parser.add_argument('--epochs', type=int, default=100, help='the number of training epochs.')
    parser.add_argument('--save_dir', type=str, default='results/iu_xray', help='the path to save the models.')
    parser.add_argument('--record_dir', type=str, default='records/', help='the path to save the results of experiments.')
    parser.add_argument('--save_period', type=int, default=1, help='the saving period.')
    parser.add_argument('--monitor_mode', type=str, default='max', choices=['min', 'max'], help='whether to max or min the metric.')
    parser.add_argument('--monitor_metric', type=str, default='BLEU_4', help='the metric to be monitored.')
    parser.add_argument('--early_stop', type=int, default=50, help='the patience of training.')

    # Optimization
    parser.add_argument('--optim', type=str, default='Adam', help='the type of the optimizer.')
    parser.add_argument('--lr_ve', type=float, default=5e-5, help='the learning rate for the visual extractor.')
    parser.add_argument('--lr_ed', type=float, default=1e-4, help='the learning rate for the remaining parameters.')
    parser.add_argument('--weight_decay', type=float, default=5e-5, help='the weight decay.')
    parser.add_argument('--amsgrad', type=bool, default=True, help='whether to use the AMSGrad variant of Adam.')

    # Learning Rate Scheduler
    parser.add_argument('--lr_scheduler', type=str, default='StepLR', help='the type of the learning rate scheduler.')
    parser.add_argument('--step_size', type=int, default=50, help='the step size of the learning rate scheduler.')
    parser.add_argument('--gamma', type=float, default=0.1, help='the gamma of the learning rate scheduler.')

    # Others
    parser.add_argument('--seed', type=int, default=9233, help='the random seed.')
    parser.add_argument('--resume', type=str, help='the checkpoint path to resume training from.')

    args = parser.parse_args()
    return args


args = parse_agrs()
tokenizer = Tokenizer(args)
image_path = args.image_path
checkpoint_path = args.load

image = [Image.open(image_path).convert('RGB')]
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = R2GenModel(args, tokenizer).to(device)

# map_location lets a checkpoint saved on GPU load on a CPU-only machine
state_dict = torch.load(checkpoint_path, map_location=device)
model_state_dict = state_dict['state_dict']
# load_state_dict() returns the missing/unexpected keys, not the model, so it cannot be chained with .to()
model.load_state_dict(model_state_dict)

model.eval()
with torch.no_grad():
    output = model(image, mode='sample')
    reports = model.tokenizer.decode_batch(output.cpu().numpy())
    print(reports)
```

models/models.py

ADDED

@@ -0,0 +1,125 @@

```python
import numpy as np
import torch
import torch.nn as nn
import pickle
from typing import Tuple
from transformers import GPT2LMHeadModel
from modules.decoder import DeCap
from medclip import MedCLIPModel, MedCLIPVisionModelViT
import math
import pdb


class MedCapModel(nn.Module):
    def __init__(self, args, tokenizer):
        super(MedCapModel, self).__init__()
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        self.args = args
        self.tokenizer = tokenizer
        self.model = DeCap(args, tokenizer)

        self.align_model = MedCLIPModel(vision_cls=MedCLIPVisionModelViT)
        self.align_model.from_pretrained()
        self.prompt = torch.load(args.prompt)
        if args.dataset == 'iu_xray':
            self.forward = self.forward_iu_xray
        else:
            self.forward = self.forward_mimic_cxr

    def noise_injection(self, x, variance=0.001, modality_offset=None, dont_norm=False):
        if variance == 0.0:
            return x
        std = math.sqrt(variance)
        if not dont_norm:
            x = torch.nn.functional.normalize(x, dim=1)
        # Add Gaussian noise on the same device and dtype as x.
        # TODO: by some conventions, multivariate noise should be divided by sqrt(dim)
        x = x + torch.randn_like(x) * std
        if modality_offset is not None:
            x = x + modality_offset
        return torch.nn.functional.normalize(x, dim=1)
```
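The noise-injection step (L2-normalise the feature, add zero-mean Gaussian noise with standard deviation √variance, optionally add a modality offset, then renormalise) can be sketched without PyTorch on a single vector. This version works on plain Python lists rather than batched tensors and takes an explicit `random.Random` so results are reproducible; both are simplifications for illustration, and the helper names are hypothetical.

```python
import math
import random


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return list(vec) if norm == 0 else [v / norm for v in vec]


def noise_injection(vec, variance=0.001, modality_offset=None, dont_norm=False, rng=None):
    """Perturb an embedding with isotropic Gaussian noise and return a unit vector."""
    if variance == 0.0:
        return list(vec)
    rng = rng or random.Random()
    std = math.sqrt(variance)
    x = list(vec) if dont_norm else l2_normalize(vec)
    # Independent N(0, std^2) noise on every coordinate.
    x = [v + rng.gauss(0.0, std) for v in x]
    if modality_offset is not None:
        x = [v + o for v, o in zip(x, modality_offset)]
    return l2_normalize(x)


text_embedding = [3.0, 4.0, 0.0]
noisy = noise_injection(text_embedding, variance=0.001, rng=random.Random(0))
print(round(math.sqrt(sum(v * v for v in noisy)), 6))  # 1.0
```

With `variance=0.001` the per-coordinate standard deviation is about 0.032, so the noisy embedding stays close to the clean unit vector while still varying between calls.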

```python
    def align_encode_images_iu_xray(self, images):
        # Split the two views of each study
        image1, image2 = images.unbind(dim=1)
        # Encode each view
        feature1 = self.align_model.encode_image(image1)
        feature2 = self.align_model.encode_image(image2)
        if self.args.prompt_load == 'yes':
            sim_1 = feature1 @ self.prompt.T.float()
            sim_1 = (sim_1 * 100).softmax(dim=-1)
            prefix_embedding_1 = sim_1 @ self.prompt.float()
            prefix_embedding_1 /= prefix_embedding_1.norm(dim=-1, keepdim=True)

            sim_2 = feature2 @ self.prompt.T.float()
            sim_2 = (sim_2 * 100).softmax(dim=-1)
            prefix_embedding_2 = sim_2 @ self.prompt.float()
            prefix_embedding_2 /= prefix_embedding_2.norm(dim=-1, keepdim=True)
            averaged_prompt_features = torch.mean(torch.stack([prefix_embedding_1, prefix_embedding_2]), dim=0)
            return averaged_prompt_features
        else:
            # Average the features of the two views
            averaged_features = torch.mean(torch.stack([feature1, feature2]), dim=0)
            return averaged_features

    def align_encode_images_mimic_cxr(self, images):
        feature = self.align_model.encode_image(images)
        if self.args.prompt_load == 'yes':
            sim = feature @ self.prompt.T.float()
            sim = (sim * 100).softmax(dim=-1)
            prefix_embedding = sim @ self.prompt.float()
            prefix_embedding /= prefix_embedding.norm(dim=-1, keepdim=True)
            return prefix_embedding
        else:
            return feature
```
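When `prompt_load` is `'yes'`, the image feature is not passed on directly. It is compared against every row of the prompt bank `self.prompt`, the similarities are multiplied by 100 and passed through a softmax, and the resulting weights form a convex combination of prompt rows that is then L2-normalised. The stdlib-only sketch below reproduces that computation for one feature vector; the list-of-lists prompt bank, the toy numbers, and the function names are illustrative, and the scale of 100 is taken from the code above.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def prompt_prefix(feature, prompt_bank, scale=100.0):
    """Map a feature to a unit-norm, similarity-weighted average of prompt rows."""
    # sim = feature @ prompt.T
    sims = [sum(f * p for f, p in zip(feature, row)) for row in prompt_bank]
    weights = softmax([scale * s for s in sims])
    # prefix = weights @ prompt
    dim = len(prompt_bank[0])
    prefix = [sum(w * row[j] for w, row in zip(weights, prompt_bank)) for j in range(dim)]
    norm = math.sqrt(sum(v * v for v in prefix))
    return [v / norm for v in prefix], weights


bank = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
prefix, weights = prompt_prefix([0.9, 0.1], bank)
print([round(w, 3) for w in weights])  # [1.0, 0.0, 0.0]
```

The large scale acts like a low softmax temperature: the weights become nearly one-hot, so the prefix is essentially the closest prompt. With `scale=1.0` the weights would spread across all three rows.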

```python
    def forward_iu_xray(self, reports_ids, align_ids, align_masks, images, mode='train', update_opts={}):
        self.align_model.to(self.device)
        self.align_model.eval()
        align_ids = align_ids.long()

        align_image_feature = None
        if self.args.train_mode == 'fine-tuning':
            align_image_feature = self.align_encode_images_iu_xray(images)
        if mode == 'train':
            align_text_feature = self.align_model.encode_text(align_ids, align_masks)
            if self.args.noise_inject == 'yes':
                align_text_feature = self.noise_injection(align_text_feature)

            if self.args.train_mode == 'fine-tuning':
                if self.args.F_version == 'v1':
                    # fc_reduce_dim is not created in __init__ above; it must exist before F_version 'v1' is used
                    combined_feature = torch.cat([align_text_feature, align_image_feature], dim=-1)
                    align_text_feature = self.fc_reduce_dim(combined_feature)
                if self.args.F_version == 'v2':
                    align_text_feature = align_image_feature

            outputs = self.model(align_text_feature, reports_ids, mode='forward')
            logits = outputs.logits
            logits = logits[:, :-1]
            return logits
        elif mode == 'sample':
            align_image_feature = self.align_encode_images_iu_xray(images)
            outputs = self.model(align_image_feature, reports_ids, mode='sample', update_opts=update_opts)
            return outputs
        else:
            raise ValueError(f"Unsupported mode: {mode!r}")
```