Upload 166 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- LICENSE +201 -0

- README.md +41 -12

- assets/00.gif +0 -0

- assets/01.gif +0 -0

- assets/02.gif +0 -0

- assets/03.gif +0 -0

- assets/04.gif +0 -0

- assets/05.gif +0 -0

- assets/06.gif +0 -0

- assets/07.gif +0 -0

- assets/08.gif +0 -0

- assets/09.gif +0 -0

- assets/10.gif +0 -0

- assets/11.gif +0 -0

- assets/12.gif +0 -0

- assets/13.gif +3 -0

- assets/72105_388.mp4_00-00.png +0 -0

- assets/72105_388.mp4_00-01.png +0 -0

- assets/72109_125.mp4_00-00.png +0 -0

- assets/72109_125.mp4_00-01.png +0 -0

- assets/72110_255.mp4_00-00.png +0 -0

- assets/72110_255.mp4_00-01.png +0 -0

- assets/74302_1349_frame1.png +0 -0

- assets/74302_1349_frame3.png +0 -0

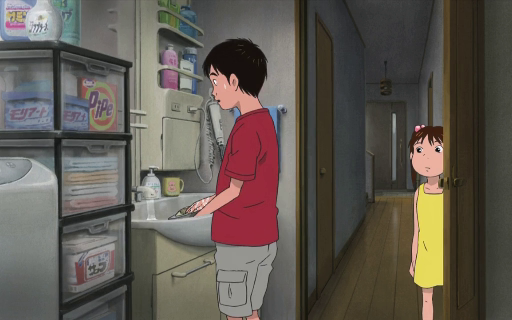

- assets/Japan_v2_1_070321_s3_frame1.png +0 -0

- assets/Japan_v2_1_070321_s3_frame3.png +0 -0

- assets/Japan_v2_2_062266_s2_frame1.png +0 -0

- assets/Japan_v2_2_062266_s2_frame3.png +0 -0

- assets/frame0001_05.png +0 -0

- assets/frame0001_09.png +0 -0

- assets/frame0001_10.png +0 -0

- assets/frame0001_11.png +0 -0

- assets/frame0016_10.png +0 -0

- assets/frame0016_11.png +0 -0

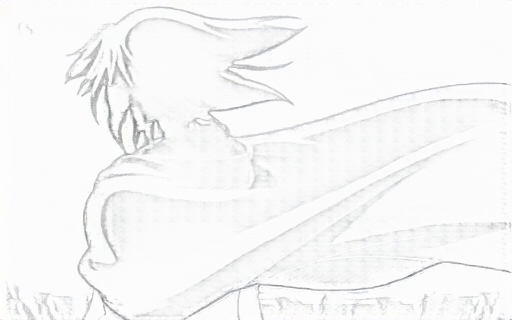

- assets/sketch_sample/frame_1.png +0 -0

- assets/sketch_sample/frame_2.png +0 -0

- assets/sketch_sample/sample.mov +0 -0

- checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/.gitignore +1 -0

- checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/download/tc_sketch.pt.lock +0 -0

- checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/download/tc_sketch.pt.metadata +3 -0

- cldm/__pycache__/cldm.cpython-38.pyc +0 -0

- cldm/__pycache__/model.cpython-38.pyc +0 -0

- cldm/cldm.py +478 -0

- cldm/ddim_hacked.py +317 -0

- cldm/hack.py +111 -0

- cldm/logger.py +76 -0

- cldm/model.py +28 -0

- configs/cldm_v21.yaml +17 -0

- configs/inference_1024_v1.0.yaml +103 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/13.gif filter=lfs diff=lfs merge=lfs -text
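The hunk above adds one path to the Git LFS rules. As a rough illustration of which files those rules cover, the sketch below approximates the matching with Python's `fnmatch`. This is a simplification under stated assumptions: real gitattributes matching follows gitignore-style rules, only the patterns visible in this hunk are listed, and `is_lfs_tracked` is a hypothetical helper, not part of Git or of this repository.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# Patterns visible in the hunk above (the full .gitattributes contains more).
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "assets/13.gif"]


def is_lfs_tracked(path, patterns=LFS_PATTERNS):
    """Approximate check: does `path` match any of the LFS patterns?

    Patterns without a slash are compared against the file name only;
    patterns with a slash are compared against the whole relative path.
    """
    name = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatch(target, pattern):
            return True
    return False


print(is_lfs_tracked("assets/13.gif"))  # matched by the newly added rule
print(is_lfs_tracked("assets/12.gif"))  # not matched by any listed rule
```

Under this reading, only `assets/13.gif` among the GIFs is stored through LFS, which would be consistent with the file list showing `+3 -0` for it: an LFS pointer is a short text file (version, oid, size) rather than the image itself.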

LICENSE

ADDED

@@ -0,0 +1,201 @@

+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS
+
+APPENDIX: How to apply the Apache License to your work.
+
+To apply the Apache License to your work, attach the following
+boilerplate notice, with the fields enclosed by brackets "[]"
+replaced with your own identifying information. (Don't include
+the brackets!) The text should be enclosed in the appropriate
+comment syntax for the file format. We also recommend that a
+file or class name and description of purpose be included on the
+same "printed page" as the copyright notice for easier
+identification within third-party archives.
+
+Copyright Tencent
+
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
+
+http://www.apache.org/licenses/LICENSE-2.0
+
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.

README.md

CHANGED

@@ -1,12 +1,41 @@
-
-
-
-
-
-
-
-
-
-
-
-

+## ___***ToonCrafter_with_SketchGuidance***___
+This repository is an implementation that recreates the SketchGuidance feature of "ToonCrafter".
+
+- https://github.com/ToonCrafter/ToonCrafter
+- https://arxiv.org/pdf/2405.17933
+
+https://github.com/user-attachments/assets/f72f287d-f848-4982-8f91-43c49d037007
+
+
+
+## 🧰 Models
+
+|Model|Resolution|GPU Mem. & Inference Time (A100, ddim 50steps)|Checkpoint|
+|:---------|:---------|:--------|:--------|
+|ToonCrafter_512|320x512| TBD (`perframe_ae=True`)|[Hugging Face](https://huggingface.co/Doubiiu/ToonCrafter/blob/main/model.ckpt)|
+|SketchEncoder|TBD| TBD |[Hugging Face](https://huggingface.co/Doubiiu/ToonCrafter/blob/main/sketch_encoder.ckpt)|
+
+
+Currently, ToonCrafter can support generating videos of up to 16 frames with a resolution of 512x320. The inference time can be reduced by using fewer DDIM steps.
+
+
+
+## ⚙️ Setup
+
+### Install Environment via Anaconda (Recommended)
+```bash
+conda create -n tooncrafter python=3.8.5
+conda activate tooncrafter
+pip install -r requirements.txt
+```
+
+
+## 💫 Inference
+
+### 1. Local Gradio demo
+1. Download pretrained ToonCrafter_512 and put the model.ckpt in checkpoints/tooncrafter_512_interp_v1/model.ckpt.
+2. Download pretrained SketchEncoder and put the model.ckpt in control_models/sketch_encoder.ckpt.
+
+```bash
+python gradio_app.py
+```
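The two download steps in the README above can be checked before launching the demo. The following is a minimal sketch, assuming the paths are relative to the repository root exactly as the README writes them; `missing_checkpoints` is a hypothetical helper and does not exist in the repository.

```python
from pathlib import Path

# Paths copied from the README steps above, relative to the repository root.
EXPECTED_CHECKPOINTS = [
    "checkpoints/tooncrafter_512_interp_v1/model.ckpt",
    "control_models/sketch_encoder.ckpt",
]


def missing_checkpoints(repo_root="."):
    """Return the expected checkpoint paths that are not files under repo_root."""
    root = Path(repo_root)
    return [p for p in EXPECTED_CHECKPOINTS if not (root / p).is_file()]
```

Calling `missing_checkpoints()` from the repository root returns an empty list once both files are in place, at which point `python gradio_app.py` should be able to find them.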

- assets/00.gif: ADDED
- assets/01.gif: ADDED
- assets/02.gif: ADDED
- assets/03.gif: ADDED
- assets/04.gif: ADDED
- assets/05.gif: ADDED
- assets/06.gif: ADDED
- assets/07.gif: ADDED
- assets/08.gif: ADDED
- assets/09.gif: ADDED
- assets/10.gif: ADDED
- assets/11.gif: ADDED
- assets/12.gif: ADDED
- assets/13.gif: ADDED (Git LFS)
- assets/72105_388.mp4_00-00.png: ADDED
- assets/72105_388.mp4_00-01.png: ADDED
- assets/72109_125.mp4_00-00.png: ADDED
- assets/72109_125.mp4_00-01.png: ADDED
- assets/72110_255.mp4_00-00.png: ADDED
- assets/72110_255.mp4_00-01.png: ADDED
- assets/74302_1349_frame1.png: ADDED
- assets/74302_1349_frame3.png: ADDED
- assets/Japan_v2_1_070321_s3_frame1.png: ADDED
- assets/Japan_v2_1_070321_s3_frame3.png: ADDED
- assets/Japan_v2_2_062266_s2_frame1.png: ADDED
- assets/Japan_v2_2_062266_s2_frame3.png: ADDED
- assets/frame0001_05.png: ADDED
- assets/frame0001_09.png: ADDED
- assets/frame0001_10.png: ADDED
- assets/frame0001_11.png: ADDED
- assets/frame0016_10.png: ADDED
- assets/frame0016_11.png: ADDED
- assets/sketch_sample/frame_1.png: ADDED
- assets/sketch_sample/frame_2.png: ADDED
- assets/sketch_sample/sample.mov: ADDED, binary file (228 kB)

checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/.gitignore

ADDED

@@ -0,0 +1 @@
+*

checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/download/tc_sketch.pt.lock

ADDED

File without changes

checkpoints/tooncrafter_1024_interp_sketch/.cache/huggingface/download/tc_sketch.pt.metadata

ADDED

@@ -0,0 +1,3 @@
+ff369905091bfeb92381885efbfee9f935b4ebc4
+0f33c61f5e5046233e943d6e54a48b69512492ffe54b33b482dd48b8911a850b
+1734665884.5047696
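The three lines of `tc_sketch.pt.metadata` look like a download record: a 40-character hex string, a 64-character hex string, and a UNIX timestamp. The sketch below parses them under the assumption that they are, in order, a commit hash, an etag, and the download time, which is the layout the `huggingface_hub` client is understood to write for local downloads. `DownloadMetadata` and `parse_download_metadata` are hypothetical names introduced here for illustration.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class DownloadMetadata(NamedTuple):
    commit_hash: str
    etag: str
    downloaded_at: datetime


def parse_download_metadata(text):
    """Parse a three-line record: commit hash, etag, UNIX timestamp (assumed order)."""
    commit_hash, etag, timestamp = text.strip().splitlines()[:3]
    return DownloadMetadata(
        commit_hash=commit_hash.strip(),
        etag=etag.strip(),
        downloaded_at=datetime.fromtimestamp(float(timestamp), tz=timezone.utc),
    )


# Values copied from the diff above.
SAMPLE = """ff369905091bfeb92381885efbfee9f935b4ebc4
0f33c61f5e5046233e943d6e54a48b69512492ffe54b33b482dd48b8911a850b
1734665884.5047696
"""

meta = parse_download_metadata(SAMPLE)
print(meta.downloaded_at.date())  # 2024-12-20
```

Files under `.cache/huggingface/` are local download bookkeeping, which is presumably why the sibling `.gitignore` in the same commit contains `*`.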

cldm/__pycache__/cldm.cpython-38.pyc

ADDED

Binary file (12.2 kB)

cldm/__pycache__/model.cpython-38.pyc

ADDED

Binary file (1.11 kB)

cldm/cldm.py

ADDED

@@ -0,0 +1,478 @@

+import einops
+import torch
+import torch as th
+import torch.nn as nn
+
+from ldm.modules.diffusionmodules.util import (
+    conv_nd,
+    linear,
+    zero_module,
+    timestep_embedding,
+)
+
+from einops import rearrange, repeat
+from torchvision.utils import make_grid
+from ldm.modules.attention import SpatialTransformer
+from ldm.modules.diffusionmodules.openaimodel import TimestepEmbedSequential, ResBlock, Downsample, AttentionBlock
+from lvdm.modules.networks.openaimodel3d import UNetModel
+from ldm.models.diffusion.ddpm import LatentDiffusion
+from ldm.util import log_txt_as_img, exists, instantiate_from_config
+from ldm.models.diffusion.ddim import DDIMSampler
+
+
+class ControlledUnetModel(UNetModel):
+    def forward(self, x, timesteps, context=None, features_adapter=None, fs=None, control = None, **kwargs):
+        b,_,t,_,_ = x.shape
+        t_emb = timestep_embedding(timesteps, self.model_channels, repeat_only=False).type(x.dtype)
+        emb = self.time_embed(t_emb)
+        ## repeat t times for context [(b t) 77 768] & time embedding
+        ## check if we use per-frame image conditioning
+        _, l_context, _ = context.shape
+        if l_context == 77 + t*16: ## !!! HARD CODE here
+            context_text, context_img = context[:,:77,:], context[:,77:,:]
+            context_text = context_text.repeat_interleave(repeats=t, dim=0)
+            context_img = rearrange(context_img, 'b (t l) c -> (b t) l c', t=t)
+            context = torch.cat([context_text, context_img], dim=1)
+        else:
+            context = context.repeat_interleave(repeats=t, dim=0)
+        emb = emb.repeat_interleave(repeats=t, dim=0)
+
+        ## always in shape (b t) c h w, except for temporal layer
+        x = rearrange(x, 'b c t h w -> (b t) c h w')
+
+        ## combine emb
+        if self.fs_condition:
+            if fs is None:
+                fs = torch.tensor(
+                    [self.default_fs] * b, dtype=torch.long, device=x.device)
+            fs_emb = timestep_embedding(fs, self.model_channels, repeat_only=False).type(x.dtype)
+
+            fs_embed = self.fps_embedding(fs_emb)
+            fs_embed = fs_embed.repeat_interleave(repeats=t, dim=0)
+            emb = emb + fs_embed
+
+        h = x.type(self.dtype)
+        adapter_idx = 0
+        hs = []
+        with torch.no_grad():
+            for id, module in enumerate(self.input_blocks):
+                h = module(h, emb, context=context, batch_size=b)
+                if id ==0 and self.addition_attention:
+                    h = self.init_attn(h, emb, context=context, batch_size=b)
+                ## plug-in adapter features
+                if ((id+1)%3 == 0) and features_adapter is not None:
+                    h = h + features_adapter[adapter_idx]
+                    adapter_idx += 1
+                hs.append(h)
+            if features_adapter is not None:
+                assert len(features_adapter)==adapter_idx, 'Wrong features_adapter'
+
+            h = self.middle_block(h, emb, context=context, batch_size=b)
+
+        if control is not None:
+            h += control.pop()
+
+        for module in self.output_blocks:
+            if control is None:
+                h = torch.cat([h, hs.pop()], dim=1)
+            else:
+                h = torch.cat([h, hs.pop() + control.pop()], dim=1)
+            h = module(h, emb, context=context, batch_size=b)
+
+        h = h.type(x.dtype)
+        y = self.out(h)
+
+        # reshape back to (b c t h w)
+        y = rearrange(y, '(b t) c h w -> b c t h w', b=b)
+        return y
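The decoder loop in `ControlledUnetModel.forward` consumes two stacks in last-in, first-out order: the encoder skip features `hs` and, when present, the ControlNet residuals `control`. The pure-Python sketch below replaces tensors with string labels to show only the pairing order. `pairing_order`, the labels, and the block count are hypothetical and chosen for illustration; the sketch also assumes one output block per input block.

```python
def pairing_order(num_input_blocks, control=None):
    """Mimic the pop() order of skip features and control residuals.

    Tensors are replaced by labels so that only the ordering is visible.
    `control`, if given, holds one entry per input block plus a final
    entry for the middle block.
    """
    hs = [f"h{i}" for i in range(num_input_blocks)]  # encoder outputs, in order
    control = list(control) if control is not None else None
    steps = []

    # The last control entry is added to the middle-block output.
    if control is not None:
        steps.append(("middle", control.pop()))

    # Each decoder block takes the most recent remaining skip feature.
    for out_idx in range(num_input_blocks):
        skip = hs.pop()
        residual = control.pop() if control is not None else None
        steps.append((f"out{out_idx}", skip, residual))
    return steps


steps = pairing_order(3, control=["c0", "c1", "c2", "c_mid"])
for step in steps:
    print(step)
```

The sketch copies `control` before popping. The code in the diff pops from the list it receives, so a caller that wants to reuse the same `control` list afterwards would need to pass a copy.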

| 88 |

+

|

| 89 |

+

|

| 90 |

+

class ControlNet(nn.Module):

|

| 91 |

+

def __init__(

|

| 92 |

+

self,

|

| 93 |

+

image_size,

|

| 94 |

+

in_channels,

|

| 95 |

+

model_channels,

|

| 96 |

+

hint_channels,

|

| 97 |

+

num_res_blocks,

|

| 98 |

+

attention_resolutions,

|

| 99 |

+

dropout=0,

|

| 100 |

+

channel_mult=(1, 2, 4, 8),

|

| 101 |

+

conv_resample=True,

|

| 102 |

+

dims=2,

|

| 103 |

+

use_checkpoint=False,

|

| 104 |

+

use_fp16=False,

|

| 105 |

+

num_heads=-1,

|

| 106 |

+

num_head_channels=-1,

|

| 107 |

+

num_heads_upsample=-1,

|

| 108 |

+

use_scale_shift_norm=False,

|

| 109 |

+

resblock_updown=False,

|

| 110 |

+

use_new_attention_order=False,

|

| 111 |

+

use_spatial_transformer=False, # custom transformer support

|

| 112 |

+

transformer_depth=1, # custom transformer support

|

| 113 |

+

context_dim=None, # custom transformer support

|

| 114 |

+

n_embed=None, # custom support for prediction of discrete ids into codebook of first stage vq model

|

| 115 |

+

legacy=True,

|

| 116 |

+

disable_self_attentions=None,

|

| 117 |

+

num_attention_blocks=None,

|

| 118 |

+

disable_middle_self_attn=False,

|

| 119 |

+

use_linear_in_transformer=False,

|

| 120 |

+

):

|

| 121 |

+

super().__init__()

|

| 122 |

+

if use_spatial_transformer:

|

| 123 |

+

assert context_dim is not None, 'Fool!! You forgot to include the dimension of your cross-attention conditioning...'

|

| 124 |

+

|

| 125 |

+

if context_dim is not None:

|

| 126 |

+

assert use_spatial_transformer, 'Fool!! You forgot to use the spatial transformer for your cross-attention conditioning...'

|

| 127 |

+

from omegaconf.listconfig import ListConfig

|

| 128 |

+

if type(context_dim) == ListConfig:

|

| 129 |

+

context_dim = list(context_dim)

|

| 130 |

+

|

| 131 |

+

if num_heads_upsample == -1:

|

| 132 |

+

num_heads_upsample = num_heads

|

| 133 |

+

|

| 134 |

+

if num_heads == -1:

|

| 135 |

+

assert num_head_channels != -1, 'Either num_heads or num_head_channels has to be set'

|

| 136 |

+

|

| 137 |

+

if num_head_channels == -1:

|

| 138 |

+

assert num_heads != -1, 'Either num_heads or num_head_channels has to be set'

|

| 139 |

+

|

| 140 |

+

self.dims = dims

|

| 141 |

+

self.image_size = image_size

|

| 142 |

+

self.in_channels = in_channels

|

| 143 |

+

self.model_channels = model_channels

|

| 144 |

+

if isinstance(num_res_blocks, int):

|

| 145 |

+

self.num_res_blocks = len(channel_mult) * [num_res_blocks]

|

| 146 |

+

else:

|

| 147 |

+

if len(num_res_blocks) != len(channel_mult):

|

| 148 |

+

raise ValueError("provide num_res_blocks either as an int (globally constant) or "

|

| 149 |

+

"as a list/tuple (per-level) with the same length as channel_mult")

|

| 150 |

+

self.num_res_blocks = num_res_blocks

|

| 151 |

+

if disable_self_attentions is not None:

|

| 152 |

+

# should be a list of booleans, indicating whether to disable self-attention in TransformerBlocks or not

|

| 153 |

+

assert len(disable_self_attentions) == len(channel_mult)

|

| 154 |

+

if num_attention_blocks is not None:

|

| 155 |

+

assert len(num_attention_blocks) == len(self.num_res_blocks)

|

| 156 |

+

assert all(map(lambda i: self.num_res_blocks[i] >= num_attention_blocks[i], range(len(num_attention_blocks))))

|

| 157 |

+

print(f"Constructor of ControlNet received num_attention_blocks={num_attention_blocks}. "

|

| 158 |

+

f"This option has LESS priority than attention_resolutions {attention_resolutions}, "

|

| 159 |

+

f"i.e., in cases where num_attention_blocks[i] > 0 but 2**i not in attention_resolutions, "

|

| 160 |

+

f"attention will still not be set.")

|

| 161 |

+

|

| 162 |

+

self.attention_resolutions = attention_resolutions

|

| 163 |

+

self.dropout = dropout

|

| 164 |

+

self.channel_mult = channel_mult

|

| 165 |

+

self.conv_resample = conv_resample

|

| 166 |

+

self.use_checkpoint = use_checkpoint

|

| 167 |

+

self.dtype = th.float16 if use_fp16 else th.float32

|

| 168 |

+

self.num_heads = num_heads

|

| 169 |

+

self.num_head_channels = num_head_channels

|

| 170 |

+

self.num_heads_upsample = num_heads_upsample

|

| 171 |

+

self.predict_codebook_ids = n_embed is not None

|

| 172 |

+

|

| 173 |

+

time_embed_dim = model_channels * 4

|

| 174 |

+

self.time_embed = nn.Sequential(

|

| 175 |

+

linear(model_channels, time_embed_dim),

|

| 176 |

+

nn.SiLU(),

|

| 177 |

+

linear(time_embed_dim, time_embed_dim),

|

| 178 |

+

)

|

| 179 |

+

|

| 180 |

+

self.input_blocks = nn.ModuleList(

|

| 181 |

+

[

|

| 182 |

+

TimestepEmbedSequential(

|

| 183 |

+

conv_nd(dims, in_channels, model_channels, 3, padding=1)

|

| 184 |

+

)

|

| 185 |

+

]

|

| 186 |

+

)

|

| 187 |

+

self.zero_convs = nn.ModuleList([self.make_zero_conv(model_channels)])

|

| 188 |

+

|

| 189 |

+

self.input_hint_block = TimestepEmbedSequential(

|

| 190 |

+

conv_nd(dims, hint_channels, 16, 3, padding=1),

|

| 191 |

+

nn.SiLU(),

|

| 192 |

+

conv_nd(dims, 16, 16, 3, padding=1),

|

| 193 |

+

nn.SiLU(),

|

| 194 |

+

conv_nd(dims, 16, 32, 3, padding=1, stride=2),

|

| 195 |

+

nn.SiLU(),

|

| 196 |

+

conv_nd(dims, 32, 32, 3, padding=1),

|

| 197 |

+

nn.SiLU(),

|

| 198 |

+

conv_nd(dims, 32, 96, 3, padding=1, stride=2),

|

| 199 |

+

nn.SiLU(),

|

| 200 |

+

conv_nd(dims, 96, 96, 3, padding=1),

|

| 201 |

+

nn.SiLU(),

|

| 202 |

+

conv_nd(dims, 96, 256, 3, padding=1, stride=2),

|

| 203 |

+

nn.SiLU(),

|

| 204 |

+

zero_module(conv_nd(dims, 256, model_channels, 3, padding=1))

|

| 205 |

+

)

|

| 206 |

+

|

| 207 |

+

self._feature_size = model_channels

|

| 208 |

+

input_block_chans = [model_channels]

|

| 209 |

+

ch = model_channels

|

| 210 |

+

ds = 1

|

| 211 |

+

for level, mult in enumerate(channel_mult):

|

| 212 |

+

for nr in range(self.num_res_blocks[level]):

|

| 213 |

+

layers = [

|

| 214 |

+

ResBlock(

|

| 215 |

+

ch,

|

| 216 |

+

time_embed_dim,

|

| 217 |

+

dropout,

|

| 218 |

+

out_channels=mult * model_channels,

|

| 219 |

+

dims=dims,

|

| 220 |

+

use_checkpoint=use_checkpoint,

|

| 221 |

+

use_scale_shift_norm=use_scale_shift_norm,

|

| 222 |

+

)

|

| 223 |

+

]

|

| 224 |

+

ch = mult * model_channels

|

| 225 |

+

if ds in attention_resolutions:

|

| 226 |

+

if num_head_channels == -1:

|

| 227 |

+

dim_head = ch // num_heads

|

| 228 |

+

else:

|

| 229 |

+

num_heads = ch // num_head_channels

|

| 230 |

+

dim_head = num_head_channels

|

| 231 |

+

if legacy:

|

| 232 |

+

# num_heads = 1

|

| 233 |

+

dim_head = ch // num_heads if use_spatial_transformer else num_head_channels

|

| 234 |

+

if exists(disable_self_attentions):

|

| 235 |

+

disabled_sa = disable_self_attentions[level]

|

| 236 |

+

else:

|

| 237 |

+

disabled_sa = False

|

| 238 |

+

|

| 239 |

+

if not exists(num_attention_blocks) or nr < num_attention_blocks[level]:

|

| 240 |

+

layers.append(

|

| 241 |

+

AttentionBlock(

|

| 242 |

+

ch,

|

| 243 |

+

use_checkpoint=use_checkpoint,

|

| 244 |

+

num_heads=num_heads,

|

| 245 |

+

num_head_channels=dim_head,

|

| 246 |

+

use_new_attention_order=use_new_attention_order,

|

| 247 |

+

) if not use_spatial_transformer else SpatialTransformer(

|

| 248 |

+

ch, num_heads, dim_head, depth=transformer_depth, context_dim=context_dim,

|

| 249 |

+

disable_self_attn=disabled_sa, use_linear=use_linear_in_transformer,

|

| 250 |

+

use_checkpoint=use_checkpoint

|

| 251 |

+

)

|

| 252 |

+

)

|

| 253 |

+

self.input_blocks.append(TimestepEmbedSequential(*layers))

|

| 254 |

+

self.zero_convs.append(self.make_zero_conv(ch))

|

| 255 |

+

self._feature_size += ch

|

| 256 |

+

input_block_chans.append(ch)

|

| 257 |

+

if level != len(channel_mult) - 1:

|

| 258 |

+

out_ch = ch

|

| 259 |

+

self.input_blocks.append(

|

| 260 |

+

TimestepEmbedSequential(

|

| 261 |

+

ResBlock(

|

| 262 |

+

ch,

|

| 263 |

+

time_embed_dim,

|

| 264 |

+

dropout,

|

| 265 |

+

out_channels=out_ch,

|

| 266 |

+

dims=dims,

|

| 267 |

+

use_checkpoint=use_checkpoint,

|

| 268 |

+

use_scale_shift_norm=use_scale_shift_norm,

|

| 269 |

+

down=True,

|

| 270 |

+

)

|

| 271 |

+

if resblock_updown

|

| 272 |

+

else Downsample(

|

| 273 |

+

ch, conv_resample, dims=dims, out_channels=out_ch

|

| 274 |

+

)

|

| 275 |

+

)

|

| 276 |

+

)

|

| 277 |

+

ch = out_ch

|

| 278 |

+

input_block_chans.append(ch)

|

| 279 |

+

self.zero_convs.append(self.make_zero_conv(ch))

|

| 280 |

+

ds *= 2

|

| 281 |

+

self._feature_size += ch

|

| 282 |

+

|

| 283 |

+

if num_head_channels == -1:

|

| 284 |

+

dim_head = ch // num_heads

|

| 285 |

+

else:

|

| 286 |

+

num_heads = ch // num_head_channels

|

| 287 |

+

dim_head = num_head_channels

|

| 288 |

+

if legacy:

|

| 289 |

+

# num_heads = 1

|

| 290 |

+

dim_head = ch // num_heads if use_spatial_transformer else num_head_channels

|

| 291 |

+

self.middle_block = TimestepEmbedSequential(

|

| 292 |

+

ResBlock(

|

| 293 |

+

ch,

|

| 294 |

+

time_embed_dim,

|

| 295 |

+

dropout,

|

| 296 |

+

dims=dims,

|

| 297 |

+

use_checkpoint=use_checkpoint,

|

| 298 |

+

use_scale_shift_norm=use_scale_shift_norm,

|

| 299 |

+

),

|

| 300 |

+

AttentionBlock(

|

| 301 |

+

ch,

|

| 302 |

+

use_checkpoint=use_checkpoint,

|

| 303 |

+

num_heads=num_heads,

|

| 304 |

+

num_head_channels=dim_head,

|

| 305 |

+

use_new_attention_order=use_new_attention_order,

|

| 306 |

+

) if not use_spatial_transformer else SpatialTransformer( # always uses a self-attn

|

| 307 |

+

ch, num_heads, dim_head, depth=transformer_depth, context_dim=context_dim,

|

| 308 |

+

disable_self_attn=disable_middle_self_attn, use_linear=use_linear_in_transformer,

|

| 309 |

+

use_checkpoint=use_checkpoint

|

| 310 |

+

),

|

| 311 |

+

ResBlock(

|

| 312 |

+

ch,

|

| 313 |

+

time_embed_dim,

|

| 314 |

+

dropout,

|

| 315 |

+

dims=dims,

|

| 316 |

+

use_checkpoint=use_checkpoint,

|

| 317 |

+

use_scale_shift_norm=use_scale_shift_norm,

|

| 318 |

+

),

|

| 319 |

+

)

|

| 320 |

+

self.middle_block_out = self.make_zero_conv(ch)

|

| 321 |

+

self._feature_size += ch

|

| 322 |

+

|
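The `input_hint_block` built in this constructor stacks eight 3x3 convolutions with `padding=1`, three of them with `stride=2`, so the hint is reduced by a factor of 8 along each spatial axis before it is added to the first feature map. A rough sketch of that size arithmetic, assuming `dims=2` and dilation 1. The helper names and the 512-pixel input are illustrative and do not come from the source.

```python
def conv_out_size(n, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution with dilation 1."""
    return (n + 2 * padding - kernel) // stride + 1


def hint_block_out_size(n):
    """Apply the stride pattern of the eight hint-block convolutions in order."""
    strides = [1, 1, 2, 1, 2, 1, 2, 1]  # read off the conv_nd calls above
    for stride in strides:
        n = conv_out_size(n, stride=stride)
    return n


if __name__ == "__main__":
    print(hint_block_out_size(512))
```

For a 512-pixel side this gives 64, matching the `h // 8` latent size used when sampling.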

| 323 |

+

def make_zero_conv(self, channels):

|

| 324 |

+

return TimestepEmbedSequential(zero_module(conv_nd(self.dims, channels, channels, 1, padding=0)))

|

| 325 |

+

|

| 326 |

+

def forward(self, x, hint, timesteps, context, **kwargs):

|

| 327 |

+

t_emb = timestep_embedding(timesteps, self.model_channels, repeat_only=False)

|

| 328 |

+

emb = self.time_embed(t_emb)

|

| 329 |

+

|

| 330 |

+

guided_hint = self.input_hint_block(hint, emb, context)

|

| 331 |

+

|

| 332 |

+

outs = []

|

| 333 |

+

|

| 334 |

+

h = x.type(self.dtype)

|

| 335 |

+

|

| 336 |

+

for module, zero_conv in zip(self.input_blocks, self.zero_convs):

|

| 337 |

+

if guided_hint is not None:

|

| 338 |

+

h = module(h, emb, context)

|

| 339 |

+

h += guided_hint

|

| 340 |

+

guided_hint = None

|

| 341 |

+

else:

|

| 342 |

+

h = module(h, emb, context)

|

| 343 |

+

outs.append(zero_conv(h, emb, context, True))

|

| 344 |

+

|

| 345 |

+

h = self.middle_block(h, emb, context)

|

| 346 |

+

outs.append(self.middle_block_out(h, emb, context))

|

| 347 |

+

|

| 348 |

+

return outs

|

| 349 |

+

|

| 350 |

+

|

| 351 |

+

class ControlLDM(LatentDiffusion):

|

| 352 |

+

|

| 353 |

+

def __init__(self, control_stage_config, control_key, only_mid_control, *args, **kwargs):

|

| 354 |

+

super().__init__(*args, **kwargs)

|

| 355 |

+

self.control_model = instantiate_from_config(control_stage_config)

|

| 356 |

+

self.control_key = control_key

|

| 357 |

+

self.only_mid_control = only_mid_control

|

| 358 |

+

self.control_scales = [1.0] * 13

|

| 359 |

+

|

| 360 |

+

@torch.no_grad()

|

| 361 |

+

def get_input(self, batch, k, bs=None, *args, **kwargs):

|

| 362 |

+

x, c = super().get_input(batch, self.first_stage_key, *args, **kwargs)

|

| 363 |

+

control = batch[self.control_key]

|

| 364 |

+

if bs is not None:

|

| 365 |

+

control = control[:bs]

|

| 366 |

+

control = control.to(self.device)

|

| 367 |

+

control = einops.rearrange(control, 'b h w c -> b c h w')

|

| 368 |

+

control = control.to(memory_format=torch.contiguous_format).float()

|

| 369 |

+

return x, dict(c_crossattn=[c], c_concat=[control])

|

| 370 |

+

|

| 371 |

+

def apply_model(self, x_noisy, t, cond, *args, **kwargs):

|

| 372 |

+

assert isinstance(cond, dict)

|

| 373 |

+

diffusion_model = self.model.diffusion_model

|

| 374 |

+

|

| 375 |

+

cond_txt = torch.cat(cond['c_crossattn'], 1)

|

| 376 |

+

|

| 377 |

+

if cond['c_concat'] is None:

|

| 378 |

+

eps = diffusion_model(x=x_noisy, timesteps=t, context=cond_txt, control=None, only_mid_control=self.only_mid_control)

|

| 379 |

+

else:

|

| 380 |

+

control = self.control_model(x=x_noisy, hint=torch.cat(cond['c_concat'], 1), timesteps=t, context=cond_txt)

|

| 381 |

+

control = [c * scale for c, scale in zip(control, self.control_scales)]

|

| 382 |

+

eps = diffusion_model(x=x_noisy, timesteps=t, context=cond_txt, control=control, only_mid_control=self.only_mid_control)

|

| 383 |

+

|

| 384 |

+

return eps

|

| 385 |

+

|
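`apply_model` scales each control residual with `zip(control, self.control_scales)`. Because `zip` stops at the shorter input, a scale list with fewer entries than residuals would silently drop the trailing residuals. A small sketch with floats in place of tensors. The explicit length check is an addition for illustration and is not part of the original code, and `scale_control` is a hypothetical name.

```python
def scale_control(control, scales):
    """Multiply each residual by its scale, refusing mismatched lengths."""
    if len(control) != len(scales):
        raise ValueError(
            f"expected {len(control)} scales, got {len(scales)}"
        )
    return [c * s for c, s in zip(control, scales)]


if __name__ == "__main__":
    print(scale_control([1.0, 2.0, 3.0], [1.0, 0.5, 0.0]))
    # Without the check, zip would quietly keep only the first residual:
    print(len(list(zip([1.0, 2.0, 3.0], [1.0]))))
```

On Python 3.10 and later, `zip(control, scales, strict=True)` raises on a length mismatch as well.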

| 386 |

+

@torch.no_grad()

|

| 387 |

+

def get_unconditional_conditioning(self, N):

|

| 388 |

+

return self.get_learned_conditioning([""] * N)

|

| 389 |

+

|

| 390 |

+

@torch.no_grad()

|

| 391 |

+

def log_images(self, batch, N=4, n_row=2, sample=False, ddim_steps=50, ddim_eta=0.0, return_keys=None,

|

| 392 |

+

quantize_denoised=True, inpaint=True, plot_denoise_rows=False, plot_progressive_rows=True,

|

| 393 |

+

plot_diffusion_rows=False, unconditional_guidance_scale=9.0, unconditional_guidance_label=None,

|

| 394 |

+

use_ema_scope=True,

|

| 395 |

+

**kwargs):

|

| 396 |

+

use_ddim = ddim_steps is not None

|

| 397 |

+

|

| 398 |

+

log = dict()

|

| 399 |

+

z, c = self.get_input(batch, self.first_stage_key, bs=N)

|

| 400 |

+

c_cat, c = c["c_concat"][0][:N], c["c_crossattn"][0][:N]

|

| 401 |

+

N = min(z.shape[0], N)

|

| 402 |

+

n_row = min(z.shape[0], n_row)

|

| 403 |

+

log["reconstruction"] = self.decode_first_stage(z)

|

| 404 |

+

log["control"] = c_cat * 2.0 - 1.0

|

| 405 |

+

log["conditioning"] = log_txt_as_img((512, 512), batch[self.cond_stage_key], size=16)

|

| 406 |

+

|

| 407 |

+

if plot_diffusion_rows:

|

| 408 |

+

# get diffusion row

|

| 409 |

+

diffusion_row = list()

|

| 410 |

+

z_start = z[:n_row]

|

| 411 |

+

for t in range(self.num_timesteps):

|

| 412 |

+

if t % self.log_every_t == 0 or t == self.num_timesteps - 1:

|

| 413 |

+

t = repeat(torch.tensor([t]), '1 -> b', b=n_row)

|

| 414 |

+

t = t.to(self.device).long()

|

| 415 |

+

noise = torch.randn_like(z_start)

|

| 416 |

+

z_noisy = self.q_sample(x_start=z_start, t=t, noise=noise)

|

| 417 |

+

diffusion_row.append(self.decode_first_stage(z_noisy))

|

| 418 |

+

|

| 419 |

+

diffusion_row = torch.stack(diffusion_row) # n_log_step, n_row, C, H, W

|

| 420 |

+

diffusion_grid = rearrange(diffusion_row, 'n b c h w -> b n c h w')

|

| 421 |

+

diffusion_grid = rearrange(diffusion_grid, 'b n c h w -> (b n) c h w')

|

| 422 |

+

diffusion_grid = make_grid(diffusion_grid, nrow=diffusion_row.shape[0])

|

| 423 |

+

log["diffusion_row"] = diffusion_grid

|

| 424 |

+

|

| 425 |

+

if sample:

|

| 426 |

+

# get denoise row

|

| 427 |

+

samples, z_denoise_row = self.sample_log(cond={"c_concat": [c_cat], "c_crossattn": [c]},

|

| 428 |

+

batch_size=N, ddim=use_ddim,

|

| 429 |

+

ddim_steps=ddim_steps, eta=ddim_eta)

|

| 430 |

+

x_samples = self.decode_first_stage(samples)

|

| 431 |

+

log["samples"] = x_samples

|

| 432 |

+

if plot_denoise_rows:

|

| 433 |

+

denoise_grid = self._get_denoise_row_from_list(z_denoise_row)

|

| 434 |

+

log["denoise_row"] = denoise_grid

|

| 435 |

+

|

| 436 |

+

if unconditional_guidance_scale > 1.0:

|

| 437 |

+

uc_cross = self.get_unconditional_conditioning(N)

|

| 438 |

+

uc_cat = c_cat # torch.zeros_like(c_cat)

|

| 439 |

+

uc_full = {"c_concat": [uc_cat], "c_crossattn": [uc_cross]}

|

| 440 |

+

samples_cfg, _ = self.sample_log(cond={"c_concat": [c_cat], "c_crossattn": [c]},

|

| 441 |

+

batch_size=N, ddim=use_ddim,

|

| 442 |

+

ddim_steps=ddim_steps, eta=ddim_eta,

|

| 443 |

+

unconditional_guidance_scale=unconditional_guidance_scale,

|

| 444 |

+

unconditional_conditioning=uc_full,

|

| 445 |

+

)

|

| 446 |

+

x_samples_cfg = self.decode_first_stage(samples_cfg)

|

| 447 |

+

log[f"samples_cfg_scale_{unconditional_guidance_scale:.2f}"] = x_samples_cfg

|

| 448 |

+

|

| 449 |

+

return log

|

| 450 |

+

|
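When `plot_diffusion_rows` is set, `log_images` decodes a noised latent at every `log_every_t`-th timestep and at the final timestep. The selection rule can be sketched on its own. The values 1000 and 200 below are assumptions chosen for illustration, and `logged_timesteps` is a hypothetical helper.

```python
def logged_timesteps(num_timesteps, log_every_t):
    """Timesteps that pass the `t % log_every_t == 0 or t == num_timesteps - 1` test."""
    return [
        t
        for t in range(num_timesteps)
        if t % log_every_t == 0 or t == num_timesteps - 1
    ]


if __name__ == "__main__":
    print(logged_timesteps(1000, 200))
```

With these values the diffusion row would contain six images, for steps 0, 200, 400, 600, 800 and 999.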

| 451 |

+

@torch.no_grad()

|

| 452 |

+

def sample_log(self, cond, batch_size, ddim, ddim_steps, **kwargs):

|

| 453 |

+

ddim_sampler = DDIMSampler(self)

|

| 454 |

+

b, c, h, w = cond["c_concat"][0].shape

|

| 455 |

+

shape = (self.channels, h // 8, w // 8)

|

| 456 |

+

samples, intermediates = ddim_sampler.sample(ddim_steps, batch_size, shape, cond, verbose=False, **kwargs)

|

| 457 |

+

return samples, intermediates

|

| 458 |

+

|
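`sample_log` derives the latent shape from the spatial size of the control image using integer division by 8. A minimal sketch of that computation. The channel count of 4 and the image sizes are illustrative assumptions, and `latent_shape` is a hypothetical helper name.

```python
def latent_shape(channels, height, width, downscale=8):
    """Shape passed to the sampler, mirroring `(self.channels, h // 8, w // 8)`."""
    return (channels, height // downscale, width // downscale)


if __name__ == "__main__":
    print(latent_shape(4, 512, 512))
    print(latent_shape(4, 500, 500))  # sizes not divisible by 8 are truncated
```

Floor division means a control image whose sides are not multiples of 8 yields a latent that no longer maps back to the same pixel size, so resizing inputs to a multiple of 8 first is the safer choice.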

| 459 |

+

def configure_optimizers(self):

|

| 460 |

+

lr = self.learning_rate

|

| 461 |

+

params = list(self.control_model.parameters())

|

| 462 |

+

if not self.sd_locked:

|

| 463 |

+

params += list(self.model.diffusion_model.output_blocks.parameters())

|

| 464 |

+

params += list(self.model.diffusion_model.out.parameters())

|

| 465 |

+

opt = torch.optim.AdamW(params, lr=lr)

|

| 466 |

+

return opt

|

| 467 |

+

|

| 468 |

+

def low_vram_shift(self, is_diffusing):

|

| 469 |

+

if is_diffusing:

|

| 470 |

+

self.model = self.model.cuda()

|

| 471 |

+

self.control_model = self.control_model.cuda()

|

| 472 |

+

self.first_stage_model = self.first_stage_model.cpu()

|

| 473 |

+

self.cond_stage_model = self.cond_stage_model.cpu()

|

| 474 |

+

else:

|

| 475 |

+

self.model = self.model.cpu()

|

| 476 |

+

self.control_model = self.control_model.cpu()

|

| 477 |

+

self.first_stage_model = self.first_stage_model.cuda()

|

| 478 |

+

self.cond_stage_model = self.cond_stage_model.cuda()

|

cldm/ddim_hacked.py

ADDED

|

@@ -0,0 +1,317 @@

|

|