Spaces:

Sleeping

Sleeping

Avijit Ghosh

commited on

Commit

·

0c7d699

1

Parent(s):

981ea1d

removed csv, added support for datasets

Browse files- DemoData.csv +0 -26

- Images/CrowsPairs2.png +0 -0

- __pycache__/css.cpython-312.pyc +0 -0

- app.py +8 -2

- configs/crowspairs.yaml +3 -2

- configs/homoglyphbias.yaml +1 -1

- configs/honest.yaml +1 -1

- configs/ieat.yaml +1 -1

- configs/imagedataleak.yaml +1 -1

- configs/notmyvoice.yaml +1 -1

- configs/stablebias.yaml +1 -1

- configs/stereoset.yaml +1 -1

- configs/videodiversemisinfo.yaml +1 -1

- configs/weat.yaml +1 -1

DemoData.csv

DELETED

|

@@ -1,26 +0,0 @@

|

|

| 1 |

-

Group,Modality,Type,Metaname,Suggested Evaluation,What it is evaluating,Considerations,Link,URL,Screenshots,Applicable Models ,Datasets,Hashtags,Abstract,Authors

|

| 2 |

-

BiasEvals,Text,Model,weat,Word Embedding Association Test (WEAT),Associations and word embeddings based on Implicit Associations Test (IAT),"Although based in human associations, general societal attitudes do not always represent subgroups of people and cultures.",Semantics derived automatically from language corpora contain human-like biases,https://researchportal.bath.ac.uk/en/publications/semantics-derived-automatically-from-language-corpora-necessarily,"['Images/WEAT1.png', 'Images/WEAT2.png']",,,"['Bias', 'Word Association', 'Embeddings', 'NLP']","Artificial intelligence and machine learning are in a period of astounding growth. However, there are concerns that these

|

| 3 |

-

technologies may be used, either with or without intention, to perpetuate the prejudice and unfairness that unfortunately

|

| 4 |

-

characterizes many human institutions. Here we show for the first time that human-like semantic biases result from the

|

| 5 |

-

application of standard machine learning to ordinary language—the same sort of language humans are exposed to every

|

| 6 |

-

day. We replicate a spectrum of standard human biases as exposed by the Implicit Association Test and other well-known

|

| 7 |

-

psychological studies. We replicate these using a widely used, purely statistical machine-learning model—namely, the GloVe

|

| 8 |

-

word embedding—trained on a corpus of text from the Web. Our results indicate that language itself contains recoverable and

|

| 9 |

-

accurate imprints of our historic biases, whether these are morally neutral as towards insects or flowers, problematic as towards

|

| 10 |

-

race or gender, or even simply veridical, reflecting the status quo for the distribution of gender with respect to careers or first

|

| 11 |

-

names. These regularities are captured by machine learning along with the rest of semantics. In addition to our empirical

|

| 12 |

-

findings concerning language, we also contribute new methods for evaluating bias in text, the Word Embedding Association

|

| 13 |

-

Test (WEAT) and the Word Embedding Factual Association Test (WEFAT). Our results have implications not only for AI and

|

| 14 |

-

machine learning, but also for the fields of psychology, sociology, and human ethics, since they raise the possibility that mere

|

| 15 |

-

exposure to everyday language can account for the biases we replicate here.","Aylin Caliskan, Joanna J. Bryson, and Arvind Narayanan"

|

| 16 |

-

BiasEvals,Text,Dataset,stereoset,StereoSet,Protected class stereotypes,Automating stereotype detection makes distinguishing harmful stereotypes difficult. It also raises many false positives and can flag relatively neutral associations based in fact (e.g. population x has a high proportion of lactose intolerant people).,StereoSet: Measuring stereotypical bias in pretrained language models,https://arxiv.org/abs/2004.09456,,,,,,

|

| 17 |

-

BiasEvals,Text,Dataset,crowspairs,Crow-S Pairs,Protected class stereotypes,Automating stereotype detection makes distinguishing harmful stereotypes difficult. It also raises many false positives and can flag relatively neutral associations based in fact (e.g. population x has a high proportion of lactose intolerant people).,CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked Language Models,https://arxiv.org/abs/2010.00133,,,,,,

|

| 18 |

-

BiasEvals,Text,Output,honest,HONEST: Measuring Hurtful Sentence Completion in Language Models,Protected class stereotypes and hurtful language,Automating stereotype detection makes distinguishing harmful stereotypes difficult. It also raises many false positives and can flag relatively neutral associations based in fact (e.g. population x has a high proportion of lactose intolerant people).,HONEST: Measuring Hurtful Sentence Completion in Language Models,https://aclanthology.org/2021.naacl-main.191.pdf,,,,,,

|

| 19 |

-

BiasEvals,Image,Model,ieat,Image Embedding Association Test (iEAT),Embedding associations,"Although based in human associations, general societal attitudes do not always represent subgroups of people and cultures.","Image Representations Learned With Unsupervised Pre-Training Contain Human-like Biases | Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency",https://dl.acm.org/doi/abs/10.1145/3442188.3445932,,,,,,

|

| 20 |

-

BiasEvals,Image,Dataset,imagedataleak,Dataset leakage and model leakage,Gender and label bias,,Balanced Datasets Are Not Enough: Estimating and Mitigating Gender Bias in Deep Image Representations,https://arxiv.org/abs/1811.08489,,,,,,

|

| 21 |

-

BiasEvals,Image,Output,stablebias,Characterizing the variation in generated images,,,Stable bias: Analyzing societal representations in diffusion models,https://arxiv.org/abs/2303.11408,,,,,,

|

| 22 |

-

BiasEvals,Image,Output,homoglyphbias,Effect of different scripts on text-to-image generation,"It evaluates generated images for cultural stereotypes, when using different scripts (homoglyphs). It somewhat measures the suceptibility of a model to produce cultural stereotypes by simply switching the script",,Exploiting Cultural Biases via Homoglyphs in Text-to-Image Synthesis,https://arxiv.org/pdf/2209.08891.pdf,,,,,,

|

| 23 |

-

BiasEvals,Audio,Taxonomy,notmyvoice,Not My Voice! A Taxonomy of Ethical and Safety Harms of Speech Generators,Lists harms of audio/speech generators,Not necessarily evaluation but a good source of taxonomy. We can use this to point readers towards high-level evaluations,Not My Voice! A Taxonomy of Ethical and Safety Harms of Speech Generators,https://arxiv.org/pdf/2402.01708.pdf,,,,,,

|

| 24 |

-

BiasEvals,Video,Output,videodiversemisinfo,Diverse Misinformation: Impacts of Human Biases on Detection of Deepfakes on Networks,Human led evaluations of deepfakes to understand susceptibility and representational harms (including political violence),"Repr. harm, incite violence","Diverse Misinformation: Impacts of Human Biases on Detection of Deepfakes on Networks

|

| 25 |

-

",https://arxiv.org/abs/2210.10026,,,,,,

|

| 26 |

-

Privacy,,,,,,,,,,,,,,

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Images/CrowsPairs2.png

ADDED

|

__pycache__/css.cpython-312.pyc

CHANGED

|

Binary files a/__pycache__/css.cpython-312.pyc and b/__pycache__/css.cpython-312.pyc differ

|

|

|

app.py

CHANGED

|

@@ -55,6 +55,7 @@ def showmodal(evt: gr.SelectData):

|

|

| 55 |

authormd = gr.Markdown("",visible=False)

|

| 56 |

tagsmd = gr.Markdown("",visible=False)

|

| 57 |

abstractmd = gr.Markdown("",visible=False)

|

|

|

|

| 58 |

gallery = gr.Gallery([],visible=False)

|

| 59 |

if evt.index[1] == 5:

|

| 60 |

modal = Modal(visible=True)

|

|

@@ -73,13 +74,16 @@ def showmodal(evt: gr.SelectData):

|

|

| 73 |

|

| 74 |

if pd.notnull(itemdic['Abstract']):

|

| 75 |

abstractmd = gr.Markdown(itemdic['Abstract'],visible=True)

|

|

|

|

|

|

|

|

|

|

| 76 |

|

| 77 |

screenshots = itemdic['Screenshots']

|

| 78 |

if isinstance(screenshots, list):

|

| 79 |

if len(screenshots) > 0:

|

| 80 |

gallery = gr.Gallery(screenshots, visible=True)

|

| 81 |

|

| 82 |

-

return [modal, titlemd, authormd, tagsmd, abstractmd, gallery]

|

| 83 |

|

| 84 |

with gr.Blocks(title = "Social Impact Measurement V2", css=custom_css) as demo: #theme=gr.themes.Soft(),

|

| 85 |

# create tabs for the app, moving the current table to one titled "rewardbench" and the benchmark_text to a tab called "About"

|

|

@@ -124,13 +128,15 @@ The following categories are high-level, non-exhaustive, and present a synthesis

|

|

| 124 |

modality_filter.change(filter_modality, inputs=[biastable_filtered, modality_filter], outputs=biastable_filtered)

|

| 125 |

type_filter.change(filter_type, inputs=[biastable_filtered, type_filter], outputs=biastable_filtered)

|

| 126 |

|

|

|

|

| 127 |

with Modal(visible=False) as modal:

|

| 128 |

titlemd = gr.Markdown(visible=False)

|

| 129 |

authormd = gr.Markdown(visible=False)

|

| 130 |

tagsmd = gr.Markdown(visible=False)

|

| 131 |

abstractmd = gr.Markdown(visible=False)

|

|

|

|

| 132 |

gallery = gr.Gallery(visible=False)

|

| 133 |

-

biastable_filtered.select(showmodal, None, [modal, titlemd, authormd, tagsmd, abstractmd, gallery])

|

| 134 |

|

| 135 |

|

| 136 |

|

|

|

|

| 55 |

authormd = gr.Markdown("",visible=False)

|

| 56 |

tagsmd = gr.Markdown("",visible=False)

|

| 57 |

abstractmd = gr.Markdown("",visible=False)

|

| 58 |

+

datasetmd = gr.Markdown("",visible=False)

|

| 59 |

gallery = gr.Gallery([],visible=False)

|

| 60 |

if evt.index[1] == 5:

|

| 61 |

modal = Modal(visible=True)

|

|

|

|

| 74 |

|

| 75 |

if pd.notnull(itemdic['Abstract']):

|

| 76 |

abstractmd = gr.Markdown(itemdic['Abstract'],visible=True)

|

| 77 |

+

|

| 78 |

+

if pd.notnull(itemdic['Datasets']):

|

| 79 |

+

datasetmd = gr.Markdown('#### [Dataset]('+itemdic['Datasets']+')',visible=True)

|

| 80 |

|

| 81 |

screenshots = itemdic['Screenshots']

|

| 82 |

if isinstance(screenshots, list):

|

| 83 |

if len(screenshots) > 0:

|

| 84 |

gallery = gr.Gallery(screenshots, visible=True)

|

| 85 |

|

| 86 |

+

return [modal, titlemd, authormd, tagsmd, abstractmd, datasetmd, gallery]

|

| 87 |

|

| 88 |

with gr.Blocks(title = "Social Impact Measurement V2", css=custom_css) as demo: #theme=gr.themes.Soft(),

|

| 89 |

# create tabs for the app, moving the current table to one titled "rewardbench" and the benchmark_text to a tab called "About"

|

|

|

|

| 128 |

modality_filter.change(filter_modality, inputs=[biastable_filtered, modality_filter], outputs=biastable_filtered)

|

| 129 |

type_filter.change(filter_type, inputs=[biastable_filtered, type_filter], outputs=biastable_filtered)

|

| 130 |

|

| 131 |

+

|

| 132 |

with Modal(visible=False) as modal:

|

| 133 |

titlemd = gr.Markdown(visible=False)

|

| 134 |

authormd = gr.Markdown(visible=False)

|

| 135 |

tagsmd = gr.Markdown(visible=False)

|

| 136 |

abstractmd = gr.Markdown(visible=False)

|

| 137 |

+

datasetmd = gr.Markdown(visible=False)

|

| 138 |

gallery = gr.Gallery(visible=False)

|

| 139 |

+

biastable_filtered.select(showmodal, None, [modal, titlemd, authormd, tagsmd, abstractmd, datasetmd, gallery])

|

| 140 |

|

| 141 |

|

| 142 |

|

configs/crowspairs.yaml

CHANGED

|

@@ -1,10 +1,10 @@

|

|

| 1 |

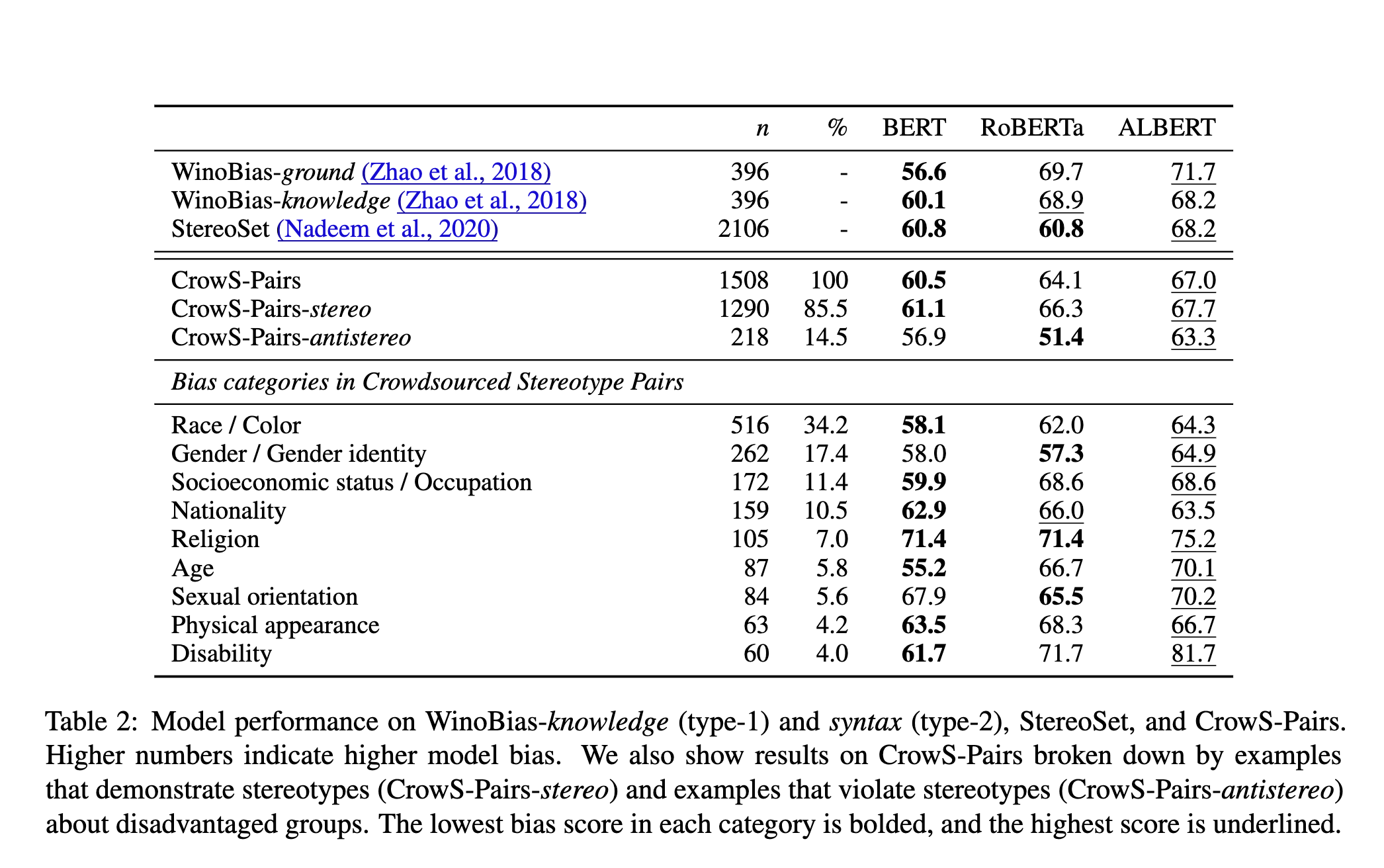

Abstract: "Pretrained language models, especially masked language models (MLMs) have seen success across many NLP tasks. However, there is ample evidence that they use the cultural biases that are undoubtedly present in the corpora they are trained on, implicitly creating harm with biased representations. To measure some forms of social bias in language models against protected demographic groups in the US, we introduce the Crowdsourced Stereotype Pairs benchmark (CrowS-Pairs). CrowS-Pairs has 1508 examples that cover stereotypes dealing with nine types of bias, like race, religion, and age. In CrowS-Pairs a model is presented with two sentences: one that is more stereotyping and another that is less stereotyping. The data focuses on stereotypes about historically disadvantaged groups and contrasts them with advantaged groups. We find that all three of the widely-used MLMs we evaluate substantially favor sentences that express stereotypes in every category in CrowS-Pairs. As work on building less biased models advances, this dataset can be used as a benchmark to evaluate progress."

|

| 2 |

-

|

| 3 |

Authors: Nikita Nangia, Clara Vania, Rasika Bhalerao, Samuel R. Bowman

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

| 6 |

based in fact (e.g. population x has a high proportion of lactose intolerant people).

|

| 7 |

-

Datasets: .

|

| 8 |

Group: BiasEvals

|

| 9 |

Hashtags: .nan

|

| 10 |

Link: 'CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked Language

|

|

@@ -12,6 +12,7 @@ Link: 'CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked La

|

|

| 12 |

Modality: Text

|

| 13 |

Screenshots:

|

| 14 |

- Images/CrowsPairs1.png

|

|

|

|

| 15 |

Suggested Evaluation: Crow-S Pairs

|

| 16 |

Type: Dataset

|

| 17 |

URL: https://arxiv.org/abs/2010.00133

|

|

|

|

| 1 |

Abstract: "Pretrained language models, especially masked language models (MLMs) have seen success across many NLP tasks. However, there is ample evidence that they use the cultural biases that are undoubtedly present in the corpora they are trained on, implicitly creating harm with biased representations. To measure some forms of social bias in language models against protected demographic groups in the US, we introduce the Crowdsourced Stereotype Pairs benchmark (CrowS-Pairs). CrowS-Pairs has 1508 examples that cover stereotypes dealing with nine types of bias, like race, religion, and age. In CrowS-Pairs a model is presented with two sentences: one that is more stereotyping and another that is less stereotyping. The data focuses on stereotypes about historically disadvantaged groups and contrasts them with advantaged groups. We find that all three of the widely-used MLMs we evaluate substantially favor sentences that express stereotypes in every category in CrowS-Pairs. As work on building less biased models advances, this dataset can be used as a benchmark to evaluate progress."

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: Nikita Nangia, Clara Vania, Rasika Bhalerao, Samuel R. Bowman

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

| 6 |

based in fact (e.g. population x has a high proportion of lactose intolerant people).

|

| 7 |

+

Datasets: https://huggingface.co/datasets/crows_pairs

|

| 8 |

Group: BiasEvals

|

| 9 |

Hashtags: .nan

|

| 10 |

Link: 'CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked Language

|

|

|

|

| 12 |

Modality: Text

|

| 13 |

Screenshots:

|

| 14 |

- Images/CrowsPairs1.png

|

| 15 |

+

- Images/CrowsPairs2.png

|

| 16 |

Suggested Evaluation: Crow-S Pairs

|

| 17 |

Type: Dataset

|

| 18 |

URL: https://arxiv.org/abs/2010.00133

|

configs/homoglyphbias.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

configs/honest.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

configs/ieat.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Although based in human associations, general societal attitudes do

|

| 5 |

not always represent subgroups of people and cultures.

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Although based in human associations, general societal attitudes do

|

| 5 |

not always represent subgroups of people and cultures.

|

configs/imagedataleak.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

configs/notmyvoice.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Not necessarily evaluation but a good source of taxonomy. We can use

|

| 5 |

this to point readers towards high-level evaluations

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Not necessarily evaluation but a good source of taxonomy. We can use

|

| 5 |

this to point readers towards high-level evaluations

|

configs/stablebias.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: .nan

|

| 5 |

Datasets: .nan

|

configs/stereoset.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Automating stereotype detection makes distinguishing harmful stereotypes

|

| 5 |

difficult. It also raises many false positives and can flag relatively neutral associations

|

configs/videodiversemisinfo.yaml

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

Abstract: .nan

|

| 2 |

-

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Repr. harm, incite violence

|

| 5 |

Datasets: .nan

|

|

|

|

| 1 |

Abstract: .nan

|

| 2 |

+

Applicable Models: .nan

|

| 3 |

Authors: .nan

|

| 4 |

Considerations: Repr. harm, incite violence

|

| 5 |

Datasets: .nan

|

configs/weat.yaml

CHANGED

|

@@ -19,7 +19,7 @@ Abstract: "Artificial intelligence and machine learning are in a period of astou

|

|

| 19 |

machine learning, but also for the fields of psychology, sociology, and human ethics,\

|

| 20 |

\ since they raise the possibility that mere\nexposure to everyday language can\

|

| 21 |

\ account for the biases we replicate here."

|

| 22 |

-

|

| 23 |

Authors: Aylin Caliskan, Joanna J. Bryson, and Arvind Narayanan

|

| 24 |

Considerations: Although based in human associations, general societal attitudes do

|

| 25 |

not always represent subgroups of people and cultures.

|

|

|

|

| 19 |

machine learning, but also for the fields of psychology, sociology, and human ethics,\

|

| 20 |

\ since they raise the possibility that mere\nexposure to everyday language can\

|

| 21 |

\ account for the biases we replicate here."

|

| 22 |

+

Applicable Models: .nan

|

| 23 |

Authors: Aylin Caliskan, Joanna J. Bryson, and Arvind Narayanan

|

| 24 |

Considerations: Although based in human associations, general societal attitudes do

|

| 25 |

not always represent subgroups of people and cultures.

|