Initial commit

Browse files- .gitignore +1 -0

- README.md +4 -3

- app.py +149 -0

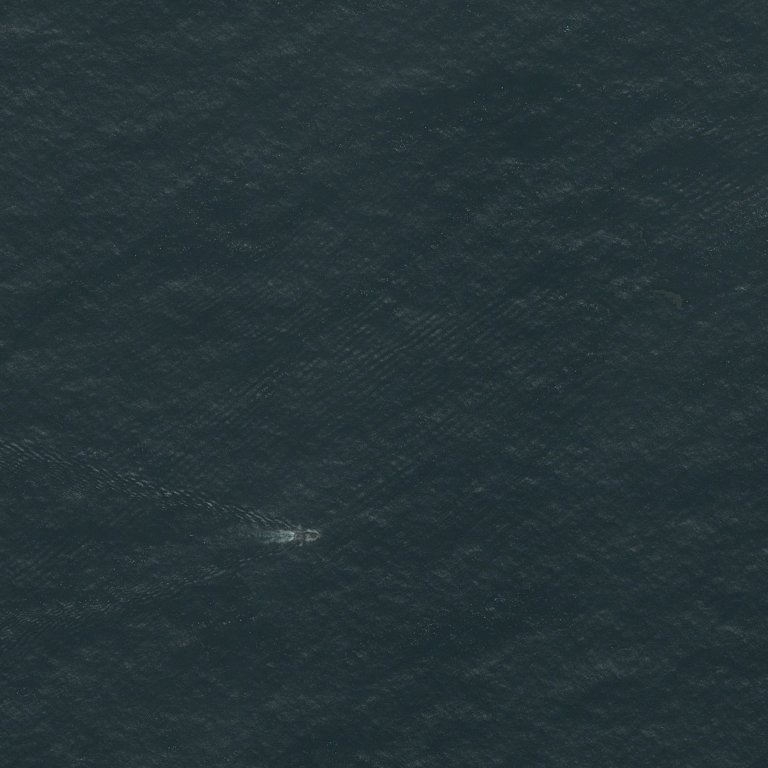

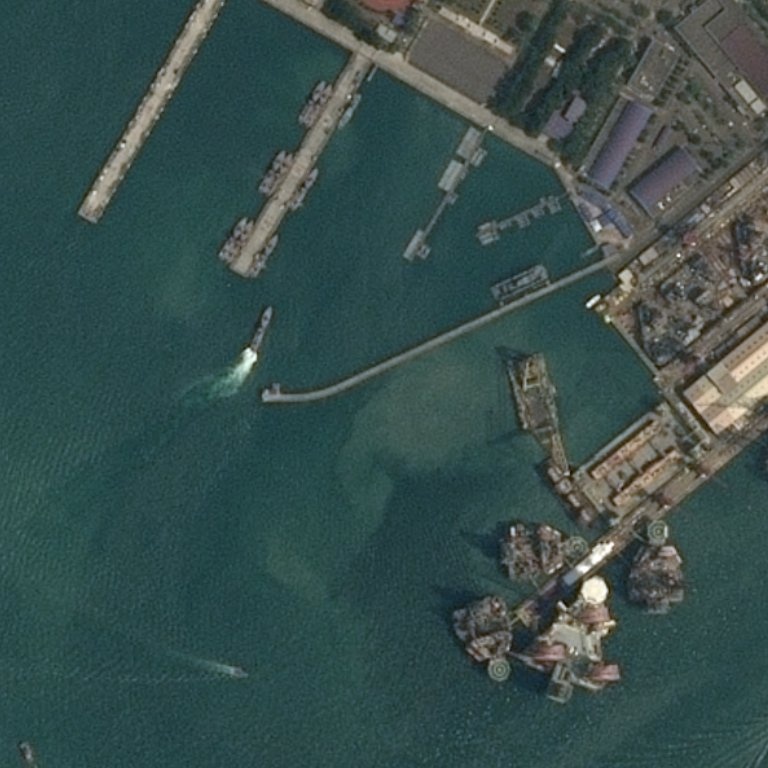

- demo/12ab97857.jpg +0 -0

- demo/82f13510a.jpg +0 -0

- demo/836f35381.jpg +0 -0

- demo/848d2afef.jpg +0 -0

- demo/911b25478.jpg +0 -0

- demo/b86e4046f.jpg +0 -0

- demo/ce2220f49.jpg +0 -0

- demo/d9762ef5e.jpg +0 -0

- demo/fa613751e.jpg +0 -0

- requirements.txt +3 -0

.gitignore

ADDED

```diff
@@ -0,0 +1 @@
+**/.DS_Store
```

README.md

CHANGED

```diff
@@ -1,7 +1,7 @@
 ---
 title: Ship Detection Optical Satellite
-emoji:
-colorFrom:
+emoji: 🚢
+colorFrom: purple
 colorTo: blue
 sdk: gradio
 sdk_version: 4.32.0
@@ -10,4 +10,5 @@ pinned: false
 license: cc-by-nc-sa-4.0
 ---
 
-
+This app allows you to detect ships in optical satellite images.
+It connects to a GPU-enabled inference API deployed on https://modal.com.
```
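
The README only says that the app talks to a remote inference API. Judging from `predict_image()` in `app.py`, the request body carries the raw RGB pixels as a base64 string together with the array shape and the confidence threshold, and the response carries an image encoded the same way. The round trip below is a minimal sketch of that encoding, assuming uint8 pixels and a server that decodes with `np.frombuffer(...).reshape(shape)`; the helpers `encode_image` and `decode_image` are hypothetical and are not part of the app or of the DL4EO API.

```python
import base64

import numpy as np


def encode_image(img: np.ndarray, threshold: float) -> dict:
    """Build a JSON-serializable payload shaped like the one predict_image() sends (hypothetical helper)."""
    if img.ndim != 3 or img.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    # Raw pixel bytes in C order; no PNG/JPEG compression is applied
    raw = np.ascontiguousarray(img, dtype=np.uint8).tobytes()
    return {
        "image": base64.b64encode(raw).decode("ascii"),
        "shape": list(img.shape),  # JSON has no tuples, so the shape travels as a list
        "threshold": threshold,
    }


def decode_image(image_b64: str, shape) -> np.ndarray:
    """Invert encode_image(): base64 text back to an H x W x 3 uint8 array (hypothetical helper)."""
    raw = base64.b64decode(image_b64)
    return np.frombuffer(raw, dtype=np.uint8).reshape(tuple(shape))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(4, 5, 3), dtype=np.uint8)
    payload = encode_image(img, threshold=0.8)
    restored = decode_image(payload["image"], payload["shape"])
    print(np.array_equal(img, restored))  # True
    # 4 * 5 * 3 = 60 raw bytes become 80 base64 characters
    print(len(payload["image"]))  # 80
```

Because base64 inflates the data by a factor of 4/3, a 1024 x 1024 RGB image (3 MiB of raw pixels) travels as about 4 MiB of text in each direction. Sending a compressed JPEG or PNG instead would shrink requests considerably, but only if the server were changed to decode it.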

app.py

ADDED

```python
import os
import base64

import gradio as gr
import numpy as np
import requests
from PIL import Image

# API for inferences
DL4EO_API_URL = "https://dl4eo--ship-predict.modal.run"

# Auth token to access the API, read from the environment
DL4EO_API_KEY = os.environ['DL4EO_API_KEY']

# Check Gradio version
print(f"Gradio version: {gr.__version__}")


# Define the inference function
def predict_image(img, threshold):

    if isinstance(img, Image.Image):
        img = np.array(img)

    if not isinstance(img, np.ndarray) or img.ndim != 3 or img.shape[2] != 3:
        raise gr.Error("predict_image(): input 'img' should be a single RGB image in PIL or NumPy array format.")

    # Encode the raw pixel bytes as base64
    image_base64 = base64.b64encode(np.ascontiguousarray(img)).decode()

    # Create a dictionary representing the JSON payload
    payload = {
        'image': image_base64,
        'shape': img.shape,
        'threshold': threshold,
    }

    headers = {
        'Authorization': 'Bearer ' + DL4EO_API_KEY,
        'Content-Type': 'application/json',
    }

    # Send the POST request to the API endpoint, with the image embedded in the JSON payload
    response = requests.post(DL4EO_API_URL, json=payload, headers=headers)

    # Check the response status
    if response.status_code != 200:
        raise gr.Error(f"Received status code={response.status_code} in inference API")

    json_data = response.json()
    detections = json_data['detections']
    duration = json_data['duration']

    # Decode the image returned by the API: raw uint8 pixels with the same shape as the input
    img_data_in = base64.b64decode(json_data['image'])
    np_img = np.frombuffer(img_data_in, dtype=np.uint8).reshape(img.shape)
    pil_img = Image.fromarray(np_img)

    return pil_img, img.shape, len(detections), duration


# Define example images and the confidence threshold to use with each one
example_data = [
    ["./demo/12ab97857.jpg", 0.8],
    ["./demo/82f13510a.jpg", 0.8],
    ["./demo/836f35381.jpg", 0.8],
    ["./demo/848d2afef.jpg", 0.8],
    ["./demo/911b25478.jpg", 0.8],
    ["./demo/b86e4046f.jpg", 0.8],
    ["./demo/ce2220f49.jpg", 0.8],
    ["./demo/d9762ef5e.jpg", 0.8],
    ["./demo/fa613751e.jpg", 0.8],
    # Add more example images and thresholds as needed
]

# Define CSS for some elements
css = """
.image-preview {
    height: 820px !important;
    width: 800px !important;
}
"""

TITLE = "Oriented bounding box detection on optical satellite images"

# Define the Gradio interface
demo = gr.Blocks(title=TITLE, css=css).queue()
with demo:
    gr.Markdown(f"<h1><center>{TITLE}</center></h1>")

    with gr.Row():
        with gr.Column(scale=0):
            input_image = gr.Image(type="pil", interactive=True)
            run_button = gr.Button(value="Run")
            with gr.Accordion("Advanced options", open=True):
                threshold = gr.Slider(label="Confidence threshold", minimum=0.0, maximum=1.0, value=0.25, step=0.01)
                dimensions = gr.Textbox(label="Image size", interactive=False)
                detections = gr.Textbox(label="Predicted objects", interactive=False)
                stopwatch = gr.Number(label="Execution time (sec.)", interactive=False, precision=3)

        with gr.Column(scale=2):
            output_image = gr.Image(type="pil", elem_classes='image-preview', interactive=False)

    run_button.click(fn=predict_image, inputs=[input_image, threshold], outputs=[output_image, dimensions, detections, stopwatch])
    gr.Examples(
        examples=example_data,
        inputs=[input_image, threshold],
        outputs=[output_image, dimensions, detections, stopwatch],
        fn=predict_image,
        cache_examples=True,
        label='Try these images!'
    )

    gr.Markdown("""
<p>This demo is provided by <a href='https://www.linkedin.com/in/faudi/'>Jeff Faudi</a> and <a href='https://www.dl4eo.com/'>DL4EO</a>.
This model is based on the <a href='https://github.com/open-mmlab/mmrotate'>MMRotate framework</a>, which provides oriented bounding boxes.
We believe that oriented bounding boxes are better suited for detection in satellite images. This model has been trained on the
<a href='https://captain-whu.github.io/DOTA/dataset.html'>DOTA dataset</a>, which contains 15 classes: plane, ship, storage tank,
baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, large vehicle, small vehicle, helicopter,
roundabout, soccer ball field and swimming pool.</p><p>The associated licenses are
<a href='https://about.google/brand-resource-center/products-and-services/geo-guidelines/#google-earth-web-and-apps'>Google Earth fair use</a>
and <a href='https://creativecommons.org/licenses/by-nc-sa/4.0/deed.en'>CC BY-NC-SA</a>. This demonstration CANNOT be used for commercial purposes.
Please contact <a href='mailto:jeff@dl4eo.com'>me</a> for more information on how you could get access to a commercial-grade model or API.</p>
""")

demo.launch(
    inline=False,
    show_api=False,
    debug=False
)
```
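
The app's title and description stress oriented bounding boxes. MMRotate, the framework named in the description, commonly represents a rotated box by its center, width, height and rotation angle. The sketch below converts that five-parameter form into the four corner points you would draw as a polygon, and compares the box area with the upright rectangle that would enclose it. It is an illustration under assumptions (angle in radians, applied with the standard rotation matrix; with image coordinates, where y points down, a positive angle therefore appears clockwise on screen). The helpers `obb_to_corners`, `polygon_area` and `axis_aligned_area` are hypothetical, and this is not the detection format returned by the DL4EO API, which the source does not document.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def obb_to_corners(cx: float, cy: float, w: float, h: float, angle: float) -> List[Point]:
    """Return the 4 corners of a rotated rectangle given as (cx, cy, w, h, angle in radians)."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Corner offsets of the unrotated box, relative to its center
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    corners = []
    for dx, dy in offsets:
        # Rotate each offset, then translate it back to the box center
        x = cx + dx * cos_a - dy * sin_a
        y = cy + dx * sin_a + dy * cos_a
        corners.append((x, y))
    return corners


def polygon_area(points: List[Point]) -> float:
    """Shoelace formula; a rotated box keeps its area w * h."""
    n = len(points)
    s = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def axis_aligned_area(points: List[Point]) -> float:
    """Area of the smallest upright (horizontal) rectangle containing the points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


if __name__ == "__main__":
    # A 40 x 10 elongated object centered at (100, 50), tilted by 45 degrees
    corners = obb_to_corners(100, 50, 40, 10, math.pi / 4)
    print(round(polygon_area(corners), 1))       # 400.0: the oriented box itself
    print(round(axis_aligned_area(corners), 1))  # 1250.0: the upright box around it
```

For this tilted 40 x 10 box, the upright rectangle covers roughly three times the area of the object, so it also includes surrounding water or, in a crowded harbor, parts of neighboring ships. This is one concrete way to read the description's claim that oriented boxes suit satellite imagery better.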

demo/12ab97857.jpg

ADDED

demo/82f13510a.jpg

ADDED

demo/836f35381.jpg

ADDED

demo/848d2afef.jpg

ADDED

demo/911b25478.jpg

ADDED

demo/b86e4046f.jpg

ADDED

demo/ce2220f49.jpg

ADDED

demo/d9762ef5e.jpg

ADDED

demo/fa613751e.jpg

ADDED

requirements.txt

ADDED

```diff
@@ -0,0 +1,3 @@
+opencv-python
+supervision
+requests
```