Spaces:

Runtime error

uploading app and initial commit

- .gitattributes +2 -0

- app.py +118 -0

- examples/Image_105.jpg +0 -0

- examples/Image_18.jpg +0 -0

- examples/Image_28.jpg +0 -0

- examples/Image_4.jpg +3 -0

- examples/Image_58.jpg +0 -0

- examples/Image_67.jpg +0 -0

- examples/Image_89.jpg +3 -0

- models.py +183 -0

- models/mobilenet_v2.pth +3 -0

- models/resnet_18.pth +3 -0

- models/vgg_16.pth +3 -0

- requirements.txt +3 -0

.gitattributes

CHANGED

@@ -32,3 +32,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+examples/Image_4.jpg filter=lfs diff=lfs merge=lfs -text
+examples/Image_89.jpg filter=lfs diff=lfs merge=lfs -text

app.py

ADDED

|

@@ -0,0 +1,118 @@


| 1 |

+

### 1. Imports and class names setup ###

|

| 2 |

+

import gradio as gr

|

| 3 |

+

import os

|

| 4 |

+

import torch

|

| 5 |

+

from torchvision import transforms

|

| 6 |

+

|

| 7 |

+

from models import get_mobilenet_v2_model, get_resnet_18_model, get_vgg_16_model

|

| 8 |

+

from timeit import default_timer as timer

|

| 9 |

+

from typing import Tuple, Dict

|

| 10 |

+

|

| 11 |

+

# Set device

|

| 12 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 13 |

+

|

| 14 |

+

# Setup class names

|

| 15 |

+

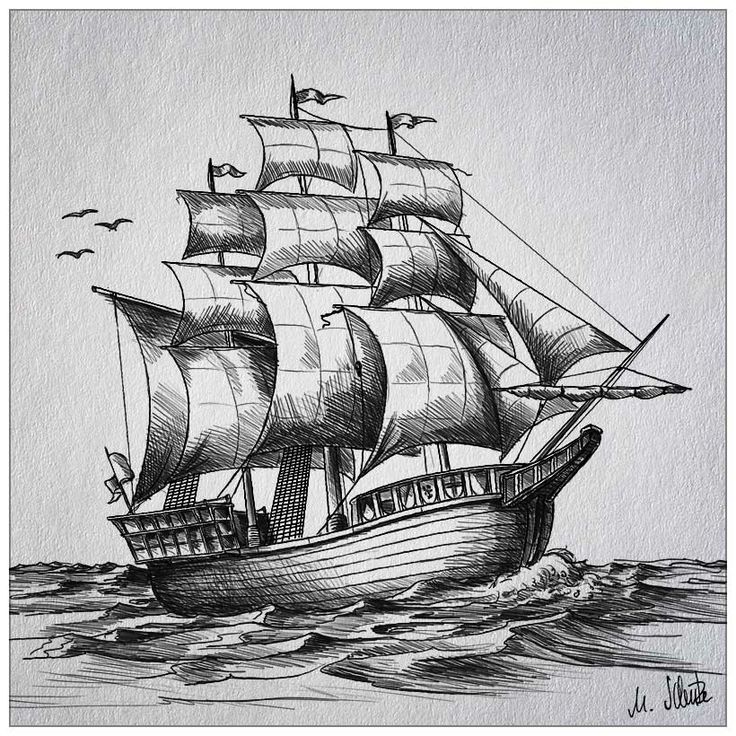

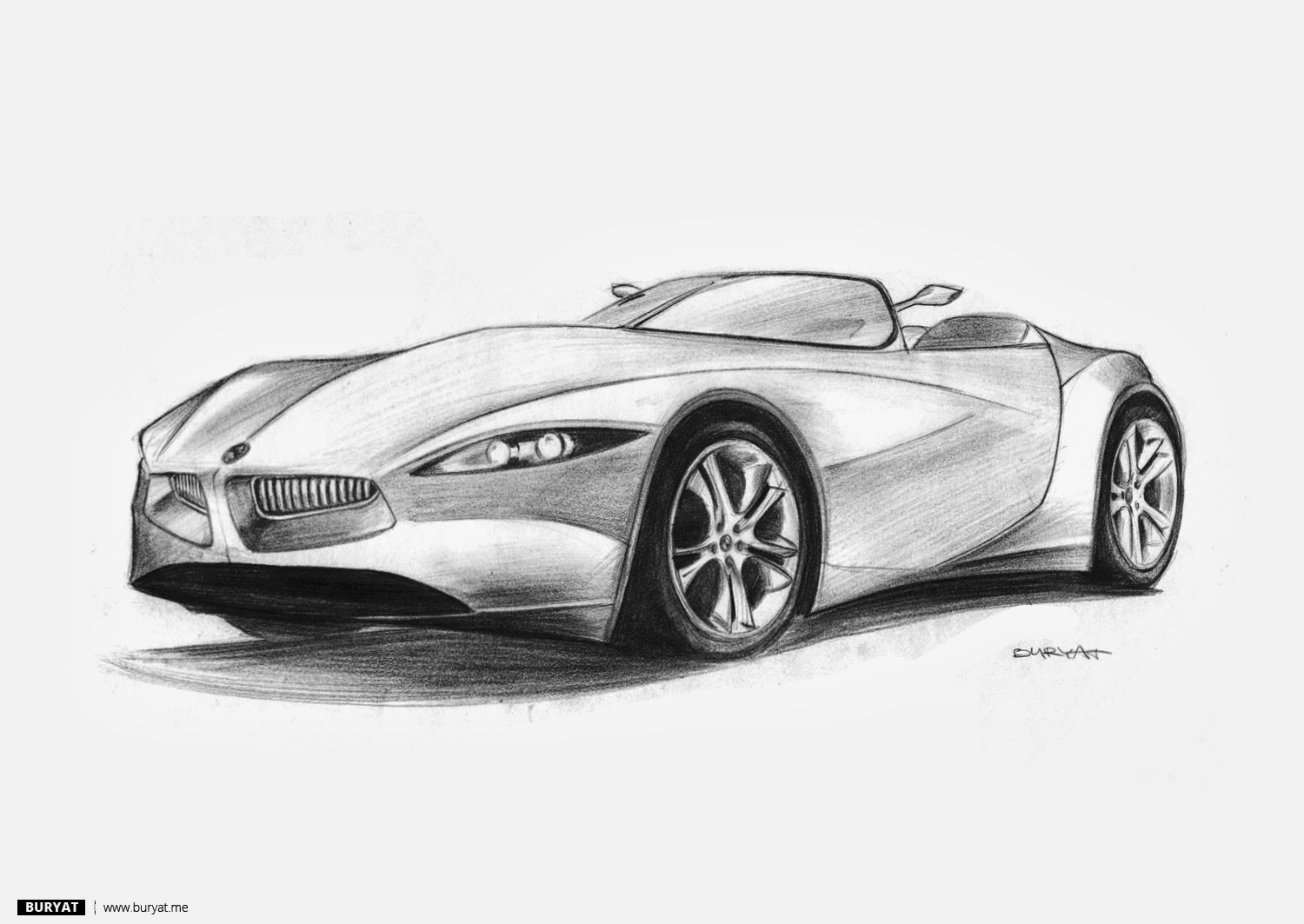

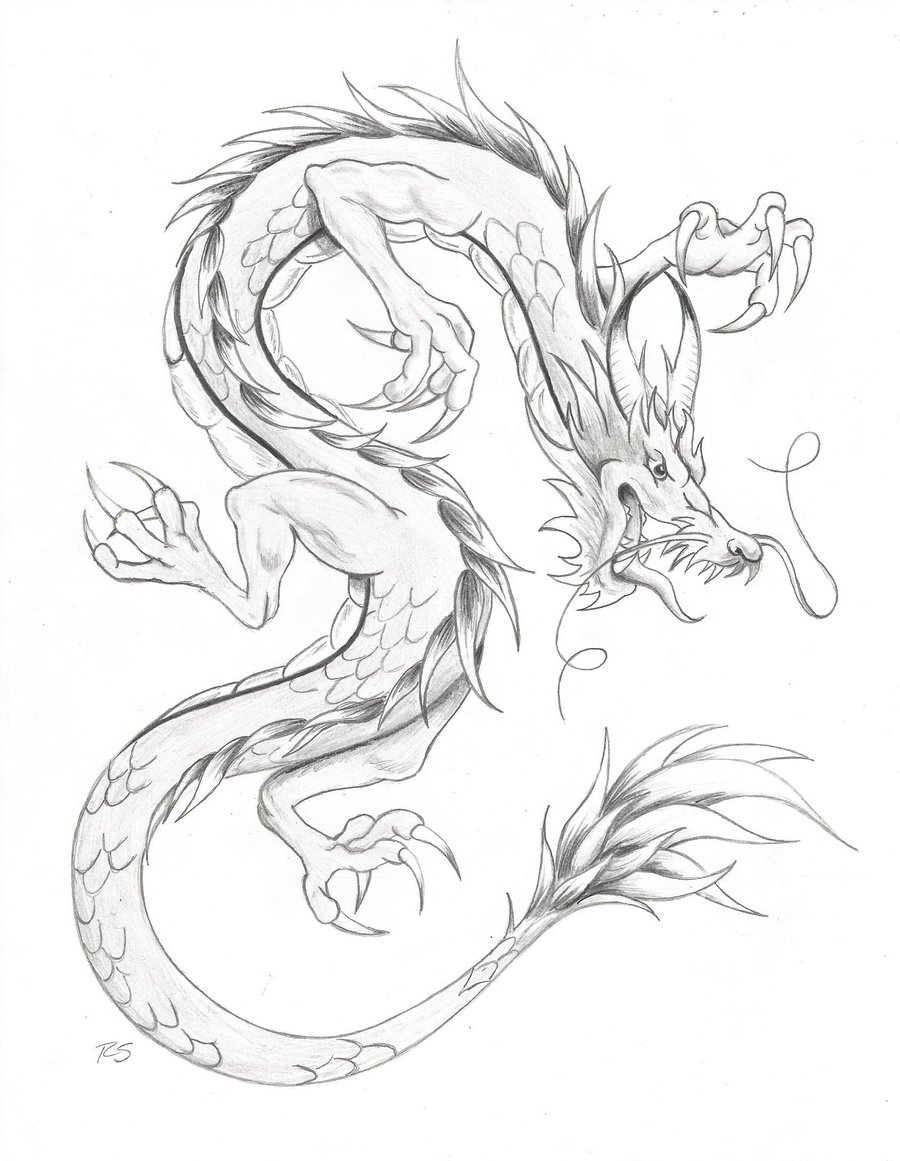

class_names = ["car","dragon","hourse","pegasus","ship","t-rex","tree"]

|

| 16 |

+

|

| 17 |

+

### 2. Model and transforms preparation ###

|

| 18 |

+

|

| 19 |

+

# Create EffNetB2 model

|

| 20 |

+

img_transforms = transforms.Compose(

|

| 21 |

+

[

|

| 22 |

+

transforms.Resize(size=(224, 224)),

|

| 23 |

+

transforms.ToTensor(),

|

| 24 |

+

]

|

| 25 |

+

)

|

| 26 |

+

|

| 27 |

+

model_name_to_fn = {

|

| 28 |

+

"mobilenet_v2": get_mobilenet_v2_model,

|

| 29 |

+

"resnet_18": get_resnet_18_model,

|

| 30 |

+

"vgg_16": get_vgg_16_model,

|

| 31 |

+

}

|

| 32 |

+

model_name_to_path = {

|

| 33 |

+

"mobilenet_v2": "mobilenet_v2.pth",

|

| 34 |

+

"resnet_18": "resnet_18.pth",

|

| 35 |

+

"vgg_16": "vgg_16.pt",

|

| 36 |

+

}

|

| 37 |

+

|

| 38 |

+

### 3. Predict function ###

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

# Create predict function

|

| 42 |

+

def predict(img, model_name: str,) -> Tuple[Dict, float]:

|

| 43 |

+

"""

|

| 44 |

+

Desc: Transforms and performs a prediction on img and returns prediction and time taken.

|

| 45 |

+

Args:

|

| 46 |

+

model_name (str): Name of the model to use for prediction.

|

| 47 |

+

img (PIL.Image): Image to perform prediction on.

|

| 48 |

+

Returns:

|

| 49 |

+

Tuple[Dict, float]: Tuple containing a dictionary of prediction labels and probabilities and the time taken to perform the prediction.

|

| 50 |

+

"""

|

| 51 |

+

# Start the timer

|

| 52 |

+

start_time = timer()

|

| 53 |

+

|

| 54 |

+

# Get the model function based on the model name

|

| 55 |

+

model_fn = model_name_to_fn[model_name]

|

| 56 |

+

model_path = model_name_to_path[model_name]

|

| 57 |

+

|

| 58 |

+

# Create the model and load its weights

|

| 59 |

+

model = model_fn().to(device)

|

| 60 |

+

model.load_state_dict(

|

| 61 |

+

torch.load(f"./models/{model_name}.pth", map_location=torch.device(device=device))

|

| 62 |

+

)

|

| 63 |

+

|

| 64 |

+

# Put model into evaluation mode and turn on inference mode

|

| 65 |

+

model.eval()

|

| 66 |

+

with torch.inference_mode():

|

| 67 |

+

# Transform the target image and add a batch dimension

|

| 68 |

+

img = img_transforms(img).unsqueeze(0).to(device)

|

| 69 |

+

|

| 70 |

+

# Pass the transformed image through the model and turn the prediction logits into prediction probabilities

|

| 71 |

+

pred_probs = torch.softmax(model(img), dim=1)

|

| 72 |

+

|

| 73 |

+

# Create a prediction label and prediction probability dictionary for each prediction class (this is the required format for Gradio's output parameter)

|

| 74 |

+

pred_labels_and_probs = {

|

| 75 |

+

class_names[i]: float(pred_probs[0][i]) for i in range(len(class_names))

|

| 76 |

+

}

|

| 77 |

+

|

| 78 |

+

# Calculate the prediction time

|

| 79 |

+

pred_time = round(timer() - start_time, 5)

|

| 80 |

+

|

| 81 |

+

# Return the prediction dictionary and prediction time

|

| 82 |

+

return pred_labels_and_probs, pred_time

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

### 4. Gradio app ###

|

| 86 |

+

|

| 87 |

+

# Create title, description and article strings

|

| 88 |

+

title = "SketchRec Mini ✍🏻"

|

| 89 |

+

description = "An Mutimodel Sketch Recognition App 🎨"

|

| 90 |

+

article = ""

|

| 91 |

+

|

| 92 |

+

# Create examples list from "examples/" directory

|

| 93 |

+

example_list = [["examples/" + example] for example in os.listdir("examples")]

|

| 94 |

+

|

| 95 |

+

# Create the Gradio demo

|

| 96 |

+

model_selection_dropdown = gr.components.Dropdown(

|

| 97 |

+

choices=list(model_name_to_fn.keys()), label="Select a model",

|

| 98 |

+

value="mobilenet_v2"

|

| 99 |

+

)

|

| 100 |

+

|

| 101 |

+

demo = gr.Interface(

|

| 102 |

+

fn=predict, # mapping function from input to output

|

| 103 |

+

inputs=[gr.Image(type="pil"),model_selection_dropdown], # what are the inputs?

|

| 104 |

+

outputs=[

|

| 105 |

+

gr.Label(num_top_classes=7, label="Predictions"), # what are the outputs?

|

| 106 |

+

gr.Number(label="Prediction time (s)"),

|

| 107 |

+

], # our fn has two outputs, therefore we have two outputs

|

| 108 |

+

# Create examples list from "examples/" directory

|

| 109 |

+

examples=example_list,

|

| 110 |

+

title=title,

|

| 111 |

+

description=description,

|

| 112 |

+

article=article,

|

| 113 |

+

)

|

| 114 |

+

|

| 115 |

+

# Launch the demo!

|

| 116 |

+

demo.launch(

|

| 117 |

+

debug=True,

|

| 118 |

+

)

|
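Note that `predict` in app.py builds the model and reloads its weights on every call. One possible refinement, shown here only as a sketch, is to cache one instance per model name. The names `load_model`, `MODEL_FACTORIES`, `load_counts`, and `_fake_factory` are hypothetical and not part of this commit; the factories are plain-Python stand-ins so the snippet runs without torch.

```python
from functools import lru_cache
from typing import Callable, Dict

# Hypothetical bookkeeping so the caching effect is observable.
load_counts: Dict[str, int] = {}


def _fake_factory(name: str) -> Callable[[], dict]:
    """Stand-in for a real factory such as get_resnet_18_model (assumption)."""
    def build() -> dict:
        load_counts[name] = load_counts.get(name, 0) + 1
        return {"name": name}
    return build


# Hypothetical registry; in the app this role is played by model_name_to_fn.
MODEL_FACTORIES: Dict[str, Callable[[], dict]] = {
    "mobilenet_v2": _fake_factory("mobilenet_v2"),
    "resnet_18": _fake_factory("resnet_18"),
}


@lru_cache(maxsize=None)
def load_model(model_name: str) -> dict:
    """Build a model once per name; later calls return the cached object."""
    if model_name not in MODEL_FACTORIES:
        raise KeyError(f"unknown model: {model_name}")
    return MODEL_FACTORIES[model_name]()


first = load_model("resnet_18")
second = load_model("resnet_18")
print(first is second, load_counts["resnet_18"])
```

In the real app the cached function would also call `load_state_dict` and `eval()` before returning, so that `predict` only runs the forward pass. The trade-off is that every selected model stays in memory.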

examples/Image_105.jpg

ADDED

|

examples/Image_18.jpg

ADDED

|

examples/Image_28.jpg

ADDED

|

examples/Image_4.jpg

ADDED

|

Git LFS Details

|

examples/Image_58.jpg

ADDED

|

examples/Image_67.jpg

ADDED

|

examples/Image_89.jpg

ADDED

|

Git LFS Details

|

models.py

ADDED

|

@@ -0,0 +1,183 @@


+from typing import List
+import torch
+from torch import nn
+import numpy as np
+from torchvision import models
+from torchvision.models import ResNet18_Weights,ResNet50_Weights,VGG16_Weights,MobileNet_V2_Weights
+
+
+class EarlyStopping:
+    def __init__(self, tolerance=5, min_delta=0):
+
+        self.tolerance = tolerance
+        self.min_delta = min_delta
+        self.counter = 0
+        self.early_stop = False
+
+    def __call__(self, train_loss, validation_loss):
+        if (validation_loss - train_loss) > self.min_delta:
+            self.counter +=1
+            if self.counter >= self.tolerance:
+                self.early_stop = True
+
+
+
+class Resnet18(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.resnet = models.resnet18()
+        self.resnet.fc = nn.Linear(512,out_shape)
+
+    def forward(self,x):
+        return self.resnet(x)
+
+
+class PretrainedResnet18(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.resnet = models.resnet18(weights=ResNet18_Weights.DEFAULT)
+
+        # freeze all layers except last fc layer
+        for parms in self.resnet.parameters():
+            parms.requires_grad = False
+
+        self.resnet.fc = nn.Linear(512,out_shape)
+
+    def forward(self,x):
+        return self.resnet(x)
+
+
+class Resnet50(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.resnet = models.resnet50()
+        self.resnet.fc = nn.Linear(2048,out_shape)
+
+    def forward(self,x):
+        return self.resnet(x)
+
+
+class PretrainedResnet50(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.resnet = models.resnet50(weights=ResNet50_Weights.DEFAULT)
+
+        # freeze all layers except last fc layer
+        for parms in self.resnet.parameters():
+            parms.requires_grad = False
+
+        self.resnet.fc = nn.Linear(2048,out_shape)
+
+    def forward(self,x):
+        return self.resnet(x)
+
+
+class EfficentNetB0(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.effnet = models.efficientnet_b0()
+        self.effnet.classifier = nn.Linear(1280,out_shape)
+
+    def forward(self,x):
+        return self.effnet(x)
+
+
+class MobileNetV2(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.mobilenet = models.mobilenet_v2()
+        self.mobilenet.classifier[1] = nn.Linear(1280,out_shape)
+
+    def forward(self,x):
+        return self.mobilenet(x)
+
+class PretrainedMobileNetV2(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.mobilenet = models.mobilenet_v2(weights=MobileNet_V2_Weights.DEFAULT)
+
+        # freeze all layers except last fc layer
+        for parms in self.mobilenet.parameters():
+            parms.requires_grad = False
+
+        self.mobilenet.classifier = nn.Sequential(
+            nn.Dropout(p=0.2, inplace=False),
+            nn.Linear(in_features=1280, out_features=out_shape, bias=True)
+        )
+
+    def forward(self,x):
+        return self.mobilenet(x)
+
+
+class VGG16(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.vgg = models.vgg16()
+        self.vgg.classifier = nn.Sequential(
+            nn.Linear(in_features=25088, out_features=4096, bias=True),
+            nn.ReLU(inplace=True),
+            nn.Dropout(p=0.5, inplace=False),
+            nn.Linear(in_features=4096, out_features=4096, bias=True),
+            nn.ReLU(inplace=True),
+            nn.Dropout(p=0.5, inplace=False),
+            nn.Linear(in_features=4096, out_features=out_shape, bias=True),
+        )
+
+    def forward(self,x):
+        return self.vgg(x)
+
+
+class PretrainedVGG16(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.vgg = models.vgg16(weights=VGG16_Weights.DEFAULT)
+
+        # freeze all layers except last clf layer
+        for parms in self.vgg.parameters():
+            parms.requires_grad = False
+
+        self.vgg.classifier = nn.Sequential(
+            nn.Linear(in_features=25088, out_features=4096, bias=True),
+            nn.ReLU(inplace=True),
+            nn.Dropout(p=0.5, inplace=False),
+            nn.Linear(in_features=4096, out_features=4096, bias=True),
+            nn.ReLU(inplace=True),
+            nn.Dropout(p=0.5, inplace=False),
+            nn.Linear(in_features=4096, out_features=out_shape, bias=True),
+        )
+
+    def forward(self,x):
+        return self.vgg(x)
+
+
+
+class VIT(nn.Module):
+    def __init__(self,out_shape:int = 1000) -> None:
+        super().__init__()
+        self.vit = models.vit_b_16()
+        self.vit.heads.head = nn.Linear(768,out_shape)
+
+    def forward(self,x):
+        return self.vit(x)
+
+
+
+# functions to get models
+def get_resnet_18_model():
+    model = Resnet18(out_shape=7)
+    return model
+
+
+def get_resnet_50_model():
+    model = Resnet50(out_shape=7)
+    return model
+
+
+def get_vgg_16_model():
+    model = VGG16(out_shape=7)
+    return model
+
+
+def get_mobilenet_v2_model():
+    model = MobileNetV2(out_shape=7)
+    return model
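The `EarlyStopping` class in models.py counts epochs in which the gap between validation and training loss exceeds `min_delta`, and its counter never resets. For comparison, here is a sketch of a different and widely used variant that tracks the best validation loss and resets on improvement. `PatienceEarlyStopping` and its `patience` parameter are hypothetical names and are not part of this commit.

```python
class PatienceEarlyStopping:
    """Stop when validation loss has not improved for `patience` epochs.

    This is an alternative to the gap-based EarlyStopping in models.py,
    not a description of how that class behaves.
    """

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.counter = 0
        self.early_stop = False

    def __call__(self, validation_loss: float) -> bool:
        if validation_loss < self.best_loss - self.min_delta:
            # Improvement: remember it and reset the patience counter.
            self.best_loss = validation_loss
            self.counter = 0
        else:
            # No improvement: count the epoch and stop once patience runs out.
            self.counter += 1
            if self.counter >= self.patience:
                self.early_stop = True
        return self.early_stop


stopper = PatienceEarlyStopping(patience=2)
history = [stopper(loss) for loss in [1.0, 0.8, 0.9, 0.85]]
print(history)
```

With `patience=2`, the two epochs after the best loss of 0.8 bring no improvement, so the final call reports that training should stop.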

models/mobilenet_v2.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7597e7b6ba1183b33aca66ebf39eb73359bbc568b7293e2e62bc52cdad3f48e7
+size 9263285

models/resnet_18.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ce03f8579e35fd33405cf5ac1b30fe62f87a8b47dc8c635fdaa500398bd915b2
+size 44822093

models/vgg_16.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4c5f6a03dbaaa82674d76eaf0fd7fb831633d2929a25e15040ca3f05d4761f1b
+size 537176517
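The three `.pth` entries in this diff are Git LFS pointer files (a `version` line, an `oid`, and a `size`), not the weights themselves. A checkout made without LFS would leave these small text files on disk where the weights are expected. The sketch below shows one way to recognise such a pointer before trying to load it. `parse_lfs_pointer` and `LFS_SPEC_PREFIX` are hypothetical names, and nothing here claims this is the cause of the Space's runtime error.

```python
from typing import Dict, Optional

# Prefix of the first line of an LFS pointer, as seen in the diff above.
LFS_SPEC_PREFIX = "version https://git-lfs.github.com/spec/"


def parse_lfs_pointer(text: str) -> Optional[Dict[str, str]]:
    """Return the pointer's fields, or None if `text` is not an LFS pointer."""
    if not text.startswith(LFS_SPEC_PREFIX):
        return None
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        # Each pointer line has the form "<key> <value>".
        key, sep, value = line.partition(" ")
        if sep:
            fields[key] = value
    if "oid" not in fields or "size" not in fields:
        return None
    return fields


pointer_text = (
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:4c5f6a03dbaaa82674d76eaf0fd7fb831633d2929a25e15040ca3f05d4761f1b\n"
    "size 537176517\n"
)
info = parse_lfs_pointer(pointer_text)
print(info["size"] if info else "not a pointer")
```

A caller could read the first few hundred bytes of a weights file as text and skip `torch.load` with a clear message when the result is not `None`.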

requirements.txt

ADDED

@@ -0,0 +1,3 @@
+torch
+torchvision
+gradio