Upload 146 files

This view is limited to 50 files because it contains too many changes.

- Dockerfile +41 -0

- LICENSE +1347 -0

- README.md +231 -12

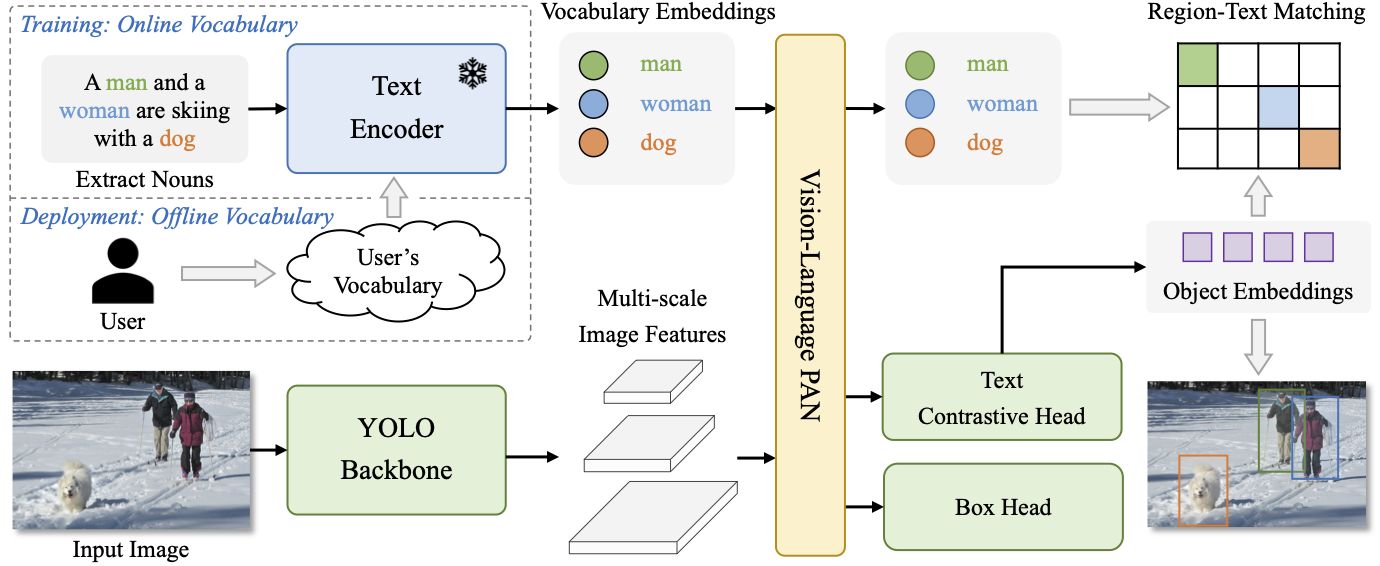

- assets/yolo_arch.png +0 -0

- assets/yolo_logo.png +0 -0

- configs/finetune_coco/README.md +26 -0

- configs/finetune_coco/yolo_world_l_dual_vlpan_2e-4_80e_8gpus_finetune_coco.py +179 -0

- configs/finetune_coco/yolo_world_l_dual_vlpan_2e-4_80e_8gpus_mask-refine_finetune_coco.py +181 -0

- configs/finetune_coco/yolo_world_l_efficient_neck_2e-4_80e_8gpus_mask-refine_finetune_coco.py +159 -0

- configs/finetune_coco/yolo_world_v2_l_efficient_neck_2e-4_80e_8gpus_mask-refine_finetune_coco.py +182 -0

- configs/finetune_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_mask-refine_finetune_coco.py +181 -0

- configs/finetune_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_40e_8gpus_finetune_coco.py +160 -0

- configs/finetune_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_80e_8gpus_mask-refine_finetune_coco.py +161 -0

- configs/finetune_coco/yolo_world_v2_m_vlpan_bn_2e-4_80e_8gpus_mask-refine_finetune_coco.py +182 -0

- configs/finetune_coco/yolo_world_v2_s_vlpan_bn_2e-4_80e_8gpus_mask-refine_finetune_coco.py +184 -0

- configs/finetune_coco/yolo_world_v2_x_vlpan_bn_2e-4_80e_8gpus_mask-refine_finetune_coco.py +183 -0

- configs/finetune_coco/yolo_world_v2_xl_vlpan_bn_2e-4_80e_8gpus_mask-refine_finetune_coco.py +173 -0

- configs/pretrain/yolo_world_v2_l_clip_large_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_800ft_lvis_minival.py +200 -0

- configs/pretrain/yolo_world_v2_l_clip_large_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +171 -0

- configs/pretrain/yolo_world_v2_l_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_1280ft_lvis_minival.py +202 -0

- configs/pretrain/yolo_world_v2_l_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +171 -0

- configs/pretrain/yolo_world_v2_l_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_val.py +171 -0

- configs/pretrain/yolo_world_v2_m_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_1280ft_lvis_minival.py +198 -0

- configs/pretrain/yolo_world_v2_m_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +171 -0

- configs/pretrain/yolo_world_v2_m_vlpan_bn_noeinsum_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +176 -0

- configs/pretrain/yolo_world_v2_s_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_1280ft_lvis_minival.py +195 -0

- configs/pretrain/yolo_world_v2_s_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +170 -0

- configs/pretrain/yolo_world_v2_x_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +171 -0

- configs/pretrain/yolo_world_v2_xl_vlpan_bn_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +185 -0

- configs/pretrain_v1/README.md +21 -0

- configs/pretrain_v1/yolo_world_l_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +172 -0

- configs/pretrain_v1/yolo_world_l_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_val.py +172 -0

- configs/pretrain_v1/yolo_world_m_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +172 -0

- configs/pretrain_v1/yolo_world_s_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +172 -0

- configs/pretrain_v1/yolo_world_x_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +172 -0

- configs/prompt_tuning_coco/READEME.md +12 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_all_fine_tuning_coco.py +118 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_all_fine_tuning_rmdecay_rmmosaic_coco.py +114 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_mask-refine_all_fine_tuning_coco.py +156 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_mask-refine_fine_prompt_tuning_coco.py +156 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_2e-4_80e_8gpus_mask-refine_prompt_tuning_coco.py +161 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_20e_8gpus_all_fine_tuning_rmdecay_coco.py +113 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_40e_8gpus_all_fine_tuning_rmdecay_coco.py +111 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_80e_8gpus_all_fine_tuning_coco.py +109 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_80e_8gpus_all_fine_tuning_rmdecay_coco.py +113 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-3_80e_8gpus_all_fine_tuning_rmdecay_coco_fixed.py +111 -0

- configs/prompt_tuning_coco/yolo_world_v2_l_vlpan_bn_sgd_1e-4_80e_8gpus_all_fine_tuning_coco.py +109 -0

- configs/segmentation/README.md +27 -0

- configs/segmentation/yolo_world_seg_l_dual_vlpan_2e-4_80e_8gpus_allmodules_finetune_lvis.py +227 -0

- configs/segmentation/yolo_world_seg_l_dual_vlpan_2e-4_80e_8gpus_seghead_finetune_lvis.py +237 -0

Dockerfile

ADDED

@@ -0,0 +1,41 @@

+FROM nvidia/cuda:11.8.0-devel-ubuntu22.04
+
+ARG MODEL="yolo_world_l_dual_vlpan_l2norm_2e-3_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py"
+ARG WEIGHT="yolo_world_l_clip_base_dual_vlpan_2e-3adamw_32xb16_100e_o365_goldg_train_pretrained-0e566235.pth"
+
+ENV FORCE_CUDA="1"
+ENV MMCV_WITH_OPS=1
+
+RUN apt-get update && apt-get install -y --no-install-recommends \
+    python3-pip \
+    libgl1-mesa-glx \
+    libsm6 \
+    libxext6 \
+    libxrender-dev \
+    libglib2.0-0 \
+    git \
+    python3-dev \
+    python3-wheel
+
+RUN pip3 install --upgrade pip \
+    && pip3 install \
+    gradio \
+    opencv-python \
+    supervision \
+    mmengine \
+    setuptools \
+    && pip3 install --no-cache-dir --index-url https://download.pytorch.org/whl/cu118 \
+    wheel \
+    torch \
+    torchvision \
+    torchaudio
+
+COPY . /yolo
+WORKDIR /yolo
+
+RUN pip3 install -e .
+
+RUN curl -o weights/$WEIGHT -L https://huggingface.co/wondervictor/YOLO-World/resolve/main/$WEIGHT
+
+ENTRYPOINT [ "python3", "demo.py" ]
+CMD ["configs/pretrain/$MODEL", "weights/$WEIGHT"]
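
A few details in the Dockerfile above may be worth a second look. `curl` is not in the `apt-get install` list, and nothing in the file guarantees that `weights/` exists when the download runs. Docker does not expand `$MODEL` or `$WEIGHT` inside an exec-form (JSON array) `CMD`, and `ARG` values are not carried into the running container. The default `MODEL` config also appears under `configs/pretrain_v1/` in this commit's file list rather than `configs/pretrain/`. The fragment below is a minimal sketch of how Dockerfile lines 38-41 could be reworked under those assumptions. It is not the repository's actual fix; the `sh -c` entrypoint, the extra `apt-get` step, and the `pretrain_v1` path are all assumptions.

```dockerfile
# Sketch only: a possible replacement for Dockerfile lines 38-41 above.
# MODEL and WEIGHT are the ARGs already declared on lines 3-4.

# ARG values exist only during the build, so copy them into ENV
# to make them visible when the container starts.
ENV MODEL=${MODEL} \
    WEIGHT=${WEIGHT}

# Assumption: curl is not available in the image, so install it explicitly.
RUN apt-get update && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# --create-dirs lets curl create weights/ if the directory does not exist yet.
RUN curl --create-dirs -L -o "weights/${WEIGHT}" \
    "https://huggingface.co/wondervictor/YOLO-World/resolve/main/${WEIGHT}"

# Run through a shell so ${MODEL} and ${WEIGHT} are expanded at start-up.
# Assumption: the default config lives under configs/pretrain_v1/.
ENTRYPOINT ["/bin/sh", "-c", "exec python3 demo.py \"configs/pretrain_v1/${MODEL}\" \"weights/${WEIGHT}\""]
```

With this shape, a different config from the same directory could be chosen at run time with `docker run -e MODEL=<config>.py`. The trade-off of the `sh -c` form is that extra arguments appended to `docker run` are no longer forwarded to `demo.py`.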

LICENSE

ADDED

@@ -0,0 +1,1347 @@

| 1 |

+

GNU GENERAL PUBLIC LICENSE

|

| 2 |

+

Version 3, 29 June 2007

|

| 3 |

+

|

| 4 |

+

Copyright (C) 2007 Free Software Foundation, Inc. <https://fsf.org/>

|

| 5 |

+

Everyone is permitted to copy and distribute verbatim copies

|

| 6 |

+

of this license document, but changing it is not allowed.

|

| 7 |

+

|

| 8 |

+

Preamble

|

| 9 |

+

|

| 10 |

+

The GNU General Public License is a free, copyleft license for

|

| 11 |

+

software and other kinds of works.

|

| 12 |

+

|

| 13 |

+

The licenses for most software and other practical works are designed

|

| 14 |

+

to take away your freedom to share and change the works. By contrast,

|

| 15 |

+

the GNU General Public License is intended to guarantee your freedom to

|

| 16 |

+

share and change all versions of a program--to make sure it remains free

|

| 17 |

+

software for all its users. We, the Free Software Foundation, use the

|

| 18 |

+

GNU General Public License for most of our software; it applies also to

|

| 19 |

+

any other work released this way by its authors. You can apply it to

|

| 20 |

+

your programs, too.

|

| 21 |

+

|

| 22 |

+

When we speak of free software, we are referring to freedom, not

|

| 23 |

+

price. Our General Public Licenses are designed to make sure that you

|

| 24 |

+

have the freedom to distribute copies of free software (and charge for

|

| 25 |

+

them if you wish), that you receive source code or can get it if you

|

| 26 |

+

want it, that you can change the software or use pieces of it in new

|

| 27 |

+

free programs, and that you know you can do these things.

|

| 28 |

+

|

| 29 |

+

To protect your rights, we need to prevent others from denying you

|

| 30 |

+

these rights or asking you to surrender the rights. Therefore, you have

|

| 31 |

+

certain responsibilities if you distribute copies of the software, or if

|

| 32 |

+

you modify it: responsibilities to respect the freedom of others.

|

| 33 |

+

|

| 34 |

+

For example, if you distribute copies of such a program, whether

|

| 35 |

+

gratis or for a fee, you must pass on to the recipients the same

|

| 36 |

+

freedoms that you received. You must make sure that they, too, receive

|

| 37 |

+

or can get the source code. And you must show them these terms so they

|

| 38 |

+

know their rights.

|

| 39 |

+

|

| 40 |

+

Developers that use the GNU GPL protect your rights with two steps:

|

| 41 |

+

(1) assert copyright on the software, and (2) offer you this License

|

| 42 |

+

giving you legal permission to copy, distribute and/or modify it.

|

| 43 |

+

|

| 44 |

+

For the developers' and authors' protection, the GPL clearly explains

|

| 45 |

+

that there is no warranty for this free software. For both users' and

|

| 46 |

+

authors' sake, the GPL requires that modified versions be marked as

|

| 47 |

+

changed, so that their problems will not be attributed erroneously to

|

| 48 |

+

authors of previous versions.

|

| 49 |

+

|

| 50 |

+

Some devices are designed to deny users access to install or run

|

| 51 |

+

modified versions of the software inside them, although the manufacturer

|

| 52 |

+

can do so. This is fundamentally incompatible with the aim of

|

| 53 |

+

protecting users' freedom to change the software. The systematic

|

| 54 |

+

pattern of such abuse occurs in the area of products for individuals to

|

| 55 |

+

use, which is precisely where it is most unacceptable. Therefore, we

|

| 56 |

+

have designed this version of the GPL to prohibit the practice for those

|

| 57 |

+

products. If such problems arise substantially in other domains, we

|

| 58 |

+

stand ready to extend this provision to those domains in future versions

|

| 59 |

+

of the GPL, as needed to protect the freedom of users.

|

| 60 |

+

|

| 61 |

+

Finally, every program is threatened constantly by software patents.

|

| 62 |

+

States should not allow patents to restrict development and use of

|

| 63 |

+

software on general-purpose computers, but in those that do, we wish to

|

| 64 |

+

avoid the special danger that patents applied to a free program could

|

| 65 |

+

make it effectively proprietary. To prevent this, the GPL assures that

|

| 66 |

+

patents cannot be used to render the program non-free.

|

| 67 |

+

|

| 68 |

+

The precise terms and conditions for copying, distribution and

|

| 69 |

+

modification follow.

|

| 70 |

+

|

| 71 |

+

TERMS AND CONDITIONS

|

| 72 |

+

|

| 73 |

+

0. Definitions.

|

| 74 |

+

|

| 75 |

+

"This License" refers to version 3 of the GNU General Public License.

|

| 76 |

+

|

| 77 |

+

"Copyright" also means copyright-like laws that apply to other kinds of

|

| 78 |

+

works, such as semiconductor masks.

|

| 79 |

+

|

| 80 |

+

"The Program" refers to any copyrightable work licensed under this

|

| 81 |

+

License. Each licensee is addressed as "you". "Licensees" and

|

| 82 |

+

"recipients" may be individuals or organizations.

|

| 83 |

+

|

| 84 |

+

To "modify" a work means to copy from or adapt all or part of the work

|

| 85 |

+

in a fashion requiring copyright permission, other than the making of an

|

| 86 |

+

exact copy. The resulting work is called a "modified version" of the

|

| 87 |

+

earlier work or a work "based on" the earlier work.

|

| 88 |

+

|

| 89 |

+

A "covered work" means either the unmodified Program or a work based

|

| 90 |

+

on the Program.

|

| 91 |

+

|

| 92 |

+

To "propagate" a work means to do anything with it that, without

|

| 93 |

+

permission, would make you directly or secondarily liable for

|

| 94 |

+

infringement under applicable copyright law, except executing it on a

|

| 95 |

+

computer or modifying a private copy. Propagation includes copying,

|

| 96 |

+

distribution (with or without modification), making available to the

|

| 97 |

+

public, and in some countries other activities as well.

|

| 98 |

+

|

| 99 |

+

To "convey" a work means any kind of propagation that enables other

|

| 100 |

+

parties to make or receive copies. Mere interaction with a user through

|

| 101 |

+

a computer network, with no transfer of a copy, is not conveying.

|

| 102 |

+

|

| 103 |

+

An interactive user interface displays "Appropriate Legal Notices"

|

| 104 |

+

to the extent that it includes a convenient and prominently visible

|

| 105 |

+

feature that (1) displays an appropriate copyright notice, and (2)

|

| 106 |

+

tells the user that there is no warranty for the work (except to the

|

| 107 |

+

extent that warranties are provided), that licensees may convey the

|

| 108 |

+

work under this License, and how to view a copy of this License. If

|

| 109 |

+

the interface presents a list of user commands or options, such as a

|

| 110 |

+

menu, a prominent item in the list meets this criterion.

|

| 111 |

+

|

| 112 |

+

1. Source Code.

|

| 113 |

+

|

| 114 |

+

The "source code" for a work means the preferred form of the work

|

| 115 |

+

for making modifications to it. "Object code" means any non-source

|

| 116 |

+

form of a work.

|

| 117 |

+

|

| 118 |

+

A "Standard Interface" means an interface that either is an official

|

| 119 |

+

standard defined by a recognized standards body, or, in the case of

|

| 120 |

+

interfaces specified for a particular programming language, one that

|

| 121 |

+

is widely used among developers working in that language.

|

| 122 |

+

|

| 123 |

+

The "System Libraries" of an executable work include anything, other

|

| 124 |

+

than the work as a whole, that (a) is included in the normal form of

|

| 125 |

+

packaging a Major Component, but which is not part of that Major

|

| 126 |

+

Component, and (b) serves only to enable use of the work with that

|

| 127 |

+

Major Component, or to implement a Standard Interface for which an

|

| 128 |

+

implementation is available to the public in source code form. A

|

| 129 |

+

"Major Component", in this context, means a major essential component

|

| 130 |

+

(kernel, window system, and so on) of the specific operating system

|

| 131 |

+

(if any) on which the executable work runs, or a compiler used to

|

| 132 |

+

produce the work, or an object code interpreter used to run it.

|

| 133 |

+

|

| 134 |

+

The "Corresponding Source" for a work in object code form means all

|

| 135 |

+

the source code needed to generate, install, and (for an executable

|

| 136 |

+

work) run the object code and to modify the work, including scripts to

|

| 137 |

+

control those activities. However, it does not include the work's

|

| 138 |

+

System Libraries, or general-purpose tools or generally available free

|

| 139 |

+

programs which are used unmodified in performing those activities but

|

| 140 |

+

which are not part of the work. For example, Corresponding Source

|

| 141 |

+

includes interface definition files associated with source files for

|

| 142 |

+

the work, and the source code for shared libraries and dynamically

|

| 143 |

+

linked subprograms that the work is specifically designed to require,

|

| 144 |

+

such as by intimate data communication or control flow between those

|

| 145 |

+

subprograms and other parts of the work.

|

| 146 |

+

|

| 147 |

+

The Corresponding Source need not include anything that users

|

| 148 |

+

can regenerate automatically from other parts of the Corresponding

|

| 149 |

+

Source.

|

| 150 |

+

|

| 151 |

+

The Corresponding Source for a work in source code form is that

|

| 152 |

+

same work.

|

| 153 |

+

|

| 154 |

+

2. Basic Permissions.

|

| 155 |

+

|

| 156 |

+

All rights granted under this License are granted for the term of

|

| 157 |

+

copyright on the Program, and are irrevocable provided the stated

|

| 158 |

+

conditions are met. This License explicitly affirms your unlimited

|

| 159 |

+

permission to run the unmodified Program. The output from running a

|

| 160 |

+

covered work is covered by this License only if the output, given its

|

| 161 |

+

content, constitutes a covered work. This License acknowledges your

|

| 162 |

+

rights of fair use or other equivalent, as provided by copyright law.

|

| 163 |

+

|

| 164 |

+

You may make, run and propagate covered works that you do not

|

| 165 |

+

convey, without conditions so long as your license otherwise remains

|

| 166 |

+

in force. You may convey covered works to others for the sole purpose

|

| 167 |

+

of having them make modifications exclusively for you, or provide you

|

| 168 |

+

with facilities for running those works, provided that you comply with

|

| 169 |

+

the terms of this License in conveying all material for which you do

|

| 170 |

+

not control copyright. Those thus making or running the covered works

|

| 171 |

+

for you must do so exclusively on your behalf, under your direction

|

| 172 |

+

and control, on terms that prohibit them from making any copies of

|

| 173 |

+

your copyrighted material outside their relationship with you.

|

| 174 |

+

|

| 175 |

+

Conveying under any other circumstances is permitted solely under

|

| 176 |

+

the conditions stated below. Sublicensing is not allowed; section 10

|

| 177 |

+

makes it unnecessary.

|

| 178 |

+

|

| 179 |

+

3. Protecting Users' Legal Rights From Anti-Circumvention Law.

|

| 180 |

+

|

| 181 |

+

No covered work shall be deemed part of an effective technological

|

| 182 |

+

measure under any applicable law fulfilling obligations under article

|

| 183 |

+

11 of the WIPO copyright treaty adopted on 20 December 1996, or

|

| 184 |

+

similar laws prohibiting or restricting circumvention of such

|

| 185 |

+

measures.

|

| 186 |

+

|

| 187 |

+

When you convey a covered work, you waive any legal power to forbid

|

| 188 |

+

circumvention of technological measures to the extent such circumvention

|

| 189 |

+

is effected by exercising rights under this License with respect to

|

| 190 |

+

the covered work, and you disclaim any intention to limit operation or

|

| 191 |

+

modification of the work as a means of enforcing, against the work's

|

| 192 |

+

users, your or third parties' legal rights to forbid circumvention of

|

| 193 |

+

technological measures.

|

| 194 |

+

|

| 195 |

+

4. Conveying Verbatim Copies.

You may convey verbatim copies of the Program's source code as you
receive it, in any medium, provided that you conspicuously and
appropriately publish on each copy an appropriate copyright notice;
keep intact all notices stating that this License and any
non-permissive terms added in accord with section 7 apply to the code;
keep intact all notices of the absence of any warranty; and give all
recipients a copy of this License along with the Program.

You may charge any price or no price for each copy that you convey,
and you may offer support or warranty protection for a fee.

5. Conveying Modified Source Versions.

You may convey a work based on the Program, or the modifications to
produce it from the Program, in the form of source code under the
terms of section 4, provided that you also meet all of these conditions:

a) The work must carry prominent notices stating that you modified
it, and giving a relevant date.

b) The work must carry prominent notices stating that it is
released under this License and any conditions added under section
7. This requirement modifies the requirement in section 4 to
"keep intact all notices".

c) You must license the entire work, as a whole, under this
License to anyone who comes into possession of a copy. This
License will therefore apply, along with any applicable section 7
additional terms, to the whole of the work, and all its parts,
regardless of how they are packaged. This License gives no
permission to license the work in any other way, but it does not
invalidate such permission if you have separately received it.

d) If the work has interactive user interfaces, each must display
Appropriate Legal Notices; however, if the Program has interactive
interfaces that do not display Appropriate Legal Notices, your
work need not make them do so.

A compilation of a covered work with other separate and independent
works, which are not by their nature extensions of the covered work,
and which are not combined with it such as to form a larger program,
in or on a volume of a storage or distribution medium, is called an
"aggregate" if the compilation and its resulting copyright are not
used to limit the access or legal rights of the compilation's users
beyond what the individual works permit. Inclusion of a covered work
in an aggregate does not cause this License to apply to the other
parts of the aggregate.

6. Conveying Non-Source Forms.

You may convey a covered work in object code form under the terms
of sections 4 and 5, provided that you also convey the
machine-readable Corresponding Source under the terms of this License,
in one of these ways:

a) Convey the object code in, or embodied in, a physical product
(including a physical distribution medium), accompanied by the
Corresponding Source fixed on a durable physical medium
customarily used for software interchange.

b) Convey the object code in, or embodied in, a physical product
(including a physical distribution medium), accompanied by a
written offer, valid for at least three years and valid for as
long as you offer spare parts or customer support for that product
model, to give anyone who possesses the object code either (1) a
copy of the Corresponding Source for all the software in the
product that is covered by this License, on a durable physical
medium customarily used for software interchange, for a price no
more than your reasonable cost of physically performing this
conveying of source, or (2) access to copy the
Corresponding Source from a network server at no charge.

c) Convey individual copies of the object code with a copy of the
written offer to provide the Corresponding Source. This
alternative is allowed only occasionally and noncommercially, and
only if you received the object code with such an offer, in accord
with subsection 6b.

d) Convey the object code by offering access from a designated
place (gratis or for a charge), and offer equivalent access to the
Corresponding Source in the same way through the same place at no
further charge. You need not require recipients to copy the
Corresponding Source along with the object code. If the place to
copy the object code is a network server, the Corresponding Source
may be on a different server (operated by you or a third party)
that supports equivalent copying facilities, provided you maintain
clear directions next to the object code saying where to find the
Corresponding Source. Regardless of what server hosts the
Corresponding Source, you remain obligated to ensure that it is
available for as long as needed to satisfy these requirements.

e) Convey the object code using peer-to-peer transmission, provided
you inform other peers where the object code and Corresponding
Source of the work are being offered to the general public at no
charge under subsection 6d.

A separable portion of the object code, whose source code is excluded
from the Corresponding Source as a System Library, need not be
included in conveying the object code work.

A "User Product" is either (1) a "consumer product", which means any
tangible personal property which is normally used for personal, family,
or household purposes, or (2) anything designed or sold for incorporation
into a dwelling. In determining whether a product is a consumer product,
doubtful cases shall be resolved in favor of coverage. For a particular
product received by a particular user, "normally used" refers to a
typical or common use of that class of product, regardless of the status
of the particular user or of the way in which the particular user
actually uses, or expects or is expected to use, the product. A product
is a consumer product regardless of whether the product has substantial
commercial, industrial or non-consumer uses, unless such uses represent
the only significant mode of use of the product.

"Installation Information" for a User Product means any methods,
procedures, authorization keys, or other information required to install
and execute modified versions of a covered work in that User Product from
a modified version of its Corresponding Source. The information must
suffice to ensure that the continued functioning of the modified object
code is in no case prevented or interfered with solely because
modification has been made.

If you convey an object code work under this section in, or with, or
specifically for use in, a User Product, and the conveying occurs as
part of a transaction in which the right of possession and use of the
User Product is transferred to the recipient in perpetuity or for a
fixed term (regardless of how the transaction is characterized), the
Corresponding Source conveyed under this section must be accompanied
by the Installation Information. But this requirement does not apply
if neither you nor any third party retains the ability to install
modified object code on the User Product (for example, the work has
been installed in ROM).

The requirement to provide Installation Information does not include a
requirement to continue to provide support service, warranty, or updates
for a work that has been modified or installed by the recipient, or for
the User Product in which it has been modified or installed. Access to a
network may be denied when the modification itself materially and
adversely affects the operation of the network or violates the rules and
protocols for communication across the network.

Corresponding Source conveyed, and Installation Information provided,
in accord with this section must be in a format that is publicly
documented (and with an implementation available to the public in
source code form), and must require no special password or key for
unpacking, reading or copying.

7. Additional Terms.

"Additional permissions" are terms that supplement the terms of this
License by making exceptions from one or more of its conditions.
Additional permissions that are applicable to the entire Program shall
be treated as though they were included in this License, to the extent
that they are valid under applicable law. If additional permissions
apply only to part of the Program, that part may be used separately
under those permissions, but the entire Program remains governed by
this License without regard to the additional permissions.

When you convey a copy of a covered work, you may at your option
remove any additional permissions from that copy, or from any part of
it. (Additional permissions may be written to require their own
removal in certain cases when you modify the work.) You may place
additional permissions on material, added by you to a covered work,
for which you have or can give appropriate copyright permission.

Notwithstanding any other provision of this License, for material you
add to a covered work, you may (if authorized by the copyright holders of
that material) supplement the terms of this License with terms:

a) Disclaiming warranty or limiting liability differently from the
terms of sections 15 and 16 of this License; or

b) Requiring preservation of specified reasonable legal notices or
author attributions in that material or in the Appropriate Legal
Notices displayed by works containing it; or

c) Prohibiting misrepresentation of the origin of that material, or
requiring that modified versions of such material be marked in
reasonable ways as different from the original version; or

d) Limiting the use for publicity purposes of names of licensors or
authors of the material; or

e) Declining to grant rights under trademark law for use of some
trade names, trademarks, or service marks; or

f) Requiring indemnification of licensors and authors of that
material by anyone who conveys the material (or modified versions of
it) with contractual assumptions of liability to the recipient, for
any liability that these contractual assumptions directly impose on
those licensors and authors.

All other non-permissive additional terms are considered "further
restrictions" within the meaning of section 10. If the Program as you
received it, or any part of it, contains a notice stating that it is
governed by this License along with a term that is a further
restriction, you may remove that term. If a license document contains
a further restriction but permits relicensing or conveying under this
License, you may add to a covered work material governed by the terms
of that license document, provided that the further restriction does
not survive such relicensing or conveying.

If you add terms to a covered work in accord with this section, you
must place, in the relevant source files, a statement of the
additional terms that apply to those files, or a notice indicating
where to find the applicable terms.