Initial commit.

This view is limited to 50 files because it contains too many changes.

- README.md +42 -6

- app.py +251 -0

- examples/barsik.jpg +0 -0

- examples/barsik.json +7 -0

- examples/biennale.jpg +0 -0

- examples/biennale.json +7 -0

- examples/billard1.jpg +0 -0

- examples/billard1.json +7 -0

- examples/billard2.jpg +0 -0

- examples/billard2.json +7 -0

- examples/bowie.jpg +0 -0

- examples/bowie.json +7 -0

- examples/branch.jpg +0 -0

- examples/branch.json +7 -0

- examples/cc_fox.jpg +0 -0

- examples/cc_fox.json +7 -0

- examples/cc_landscape.jpg +0 -0

- examples/cc_landscape.json +7 -0

- examples/cc_puffin.jpg +0 -0

- examples/cc_puffin.json +7 -0

- examples/couch.jpg +0 -0

- examples/couch.json +7 -0

- examples/couch_.json +7 -0

- examples/cups.jpg +0 -0

- examples/cups.json +7 -0

- examples/dice.jpg +0 -0

- examples/dice.json +7 -0

- examples/emu.jpg +0 -0

- examples/emu.json +7 -0

- examples/fridge.jpg +0 -0

- examples/fridge.json +7 -0

- examples/givt.jpg +0 -0

- examples/givt.json +7 -0

- examples/greenlake.jpg +0 -0

- examples/greenlake.json +7 -0

- examples/howto.jpg +0 -0

- examples/howto.json +7 -0

- examples/markers.jpg +0 -0

- examples/markers.json +7 -0

- examples/mcair.jpg +0 -0

- examples/mcair.json +7 -0

- examples/mcair_.json +7 -0

- examples/minergie.jpg +0 -0

- examples/minergie.json +7 -0

- examples/morel.jpg +0 -0

- examples/morel.json +7 -0

- examples/motorcyclists.jpg +0 -0

- examples/motorcyclists.json +7 -0

- examples/parking.jpg +0 -0

- examples/parking.json +7 -0

README.md

CHANGED

@@ -1,13 +1,49 @@
 ---
-title:
+title: PaliGemma Demo
-emoji:
+emoji: 🤲
 colorFrom: green
-colorTo:
+colorTo: yellow
 sdk: gradio
-sdk_version: 4.
+sdk_version: 4.22.0
 app_file: app.py
 pinned: false
-license:
+license: apache-2.0
 ---
 
-
+# PaliGemma Demo
+
+See [Blogpost] and [`big_vision README.md`] for details about the model.
+
+
+[Blogpost]: https://huggingface.co/blog/paligemma
+
+[`big_vision README.md`]: https://github.com/google-research/big_vision/blob/main/big_vision/configs/proj/paligemma/README.md
+
+## Development
+
+Local testing (CPU, Python 3.12):
+
+```bash
+python -m venv env
+. env/bin/activate
+pip install -qr requirements-cpu.txt
+python app.py
+```
+
+Environment variables:
+
+- `MOCK_MODEL=yes`: For quick UI testing.
+- `RAM_CACHE_GB=18`: Enables caching of 3 bf16 models in memory: a single bf16
+  model is about 5860 MB. Use with care on Spaces with little RAM. For example,
+  on an `A10G large` Space you can cache five models in RAM, so you would set
+  `RAM_CACHE_GB=30`.
+- `HOST_COLOCATION=4`: If host RAM/disk is shared between 4 processes (e.g. the
+  Hugging Face `A10G large` Spaces).
+
+
+Loading models:
+
+- The set of models loaded is defined in `./models.py`.
+- You must first acknowledge usage conditions to access models.
+- When testing locally, you'll have to run `huggingface-cli login`.
+- When running in a Hugging Face Space, you'll have to set a `HF_TOKEN` secret.
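The `RAM_CACHE_GB` values quoted in the README follow from the per-model size it states. A minimal sketch of that arithmetic, assuming 1 GB = 1000 MB and rounding up to a whole number; the helper name `ram_cache_gb` is hypothetical, and how the app itself interprets the variable is not shown in this diff:

```python
import math

MODEL_SIZE_MB = 5860  # approximate size of one bf16 model, per the README


def ram_cache_gb(num_models: int, model_size_mb: int = MODEL_SIZE_MB) -> int:
    """Smallest whole-GB budget that holds `num_models` models.

    Assumption: 1 GB = 1000 MB. The real accounting lives in code that is
    not part of this diff.
    """
    return math.ceil(num_models * model_size_mb / 1000)


print(ram_cache_gb(3))  # 3 * 5860 MB = 17580 MB, rounded up to 18
print(ram_cache_gb(5))  # 5 * 5860 MB = 29300 MB, rounded up to 30
```

Under these assumptions the results match the two settings mentioned above (`RAM_CACHE_GB=18` for three models, `RAM_CACHE_GB=30` for five).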

app.py

ADDED

@@ -0,0 +1,251 @@
+"""PaliGemma demo gradio app."""
+
+import datetime
+import functools
+import glob
+import json
+import logging
+import os
+import time
+
+import gradio as gr
+import jax
+import PIL.Image
+import gradio_helpers
+import models
+import paligemma_parse
+
+INTRO_TEXT = """🤲 PaliGemma demo\n\n
+| [GitHub](https://github.com/google-research/big_vision/blob/main/big_vision/configs/proj/paligemma/README.md)
+| [HF blog post](https://huggingface.co/blog/paligemma)
+| [Google blog post](https://developers.googleblog.com/en/gemma-family-and-toolkit-expansion-io-2024)
+| [Vertex AI Model Garden](https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/363)
+| [Demo](https://huggingface.co/spaces/google/paligemma)
+|\n\n
+[PaliGemma](https://ai.google.dev/gemma/docs/paligemma) is an open vision-language model by Google,
+inspired by [PaLI-3](https://arxiv.org/abs/2310.09199) and
+built with open components such as the [SigLIP](https://arxiv.org/abs/2303.15343)
+vision model and the [Gemma](https://arxiv.org/abs/2403.08295) language model. PaliGemma is designed as a versatile
+model for transfer to a wide range of vision-language tasks such as image and short video caption, visual question
+answering, text reading, object detection and object segmentation.
+\n\n
+This space includes models fine-tuned on a mix of downstream tasks.
+See the [blog post](https://huggingface.co/blog/paligemma) and
+[README](https://github.com/google-research/big_vision/blob/main/big_vision/configs/proj/paligemma/README.md)
+for detailed information on how to use and fine-tune PaliGemma models.
+\n\n
+**This is an experimental research model.** Make sure to add appropriate guardrails when using the model for applications.
+"""
+
+
+make_image = lambda value, visible: gr.Image(
+    value, label='Image', type='filepath', visible=visible)
+make_annotated_image = functools.partial(gr.AnnotatedImage, label='Image')
+make_highlighted_text = functools.partial(gr.HighlightedText, label='Output')
+
+
+# https://coolors.co/4285f4-db4437-f4b400-0f9d58-e48ef1
+COLORS = ['#4285f4', '#db4437', '#f4b400', '#0f9d58', '#e48ef1']
+
+
+@gradio_helpers.synced
+def compute(image, prompt, model_name, sampler):
+  """Runs model inference."""
+  if image is None:
+    raise gr.Error('Image required')
+
+  logging.info('prompt="%s"', prompt)
+
+  if isinstance(image, str):
+    image = PIL.Image.open(image)
+  if gradio_helpers.should_mock():
+    logging.warning('Mocking response')
+    time.sleep(2.)
+    output = paligemma_parse.EXAMPLE_STRING
+  else:
+    if not model_name:
+      raise gr.Error('Models not loaded yet')
+    output = models.generate(model_name, sampler, image, prompt)
+    logging.info('output="%s"', output)
+
+  width, height = image.size
+  objs = paligemma_parse.extract_objs(output, width, height, unique_labels=True)
+  labels = set(obj.get('name') for obj in objs if obj.get('name'))
+  color_map = {l: COLORS[i % len(COLORS)] for i, l in enumerate(labels)}
+  highlighted_text = [(obj['content'], obj.get('name')) for obj in objs]
+  annotated_image = (
+      image,
+      [
+          (
+              obj['mask'] if obj.get('mask') is not None else obj['xyxy'],
+              obj['name'] or '',
+          )
+          for obj in objs
+          if 'mask' in obj or 'xyxy' in obj
+      ],
+  )
+  has_annotations = bool(annotated_image[1])
+  return (
+      make_highlighted_text(
+          highlighted_text, visible=True, color_map=color_map),
+      make_image(image, visible=not has_annotations),
+      make_annotated_image(
+          annotated_image, visible=has_annotations, width=width, height=height,
+          color_map=color_map),
+  )
+
+
+def warmup(model_name):
+  image = PIL.Image.new('RGB', [1, 1])
+  _ = compute(image, '', model_name, 'greedy')
+
+
+def reset():
+  return (
+      '', make_highlighted_text('', visible=False),
+      make_image(None, visible=True), make_annotated_image(None, visible=False),
+  )
+
+
+def create_app():
+  """Creates demo UI."""
+
+  make_model = lambda choices: gr.Dropdown(
+      value=(choices + [''])[0],
+      choices=choices,
+      label='Model',
+      visible=bool(choices),
+  )
+  make_prompt = lambda value, visible=True: gr.Textbox(
+      value, label='Prompt', visible=visible)
+
+  with gr.Blocks() as demo:
+
+    ##### Main UI structure.
+
+    gr.Markdown(INTRO_TEXT)
+    with gr.Row():
+      image = make_image(None, visible=True)  # input
+      annotated_image = make_annotated_image(None, visible=False)  # output
+      with gr.Column():
+        with gr.Row():
+          prompt = make_prompt('', visible=True)
+        model_info = gr.Markdown(label='Model Info')
+        with gr.Row():
+          model = make_model([])
+          samplers = [
+              'greedy', 'nucleus(0.1)', 'nucleus(0.3)', 'temperature(0.5)']
+          sampler = gr.Dropdown(
+              value=samplers[0], choices=samplers, label='Decoding'
+          )
+        with gr.Row():
+          run = gr.Button('Run', variant='primary')
+          clear = gr.Button('Clear')
+    highlighted_text = make_highlighted_text('', visible=False)
+
+    ##### UI logic.
+
+    def update_ui(model, prompt):
+      prompt = make_prompt(prompt, visible=True)
+      model_info = f'Model `{model}` – {models.MODELS_INFO.get(model, "No info.")}'
+      return [prompt, model_info]
+
+    gr.on(
+        [model.change],
+        update_ui,
+        [model, prompt],
+        [prompt, model_info],
+    )
+
+    gr.on(
+        [run.click, prompt.submit],
+        compute,
+        [image, prompt, model, sampler],
+        [highlighted_text, image, annotated_image],
+    )
+    clear.click(
+        reset, None, [prompt, highlighted_text, image, annotated_image]
+    )
+
+    ##### Examples.
+
+    gr.set_static_paths(['examples/'])
+    all_examples = [json.load(open(p)) for p in glob.glob('examples/*.json')]
+    logging.info('loaded %d examples', len(all_examples))
+    example_image = gr.Image(
+        label='Image', visible=False)  # proxy, never visible
+    example_model = gr.Text(
+        label='Model', visible=False)  # proxy, never visible
+    example_prompt = gr.Text(
+        label='Prompt', visible=False)  # proxy, never visible
+    example_license = gr.Markdown(
+        label='Image License', visible=False)  # placeholder, never visible
+    gr.Examples(
+        examples=[
+            [
+                f'examples/{ex["name"]}.jpg',
+                ex['prompt'],
+                ex['model'],
+                ex['license'],
+            ]
+            for ex in all_examples
+            if ex['model'] in models.MODELS
+        ],
+        inputs=[example_image, example_prompt, example_model, example_license],
+    )
+
+    ##### Examples UI logic.
+
+    example_image.change(
+        lambda image_path: (
+            make_image(image_path, visible=True),
+            make_annotated_image(None, visible=False),
+            make_highlighted_text('', visible=False),
+        ),
+        example_image,
+        [image, annotated_image, highlighted_text],
+    )
+    def example_model_changed(model):
+      if model not in gradio_helpers.get_paths():
+        raise gr.Error(f'Model "{model}" not loaded!')
+      return model
+    example_model.change(example_model_changed, example_model, model)
+    example_prompt.change(make_prompt, example_prompt, prompt)
+
+    ##### Status.
+
+    status = gr.Markdown(f'Startup: {datetime.datetime.now()}')
+    gpu_kind = gr.Markdown('GPU=?')
+    demo.load(
+        lambda: [
+            gradio_helpers.get_status(),
+            make_model(list(gradio_helpers.get_paths())),
+        ],
+        None,
+        [status, model],
+    )
+    def get_gpu_kind():
+      device = jax.devices()[0]
+      if not gradio_helpers.should_mock() and device.platform != 'gpu':
+        raise gr.Error('GPU not visible to JAX!')
+      return f'GPU={device.device_kind}'
+    demo.load(get_gpu_kind, None, gpu_kind)
+
+  return demo
+
+
+if __name__ == '__main__':
+
+  logging.basicConfig(level=logging.INFO,
+                      format='%(asctime)s - %(levelname)s - %(message)s')
+
+  logging.info('JAX devices: %s', jax.devices())
+
+  for k, v in os.environ.items():
+    logging.info('environ["%s"] = %r', k, v)
+
+  gradio_helpers.set_warmup_function(warmup)
+  for name, (repo, filename, revision) in models.MODELS.items():
+    gradio_helpers.register_download(name, repo, filename, revision)
+
+  create_app().queue().launch()
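The post-processing at the end of `compute()` can be tried without Gradio or a model. The following is a rough sketch on plain dicts, assuming the parser returns objects with the keys the diff reads (`content`, `name`, and optionally `mask` or `xyxy`); the function name `build_outputs` and the sample `objs` are hypothetical. One deliberate difference from the diff: labels are sorted here so the label-to-color assignment is reproducible, whereas the app enumerates a `set`.

```python
COLORS = ['#4285f4', '#db4437', '#f4b400', '#0f9d58', '#e48ef1']


def build_outputs(objs):
    """Builds the color map, highlighted text and annotations from parsed objects."""
    # sorted() gives a stable label order; the app enumerates a set instead.
    labels = sorted({obj.get('name') for obj in objs if obj.get('name')})
    # Colors wrap around when there are more labels than palette entries.
    color_map = {l: COLORS[i % len(COLORS)] for i, l in enumerate(labels)}
    highlighted_text = [(obj['content'], obj.get('name')) for obj in objs]
    annotations = [
        # Prefer a segmentation mask, fall back to the bounding box.
        (obj['mask'] if obj.get('mask') is not None else obj['xyxy'],
         obj.get('name') or '')
        for obj in objs
        if 'mask' in obj or 'xyxy' in obj
    ]
    return color_map, highlighted_text, annotations


# Hypothetical parser output: one detected box and one plain-text chunk.
objs = [
    {'content': 'cat', 'name': 'cat', 'xyxy': (10, 20, 110, 220)},
    {'content': ' ;', 'name': None},
]
color_map, highlighted_text, annotations = build_outputs(objs)
print(color_map)
print(highlighted_text)
print(annotations)
```

Objects without a mask or box only contribute to the highlighted text, which is why the app shows the plain image when the annotation list is empty.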

examples/barsik.jpg

ADDED

examples/barsik.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "barsik",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "segment cat",
+  "license": "CC0 by [maximneumann@](https://github.com/maximneumann)"
+}

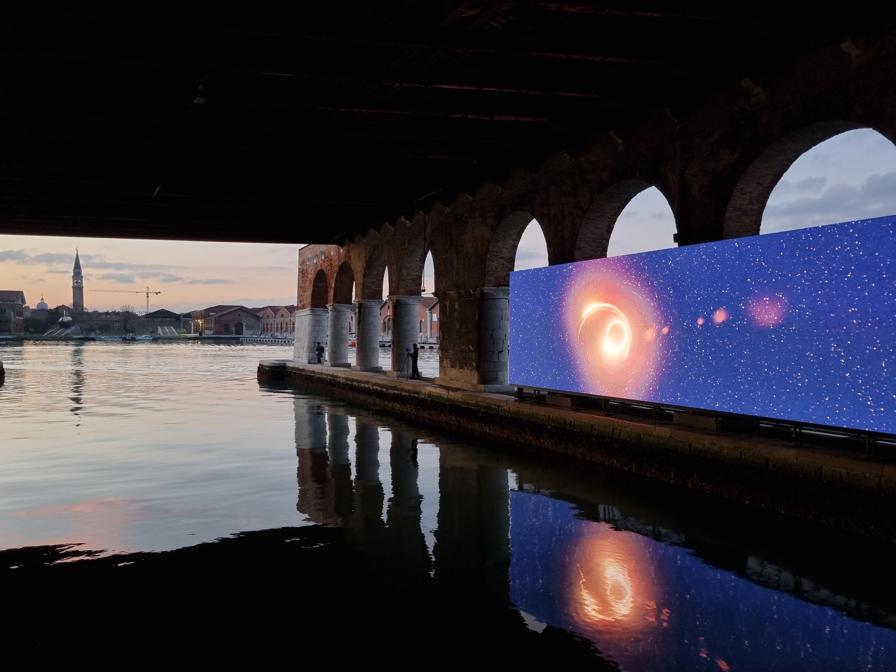

examples/biennale.jpg

ADDED

examples/biennale.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "biennale",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "In which city is this?",
+  "license": "CC0 by [andsteing@](https://huggingface.co/andsteing)"
+}

examples/billard1.jpg

ADDED

examples/billard1.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "billard1",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "How many red balls are there?",
+  "license": "CC0 by [mbosnjak@](https://github.com/mbosnjak)"
+}

examples/billard2.jpg

ADDED

examples/billard2.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "billard2",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "How many balls are there?",
+  "license": "CC0 by [mbosnjak@](https://github.com/mbosnjak)"
+}

examples/bowie.jpg

ADDED

examples/bowie.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "bowie",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Who is this?",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/branch.jpg

ADDED

examples/branch.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "branch",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "What caused this?",
+  "license": "CC0 by [andsteing@](https://huggingface.co/andsteing)"
+}

examples/cc_fox.jpg

ADDED

examples/cc_fox.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "cc_fox",
+  "comment": "",
+  "model": "paligemma-3b-mix-448",
+  "prompt": "Which breed is this fox?",
+  "license": "CC0 by [XiaohuaZhai@](https://sites.google.com/view/xzhai)"
+}

examples/cc_landscape.jpg

ADDED

examples/cc_landscape.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "cc_landscape",
+  "comment": "",
+  "model": "paligemma-3b-mix-448",
+  "prompt": "What does the image show?",
+  "license": "CC0 by [XiaohuaZhai@](https://sites.google.com/view/xzhai)"
+}

examples/cc_puffin.jpg

ADDED

examples/cc_puffin.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "cc_puffin",
+  "comment": "",
+  "model": "paligemma-3b-mix-448",
+  "prompt": "detect puffin in the back ; puffin in front",
+  "license": "CC0 by [XiaohuaZhai@](https://sites.google.com/view/xzhai)"
+}

examples/couch.jpg

ADDED

examples/couch.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "couch",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "How many yellow cushions are on the couch?",
+  "license": "CC0"
+}

examples/couch_.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "couch",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "How many painting do you see in the image?",
+  "license": "CC0"
+}

examples/cups.jpg

ADDED

examples/cups.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "cups",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "how many cups?",
+  "license": "CC0 by [mbosnjak@](https://github.com/mbosnjak)"
+}

examples/dice.jpg

ADDED

examples/dice.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "dice",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "segment dice ; dice",
+  "license": "CC0 by [andresusanopinto@](https://github.com/andresusanopinto)"
+}

examples/emu.jpg

ADDED

examples/emu.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "emu",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "What animal is this?",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/fridge.jpg

ADDED

examples/fridge.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "fridge",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Describe the image.",
+  "license": "CC0 by [andresusanopinto@](https://github.com/andresusanopinto)"
+}

examples/givt.jpg

ADDED

examples/givt.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "givt",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "What does the image show?",
+  "license": "CC-BY [GIVT paper](https://arxiv.org/abs/2312.02116)"
+}

examples/greenlake.jpg

ADDED

examples/greenlake.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "greenlake",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Describe the image.",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/howto.jpg

ADDED

examples/howto.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "howto",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "What does this image show?",
+  "license": "CC-BY [How to train your ViT?](https://arxiv.org/abs/2106.10270)"
+}

examples/markers.jpg

ADDED

examples/markers.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "markers",
+  "comment": "answer en How many cups are there?",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "How many cups are there?",
+  "license": "CC0"
+}

examples/mcair.jpg

ADDED

examples/mcair.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "mcair",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Can you board this airplane?",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/mcair_.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "mcair",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Is this a restaurant?",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/minergie.jpg

ADDED

examples/minergie.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "minergie",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "ocr",
+  "license": "CC0 by [andsteing@](https://huggingface.co/andsteing)"
+}

examples/morel.jpg

ADDED

examples/morel.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "morel",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "detect morel",
+  "license": "CC0 by [andsteing@](https://huggingface.co/andsteing)"
+}

examples/motorcyclists.jpg

ADDED

examples/motorcyclists.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "motorcyclists",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "What does the image show?",
+  "license": "CC0 by [akolesnikoff@](https://github.com/akolesnikoff)"
+}

examples/parking.jpg

ADDED

examples/parking.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "parking",
+  "comment": "",
+  "model": "paligemma-3b-mix-224",
+  "prompt": "Describe the image.",
+  "license": "CC0 by [xiaohuazhai@](https://huggingface.co/xiaohuazhai)"
+}
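The example files above all share the same five keys, and `app.py` turns them into rows for `gr.Examples`. A minimal sketch of that loading step, written defensively: the function name `load_example_rows`, the `REQUIRED_KEYS` tuple, and the skip-on-missing-key behavior are assumptions added for illustration (the app itself indexes the keys directly and does not close the files explicitly).

```python
import glob
import json
import os
import tempfile

REQUIRED_KEYS = ('name', 'model', 'prompt', 'license')


def load_example_rows(examples_dir, available_models):
    """Returns [image_path, prompt, model, license] rows for usable examples."""
    rows = []
    for path in sorted(glob.glob(os.path.join(examples_dir, '*.json'))):
        with open(path, encoding='utf-8') as f:
            ex = json.load(f)
        if any(key not in ex for key in REQUIRED_KEYS):
            continue  # skip malformed files instead of raising KeyError
        if ex['model'] not in available_models:
            continue  # mirrors the `ex['model'] in models.MODELS` filter
        # The image comes from the "name" field, not from the JSON filename.
        image_path = os.path.join(examples_dir, f"{ex['name']}.jpg")
        rows.append([image_path, ex['prompt'], ex['model'], ex['license']])
    return rows


# Demo on a temporary directory with two made-up example files.
with tempfile.TemporaryDirectory() as tmp:
    for fname, model in [('a.json', 'paligemma-3b-mix-224'),
                         ('b.json', 'other-model')]:
        with open(os.path.join(tmp, fname), 'w', encoding='utf-8') as f:
            json.dump({'name': 'a', 'comment': '', 'model': model,
                       'prompt': 'Describe the image.', 'license': 'CC0'}, f)
    rows = load_example_rows(tmp, {'paligemma-3b-mix-224'})
    demo_rows = [[os.path.basename(r[0])] + r[1:] for r in rows]

print(demo_rows)
```

Because the image path is derived from `name`, files such as `couch_.json` and `mcair_.json` can attach a second prompt to an existing image (`couch.jpg`, `mcair.jpg`) without adding another picture.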