Srini

- __pycache__/helper.cpython-311.pyc +0 -0

- __pycache__/settings.cpython-311.pyc +0 -0

- __pycache__/sort.cpython-311.pyc +0 -0

- __pycache__/tracker.cpython-311.pyc +0 -0

- app.py +110 -0

- assets/Objdetectionyoutubegif-1.m4v +0 -0

- assets/pic1.png +0 -0

- assets/pic3.png +0 -0

- assets/segmentation.png +0 -0

- helper.py +219 -0

- images/office_4.jpg +0 -0

- images/office_4_detected.jpg +0 -0

- settings.py +52 -0

- sort.py +372 -0

- tracker.py +116 -0

- weights/yolov8n-seg.pt +3 -0

- weights/yolov8n.pt +3 -0

__pycache__/helper.cpython-311.pyc

ADDED

Binary file (9.27 kB).

__pycache__/settings.cpython-311.pyc

ADDED

Binary file (1.59 kB).

__pycache__/sort.cpython-311.pyc

ADDED

Binary file (22.4 kB).

__pycache__/tracker.cpython-311.pyc

ADDED

Binary file (5.73 kB).

app.py

ADDED

@@ -0,0 +1,110 @@


| 1 |

+

# Python In-built packages

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import PIL

|

| 4 |

+

|

| 5 |

+

# External packages

|

| 6 |

+

import streamlit as st

|

| 7 |

+

|

| 8 |

+

# Local Modules

|

| 9 |

+

import settings

|

| 10 |

+

import helper

|

| 11 |

+

|

| 12 |

+

# Setting page layout

|

| 13 |

+

st.set_page_config(

|

| 14 |

+

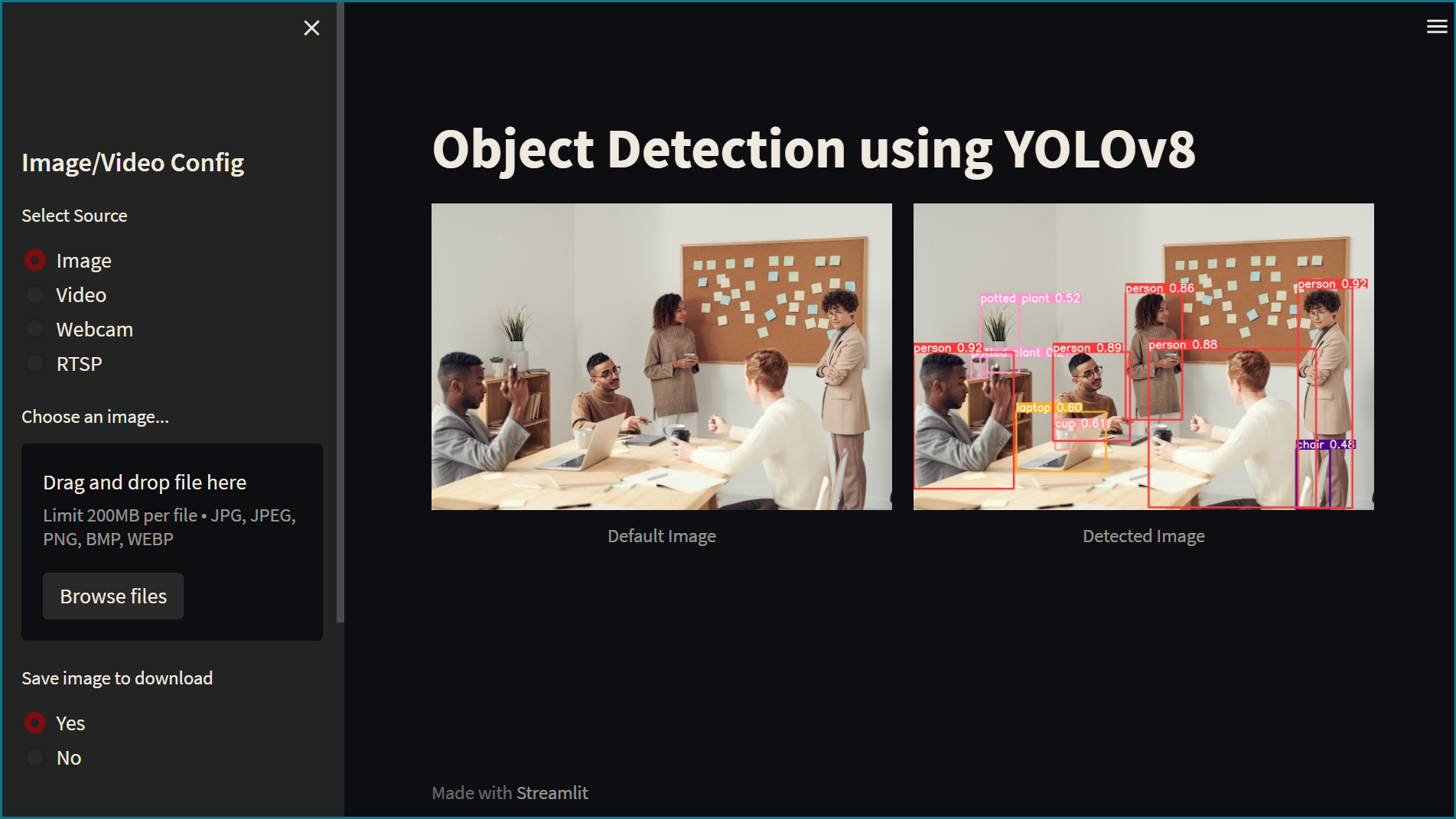

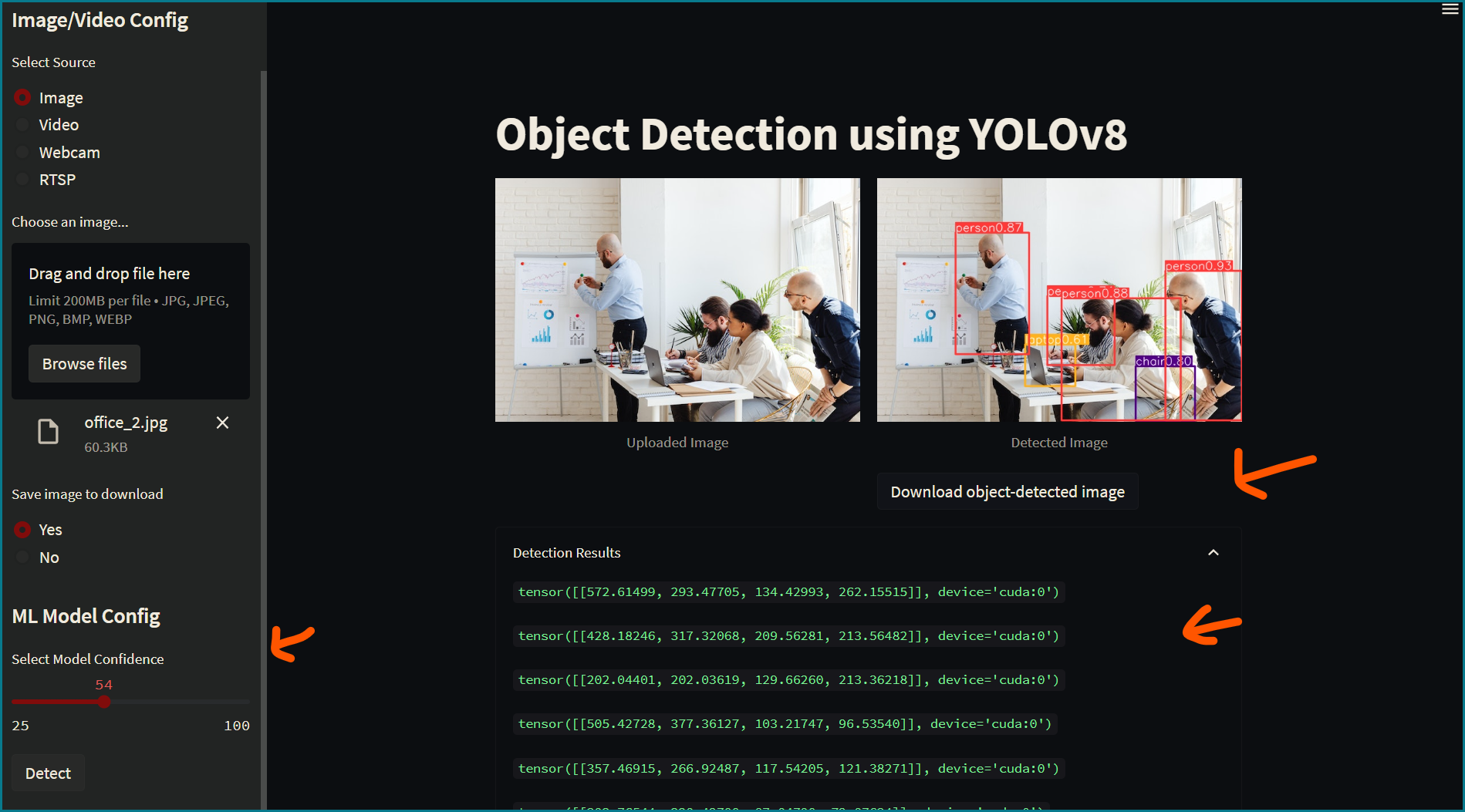

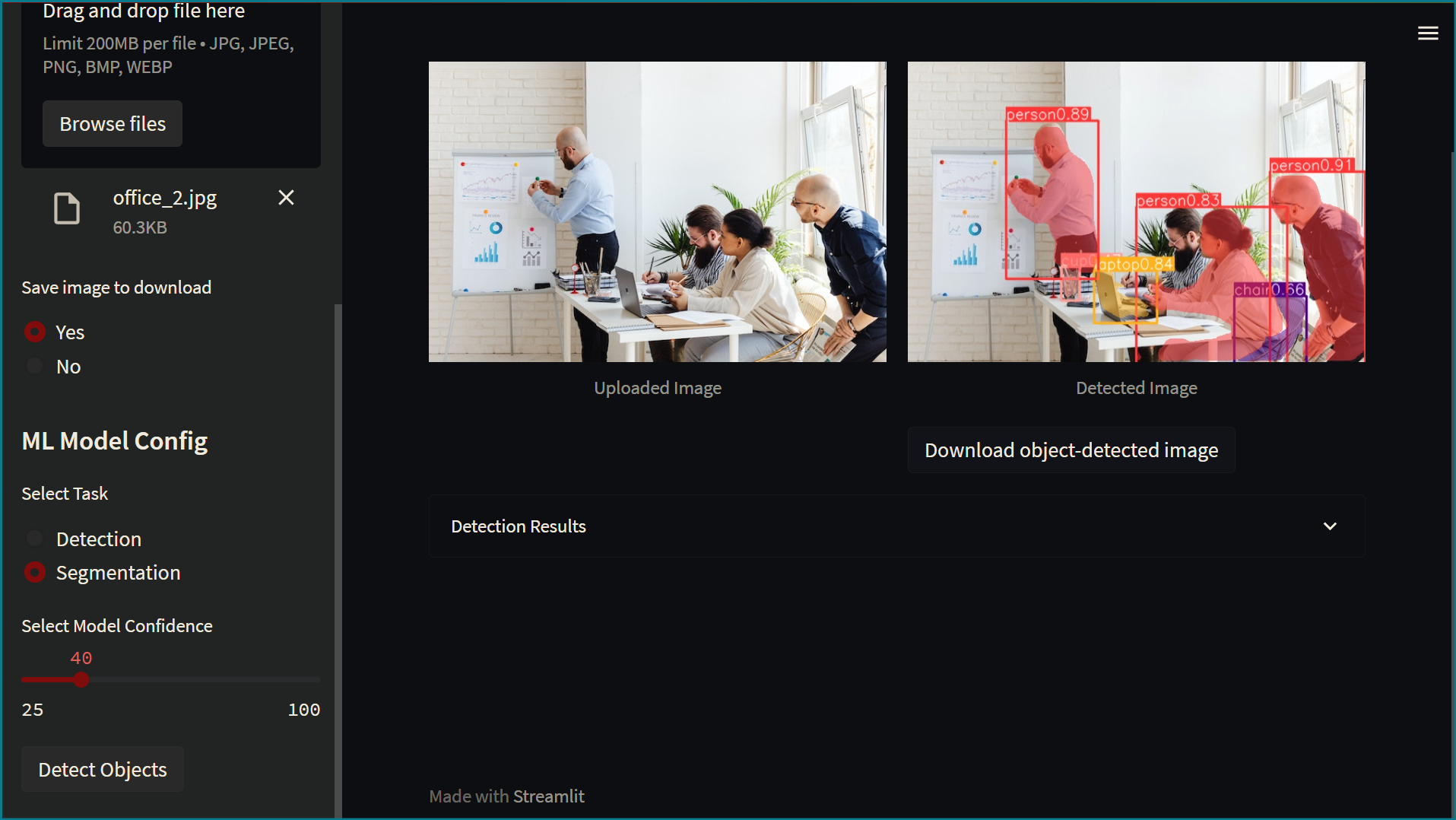

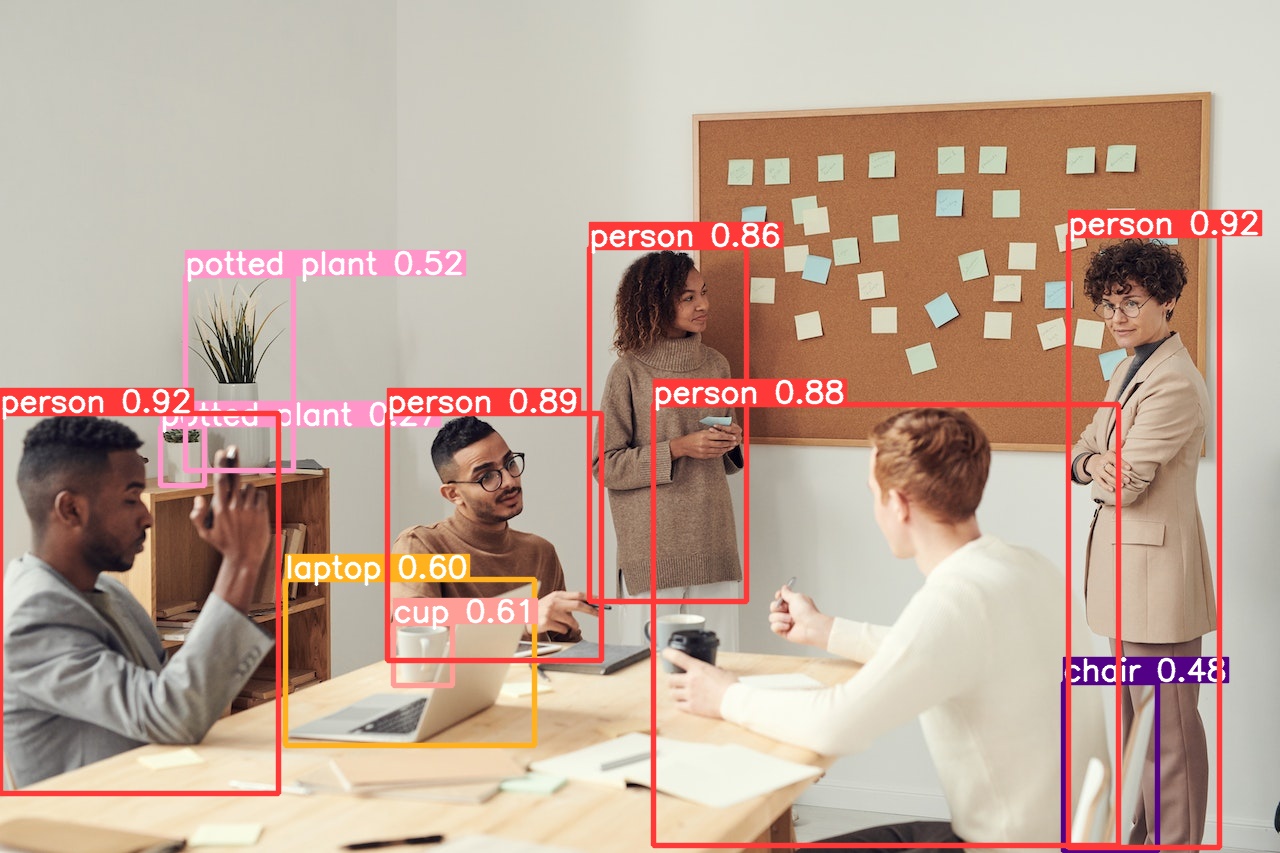

page_title="Object Detection using YOLOv8",

|

| 15 |

+

page_icon="🤖",

|

| 16 |

+

layout="wide",

|

| 17 |

+

initial_sidebar_state="expanded"

|

| 18 |

+

)

|

| 19 |

+

|

| 20 |

+

# Main page heading

|

| 21 |

+

st.title("Object Detection using YOLOv8")

|

| 22 |

+

|

| 23 |

+

# Sidebar

|

| 24 |

+

st.sidebar.header("ML Model Config")

|

| 25 |

+

|

| 26 |

+

# Model Options

|

| 27 |

+

model_type = st.sidebar.radio(

|

| 28 |

+

"Select Task", ['Detection', 'Segmentation'])

|

| 29 |

+

|

| 30 |

+

confidence = float(st.sidebar.slider(

|

| 31 |

+

"Select Model Confidence", 25, 100, 40)) / 100

|

| 32 |

+

|

| 33 |

+

# Selecting Detection Or Segmentation

|

| 34 |

+

if model_type == 'Detection':

|

| 35 |

+

model_path = Path(settings.DETECTION_MODEL)

|

| 36 |

+

elif model_type == 'Segmentation':

|

| 37 |

+

model_path = Path(settings.SEGMENTATION_MODEL)

|

| 38 |

+

|

| 39 |

+

# Load Pre-trained ML Model

|

| 40 |

+

try:

|

| 41 |

+

model = helper.load_model(model_path)

|

| 42 |

+

except Exception as ex:

|

| 43 |

+

st.error(f"Unable to load model. Check the specified path: {model_path}")

|

| 44 |

+

st.error(ex)

|

| 45 |

+

|

| 46 |

+

st.sidebar.header("Image/Video Config")

|

| 47 |

+

source_radio = st.sidebar.radio(

|

| 48 |

+

"Select Source", settings.SOURCES_LIST)

|

| 49 |

+

|

| 50 |

+

source_img = None

|

| 51 |

+

# If image is selected

|

| 52 |

+

if source_radio == settings.IMAGE:

|

| 53 |

+

source_img = st.sidebar.file_uploader(

|

| 54 |

+

"Choose an image...", type=("jpg", "jpeg", "png", 'bmp', 'webp'))

|

| 55 |

+

|

| 56 |

+

col1, col2 = st.columns(2)

|

| 57 |

+

|

| 58 |

+

with col1:

|

| 59 |

+

try:

|

| 60 |

+

if source_img is None:

|

| 61 |

+

default_image_path = str(settings.DEFAULT_IMAGE)

|

| 62 |

+

default_image = PIL.Image.open(default_image_path)

|

| 63 |

+

st.image(default_image_path, caption="Default Image",

|

| 64 |

+

use_column_width=True)

|

| 65 |

+

else:

|

| 66 |

+

uploaded_image = PIL.Image.open(source_img)

|

| 67 |

+

st.image(source_img, caption="Uploaded Image",

|

| 68 |

+

use_column_width=True)

|

| 69 |

+

except Exception as ex:

|

| 70 |

+

st.error("Error occurred while opening the image.")

|

| 71 |

+

st.error(ex)

|

| 72 |

+

|

| 73 |

+

with col2:

|

| 74 |

+

if source_img is None:

|

| 75 |

+

default_detected_image_path = str(settings.DEFAULT_DETECT_IMAGE)

|

| 76 |

+

default_detected_image = PIL.Image.open(

|

| 77 |

+

default_detected_image_path)

|

| 78 |

+

st.image(default_detected_image_path, caption='Detected Image',

|

| 79 |

+

use_column_width=True)

|

| 80 |

+

else:

|

| 81 |

+

if st.sidebar.button('Detect Objects'):

|

| 82 |

+

res = model.predict(uploaded_image,

|

| 83 |

+

conf=confidence

|

| 84 |

+

)

|

| 85 |

+

boxes = res[0].boxes

|

| 86 |

+

res_plotted = res[0].plot()[:, :, ::-1]

|

| 87 |

+

st.image(res_plotted, caption='Detected Image',

|

| 88 |

+

use_column_width=True)

|

| 89 |

+

try:

|

| 90 |

+

with st.expander("Detection Results"):

|

| 91 |

+

for box in boxes:

|

| 92 |

+

st.write(box.data)

|

| 93 |

+

except Exception as ex:

|

| 94 |

+

# st.write(ex)

|

| 95 |

+

st.write("No image is uploaded yet!")

|

| 96 |

+

|

| 97 |

+

elif source_radio == settings.VIDEO:

|

| 98 |

+

helper.play_stored_video(confidence, model)

|

| 99 |

+

|

| 100 |

+

elif source_radio == settings.WEBCAM:

|

| 101 |

+

helper.play_webcam(confidence, model)

|

| 102 |

+

|

| 103 |

+

elif source_radio == settings.RTSP:

|

| 104 |

+

helper.play_rtsp_stream(confidence, model)

|

| 105 |

+

|

| 106 |

+

elif source_radio == settings.YOUTUBE:

|

| 107 |

+

helper.play_youtube_video(confidence, model)

|

| 108 |

+

|

| 109 |

+

else:

|

| 110 |

+

st.error("Please select a valid source type!")

|
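In the image branch of app.py, `res[0].plot()` returns the annotated frame in OpenCV's BGR channel order. The `[:, :, ::-1]` slice reverses the last axis so that `st.image`, which assumes RGB by default, shows the correct colors. The video helpers handle this differently by passing `channels="BGR"` instead. Below is a minimal NumPy sketch of that flip on a made-up 1×2 frame. The `bgr_to_rgb` name is illustrative only.

```python
import numpy as np


def bgr_to_rgb(frame: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 image (BGR <-> RGB)."""
    return frame[:, :, ::-1]  # a view with a negative stride; no pixel data is copied


# Hypothetical 1x2 frame stored as BGR: a pure-blue pixel and a pure-red pixel.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = bgr_to_rgb(bgr)
print(rgb[0, 0].tolist())  # [0, 0, 255] -> blue in RGB order
print(rgb[0, 1].tolist())  # [255, 0, 0] -> red in RGB order
```

Because the slice is a view, the flip costs nothing per frame. However, code that needs a contiguous array (some OpenCV calls, for example) may need `np.ascontiguousarray` on the result.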

assets/Objdetectionyoutubegif-1.m4v

ADDED

Binary file (528 kB).

assets/pic1.png

ADDED

assets/pic3.png

ADDED

assets/segmentation.png

ADDED

helper.py

ADDED

@@ -0,0 +1,219 @@


```python
from ultralytics import YOLO
import streamlit as st
import cv2
import pafy

import settings
import tracker


def load_model(model_path):
    """
    Loads a YOLO object detection model from the specified model_path.

    Parameters:
        model_path (str or Path): The path to the YOLO model file.

    Returns:
        A YOLO object detection model.
    """
    model = YOLO(model_path)
    return model


def display_tracker_option():
    display_tracker = st.radio("Display Tracker", ('Yes', 'No'))
    is_display_tracker = display_tracker == 'Yes'
    return is_display_tracker


def _display_detected_frames(conf, model, st_frame, image, is_display_tracking=None):
    """
    Display the detected objects on a video frame using the YOLOv8 model.

    Args:
    - conf (float): Confidence threshold for object detection.
    - model (YOLO): A YOLOv8 object detection model.
    - st_frame (Streamlit object): A Streamlit object to display the detected video.
    - image (numpy array): A numpy array representing the video frame.
    - is_display_tracking (bool): A flag indicating whether to display object tracking (default=None).

    Returns:
    None
    """

    # Resize the frame to 720x405 (16:9); cv2.resize takes dsize as (width, height)
    image = cv2.resize(image, (720, int(720*(9/16))))

    # Predict the objects in the image using the YOLOv8 model
    res = model.predict(image, conf=conf)
    result_tensor = res[0].boxes

    # Display object tracking, if specified
    if is_display_tracking:
        tracker._display_detected_tracks(result_tensor.data, image)

    # Plot the detected objects on the video frame
    res_plotted = res[0].plot()
    st_frame.image(res_plotted,
                   caption='Detected Video',
                   channels="BGR",
                   use_column_width=True
                   )
```
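`_display_detected_frames` resizes every frame with `cv2.resize(image, (720, int(720*(9/16))))`. OpenCV's `dsize` argument is ordered (width, height), so the resulting NumPy array has shape (405, 720, 3). A source whose aspect ratio is not 16:9 (a 640×480 webcam, for example) is stretched.

The sketch below does only the size arithmetic. `fixed_16_9_size` mirrors the expression in helper.py. `aspect_preserving_size` is a hypothetical alternative and is not part of this repository.

```python
from typing import Tuple


def fixed_16_9_size(width: int = 720) -> Tuple[int, int]:
    """dsize used in helper.py: (width, height) for cv2.resize, always 16:9."""
    return (width, int(width * (9 / 16)))


def aspect_preserving_size(frame_w: int, frame_h: int, width: int = 720) -> Tuple[int, int]:
    """Hypothetical alternative: scale to `width` but keep the source aspect ratio."""
    return (width, round(frame_h * width / frame_w))


print(fixed_16_9_size())                 # (720, 405)
print(aspect_preserving_size(640, 480))  # (720, 540) for a 4:3 webcam frame
```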

```python
def play_youtube_video(conf, model):
    """
    Plays a YouTube video stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence threshold for the YOLOv8 model.
        model: A YOLOv8 model instance (ultralytics `YOLO`).

    Returns:
        None

    Raises:
        None
    """
    source_youtube = st.sidebar.text_input("YouTube Video url")

    is_display_tracker = display_tracker_option()

    if st.sidebar.button('Detect Objects'):
        try:
            video = pafy.new(source_youtube)
            best = video.getbest(preftype="mp4")
            vid_cap = cv2.VideoCapture(best.url)
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))


def play_rtsp_stream(conf, model):
    """
    Plays an RTSP stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence threshold for the YOLOv8 model.
        model: A YOLOv8 model instance (ultralytics `YOLO`).

    Returns:
        None

    Raises:
        None
    """
    source_rtsp = st.sidebar.text_input("rtsp stream url")
    is_display_tracker = display_tracker_option()
    if st.sidebar.button('Detect Objects'):
        try:
            vid_cap = cv2.VideoCapture(source_rtsp)
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading RTSP stream: " + str(e))


def play_webcam(conf, model):
    """
    Plays a webcam stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence threshold for the YOLOv8 model.
        model: A YOLOv8 model instance (ultralytics `YOLO`).

    Returns:
        None

    Raises:
        None
    """
    source_webcam = settings.WEBCAM_PATH
    is_display_tracker = display_tracker_option()
    if st.sidebar.button('Detect Objects'):
        try:
            vid_cap = cv2.VideoCapture(source_webcam)
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))


def play_stored_video(conf, model):
    """
    Plays a stored video file. Tracks and detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence threshold for the YOLOv8 model.
        model: A YOLOv8 model instance (ultralytics `YOLO`).

    Returns:
        None

    Raises:
        None
    """
    source_vid = st.sidebar.selectbox(
        "Choose a video...", settings.VIDEOS_DICT.keys())

    is_display_tracker = display_tracker_option()

    with open(settings.VIDEOS_DICT.get(source_vid), 'rb') as video_file:
        video_bytes = video_file.read()
    if video_bytes:
        st.video(video_bytes)

    if st.sidebar.button('Detect Video Objects'):
        try:
            vid_cap = cv2.VideoCapture(
                str(settings.VIDEOS_DICT.get(source_vid)))
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))
```
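All four `play_*` helpers share one loop: read frames until `read()` fails, then call `release()` in the `else` branch. If `_display_detected_frames` raises, control jumps to the `except` handler, and the capture is never released.

The sketch below shows one way to guarantee release with `try/finally`. `FakeCapture` is a hypothetical stand-in that only mimics the `isOpened`/`read`/`release` methods the helpers use, so the example runs without OpenCV or a camera. In helper.py, `vid_cap` would be a real `cv2.VideoCapture`, and `on_frame` would wrap the `_display_detected_frames(...)` call.

```python
from typing import Callable, Iterable, List


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture: yields preset frames, then fails."""

    def __init__(self, frames: Iterable):
        self._frames = list(frames)
        self.released = False

    def isOpened(self) -> bool:
        return not self.released

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None

    def release(self) -> None:
        self.released = True


def run_frame_loop(vid_cap, on_frame: Callable) -> int:
    """Feed frames to on_frame until read() fails; always release the capture."""
    processed = 0
    try:
        while vid_cap.isOpened():
            success, image = vid_cap.read()
            if not success:
                break
            on_frame(image)
            processed += 1
    finally:
        vid_cap.release()  # runs on normal exit and also if on_frame raises
    return processed


seen: List[str] = []
cap = FakeCapture(["frame-1", "frame-2", "frame-3"])
print(run_frame_loop(cap, seen.append), cap.released)  # 3 True
```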

images/office_4.jpg

ADDED

images/office_4_detected.jpg

ADDED

settings.py

ADDED

@@ -0,0 +1,52 @@


```python
from pathlib import Path
import sys

# Get the absolute path of the current file
file_path = Path(__file__).resolve()

# Get the parent directory of the current file
root_path = file_path.parent

# Add the root path to the sys.path list if it is not already there
# (sys.path holds strings, so compare the string form of the path)
if str(root_path) not in sys.path:
    sys.path.append(str(root_path))

# Get the relative path of the root directory with respect to the current working directory
# (raises ValueError if the working directory is not this directory or one of its parents)
ROOT = root_path.relative_to(Path.cwd())

# Sources
IMAGE = 'Image'
VIDEO = 'Video'
WEBCAM = 'Webcam'
RTSP = 'RTSP'
YOUTUBE = 'YouTube'

SOURCES_LIST = [IMAGE, VIDEO, WEBCAM, RTSP, YOUTUBE]

# Images config
IMAGES_DIR = ROOT / 'images'
DEFAULT_IMAGE = IMAGES_DIR / 'office_4.jpg'
DEFAULT_DETECT_IMAGE = IMAGES_DIR / 'office_4_detected.jpg'

# Videos config
VIDEO_DIR = ROOT / 'videos'
VIDEO_1_PATH = VIDEO_DIR / 'video_1.mp4'
VIDEO_2_PATH = VIDEO_DIR / 'video_2.mp4'
VIDEO_3_PATH = VIDEO_DIR / 'video_3.mp4'
VIDEO_4_PATH = VIDEO_DIR / 'video_4.mp4'
VIDEO_5_PATH = VIDEO_DIR / 'video_5.mp4'
VIDEOS_DICT = {
    'video_1': VIDEO_1_PATH,
    'video_2': VIDEO_2_PATH,
    'video_3': VIDEO_3_PATH,
    'video_4': VIDEO_4_PATH,
    'video_5': VIDEO_5_PATH,
}

# ML Model config
MODEL_DIR = ROOT / 'weights'
DETECTION_MODEL = MODEL_DIR / 'yolov8n.pt'
SEGMENTATION_MODEL = MODEL_DIR / 'yolov8n-seg.pt'

# Webcam
WEBCAM_PATH = 0
```
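Two path details in settings.py are easy to trip over:

- `sys.path` stores strings. A `Path` object never compares equal to a `str`, so testing a `Path` for membership always reports "not present".
- `Path.relative_to` raises `ValueError` when the path is not inside the base directory. Here that happens if Streamlit is started from an unrelated working directory.

The sketch below demonstrates both. It uses a local list in place of `sys.path`, and `relative_or_absolute` is a hypothetical fallback helper, not something the repository defines.

```python
from pathlib import Path, PurePosixPath

root_path = Path.cwd().resolve()
path_list = [str(root_path)]  # stand-in for sys.path, which holds strings

print(root_path in path_list)       # False: a Path never equals a str
print(str(root_path) in path_list)  # True: compare like with like


def relative_or_absolute(path: PurePosixPath, base: PurePosixPath) -> PurePosixPath:
    """Hypothetical helper: the path relative to base if possible, else unchanged."""
    try:
        return path.relative_to(base)
    except ValueError:  # path is not inside base
        return path


print(relative_or_absolute(PurePosixPath("/app/yolo"), PurePosixPath("/app")))   # yolo
print(relative_or_absolute(PurePosixPath("/app/yolo"), PurePosixPath("/home")))  # /app/yolo
```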

sort.py

ADDED

@@ -0,0 +1,372 @@


```python
from __future__ import print_function
from filterpy.kalman import KalmanFilter
import argparse
import time
import glob
from skimage import io
import matplotlib.patches as patches
import matplotlib.pyplot as plt

import os
import numpy as np
import matplotlib
matplotlib.use('TkAgg')


np.random.seed(0)


def linear_assignment(cost_matrix):
    try:
        import lap  # linear assignment problem solver
        _, x, y = lap.lapjv(cost_matrix, extend_cost=True)
        return np.array([[y[i], i] for i in x if i >= 0])
    except ImportError:
        from scipy.optimize import linear_sum_assignment
        x, y = linear_sum_assignment(cost_matrix)
        return np.array(list(zip(x, y)))
```
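`linear_assignment` delegates to `lap.lapjv` or, as a fallback, SciPy's `linear_sum_assignment`. `associate_detections_to_trackers` calls it with `-iou_matrix`, so minimizing total cost is the same as maximizing total IoU.

To make the solved problem concrete, the sketch below swaps in an exhaustive search. This is not how lap or SciPy work internally, and it is only feasible for tiny matrices. It returns (row, column) pairs in the same layout as the SciPy branch. The `brute_force_assignment` name is illustrative.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def brute_force_assignment(cost: Sequence[Sequence[float]]) -> List[Tuple[int, int]]:
    """Exhaustively find the minimum-cost one-to-one row -> column assignment.

    Assumes a non-empty matrix with rows <= columns; the search is factorial in size.
    """
    n_rows, n_cols = len(cost), len(cost[0])
    best_pairs, best_total = [], float("inf")
    for cols in permutations(range(n_cols), n_rows):
        total = sum(cost[r][c] for r, c in enumerate(cols))
        if total < best_total:
            best_total, best_pairs = total, list(enumerate(cols))
    return best_pairs


# Made-up IoU of 2 detections (rows) vs 2 trackers (columns).
iou = [[0.9, 0.8],
       [0.85, 0.1]]
neg_iou = [[-v for v in row] for row in iou]  # negate so "min cost" means "max IoU"
print(brute_force_assignment(neg_iou))  # [(0, 1), (1, 0)]
```

A greedy "highest IoU first" pass would take (0, 0) at 0.9 and leave detection 1 with 0.1 (total 1.0). The optimal assignment pairs them crosswise for a total of 1.65, which is why the tracker uses a proper solver.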

```python
def iou_batch(bb_test, bb_gt):
    """From SORT: Computes the pairwise IOU matrix between every box in bb_test and every box in bb_gt, all in the form [x1,y1,x2,y2]"""

    bb_gt = np.expand_dims(bb_gt, 0)
    bb_test = np.expand_dims(bb_test, 1)

    xx1 = np.maximum(bb_test[..., 0], bb_gt[..., 0])
    yy1 = np.maximum(bb_test[..., 1], bb_gt[..., 1])
    xx2 = np.minimum(bb_test[..., 2], bb_gt[..., 2])
    yy2 = np.minimum(bb_test[..., 3], bb_gt[..., 3])
    w = np.maximum(0., xx2 - xx1)
    h = np.maximum(0., yy2 - yy1)
    wh = w * h
    o = wh / ((bb_test[..., 2] - bb_test[..., 0]) * (bb_test[..., 3] - bb_test[..., 1])
              + (bb_gt[..., 2] - bb_gt[..., 0]) * (bb_gt[..., 3] - bb_gt[..., 1]) - wh)
    return (o)
```
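`iou_batch` broadcasts an (N, 4) array of detections against an (M, 4) array of tracker boxes, so one vectorized pass yields the full N×M matrix of intersection / (area_a + area_b − intersection).

The sketch below restates the same formula with `None` indexing instead of `np.expand_dims` (the two are equivalent here) and applies it to made-up boxes. `pairwise_iou` is an illustrative name, not a function in the repository.

```python
import numpy as np


def pairwise_iou(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """IoU matrix of shape (len(a), len(b)) for boxes given as [x1, y1, x2, y2]."""
    a = a[:, None, :]  # (N, 1, 4)
    b = b[None, :, :]  # (1, M, 4)
    iw = np.clip(np.minimum(a[..., 2], b[..., 2]) - np.maximum(a[..., 0], b[..., 0]), 0, None)
    ih = np.clip(np.minimum(a[..., 3], b[..., 3]) - np.maximum(a[..., 1], b[..., 1]), 0, None)
    inter = iw * ih
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area_a + area_b - inter)


dets = np.array([[0., 0., 10., 10.]])
trks = np.array([[5., 5., 15., 15.], [20., 20., 30., 30.]])
m = pairwise_iou(dets, trks)
# First pair: overlap 5 * 5 = 25, so IoU = 25 / (100 + 100 - 25) = 1/7; the second box is disjoint.
print(m.shape)
```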

```python
def convert_bbox_to_z(bbox):
    """Takes a bounding box in the form [x1,y1,x2,y2] and returns z in the form [x,y,s,r] where x,y is the center of the box and s is the scale/area and r is the aspect ratio"""
    w = bbox[2] - bbox[0]
    h = bbox[3] - bbox[1]
    x = bbox[0] + w/2.
    y = bbox[1] + h/2.
    s = w * h  # scale is just area
    r = w / float(h)
    return np.array([x, y, s, r]).reshape((4, 1))


def convert_x_to_bbox(x, score=None):
    """Takes a bounding box in the centre form [x,y,s,r] and returns it in the form
    [x1,y1,x2,y2] where x1,y1 is the top left and x2,y2 is the bottom right"""
    w = np.sqrt(x[2] * x[3])
    h = x[2] / w
    if score is None:
        return np.array([x[0]-w/2., x[1]-h/2., x[0]+w/2., x[1]+h/2.]).reshape((1, 4))
    else:
        return np.array([x[0]-w/2., x[1]-h/2., x[0]+w/2., x[1]+h/2., score]).reshape((1, 5))
```
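The Kalman state tracks a box as centre, area and aspect ratio rather than corners. Since s = w·h and r = w/h, their product is s·r = w², so the inverse is w = √(s·r) and h = s / w. This is exactly what `convert_x_to_bbox` computes. A box with h = 0 would divide by zero in `r = w / float(h)`.

The sketch below is a standalone round trip under illustrative names (`bbox_to_z`, `z_to_bbox`), using a made-up 40×20 box.

```python
import numpy as np


def bbox_to_z(bbox):
    """[x1, y1, x2, y2] -> column vector [cx, cy, s, r] with s = area, r = w / h."""
    w, h = bbox[2] - bbox[0], bbox[3] - bbox[1]
    return np.array([bbox[0] + w / 2, bbox[1] + h / 2, w * h, w / h]).reshape(4, 1)


def z_to_bbox(z):
    """Inverse: w = sqrt(s * r), h = s / w, then back to corner form."""
    cx, cy, s, r = z.ravel()
    w = np.sqrt(s * r)
    h = s / w
    return np.array([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2])


box = [10.0, 20.0, 50.0, 40.0]  # w = 40, h = 20
z = bbox_to_z(box)              # cx = 30, cy = 30, s = 800, r = 2
recovered = z_to_bbox(z)        # back to [10, 20, 50, 40]
print(z.ravel(), recovered)
```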

```python
class KalmanBoxTracker(object):
    """This class represents the internal state of individual tracked objects observed as bbox."""

    count = 0

    def __init__(self, bbox):
        """
        Initialize a tracker using initial bounding box

        Parameter 'bbox' must be a row [x1, y1, x2, y2, score, class]; the detected class id is read from index 5.
        """
        self.kf = KalmanFilter(dim_x=7, dim_z=4)
        self.kf.F = np.array([[1, 0, 0, 0, 1, 0, 0],
                              [0, 1, 0, 0, 0, 1, 0],
                              [0, 0, 1, 0, 0, 0, 1],
                              [0, 0, 0, 1, 0, 0, 0],
                              [0, 0, 0, 0, 1, 0, 0],
                              [0, 0, 0, 0, 0, 1, 0],
                              [0, 0, 0, 0, 0, 0, 1]])
        self.kf.H = np.array([[1, 0, 0, 0, 0, 0, 0],
                              [0, 1, 0, 0, 0, 0, 0],
                              [0, 0, 1, 0, 0, 0, 0],
                              [0, 0, 0, 1, 0, 0, 0]])

        # R: Covariance matrix of measurement noise (set high for noisy inputs -> more 'inertia' of boxes)
        self.kf.R[2:, 2:] *= 10.
        self.kf.P[4:, 4:] *= 1000.  # give high uncertainty to the unobservable initial velocities
        self.kf.P *= 10.
        # Q: Covariance matrix of process noise (set high for erratically moving things)
        self.kf.Q[-1, -1] *= 0.5
        self.kf.Q[4:, 4:] *= 0.5

        self.kf.x[:4] = convert_bbox_to_z(bbox)  # STATE VECTOR
        self.time_since_update = 0
        self.id = KalmanBoxTracker.count
        KalmanBoxTracker.count += 1
        self.history = []
        self.hits = 0
        self.hit_streak = 0
        self.age = 0
        self.centroidarr = []
        CX = (bbox[0]+bbox[2])//2
        CY = (bbox[1]+bbox[3])//2
        self.centroidarr.append((CX, CY))

        # keep the detector's class information
        self.detclass = bbox[5]

    def update(self, bbox):
        """
        Updates the state vector with observed bbox
        """
        self.time_since_update = 0
        self.history = []
        self.hits += 1
        self.hit_streak += 1
        self.kf.update(convert_bbox_to_z(bbox))
        self.detclass = bbox[5]
        CX = (bbox[0]+bbox[2])//2
        CY = (bbox[1]+bbox[3])//2
        self.centroidarr.append((CX, CY))

    def predict(self):
        """
        Advances the state vector and returns the predicted bounding box estimate
        """
        if ((self.kf.x[6]+self.kf.x[2]) <= 0):
            self.kf.x[6] *= 0.0
        self.kf.predict()
        self.age += 1
        if (self.time_since_update > 0):
            self.hit_streak = 0
        self.time_since_update += 1
        self.history.append(convert_x_to_bbox(self.kf.x))
        # bbox=self.history[-1]
        # CX = (bbox[0]+bbox[2])/2
        # CY = (bbox[1]+bbox[3])/2
        # self.centroidarr.append((CX,CY))

        return self.history[-1]

    def get_state(self):
        """
        Returns the current bounding box estimate as a (1, 8) array:
        [x1, y1, x2, y2, detected class, u_dot, v_dot, s_dot]
        """
        arr_detclass = np.expand_dims(np.array([self.detclass]), 0)

        arr_u_dot = np.expand_dims(self.kf.x[4], 0)
        arr_v_dot = np.expand_dims(self.kf.x[5], 0)
        arr_s_dot = np.expand_dims(self.kf.x[6], 0)

        return np.concatenate((convert_x_to_bbox(self.kf.x), arr_detclass, arr_u_dot, arr_v_dot, arr_s_dot), axis=1)
```
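`KalmanBoxTracker` uses a constant-velocity model over the state [cx, cy, s, r, vx, vy, vs]. Each frame, the transition matrix `F` adds each velocity to its matching position-like term, while the aspect ratio r has no velocity and stays fixed. Before that step, `predict()` zeroes the area velocity if it would drive the area to zero or below.

The sketch below reproduces only the mean update x' = F·x with plain NumPy, using a made-up state. It deliberately leaves out the covariance propagation (P, Q) that filterpy's `KalmanFilter.predict` also performs.

```python
import numpy as np

# Same layout as KalmanBoxTracker: identity plus the three velocity couplings.
F = np.eye(7)
F[0, 4] = F[1, 5] = F[2, 6] = 1.0  # cx += vx, cy += vy, s += vs


def predict_mean(x: np.ndarray) -> np.ndarray:
    """Mean-only prediction step, including the area clamp from predict()."""
    x = x.copy()
    if x[6, 0] + x[2, 0] <= 0:  # predicted area would be non-positive
        x[6, 0] = 0.0           # so freeze the area velocity
    return F @ x


x = np.array([[30.], [30.], [800.], [2.], [5.], [-2.], [10.]])
x_next = predict_mean(x)  # centre moves to (35, 28), area grows to 810, r stays 2
print(x_next.ravel())
```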

| 171 |

+

def associate_detections_to_trackers(detections, trackers, iou_threshold=0.3):

|

| 172 |

+

"""

|

| 173 |

+

Assigns detections to tracked object (both represented as bounding boxes)

|

| 174 |

+

Returns 3 lists of

|

| 175 |

+

1. matches,

|

| 176 |

+

2. unmatched_detections

|

| 177 |

+

3. unmatched_trackers

|

| 178 |

+

"""

|

| 179 |

+

if (len(trackers) == 0):

|

| 180 |

+

return np.empty((0, 2), dtype=int), np.arange(len(detections)), np.empty((0, 5), dtype=int)

|

| 181 |

+

|

| 182 |

+

iou_matrix = iou_batch(detections, trackers)

|

| 183 |

+

|

| 184 |

+

if min(iou_matrix.shape) > 0:

|

| 185 |

+

a = (iou_matrix > iou_threshold).astype(np.int32)

|

| 186 |

+

if a.sum(1).max() == 1 and a.sum(0).max() == 1:

|

| 187 |

+

matched_indices = np.stack(np.where(a), axis=1)

|

| 188 |

+

else:

|

| 189 |

+

matched_indices = linear_assignment(-iou_matrix)

|

| 190 |

+

else:

|

| 191 |

+

matched_indices = np.empty(shape=(0, 2))

|

| 192 |

+

|

| 193 |

+

unmatched_detections = []

|

| 194 |

+

for d, det in enumerate(detections):

|

| 195 |

+

if (d not in matched_indices[:, 0]):

|

| 196 |

+

unmatched_detections.append(d)

|

| 197 |

+

|

| 198 |

+

unmatched_trackers = []

|

| 199 |

+

for t, trk in enumerate(trackers):

|

| 200 |

+

if (t not in matched_indices[:, 1]):

|

| 201 |

+

unmatched_trackers.append(t)

|

| 202 |

+

|

| 203 |

+

# filter out matched with low IOU

|

| 204 |

+

matches = []

|

| 205 |

+

for m in matched_indices:

|

| 206 |

+

if (iou_matrix[m[0], m[1]] < iou_threshold):

|

| 207 |

+

unmatched_detections.append(m[0])

|

| 208 |

+

unmatched_trackers.append(m[1])

|

| 209 |

+

else:

|

| 210 |

+

matches.append(m.reshape(1, 2))

|

| 211 |

+

|

| 212 |

+

if (len(matches) == 0):

|

| 213 |

+

matches = np.empty((0, 2), dtype=int)

|

| 214 |

+

else:

|

| 215 |

+

matches = np.concatenate(matches, axis=0)

|

| 216 |

+

|

| 217 |

+

return matches, np.array(unmatched_detections), np.array(unmatched_trackers)
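The association step above pairs detections with predicted tracks by IoU and then discards pairs whose IoU falls below the threshold. A dependency-free sketch of the same contract is below. It assumes boxes are plain `(x1, y1, x2, y2)` tuples and, as a plainly named substitute for `linear_assignment`, brute-forces every one-to-one pairing, so it is only practical for a handful of boxes. The names `iou` and `associate` are illustrative, not part of this repo.

```python
from itertools import permutations


def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(detections, trackers, iou_threshold=0.3):
    """Return (matches, unmatched_dets, unmatched_trks) as lists of indices.

    Brute force stands in for linear_assignment: every one-to-one pairing of
    the smaller set onto the larger one is scored by its total IoU.
    """
    n_d, n_t = len(detections), len(trackers)
    iou_m = [[iou(d, t) for t in trackers] for d in detections]
    if n_d <= n_t:
        candidates = [list(zip(range(n_d), p)) for p in permutations(range(n_t), n_d)]
    else:
        candidates = [list(zip(p, range(n_t))) for p in permutations(range(n_d), n_t)]
    best = max(candidates, key=lambda pairs: sum(iou_m[d][t] for d, t in pairs))

    # Same post-filter as above: assigned pairs below the threshold become unmatched.
    matches = [(d, t) for d, t in best if iou_m[d][t] >= iou_threshold]
    matched_d = {d for d, _ in matches}
    matched_t = {t for _, t in matches}
    unmatched_dets = [d for d in range(n_d) if d not in matched_d]
    unmatched_trks = [t for t in range(n_t) if t not in matched_t]
    return matches, unmatched_dets, unmatched_trks


dets = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
trks = [(21, 21, 31, 31), (1, 1, 11, 11)]
matches, unmatched_dets, unmatched_trks = associate(dets, trks)
print(sorted(matches), unmatched_dets, unmatched_trks)  # [(0, 1), (1, 0)] [2] []
```

The third detection overlaps no track, so it comes back unmatched and, in `Sort.update`, would seed a new tracker.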


class Sort(object):
    def __init__(self, max_age=1, min_hits=3, iou_threshold=0.3):
        """
        Parameters for SORT
        """
        self.max_age = max_age
        self.min_hits = min_hits
        self.iou_threshold = iou_threshold
        self.trackers = []
        self.frame_count = 0

    def getTrackers(self):
        return self.trackers

    def update(self, dets=np.empty((0, 6))):
        """
        Parameters:
        'dets' - a numpy array of detections in the format [[x1, y1, x2, y2, score, detclass], [x1, y1, x2, y2, score, detclass], ...]

        Call this method once for every frame, even when the frame has no detections (pass np.empty((0, 6))).

        Returns an array with one row per reported track: the state from
        KalmanBoxTracker.get_state() with the object ID (offset by +1) appended as the last column.

        NOTE: The number of objects returned may differ from the number of objects provided.
        """
        self.frame_count += 1

        # Get predicted locations from existing trackers
        trks = np.zeros((len(self.trackers), 6))
        to_del = []
        ret = []
        for t, trk in enumerate(trks):
            pos = self.trackers[t].predict()[0]
            trk[:] = [pos[0], pos[1], pos[2], pos[3], 0, 0]
            if np.any(np.isnan(pos)):
                to_del.append(t)
        trks = np.ma.compress_rows(np.ma.masked_invalid(trks))
        for t in reversed(to_del):
            self.trackers.pop(t)
        matched, unmatched_dets, unmatched_trks = associate_detections_to_trackers(
            dets, trks, self.iou_threshold)

        # Update matched trackers with assigned detections
        for m in matched:
            self.trackers[m[1]].update(dets[m[0], :])

        # Create and initialize new trackers for unmatched detections
        for i in unmatched_dets:
            trk = KalmanBoxTracker(np.hstack((dets[i, :], np.array([0]))))
            # trk = KalmanBoxTracker(np.hstack(dets[i,:])
            self.trackers.append(trk)

        i = len(self.trackers)
        for trk in reversed(self.trackers):
            d = trk.get_state()[0]
            if (trk.time_since_update < 1) and (trk.hit_streak >= self.min_hits or self.frame_count <= self.min_hits):
                # +1'd because MOT benchmark requires positive value
                ret.append(np.concatenate((d, [trk.id+1])).reshape(1, -1))
            i -= 1
            # remove dead tracklet
            if (trk.time_since_update > self.max_age):
                self.trackers.pop(i)
        if (len(ret) > 0):
            return np.concatenate(ret)
        return np.empty((0, 6))
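Whether a track shows up in the output of `Sort.update` depends on `time_since_update` and `hit_streak`, which are maintained inside `KalmanBoxTracker` (defined outside this excerpt). The sketch below assumes the usual SORT bookkeeping (a prediction after a missed frame resets `hit_streak`; a matched update resets `time_since_update`) and uses a hypothetical `TrackState` class to trace one track under the default `min_hits=3`, `max_age=1`.

```python
from dataclasses import dataclass


@dataclass
class TrackState:
    """Hypothetical stand-in for KalmanBoxTracker's bookkeeping fields."""
    time_since_update: int = 0
    hit_streak: int = 0


def step(track, matched):
    """One frame for an existing track: predict(), then update() if matched.

    Assumes standard SORT bookkeeping, since KalmanBoxTracker is not shown here.
    """
    # predict(): a track that already missed a frame loses its streak
    if track.time_since_update > 0:
        track.hit_streak = 0
    track.time_since_update += 1
    # update(): a matched detection refreshes the track
    if matched:
        track.time_since_update = 0
        track.hit_streak += 1


def is_reported(track, frame_count, min_hits):
    # Output condition used in Sort.update
    return track.time_since_update < 1 and (
        track.hit_streak >= min_hits or frame_count <= min_hits)


def is_dead(track, max_age):
    # Deletion condition used in Sort.update
    return track.time_since_update > max_age


min_hits, max_age = 3, 1   # Sort's default arguments
trk = TrackState()         # created from an unmatched detection at frame 5 (after warm-up)
history = []
for frame, matched in zip(range(6, 11), [True, True, True, False, False]):
    step(trk, matched)
    history.append((frame, is_reported(trk, frame, min_hits), is_dead(trk, max_age)))
print(history)
```

Under these assumptions the track is first reported at frame 8 (its third consecutive hit) and is removed at frame 10, once two missed frames exceed `max_age=1`. During the first `min_hits` frames of a sequence, the `frame_count <= min_hits` clause lets brand-new tracks through immediately.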


def parse_args():
    """Parse input arguments."""
    parser = argparse.ArgumentParser(description='SORT demo')
    parser.add_argument('--display', dest='display',
                        help='Display online tracker output (slow) [False]', action='store_true')
    parser.add_argument(
        "--seq_path", help="Path to detections.", type=str, default='data')
    parser.add_argument(
        "--phase", help="Subdirectory in seq_path.", type=str, default='train')
    parser.add_argument("--max_age",
                        help="Maximum number of frames to keep alive a track without associated detections.",
                        type=int, default=1)
    parser.add_argument("--min_hits",
                        help="Minimum number of associated detections before track is initialised.",
                        type=int, default=3)
    parser.add_argument("--iou_threshold",
                        help="Minimum IOU for match.", type=float, default=0.3)
    args = parser.parse_args()
    return args


if __name__ == '__main__':
    # all train
    args = parse_args()
    display = args.display
    phase = args.phase
    total_time = 0.0
    total_frames = 0
    colours = np.random.rand(32, 3)  # used only for display
    if (display):
        if not os.path.exists('mot_benchmark'):
            print('\n\tERROR: mot_benchmark link not found!\n\n Create a symbolic link to the MOT benchmark\n (https://motchallenge.net/data/2D_MOT_2015/#download). E.g.:\n\n $ ln -s /path/to/MOT2015_challenge/2DMOT2015 mot_benchmark\n\n')
            exit()
        plt.ion()
        fig = plt.figure()
        ax1 = fig.add_subplot(111, aspect='equal')

    if not os.path.exists('output'):
        os.makedirs('output')
    pattern = os.path.join(args.seq_path, phase, '*', 'det', 'det.txt')
    for seq_dets_fn in glob.glob(pattern):
        mot_tracker = Sort(max_age=args.max_age,
                           min_hits=args.min_hits,
                           iou_threshold=args.iou_threshold)  # create instance of the SORT tracker
        seq_dets = np.loadtxt(seq_dets_fn, delimiter=',')
        seq = seq_dets_fn[pattern.find('*'):].split(os.path.sep)[0]

        with open(os.path.join('output', '%s.txt' % (seq)), 'w') as out_file:
            print("Processing %s." % (seq))
            for frame in range(int(seq_dets[:, 0].max())):
                frame += 1  # detection and frame numbers begin at 1
                dets = seq_dets[seq_dets[:, 0] == frame, 2:7]
                # convert from [x1,y1,w,h] to [x1,y1,x2,y2]
                dets[:, 2:4] += dets[:, 0:2]
                total_frames += 1

                if (display):
                    fn = os.path.join('mot_benchmark', phase, seq,
                                      'img1', '%06d.jpg' % (frame))
                    im = io.imread(fn)
                    ax1.imshow(im)
                    plt.title(seq + ' Tracked Targets')

                start_time = time.time()
                trackers = mot_tracker.update(dets)
                cycle_time = time.time() - start_time
                total_time += cycle_time

                for d in trackers:
                    # the object ID is the last column of each row returned by Sort.update
                    # (column 4 holds the detection class in this version of get_state)
                    print('%d,%d,%.2f,%.2f,%.2f,%.2f,1,-1,-1,-1' %
                          (frame, d[-1], d[0], d[1], d[2]-d[0], d[3]-d[1]), file=out_file)
                    if (display):
                        d = d.astype(np.int32)
                        ax1.add_patch(patches.Rectangle(
                            (d[0], d[1]), d[2]-d[0], d[3]-d[1], fill=False, lw=3, ec=colours[d[-1] % 32, :]))

                if (display):
                    fig.canvas.flush_events()
                    plt.draw()
                    ax1.cla()

    print("Total Tracking took: %.3f seconds for %d frames or %.1f FPS" %
          (total_time, total_frames, total_frames / total_time))

    if (display):
        print("Note: to get real runtime results run without the option: --display")
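The demo reads MOT-style `det.txt` rows, converts `[x, y, w, h]` to corner form before calling `Sort.update`, and writes each track back out in `[x, y, w, h]` form. A small sketch of that round trip is below, assuming the MOT Challenge column order that the `2:7` slice implies (frame, id, left, top, width, height, score, ...). `parse_det_line` and `format_track_line` are hypothetical helpers, not functions in this repo.

```python
def parse_det_line(line):
    """Parse one det.txt row into (frame, [x1, y1, x2, y2, score])."""
    fields = [float(v) for v in line.strip().split(',')]
    frame = int(fields[0])
    x, y, w, h, score = fields[2:7]  # same columns as seq_dets[..., 2:7]
    return frame, [x, y, x + w, y + h, score]


def format_track_line(frame, box, track_id):
    """Format one output row as the demo does: frame,id,left,top,width,height,1,-1,-1,-1."""
    x1, y1, x2, y2 = box
    return '%d,%d,%.2f,%.2f,%.2f,%.2f,1,-1,-1,-1' % (
        frame, track_id, x1, y1, x2 - x1, y2 - y1)


frame, det = parse_det_line("1,-1,100,50,20,40,0.9,-1,-1,-1")
print(frame, det)                            # 1 [100.0, 50.0, 120.0, 90.0, 0.9]
print(format_track_line(frame, det[:4], 7))  # 1,7,100.00,50.00,20.00,40.00,1,-1,-1,-1
```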

tracker.py

ADDED

@@ -0,0 +1,116 @@

import cv2
import numpy as np

from sort import Sort

# SORT tracking algorithm initialization
sort_max_age = 50
sort_min_hits = 2
sort_iou_thresh = 0.2
sort_tracker = Sort(max_age=sort_max_age,
                    min_hits=sort_min_hits,
                    iou_threshold=sort_iou_thresh)
track_color_id = 0


# SORT tracking helpers
# Compute a color for every box and track
palette = (2 ** 11 - 1, 2 ** 15 - 1, 2 ** 20 - 1)


def compute_color_for_labels(label):
    color = [int(int(p * (label ** 2 - label + 1)) % 255) for p in palette]
    return tuple(color)



def bbox_rel(*xyxy):
    """Calculates the center-based box (x_c, y_c, w, h) from absolute corner pixel values."""
    bbox_left = min([xyxy[0].item(), xyxy[2].item()])
    bbox_top = min([xyxy[1].item(), xyxy[3].item()])
    bbox_w = abs(xyxy[0].item() - xyxy[2].item())
    bbox_h = abs(xyxy[1].item() - xyxy[3].item())
    x_c = (bbox_left + bbox_w / 2)
    y_c = (bbox_top + bbox_h / 2)
    w = bbox_w
    h = bbox_h
    return x_c, y_c, w, h
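`bbox_rel` expects tensor-like coordinates (hence the `.item()` calls). The same conversion on plain Python numbers, together with its inverse, is sketched below; `xyxy_to_cxcywh` and `cxcywh_to_xyxy` are illustrative names, not functions in this repo.

```python
def xyxy_to_cxcywh(x1, y1, x2, y2):
    """Corner coordinates -> (center_x, center_y, width, height), like bbox_rel."""
    left, top = min(x1, x2), min(y1, y2)
    w, h = abs(x1 - x2), abs(y1 - y2)
    return left + w / 2, top + h / 2, w, h


def cxcywh_to_xyxy(cx, cy, w, h):
    """Inverse conversion back to (x1, y1, x2, y2)."""
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


print(xyxy_to_cxcywh(10, 20, 50, 80))      # (30.0, 50.0, 40, 60)
print(cxcywh_to_xyxy(30.0, 50.0, 40, 60))  # (10.0, 20.0, 50.0, 80.0)
```

Because it uses `min` and `abs`, the forward conversion gives the same result when the corners arrive swapped, e.g. `(50, 80, 10, 20)`.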



def draw_boxes(img, bbox, identities=None, categories=None,
               names=None, color_box=None, offset=(0, 0)):
    """Draw bounding boxes, ID labels and center points on img."""
    for i, box in enumerate(bbox):
        x1, y1, x2, y2 = [int(v) for v in box]
        x1 += offset[0]
        x2 += offset[0]
        y1 += offset[1]
        y2 += offset[1]
        cat = int(categories[i]) if categories is not None else 0
        id = int(identities[i]) if identities is not None else 0
        data = (int((box[0]+box[2])/2), (int((box[1]+box[3])/2)))
        label = str(id)

        if color_box:
            color = compute_color_for_labels(id)
            (w, h), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.6, 1)
            cv2.rectangle(img, (x1, y1), (x2, y2), color, 2)
            cv2.rectangle(img, (x1, y1 - 20), (x1 + w, y1), (255, 191, 0), -1)
            cv2.putText(img, label, (x1, y1 - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.6,
                        [255, 255, 255], 1)
            cv2.circle(img, data, 3, color, -1)
        else:
            (w, h), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.6, 1)
            cv2.rectangle(img, (x1, y1), (x2, y2), (255, 191, 0), 2)
            # cv2.rectangle(img, (x1, y1 - 20), (x1 + w, y1), (255, 191, 0), -1)
            # cv2.putText(img, label, (x1, y1 - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.6,
            #             [255, 255, 255], 1)
            cv2.rectangle(img, (x1, y1 - 3*h), (x1 + w, y1), (255, 191, 0), -1)
            cv2.putText(img, label, (x1, y1 - 2*h), cv2.FONT_HERSHEY_SIMPLEX, 0.6,
                        [255, 255, 255], 1)
            cv2.circle(img, data, 3, (255, 191, 0), -1)
    return img



def _display_detected_tracks(dets, img, color_box=None):
    # pass an empty array to sort
    dets_to_sort = np.empty((0, 6))

    # NOTE: We send in detected object class too
    for x1, y1, x2, y2, conf, detclass in dets.cpu().detach().numpy():
        dets_to_sort = np.vstack((dets_to_sort,
                                  np.array([x1, y1, x2, y2,
                                            conf, detclass])))

    # Run SORT
    tracked_dets = sort_tracker.update(dets_to_sort)
    # tracks = sort_tracker.getTrackers()

    # loop over tracks
    # for track in tracks:
    #     if color_box:
    #         color = compute_color_for_labels(track_color_id)
    #         [cv2.line(img, (int(track.centroidarr[i][0]), int(track.centroidarr[i][1])),
    #                   (int(track.centroidarr[i+1][0]),
    #                    int(track.centroidarr[i+1][1])),
    #                   color, thickness=3) for i, _ in enumerate(track.centroidarr)
    #          if i < len(track.centroidarr)-1]
    #         track_color_id = track_color_id+1
    #     else: