initial commit

- app.py +42 -0

- images/53896.jpg +0 -0

- images/76320aa1c81ec116b3fec0212d95ed4c.png +0 -0

- images/C_M.png +0 -0

- images/L_A.png +0 -0

- images/LogReg.png +0 -0

- images/Struct.png +0 -0

- images/grafic.jpg +0 -0

- images/myTinyBERT.png +0 -0

- images/scale_1200.png +0 -0

- models/gpt/config.json +41 -0

- models/gpt/generation_config.json +7 -0

- models/gpt/merges.txt +0 -0

- models/gpt/model.safetensors +3 -0

- models/gpt/special_tokens_map.json +37 -0

- models/gpt/tokenizer_config.json +59 -0

- models/gpt/vocab.json +0 -0

- models/model_weight_bert.pt +3 -0

- models/rest/__pycache__/model_lstm.cpython-312.pyc +0 -0

- models/rest/model_lstm.py +48 -0

- models/rest/model_weights_3000cl.pt +3 -0

- models/rest/vocab_to_int.npy +3 -0

- pages/analysis_of_reviews.py +116 -0

- pages/gpt.py +96 -0

- pages/tgchannels.py +97 -0

- requirements.txt +83 -0

app.py

ADDED

@@ -0,0 +1,42 @@
+import pandas as pd
+import matplotlib.pyplot as plt
+import streamlit as st
+
+st.markdown(
+    "<h1 style='text-align: center;'>GPT Team project</h1>",
+    unsafe_allow_html=True
+)
+
+
+
+st.markdown(
+    """
+    <style>
+        button[title^=Exit]+div [data-testid=stImage]{
+            text-align: center;
+            display: block;
+            margin-left: auto;
+            margin-right: auto;
+            width: 100%;
+        }
+    </style>
+    """, unsafe_allow_html=True
+)
+
+col1, col2, col3 = st.columns([1, 2, 1])  # The center column is wider than the others
+with col2:
+    st.image('images/76320aa1c81ec116b3fec0212d95ed4c.png', width=500)
+
+st.markdown("---")
+
+st.markdown("""
+
+**Authors:** [Михаил Бутин](https://github.com/allspicepaege), [Галина Горяинова](https://github.com/ratOfSteel), [Анатолий Яковлев](https://github.com/cdxxi)
+
+**Description:**
+- **Home page**: General information and navigation 🌏
+- **First page**: Restaurant review classification 🍷
+- **Second page**: Nonsense generator 🤪
+- **Third page**: Topic classification of news from Telegram channels 📰 [TGBOT](https://t.me/nlp_rubert_tiny_bot)
+
+""")

images/53896.jpg

ADDED

images/76320aa1c81ec116b3fec0212d95ed4c.png

ADDED

images/C_M.png

ADDED

images/L_A.png

ADDED

images/LogReg.png

ADDED

images/Struct.png

ADDED

images/grafic.jpg

ADDED

images/myTinyBERT.png

ADDED

images/scale_1200.png

ADDED

models/gpt/config.json

ADDED

@@ -0,0 +1,41 @@
+{
+  "_name_or_path": "ai-forever/rugpt3small_based_on_gpt2",
+  "activation_function": "gelu_new",
+  "architectures": [
+    "GPT2LMHeadModel"
+  ],
+  "attn_pdrop": 0.1,
+  "bos_token_id": 1,
+  "embd_pdrop": 0.1,
+  "eos_token_id": 2,
+  "gradient_checkpointing": false,
+  "id2label": {
+    "0": "LABEL_0"
+  },
+  "initializer_range": 0.02,
+  "label2id": {
+    "LABEL_0": 0
+  },
+  "layer_norm_epsilon": 1e-05,
+  "model_type": "gpt2",
+  "n_ctx": 2048,
+  "n_embd": 768,
+  "n_head": 12,
+  "n_inner": null,
+  "n_layer": 12,
+  "n_positions": 2048,
+  "pad_token_id": 0,
+  "reorder_and_upcast_attn": false,
+  "resid_pdrop": 0.1,
+  "scale_attn_by_inverse_layer_idx": false,
+  "scale_attn_weights": true,
+  "summary_activation": null,
+  "summary_first_dropout": 0.1,
+  "summary_proj_to_labels": true,
+  "summary_type": "cls_index",
+  "summary_use_proj": true,
+  "torch_dtype": "float32",
+  "transformers_version": "4.49.0",
+  "use_cache": true,
+  "vocab_size": 50264
+}
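As a rough cross-check of this config, the parameter count of a GPT-2 style model can be estimated from `vocab_size`, `n_positions`, `n_embd`, and `n_layer`. The sketch below assumes tied input/output embeddings and an MLP width of `4 * n_embd` (the GPT-2 default when `n_inner` is null); the function name `gpt2_param_count` is hypothetical and not part of this commit.

```python
def gpt2_param_count(vocab_size: int, n_positions: int, n_embd: int, n_layer: int) -> int:
    """Estimate GPT-2 parameters, assuming tied embeddings and n_inner = 4 * n_embd."""
    n_inner = 4 * n_embd
    # Token embeddings plus learned position embeddings.
    embeddings = vocab_size * n_embd + n_positions * n_embd
    # Each LayerNorm has a weight and a bias vector.
    layer_norm = 2 * n_embd
    # Fused QKV projection plus the attention output projection (weights + biases).
    attention = (n_embd * 3 * n_embd + 3 * n_embd) + (n_embd * n_embd + n_embd)
    # Two-layer feed-forward block (weights + biases).
    mlp = (n_embd * n_inner + n_inner) + (n_inner * n_embd + n_embd)
    per_layer = 2 * layer_norm + attention + mlp
    # The final LayerNorm is added once after all blocks.
    return embeddings + n_layer * per_layer + layer_norm


params = gpt2_param_count(vocab_size=50264, n_positions=2048, n_embd=768, n_layer=12)
print(params)      # 125231616
print(params * 4)  # 500926464 bytes at float32
```

At four bytes per float32 parameter this gives 500926464 bytes, close to the 500941440 bytes reported in the LFS pointer for `models/gpt/model.safetensors`. The small remainder would be consistent with file metadata, though that is an assumption.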

models/gpt/generation_config.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "_from_model_config": true,
+  "bos_token_id": 1,
+  "eos_token_id": 2,
+  "pad_token_id": 0,
+  "transformers_version": "4.49.0"
+}

models/gpt/merges.txt

ADDED

The diff for this file is too large to render.

models/gpt/model.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:35ab0cecc7830d01f87207685d0e56106529f0a76a5d492fadab75381810e11d
+size 500941440
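The three lines above are a Git LFS pointer, not the weights themselves: each line is a key, a space, and a value. A minimal sketch of reading such a pointer follows; the helper name `parse_lfs_pointer` is hypothetical and the parser only handles the three keys shown in this commit.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into its version, hash algorithm, digest, and size."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>".
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid has the form "<algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:35ab0cecc7830d01f87207685d0e56106529f0a76a5d492fadab75381810e11d
size 500941440
"""
info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```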

models/gpt/special_tokens_map.json

ADDED

@@ -0,0 +1,37 @@
+{
+  "bos_token": {
+    "content": "<s>",
+    "lstrip": false,
+    "normalized": true,
+    "rstrip": false,
+    "single_word": false
+  },
+  "eos_token": {
+    "content": "</s>",
+    "lstrip": false,
+    "normalized": true,
+    "rstrip": false,
+    "single_word": false
+  },
+  "mask_token": {
+    "content": "<mask>",
+    "lstrip": false,
+    "normalized": false,
+    "rstrip": false,
+    "single_word": false
+  },
+  "pad_token": {
+    "content": "<pad>",
+    "lstrip": false,
+    "normalized": true,
+    "rstrip": false,
+    "single_word": false
+  },
+  "unk_token": {
+    "content": "<unk>",
+    "lstrip": false,
+    "normalized": true,
+    "rstrip": false,
+    "single_word": false
+  }
+}

models/gpt/tokenizer_config.json

ADDED

@@ -0,0 +1,59 @@
+{
+  "add_bos_token": false,
+  "add_prefix_space": false,
+  "added_tokens_decoder": {
+    "0": {
+      "content": "<pad>",
+      "lstrip": false,
+      "normalized": true,
+      "rstrip": false,
+      "single_word": false,
+      "special": true
+    },
+    "1": {
+      "content": "<s>",
+      "lstrip": false,
+      "normalized": true,
+      "rstrip": false,
+      "single_word": false,
+      "special": true
+    },
+    "2": {
+      "content": "</s>",
+      "lstrip": false,
+      "normalized": true,
+      "rstrip": false,
+      "single_word": false,
+      "special": true
+    },
+    "3": {
+      "content": "<unk>",
+      "lstrip": false,
+      "normalized": true,
+      "rstrip": false,
+      "single_word": false,
+      "special": true
+    },
+    "4": {
+      "content": "<mask>",
+      "lstrip": false,
+      "normalized": false,
+      "rstrip": false,
+      "single_word": false,
+      "special": true
+    }
+  },
+  "bos_token": "<s>",
+  "clean_up_tokenization_spaces": true,
+  "eos_token": "</s>",
+  "errors": "replace",
+  "extra_special_tokens": {},
+  "mask_token": "<mask>",
+  "model_max_length": 2048,
+  "pad_token": "<pad>",
+  "padding_side": "left",
+  "tokenizer_class": "GPT2Tokenizer",
+  "truncation_side": "left",
+  "trust_remote_code": false,
+  "unk_token": "<unk>"
+}
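The `padding_side` and `truncation_side` settings above are both `left`, which suits a causal language model: the most recent tokens are kept and end up adjacent to the position where generation continues. A minimal pure-Python sketch of that behaviour, assuming pad id 0 (the `<pad>` entry above); the helper `pad_and_truncate_left` is hypothetical and is not the tokenizer's own implementation.

```python
def pad_and_truncate_left(ids, max_length, pad_id=0):
    """Keep the last max_length ids, then pad on the left up to max_length."""
    # Left truncation drops tokens from the start of the sequence.
    kept = ids[-max_length:] if max_length > 0 else []
    # Left padding places pad ids before the real tokens.
    return [pad_id] * (max_length - len(kept)) + kept


short = pad_and_truncate_left([5, 6, 7], max_length=5)
long = pad_and_truncate_left([1, 2, 3, 4, 5, 6], max_length=4)
print(short)  # [0, 0, 5, 6, 7]
print(long)   # [3, 4, 5, 6]
```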

models/gpt/vocab.json

ADDED

The diff for this file is too large to render.

models/model_weight_bert.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:dbab0ed274346035c59d70570ec310eccd4bae3a99026bddde54cc1ae13d2745
+size 117123291

models/rest/__pycache__/model_lstm.cpython-312.pyc

ADDED

Binary file (3.63 kB).

models/rest/model_lstm.py

ADDED

@@ -0,0 +1,48 @@
+import streamlit as st
+import torch
+import torch.nn as nn
+from nltk.corpus import stopwords
+from nltk.tokenize import word_tokenize
+import re
+import string
+from collections import Counter
+import numpy as np
+from typing import List
+import time
+
+# Loading the pretrained model
+class BahdanauAttention(nn.Module):
+    def __init__(self, hidden_size: int):
+        super().__init__()
+        self.Wa = nn.Linear(hidden_size, hidden_size)
+        self.Wk = nn.Linear(hidden_size, hidden_size)
+        self.Wv = nn.Linear(hidden_size, 1)
+
+    def forward(self, query, keys):
+        query = query.unsqueeze(1)  # (batch_size, 1, hidden_size)
+        scores = self.Wv(torch.tanh(self.Wa(query) + self.Wk(keys))).squeeze(2)  # (batch_size, seq_len)
+        attention_weights = torch.softmax(scores, dim=1)  # (batch_size, seq_len)
+        context = torch.bmm(attention_weights.unsqueeze(1), keys).squeeze(1)  # (batch_size, hidden_size)
+        return context, attention_weights
+
+class LSTM_Word2Vec_Attention(nn.Module):
+    def __init__(self, hidden_size: int, vocab_size: int, embedding_dim: int):
+        super().__init__()
+        self.embedding = nn.Embedding(vocab_size, embedding_dim)
+        self.lstm = nn.LSTM(embedding_dim, hidden_size, batch_first=True)
+        self.attn = BahdanauAttention(hidden_size)
+        self.clf = nn.Sequential(
+            nn.Linear(hidden_size, 128),
+            nn.Dropout(),
+            nn.Tanh(),
+            nn.Linear(128, 3)
+        )
+        self.sigmoid = nn.Sigmoid()
+
+    def forward(self, x):
+        embedded = self.embedding(x)
+        output, (hidden, _) = self.lstm(embedded)
+        context, attention_weights = self.attn(hidden[-1], output)
+        output = self.clf(context.squeeze(1))
+        output = self.sigmoid(output)
+        return output, attention_weights
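The `BahdanauAttention.forward` computation above can be traced by hand for a single example without a batch dimension: project the query and each key, add them, apply `tanh`, reduce to one score per time step, softmax the scores, and take the weighted sum of the keys. The sketch below is a dependency-free illustration under toy assumptions (identity projection matrices, zero biases, `hidden_size = 2`); the helper names are hypothetical and this is not the module's own code.

```python
import math


def matvec(W, x, b):
    """Apply a linear layer: W @ x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(query, keys, Wa, ba, Wk, bk, wv, bv):
    """Additive attention for one example: returns (context, weights)."""
    q_proj = matvec(Wa, query, ba)
    scores = []
    for key in keys:
        k_proj = matvec(Wk, key, bk)
        hidden = [math.tanh(q + k) for q, k in zip(q_proj, k_proj)]
        # One scalar score per time step.
        scores.append(sum(w * h for w, h in zip(wv, hidden)) + bv)
    weights = softmax(scores)
    dim = len(keys[0])
    # The context is the attention-weighted sum of the keys.
    context = [sum(w * key[d] for w, key in zip(weights, keys)) for d in range(dim)]
    return context, weights


# Toy inputs: seq_len = 3, hidden_size = 2, identity projections (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
zero = [0.0, 0.0]
context, weights = additive_attention(
    query=[0.5, -0.5],
    keys=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    Wa=identity, ba=zero, Wk=identity, bk=zero, wv=[1.0, 1.0], bv=0.0,
)
print(weights, context)
```

The weights sum to one across the sequence, which is what lets them be read as a per-token importance distribution.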

models/rest/model_weights_3000cl.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1f16531decf5e481fe8797e7091a99064d96908cb542f9c575bccad8b1154b28
+size 137376

models/rest/vocab_to_int.npy

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6a976c15c580cd058e957ed5d07370eaf0bfa8fe27e683bc566c63e646710fe2
+size 12615

pages/analysis_of_reviews.py

ADDED

|

@@ -0,0 +1,116 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

import pandas as pd

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn as nn

|

| 5 |

+

|

| 6 |

+

import nltk

|

| 7 |

+

nltk.download('stopwords')

|

| 8 |

+

nltk.download('punkt_tab')

|

| 9 |

+

nltk.download('wordnet')

|

| 10 |

+

|

| 11 |

+

from nltk.corpus import stopwords

|

| 12 |

+

from nltk.tokenize import word_tokenize

|

| 13 |

+

stop_words = set(stopwords.words('russian'))

|

| 14 |

+

|

| 15 |

+

from nltk.tokenize import word_tokenize

|

| 16 |

+

import re

|

| 17 |

+

import string

|

| 18 |

+

from collections import Counter

|

| 19 |

+

import numpy as np

|

| 20 |

+

from typing import List

|

| 21 |

+

import time

|

| 22 |

+

|

| 23 |

+

from models.rest.model_lstm import LSTM_Word2Vec_Attention

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

# # Добавление цвета для значений F1-Macro

|

| 27 |

+

def color_high(val):

|

| 28 |

+

color = 'lightgreen' if val > 0.80 else ''

|

| 29 |

+

return f'background-color: {color}'

|

| 30 |

+

|

| 31 |

+

# Данные для первой таблицы

|

| 32 |

+

data1 = {

|

| 33 |

+

"Модель": ["Линейная регрессия", "Дерево решений"],

|

| 34 |

+

"F1-Macro": [0.2235, 0.1688]

|

| 35 |

+

}

|

| 36 |

+

|

| 37 |

+

# Данные для второй таблицы

|

| 38 |

+

data2 = {

|

| 39 |

+

"Модель": ["Линейная регрессия", "Наивный Байес", "Деревья", "XGBoost", "CatBoost"],

|

| 40 |

+

"F1-Macro": [0.7821, 0.7313, 0.8170, 0.7785, 0.7693]

|

| 41 |

+

}

|

| 42 |

+

|

| 43 |

+

# Создание DataFrame

|

| 44 |

+

df1 = pd.DataFrame(data1)

|

| 45 |

+

df2 = pd.DataFrame(data2)

|

| 46 |

+

|

| 47 |

+

st.title(":blue[_Классификация отзывов на рестораны_]")

|

| 48 |

+

st.image('images/53896.jpg')

|

| 49 |

+

|

| 50 |

+

# Отображение заголовков и таблиц

|

| 51 |

+

st.subheader("Сравнение моделей по метрике F1-Macro")

|

| 52 |

+

|

| 53 |

+

st.subheader("Начало. До понимания, что данные хуже чем можно было представить")

|

| 54 |

+

st.table(df1)

|

| 55 |

+

|

| 56 |

+

st.subheader("После всего... Работа классических ML-моделей")

|

| 57 |

+

df2 = df2.style.applymap(color_high, subset=['F1-Macro'])

|

| 58 |

+

st.table(df2)

|

| 59 |

+

|

| 60 |

+

# Загрузка модели и весов

|

| 61 |

+

@st.cache_data()

|

| 62 |

+

def load_model():

|

| 63 |

+

hidden_size = 32

|

| 64 |

+

vocab_size = 310

|

| 65 |

+

embedding_dim = 50

|

| 66 |

+

model = LSTM_Word2Vec_Attention(hidden_size, vocab_size, embedding_dim)

|

| 67 |

+

model.load_state_dict(torch.load('models/rest/model_weights_3000cl.pt', map_location=torch.device('cpu')))

|

| 68 |

+

model.eval()

|

| 69 |

+

return model

|

| 70 |

+

|

| 71 |

+

model = load_model()

|

| 72 |

+

|

| 73 |

+

# Предобработка текста

|

| 74 |

+

def preprocess_text(text: str) -> List[str]:

|

| 75 |

+

text = text.lower()

|

| 76 |

+

text = re.sub(r'<.*?>', '', text)

|

| 77 |

+

text = re.sub(r"[…–”—]", "", text)

|

| 78 |

+

text = re.sub(r'\d+', '', text)

|

| 79 |

+

text = re.sub(r'\b[a-zA-Z]+\b', '', text)

|

| 80 |

+

text = re.sub(r'\s+', ' ', text).strip()

|

| 81 |

+

text = ''.join([c for c in text if c not in string.punctuation])

|

| 82 |

+

tokens = word_tokenize(text, language='russian')

|

| 83 |

+

tokens = [word for word in tokens if word not in stopwords.words('russian')]

|

| 84 |

+

return tokens

|

| 85 |

+

|

| 86 |

+

# Словарь

|

| 87 |

+

# Предположим, что у вас есть словарь, который вы использовали для обучения модели

|

| 88 |

+

vocab_to_int = np.load('models/rest/vocab_to_int.npy', allow_pickle=True).item()

|

| 89 |

+

|

| 90 |

+

# Функция для преобразования текста в индексы

|

| 91 |

+

def text_to_indices(text: str) -> torch.Tensor:

|

| 92 |

+

tokens = preprocess_text(text)

|

| 93 |

+

indices = [vocab_to_int.get(token, 0) for token in tokens] # 0 для неизвестных слов

|

| 94 |

+

return torch.tensor([indices[:200] + [0]*(200 - len(indices))]) # Пэддинг до 200

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

st.title("Классификация отзывов о ресторанах")

|

| 98 |

+

|

| 99 |

+

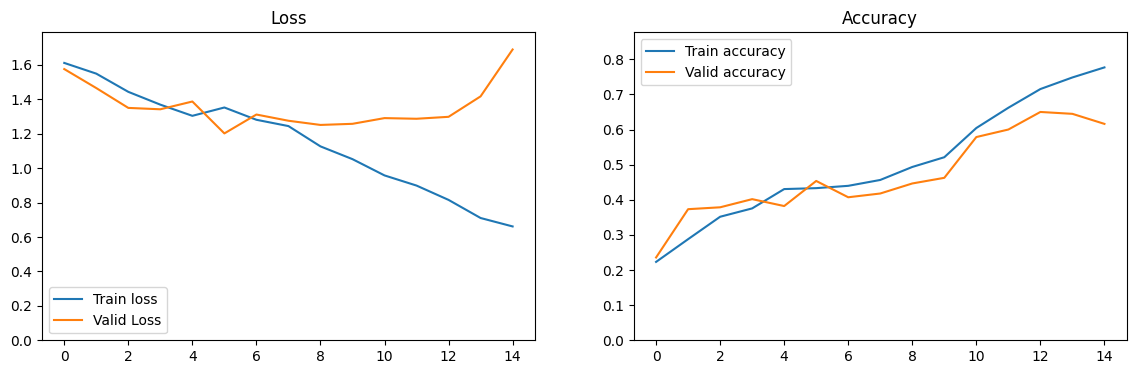

st.image('images/grafic.jpg')

|

| 100 |

+

|

| 101 |

+

review = st.text_area("Введите ваш отзыв о ресторане:")

|

| 102 |

+

|

| 103 |

+

if st.button("Предсказать"):

|

| 104 |

+

if review.strip() == "":

|

| 105 |

+

st.write("Пожалуйста, введите отзыв.")

|

| 106 |

+

else:

|

| 107 |

+

start_time = time.time()

|

| 108 |

+

input_data = text_to_indices(review)

|

| 109 |

+

with torch.no_grad():

|

| 110 |

+

output, attention_weights = model(input_data)

|

| 111 |

+

prediction = torch.argmax(output, dim=1).item()

|

| 112 |

+

end_time = time.time()

|

| 113 |

+

elapsed_time = end_time - start_time

|

| 114 |

+

st.write(f"Предсказанный класс: {prediction} (Положительный: 2, Отрицательный: 1, Нейтральный: 0)")

|

| 115 |

+

st.write(f"Время предсказания: {elapsed_time:.3f} секунд")

|

| 116 |

+

|
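The fixed-length encoding done by `text_to_indices` in this file can be illustrated without torch or NLTK: look up each token, fall back to 0 for out-of-vocabulary words, truncate to the sequence length, and right-pad with 0. The sketch below uses a hypothetical helper `encode_tokens` and a made-up two-word vocabulary; the real mapping lives in `vocab_to_int.npy`.

```python
def encode_tokens(tokens, vocab_to_int, seq_len=200, pad_id=0):
    """Map tokens to indices, truncate to seq_len, and right-pad with pad_id."""
    # Unknown tokens share index 0 with padding, as in the page code.
    indices = [vocab_to_int.get(tok, pad_id) for tok in tokens]
    indices = indices[:seq_len]
    return indices + [pad_id] * (seq_len - len(indices))


toy_vocab = {"tasty": 1, "awful": 2}  # hypothetical vocabulary for illustration
encoded = encode_tokens(["tasty", "unknown", "awful"], toy_vocab, seq_len=5)
print(encoded)  # [1, 0, 2, 0, 0]
```

Because index 0 serves as both the padding id and the unknown-word id, the model cannot distinguish an unseen word from empty space; a separate unknown index would be one way to change that, at the cost of retraining.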

pages/gpt.py

ADDED

@@ -0,0 +1,96 @@
+import streamlit as st
+from transformers import AutoTokenizer, AutoModelForCausalLM
+import torch
+
+# Load the model and tokenizer
+@st.cache_resource
+def load_model():
+    model_name = "models/gpt"
+    tokenizer = AutoTokenizer.from_pretrained(model_name)
+    model = AutoModelForCausalLM.from_pretrained(model_name)
+    return model, tokenizer
+
+def generate_text(model, tokenizer, prompt, gen_params):
+    inputs = tokenizer(prompt, return_tensors="pt")
+
+    with torch.no_grad():
+        outputs = model.generate(
+            inputs.input_ids,
+            max_length=gen_params['max_length'],
+            temperature=gen_params['temperature'],
+            top_k=gen_params['top_k'],
+            top_p=gen_params['top_p'],
+            num_return_sequences=gen_params['num_return_sequences'],
+            do_sample=True,
+            pad_token_id=tokenizer.eos_token_id
+        )
+
+    generated = []
+    for i, output in enumerate(outputs):
+        text = tokenizer.decode(output, skip_special_tokens=True)
+        generated.append(f"Generation {i+1}:\n{text}\n{'-'*50}")
+
+    return generated
+
+
+def main():
+    st.markdown(
+        "<h1 style='text-align: center;'>Text generator</h1>",
+        unsafe_allow_html=True
+    )
+    st.markdown(
+        "<h3 style='text-align: center;'>(well, almost)</h3>",
+        unsafe_allow_html=True
+    )
+    st.markdown("---")
+
+    col1, col2, col3 = st.columns([1, 2, 1])
+    with col2:
+        st.image('images/scale_1200.png', width=500)
+
+    # Load the model
+    model, tokenizer = load_model()
+
+    # Generation parameters
+    with st.sidebar:
+        st.header("Generation settings")
+        prompt = st.text_area("Enter the starting text:", height=100)
+        max_length = st.slider("Maximum length:", 50, 500, 100)
+        num_return_sequences = st.slider("Number of generations:", 1, 5, 1)
+
+        st.subheader("Sampling parameters:")
+        sampling_method = st.radio("Method:", ["Temperature", "Top-k & Top-p"])
+
+        if sampling_method == "Temperature":
+            temperature = st.slider("Temperature:", 0.1, 2.0, 1.0, 0.1)
+            top_k = None
+            top_p = None
+        else:
+            temperature = 1.0
+            top_k = st.slider("Top-k:", 1, 100, 50)
+            top_p = st.slider("Top-p:", 0.1, 1.0, 0.9, 0.05)
+
+    # Generate button
+    if st.sidebar.button("Generate text"):
+        if not prompt:
+            st.warning("Enter the starting text!")
+            return
+
+        gen_params = {
+            'max_length': max_length,
+            'temperature': temperature,
+            'top_k': top_k,
+            'top_p': top_p,
+            'num_return_sequences': num_return_sequences
+        }
+
+        with st.spinner("Having a little drink..."):
+            generated = generate_text(model, tokenizer, prompt, gen_params)
+
+        st.markdown("---")
+        st.subheader("Results:")
+        for text in generated:
+            st.text_area(label="", value=text, height=200)
+
+if __name__ == "__main__":
+    main()
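The "Top-k & Top-p" option on this page restricts which tokens may be sampled at each step. The sketch below is a simplified, dependency-free illustration of the idea on a toy probability vector: keep the `top_k` most likely tokens, renormalize, then keep the smallest prefix whose cumulative probability reaches `top_p`. The function `filter_top_k_top_p` is hypothetical; the `transformers` implementation works on logits and handles edge cases differently.

```python
def filter_top_k_top_p(probs, top_k=None, top_p=None):
    """Return {token_index: renormalized probability} after top-k, then top-p filtering."""
    # Token indices sorted from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        order = order[:top_k]
    total = sum(probs[i] for i in order)
    kept = {i: probs[i] / total for i in order}  # renormalize after top-k
    if top_p is not None:
        nucleus, cumulative = {}, 0.0
        for i in order:
            nucleus[i] = kept[i]
            cumulative += kept[i]
            # Stop once the nucleus covers at least top_p of the probability mass.
            if cumulative >= top_p:
                break
        total = sum(nucleus.values())
        kept = {i: p / total for i, p in nucleus.items()}
    return kept


toy_probs = [0.5, 0.3, 0.15, 0.05]  # made-up next-token distribution
filtered = filter_top_k_top_p(toy_probs, top_k=3, top_p=0.75)
print(filtered)
```

With these toy values only the two most likely tokens survive, so lowering `top_p` trades diversity for more predictable text.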

pages/tgchannels.py

ADDED

@@ -0,0 +1,97 @@
+import streamlit as st
+from transformers import AutoTokenizer, AutoModel
+import torch
+from torch import nn
+
+
+# Load the model and tokenizer (cached for speed)
+@st.cache_resource
+def load_model():
+    MODEL_NAME = "cointegrated/rubert-tiny2"
+    model = AutoModel.from_pretrained(MODEL_NAME, num_labels=5)
+    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
+    return model, tokenizer
+
+
+PATH = "models/model_weight_bert.pt"
+
+
+class MyTinyBERT(nn.Module):
+    def __init__(self, model):
+        super().__init__()
+        self.bert = model
+        for param in self.bert.parameters():
+            param.requires_grad = False
+        self.linear = nn.Sequential(
+            nn.Linear(312, 256), nn.Dropout(0.3), nn.ReLU(), nn.Linear(256, 5)
+        )
+
+    def forward(self, input_ids, attention_mask):
+        bert_out = self.bert(input_ids=input_ids, attention_mask=attention_mask)
+        normed_bert_out = bert_out.last_hidden_state[:, 0, :]
+        out = self.linear(normed_bert_out)
+        return out
+
+
+def classification_myBERT(text, model, tokenizer):
+    model = MyTinyBERT(model)
+    model.load_state_dict(torch.load(PATH, map_location="cpu", weights_only=True))
+    model.eval()
+    my_classes = {0: "Crypto", 1: "Fashion", 2: "Sports", 3: "Technology", 4: "Finance"}
+    t = tokenizer(text, padding=True, truncation=True, return_tensors="pt")
+    return f'I may not be ChatGPT, but I dare to guess that this text belongs to the following class:\n{my_classes[torch.argmax(model(t["input_ids"], t["attention_mask"])).item()]}'
+
+
+# Streamlit interface
+def main():
+    st.markdown(
+        "<h1 style='text-align: center;'>Topic classification of news from Telegram channels.</h1>",
+        unsafe_allow_html=True,
+    )
+    st.markdown("---")
+
+    col1, col2, col3 = st.columns([1, 8, 1])  # The center column is wider than the others
+    with col2:
+        st.markdown(
+            "<h5 style='text-align: center;'>Using a classical algorithm</h5>",
+            unsafe_allow_html=True,
+        )
+        # st.text("Using a classical algorithm")
+        st.image("./images/Struct.png", width=500)
+        st.image("./images/L_A.png", width=800)
+        st.image("./images/C_M.png", width=800)
+
+        st.markdown(
+            "<h5 style='text-align: center;'>Standard rubert_tiny2</h5>",
+            unsafe_allow_html=True,
+        )
+        # st.text("Using a classical algorithm")
+        st.image("./images/LogReg.png", width=800)
+
+        st.markdown(
+            "<h5 style='text-align: center;'>rubert_tiny2 with a trainable FC layer</h5>",
+            unsafe_allow_html=True,
+        )
+        # st.text("Using a classical algorithm")
+        st.image("./images/myTinyBERT.png", width=800)
+
+    # Load the model
+    model, tokenizer = load_model()
+
+    # Sidebar input (labels carried over from the generator page)
+    with st.sidebar:
+        st.header("Generation settings")
+        prompt = st.text_area("Enter the starting text:", height=100)
+
+    # Classify button
+    if st.sidebar.button("Generate text"):
+        if not prompt:
+            st.warning("Enter the starting text!")
+            return
+
+        st.subheader("Results:")
+        st.text(classification_myBERT(prompt, model, tokenizer))
+
+
+if __name__ == "__main__":
+    main()
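`classification_myBERT` reports only the arg-max class of the five-way head. If a confidence value were wanted alongside the label, the logits could be passed through a softmax first. The sketch below shows that step in plain Python; the logits are made up, the class names are English renderings of the labels in `my_classes`, and `top_class` is a hypothetical helper, not part of this commit.

```python
import math

CLASSES = {0: "Crypto", 1: "Fashion", 2: "Sports", 3: "Technology", 4: "Finance"}


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_class(logits, classes):
    """Return the most likely class name and its softmax probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return classes[best], probs[best]


# Hypothetical logits for one input text.
label, confidence = top_class([0.1, -1.2, 2.3, 0.4, 0.0], CLASSES)
print(label, round(confidence, 3))
```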

requirements.txt

ADDED

@@ -0,0 +1,83 @@
+altair==5.5.0
+attrs==25.1.0
+blinker==1.9.0
+cachetools==5.5.2
+certifi==2025.1.31
+charset-normalizer==3.4.1
+click==8.1.8
+contourpy==1.3.1
+cycler==0.12.1
+filelock==3.17.0
+fonttools==4.56.0
+fsspec==2025.2.0
+gitdb==4.0.12
+GitPython==3.1.44
+huggingface-hub==0.29.1
+idna==3.10
+Jinja2==3.1.5
+joblib==1.4.2
+jsonschema==4.23.0
+jsonschema-specifications==2024.10.1
+kiwisolver==1.4.8
+markdown-it-py==3.0.0
+MarkupSafe==3.0.2
+matplotlib==3.10.0
+mdurl==0.1.2
+mpmath==1.3.0
+narwhals==1.27.1
+networkx==3.4.2
+nltk==3.9.1
+numpy==2.2.3
+nvidia-cublas-cu12==12.4.5.8
+nvidia-cuda-cupti-cu12==12.4.127
+nvidia-cuda-nvrtc-cu12==12.4.127
+nvidia-cuda-runtime-cu12==12.4.127
+nvidia-cudnn-cu12==9.1.0.70
+nvidia-cufft-cu12==11.2.1.3
+nvidia-curand-cu12==10.3.5.147
+nvidia-cusolver-cu12==11.6.1.9
+nvidia-cusparse-cu12==12.3.1.170
+nvidia-cusparselt-cu12==0.6.2
+nvidia-nccl-cu12==2.21.5
+nvidia-nvjitlink-cu12==12.4.127
+nvidia-nvtx-cu12==12.4.127
+packaging==24.2
+pandas==2.2.3
+pillow==11.1.0
+pip==25.0.1
+protobuf==5.29.3
+pyarrow==19.0.1
+pydeck==0.9.1
+Pygments==2.19.1
+pyparsing==3.2.1
+python-dateutil==2.9.0.post0
+pytz==2025.1
+PyYAML==6.0.2
+referencing==0.36.2
+regex==2024.11.6
+requests==2.32.3
+rich==13.9.4
+rpds-py==0.23.0
+safetensors==0.5.2
+scikit-learn==1.6.1
+scipy==1.15.2
+seaborn==0.13.2
+setuptools==75.8.0
+six==1.17.0
+smmap==5.0.2
+streamlit==1.42.2
+sympy==1.13.1
+tenacity==9.0.0
+threadpoolctl==3.5.0
+tokenizers==0.21.0
+toml==0.10.2
+torch==2.6.0
+tornado==6.4.2
+tqdm==4.67.1
+transformers==4.49.0
+triton==3.2.0
+typing_extensions==4.12.2
+tzdata==2025.1
+urllib3==2.3.0
+watchdog==6.0.0
+wheel==0.45.1