Commit 0df5c51

Parent(s): 0ecb39b

Revert "Added Yolov5"

This reverts commit 0ecb39b61d564b6448b14452544e080965349198.

- .gitignore +0 -3

- app.py +2 -2

- lib/yolov5/README.md +497 -0

- lib/yolov5/README.zh-CN.md +9 -9

- lib/yolov5/requirements.txt +49 -0

- lib/yolov5/tutorial.ipynb +1 -1

- lib/yolov5/utils/__init__.py +1 -1

- lib/yolov5/utils/general.py +1 -1

- lib/yolov5/utils/loggers/comet/optimizer_config.json +209 -0

- lib/yolov5/val.py +0 -2

- static/example/input_file_results.json +0 -0

- static/example/input_file_results.zip +3 -0

.gitignore

CHANGED

|

@@ -9,10 +9,7 @@ user_data/*

|

|

| 9 |

|

| 10 |

.ipynb_checkpoints

|

| 11 |

.tmp*

|

| 12 |

-

*.mp4

|

| 13 |

*.jpg

|

| 14 |

-

*.json

|

| 15 |

-

*.zip

|

| 16 |

*.aris

|

| 17 |

*.log

|

| 18 |

*.DS_STORE

|

|

|

|

| 9 |

|

| 10 |

.ipynb_checkpoints

|

| 11 |

.tmp*

|

|

|

|

| 12 |

*.jpg

|

|

|

|

|

|

|

| 13 |

*.aris

|

| 14 |

*.log

|

| 15 |

*.DS_STORE

|
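The hunk above removes three ignore patterns (`*.mp4`, `*.json`, `*.zip`), which is consistent with `static/example/input_file_results.json` and `static/example/input_file_results.zip` appearing in this commit's file list. The sketch below is only an approximation: it uses Python's `fnmatch` on the file name rather than real gitignore matching, it covers only the patterns visible in the hunk, and the names `OLD_PATTERNS`, `NEW_PATTERNS` and `is_ignored` are hypothetical helpers, not part of the repository.

```python
from fnmatch import fnmatchcase

# Patterns visible on the old side of the hunk (blank lines omitted).
OLD_PATTERNS = [".ipynb_checkpoints", ".tmp*", "*.mp4", "*.jpg", "*.json",
                "*.zip", "*.aris", "*.log", "*.DS_STORE"]
# The three patterns this commit removes from .gitignore.
REMOVED = {"*.mp4", "*.json", "*.zip"}
NEW_PATTERNS = [p for p in OLD_PATTERNS if p not in REMOVED]


def is_ignored(path, patterns):
    """Approximate check: match only the file name against each glob."""
    name = path.rsplit("/", 1)[-1]
    return any(fnmatchcase(name, p) for p in patterns)


if __name__ == "__main__":
    for path in ("static/example/input_file_results.zip",
                 "static/example/input_file_results.json",
                 "frame.jpg"):
        # Print old-side and new-side status for each sample path.
        print(path, is_ignored(path, OLD_PATTERNS), is_ignored(path, NEW_PATTERNS))
```

For an authoritative answer in a real checkout, `git check-ignore -v <path>` reports which rule, if any, applies.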

app.py

CHANGED

|

@@ -121,7 +121,7 @@ def show_data():

|

|

| 121 |

}

|

| 122 |

|

| 123 |

not_done = state['index'] < state['total']

|

| 124 |

-

|

| 125 |

return {

|

| 126 |

zip_out: gr.update(value=result["path_zip"]),

|

| 127 |

tabs[i]['tab']: gr.update(),

|

|

@@ -183,7 +183,7 @@ with demo:

|

|

| 183 |

UI_components.extend([tab, metadata_out, video_out, table_out])

|

| 184 |

|

| 185 |

# Button to show example result

|

| 186 |

-

gr.Button(value="Show Example Result").click(show_example_data, None, result_handler)

|

| 187 |

|

| 188 |

# Disclaimer at the bottom of page

|

| 189 |

gr.HTML(

|

|

|

|

| 121 |

}

|

| 122 |

|

| 123 |

not_done = state['index'] < state['total']

|

| 124 |

+

|

| 125 |

return {

|

| 126 |

zip_out: gr.update(value=result["path_zip"]),

|

| 127 |

tabs[i]['tab']: gr.update(),

|

|

|

|

| 183 |

UI_components.extend([tab, metadata_out, video_out, table_out])

|

| 184 |

|

| 185 |

# Button to show example result

|

| 186 |

+

#gr.Button(value="Show Example Result").click(show_example_data, None, result_handler)

|

| 187 |

|

| 188 |

# Disclaimer at the bottom of page

|

| 189 |

gr.HTML(

|

lib/yolov5/README.md

ADDED

|

@@ -0,0 +1,497 @@

|

| 1 |

+

<div align="center">

|

| 2 |

+

<p>

|

| 3 |

+

<a align="center" href="https://ultralytics.com/yolov5" target="_blank">

|

| 4 |

+

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png"></a>

|

| 5 |

+

</p>

|

| 6 |

+

|

| 7 |

+

[English](README.md) | [简体中文](README.zh-CN.md)

|

| 8 |

+

<br>

|

| 9 |

+

|

| 10 |

+

<div>

|

| 11 |

+

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI"></a>

|

| 12 |

+

<a href="https://zenodo.org/badge/latestdoi/264818686"><img src="https://zenodo.org/badge/264818686.svg" alt="YOLOv5 Citation"></a>

|

| 13 |

+

<a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

|

| 14 |

+

<br>

|

| 15 |

+

<a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a>

|

| 16 |

+

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

|

| 17 |

+

<a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

|

| 18 |

+

</div>

|

| 19 |

+

<br>

|

| 20 |

+

|

| 21 |

+

YOLOv5 🚀 is the world's most loved vision AI, representing <a href="https://ultralytics.com">Ultralytics</a> open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.

|

| 22 |

+

|

| 23 |

+

We hope that the resources here will help you get the most out of YOLOv5. Please browse the YOLOv5 <a href="https://docs.ultralytics.com/yolov5">Docs</a> for details, raise an issue on <a href="https://github.com/ultralytics/yolov5/issues/new/choose">GitHub</a> for support, and join our <a href="https://discord.gg/2wNGbc6g9X">Discord</a> community for questions and discussions!

|

| 24 |

+

|

| 25 |

+

To request an Enterprise License please complete the form at [Ultralytics Licensing](https://ultralytics.com/license).

|

| 26 |

+

|

| 27 |

+

<div align="center">

|

| 28 |

+

<a href="https://github.com/ultralytics" style="text-decoration:none;">

|

| 29 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="" /></a>

|

| 30 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 31 |

+

<a href="https://www.linkedin.com/company/ultralytics/" style="text-decoration:none;">

|

| 32 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="" /></a>

|

| 33 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 34 |

+

<a href="https://twitter.com/ultralytics" style="text-decoration:none;">

|

| 35 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="" /></a>

|

| 36 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 37 |

+

<a href="https://youtube.com/ultralytics" style="text-decoration:none;">

|

| 38 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="" /></a>

|

| 39 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 40 |

+

<a href="https://www.tiktok.com/@ultralytics" style="text-decoration:none;">

|

| 41 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="2%" alt="" /></a>

|

| 42 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 43 |

+

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 44 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

| 45 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 46 |

+

<a href="https://discord.gg/2wNGbc6g9X" style="text-decoration:none;">

|

| 47 |

+

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="2%" alt="" /></a>

|

| 48 |

+

</div>

|

| 49 |

+

|

| 50 |

+

</div>

|

| 51 |

+

<br>

|

| 52 |

+

|

| 53 |

+

## <div align="center">YOLOv8 🚀 NEW</div>

|

| 54 |

+

|

| 55 |

+

We are thrilled to announce the launch of Ultralytics YOLOv8 🚀, our NEW cutting-edge, state-of-the-art (SOTA) model

|

| 56 |

+

released at **[https://github.com/ultralytics/ultralytics](https://github.com/ultralytics/ultralytics)**.

|

| 57 |

+

YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of

|

| 58 |

+

object detection, image segmentation and image classification tasks.

|

| 59 |

+

|

| 60 |

+

See the [YOLOv8 Docs](https://docs.ultralytics.com) for details and get started with:

|

| 61 |

+

|

| 62 |

+

[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics)

|

| 63 |

+

|

| 64 |

+

```bash

|

| 65 |

+

pip install ultralytics

|

| 66 |

+

```

|

| 67 |

+

|

| 68 |

+

<div align="center">

|

| 69 |

+

<a href="https://ultralytics.com/yolov8" target="_blank">

|

| 70 |

+

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/yolo-comparison-plots.png"></a>

|

| 71 |

+

</div>

|

| 72 |

+

|

| 73 |

+

## <div align="center">Documentation</div>

|

| 74 |

+

|

| 75 |

+

See the [YOLOv5 Docs](https://docs.ultralytics.com/yolov5) for full documentation on training, testing and deployment. See below for quickstart examples.

|

| 76 |

+

|

| 77 |

+

<details open>

|

| 78 |

+

<summary>Install</summary>

|

| 79 |

+

|

| 80 |

+

Clone repo and install [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a

|

| 81 |

+

[**Python>=3.7.0**](https://www.python.org/) environment, including

|

| 82 |

+

[**PyTorch>=1.7**](https://pytorch.org/get-started/locally/).

|

| 83 |

+

|

| 84 |

+

```bash

|

| 85 |

+

git clone https://github.com/ultralytics/yolov5 # clone

|

| 86 |

+

cd yolov5

|

| 87 |

+

pip install -r requirements.txt # install

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

</details>

|

| 91 |

+

|

| 92 |

+

<details>

|

| 93 |

+

<summary>Inference</summary>

|

| 94 |

+

|

| 95 |

+

YOLOv5 [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading) inference. [Models](https://github.com/ultralytics/yolov5/tree/master/models) download automatically from the latest

|

| 96 |

+

YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).

|

| 97 |

+

|

| 98 |

+

```python

|

| 99 |

+

import torch

|

| 100 |

+

|

| 101 |

+

# Model

|

| 102 |

+

model = torch.hub.load("ultralytics/yolov5", "yolov5s") # or yolov5n - yolov5x6, custom

|

| 103 |

+

|

| 104 |

+

# Images

|

| 105 |

+

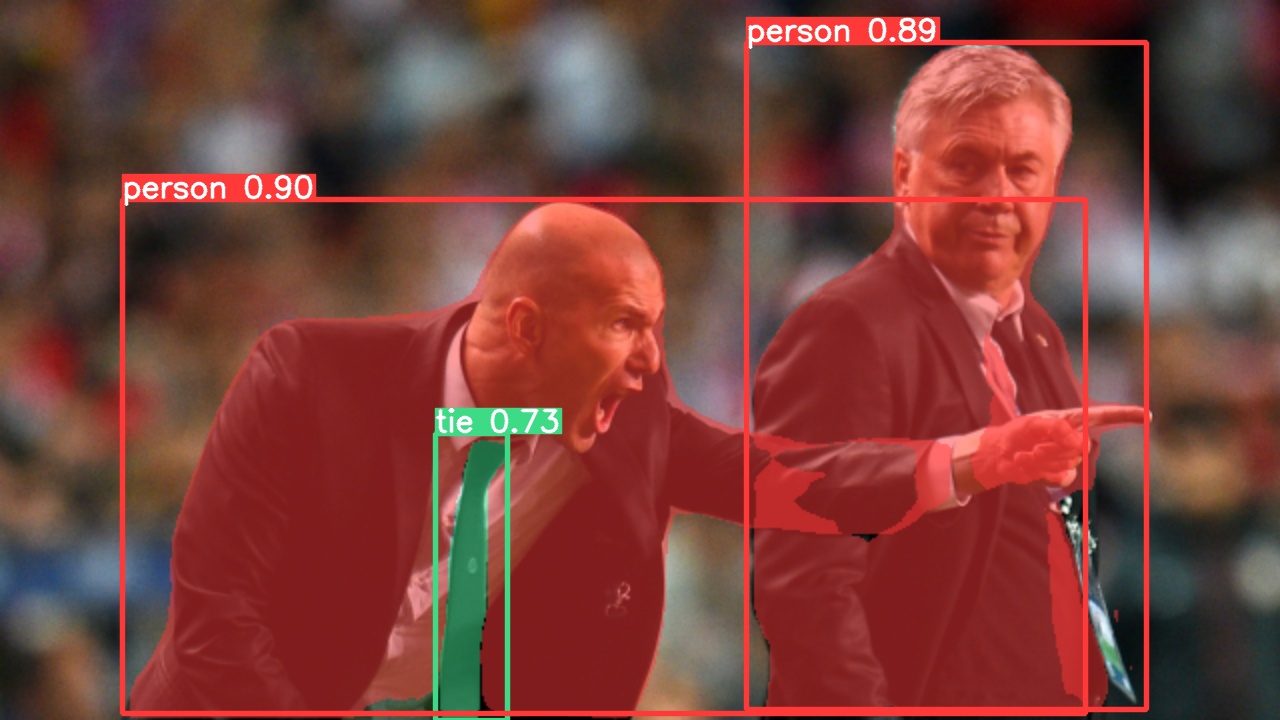

img = "https://ultralytics.com/images/zidane.jpg" # or file, Path, PIL, OpenCV, numpy, list

|

| 106 |

+

|

| 107 |

+

# Inference

|

| 108 |

+

results = model(img)

|

| 109 |

+

|

| 110 |

+

# Results

|

| 111 |

+

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

</details>

|

| 115 |

+

|

| 116 |

+

<details>

|

| 117 |

+

<summary>Inference with detect.py</summary>

|

| 118 |

+

|

| 119 |

+

`detect.py` runs inference on a variety of sources, downloading [models](https://github.com/ultralytics/yolov5/tree/master/models) automatically from

|

| 120 |

+

the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

|

| 121 |

+

|

| 122 |

+

```bash

|

| 123 |

+

python detect.py --weights yolov5s.pt --source 0 # webcam

|

| 124 |

+

img.jpg # image

|

| 125 |

+

vid.mp4 # video

|

| 126 |

+

screen # screenshot

|

| 127 |

+

path/ # directory

|

| 128 |

+

list.txt # list of images

|

| 129 |

+

list.streams # list of streams

|

| 130 |

+

'path/*.jpg' # glob

|

| 131 |

+

'https://youtu.be/Zgi9g1ksQHc' # YouTube

|

| 132 |

+

'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream

|

| 133 |

+

```

|

| 134 |

+

|

| 135 |

+

</details>

|

| 136 |

+

|

| 137 |

+

<details>

|

| 138 |

+

<summary>Training</summary>

|

| 139 |

+

|

| 140 |

+

The commands below reproduce YOLOv5 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh)

|

| 141 |

+

results. [Models](https://github.com/ultralytics/yolov5/tree/master/models)

|

| 142 |

+

and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest

|

| 143 |

+

YOLOv5 [release](https://github.com/ultralytics/yolov5/releases). Training times for YOLOv5n/s/m/l/x are

|

| 144 |

+

1/2/4/6/8 days on a V100 GPU ([Multi-GPU](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training) times faster). Use the

|

| 145 |

+

largest `--batch-size` possible, or pass `--batch-size -1` for

|

| 146 |

+

YOLOv5 [AutoBatch](https://github.com/ultralytics/yolov5/pull/5092). Batch sizes shown for V100-16GB.

|

| 147 |

+

|

| 148 |

+

```bash

|

| 149 |

+

python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128

|

| 150 |

+

yolov5s 64

|

| 151 |

+

yolov5m 40

|

| 152 |

+

yolov5l 24

|

| 153 |

+

yolov5x 16

|

| 154 |

+

```

|

| 155 |

+

|

| 156 |

+

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png">

|

| 157 |

+

|

| 158 |

+

</details>

|

| 159 |

+

|

| 160 |

+

<details open>

|

| 161 |

+

<summary>Tutorials</summary>

|

| 162 |

+

|

| 163 |

+

- [Train Custom Data](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data) 🚀 RECOMMENDED

|

| 164 |

+

- [Tips for Best Training Results](https://docs.ultralytics.com/yolov5/tutorials/tips_for_best_training_results) ☘️

|

| 165 |

+

- [Multi-GPU Training](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training)

|

| 166 |

+

- [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading) 🌟 NEW

|

| 167 |

+

- [TFLite, ONNX, CoreML, TensorRT Export](https://docs.ultralytics.com/yolov5/tutorials/model_export) 🚀

|

| 168 |

+

- [NVIDIA Jetson platform Deployment](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano) 🌟 NEW

|

| 169 |

+

- [Test-Time Augmentation (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation)

|

| 170 |

+

- [Model Ensembling](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling)

|

| 171 |

+

- [Model Pruning/Sparsity](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity)

|

| 172 |

+

- [Hyperparameter Evolution](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution)

|

| 173 |

+

- [Transfer Learning with Frozen Layers](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers)

|

| 174 |

+

- [Architecture Summary](https://docs.ultralytics.com/yolov5/tutorials/architecture_description) 🌟 NEW

|

| 175 |

+

- [Roboflow for Datasets, Labeling, and Active Learning](https://docs.ultralytics.com/yolov5/tutorials/roboflow_datasets_integration)

|

| 176 |

+

- [ClearML Logging](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration) 🌟 NEW

|

| 177 |

+

- [YOLOv5 with Neural Magic's Deepsparse](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization) 🌟 NEW

|

| 178 |

+

- [Comet Logging](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration) 🌟 NEW

|

| 179 |

+

|

| 180 |

+

</details>

|

| 181 |

+

|

| 182 |

+

## <div align="center">Integrations</div>

|

| 183 |

+

|

| 184 |

+

<br>

|

| 185 |

+

<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">

|

| 186 |

+

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png"></a>

|

| 187 |

+

<br>

|

| 188 |

+

<br>

|

| 189 |

+

|

| 190 |

+

<div align="center">

|

| 191 |

+

<a href="https://roboflow.com/?ref=ultralytics">

|

| 192 |

+

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-roboflow.png" width="10%" /></a>

|

| 193 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

|

| 194 |

+

<a href="https://cutt.ly/yolov5-readme-clearml">

|

| 195 |

+

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-clearml.png" width="10%" /></a>

|

| 196 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

|

| 197 |

+

<a href="https://bit.ly/yolov5-readme-comet2">

|

| 198 |

+

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-comet.png" width="10%" /></a>

|

| 199 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

|

| 200 |

+

<a href="https://bit.ly/yolov5-neuralmagic">

|

| 201 |

+

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" /></a>

|

| 202 |

+

</div>

|

| 203 |

+

|

| 204 |

+

| Roboflow | ClearML ⭐ NEW | Comet ⭐ NEW | Neural Magic ⭐ NEW |

|

| 205 |

+

| :--------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------: |

|

| 206 |

+

| Label and export your custom datasets directly to YOLOv5 for training with [Roboflow](https://roboflow.com/?ref=ultralytics) | Automatically track, visualize and even remotely train YOLOv5 using [ClearML](https://cutt.ly/yolov5-readme-clearml) (open-source!) | Free forever, [Comet](https://bit.ly/yolov5-readme-comet2) lets you save YOLOv5 models, resume training, and interactively visualise and debug predictions | Run YOLOv5 inference up to 6x faster with [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic) |

|

| 207 |

+

|

| 208 |

+

## <div align="center">Ultralytics HUB</div>

|

| 209 |

+

|

| 210 |

+

Experience seamless AI with [Ultralytics HUB](https://bit.ly/ultralytics_hub) ⭐, the all-in-one solution for data visualization, YOLOv5 and YOLOv8 🚀 model training and deployment, without any coding. Transform images into actionable insights and bring your AI visions to life with ease using our cutting-edge platform and user-friendly [Ultralytics App](https://ultralytics.com/app_install). Start your journey for **Free** now!

|

| 211 |

+

|

| 212 |

+

<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">

|

| 213 |

+

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

|

| 214 |

+

|

| 215 |

+

## <div align="center">Why YOLOv5</div>

|

| 216 |

+

|

| 217 |

+

YOLOv5 has been designed to be super easy to get started and simple to learn. We prioritize real-world results.

|

| 218 |

+

|

| 219 |

+

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040763-93c22a27-347c-4e3c-847a-8094621d3f4e.png"></p>

|

| 220 |

+

<details>

|

| 221 |

+

<summary>YOLOv5-P5 640 Figure</summary>

|

| 222 |

+

|

| 223 |

+

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040757-ce0934a3-06a6-43dc-a979-2edbbd69ea0e.png"></p>

|

| 224 |

+

</details>

|

| 225 |

+

<details>

|

| 226 |

+

<summary>Figure Notes</summary>

|

| 227 |

+

|

| 228 |

+

- **COCO AP val** denotes mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.

|

| 229 |

+

- **GPU Speed** measures average inference time per image on [COCO val2017](http://cocodataset.org) dataset using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.

|

| 230 |

+

- **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.

|

| 231 |

+

- **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

|

| 232 |

+

|

| 233 |

+

</details>

|

| 234 |

+

|

| 235 |

+

### Pretrained Checkpoints

|

| 236 |

+

|

| 237 |

+

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | mAP<sup>val<br>50 | Speed<br><sup>CPU b1<br>(ms) | Speed<br><sup>V100 b1<br>(ms) | Speed<br><sup>V100 b32<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

| 238 |

+

| ----------------------------------------------------------------------------------------------- | --------------------- | -------------------- | ----------------- | ---------------------------- | ----------------------------- | ------------------------------ | ------------------ | ---------------------- |

|

| 239 |

+

| [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n.pt) | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** |

|

| 240 |

+

| [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt) | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |

|

| 241 |

+

| [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m.pt) | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |

|

| 242 |

+

| [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l.pt) | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |

|

| 243 |

+

| [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x.pt) | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |

|

| 244 |

+

| | | | | | | | | |

|

| 245 |

+

| [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n6.pt) | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |

|

| 246 |

+

| [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s6.pt) | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |

|

| 247 |

+

| [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m6.pt) | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |

|

| 248 |

+

| [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l6.pt) | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |

|

| 249 |

+

| [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x6.pt)<br>+ [TTA] | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- |

|

| 250 |

+

|

| 251 |

+

<details>

|

| 252 |

+

<summary>Table Notes</summary>

|

| 253 |

+

|

| 254 |

+

- All checkpoints are trained to 300 epochs with default settings. Nano and Small models use [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) hyps, all others use [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml).

|

| 255 |

+

- **mAP<sup>val</sup>** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.<br>Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

|

| 256 |

+

- **Speed** averaged over COCO val images using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) instance. NMS times (~1 ms/img) not included.<br>Reproduce by `python val.py --data coco.yaml --img 640 --task speed --batch 1`

|

| 257 |

+

- **TTA** [Test Time Augmentation](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation) includes reflection and scale augmentations.<br>Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

|

| 258 |

+

|

| 259 |

+

</details>

|

| 260 |

+

|

| 261 |

+

## <div align="center">Segmentation</div>

|

| 262 |

+

|

| 263 |

+

Our new YOLOv5 [release v7.0](https://github.com/ultralytics/yolov5/releases/v7.0) instance segmentation models are the fastest and most accurate in the world, beating all current [SOTA benchmarks](https://paperswithcode.com/sota/real-time-instance-segmentation-on-mscoco). We've made them super simple to train, validate and deploy. See full details in our [Release Notes](https://github.com/ultralytics/yolov5/releases/v7.0) and visit our [YOLOv5 Segmentation Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/segment/tutorial.ipynb) for quickstart tutorials.

|

| 264 |

+

|

| 265 |

+

<details>

|

| 266 |

+

<summary>Segmentation Checkpoints</summary>

|

| 267 |

+

|

| 268 |

+

<div align="center">

|

| 269 |

+

<a align="center" href="https://ultralytics.com/yolov5" target="_blank">

|

| 270 |

+

<img width="800" src="https://user-images.githubusercontent.com/61612323/204180385-84f3aca9-a5e9-43d8-a617-dda7ca12e54a.png"></a>

|

| 271 |

+

</div>

|

| 272 |

+

|

| 273 |

+

We trained YOLOv5 segmentation models on COCO for 300 epochs at image size 640 using A100 GPUs. We exported all models to ONNX FP32 for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on Google [Colab Pro](https://colab.research.google.com/signup) notebooks for easy reproducibility.

|

| 274 |

+

|

| 275 |

+

| Model | size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Train time<br><sup>300 epochs<br>A100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TRT A100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@640 (B) |

|

| 276 |

+

| ------------------------------------------------------------------------------------------ | --------------------- | -------------------- | --------------------- | --------------------------------------------- | ------------------------------ | ------------------------------ | ------------------ | ---------------------- |

|

| 277 |

+

| [YOLOv5n-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-seg.pt) | 640 | 27.6 | 23.4 | 80:17 | **62.7** | **1.2** | **2.0** | **7.1** |

|

| 278 |

+

| [YOLOv5s-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-seg.pt) | 640 | 37.6 | 31.7 | 88:16 | 173.3 | 1.4 | 7.6 | 26.4 |

|

| 279 |

+

| [YOLOv5m-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-seg.pt) | 640 | 45.0 | 37.1 | 108:36 | 427.0 | 2.2 | 22.0 | 70.8 |

|

| 280 |

+

| [YOLOv5l-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-seg.pt) | 640 | 49.0 | 39.9 | 66:43 (2x) | 857.4 | 2.9 | 47.9 | 147.7 |

|

| 281 |

+

| [YOLOv5x-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-seg.pt) | 640 | **50.7** | **41.4** | 62:56 (3x) | 1579.2 | 4.5 | 88.8 | 265.7 |

|

| 282 |

+

|

| 283 |

+

- All checkpoints are trained to 300 epochs with SGD optimizer with `lr0=0.01` and `weight_decay=5e-5` at image size 640 and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5_v70_official

|

| 284 |

+

- **Accuracy** values are for single-model single-scale on COCO dataset.<br>Reproduce by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt`

|

| 285 |

+

- **Speed** averaged over 100 inference images using a [Colab Pro](https://colab.research.google.com/signup) A100 High-RAM instance. Values indicate inference speed only (NMS adds about 1ms per image). <br>Reproduce by `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1`

|

| 286 |

+

- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce by `python export.py --weights yolov5s-seg.pt --include engine --device 0 --half`

|

| 287 |

+

|

| 288 |

+

</details>

|

| 289 |

+

|

| 290 |

+

<details>

|

| 291 |

+

<summary>Segmentation Usage Examples <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/segment/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

|

| 292 |

+

|

| 293 |

+

### Train

|

| 294 |

+

|

| 295 |

+

YOLOv5 segmentation training supports auto-download COCO128-seg segmentation dataset with `--data coco128-seg.yaml` argument and manual download of COCO-segments dataset with `bash data/scripts/get_coco.sh --train --val --segments` and then `python train.py --data coco.yaml`.

|

| 296 |

+

|

| 297 |

+

```bash

|

| 298 |

+

# Single-GPU

|

| 299 |

+

python segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640

|

| 300 |

+

|

| 301 |

+

# Multi-GPU DDP

|

| 302 |

+

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640 --device 0,1,2,3

|

| 303 |

+

```

|

| 304 |

+

|

| 305 |

+

### Val

|

| 306 |

+

|

| 307 |

+

Validate YOLOv5s-seg mask mAP on COCO dataset:

|

| 308 |

+

|

| 309 |

+

```bash

|

| 310 |

+

bash data/scripts/get_coco.sh --val --segments # download COCO val segments split (780MB, 5000 images)

|

| 311 |

+

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640 # validate

|

| 312 |

+

```

|

| 313 |

+

|

| 314 |

+

### Predict

|

| 315 |

+

|

| 316 |

+

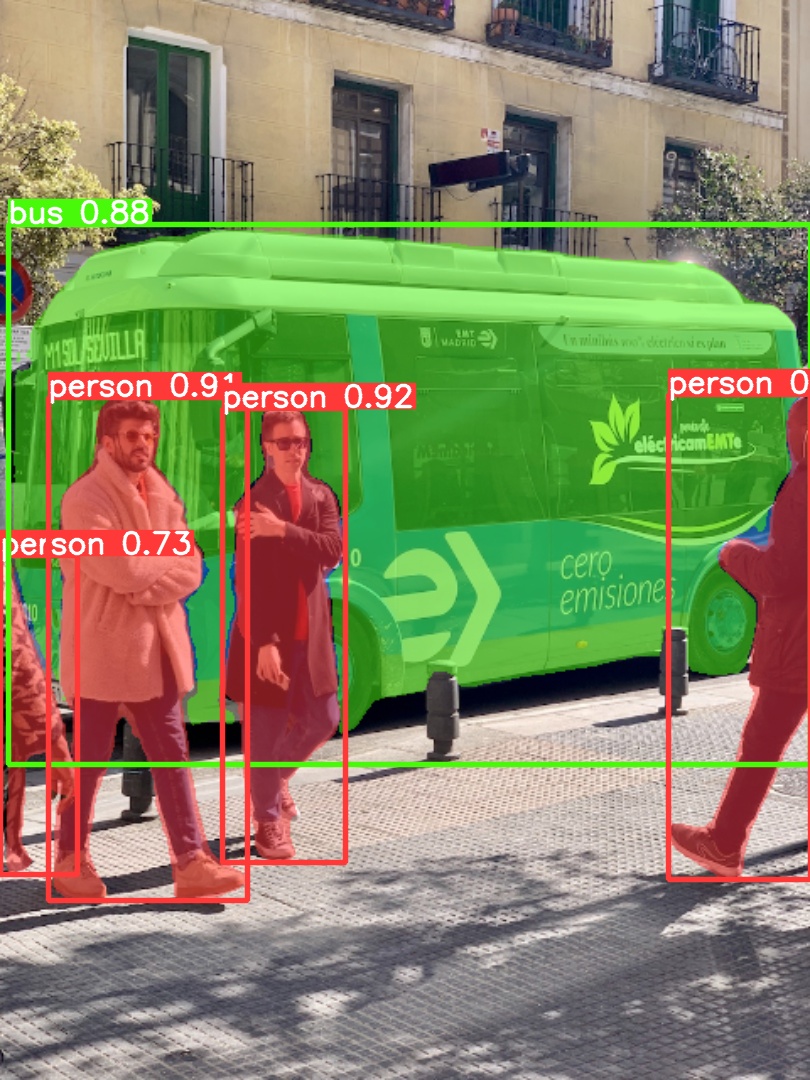

Use pretrained YOLOv5m-seg.pt to predict bus.jpg:

|

| 317 |

+

|

| 318 |

+

```bash

|

| 319 |

+

python segment/predict.py --weights yolov5m-seg.pt --source data/images/bus.jpg

|

| 320 |

+

```

|

| 321 |

+

|

| 322 |

+

```python

|

| 323 |

+

model = torch.hub.load(

|

| 324 |

+

"ultralytics/yolov5", "custom", "yolov5m-seg.pt"

|

| 325 |

+

) # load from PyTorch Hub (WARNING: inference not yet supported)

|

| 326 |

+

```

|

| 327 |

+

|

| 328 |

+

|  |  |

|

| 329 |

+

| ---------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------- |

|

| 330 |

+

|

| 331 |

+

### Export

|

| 332 |

+

|

| 333 |

+

Export YOLOv5s-seg model to ONNX and TensorRT:

|

| 334 |

+

|

| 335 |

+

```bash

|

| 336 |

+

python export.py --weights yolov5s-seg.pt --include onnx engine --img 640 --device 0

|

| 337 |

+

```

|

| 338 |

+

|

| 339 |

+

</details>

|

| 340 |

+

|

| 341 |

+

## <div align="center">Classification</div>

|

| 342 |

+

|

| 343 |

+

YOLOv5 [release v6.2](https://github.com/ultralytics/yolov5/releases) brings support for classification model training, validation and deployment! See full details in our [Release Notes](https://github.com/ultralytics/yolov5/releases/v6.2) and visit our [YOLOv5 Classification Colab Notebook](https://github.com/ultralytics/yolov5/blob/master/classify/tutorial.ipynb) for quickstart tutorials.

|

| 344 |

+

|

| 345 |

+

<details>

|

| 346 |

+

<summary>Classification Checkpoints</summary>

|

| 347 |

+

|

| 348 |

+

<br>

|

| 349 |

+

|

| 350 |

+

We trained YOLOv5-cls classification models on ImageNet for 90 epochs using a 4xA100 instance, and we trained ResNet and EfficientNet models alongside them with the same default training settings for comparison. We exported all models to ONNX FP32 for CPU speed tests and to TensorRT FP16 for GPU speed tests. We ran all speed tests on Google [Colab Pro](https://colab.research.google.com/signup) for easy reproducibility.

|

| 351 |

+

|

| 352 |

+

| Model | size<br><sup>(pixels) | acc<br><sup>top1 | acc<br><sup>top5 | Training<br><sup>90 epochs<br>4xA100 (hours) | Speed<br><sup>ONNX CPU<br>(ms) | Speed<br><sup>TensorRT V100<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>@224 (B) |

|

| 353 |

+

| -------------------------------------------------------------------------------------------------- | --------------------- | ---------------- | ---------------- | -------------------------------------------- | ------------------------------ | ----------------------------------- | ------------------ | ---------------------- |

|

| 354 |

+

| [YOLOv5n-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-cls.pt) | 224 | 64.6 | 85.4 | 7:59 | **3.3** | **0.5** | **2.5** | **0.5** |

|

| 355 |

+

| [YOLOv5s-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt) | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |

|

| 356 |

+

| [YOLOv5m-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-cls.pt) | 224 | 75.9 | 92.9 | 10:06 | 15.5 | 0.9 | 12.9 | 3.9 |

|

| 357 |

+

| [YOLOv5l-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-cls.pt) | 224 | 78.0 | 94.0 | 11:56 | 26.9 | 1.4 | 26.5 | 8.5 |

|

| 358 |

+

| [YOLOv5x-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-cls.pt) | 224 | **79.0** | **94.4** | 15:04 | 54.3 | 1.8 | 48.1 | 15.9 |

|

| 359 |

+

| | | | | | | | | |

|

| 360 |

+

| [ResNet18](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet18.pt) | 224 | 70.3 | 89.5 | **6:47** | 11.2 | 0.5 | 11.7 | 3.7 |

|

| 361 |

+

| [ResNet34](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet34.pt) | 224 | 73.9 | 91.8 | 8:33 | 20.6 | 0.9 | 21.8 | 7.4 |

|

| 362 |

+

| [ResNet50](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet50.pt) | 224 | 76.8 | 93.4 | 11:10 | 23.4 | 1.0 | 25.6 | 8.5 |

|

| 363 |

+

| [ResNet101](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet101.pt) | 224 | 78.5 | 94.3 | 17:10 | 42.1 | 1.9 | 44.5 | 15.9 |

|

| 364 |

+

| | | | | | | | | |

|

| 365 |

+

| [EfficientNet_b0](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b0.pt) | 224 | 75.1 | 92.4 | 13:03 | 12.5 | 1.3 | 5.3 | 1.0 |

|

| 366 |

+

| [EfficientNet_b1](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b1.pt) | 224 | 76.4 | 93.2 | 17:04 | 14.9 | 1.6 | 7.8 | 1.5 |

|

| 367 |

+

| [EfficientNet_b2](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b2.pt) | 224 | 76.6 | 93.4 | 17:10 | 15.9 | 1.6 | 9.1 | 1.7 |

|

| 368 |

+

| [EfficientNet_b3](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b3.pt) | 224 | 77.7 | 94.0 | 19:19 | 18.9 | 1.9 | 12.2 | 2.4 |

|

| 369 |

+

|

| 370 |

+

<details>

|

| 371 |

+

<summary>Table Notes (click to expand)</summary>

|

| 372 |

+

|

| 373 |

+

- All checkpoints are trained to 90 epochs with SGD optimizer with `lr0=0.001` and `weight_decay=5e-5` at image size 224 and all default settings.<br>Runs logged to https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2

|

| 374 |

+

- **Accuracy** values are for single-model single-scale on [ImageNet-1k](https://www.image-net.org/index.php) dataset.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224`

|

| 375 |

+

- **Speed** averaged over 100 inference images using a Google [Colab Pro](https://colab.research.google.com/signup) V100 High-RAM instance.<br>Reproduce by `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`

|

| 376 |

+

- **Export** to ONNX at FP32 and TensorRT at FP16 done with `export.py`. <br>Reproduce by `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`

|

| 377 |

+

|

| 378 |

+

</details>
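The Training column of the table above lists cells such as `7:59` under a header given in hours. A small sketch for turning those cells into decimal hours, assuming they are in `H:MM` form (this reading is an assumption, and `to_hours` is a hypothetical helper, not a YOLOv5 function):

```python
def to_hours(cell):
    """Convert an 'H:MM' table cell such as '7:59' into decimal hours."""
    hours, minutes = cell.strip().split(":")
    return int(hours) + int(minutes) / 60


# Values copied from the Training column of the table above.
training = {"YOLOv5n-cls": "7:59", "YOLOv5x-cls": "15:04", "ResNet18": "6:47"}
for name, cell in training.items():
    print(f"{name}: {to_hours(cell):.2f} h")
```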

|

| 379 |

+

</details>
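The `top1` and `top5` accuracy columns above count a prediction as correct when the true class is among the k highest-scoring classes. A minimal pure-Python sketch of that metric on toy data follows; `topk_accuracy` is an illustrative helper and not the code that `classify/val.py` uses.

```python
def topk_accuracy(scores, labels, k=1):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(labels)


# Toy scores for 3 samples over 3 classes (made-up numbers for illustration).
scores = [
    [0.1, 0.7, 0.2],  # true class 1 is ranked first
    [0.5, 0.3, 0.2],  # true class 1 is ranked second
    [0.2, 0.3, 0.5],  # true class 0 is ranked last
]
labels = [1, 1, 0]
top1 = topk_accuracy(scores, labels, k=1)
top2 = topk_accuracy(scores, labels, k=2)
print(f"top-1: {top1:.1f}%  top-2: {top2:.1f}%")
```

With only three classes the example uses k=2 instead of k=5; the definition is the same.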

|

| 380 |

+

|

| 381 |

+

<details>

|

| 382 |

+

<summary>Classification Usage Examples <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/classify/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

|

| 383 |

+

|

| 384 |

+

### Train

|

| 385 |

+

|

| 386 |

+

YOLOv5 classification training supports auto-download of MNIST, Fashion-MNIST, CIFAR10, CIFAR100, Imagenette, Imagewoof, and ImageNet datasets with the `--data` argument. To start training on MNIST for example use `--data mnist`.

|

| 387 |

+

|

| 388 |

+

```bash

|

| 389 |

+

# Single-GPU

|

| 390 |

+

python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

|

| 391 |

+

|

| 392 |

+

# Multi-GPU DDP

|

| 393 |

+

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

|

| 394 |

+

```

|

| 395 |

+

|

| 396 |

+

### Val

|

| 397 |

+

|

| 398 |

+

Validate YOLOv5m-cls accuracy on ImageNet-1k dataset:

|

| 399 |

+

|

| 400 |

+

```bash

|

| 401 |

+

bash data/scripts/get_imagenet.sh --val # download ImageNet val split (6.3G, 50000 images)

|

| 402 |

+

python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224 # validate

|

| 403 |

+

```

|

| 404 |

+

|

| 405 |

+

### Predict

|

| 406 |

+

|

| 407 |

+

Use pretrained YOLOv5s-cls.pt to predict bus.jpg:

|

| 408 |

+

|

| 409 |

+

```bash

|

| 410 |

+

python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg

|

| 411 |

+

```

|

| 412 |

+

|

| 413 |

+

```python

|

| 414 |

+

model = torch.hub.load(

|

| 415 |

+

"ultralytics/yolov5", "custom", "yolov5s-cls.pt"

|

| 416 |

+

) # load from PyTorch Hub

|

| 417 |

+

```

|

| 418 |

+

|

| 419 |

+

### Export

|

| 420 |

+

|

| 421 |

+

Export a group of trained YOLOv5s-cls, ResNet and EfficientNet models to ONNX and TensorRT:

|

| 422 |

+

|

| 423 |

+

```bash

|

| 424 |

+

python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224

|

| 425 |

+

```

|

| 426 |

+

|

| 427 |

+

</details>

|

| 428 |

+

|

| 429 |

+

## <div align="center">Environments</div>

|

| 430 |

+

|

| 431 |

+

Get started in seconds with our verified environments. Click each icon below for details.

|

| 432 |

+

|

| 433 |

+

<div align="center">

|

| 434 |

+

<a href="https://bit.ly/yolov5-paperspace-notebook">

|

| 435 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gradient.png" width="10%" /></a>

|

| 436 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

| 437 |

+

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb">

|

| 438 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-colab-small.png" width="10%" /></a>

|

| 439 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

| 440 |

+

<a href="https://www.kaggle.com/ultralytics/yolov5">

|

| 441 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-kaggle-small.png" width="10%" /></a>

|

| 442 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

| 443 |

+

<a href="https://hub.docker.com/r/ultralytics/yolov5">

|

| 444 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-docker-small.png" width="10%" /></a>

|

| 445 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

| 446 |

+

<a href="https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial/">

|

| 447 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-aws-small.png" width="10%" /></a>

|

| 448 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

|

| 449 |

+

<a href="https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial/">

|

| 450 |

+

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gcp-small.png" width="10%" /></a>

|

| 451 |

+

</div>

|

| 452 |

+

|

| 453 |

+

## <div align="center">Contribute</div>

|

| 454 |

+

|

| 455 |

+

We love your input! We want to make contributing to YOLOv5 as easy and transparent as possible. Please see our [Contributing Guide](https://docs.ultralytics.com/help/contributing/) to get started, and fill out the [YOLOv5 Survey](https://ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey) to send us feedback on your experiences. Thank you to all our contributors!

|

| 456 |

+

|

| 457 |

+

<!-- SVG image from https://opencollective.com/ultralytics/contributors.svg?width=990 -->

|

| 458 |

+

|

| 459 |

+

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">

|

| 460 |

+

<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>

|

| 461 |

+

|

| 462 |

+

## <div align="center">License</div>

|

| 463 |

+

|

| 464 |

+

YOLOv5 is available under two different licenses:

|

| 465 |

+

|

| 466 |

+

- **AGPL-3.0 License**: See [LICENSE](https://github.com/ultralytics/yolov5/blob/master/LICENSE) file for details.

|

| 467 |

+

- **Enterprise License**: Provides greater flexibility for commercial product development without the open-source requirements of AGPL-3.0. Typical use cases are embedding Ultralytics software and AI models in commercial products and applications. Request an Enterprise License at [Ultralytics Licensing](https://ultralytics.com/license).

|

| 468 |

+

|

| 469 |

+

## <div align="center">Contact</div>

|

| 470 |

+

|

| 471 |

+

For YOLOv5 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues), and join our [Discord](https://discord.gg/2wNGbc6g9X) community for questions and discussions!

|

| 472 |

+

|

| 473 |

+

<br>

|

| 474 |

+

<div align="center">

|

| 475 |

+

<a href="https://github.com/ultralytics" style="text-decoration:none;">

|

| 476 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="3%" alt="" /></a>

|

| 477 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 478 |

+

<a href="https://www.linkedin.com/company/ultralytics/" style="text-decoration:none;">

|

| 479 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="3%" alt="" /></a>

|

| 480 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 481 |

+

<a href="https://twitter.com/ultralytics" style="text-decoration:none;">

|

| 482 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="3%" alt="" /></a>

|

| 483 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 484 |

+

<a href="https://youtube.com/ultralytics" style="text-decoration:none;">

|

| 485 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="3%" alt="" /></a>

|

| 486 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 487 |

+

<a href="https://www.tiktok.com/@ultralytics" style="text-decoration:none;">

|

| 488 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="3%" alt="" /></a>

|

| 489 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 490 |

+

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 491 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

| 492 |

+

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 493 |

+

<a href="https://discord.gg/2wNGbc6g9X" style="text-decoration:none;">

|

| 494 |

+

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="3%" alt="" /></a>

|

| 495 |

+

</div>

|

| 496 |

+

|

| 497 |

+

[tta]: https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation

|

lib/yolov5/README.zh-CN.md

CHANGED

|

@@ -19,7 +19,7 @@

|

|

| 19 |

|

| 20 |

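YOLOv5 🚀 is the world's most loved vision AI, representing<a href="https://ultralytics.com"> Ultralytics </a>open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.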

|

| 21 |

|

| 22 |

-

We hope the resources here help you get the most out of YOLOv5. Please browse the YOLOv5 <a href="https://docs.ultralytics.com/">Docs</a> for details, raise an issue on <a href="https://github.com/ultralytics/yolov5/issues/new/choose">GitHub</a> for support, and join our <a href="https://

|

| 23 |

|

| 24 |

To request an Enterprise License, please complete the form at [Ultralytics Licensing](https://ultralytics.com/license)

|

| 25 |

|

|

@@ -42,7 +42,7 @@ YOLOv5 🚀 is the world's most loved vision AI, representing<a href="https://ultral

|

|

| 42 |

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 43 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

| 44 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 45 |

-

<a href="https://

|

| 46 |

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="2%" alt="" /></a>

|

| 47 |

</div>

|

| 48 |

</div>

|

|

@@ -452,16 +452,16 @@ python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --inclu

|

|

| 452 |

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">

|

| 453 |

<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>

|

| 454 |

|

| 455 |

-

## <div align="center"

|

| 456 |

|

| 457 |

-

|

| 458 |

|

| 459 |

-

- **AGPL-3.0

|

| 460 |

-

-

|

| 461 |

|

| 462 |

-

## <div align="center"

|

| 463 |

|

| 464 |

-

For

|

| 465 |

|

| 466 |

<br>

|

| 467 |

<div align="center">

|

|

@@ -483,7 +483,7 @@ Ultralytics offers two licensing options to accommodate diverse use cases:

|

|

| 483 |

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 484 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

| 485 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 486 |

-

<a href="https://

|

| 487 |

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="3%" alt="" /></a>

|

| 488 |

</div>

|

| 489 |

|

|

|

|

| 19 |

|

| 20 |

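YOLOv5 🚀 is the world's most loved vision AI, representing<a href="https://ultralytics.com"> Ultralytics </a>open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.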

|

| 21 |

|

| 22 |

+

We hope the resources here help you get the most out of YOLOv5. Please browse the YOLOv5 <a href="https://docs.ultralytics.com/">Docs</a> for details, raise an issue on <a href="https://github.com/ultralytics/yolov5/issues/new/choose">GitHub</a> for support, and join our <a href="https://discord.gg/2wNGbc6g9X">Discord</a> community for questions and discussions!

|

| 23 |

|

| 24 |

To request an Enterprise License, please complete the form at [Ultralytics Licensing](https://ultralytics.com/license)

|

| 25 |

|

|

|

|

| 42 |

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 43 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

| 44 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

| 45 |

+

<a href="https://discord.gg/2wNGbc6g9X" style="text-decoration:none;">

|

| 46 |

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="2%" alt="" /></a>

|

| 47 |

</div>

|

| 48 |

</div>

|

|

|

|

| 452 |

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">

|

| 453 |

<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>

|

| 454 |

|

| 455 |

+

## <div align="center">License</div>

|

| 456 |

|

| 457 |

+

YOLOv5 is available under two different licenses:

|

| 458 |

|

| 459 |

+

- **AGPL-3.0 License**: See the [License](https://github.com/ultralytics/yolov5/blob/master/LICENSE) file for details.

|

| 460 |

+

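- **Enterprise License**: Provides greater flexibility for commercial product development without the open-source requirements of AGPL-3.0. Typical use cases are embedding Ultralytics software and AI models in commercial products and applications. Request an Enterprise License at [Ultralytics Licensing](https://ultralytics.com/license).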

|

| 461 |

|

| 462 |

+

## <div align="center">Contact</div>

|

| 463 |

|

| 464 |

+

For YOLOv5 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues), and join our [Discord](https://discord.gg/2wNGbc6g9X) community for questions and discussions!

|

| 465 |

|

| 466 |

<br>

|

| 467 |

<div align="center">

|

|

|

|

| 483 |

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

| 484 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="" /></a>

|

| 485 |

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%" alt="" />

|

| 486 |

+

<a href="https://discord.gg/2wNGbc6g9X" style="text-decoration:none;">

|

| 487 |

<img src="https://github.com/ultralytics/assets/blob/main/social/logo-social-discord.png" width="3%" alt="" /></a>

|

| 488 |

</div>

|

| 489 |

|

lib/yolov5/requirements.txt

ADDED

|

@@ -0,0 +1,49 @@

|


| 1 |

+

# YOLOv5 requirements

|

| 2 |

+

# Usage: pip install -r requirements.txt

|

| 3 |

+

|

| 4 |

+

# Base ------------------------------------------------------------------------

|

| 5 |

+

gitpython>=3.1.30

|

| 6 |

+

matplotlib>=3.3

|

| 7 |

+

numpy>=1.18.5

|

| 8 |

+

opencv-python>=4.1.1

|

| 9 |

+

Pillow>=7.1.2

|

| 10 |

+

psutil # system resources

|

| 11 |

+

PyYAML>=5.3.1

|

| 12 |

+

requests>=2.23.0

|

| 13 |

+

scipy>=1.4.1

|

| 14 |

+

thop>=0.1.1 # FLOPs computation

|

| 15 |

+

torch>=1.7.0 # see https://pytorch.org/get-started/locally (recommended)

|

| 16 |

+

torchvision>=0.8.1

|

| 17 |

+

tqdm>=4.64.0

|

| 18 |

+

ultralytics>=8.0.111

|

| 19 |

+

# protobuf<=3.20.1 # https://github.com/ultralytics/yolov5/issues/8012

|

| 20 |

+

|

| 21 |

+

# Logging ---------------------------------------------------------------------

|

| 22 |

+

# tensorboard>=2.4.1

|

| 23 |

+

# clearml>=1.2.0

|

| 24 |

+

# comet

|

| 25 |

+

|

| 26 |

+

# Plotting --------------------------------------------------------------------

|

| 27 |

+

pandas>=1.1.4

|

| 28 |

+

seaborn>=0.11.0

|

| 29 |

+

|

| 30 |

+

# Export ----------------------------------------------------------------------

|

| 31 |

+

# coremltools>=6.0 # CoreML export

|

| 32 |

+

# onnx>=1.10.0 # ONNX export

|

| 33 |

+

# onnx-simplifier>=0.4.1 # ONNX simplifier

|

| 34 |

+

# nvidia-pyindex # TensorRT export

|

| 35 |

+

# nvidia-tensorrt # TensorRT export

|

| 36 |

+

# scikit-learn<=1.1.2 # CoreML quantization

|

| 37 |

+

# tensorflow>=2.4.0 # TF exports (-cpu, -aarch64, -macos)

|

| 38 |

+

# tensorflowjs>=3.9.0 # TF.js export

|

| 39 |

+

# openvino-dev # OpenVINO export

|

| 40 |

+

|

| 41 |

+

# Deploy ----------------------------------------------------------------------

|

| 42 |

+

setuptools>=65.5.1 # Snyk vulnerability fix

|

| 43 |

+

# tritonclient[all]~=2.24.0

|

| 44 |

+

|

| 45 |

+

# Extras ----------------------------------------------------------------------

|

| 46 |

+

# ipython # interactive notebook

|

| 47 |

+

# mss # screenshots

|

| 48 |

+

# albumentations>=1.0.3

|

| 49 |

+

# pycocotools>=2.0.6 # COCO mAP
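The requirements file above mixes active pins with commented-out optional packages and section headers. A rough sketch for listing only the active entries follows, assuming the simple `name<op>version` form used in this file. `active_requirements` is a hypothetical helper and does not cover the full pip requirement syntax (extras, markers, URLs).

```python
import re

# Package name, optionally followed by one comparison operator and a version.
SPEC = re.compile(r"^(?P<name>[A-Za-z0-9_.\-]+)\s*(?P<spec>[<>=~!]=?\s*\S+)?$")


def active_requirements(text):
    """Map each uncommented requirement name to its version specifier ('' if unpinned)."""
    found = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop full-line and trailing comments
        if not line:
            continue
        match = SPEC.match(line)
        if match:
            found[match["name"]] = match["spec"] or ""
    return found


# A few lines copied from the file above.
sample = """\
# Base ------------------------------------------------------------------------
gitpython>=3.1.30
psutil  # system resources
torch>=1.7.0  # see https://pytorch.org/get-started/locally (recommended)
# onnx>=1.10.0  # ONNX export
setuptools>=65.5.1 # Snyk vulnerability fix
"""
reqs = active_requirements(sample)
for name, spec in reqs.items():
    print(name, spec or "(any version)")
```

For anything beyond a quick inspection, a dedicated parser such as the `packaging` library is likely a better fit than a regular expression.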

|

lib/yolov5/tutorial.ipynb

CHANGED

|

@@ -31,7 +31,7 @@

|

|

| 31 |

" <a href=\"https://www.kaggle.com/ultralytics/yolov5\"><img src=\"https://kaggle.com/static/images/open-in-kaggle.svg\" alt=\"Open In Kaggle\"></a>\n",

|

| 32 |

"<br>\n",

|

| 33 |

"\n",

|

| 34 |

-