Spaces:

Runtime error

Runtime error

files

Browse files- app.py +170 -50

- clipseg/LICENSE +21 -0

- clipseg/Quickstart.ipynb +107 -0

- clipseg/Readme.md +84 -0

- clipseg/Tables.ipynb +349 -0

- clipseg/Visual_Feature_Engineering.ipynb +366 -0

- clipseg/datasets/coco_wrapper.py +99 -0

- clipseg/datasets/pascal_classes.json +1 -0

- clipseg/datasets/pascal_zeroshot.py +60 -0

- clipseg/datasets/pfe_dataset.py +129 -0

- clipseg/datasets/phrasecut.py +335 -0

- clipseg/datasets/utils.py +68 -0

- clipseg/environment.yml +15 -0

- clipseg/evaluation_utils.py +292 -0

- clipseg/example_image.jpg +0 -0

- clipseg/experiments/ablation.yaml +84 -0

- clipseg/experiments/coco.yaml +101 -0

- clipseg/experiments/pascal_1shot.yaml +101 -0

- clipseg/experiments/phrasecut.yaml +80 -0

- clipseg/general_utils.py +272 -0

- clipseg/metrics.py +271 -0

- clipseg/models/clipseg.py +552 -0

- clipseg/models/vitseg.py +286 -0

- clipseg/overview.png +0 -0

- clipseg/score.py +453 -0

- clipseg/setup.py +30 -0

- clipseg/training.py +266 -0

- clipseg/weights/rd64-uni.pth +3 -0

- init_image.png +0 -0

- inpainting.py +194 -0

- mask_image.png +0 -0

app.py

CHANGED

|

@@ -1,54 +1,174 @@

|

|

| 1 |

-

from diffusers import StableDiffusionInpaintPipeline

|

| 2 |

import gradio as gr

|

| 3 |

-

|

| 4 |

-

import imageio

|

| 5 |

-

from PIL import Image

|

| 6 |

from io import BytesIO

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 |

import os

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

|

| 43 |

-

|

| 44 |

-

|

| 45 |

-

|

| 46 |

-

|

| 47 |

-

|

| 48 |

-

|

| 49 |

-

|

| 50 |

-

|

| 51 |

-

|

| 52 |

-

|

| 53 |

-

|

| 54 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

import gradio as gr

|

| 2 |

+

|

|

|

|

|

|

|

| 3 |

from io import BytesIO

|

| 4 |

+

import requests

|

| 5 |

+

import PIL

|

| 6 |

+

from PIL import Image

|

| 7 |

+

import numpy as np

|

| 8 |

import os

|

| 9 |

+

import uuid

|

| 10 |

+

import torch

|

| 11 |

+

from torch import autocast

|

| 12 |

+

import cv2

|

| 13 |

+

from matplotlib import pyplot as plt

|

| 14 |

+

from inpainting import StableDiffusionInpaintingPipeline

|

| 15 |

+

from torchvision import transforms

|

| 16 |

+

from clipseg.models.clipseg import CLIPDensePredT

|

| 17 |

+

|

| 18 |

+

auth_token = os.environ.get("API_TOKEN") or True

|

| 19 |

+

|

| 20 |

+

def download_image(url):

|

| 21 |

+

response = requests.get(url)

|

| 22 |

+

return PIL.Image.open(BytesIO(response.content)).convert("RGB")

|

| 23 |

+

|

| 24 |

+

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 25 |

+

pipe = StableDiffusionInpaintingPipeline.from_pretrained(

|

| 26 |

+

"CompVis/stable-diffusion-v1-4",

|

| 27 |

+

revision="fp16",

|

| 28 |

+

torch_dtype=torch.float16,

|

| 29 |

+

use_auth_token=auth_token,

|

| 30 |

+

).to(device)

|

| 31 |

+

|

| 32 |

+

model = CLIPDensePredT(version='ViT-B/16', reduce_dim=64)

|

| 33 |

+

model.eval()

|

| 34 |

+

model.load_state_dict(torch.load('./clipseg/weights/rd64-uni.pth', map_location=torch.device('cuda')), strict=False)

|

| 35 |

+

|

| 36 |

+

transform = transforms.Compose([

|

| 37 |

+

transforms.ToTensor(),

|

| 38 |

+

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

|

| 39 |

+

transforms.Resize((512, 512)),

|

| 40 |

+

])

|

| 41 |

+

|

| 42 |

+

def predict(radio, dict, word_mask, prompt=""):

|

| 43 |

+

if(radio == "draw a mask above"):

|

| 44 |

+

with autocast("cuda"):

|

| 45 |

+

init_image = dict["image"].convert("RGB").resize((512, 512))

|

| 46 |

+

mask = dict["mask"].convert("RGB").resize((512, 512))

|

| 47 |

+

else:

|

| 48 |

+

img = transform(dict["image"]).unsqueeze(0)

|

| 49 |

+

word_masks = [word_mask]

|

| 50 |

+

with torch.no_grad():

|

| 51 |

+

preds = model(img.repeat(len(word_masks),1,1,1), word_masks)[0]

|

| 52 |

+

init_image = dict['image'].convert('RGB').resize((512, 512))

|

| 53 |

+

filename = f"{uuid.uuid4()}.png"

|

| 54 |

+

plt.imsave(filename,torch.sigmoid(preds[0][0]))

|

| 55 |

+

img2 = cv2.imread(filename)

|

| 56 |

+

gray_image = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

|

| 57 |

+

(thresh, bw_image) = cv2.threshold(gray_image, 100, 255, cv2.THRESH_BINARY)

|

| 58 |

+

cv2.cvtColor(bw_image, cv2.COLOR_BGR2RGB)

|

| 59 |

+

mask = Image.fromarray(np.uint8(bw_image)).convert('RGB')

|

| 60 |

+

os.remove(filename)

|

| 61 |

+

with autocast("cuda"):

|

| 62 |

+

images = pipe(prompt = prompt, init_image=init_image, mask_image=mask, strength=0.8)["sample"]

|

| 63 |

+

return images[0]

|

| 64 |

+

|

| 65 |

+

# examples = [[dict(image="init_image.png", mask="mask_image.png"), "A panda sitting on a bench"]]

|

| 66 |

+

css = '''

|

| 67 |

+

.container {max-width: 1150px;margin: auto;padding-top: 1.5rem}

|

| 68 |

+

#image_upload{min-height:400px}

|

| 69 |

+

#image_upload [data-testid="image"], #image_upload [data-testid="image"] > div{min-height: 400px}

|

| 70 |

+

#mask_radio .gr-form{background:transparent; border: none}

|

| 71 |

+

#word_mask{margin-top: .75em !important}

|

| 72 |

+

#word_mask textarea:disabled{opacity: 0.3}

|

| 73 |

+

.footer {margin-bottom: 45px;margin-top: 35px;text-align: center;border-bottom: 1px solid #e5e5e5}

|

| 74 |

+

.footer>p {font-size: .8rem; display: inline-block; padding: 0 10px;transform: translateY(10px);background: white}

|

| 75 |

+

.dark .footer {border-color: #303030}

|

| 76 |

+

.dark .footer>p {background: #0b0f19}

|

| 77 |

+

.acknowledgments h4{margin: 1.25em 0 .25em 0;font-weight: bold;font-size: 115%}

|

| 78 |

+

#image_upload .touch-none{display: flex}

|

| 79 |

+

'''

|

| 80 |

+

def swap_word_mask(radio_option):

|

| 81 |

+

if(radio_option == "type what to mask below"):

|

| 82 |

+

return gr.update(interactive=True, placeholder="A cat")

|

| 83 |

+

else:

|

| 84 |

+

return gr.update(interactive=False, placeholder="Disabled")

|

| 85 |

|

| 86 |

+

image_blocks = gr.Blocks(css=css)

|

| 87 |

+

with image_blocks as demo:

|

| 88 |

+

gr.HTML(

|

| 89 |

+

"""

|

| 90 |

+

<div style="text-align: center; max-width: 650px; margin: 0 auto;">

|

| 91 |

+

<div

|

| 92 |

+

style="

|

| 93 |

+

display: inline-flex;

|

| 94 |

+

align-items: center;

|

| 95 |

+

gap: 0.8rem;

|

| 96 |

+

font-size: 1.75rem;

|

| 97 |

+

"

|

| 98 |

+

>

|

| 99 |

+

<svg

|

| 100 |

+

width="0.65em"

|

| 101 |

+

height="0.65em"

|

| 102 |

+

viewBox="0 0 115 115"

|

| 103 |

+

fill="none"

|

| 104 |

+

xmlns="http://www.w3.org/2000/svg"

|

| 105 |

+

>

|

| 106 |

+

<rect width="23" height="23" fill="white"></rect>

|

| 107 |

+

<rect y="69" width="23" height="23" fill="white"></rect>

|

| 108 |

+

<rect x="23" width="23" height="23" fill="#AEAEAE"></rect>

|

| 109 |

+

<rect x="23" y="69" width="23" height="23" fill="#AEAEAE"></rect>

|

| 110 |

+

<rect x="46" width="23" height="23" fill="white"></rect>

|

| 111 |

+

<rect x="46" y="69" width="23" height="23" fill="white"></rect>

|

| 112 |

+

<rect x="69" width="23" height="23" fill="black"></rect>

|

| 113 |

+

<rect x="69" y="69" width="23" height="23" fill="black"></rect>

|

| 114 |

+

<rect x="92" width="23" height="23" fill="#D9D9D9"></rect>

|

| 115 |

+

<rect x="92" y="69" width="23" height="23" fill="#AEAEAE"></rect>

|

| 116 |

+

<rect x="115" y="46" width="23" height="23" fill="white"></rect>

|

| 117 |

+

<rect x="115" y="115" width="23" height="23" fill="white"></rect>

|

| 118 |

+

<rect x="115" y="69" width="23" height="23" fill="#D9D9D9"></rect>

|

| 119 |

+

<rect x="92" y="46" width="23" height="23" fill="#AEAEAE"></rect>

|

| 120 |

+

<rect x="92" y="115" width="23" height="23" fill="#AEAEAE"></rect>

|

| 121 |

+

<rect x="92" y="69" width="23" height="23" fill="white"></rect>

|

| 122 |

+

<rect x="69" y="46" width="23" height="23" fill="white"></rect>

|

| 123 |

+

<rect x="69" y="115" width="23" height="23" fill="white"></rect>

|

| 124 |

+

<rect x="69" y="69" width="23" height="23" fill="#D9D9D9"></rect>

|

| 125 |

+

<rect x="46" y="46" width="23" height="23" fill="black"></rect>

|

| 126 |

+

<rect x="46" y="115" width="23" height="23" fill="black"></rect>

|

| 127 |

+

<rect x="46" y="69" width="23" height="23" fill="black"></rect>

|

| 128 |

+

<rect x="23" y="46" width="23" height="23" fill="#D9D9D9"></rect>

|

| 129 |

+

<rect x="23" y="115" width="23" height="23" fill="#AEAEAE"></rect>

|

| 130 |

+

<rect x="23" y="69" width="23" height="23" fill="black"></rect>

|

| 131 |

+

</svg>

|

| 132 |

+

<h1 style="font-weight: 900; margin-bottom: 7px;">

|

| 133 |

+

Stable Diffusion Multi Inpainting

|

| 134 |

+

</h1>

|

| 135 |

+

</div>

|

| 136 |

+

<p style="margin-bottom: 10px; font-size: 94%">

|

| 137 |

+

Inpaint Stable Diffusion by either drawing a mask or typing what to replace

|

| 138 |

+

</p>

|

| 139 |

+

</div>

|

| 140 |

+

"""

|

| 141 |

+

)

|

| 142 |

+

with gr.Row():

|

| 143 |

+

with gr.Column():

|

| 144 |

+

image = gr.Image(source='upload', tool='sketch', elem_id="image_upload", type="pil", label="Upload").style(height=400)

|

| 145 |

+

with gr.Box(elem_id="mask_radio").style(border=False):

|

| 146 |

+

radio = gr.Radio(["draw a mask above", "type what to mask below"], value="draw a mask above", show_label=False, interactive=True).style(container=False)

|

| 147 |

+

word_mask = gr.Textbox(label = "What to find in your image", interactive=False, elem_id="word_mask", placeholder="Disabled").style(container=False)

|

| 148 |

+

prompt = gr.Textbox(label = 'Your prompt (what you want to add in place of what you are removing)')

|

| 149 |

+

radio.change(fn=swap_word_mask, inputs=radio, outputs=word_mask,show_progress=False)

|

| 150 |

+

radio.change(None, inputs=[], outputs=image_blocks, _js = """

|

| 151 |

+

() => {

|

| 152 |

+

css_style = document.styleSheets[document.styleSheets.length - 1]

|

| 153 |

+

last_item = css_style.cssRules[css_style.cssRules.length - 1]

|

| 154 |

+

last_item.style.display = ["flex", ""].includes(last_item.style.display) ? "none" : "flex";

|

| 155 |

+

}""")

|

| 156 |

+

btn = gr.Button("Run")

|

| 157 |

+

with gr.Column():

|

| 158 |

+

result = gr.Image(label="Result")

|

| 159 |

+

btn.click(fn=predict, inputs=[radio, image, word_mask, prompt], outputs=result)

|

| 160 |

+

gr.HTML(

|

| 161 |

+

"""

|

| 162 |

+

<div class="footer">

|

| 163 |

+

<p>Model by <a href="https://huggingface.co/CompVis" style="text-decoration: underline;" target="_blank">CompVis</a> and <a href="https://huggingface.co/stabilityai" style="text-decoration: underline;" target="_blank">Stability AI</a> - Inpainting by <a href="https://github.com/nagolinc" style="text-decoration: underline;" target="_blank">nagolinc</a> and <a href="https://github.com/patil-suraj" style="text-decoration: underline;">patil-suraj</a>, inpainting with words by <a href="https://twitter.com/yvrjsharma/" style="text-decoration: underline;" target="_blank">@yvrjsharma</a> and <a href="https://twitter.com/1littlecoder" style="text-decoration: underline;">@1littlecoder</a> - Gradio Demo by 🤗 Hugging Face

|

| 164 |

+

</p>

|

| 165 |

+

</div>

|

| 166 |

+

<div class="acknowledgments">

|

| 167 |

+

<p><h4>LICENSE</h4>

|

| 168 |

+

The model is licensed with a <a href="https://huggingface.co/spaces/CompVis/stable-diffusion-license" style="text-decoration: underline;" target="_blank">CreativeML Open RAIL-M</a> license. The authors claim no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in this license. The license forbids you from sharing any content that violates any laws, produce any harm to a person, disseminate any personal information that would be meant for harm, spread misinformation and target vulnerable groups. For the full list of restrictions please <a href="https://huggingface.co/spaces/CompVis/stable-diffusion-license" target="_blank" style="text-decoration: underline;" target="_blank">read the license</a></p>

|

| 169 |

+

<p><h4>Biases and content acknowledgment</h4>

|

| 170 |

+

Despite how impressive being able to turn text into image is, beware to the fact that this model may output content that reinforces or exacerbates societal biases, as well as realistic faces, pornography and violence. The model was trained on the <a href="https://laion.ai/blog/laion-5b/" style="text-decoration: underline;" target="_blank">LAION-5B dataset</a>, which scraped non-curated image-text-pairs from the internet (the exception being the removal of illegal content) and is meant for research purposes. You can read more in the <a href="https://huggingface.co/CompVis/stable-diffusion-v1-4" style="text-decoration: underline;" target="_blank">model card</a></p>

|

| 171 |

+

</div>

|

| 172 |

+

"""

|

| 173 |

+

)

|

| 174 |

+

demo.launch()

|

clipseg/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

This license does not apply to the model weights.

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

clipseg/Quickstart.ipynb

ADDED

|

@@ -0,0 +1,107 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": [

|

| 9 |

+

"import torch\n",

|

| 10 |

+

"import requests\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"! wget https://owncloud.gwdg.de/index.php/s/ioHbRzFx6th32hn/download -O weights.zip\n",

|

| 13 |

+

"! unzip -d weights -j weights.zip\n",

|

| 14 |

+

"from models.clipseg import CLIPDensePredT\n",

|

| 15 |

+

"from PIL import Image\n",

|

| 16 |

+

"from torchvision import transforms\n",

|

| 17 |

+

"from matplotlib import pyplot as plt\n",

|

| 18 |

+

"\n",

|

| 19 |

+

"# load model\n",

|

| 20 |

+

"model = CLIPDensePredT(version='ViT-B/16', reduce_dim=64)\n",

|

| 21 |

+

"model.eval();\n",

|

| 22 |

+

"\n",

|

| 23 |

+

"# non-strict, because we only stored decoder weights (not CLIP weights)\n",

|

| 24 |

+

"model.load_state_dict(torch.load('weights/rd64-uni.pth', map_location=torch.device('cpu')), strict=False);"

|

| 25 |

+

]

|

| 26 |

+

},

|

| 27 |

+

{

|

| 28 |

+

"cell_type": "markdown",

|

| 29 |

+

"metadata": {},

|

| 30 |

+

"source": [

|

| 31 |

+

"Load and normalize `example_image.jpg`. You can also load through an URL."

|

| 32 |

+

]

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

"cell_type": "code",

|

| 36 |

+

"execution_count": null,

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [],

|

| 39 |

+

"source": [

|

| 40 |

+

"# load and normalize image\n",

|

| 41 |

+

"input_image = Image.open('example_image.jpg')\n",

|

| 42 |

+

"\n",

|

| 43 |

+

"# or load from URL...\n",

|

| 44 |

+

"# image_url = 'https://farm5.staticflickr.com/4141/4856248695_03475782dc_z.jpg'\n",

|

| 45 |

+

"# input_image = Image.open(requests.get(image_url, stream=True).raw)\n",

|

| 46 |

+

"\n",

|

| 47 |

+

"transform = transforms.Compose([\n",

|

| 48 |

+

" transforms.ToTensor(),\n",

|

| 49 |

+

" transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),\n",

|

| 50 |

+

" transforms.Resize((352, 352)),\n",

|

| 51 |

+

"])\n",

|

| 52 |

+

"img = transform(input_image).unsqueeze(0)"

|

| 53 |

+

]

|

| 54 |

+

},

|

| 55 |

+

{

|

| 56 |

+

"cell_type": "markdown",

|

| 57 |

+

"metadata": {},

|

| 58 |

+

"source": [

|

| 59 |

+

"Predict and visualize (this might take a few seconds if running without GPU support)"

|

| 60 |

+

]

|

| 61 |

+

},

|

| 62 |

+

{

|

| 63 |

+

"cell_type": "code",

|

| 64 |

+

"execution_count": null,

|

| 65 |

+

"metadata": {},

|

| 66 |

+

"outputs": [],

|

| 67 |

+

"source": [

|

| 68 |

+

"prompts = ['a glass', 'something to fill', 'wood', 'a jar']\n",

|

| 69 |

+

"\n",

|

| 70 |

+

"# predict\n",

|

| 71 |

+

"with torch.no_grad():\n",

|

| 72 |

+

" preds = model(img.repeat(4,1,1,1), prompts)[0]\n",

|

| 73 |

+

"\n",

|

| 74 |

+

"# visualize prediction\n",

|

| 75 |

+

"_, ax = plt.subplots(1, 5, figsize=(15, 4))\n",

|

| 76 |

+

"[a.axis('off') for a in ax.flatten()]\n",

|

| 77 |

+

"ax[0].imshow(input_image)\n",

|

| 78 |

+

"[ax[i+1].imshow(torch.sigmoid(preds[i][0])) for i in range(4)];\n",

|

| 79 |

+

"[ax[i+1].text(0, -15, prompts[i]) for i in range(4)];"

|

| 80 |

+

]

|

| 81 |

+

}

|

| 82 |

+

],

|

| 83 |

+

"metadata": {

|

| 84 |

+

"interpreter": {

|

| 85 |

+

"hash": "800ed241f7db2bd3aa6942aa3be6809cdb30ee6b0a9e773dfecfa9fef1f4c586"

|

| 86 |

+

},

|

| 87 |

+

"kernelspec": {

|

| 88 |

+

"display_name": "Python 3",

|

| 89 |

+

"language": "python",

|

| 90 |

+

"name": "python3"

|

| 91 |

+

},

|

| 92 |

+

"language_info": {

|

| 93 |

+

"codemirror_mode": {

|

| 94 |

+

"name": "ipython",

|

| 95 |

+

"version": 3

|

| 96 |

+

},

|

| 97 |

+

"file_extension": ".py",

|

| 98 |

+

"mimetype": "text/x-python",

|

| 99 |

+

"name": "python",

|

| 100 |

+

"nbconvert_exporter": "python",

|

| 101 |

+

"pygments_lexer": "ipython3",

|

| 102 |

+

"version": "3.8.10"

|

| 103 |

+

}

|

| 104 |

+

},

|

| 105 |

+

"nbformat": 4,

|

| 106 |

+

"nbformat_minor": 4

|

| 107 |

+

}

|

clipseg/Readme.md

ADDED

|

@@ -0,0 +1,84 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

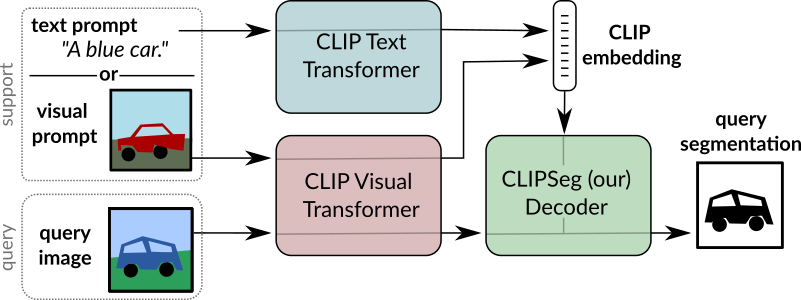

| 1 |

+

# Image Segmentation Using Text and Image Prompts

|

| 2 |

+

This repository contains the code used in the paper ["Image Segmentation Using Text and Image Prompts"](https://arxiv.org/abs/2112.10003).

|

| 3 |

+

|

| 4 |

+

**The Paper has been accepted to CVPR 2022!**

|

| 5 |

+

|

| 6 |

+

<img src="overview.png" alt="drawing" height="200em"/>

|

| 7 |

+

|

| 8 |

+

The systems allows to create segmentation models without training based on:

|

| 9 |

+

- An arbitrary text query

|

| 10 |

+

- Or an image with a mask highlighting stuff or an object.

|

| 11 |

+

|

| 12 |

+

### Quick Start

|

| 13 |

+

|

| 14 |

+

In the `Quickstart.ipynb` notebook we provide the code for using a pre-trained CLIPSeg model. If you run the notebook locally, make sure you downloaded the `rd64-uni.pth` weights, either manually or via git lfs extension.

|

| 15 |

+

It can also be used interactively using [MyBinder](https://mybinder.org/v2/gh/timojl/clipseg/HEAD?labpath=Quickstart.ipynb)

|

| 16 |

+

(please note that the VM does not use a GPU, thus inference takes a few seconds).

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

### Dependencies

|

| 20 |

+

This code base depends on pytorch, torchvision and clip (`pip install git+https://github.com/openai/CLIP.git`).

|

| 21 |

+

Additional dependencies are hidden for double blind review.

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

### Datasets

|

| 25 |

+

|

| 26 |

+

* `PhraseCut` and `PhraseCutPlus`: Referring expression dataset

|

| 27 |

+

* `PFEPascalWrapper`: Wrapper class for PFENet's Pascal-5i implementation

|

| 28 |

+

* `PascalZeroShot`: Wrapper class for PascalZeroShot

|

| 29 |

+

* `COCOWrapper`: Wrapper class for COCO.

|

| 30 |

+

|

| 31 |

+

### Models

|

| 32 |

+

|

| 33 |

+

* `CLIPDensePredT`: CLIPSeg model with transformer-based decoder.

|

| 34 |

+

* `ViTDensePredT`: CLIPSeg model with transformer-based decoder.

|

| 35 |

+

|

| 36 |

+

### Third Party Dependencies

|

| 37 |

+

For some of the datasets third party dependencies are required. Run the following commands in the `third_party` folder.

|

| 38 |

+

```bash

|

| 39 |

+

git clone https://github.com/cvlab-yonsei/JoEm

|

| 40 |

+

git clone https://github.com/Jia-Research-Lab/PFENet.git

|

| 41 |

+

git clone https://github.com/ChenyunWu/PhraseCutDataset.git

|

| 42 |

+

git clone https://github.com/juhongm999/hsnet.git

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

### Weights

|

| 46 |

+

|

| 47 |

+

The MIT license does not apply to these weights.

|

| 48 |

+

|

| 49 |

+

We provide two model weights, for D=64 (4.1MB) and D=16 (1.1MB).

|

| 50 |

+

```

|

| 51 |

+

wget https://owncloud.gwdg.de/index.php/s/ioHbRzFx6th32hn/download -O weights.zip

|

| 52 |

+

unzip -d weights -j weights.zip

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

### Training and Evaluation

|

| 57 |

+

|

| 58 |

+

To train use the `training.py` script with experiment file and experiment id parameters. E.g. `python training.py phrasecut.yaml 0` will train the first phrasecut experiment which is defined by the `configuration` and first `individual_configurations` parameters. Model weights will be written in `logs/`.

|

| 59 |

+

|

| 60 |

+

For evaluation use `score.py`. E.g. `python score.py phrasecut.yaml 0 0` will train the first phrasecut experiment of `test_configuration` and the first configuration in `individual_configurations`.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

### Usage of PFENet Wrappers

|

| 64 |

+

|

| 65 |

+

In order to use the dataset and model wrappers for PFENet, the PFENet repository needs to be cloned to the root folder.

|

| 66 |

+

`git clone https://github.com/Jia-Research-Lab/PFENet.git `

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

### License

|

| 70 |

+

|

| 71 |

+

The source code files in this repository (excluding model weights) are released under MIT license.

|

| 72 |

+

|

| 73 |

+

### Citation

|

| 74 |

+

```

|

| 75 |

+

@InProceedings{lueddecke22_cvpr,

|

| 76 |

+

author = {L\"uddecke, Timo and Ecker, Alexander},

|

| 77 |

+

title = {Image Segmentation Using Text and Image Prompts},

|

| 78 |

+

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

|

| 79 |

+

month = {June},

|

| 80 |

+

year = {2022},

|

| 81 |

+

pages = {7086-7096}

|

| 82 |

+

}

|

| 83 |

+

|

| 84 |

+

```

|

clipseg/Tables.ipynb

ADDED

|

@@ -0,0 +1,349 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": [

|

| 9 |

+

"%load_ext autoreload\n",

|

| 10 |

+

"%autoreload 2\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"import clip\n",

|

| 13 |

+

"from evaluation_utils import norm, denorm\n",

|

| 14 |

+

"from general_utils import *\n",

|

| 15 |

+

"from datasets.lvis_oneshot3 import LVIS_OneShot3, LVIS_OneShot"

|

| 16 |

+

]

|

| 17 |

+

},

|

| 18 |

+

{

|

| 19 |

+

"cell_type": "markdown",

|

| 20 |

+

"metadata": {},

|

| 21 |

+

"source": [

|

| 22 |

+

"# PhraseCut"

|

| 23 |

+

]

|

| 24 |

+

},

|

| 25 |

+

{

|

| 26 |

+

"cell_type": "code",

|

| 27 |

+

"execution_count": null,

|

| 28 |

+

"metadata": {},

|

| 29 |

+

"outputs": [],

|

| 30 |

+

"source": [

|

| 31 |

+

"pc = experiment('experiments/phrasecut.yaml', nums=':6').dataframe()"

|

| 32 |

+

]

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

"cell_type": "code",

|

| 36 |

+

"execution_count": null,

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [],

|

| 39 |

+

"source": [

|

| 40 |

+

"tab1 = pc[['name', 'pc_miou_best', 'pc_fgiou_best', 'pc_ap']]"

|

| 41 |

+

]

|

| 42 |

+

},

|

| 43 |

+

{

|

| 44 |

+

"cell_type": "code",

|

| 45 |

+

"execution_count": null,

|

| 46 |

+

"metadata": {},

|

| 47 |

+

"outputs": [],

|

| 48 |

+

"source": [

|

| 49 |

+

"cols = ['pc_miou_0.3', 'pc_fgiou_0.3', 'pc_ap']\n",

|

| 50 |

+

"tab1 = pc[['name'] + cols]\n",

|

| 51 |

+

"for k in cols:\n",

|

| 52 |

+

" tab1.loc[:, k] = (100 * tab1.loc[:, k]).round(1)\n",

|

| 53 |

+

"tab1.loc[:, 'name'] = ['CLIPSeg (PC+)', 'CLIPSeg (PC, $D=128$)', 'CLIPSeg (PC)', 'CLIP-Deconv', 'ViTSeg (PC+)', 'ViTSeg (PC)']\n",

|

| 54 |

+

"tab1.insert(1, 't', [0.3]*tab1.shape[0])\n",

|

| 55 |

+

"print(tab1.to_latex(header=False, index=False))"

|

| 56 |

+

]

|

| 57 |

+

},

|

| 58 |

+

{

|

| 59 |

+

"cell_type": "markdown",

|

| 60 |

+

"metadata": {},

|

| 61 |

+

"source": [

|

| 62 |

+

"For 0.1 threshold"

|

| 63 |

+

]

|

| 64 |

+

},

|

| 65 |

+

{

|

| 66 |

+

"cell_type": "code",

|

| 67 |

+

"execution_count": null,

|

| 68 |

+

"metadata": {},

|

| 69 |

+

"outputs": [],

|

| 70 |

+

"source": [

|

| 71 |

+

"cols = ['pc_miou_0.1', 'pc_fgiou_0.1', 'pc_ap']\n",

|

| 72 |

+

"tab1 = pc[['name'] + cols]\n",

|

| 73 |

+

"for k in cols:\n",

|

| 74 |

+

" tab1.loc[:, k] = (100 * tab1.loc[:, k]).round(1)\n",

|

| 75 |

+

"tab1.loc[:, 'name'] = ['CLIPSeg (PC+)', 'CLIPSeg (PC, $D=128$)', 'CLIPSeg (PC)', 'CLIP-Deconv', 'ViTSeg (PC+)', 'ViTSeg (PC)']\n",

|

| 76 |

+

"tab1.insert(1, 't', [0.1]*tab1.shape[0])\n",

|

| 77 |

+

"print(tab1.to_latex(header=False, index=False))"

|

| 78 |

+

]

|

| 79 |

+

},

|

| 80 |

+

{

|

| 81 |

+

"cell_type": "markdown",

|

| 82 |

+

"metadata": {},

|

| 83 |

+

"source": [

|

| 84 |

+

"# One-shot"

|

| 85 |

+

]

|

| 86 |

+

},

|

| 87 |

+

{

|

| 88 |

+

"cell_type": "markdown",

|

| 89 |

+

"metadata": {},

|

| 90 |

+

"source": [

|

| 91 |

+

"### Pascal"

|

| 92 |

+

]

|

| 93 |

+

},

|

| 94 |

+

{

|

| 95 |

+

"cell_type": "code",

|

| 96 |

+

"execution_count": null,

|

| 97 |

+

"metadata": {},

|

| 98 |

+

"outputs": [],

|

| 99 |

+

"source": [

|

| 100 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums=':19').dataframe()"

|

| 101 |

+

]

|

| 102 |

+

},

|

| 103 |

+

{

|

| 104 |

+

"cell_type": "code",

|

| 105 |

+

"execution_count": null,

|

| 106 |

+

"metadata": {},

|

| 107 |

+

"outputs": [],

|

| 108 |

+

"source": [

|

| 109 |

+

"pas[['name', 'pas_h2_miou_0.3', 'pas_h2_biniou_0.3', 'pas_h2_ap', 'pas_h2_fgiou_ct']]"

|

| 110 |

+

]

|

| 111 |

+

},

|

| 112 |

+

{

|

| 113 |

+

"cell_type": "code",

|

| 114 |

+

"execution_count": null,

|

| 115 |

+

"metadata": {},

|

| 116 |

+

"outputs": [],

|

| 117 |

+

"source": [

|

| 118 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums=':8').dataframe()\n",

|

| 119 |

+

"tab1 = pas[['pas_h2_miou_0.3', 'pas_h2_biniou_0.3', 'pas_h2_ap']]\n",

|

| 120 |

+

"print('CLIPSeg (PC+) & 0.3 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')\n",

|

| 121 |

+

"print('CLIPSeg (PC) & 0.3 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[4:8].mean(0).values), '\\\\\\\\')\n",

|

| 122 |

+

"\n",

|

| 123 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums='12:16').dataframe()\n",

|

| 124 |

+

"tab1 = pas[['pas_h2_miou_0.2', 'pas_h2_biniou_0.2', 'pas_h2_ap']]\n",

|

| 125 |

+

"print('CLIP-Deconv (PC+) & 0.2 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')\n",

|

| 126 |

+

"\n",

|

| 127 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums='16:20').dataframe()\n",

|

| 128 |

+

"tab1 = pas[['pas_t_miou_0.2', 'pas_t_biniou_0.2', 'pas_t_ap']]\n",

|

| 129 |

+

"print('ViTSeg (PC+) & 0.2 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')"

|

| 130 |

+

]

|

| 131 |

+

},

|

| 132 |

+

{

|

| 133 |

+

"cell_type": "markdown",

|

| 134 |

+

"metadata": {},

|

| 135 |

+

"source": [

|

| 136 |

+

"#### Pascal Zero-shot (in one-shot setting)\n",

|

| 137 |

+

"\n",

|

| 138 |

+

"Using the same setting as one-shot (hence different from the other zero-shot benchmark)"

|

| 139 |

+

]

|

| 140 |

+

},

|

| 141 |

+

{

|

| 142 |

+

"cell_type": "code",

|

| 143 |

+

"execution_count": null,

|

| 144 |

+

"metadata": {},

|

| 145 |

+

"outputs": [],

|

| 146 |

+

"source": [

|

| 147 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums=':8').dataframe()\n",

|

| 148 |

+

"tab1 = pas[['pas_t_miou_0.3', 'pas_t_biniou_0.3', 'pas_t_ap']]\n",

|

| 149 |

+

"print('CLIPSeg (PC+) & 0.3 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')\n",

|

| 150 |

+

"print('CLIPSeg (PC) & 0.3 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[4:8].mean(0).values), '\\\\\\\\')\n",

|

| 151 |

+

"\n",

|

| 152 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums='12:16').dataframe()\n",

|

| 153 |

+

"tab1 = pas[['pas_t_miou_0.3', 'pas_t_biniou_0.3', 'pas_t_ap']]\n",

|

| 154 |

+

"print('CLIP-Deconv (PC+) & 0.3 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')\n",

|

| 155 |

+

"\n",

|

| 156 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums='16:20').dataframe()\n",

|

| 157 |

+

"tab1 = pas[['pas_t_miou_0.2', 'pas_t_biniou_0.2', 'pas_t_ap']]\n",

|

| 158 |

+

"print('ViTSeg (PC+) & 0.2 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')"

|

| 159 |

+

]

|

| 160 |

+

},

|

| 161 |

+

{

|

| 162 |

+

"cell_type": "code",

|

| 163 |

+

"execution_count": null,

|

| 164 |

+

"metadata": {},

|

| 165 |

+

"outputs": [],

|

| 166 |

+

"source": [

|

| 167 |

+

"# without fixed thresholds...\n",

|

| 168 |

+

"\n",

|

| 169 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums=':8').dataframe()\n",

|

| 170 |

+

"tab1 = pas[['pas_t_best_miou', 'pas_t_best_biniou', 'pas_t_ap']]\n",

|

| 171 |

+

"print('CLIPSeg (PC+) & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')\n",

|

| 172 |

+

"print('CLIPSeg (PC) & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[4:8].mean(0).values), '\\\\\\\\')\n",

|

| 173 |

+

"\n",

|

| 174 |

+

"pas = experiment('experiments/pascal_1shot.yaml', nums='12:16').dataframe()\n",

|

| 175 |

+

"tab1 = pas[['pas_t_best_miou', 'pas_t_best_biniou', 'pas_t_ap']]\n",

|

| 176 |

+

"print('CLIP-Deconv (PC+) & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[0:4].mean(0).values), '\\\\\\\\')"

|

| 177 |

+

]

|

| 178 |

+

},

|

| 179 |

+

{

|

| 180 |

+

"cell_type": "markdown",

|

| 181 |

+

"metadata": {},

|

| 182 |

+

"source": [

|

| 183 |

+

"### COCO"

|

| 184 |

+

]

|

| 185 |

+

},

|

| 186 |

+

{

|

| 187 |

+

"cell_type": "code",

|

| 188 |

+

"execution_count": null,

|

| 189 |

+

"metadata": {},

|

| 190 |

+

"outputs": [],

|

| 191 |

+

"source": [

|

| 192 |

+

"coco = experiment('experiments/coco.yaml', nums=':29').dataframe()"

|

| 193 |

+

]

|

| 194 |

+

},

|

| 195 |

+

{

|

| 196 |

+

"cell_type": "code",

|

| 197 |

+

"execution_count": null,

|

| 198 |

+

"metadata": {},

|

| 199 |

+

"outputs": [],

|

| 200 |

+

"source": [

|

| 201 |

+

"tab1 = coco[['coco_h2_miou_0.1', 'coco_h2_biniou_0.1', 'coco_h2_ap']]\n",

|

| 202 |

+

"tab2 = coco[['coco_h2_miou_0.2', 'coco_h2_biniou_0.2', 'coco_h2_ap']]\n",

|

| 203 |

+

"tab3 = coco[['coco_h2_miou_best', 'coco_h2_biniou_best', 'coco_h2_ap']]\n",

|

| 204 |

+

"print('CLIPSeg (COCO) & 0.1 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[:4].mean(0).values), '\\\\\\\\')\n",

|

| 205 |

+

"print('CLIPSeg (COCO+N) & 0.1 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[4:8].mean(0).values), '\\\\\\\\')\n",

|

| 206 |

+

"print('CLIP-Deconv (COCO+N) & 0.1 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[12:16].mean(0).values), '\\\\\\\\')\n",

|

| 207 |

+

"print('ViTSeg (COCO) & 0.1 & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[8:12].mean(0).values), '\\\\\\\\')"

|

| 208 |

+

]

|

| 209 |

+

},

|

| 210 |

+

{

|

| 211 |

+

"cell_type": "markdown",

|

| 212 |

+

"metadata": {},

|

| 213 |

+

"source": [

|

| 214 |

+

"# Zero-shot"

|

| 215 |

+

]

|

| 216 |

+

},

|

| 217 |

+

{

|

| 218 |

+

"cell_type": "code",

|

| 219 |

+

"execution_count": null,

|

| 220 |

+

"metadata": {},

|

| 221 |

+

"outputs": [],

|

| 222 |

+

"source": [

|

| 223 |

+

"zs = experiment('experiments/pascal_0shot.yaml', nums=':11').dataframe()"

|

| 224 |

+

]

|

| 225 |

+

},

|

| 226 |

+

{

|

| 227 |

+

"cell_type": "code",

|

| 228 |

+

"execution_count": null,

|

| 229 |

+

"metadata": {},

|

| 230 |

+

"outputs": [],

|

| 231 |

+

"source": [

|

| 232 |

+

"\n",

|

| 233 |

+

"tab1 = zs[['pas_zs_seen', 'pas_zs_unseen']]\n",

|

| 234 |

+

"print('CLIPSeg (PC+) & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[8:9].values[0].tolist() + tab1[10:11].values[0].tolist()), '\\\\\\\\')\n",

|

| 235 |

+

"print('CLIP-Deconv & CLIP & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[2:3].values[0].tolist() + tab1[3:4].values[0].tolist()), '\\\\\\\\')\n",

|

| 236 |

+

"print('ViTSeg & ImageNet-1K & ' + ' & '.join(f'{x*100:.1f}' for x in tab1[4:5].values[0].tolist() + tab1[5:6].values[0].tolist()), '\\\\\\\\')"

|

| 237 |

+

]

|

| 238 |

+

},

|

| 239 |

+

{

|

| 240 |

+

"cell_type": "markdown",

|

| 241 |

+

"metadata": {},

|

| 242 |

+

"source": [

|

| 243 |

+

"# Ablation"

|

| 244 |

+

]

|

| 245 |

+

},

|

| 246 |

+

{

|

| 247 |

+

"cell_type": "code",

|

| 248 |

+

"execution_count": null,

|

| 249 |

+

"metadata": {},

|

| 250 |

+

"outputs": [],

|

| 251 |

+

"source": [

|

| 252 |

+

"ablation = experiment('experiments/ablation.yaml', nums=':8').dataframe()"

|

| 253 |

+

]

|

| 254 |

+

},

|

| 255 |

+

{

|

| 256 |

+

"cell_type": "code",

|

| 257 |

+

"execution_count": null,

|

| 258 |

+

"metadata": {},

|

| 259 |

+

"outputs": [],

|

| 260 |

+

"source": [

|

| 261 |

+

"tab1 = ablation[['name', 'pc_miou_best', 'pc_ap', 'pc-vis_miou_best', 'pc-vis_ap']]\n",

|

| 262 |

+

"for k in ['pc_miou_best', 'pc_ap', 'pc-vis_miou_best', 'pc-vis_ap']:\n",

|

| 263 |

+

" tab1.loc[:, k] = (100 * tab1.loc[:, k]).round(1)\n",

|

| 264 |

+

"tab1.loc[:, 'name'] = ['CLIPSeg', 'no CLIP pre-training', 'no-negatives', '50% negatives', 'no visual', '$D=16$', 'only layer 3', 'highlight mask']"

|

| 265 |

+

]

|

| 266 |

+

},

|

| 267 |

+

{

|

| 268 |

+

"cell_type": "code",

|

| 269 |

+

"execution_count": null,

|

| 270 |

+

"metadata": {},

|

| 271 |

+

"outputs": [],

|

| 272 |

+

"source": [

|

| 273 |

+

"print(tab1.loc[[0,1,4,5,6,7],:].to_latex(header=False, index=False))"

|

| 274 |

+

]

|

| 275 |

+

},

|

| 276 |

+

{

|

| 277 |

+

"cell_type": "code",

|

| 278 |

+

"execution_count": null,

|

| 279 |

+

"metadata": {},

|

| 280 |

+

"outputs": [],

|

| 281 |

+

"source": [

|

| 282 |

+

"print(tab1.loc[[0,1,4,5,6,7],:].to_latex(header=False, index=False))"

|

| 283 |

+

]

|

| 284 |

+

},

|

| 285 |

+

{

|

| 286 |

+

"cell_type": "markdown",

|

| 287 |

+

"metadata": {},

|

| 288 |

+

"source": [

|

| 289 |

+

"# Generalization"

|

| 290 |

+

]

|

| 291 |

+

},

|

| 292 |

+

{

|

| 293 |

+

"cell_type": "code",

|

| 294 |

+

"execution_count": null,

|

| 295 |

+

"metadata": {},

|

| 296 |

+

"outputs": [],

|

| 297 |

+

"source": [

|

| 298 |

+

"generalization = experiment('experiments/generalize.yaml').dataframe()"

|

| 299 |

+

]

|

| 300 |

+

},

|

| 301 |

+

{

|

| 302 |

+

"cell_type": "code",

|

| 303 |

+

"execution_count": null,

|

| 304 |

+

"metadata": {},

|

| 305 |

+

"outputs": [],

|

| 306 |

+

"source": [

|

| 307 |

+

"gen = generalization[['aff_best_fgiou', 'aff_ap', 'ability_best_fgiou', 'ability_ap', 'part_best_fgiou', 'part_ap']].values"

|

| 308 |

+

]

|

| 309 |

+

},

|

| 310 |

+

{

|

| 311 |

+

"cell_type": "code",

|

| 312 |

+

"execution_count": null,

|

| 313 |

+

"metadata": {},

|

| 314 |

+

"outputs": [],

|

| 315 |

+

"source": [

|

| 316 |

+

"print(\n",

|

| 317 |

+

" 'CLIPSeg (PC+) & ' + ' & '.join(f'{x*100:.1f}' for x in gen[1]) + ' \\\\\\\\ \\n' + \\\n",

|

| 318 |

+

" 'CLIPSeg (LVIS) & ' + ' & '.join(f'{x*100:.1f}' for x in gen[0]) + ' \\\\\\\\ \\n' + \\\n",

|

| 319 |

+

" 'CLIP-Deconv & ' + ' & '.join(f'{x*100:.1f}' for x in gen[2]) + ' \\\\\\\\ \\n' + \\\n",

|

| 320 |

+

" 'VITSeg & ' + ' & '.join(f'{x*100:.1f}' for x in gen[3]) + ' \\\\\\\\'\n",

|

| 321 |

+

")"

|

| 322 |

+

]

|

| 323 |

+

}

|

| 324 |

+

],

|

| 325 |

+

"metadata": {

|

| 326 |

+

"interpreter": {

|

| 327 |

+

"hash": "800ed241f7db2bd3aa6942aa3be6809cdb30ee6b0a9e773dfecfa9fef1f4c586"

|

| 328 |

+

},

|

| 329 |

+

"kernelspec": {

|

| 330 |

+

"display_name": "env2",

|

| 331 |

+

"language": "python",

|

| 332 |

+

"name": "env2"

|

| 333 |

+

},

|

| 334 |

+

"language_info": {

|

| 335 |

+

"codemirror_mode": {

|

| 336 |

+

"name": "ipython",

|

| 337 |

+

"version": 3

|

| 338 |

+

},

|

| 339 |

+

"file_extension": ".py",

|

| 340 |

+

"mimetype": "text/x-python",

|

| 341 |

+

"name": "python",

|

| 342 |

+

"nbconvert_exporter": "python",

|

| 343 |

+

"pygments_lexer": "ipython3",

|

| 344 |

+

"version": "3.8.8"

|

| 345 |

+

}

|

| 346 |

+

},

|

| 347 |

+

"nbformat": 4,

|

| 348 |

+

"nbformat_minor": 4

|

| 349 |

+

}

|

clipseg/Visual_Feature_Engineering.ipynb

ADDED

|

@@ -0,0 +1,366 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|