
Commit 238735e by sc_ma (parent: 5a9ffbd)

Add auto_backgrounds.

This view is limited to 50 files because it contains too many changes.

- auto_backgrounds.py +117 -0

- auto_draft.py +2 -1

- latex_templates/Summary/abstract.tex +0 -0

- latex_templates/Summary/backgrounds.tex +0 -0

- latex_templates/Summary/conclusion.tex +0 -0

- latex_templates/Summary/experiments.tex +0 -0

- latex_templates/Summary/fancyhdr.sty +485 -0

- latex_templates/Summary/iclr2022_conference.bst +1440 -0

- latex_templates/Summary/iclr2022_conference.sty +245 -0

- latex_templates/Summary/introduction.tex +0 -0

- latex_templates/Summary/math_commands.tex +508 -0

- latex_templates/Summary/methodology.tex +0 -0

- latex_templates/Summary/natbib.sty +1246 -0

- latex_templates/Summary/related works.tex +0 -0

- latex_templates/Summary/template.tex +33 -0

- outputs/outputs_20230420_235048/abstract.tex +1 -0

- outputs/outputs_20230420_235048/backgrounds.tex +26 -0

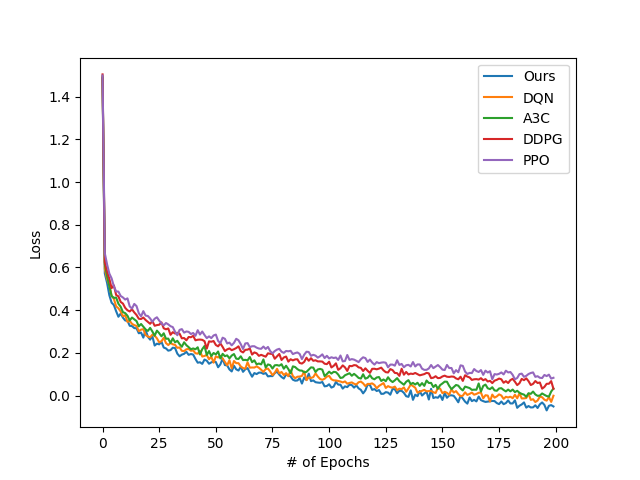

- outputs/outputs_20230420_235048/comparison.png +0 -0

- outputs/outputs_20230420_235048/conclusion.tex +6 -0

- outputs/outputs_20230420_235048/experiments.tex +31 -0

- outputs/outputs_20230420_235048/fancyhdr.sty +485 -0

- outputs/outputs_20230420_235048/generation.log +158 -0

- outputs/outputs_20230420_235048/iclr2022_conference.bst +1440 -0

- outputs/outputs_20230420_235048/iclr2022_conference.sty +245 -0

- outputs/outputs_20230420_235048/introduction.tex +10 -0

- outputs/outputs_20230420_235048/main.aux +78 -0

- outputs/outputs_20230420_235048/main.bbl +74 -0

- outputs/outputs_20230420_235048/main.blg +507 -0

- outputs/outputs_20230420_235048/main.log +470 -0

- outputs/outputs_20230420_235048/main.out +13 -0

- outputs/outputs_20230420_235048/main.pdf +0 -0

- outputs/outputs_20230420_235048/main.synctex.gz +0 -0

- outputs/outputs_20230420_235048/main.tex +34 -0

- outputs/outputs_20230420_235048/math_commands.tex +508 -0

- outputs/outputs_20230420_235048/methodology.tex +15 -0

- outputs/outputs_20230420_235048/natbib.sty +1246 -0

- outputs/outputs_20230420_235048/ref.bib +998 -0

- outputs/outputs_20230420_235048/related works.tex +18 -0

- outputs/outputs_20230420_235048/template.tex +34 -0

- outputs/outputs_20230421_000752/abstract.tex +0 -0

- outputs/outputs_20230421_000752/backgrounds.tex +20 -0

- outputs/outputs_20230421_000752/conclusion.tex +0 -0

- outputs/outputs_20230421_000752/experiments.tex +0 -0

- outputs/outputs_20230421_000752/fancyhdr.sty +485 -0

- outputs/outputs_20230421_000752/generation.log +123 -0

- outputs/outputs_20230421_000752/iclr2022_conference.bst +1440 -0

- outputs/outputs_20230421_000752/iclr2022_conference.sty +245 -0

- outputs/outputs_20230421_000752/introduction.tex +10 -0

- outputs/outputs_20230421_000752/main.aux +92 -0

- outputs/outputs_20230421_000752/main.bbl +122 -0
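The two timestamped folder names in this list (`outputs_20230420_235048` and `outputs_20230421_000752`) come from the `now.strftime("outputs_%Y%m%d_%H%M%S")` call in `generate_backgrounds` (`auto_backgrounds.py`).

The sketch below recovers the run time from a folder name with `datetime.strptime`. It assumes every output folder follows exactly that pattern. The helpers `folder_timestamp` and `folder_name` are hypothetical and are not part of the repository.

```python
from datetime import datetime

# Same pattern that generate_backgrounds passes to now.strftime(...)
OUTPUT_PATTERN = "outputs_%Y%m%d_%H%M%S"


def folder_timestamp(name: str) -> datetime:
    """Recover the creation time encoded in an outputs_* folder name (hypothetical helper)."""
    return datetime.strptime(name, OUTPUT_PATTERN)


def folder_name(moment: datetime) -> str:
    """Build a folder name the same way generate_backgrounds does."""
    return moment.strftime(OUTPUT_PATTERN)


if __name__ == "__main__":
    first = folder_timestamp("outputs_20230420_235048")
    second = folder_timestamp("outputs_20230421_000752")
    # The two runs listed above are about 17 minutes apart.
    print(second - first)  # prints 0:17:04
```

Because the pattern has one-second resolution, two runs started within the same second would map to the same folder. In that case `shutil.copytree` raises `FileExistsError` on the second run.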

auto_backgrounds.py (ADDED)

@@ -0,0 +1,117 @@


```python
from utils.references import References
from utils.prompts import generate_bg_keywords_prompts, generate_bg_summary_prompts
from utils.gpt_interaction import get_responses, extract_responses, extract_keywords, extract_json
from utils.tex_processing import replace_title
import datetime
import shutil
import time
import logging

TOTAL_TOKENS = 0
TOTAL_PROMPTS_TOKENS = 0
TOTAL_COMPLETION_TOKENS = 0


def log_usage(usage, generating_target, print_out=True):
    global TOTAL_TOKENS
    global TOTAL_PROMPTS_TOKENS
    global TOTAL_COMPLETION_TOKENS

    prompts_tokens = usage['prompt_tokens']
    completion_tokens = usage['completion_tokens']
    total_tokens = usage['total_tokens']

    TOTAL_TOKENS += total_tokens
    TOTAL_PROMPTS_TOKENS += prompts_tokens
    TOTAL_COMPLETION_TOKENS += completion_tokens

    message = f"For generating {generating_target}, {total_tokens} tokens have been used ({prompts_tokens} for prompts; {completion_tokens} for completion). " \
              f"{TOTAL_TOKENS} tokens have been used in total."
    if print_out:
        print(message)
    logging.info(message)

def pipeline(paper, section, save_to_path, model):
    """
    The main pipeline of generating a section.
        1. Generate prompts.
        2. Get responses from AI assistant.
        3. Extract the section text.
        4. Save the text to .tex file.
    :return usage
    """
    print(f"Generating {section}...")
    prompts = generate_bg_summary_prompts(paper, section)
    gpt_response, usage = get_responses(prompts, model)
    output = extract_responses(gpt_response)
    paper["body"][section] = output
    tex_file = save_to_path + f"{section}.tex"
    if section == "abstract":
        with open(tex_file, "w") as f:
            f.write(r"\begin{abstract}")
        with open(tex_file, "a") as f:
            f.write(output)
        with open(tex_file, "a") as f:
            f.write(r"\end{abstract}")
    else:
        with open(tex_file, "w") as f:
            f.write(f"\section{{{section}}}\n")
        with open(tex_file, "a") as f:
            f.write(output)
    time.sleep(20)
    print(f"{section} has been generated. Saved to {tex_file}.")
    return usage



def generate_backgrounds(title, description="", template="ICLR2022", model="gpt-4"):
    paper = {}
    paper_body = {}

    # Create a copy in the outputs folder.
    now = datetime.datetime.now()
    target_name = now.strftime("outputs_%Y%m%d_%H%M%S")
    source_folder = f"latex_templates/{template}"
    destination_folder = f"outputs/{target_name}"
    shutil.copytree(source_folder, destination_folder)

    bibtex_path = destination_folder + "/ref.bib"
    save_to_path = destination_folder +"/"
    replace_title(save_to_path, "A Survey on " + title)
    logging.basicConfig( level=logging.INFO, filename=save_to_path+"generation.log")

    # Generate keywords and references
    print("Initialize the paper information ...")
    prompts = generate_bg_keywords_prompts(title, description)
    gpt_response, usage = get_responses(prompts, model)
    keywords = extract_keywords(gpt_response)
    log_usage(usage, "keywords")

    ref = References(load_papers = "")
    ref.collect_papers(keywords, method="arxiv")
    all_paper_ids = ref.to_bibtex(bibtex_path) #todo: this will used to check if all citations are in this list

    print(f"The paper information has been initialized. References are saved to {bibtex_path}.")

    paper["title"] = title
    paper["description"] = description
    paper["references"] = ref.to_prompts() # to_prompts(top_papers)
    paper["body"] = paper_body
    paper["bibtex"] = bibtex_path

    for section in ["introduction", "related works", "backgrounds"]:
        try:
            usage = pipeline(paper, section, save_to_path, model=model)
            log_usage(usage, section)
        except Exception as e:
            print(f"Failed to generate {section} due to the error: {e}")
    print(f"The paper {title} has been generated. Saved to {save_to_path}.")

if __name__ == "__main__":
    title = "Reinforcement Learning"
    description = ""
    template = "Summary"
    model = "gpt-4"
    # model = "gpt-3.5-turbo"

    generate_backgrounds(title, description, template, model)
```
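`log_usage` keeps its running totals in three module-level globals (`TOTAL_TOKENS`, `TOTAL_PROMPTS_TOKENS`, `TOTAL_COMPLETION_TOKENS`).

The sketch below does the same bookkeeping on an explicit object instead of using `global`. Like `log_usage`, it assumes every `usage` mapping carries `prompt_tokens`, `completion_tokens` and `total_tokens`. `UsageTracker` is a hypothetical name, not something in the repository. The token counts in the usage lines after the block are made-up illustrative values.

```python
import logging
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Running token totals, mirroring the TOTAL_* globals in auto_backgrounds.py."""
    total_tokens: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def log(self, usage: dict, generating_target: str, print_out: bool = True) -> str:
        # Same keys that log_usage reads from the usage mapping.
        prompts = usage["prompt_tokens"]
        completion = usage["completion_tokens"]
        total = usage["total_tokens"]

        self.total_tokens += total
        self.prompt_tokens += prompts
        self.completion_tokens += completion

        message = (
            f"For generating {generating_target}, {total} tokens have been used "
            f"({prompts} for prompts; {completion} for completion). "
            f"{self.total_tokens} tokens have been used in total."
        )
        if print_out:
            print(message)
        logging.info(message)
        return message
```

Example with illustrative numbers: `tracker = UsageTracker()` followed by `tracker.log({"prompt_tokens": 120, "completion_tokens": 30, "total_tokens": 150}, "keywords")`. Keeping the totals on an instance means each generated paper can start from zero without resetting module state.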

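In `pipeline`, each section file is opened up to three times: once with `"w"`, then with `"a"` for the body, and again with `"a"` for the closing `\end{abstract}`.

The sketch below builds the same text in memory and writes it with a single `open` call. `write_section_tex` is a hypothetical helper, not a function in the repository. Using `os.path.join` in place of the original `save_to_path + f"{section}.tex"` concatenation is an assumption; both give the same path when `save_to_path` ends with `/`.

```python
import os


def write_section_tex(save_to_path: str, section: str, output: str) -> str:
    """Write one generated section to <save_to_path>/<section>.tex in one pass.

    Produces the same text as pipeline(): the abstract is wrapped in an
    abstract environment, and every other section gets a section header
    whose title is the section key itself (e.g. "related works").
    """
    tex_file = os.path.join(save_to_path, f"{section}.tex")
    if section == "abstract":
        content = r"\begin{abstract}" + output + r"\end{abstract}"
    else:
        # Escaped backslash avoids the invalid "\s" escape in a normal string.
        content = f"\\section{{{section}}}\n" + output
    with open(tex_file, "w") as f:
        f.write(content)
    return tex_file
```

Writing the file once also means a crash between steps can no longer leave a half-written `abstract.tex` with an unclosed environment.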

auto_draft.py (CHANGED)

```diff
@@ -123,7 +123,8 @@ def generate_draft(title, description="", template="ICLR2022", model="gpt-4"):
     print(f"The paper {title} has been generated. Saved to {save_to_path}.")
 
 if __name__ == "__main__":
-    title = "Training Adversarial Generative Neural Network with Adaptive Dropout Rate"
+    # title = "Training Adversarial Generative Neural Network with Adaptive Dropout Rate"
+    title = "Playing Atari Game with Deep Reinforcement Learning"
     description = ""
     template = "ICLR2022"
     model = "gpt-4"
```

latex_templates/Summary/abstract.tex (ADDED): File without changes

latex_templates/Summary/backgrounds.tex (ADDED): File without changes

latex_templates/Summary/conclusion.tex (ADDED): File without changes

latex_templates/Summary/experiments.tex (ADDED): File without changes

latex_templates/Summary/fancyhdr.sty (ADDED)

@@ -0,0 +1,485 @@


| 1 |

+

% fancyhdr.sty version 3.2

|

| 2 |

+

% Fancy headers and footers for LaTeX.

|

| 3 |

+

% Piet van Oostrum,

|

| 4 |

+

% Dept of Computer and Information Sciences, University of Utrecht,

|

| 5 |

+

% Padualaan 14, P.O. Box 80.089, 3508 TB Utrecht, The Netherlands

|

| 6 |

+

% Telephone: +31 30 2532180. Email: piet@cs.uu.nl

|

| 7 |

+

% ========================================================================

|

| 8 |

+

% LICENCE:

|

| 9 |

+

% This file may be distributed under the terms of the LaTeX Project Public

|

| 10 |

+

% License, as described in lppl.txt in the base LaTeX distribution.

|

| 11 |

+

% Either version 1 or, at your option, any later version.

|

| 12 |

+

% ========================================================================

|

| 13 |

+

% MODIFICATION HISTORY:

|

| 14 |

+

% Sep 16, 1994

|

| 15 |

+

% version 1.4: Correction for use with \reversemargin

|

| 16 |

+

% Sep 29, 1994:

|

| 17 |

+

% version 1.5: Added the \iftopfloat, \ifbotfloat and \iffloatpage commands

|

| 18 |

+

% Oct 4, 1994:

|

| 19 |

+

% version 1.6: Reset single spacing in headers/footers for use with

|

| 20 |

+

% setspace.sty or doublespace.sty

|

| 21 |

+

% Oct 4, 1994:

|

| 22 |

+

% version 1.7: changed \let\@mkboth\markboth to

|

| 23 |

+

% \def\@mkboth{\protect\markboth} to make it more robust

|

| 24 |

+

% Dec 5, 1994:

|

| 25 |

+

% version 1.8: corrections for amsbook/amsart: define \@chapapp and (more

|

| 26 |

+

% importantly) use the \chapter/sectionmark definitions from ps@headings if

|

| 27 |

+

% they exist (which should be true for all standard classes).

|

| 28 |

+

% May 31, 1995:

|

| 29 |

+

% version 1.9: The proposed \renewcommand{\headrulewidth}{\iffloatpage...

|

| 30 |

+

% construction in the doc did not work properly with the fancyplain style.

|

| 31 |

+

% June 1, 1995:

|

| 32 |

+

% version 1.91: The definition of \@mkboth wasn't restored on subsequent

|

| 33 |

+

% \pagestyle{fancy}'s.

|

| 34 |

+

% June 1, 1995:

|

| 35 |

+

% version 1.92: The sequence \pagestyle{fancyplain} \pagestyle{plain}

|

| 36 |

+

% \pagestyle{fancy} would erroneously select the plain version.

|

| 37 |

+

% June 1, 1995:

|

| 38 |

+

% version 1.93: \fancypagestyle command added.

|

| 39 |

+

% Dec 11, 1995:

|

| 40 |

+

% version 1.94: suggested by Conrad Hughes <chughes@maths.tcd.ie>

|

| 41 |

+

% CJCH, Dec 11, 1995: added \footruleskip to allow control over footrule

|

| 42 |

+

% position (old hardcoded value of .3\normalbaselineskip is far too high

|

| 43 |

+

% when used with very small footer fonts).

|

| 44 |

+

% Jan 31, 1996:

|

| 45 |

+

% version 1.95: call \@normalsize in the reset code if that is defined,

|

| 46 |

+

% otherwise \normalsize.

|

| 47 |

+

% this is to solve a problem with ucthesis.cls, as this doesn't

|

| 48 |

+

% define \@currsize. Unfortunately for latex209 calling \normalsize doesn't

|

| 49 |

+

% work as this is optimized to do very little, so there \@normalsize should

|

| 50 |

+

% be called. Hopefully this code works for all versions of LaTeX known to

|

| 51 |

+

% mankind.

|

| 52 |

+

% April 25, 1996:

|

| 53 |

+

% version 1.96: initialize \headwidth to a magic (negative) value to catch

|

| 54 |

+

% most common cases that people change it before calling \pagestyle{fancy}.

|

| 55 |

+

% Note it can't be initialized when reading in this file, because

|

| 56 |

+

% \textwidth could be changed afterwards. This is quite probable.

|

| 57 |

+

% We also switch to \MakeUppercase rather than \uppercase and introduce a

|

| 58 |

+

% \nouppercase command for use in headers. and footers.

|

| 59 |

+

% May 3, 1996:

|

| 60 |

+

% version 1.97: Two changes:

|

| 61 |

+

% 1. Undo the change in version 1.8 (using the pagestyle{headings} defaults

|

| 62 |

+

% for the chapter and section marks. The current version of amsbook and

|

| 63 |

+

% amsart classes don't seem to need them anymore. Moreover the standard

|

| 64 |

+

% latex classes don't use \markboth if twoside isn't selected, and this is

|

| 65 |

+

% confusing as \leftmark doesn't work as expected.

|

| 66 |

+

% 2. include a call to \ps@empty in ps@@fancy. This is to solve a problem

|

| 67 |

+

% in the amsbook and amsart classes, that make global changes to \topskip,

|

| 68 |

+

% which are reset in \ps@empty. Hopefully this doesn't break other things.

|

| 69 |

+

% May 7, 1996:

|

| 70 |

+

% version 1.98:

|

| 71 |

+

% Added % after the line \def\nouppercase

|

| 72 |

+

% May 7, 1996:

|

| 73 |

+

% version 1.99: This is the alpha version of fancyhdr 2.0

|

| 74 |

+

% Introduced the new commands \fancyhead, \fancyfoot, and \fancyhf.

|

| 75 |

+

% Changed \headrulewidth, \footrulewidth, \footruleskip to

|

| 76 |

+

% macros rather than length parameters, In this way they can be

|

| 77 |

+

% conditionalized and they don't consume length registers. There is no need

|

| 78 |

+

% to have them as length registers unless you want to do calculations with

|

| 79 |

+

% them, which is unlikely. Note that this may make some uses of them

|

| 80 |

+

% incompatible (i.e. if you have a file that uses \setlength or \xxxx=)

|

| 81 |

+

% May 10, 1996:

|

| 82 |

+

% version 1.99a:

|

| 83 |

+

% Added a few more % signs

|

| 84 |

+

% May 10, 1996:

|

| 85 |

+

% version 1.99b:

|

| 86 |

+

% Changed the syntax of \f@nfor to be resistent to catcode changes of :=

|

| 87 |

+

% Removed the [1] from the defs of \lhead etc. because the parameter is

|

| 88 |

+

% consumed by the \@[xy]lhead etc. macros.

|

| 89 |

+

% June 24, 1997:

|

| 90 |

+

% version 1.99c:

|

| 91 |

+

% corrected \nouppercase to also include the protected form of \MakeUppercase

|

| 92 |

+

% \global added to manipulation of \headwidth.

|

| 93 |

+

% \iffootnote command added.

|

| 94 |

+

% Some comments added about \@fancyhead and \@fancyfoot.

|

| 95 |

+

% Aug 24, 1998

|

| 96 |

+

% version 1.99d

|

| 97 |

+

% Changed the default \ps@empty to \ps@@empty in order to allow

|

| 98 |

+

% \fancypagestyle{empty} redefinition.

|

| 99 |

+

% Oct 11, 2000

|

| 100 |

+

% version 2.0

|

| 101 |

+

% Added LPPL license clause.

|

| 102 |

+

%

|

| 103 |

+

% A check for \headheight is added. An errormessage is given (once) if the

|

| 104 |

+

% header is too large. Empty headers don't generate the error even if

|

| 105 |

+

% \headheight is very small or even 0pt.

|

| 106 |

+

% Warning added for the use of 'E' option when twoside option is not used.

|

| 107 |

+

% In this case the 'E' fields will never be used.

|

| 108 |

+

%

|

| 109 |

+

% Mar 10, 2002

|

| 110 |

+

% version 2.1beta

|

| 111 |

+

% New command: \fancyhfoffset[place]{length}

|

| 112 |

+

% defines offsets to be applied to the header/footer to let it stick into

|

| 113 |

+

% the margins (if length > 0).

|

| 114 |

+

% place is like in fancyhead, except that only E,O,L,R can be used.

|

| 115 |

+

% This replaces the old calculation based on \headwidth and the marginpar

|

| 116 |

+

% area.

|

| 117 |

+

% \headwidth will be dynamically calculated in the headers/footers when

|

| 118 |

+

% this is used.

|

| 119 |

+

%

|

| 120 |

+

% Mar 26, 2002

|

| 121 |

+

% version 2.1beta2

|

| 122 |

+

% \fancyhfoffset now also takes h,f as possible letters in the argument to

|

| 123 |

+

% allow the header and footer widths to be different.

|

| 124 |

+

% New commands \fancyheadoffset and \fancyfootoffset added comparable to

|

| 125 |

+

% \fancyhead and \fancyfoot.

|

| 126 |

+

% Errormessages and warnings have been made more informative.

|

| 127 |

+

%

|

| 128 |

+

% Dec 9, 2002

|

| 129 |

+

% version 2.1

|

| 130 |

+

% The defaults for \footrulewidth, \plainheadrulewidth and

|

| 131 |

+

% \plainfootrulewidth are changed from \z@skip to 0pt. In this way when

|

| 132 |

+

% someone inadvertantly uses \setlength to change any of these, the value

|

| 133 |

+

% of \z@skip will not be changed, rather an errormessage will be given.

|

| 134 |

+

|

| 135 |

+

% March 3, 2004

|

| 136 |

+

% Release of version 3.0

|

| 137 |

+

|

| 138 |

+

% Oct 7, 2004

|

| 139 |

+

% version 3.1

|

| 140 |

+

% Added '\endlinechar=13' to \fancy@reset to prevent problems with

|

| 141 |

+

% includegraphics in header when verbatiminput is active.

|

| 142 |

+

|

| 143 |

+

% March 22, 2005

|

| 144 |

+

% version 3.2

|

| 145 |

+

% reset \everypar (the real one) in \fancy@reset because spanish.ldf does

|

| 146 |

+

% strange things with \everypar between << and >>.

|

| 147 |

+

|

| 148 |

+

\def\ifancy@mpty#1{\def\temp@a{#1}\ifx\temp@a\@empty}

|

| 149 |

+

|

| 150 |

+

\def\fancy@def#1#2{\ifancy@mpty{#2}\fancy@gbl\def#1{\leavevmode}\else

|

| 151 |

+

\fancy@gbl\def#1{#2\strut}\fi}

|

| 152 |

+

|

| 153 |

+

\let\fancy@gbl\global

|

| 154 |

+

|

| 155 |

+

\def\@fancyerrmsg#1{%

|

| 156 |

+

\ifx\PackageError\undefined

|

| 157 |

+

\errmessage{#1}\else

|

| 158 |

+

\PackageError{Fancyhdr}{#1}{}\fi}

|

| 159 |

+

\def\@fancywarning#1{%

|

| 160 |

+

\ifx\PackageWarning\undefined

|

| 161 |

+

\errmessage{#1}\else

|

| 162 |

+

\PackageWarning{Fancyhdr}{#1}{}\fi}

|

| 163 |

+

|

| 164 |

+

% Usage: \@forc \var{charstring}{command to be executed for each char}

|

| 165 |

+

% This is similar to LaTeX's \@tfor, but expands the charstring.

|

| 166 |

+

|

| 167 |

+

\def\@forc#1#2#3{\expandafter\f@rc\expandafter#1\expandafter{#2}{#3}}

|

| 168 |

+

\def\f@rc#1#2#3{\def\temp@ty{#2}\ifx\@empty\temp@ty\else

|

| 169 |

+

\f@@rc#1#2\f@@rc{#3}\fi}

|

| 170 |

+

\def\f@@rc#1#2#3\f@@rc#4{\def#1{#2}#4\f@rc#1{#3}{#4}}

|

| 171 |

+

|

| 172 |

+

% Usage: \f@nfor\name:=list\do{body}

|

| 173 |

+

% Like LaTeX's \@for but an empty list is treated as a list with an empty

|

| 174 |

+

% element

|

| 175 |

+

|

| 176 |

+

\newcommand{\f@nfor}[3]{\edef\@fortmp{#2}%

|

| 177 |

+

\expandafter\@forloop#2,\@nil,\@nil\@@#1{#3}}

|

| 178 |

+

|

| 179 |

+

% Usage: \def@ult \cs{defaults}{argument}

|

| 180 |

+

% sets \cs to the characters from defaults appearing in argument

|

| 181 |

+

% or defaults if it would be empty. All characters are lowercased.

|

| 182 |

+

|

| 183 |

+

\newcommand\def@ult[3]{%

|

| 184 |

+

\edef\temp@a{\lowercase{\edef\noexpand\temp@a{#3}}}\temp@a

|

| 185 |

+

\def#1{}%

|

| 186 |

+

\@forc\tmpf@ra{#2}%

|

| 187 |

+

{\expandafter\if@in\tmpf@ra\temp@a{\edef#1{#1\tmpf@ra}}{}}%

|

| 188 |

+

\ifx\@empty#1\def#1{#2}\fi}

|

| 189 |

+

%

|

| 190 |

+

% \if@in <char><set><truecase><falsecase>

|

| 191 |

+

%

|

| 192 |

+

\newcommand{\if@in}[4]{%

|

| 193 |

+

\edef\temp@a{#2}\def\temp@b##1#1##2\temp@b{\def\temp@b{##1}}%

|

| 194 |

+

\expandafter\temp@b#2#1\temp@b\ifx\temp@a\temp@b #4\else #3\fi}

|

| 195 |

+

|

| 196 |

+

\newcommand{\fancyhead}{\@ifnextchar[{\f@ncyhf\fancyhead h}%

|

| 197 |

+

{\f@ncyhf\fancyhead h[]}}

|

| 198 |

+

\newcommand{\fancyfoot}{\@ifnextchar[{\f@ncyhf\fancyfoot f}%

|

| 199 |

+

{\f@ncyhf\fancyfoot f[]}}

|

| 200 |

+

\newcommand{\fancyhf}{\@ifnextchar[{\f@ncyhf\fancyhf{}}%

|

| 201 |

+

{\f@ncyhf\fancyhf{}[]}}

|

| 202 |

+

|

| 203 |

+

% New commands for offsets added

|

| 204 |

+

|

| 205 |

+

\newcommand{\fancyheadoffset}{\@ifnextchar[{\f@ncyhfoffs\fancyheadoffset h}%

|

| 206 |

+

{\f@ncyhfoffs\fancyheadoffset h[]}}

|

| 207 |

+

\newcommand{\fancyfootoffset}{\@ifnextchar[{\f@ncyhfoffs\fancyfootoffset f}%

|

| 208 |

+

{\f@ncyhfoffs\fancyfootoffset f[]}}

|

| 209 |

+

\newcommand{\fancyhfoffset}{\@ifnextchar[{\f@ncyhfoffs\fancyhfoffset{}}%

|

| 210 |

+

{\f@ncyhfoffs\fancyhfoffset{}[]}}

|

| 211 |

+

|

| 212 |

+

% The header and footer fields are stored in command sequences with

|

| 213 |

+

% names of the form: \f@ncy<x><y><z> with <x> for [eo], <y> from [lcr]

|

| 214 |

+

% and <z> from [hf].

|

| 215 |

+

|

| 216 |

+

\def\f@ncyhf#1#2[#3]#4{%

|

| 217 |

+

\def\temp@c{}%

|

| 218 |

+

\@forc\tmpf@ra{#3}%

|

| 219 |

+

{\expandafter\if@in\tmpf@ra{eolcrhf,EOLCRHF}%

|

| 220 |

+

{}{\edef\temp@c{\temp@c\tmpf@ra}}}%

|

| 221 |

+

\ifx\@empty\temp@c\else

|

| 222 |

+

\@fancyerrmsg{Illegal char `\temp@c' in \string#1 argument:

|

| 223 |

+

[#3]}%

|

| 224 |

+

\fi

|

| 225 |

+

\f@nfor\temp@c{#3}%

|

| 226 |

+

{\def@ult\f@@@eo{eo}\temp@c

|

| 227 |

+

\if@twoside\else

|

| 228 |

+

\if\f@@@eo e\@fancywarning

|

| 229 |

+

{\string#1's `E' option without twoside option is useless}\fi\fi

|

| 230 |

+

\def@ult\f@@@lcr{lcr}\temp@c

|

| 231 |

+

\def@ult\f@@@hf{hf}{#2\temp@c}%

|

| 232 |

+

\@forc\f@@eo\f@@@eo

|

| 233 |

+

{\@forc\f@@lcr\f@@@lcr

|

| 234 |

+

{\@forc\f@@hf\f@@@hf

|

| 235 |

+

{\expandafter\fancy@def\csname

|

| 236 |

+

f@ncy\f@@eo\f@@lcr\f@@hf\endcsname

|

| 237 |

+

{#4}}}}}}

|

| 238 |

+

|

| 239 |

+

\def\f@ncyhfoffs#1#2[#3]#4{%

|

| 240 |

+

\def\temp@c{}%

|

| 241 |

+

\@forc\tmpf@ra{#3}%

|

| 242 |

+

{\expandafter\if@in\tmpf@ra{eolrhf,EOLRHF}%

|

| 243 |

+

{}{\edef\temp@c{\temp@c\tmpf@ra}}}%

|

| 244 |

+

\ifx\@empty\temp@c\else

|

| 245 |

+

\@fancyerrmsg{Illegal char `\temp@c' in \string#1 argument:

|

| 246 |

+

[#3]}%

|

| 247 |

+

\fi

|

| 248 |

+

\f@nfor\temp@c{#3}%

|

| 249 |

+

{\def@ult\f@@@eo{eo}\temp@c

|

| 250 |

+

\if@twoside\else

|

| 251 |

+

\if\f@@@eo e\@fancywarning

|

| 252 |

+

{\string#1's `E' option without twoside option is useless}\fi\fi

|

| 253 |

+

\def@ult\f@@@lcr{lr}\temp@c

|

| 254 |

+

\def@ult\f@@@hf{hf}{#2\temp@c}%

|

| 255 |

+

\@forc\f@@eo\f@@@eo

|

| 256 |

+

{\@forc\f@@lcr\f@@@lcr

|

| 257 |

+

{\@forc\f@@hf\f@@@hf

|

| 258 |

+

{\expandafter\setlength\csname

|

| 259 |

+

f@ncyO@\f@@eo\f@@lcr\f@@hf\endcsname

|

| 260 |

+

{#4}}}}}%

|

| 261 |

+

\fancy@setoffs}

|

| 262 |

+

|

| 263 |

+

% Fancyheadings version 1 commands. These are more or less deprecated,

|

| 264 |

+

% but they continue to work.

|

| 265 |

+

|

| 266 |

+

\newcommand{\lhead}{\@ifnextchar[{\@xlhead}{\@ylhead}}

|

| 267 |

+

\def\@xlhead[#1]#2{\fancy@def\f@ncyelh{#1}\fancy@def\f@ncyolh{#2}}

|

| 268 |

+

\def\@ylhead#1{\fancy@def\f@ncyelh{#1}\fancy@def\f@ncyolh{#1}}

|

| 269 |

+

|

| 270 |

+

\newcommand{\chead}{\@ifnextchar[{\@xchead}{\@ychead}}

|

| 271 |

+

\def\@xchead[#1]#2{\fancy@def\f@ncyech{#1}\fancy@def\f@ncyoch{#2}}

|

| 272 |

+

\def\@ychead#1{\fancy@def\f@ncyech{#1}\fancy@def\f@ncyoch{#1}}

|

| 273 |

+

|

| 274 |

+

\newcommand{\rhead}{\@ifnextchar[{\@xrhead}{\@yrhead}}

|

| 275 |

+

\def\@xrhead[#1]#2{\fancy@def\f@ncyerh{#1}\fancy@def\f@ncyorh{#2}}

|

| 276 |

+

\def\@yrhead#1{\fancy@def\f@ncyerh{#1}\fancy@def\f@ncyorh{#1}}

|

| 277 |

+

|

| 278 |

+

\newcommand{\lfoot}{\@ifnextchar[{\@xlfoot}{\@ylfoot}}

|

| 279 |

+

\def\@xlfoot[#1]#2{\fancy@def\f@ncyelf{#1}\fancy@def\f@ncyolf{#2}}

|

| 280 |

+

\def\@ylfoot#1{\fancy@def\f@ncyelf{#1}\fancy@def\f@ncyolf{#1}}

|

| 281 |

+

|

| 282 |

+

\newcommand{\cfoot}{\@ifnextchar[{\@xcfoot}{\@ycfoot}}

|

| 283 |

+

\def\@xcfoot[#1]#2{\fancy@def\f@ncyecf{#1}\fancy@def\f@ncyocf{#2}}

|

| 284 |

+

\def\@ycfoot#1{\fancy@def\f@ncyecf{#1}\fancy@def\f@ncyocf{#1}}

|

| 285 |

+

|

| 286 |

+

\newcommand{\rfoot}{\@ifnextchar[{\@xrfoot}{\@yrfoot}}

|

| 287 |

+

\def\@xrfoot[#1]#2{\fancy@def\f@ncyerf{#1}\fancy@def\f@ncyorf{#2}}

|

| 288 |

+

\def\@yrfoot#1{\fancy@def\f@ncyerf{#1}\fancy@def\f@ncyorf{#1}}

|

| 289 |

+

|

| 290 |

+

\newlength{\fancy@headwidth}

|

| 291 |

+

\let\headwidth\fancy@headwidth

|

| 292 |

+

\newlength{\f@ncyO@elh}

|

| 293 |

+

\newlength{\f@ncyO@erh}

|

| 294 |

+

\newlength{\f@ncyO@olh}

|

| 295 |

+

\newlength{\f@ncyO@orh}

|

| 296 |

+

\newlength{\f@ncyO@elf}

|

| 297 |

+

\newlength{\f@ncyO@erf}

|

| 298 |

+

\newlength{\f@ncyO@olf}

|

| 299 |

+

\newlength{\f@ncyO@orf}

|

| 300 |

+

\newcommand{\headrulewidth}{0.4pt}

|

| 301 |

+

\newcommand{\footrulewidth}{0pt}

|

| 302 |

+

\newcommand{\footruleskip}{.3\normalbaselineskip}

|

| 303 |

+

|

| 304 |

+

% Fancyplain stuff shouldn't be used anymore (rather
% \fancypagestyle{plain} should be used), but it must be present for
% compatibility reasons.

\newcommand{\plainheadrulewidth}{0pt}
\newcommand{\plainfootrulewidth}{0pt}
\newif\if@fancyplain \@fancyplainfalse
\def\fancyplain#1#2{\if@fancyplain#1\else#2\fi}

\headwidth=-123456789sp %magic constant: marks \headwidth as not yet initialized (checked in \ps@fancy)

% Command to reset various things in the headers:
% among other things, single spacing (taken from setspace.sty)
% and the catcode of ^^M (so that epsf files in the header work if a
% verbatim crosses a page boundary).
% It also defines a \nouppercase command that disables \uppercase and
% \MakeUppercase. It can only be used in the headers and footers.
\let\fnch@everypar\everypar% save real \everypar because of spanish.ldf
\def\fancy@reset{\fnch@everypar{}\restorecr\endlinechar=13
\def\baselinestretch{1}%
\def\nouppercase##1{{\let\uppercase\relax\let\MakeUppercase\relax
\expandafter\let\csname MakeUppercase \endcsname\relax##1}}%
\ifx\undefined\@newbaseline% NFSS not present; 2.09 or 2e
\ifx\@normalsize\undefined \normalsize % for ucthesis.cls
\else \@normalsize \fi
\else% NFSS (2.09) present
\@newbaseline%
\fi}
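% Usage sketch (an illustration for a document preamble, not part of
% this package): \nouppercase only exists while \fancy@reset is active,
% i.e. inside header and footer text, so it goes inside the arguments of
% \fancyhead/\fancyfoot, e.g. to keep the section mark in mixed case:
%   \fancyhead[L]{\nouppercase{\leftmark}}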

% Initialization of the head and foot text.

% The default values still contain \fancyplain for compatibility.
\fancyhf{} % clear all
% lefthead empty on ``plain'' pages, \rightmark on even, \leftmark on odd pages
% righthead empty on ``plain'' pages, \leftmark on even, \rightmark on odd pages
\if@twoside
\fancyhead[el,or]{\fancyplain{}{\sl\rightmark}}
\fancyhead[er,ol]{\fancyplain{}{\sl\leftmark}}
\else
\fancyhead[l]{\fancyplain{}{\sl\rightmark}}
\fancyhead[r]{\fancyplain{}{\sl\leftmark}}
\fi
\fancyfoot[c]{\rm\thepage} % page number
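% Usage sketch (an illustration for a document preamble, not part of
% this package): documents usually clear these defaults and set their
% own fields. In the optional argument, E/O select even/odd pages and
% L/C/R select the left/center/right position:
%   \pagestyle{fancy}
%   \fancyhf{}
%   \fancyhead[LE,RO]{\thepage}
%   \fancyhead[RE,LO]{\leftmark}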

% Use box 0 as a temp box and dimen 0 as temp dimen.
% This is safe because this code is always
% used inside another box, and therefore the changes are local.

\def\@fancyvbox#1#2{\setbox0\vbox{#2}\ifdim\ht0>#1\@fancywarning
{\string#1 is too small (\the#1): ^^J Make it at least \the\ht0.^^J
We now make it that large for the rest of the document.^^J
This may cause the page layout to be inconsistent, however\@gobble}%
\dimen0=#1\global\setlength{#1}{\ht0}\ht0=\dimen0\fi
\box0}

% Put together a header or footer given the left, center and
% right text, fillers at left and right and a rule.
% The \lap commands put the text into an hbox of zero size,
% so overlapping text does not generate an error message.
% These macros have 5 parameters:
% 1. LEFTSIDE BEARING. This determines at which side the header will stick
%    out. When \fancyhfoffset is used this calculates \headwidth, otherwise
%    it is \hss or \relax (after expansion).
% 2. \f@ncyolh, \f@ncyelh, \f@ncyolf or \f@ncyelf. This is the left component.
% 3. \f@ncyoch, \f@ncyech, \f@ncyocf or \f@ncyecf. This is the middle component.
% 4. \f@ncyorh, \f@ncyerh, \f@ncyorf or \f@ncyerf. This is the right component.
% 5. RIGHTSIDE BEARING. This is always \relax or \hss (after expansion).

\def\@fancyhead#1#2#3#4#5{#1\hbox to\headwidth{\fancy@reset
\@fancyvbox\headheight{\hbox
{\rlap{\parbox[b]{\headwidth}{\raggedright#2}}\hfill
\parbox[b]{\headwidth}{\centering#3}\hfill
\llap{\parbox[b]{\headwidth}{\raggedleft#4}}}\headrule}}#5}

\def\@fancyfoot#1#2#3#4#5{#1\hbox to\headwidth{\fancy@reset
\@fancyvbox\footskip{\footrule
\hbox{\rlap{\parbox[t]{\headwidth}{\raggedright#2}}\hfill
\parbox[t]{\headwidth}{\centering#3}\hfill
\llap{\parbox[t]{\headwidth}{\raggedleft#4}}}}}#5}

\def\headrule{{\if@fancyplain\let\headrulewidth\plainheadrulewidth\fi
\hrule\@height\headrulewidth\@width\headwidth \vskip-\headrulewidth}}

\def\footrule{{\if@fancyplain\let\footrulewidth\plainfootrulewidth\fi
\vskip-\footruleskip\vskip-\footrulewidth
\hrule\@width\headwidth\@height\footrulewidth\vskip\footruleskip}}

\def\ps@fancy{%
\@ifundefined{@chapapp}{\let\@chapapp\chaptername}{}%for amsbook
%
% Define \MakeUppercase for old LaTeXen.
% Note: we used \def rather than \let, so that \let\uppercase\relax (from
% the version 1 documentation) will still work.
%
\@ifundefined{MakeUppercase}{\def\MakeUppercase{\uppercase}}{}%
\@ifundefined{chapter}{\def\sectionmark##1{\markboth
{\MakeUppercase{\ifnum \c@secnumdepth>\z@
\thesection\hskip 1em\relax \fi ##1}}{}}%
\def\subsectionmark##1{\markright {\ifnum \c@secnumdepth >\@ne
\thesubsection\hskip 1em\relax \fi ##1}}}%
{\def\chaptermark##1{\markboth {\MakeUppercase{\ifnum \c@secnumdepth>\m@ne
\@chapapp\ \thechapter. \ \fi ##1}}{}}%
\def\sectionmark##1{\markright{\MakeUppercase{\ifnum \c@secnumdepth >\z@
\thesection. \ \fi ##1}}}}%
%\csname ps@headings\endcsname % use \ps@headings defaults if they exist
\ps@@fancy
\gdef\ps@fancy{\@fancyplainfalse\ps@@fancy}%
% Initialize \headwidth if the user didn't
%
\ifdim\headwidth<0sp
%
% This catches the case that \headwidth hasn't been initialized and the
% case that the user added something to \headwidth in the expectation that
% it was initialized to \textwidth. We compensate for this now. This fails if
% the user intended to multiply it by a factor. But that case is more
% likely done by saying something like \headwidth=1.2\textwidth.
% The doc says you have to change \headwidth after the first call to
% \pagestyle{fancy}. This code is just to catch the most common cases where
% that requirement is violated.
%
\global\advance\headwidth123456789sp\global\advance\headwidth\textwidth
\fi}
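% Usage sketch (an illustration for a document preamble, not part of
% this package): following the rule stated above, \headwidth is adjusted
% only after the first \pagestyle{fancy}, e.g. to let headers and footers
% also span the margin-note area:
%   \pagestyle{fancy}
%   \addtolength{\headwidth}{\marginparsep}
%   \addtolength{\headwidth}{\marginparwidth}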

\def\ps@fancyplain{\ps@fancy \let\ps@plain\ps@plain@fancy}
\def\ps@plain@fancy{\@fancyplaintrue\ps@@fancy}
\let\ps@@empty\ps@empty
\def\ps@@fancy{%
\ps@@empty % This is for amsbook/amsart, which do strange things with \topskip
\def\@mkboth{\protect\markboth}%
\def\@oddhead{\@fancyhead\fancy@Oolh\f@ncyolh\f@ncyoch\f@ncyorh\fancy@Oorh}%
\def\@oddfoot{\@fancyfoot\fancy@Oolf\f@ncyolf\f@ncyocf\f@ncyorf\fancy@Oorf}%
\def\@evenhead{\@fancyhead\fancy@Oelh\f@ncyelh\f@ncyech\f@ncyerh\fancy@Oerh}%
\def\@evenfoot{\@fancyfoot\fancy@Oelf\f@ncyelf\f@ncyecf\f@ncyerf\fancy@Oerf}%
}

% Default definitions for compatibility mode:
% These cause the header/footer to take the defined \headwidth as width
% and to shift in the direction of the marginpar area.

\def\fancy@Oolh{\if@reversemargin\hss\else\relax\fi}
\def\fancy@Oorh{\if@reversemargin\relax\else\hss\fi}
\let\fancy@Oelh\fancy@Oorh
\let\fancy@Oerh\fancy@Oolh

\let\fancy@Oolf\fancy@Oolh
\let\fancy@Oorf\fancy@Oorh
\let\fancy@Oelf\fancy@Oelh
\let\fancy@Oerf\fancy@Oerh

% New definitions for the use of \fancyhfoffset
% These calculate the \headwidth from \textwidth and the specified offsets.

\def\fancy@offsolh{\headwidth=\textwidth\advance\headwidth\f@ncyO@olh
\advance\headwidth\f@ncyO@orh\hskip-\f@ncyO@olh}
\def\fancy@offselh{\headwidth=\textwidth\advance\headwidth\f@ncyO@elh
\advance\headwidth\f@ncyO@erh\hskip-\f@ncyO@elh}

\def\fancy@offsolf{\headwidth=\textwidth\advance\headwidth\f@ncyO@olf
\advance\headwidth\f@ncyO@orf\hskip-\f@ncyO@olf}
\def\fancy@offself{\headwidth=\textwidth\advance\headwidth\f@ncyO@elf
\advance\headwidth\f@ncyO@erf\hskip-\f@ncyO@elf}

\def\fancy@setoffs{%
% Just in case \let\headwidth\textwidth was used
\fancy@gbl\let\headwidth\fancy@headwidth
\fancy@gbl\let\fancy@Oolh\fancy@offsolh
\fancy@gbl\let\fancy@Oelh\fancy@offselh
\fancy@gbl\let\fancy@Oorh\hss
\fancy@gbl\let\fancy@Oerh\hss
\fancy@gbl\let\fancy@Oolf\fancy@offsolf
\fancy@gbl\let\fancy@Oelf\fancy@offself
\fancy@gbl\let\fancy@Oorf\hss
\fancy@gbl\let\fancy@Oerf\hss}
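% Usage sketch (an illustration for a document preamble, not part of
% this package; \fancyhfoffset is defined elsewhere in fancyhdr): once
% offsets are set, the helpers above compute, e.g. for odd-page headers,
%   \headwidth = \textwidth + left offset + right offset
% and shift the box left by the left offset. For example, to let headers
% and footers stick out into the outer margin on both page parities:
%   \fancyhfoffset[LE,RO]{\marginparsep}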

\newif\iffootnote
\let\latex@makecol\@makecol
\def\@makecol{\ifvoid\footins\footnotetrue\else\footnotefalse\fi
\let\topfloat\@toplist\let\botfloat\@botlist\latex@makecol}
\def\iftopfloat#1#2{\ifx\topfloat\empty #2\else #1\fi}
\def\ifbotfloat#1#2{\ifx\botfloat\empty #2\else #1\fi}
\def\iffloatpage#1#2{\if@fcolmade #1\else #2\fi}
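% Usage sketch (an illustration for a document preamble, not part of
% this package): each conditional above takes its first argument when the
% condition holds (a float page, or a float at the top/bottom of the
% page) and its second argument otherwise, e.g.
%   \fancyhead[C]{\iffloatpage{}{\leftmark}}  % empty on float-only pages
%   \fancyhead[R]{\iftopfloat{}{\rightmark}}  % omit mark under a top float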

\newcommand{\fancypagestyle}[2]{%
\@namedef{ps@#1}{\let\fancy@gbl\relax#2\relax\ps@fancy}}
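% Usage sketch (an illustration for a document preamble, not part of
% this package): as recommended earlier in place of \fancyplain, the
% ``plain'' style (used e.g. on chapter opening pages) can be redefined
% with \fancypagestyle; the second argument runs before \ps@fancy:
%   \fancypagestyle{plain}{%
%     \fancyhf{}%
%     \fancyfoot[C]{\thepage}%
%     \renewcommand{\headrulewidth}{0pt}}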

latex_templates/Summary/iclr2022_conference.bst

ADDED


@@ -0,0 +1,1440 @@
