Spaces:

Runtime error

Runtime error

anonymous

commited on

Commit

•

a2dba58

1

Parent(s):

9ac2c3a

first commit without models

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +65 -5

- app.py +192 -0

- auxiliary/notebooks_and_reporting/generate_figures.py +175 -0

- auxiliary/notebooks_and_reporting/print_table_results.py +0 -0

- auxiliary/notebooks_and_reporting/print_tests_shared_weights.py +222 -0

- auxiliary/notebooks_and_reporting/results_per_timestep.pdf +0 -0

- auxiliary/notebooks_and_reporting/results_per_timestep_dice.pdf +0 -0

- auxiliary/notebooks_and_reporting/results_per_timestep_prec_recall.pdf +0 -0

- auxiliary/notebooks_and_reporting/results_shared_weights.pdf +0 -0

- auxiliary/notebooks_and_reporting/visualisations.pdf +0 -0

- auxiliary/notebooks_and_reporting/visualisations.py +162 -0

- auxiliary/notebooks_and_reporting/visualisations2.pdf +0 -0

- auxiliary/postprocessing/run_tests.py +162 -0

- auxiliary/postprocessing/testing_shared_weights.py +145 -0

- auxiliary/preprocessing/CXR14_preprocessing_separate_data.py +31 -0

- auxiliary/preprocessing/JSRT_preprocessing_separate_data.py +26 -0

- config.py +84 -0

- data/JSRT_test_split.csv +26 -0

- data/JSRT_train_split.csv +198 -0

- data/JSRT_val_split.csv +26 -0

- data/correspondence_with_chestXray8.csv +101 -0

- data/test_split.csv +0 -0

- data/train_split.csv +0 -0

- data/val_split.csv +0 -0

- dataloaders/CXR14.py +74 -0

- dataloaders/JSRT.py +94 -0

- dataloaders/Montgomery.py +61 -0

- dataloaders/NIH.py +50 -0

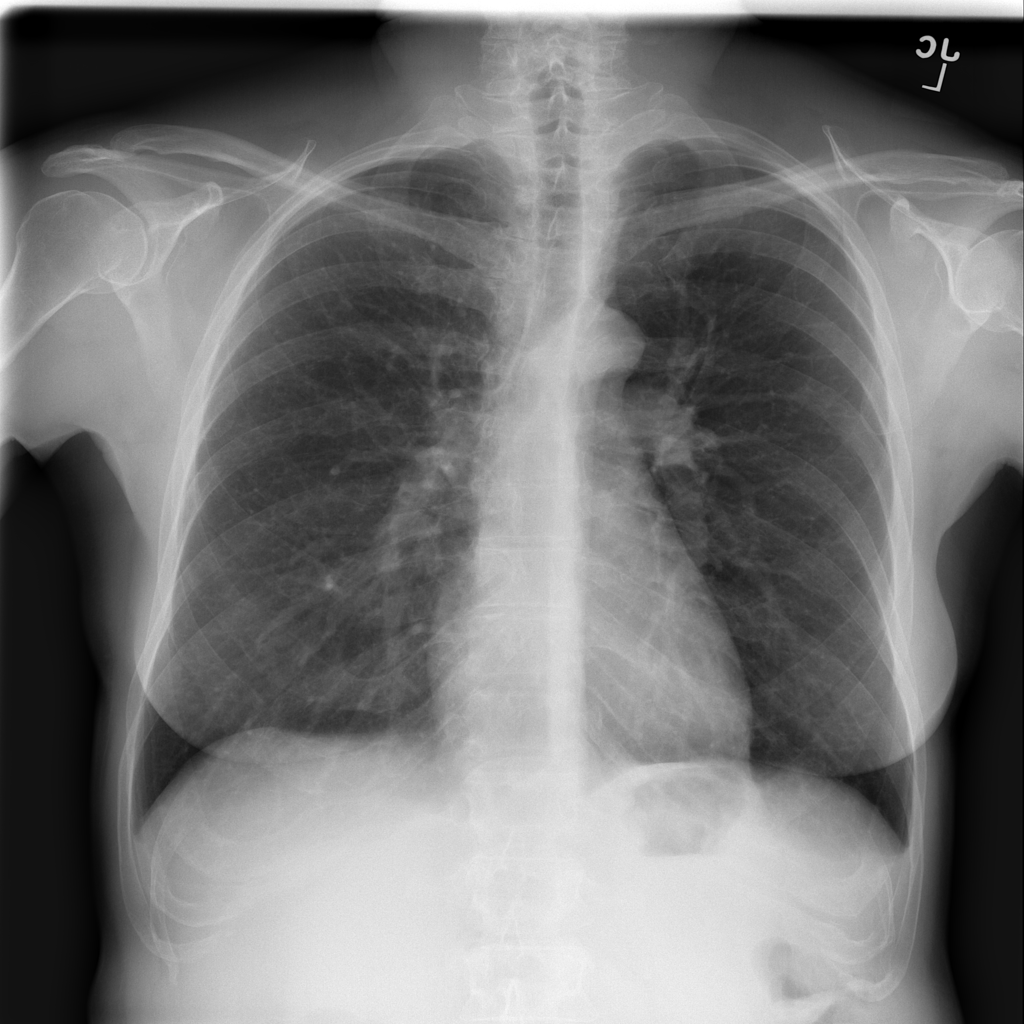

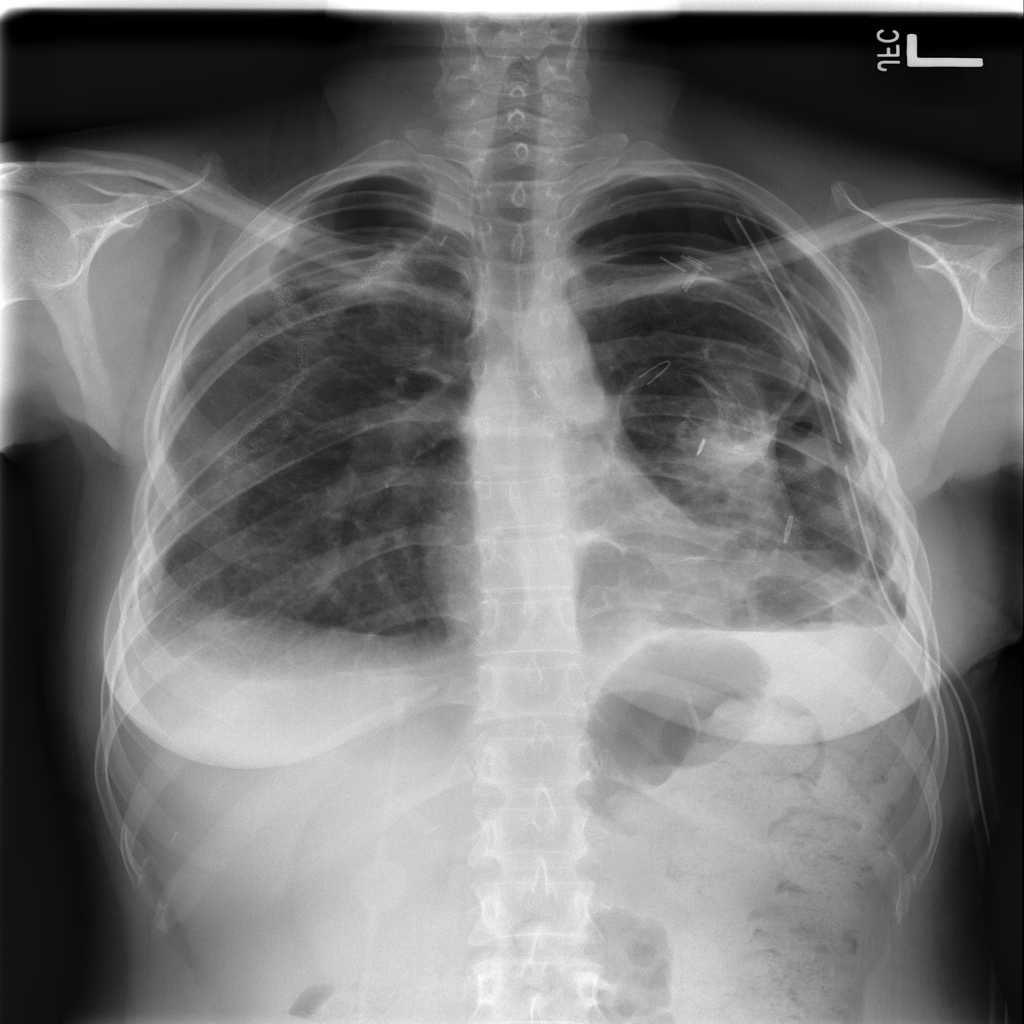

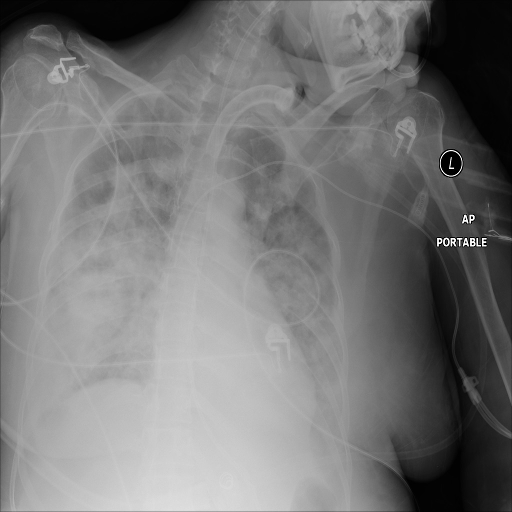

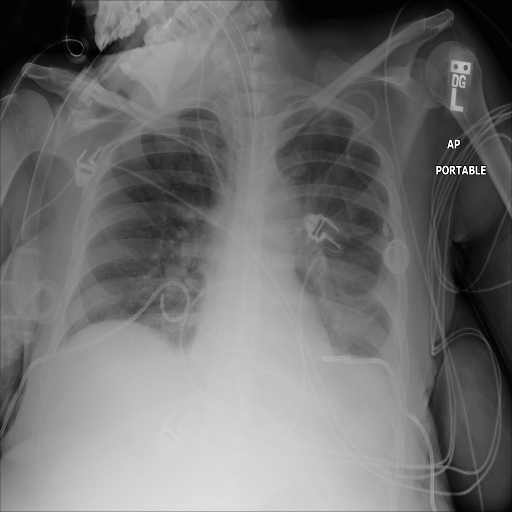

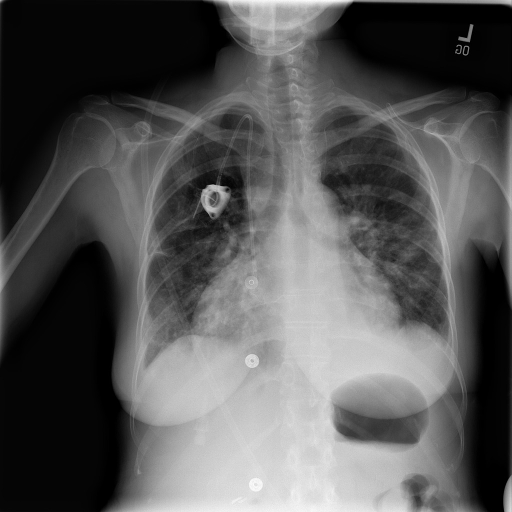

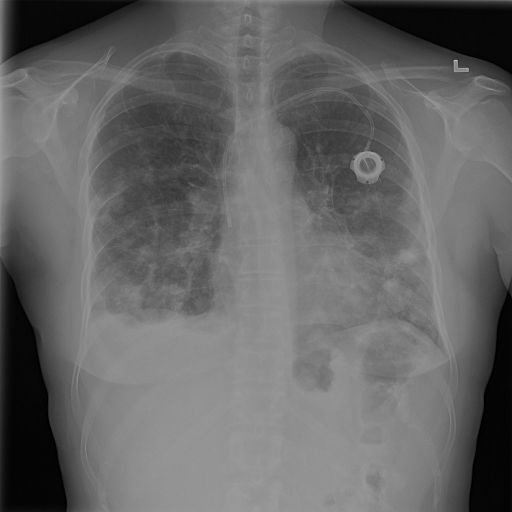

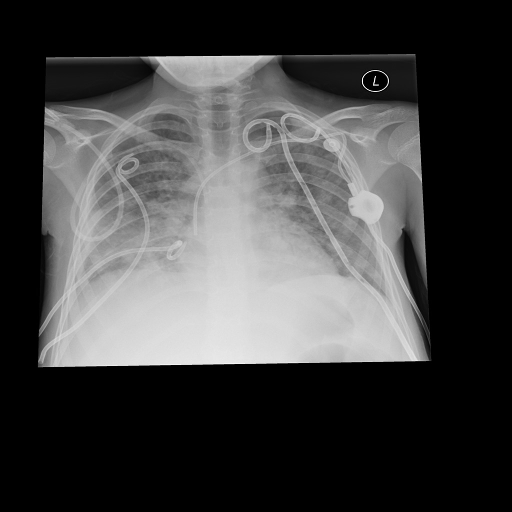

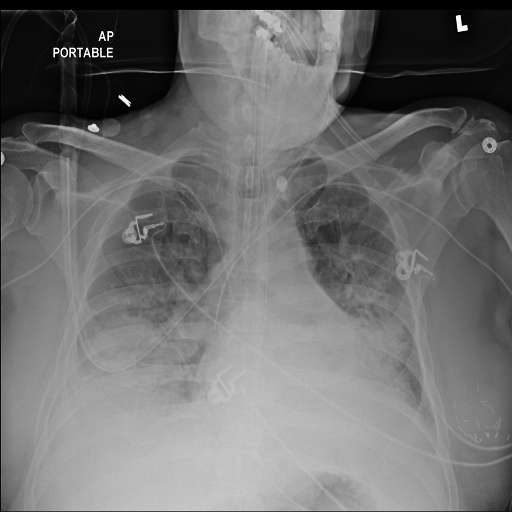

- img_examples/00015548_000.png +0 -0

- img_examples/00016568_041.png +0 -0

- img_examples/NIH_0006.png +0 -0

- img_examples/NIH_0012.png +0 -0

- img_examples/NIH_0014.png +0 -0

- img_examples/NIH_0019.png +0 -0

- img_examples/NIH_0024.png +0 -0

- img_examples/NIH_0035.png +0 -0

- img_examples/NIH_0051.png +0 -0

- img_examples/NIH_0055.png +0 -0

- img_examples/NIH_0076.png +0 -0

- img_examples/NIH_0094.png +0 -0

- img_examples/TEDM-model-visualisation.png +0 -0

- models/datasetDM_model.py +88 -0

- models/diffusion_model.py +301 -0

- models/global_local_cl.py +111 -0

- models/unet_model.py +375 -0

- requirements.txt +16 -0

- train.py +56 -0

- trainers/datasetDM_per_step.py +115 -0

- trainers/finetune_glob_cl.py +172 -0

- trainers/finetune_glob_loc_cl.py +172 -0

README.md

CHANGED

|

@@ -1,8 +1,8 @@

|

|

| 1 |

---

|

| 2 |

-

title: TEDM

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 3.35.2

|

| 8 |

app_file: app.py

|

|

@@ -10,4 +10,64 @@ pinned: false

|

|

| 10 |

license: mit

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

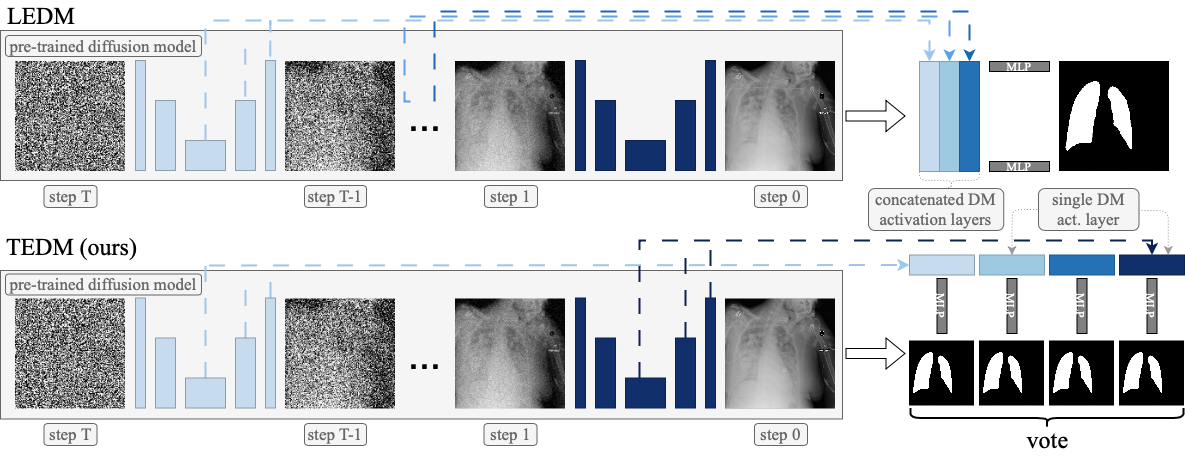

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: TEDM

|

| 3 |

+

emoji: 🐨

|

| 4 |

+

colorFrom: purple

|

| 5 |

+

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 3.35.2

|

| 8 |

app_file: app.py

|

|

|

|

| 10 |

license: mit

|

| 11 |

---

|

| 12 |

|

| 13 |

+

# Timestep ensembling diffusion models for semi-supervised image segmentation

|

| 14 |

+

|

| 15 |

+

Results

|

| 16 |

+

|

| 17 |

+

| Training data size | 1 (1\%) | 3 (2\%) | 6 (3\%) | 12 (96\%) | 197 (100\%) |

|

| 18 |

+

|:------------------|:----------------------------------------------:|:-----------------------:|:-----------------------:|:-----------------------:|:-----------------------:|

|

| 19 |

+

| |JSRT (labelled in-domain) |

|

| 20 |

+

| Baseline | 84.4 $\pm$ 5.4 | 91.7 $\pm$ 3.7 | 93.3 $\pm$ 2.9 | 95.3 $\pm$ 2.3 | 97.3 $\pm$ 1.2 |

|

| 21 |

+

| LEDM | 90.8 $\pm$ 3.5 | 94.1 $\pm$ 1.6 | 95.5 $\pm$ 1.4 | 96.4 $\pm$ 1.4 | 97.0 $\pm$ 1.3 |

|

| 22 |

+

| LEDMe | **93.7 $\pm$ 2.6** | **95.5 $\pm$ 1.5** | **96.7 $\pm$ 1.5** | **97.0 $\pm$ 1.1** | **97.6 $\pm$ 1.2** |

|

| 23 |

+

| Ours | **93.1 $\pm$ 3.4** | 94.8 $\pm$ 1.4 | 95.8 $\pm$ 1.2 | 96.6 $\pm$ 1.1 | 97.3 $\pm$ 1.2 |

|

| 24 |

+

| |NIH (unlabelled in-domain) |

|

| 25 |

+

| Baseline | 68.5 $\pm$ 12.8 | 71.2 $\pm$ 15.1 | 71.4 $\pm$ 15.9 | 77.8 $\pm$ 14.0 | 81.5 $\pm$ 12.7 |

|

| 26 |

+

| LEDM | 63.3 $\pm$ 12.2 | 78.0 $\pm$ 10.1 | 81.2 $\pm$ 9.3 | 85.9 $\pm$ 7.4 | 88.9 $\pm$ 5.9 |

|

| 27 |

+

| LEDMe | 70.3 $\pm$ 11.4 | 78.3 $\pm$ 9.8 | 83.0 $\pm$ 8.6 | 84.4 $\pm$ 8.1 | 90.1 $\pm$ 5.3 |

|

| 28 |

+

| Ours | **80.3 $\pm$ 9.0** | **86.4 $\pm$ 6.2** | **89.2 $\pm$ 5.5** | **91.3 $\pm$ 4.1** | **92.9 $\pm$ 3.2** |

|

| 29 |

+

| | Montgomery (out-of-domain) |

|

| 30 |

+

| Baseline | 77.1 $\pm$ 12.0 | 83.0 $\pm$ 12.2 | 80.9 $\pm$ 14.7 | 83.8 $\pm$ 14.9 | 94.1 $\pm$ 6.6 |

|

| 31 |

+

| LEDM | 79.3 $\pm$ 8.1 | 85.9 $\pm$ 7.4 | 89.4 $\pm$ 6.7 | 92.3 $\pm$ 7.2 | 94.4 $\pm$ 7.2 |

|

| 32 |

+

| LEDMe | 80.7 $\pm$ 6.6 | 86.3 $\pm$ 6.5 | 89.5 $\pm$ 5.9 | 91.2 $\pm$ 5.6 | **95.3 $\pm$ 4.0** |

|

| 33 |

+

| Ours | **90.5 $\pm$ 5.3** | **91.4 $\pm$ 6.1** | **93.3 $\pm$ 6.0** | **94.6 $\pm$ 6.0** | 95.1 $\pm$ 6.9 |

|

| 34 |

+

|

| 35 |

+

## Training

|

| 36 |

+

|

| 37 |

+

- training the backbone

|

| 38 |

+

|

| 39 |

+

```python train.py --dataset CXR14 --data_dir <PATH TO CXR14 DATASET>```

|

| 40 |

+

|

| 41 |

+

- our method

|

| 42 |

+

|

| 43 |

+

```python train.py --experiment TEDM --data_dir <PATH TO JSRT DATASET> --n_labelled_images <TRAINING SET SIZE>```

|

| 44 |

+

|

| 45 |

+

- LEDM method

|

| 46 |

+

|

| 47 |

+

```python train.py --experiment LEDM --data_dir <PATH TO JSRT DATASET> --n_labelled_images <TRAINING SET SIZE>```

|

| 48 |

+

|

| 49 |

+

- LEDMe method

|

| 50 |

+

|

| 51 |

+

```python train.py --experiment LEDMe --data_dir <PATH TO JSRT DATASET> --n_labelled_images <TRAINING SET SIZE>```

|

| 52 |

+

|

| 53 |

+

- baseline method

|

| 54 |

+

|

| 55 |

+

```python train.py --experiment JSRT_baseline --data_dir <PATH TO JSRT DATASET> --n_labelled_images <TRAINING SET SIZE>```

|

| 56 |

+

|

| 57 |

+

## Testing

|

| 58 |

+

|

| 59 |

+

- update

|

| 60 |

+

- `DATADIR` in paths `dataloaders/JSRT.py`, `dataloaders/NIH.py` and `dataloaders/Montgomery.py`

|

| 61 |

+

- `NIHPATH`, `NIHFILE`, `MONPATH` and `MONFILE` in paths `auxiliary/postprocessing/run_tests.py` and `auxiliary/postprocessing/testing_shared_weights.py`

|

| 62 |

+

|

| 63 |

+

- for baseline and LEDM methods, run

|

| 64 |

+

|

| 65 |

+

```python auxiliary/postprocessing/run_tests.py --experiment <PATH TO LOG FOLDER>```

|

| 66 |

+

|

| 67 |

+

- for our method, run

|

| 68 |

+

|

| 69 |

+

```python auxiliary/postprocessing/testing_shared_weights.py --experiment <PATH TO LOG FOLDER>```

|

| 70 |

+

|

| 71 |

+

## Figures and reporting

|

| 72 |

+

|

| 73 |

+

VS Code notebooks can be found in `auxiliary/notebooks_and_reporting`.

|

app.py

ADDED

|

@@ -0,0 +1,192 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import numpy as np

|

| 2 |

+

import gradio as gr

|

| 3 |

+

from PIL import Image

|

| 4 |

+

import torch

|

| 5 |

+

from torch import nn

|

| 6 |

+

from einops.layers.torch import Rearrange

|

| 7 |

+

from torchvision import transforms

|

| 8 |

+

from models.unet_model import Unet

|

| 9 |

+

from models.datasetDM_model import DatasetDM

|

| 10 |

+

from skimage import measure, segmentation

|

| 11 |

+

import cv2

|

| 12 |

+

from tqdm import tqdm

|

| 13 |

+

from einops import repeat

|

| 14 |

+

|

| 15 |

+

img_size = 128

|

| 16 |

+

font = cv2.FONT_HERSHEY_SIMPLEX

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

## %%

|

| 20 |

+

def load_img(img_file):

|

| 21 |

+

# assert type of input

|

| 22 |

+

if isinstance(img_file, np.ndarray):

|

| 23 |

+

img = torch.Tensor(img_file).float()

|

| 24 |

+

# make sure img is between 0 and 1

|

| 25 |

+

if img.max() > 1:

|

| 26 |

+

img /= 255

|

| 27 |

+

# resize

|

| 28 |

+

img = transforms.Resize(img_size)(img)

|

| 29 |

+

elif isinstance(img_file, str):

|

| 30 |

+

img = Image.open(img_file).convert('L').resize((img_size, img_size))

|

| 31 |

+

img = transforms.ToTensor()(img).float()

|

| 32 |

+

elif isinstance(img_file, Image.Image):

|

| 33 |

+

img = img_file.convert('L').resize((img_size, img_size))

|

| 34 |

+

img = transforms.ToTensor()(img).float()

|

| 35 |

+

else:

|

| 36 |

+

raise TypeError("Input must be a numpy array, PIL image, or filepath")

|

| 37 |

+

if len(img.shape) == 2:

|

| 38 |

+

img = img[None, None]

|

| 39 |

+

elif len(img.shape) == 3:

|

| 40 |

+

img = img[None]

|

| 41 |

+

else:

|

| 42 |

+

raise ValueError("Input must be a 2D or 3D array")

|

| 43 |

+

return img

|

| 44 |

+

|

| 45 |

+

def predict_baseline(img, checkpoint_path):

|

| 46 |

+

checkpoint = torch.load(checkpoint_path, map_location=torch.device("cpu"))

|

| 47 |

+

config = checkpoint["config"]

|

| 48 |

+

baseline = Unet(**vars(config))

|

| 49 |

+

baseline.load_state_dict(checkpoint["model_state_dict"])

|

| 50 |

+

baseline.eval()

|

| 51 |

+

return (torch.sigmoid(baseline(img)) > .5).float().squeeze().numpy()

|

| 52 |

+

|

| 53 |

+

def predict_LEDM(img, checkpoint_path):

|

| 54 |

+

checkpoint = torch.load(checkpoint_path, map_location=torch.device("cpu"))

|

| 55 |

+

config = checkpoint["config"]

|

| 56 |

+

config.verbose = False

|

| 57 |

+

LEDM = DatasetDM(config)

|

| 58 |

+

LEDM.load_state_dict(checkpoint["model_state_dict"])

|

| 59 |

+

LEDM.eval()

|

| 60 |

+

return (torch.sigmoid(LEDM(img)) > .5).float().squeeze().numpy()

|

| 61 |

+

|

| 62 |

+

def predict_TEDM(img, checkpoint_path):

|

| 63 |

+

checkpoint = torch.load(checkpoint_path, map_location=torch.device("cpu"))

|

| 64 |

+

config = checkpoint["config"]

|

| 65 |

+

config.verbose = False

|

| 66 |

+

TEDM = DatasetDM(config)

|

| 67 |

+

TEDM.classifier = nn.Sequential(

|

| 68 |

+

Rearrange('b (step act) h w -> (b step) act h w', step=len(TEDM.steps)),

|

| 69 |

+

nn.Conv2d(960, 128, 1),

|

| 70 |

+

nn.ReLU(),

|

| 71 |

+

nn.BatchNorm2d(128),

|

| 72 |

+

nn.Conv2d(128, 32, 1),

|

| 73 |

+

nn.ReLU(),

|

| 74 |

+

nn.BatchNorm2d(32),

|

| 75 |

+

nn.Conv2d(32, 1, config.out_channels)

|

| 76 |

+

)

|

| 77 |

+

TEDM.load_state_dict(checkpoint["model_state_dict"])

|

| 78 |

+

TEDM.eval()

|

| 79 |

+

return (torch.sigmoid(TEDM(img)).mean(0) > .5).float().squeeze().numpy()

|

| 80 |

+

|

| 81 |

+

predictors = {'Baseline': predict_baseline,

|

| 82 |

+

'Global CL': predict_baseline,

|

| 83 |

+

'Global & Local CL': predict_baseline,

|

| 84 |

+

'LEDM': predict_LEDM,

|

| 85 |

+

'LEDMe': predict_LEDM,

|

| 86 |

+

'TEDM': predict_TEDM}

|

| 87 |

+

model_folders = {

|

| 88 |

+

'Baseline': 'baseline',

|

| 89 |

+

'Global CL': 'global_finetune',

|

| 90 |

+

'Global & Local CL': 'glob_loc_finetune',

|

| 91 |

+

'LEDM': 'LEDM',

|

| 92 |

+

'LEDMe': 'LEDMe',

|

| 93 |

+

'TEDM': 'TEDM'

|

| 94 |

+

}

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

def postprocess(pred, img):

|

| 98 |

+

all_labels = measure.label(pred, background=0)

|

| 99 |

+

_, cn = np.unique(all_labels, return_counts=True)

|

| 100 |

+

# find the two largest connected components that are not the background

|

| 101 |

+

if len(cn) >= 3:

|

| 102 |

+

lungs = np.argsort(cn[1:])[-2:] + 1

|

| 103 |

+

all_labels[(all_labels!=lungs[0]) & (all_labels!=lungs[1])] = 0

|

| 104 |

+

all_labels[(all_labels==lungs[0]) | (all_labels==lungs[1])] = 1

|

| 105 |

+

# put all_labels into a cv2 object

|

| 106 |

+

if len(cn) > 1:

|

| 107 |

+

img = segmentation.mark_boundaries(img, all_labels, color=(1,0,0), mode='outer', background_label=0)

|

| 108 |

+

else:

|

| 109 |

+

img = repeat(img, 'h w -> h w c', c=3)

|

| 110 |

+

return img

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

def predict(img_file, models:list, training_sizes:list, seg_img=False, progress=gr.Progress()):

|

| 115 |

+

max_progress = len(models) * len(training_sizes)

|

| 116 |

+

n_progress = 0

|

| 117 |

+

progress((n_progress, max_progress), desc="Starting")

|

| 118 |

+

img = load_img(img_file)

|

| 119 |

+

print(img.shape)

|

| 120 |

+

preds = []

|

| 121 |

+

# sorting models so that they show as baseline - LEDM - LEDMe - TEDM

|

| 122 |

+

models = sorted(models, key=lambda x: 0 if x == 'Baseline' else 1 if x == 'Global CL' else 2 if x == 'Global & Local CL' else 3 if x == 'LEDM' else 4 if x == 'LEDMe' else 5)

|

| 123 |

+

|

| 124 |

+

for model in models:

|

| 125 |

+

print(model)

|

| 126 |

+

model_preds = []

|

| 127 |

+

for training_size in sorted(training_sizes):

|

| 128 |

+

#if n_progress < max_progress:

|

| 129 |

+

progress((n_progress, max_progress) , desc=f"Predicting {model} {training_size}")

|

| 130 |

+

n_progress += 1

|

| 131 |

+

print(training_size)

|

| 132 |

+

out = predictors[model](img, f"logs/{model_folders[model]}/{training_size}/best_model.pt")

|

| 133 |

+

writing_colour = (.5,.5,.5)

|

| 134 |

+

if seg_img:

|

| 135 |

+

out = postprocess(out, img.squeeze().numpy())

|

| 136 |

+

writing_colour = (1,1,1)

|

| 137 |

+

out = cv2.putText(np.array(out),f"{model} {training_size}",(5,125), font, .5, writing_colour,1, cv2.LINE_AA)

|

| 138 |

+

#ImageDraw.Draw(out).text((0,128), f"{model} {training_size}", fill=(255,0,0))

|

| 139 |

+

model_preds.append(np.asarray(out))

|

| 140 |

+

preds.append(np.concatenate(model_preds, axis=1))

|

| 141 |

+

prediction = np.concatenate(preds, axis=0)

|

| 142 |

+

if (prediction.shape[1] <=128*2):

|

| 143 |

+

pad = (330 - prediction.shape[1])//2

|

| 144 |

+

if len(prediction.shape) == 2:

|

| 145 |

+

prediction = np.pad(prediction, ((0,0), (pad, pad)), 'constant', constant_values=1)

|

| 146 |

+

else:

|

| 147 |

+

prediction = np.pad(prediction, ((0,0), (pad, pad), (0,0)), 'constant', constant_values=1)

|

| 148 |

+

return prediction

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

## %%

|

| 152 |

+

input = gr.Image( label="Chest X-ray", shape=(img_size, img_size), type="pil")

|

| 153 |

+

output = gr.Image(label="Segmentation", shape=(img_size, img_size))

|

| 154 |

+

## %%

|

| 155 |

+

demo = gr.Interface(

|

| 156 |

+

fn=predict,

|

| 157 |

+

inputs=[input,

|

| 158 |

+

gr.CheckboxGroup(["Baseline", "Global CL", "Global & Local CL", "LEDM", "LEDMe", "TEDM"], label="Model", value=["Baseline", "LEDM", "LEDMe", "TEDM"]),

|

| 159 |

+

gr.CheckboxGroup([1,3,6,12,197], label="Training size", value=[1,3,6,12,197]),

|

| 160 |

+

gr.Checkbox(label="Show masked image (otherwise show binary segmentation)", value=True),],

|

| 161 |

+

|

| 162 |

+

outputs=output,

|

| 163 |

+

examples = [

|

| 164 |

+

['img_examples/NIH_0006.png'],

|

| 165 |

+

['img_examples/NIH_0076.png'],

|

| 166 |

+

["img_examples/00016568_041.png"],

|

| 167 |

+

['img_examples/NIH_0024.png'],

|

| 168 |

+

['img_examples/00015548_000.png'],

|

| 169 |

+

['img_examples/NIH_0019.png'],

|

| 170 |

+

['img_examples/NIH_0094.png'],

|

| 171 |

+

['img_examples/NIH_0051.png'],

|

| 172 |

+

['img_examples/NIH_0012.png'],

|

| 173 |

+

['img_examples/NIH_0014.png'],

|

| 174 |

+

['img_examples/NIH_0055.png'],

|

| 175 |

+

['img_examples/NIH_0035.png'],

|

| 176 |

+

],

|

| 177 |

+

title="Chest X-ray Segmentation with TEDM.",

|

| 178 |

+

description="""<img src="file/img_examples/TEDM-model-visualisation.png"

|

| 179 |

+

alt="Markdown Monster icon"

|

| 180 |

+

style="margin-right: 10px;" />"""+

|

| 181 |

+

"\nMedical image segmentation is a challenging task, made more difficult by many datasets' limited size and annotations. Denoising diffusion probabilistic models (DDPM) have recently shown promise in modelling " +

|

| 182 |

+

"the distribution of natural images and were successfully applied to various medical imaging tasks. This work focuses on semi-supervised image segmentation using diffusion models, particularly addressing domain " +

|

| 183 |

+

"generalisation. Firstly, we demonstrate that smaller diffusion steps generate latent representations that are more robust for downstream tasks than larger steps. Secondly, we use this insight to propose an improved " +

|

| 184 |

+

"esembling scheme that leverages information-dense small steps and the regularising effect of larger steps to generate predictions. Our model shows significantly better performance in domain-shifted settings while " +

|

| 185 |

+

"retaining competitive performance in-domain. Overall, this work highlights the potential of DDPMs for semi-supervised medical image segmentation and provides insights into optimising their performance under domain shift."+

|

| 186 |

+

"\n\n\n When choosing 'Show masked image', we post-process the segmentation by choosing up to two largest connected components and drawing their outline. "+

|

| 187 |

+

"\nNote that each model takes 10-35 seconds to run on CPU. Choosing all models and all training sizes will take some time. "+

|

| 188 |

+

"We noticed that gradio sometimes fails on the first try. If it doesn't work, try again.",

|

| 189 |

+

cache_examples=False,

|

| 190 |

+

)

|

| 191 |

+

demo.queue().launch(debug=True)

|

| 192 |

+

#demo.queue().launch(share=True)

|

auxiliary/notebooks_and_reporting/generate_figures.py

ADDED

|

@@ -0,0 +1,175 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# %%

|

| 2 |

+

import numpy as np

|

| 3 |

+

import torch

|

| 4 |

+

from pathlib import Path

|

| 5 |

+

import os

|

| 6 |

+

import pandas as pd

|

| 7 |

+

import seaborn as sns

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

HEAD = Path(os.getcwd()).parent.parent

|

| 10 |

+

|

| 11 |

+

if __name__=="__main__":

|

| 12 |

+

# load baseline and LEDM data

|

| 13 |

+

metrics = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[]}

|

| 14 |

+

files_needed = ["JSRT_val_predictions.pt", "JSRT_test_predictions.pt", "NIH_predictions.pt", "Montgomery_predictions.pt",]

|

| 15 |

+

head = HEAD / 'logs'

|

| 16 |

+

for exp in ['baseline', 'LEDM']:

|

| 17 |

+

for datasize in [1, 3, 6, 12, 24, 49, 98, 197]:

|

| 18 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize)))) == 0:

|

| 19 |

+

print(f"Experiment {exp} {datasize}")

|

| 20 |

+

output = torch.load(head / exp / str(datasize) / "JSRT_val_predictions.pt")

|

| 21 |

+

print(f"{output['dice'].mean()}\t{output['dice'].std()}")

|

| 22 |

+

for file in files_needed[1:]:

|

| 23 |

+

output = torch.load(head / exp / str(datasize) / file)

|

| 24 |

+

metrics_datasize = 197 if datasize == "None" else int(datasize)

|

| 25 |

+

metrics["dice"].append(output["dice"].numpy())

|

| 26 |

+

metrics["precision"].append(output["precision"].numpy())

|

| 27 |

+

metrics["recall"].append(output["recall"].numpy())

|

| 28 |

+

metrics["exp"].append(np.array([exp] * len(output["dice"])))

|

| 29 |

+

metrics["datasize"].append(np.array([int(datasize)] * len(output["dice"])))

|

| 30 |

+

metrics["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 31 |

+

else:

|

| 32 |

+

print(f"Experiment {exp} is missing files")

|

| 33 |

+

|

| 34 |

+

for key in metrics:

|

| 35 |

+

metrics[key] = np.concatenate([el.squeeze() for el in metrics[key]])

|

| 36 |

+

df = pd.DataFrame(metrics)

|

| 37 |

+

df.head()

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

# %% Load step data

|

| 41 |

+

metrics2 = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[], 'timestep':[]}

|

| 42 |

+

for timestep in [1, 10, 25, 50, 500, 950]:

|

| 43 |

+

exp = f"Step_{timestep}"

|

| 44 |

+

for datasize in [197, 98, 49, 24, 12, 6, 3, 1]:

|

| 45 |

+

if os.path.isdir(head / exp / str(datasize)):

|

| 46 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize)))) == 0:

|

| 47 |

+

print(f"Experiment {datasize} {timestep}")

|

| 48 |

+

output = torch.load(head / exp / str(datasize)/ "JSRT_val_predictions.pt")

|

| 49 |

+

print(f"{output['dice'].mean()}\t{output['dice'].std()}")

|

| 50 |

+

for file in files_needed[1:]:

|

| 51 |

+

output = torch.load(head / exp / str(datasize) / file)

|

| 52 |

+

metrics_datasize = datasize if datasize is not None else 197

|

| 53 |

+

metrics2["dice"].append(output["dice"].numpy())

|

| 54 |

+

metrics2["precision"].append(output["precision"].numpy())

|

| 55 |

+

metrics2["recall"].append(output["recall"].numpy())

|

| 56 |

+

metrics2["exp"].append(np.array([exp] * len(output["dice"])))

|

| 57 |

+

metrics2["datasize"].append(np.array([metrics_datasize] * len(output["dice"])))

|

| 58 |

+

metrics2["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 59 |

+

metrics2["timestep"].append(np.array([timestep] * len(output["dice"])))

|

| 60 |

+

else:

|

| 61 |

+

print(f"Experiment {datasize} is missing files")

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

for key in metrics2:

|

| 65 |

+

metrics2[key] = np.concatenate(metrics2[key]).squeeze()

|

| 66 |

+

print(key, metrics2[key].shape)

|

| 67 |

+

df2 = pd.DataFrame(metrics2)

|

| 68 |

+

|

| 69 |

+

# %% figure with line for baseline and datasetDM and boxplots for the rest

|

| 70 |

+

# separating dice from precision and recall

|

| 71 |

+

font = 16

|

| 72 |

+

x = [1, 1, 3, 3, 6, 6, 12, 12, 24, 24, 49, 49, 197, 197]

|

| 73 |

+

plot_x = np.concatenate([np.array([-.4, .4]) + i for i in range(len(x)//2)]).flatten()

|

| 74 |

+

fig, axs = plt.subplots(3, 1, figsize=[12, 10])

|

| 75 |

+

sns.set_style("whitegrid")

|

| 76 |

+

m = 'dice'

|

| 77 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 78 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'baseline') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 79 |

+

ys_std = np.quantile(ys, (.25, .75), axis=1, )

|

| 80 |

+

axs[i ].fill_between(plot_x, ys_std[0], ys_std[1], alpha=.2, zorder=0, color='C6')

|

| 81 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'LEDM') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 82 |

+

ys_std = np.quantile(ys, (.25, .75), axis=1, )

|

| 83 |

+

axs[i ].fill_between(plot_x, ys_std[0], ys_std[1], alpha=.2, zorder=0, color='C8')

|

| 84 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'baseline') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 85 |

+

ys_mean = np.quantile(ys, .5, axis=1)

|

| 86 |

+

axs[i ].plot(plot_x, ys_mean, label="baseline", c='C6', zorder=0)

|

| 87 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'LEDM') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 88 |

+

ys_mean = np.quantile(ys, .5, axis=1)

|

| 89 |

+

axs[i ].plot(plot_x, ys_mean, label="LEDM" , c='C7', zorder=0)

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 93 |

+

temp_df = df2[(df2.dataset == dataset) & (df2.datasize != 98)]

|

| 94 |

+

out = sns.boxplot(data=temp_df, x="datasize", y=m, hue="timestep", ax=axs[i ], showfliers=False, saturation=1,)

|

| 95 |

+

axs[i ].set_title(f"{dataset}", fontsize=font)

|

| 96 |

+

axs[i ].set_xlabel("" )

|

| 97 |

+

y_min, _ = axs[i ].get_ylim()

|

| 98 |

+

axs[i ].set_ylim(y_min, 1)

|

| 99 |

+

h, l = axs[i].get_legend_handles_labels()

|

| 100 |

+

axs[i].get_legend().remove()

|

| 101 |

+

axs[i].set_ylabel("Dice", fontsize=font)

|

| 102 |

+

sns.despine(ax=axs[0 ], offset=10, trim=True, bottom=True)

|

| 103 |

+

sns.despine(ax=axs[1 ], offset=10, trim=True, bottom=True)

|

| 104 |

+

sns.despine(ax=axs[2 ], offset=10, trim=True)

|

| 105 |

+

axs[0].set_xticks([])

|

| 106 |

+

axs[1].set_xticks([])

|

| 107 |

+

axs[-1 ].set_xlabel("Training dataset size", fontsize=font)

|

| 108 |

+

# Shrink current axis by 20%

|

| 109 |

+

for i, ax in enumerate(axs):

|

| 110 |

+

box = ax.get_position()

|

| 111 |

+

ax.tick_params(axis='both', labelsize=font)

|

| 112 |

+

ax.set_position([box.x0, box.y0, box.width , box.height])

|

| 113 |

+

|

| 114 |

+

# Put a legend to the right of the current axis

|

| 115 |

+

|

| 116 |

+

fig.legend(h, ['baseline', 'LEDM'] + ['step ' + _l for _l in l[2:]], title="", ncol=4,

|

| 117 |

+

loc='center left', bbox_to_anchor=(0.2, -0.03), fontsize=font)

|

| 118 |

+

plt.tight_layout()

|

| 119 |

+

#plt.savefig("results_per_timestep.png")

|

| 120 |

+

plt.savefig("results_per_timestep_dice.pdf", bbox_inches='tight')

|

| 121 |

+

plt.show()

|

| 122 |

+

# %%

|

| 123 |

+

x = [1, 1, 3, 3, 6, 6, 12, 12, 24, 24, 49, 49, 197, 197]

|

| 124 |

+

plot_x = np.concatenate([np.array([-.4, .4]) + i for i in range(len(x)//2)]).flatten()

|

| 125 |

+

fig, axs = plt.subplots(3, 2, figsize=[15, 15])

|

| 126 |

+

sns.set_style("whitegrid")

|

| 127 |

+

for j, m in enumerate(["precision", "recall"]):

|

| 128 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 129 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'baseline') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 130 |

+

ys_std = np.quantile(ys, (.25, .75), axis=1, )

|

| 131 |

+

axs[i, j].fill_between(plot_x, ys_std[0], ys_std[1], alpha=.2, zorder=0, color='C6')

|

| 132 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'LEDM') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 133 |

+

ys_std = np.quantile(ys, (.25, .75), axis=1, )

|

| 134 |

+

axs[i, j].fill_between(plot_x, ys_std[0], ys_std[1], alpha=.2, zorder=0, color='C8')

|

| 135 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'baseline') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 136 |

+

ys_mean = np.quantile(ys, .5, axis=1)

|

| 137 |

+

axs[i, j].plot(plot_x, ys_mean, label="baseline", c='C6', zorder=0)

|

| 138 |

+

ys = np.stack([df.loc[(df.dataset == dataset)& (df.exp == 'LEDM') & (df.datasize == _x), m].to_numpy() for _x in x])

|

| 139 |

+

ys_mean = np.quantile(ys, .5, axis=1)

|

| 140 |

+

axs[i, j].plot(plot_x, ys_mean, label="LEDM" , c='C7', zorder=0)

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

##

|

| 144 |

+

temp_df = df2[(df2.dataset == dataset) & (df2.datasize != 98)]

|

| 145 |

+

out = sns.boxplot(data=temp_df, x="datasize", y=m, hue="timestep", ax=axs[i,j], showfliers=False, saturation=1)

|

| 146 |

+

axs[i,j].set_title(f"{dataset}", fontsize=font)

|

| 147 |

+

y_min, _ = axs[i,j].get_ylim()

|

| 148 |

+

axs[i,j].set_ylim(y_min, 1)

|

| 149 |

+

sns.despine(ax=axs[i,j], offset=10, trim=True)

|

| 150 |

+

h, l = axs[i,j].get_legend_handles_labels()

|

| 151 |

+

axs[i,j].get_legend().remove()

|

| 152 |

+

axs[i, 0].set_ylabel("Precison", fontsize=font)

|

| 153 |

+

axs[i, 1].set_ylabel("Recall", fontsize=font)

|

| 154 |

+

axs[i,j].set_xlabel("")

|

| 155 |

+

|

| 156 |

+

for ax in axs.flatten():

|

| 157 |

+

ax.tick_params(axis='both', labelsize=font)

|

| 158 |

+

for ax in [axs[:, 0], axs[:, 1]]:

|

| 159 |

+

sns.despine(ax=ax[0 ], offset=10, trim=True, bottom=True)

|

| 160 |

+

sns.despine(ax=ax[1 ], offset=10, trim=True, bottom=True)

|

| 161 |

+

sns.despine(ax=ax[2 ], offset=10, trim=True)

|

| 162 |

+

ax[0].set_xticks([])

|

| 163 |

+

ax[1].set_xticks([])

|

| 164 |

+

ax[-1 ].set_xlabel("Training dataset size", fontsize=font)

|

| 165 |

+

# Put a legend to the right of the current axis

|

| 166 |

+

|

| 167 |

+

|

| 168 |

+

fig.legend(h, ['baseline', 'LEDM'] + ['step ' + _l for _l in l[2:]], title="", ncol=4,

|

| 169 |

+

loc='center left', bbox_to_anchor=(0.25, -0.03), fontsize=font)

|

| 170 |

+

plt.tight_layout()

|

| 171 |

+

#plt.savefig("results_per_timestep.png")

|

| 172 |

+

plt.savefig("results_per_timestep_prec_recall.pdf", bbox_inches='tight')

|

| 173 |

+

plt.show()

|

| 174 |

+

|

| 175 |

+

# %%

|

auxiliary/notebooks_and_reporting/print_table_results.py

ADDED

|

File without changes

|

auxiliary/notebooks_and_reporting/print_tests_shared_weights.py

ADDED

|

@@ -0,0 +1,222 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# %%

|

| 2 |

+

import numpy as np

|

| 3 |

+

import torch

|

| 4 |

+

from pathlib import Path

|

| 5 |

+

import os

|

| 6 |

+

import pandas as pd

|

| 7 |

+

import seaborn as sns

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

HEAD = Path(os.getcwd()).parent.parent

|

| 10 |

+

|

| 11 |

+

if __name__=="__main__":

|

| 12 |

+

# load baseline and LEDM data

|

| 13 |

+

metrics = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[]}

|

| 14 |

+

files_needed = ["JSRT_val_predictions.pt", "JSRT_test_predictions.pt", "NIH_predictions.pt", "Montgomery_predictions.pt",]

|

| 15 |

+

head = HEAD / 'logs'

|

| 16 |

+

for exp in ['baseline', 'LEDM']:

|

| 17 |

+

for datasize in [1, 3, 6, 12, 24, 49, 98, 197]:

|

| 18 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize)))) == 0:

|

| 19 |

+

print(f"Experiment {exp} {datasize}")

|

| 20 |

+

output = torch.load(head / exp / str(datasize) / "JSRT_val_predictions.pt")

|

| 21 |

+

print(f"{output['dice'].mean()}\t{output['dice'].std()}")

|

| 22 |

+

for file in files_needed[1:]:

|

| 23 |

+

output = torch.load(head / exp / str(datasize) / file)

|

| 24 |

+

metrics_datasize = 197 if datasize == "None" else int(datasize)

|

| 25 |

+

metrics["dice"].append(output["dice"].numpy())

|

| 26 |

+

metrics["precision"].append(output["precision"].numpy())

|

| 27 |

+

metrics["recall"].append(output["recall"].numpy())

|

| 28 |

+

metrics["exp"].append(np.array([exp] * len(output["dice"])))

|

| 29 |

+

metrics["datasize"].append(np.array([int(datasize)] * len(output["dice"])))

|

| 30 |

+

metrics["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 31 |

+

else:

|

| 32 |

+

print(f"Experiment {exp} is missing files")

|

| 33 |

+

|

| 34 |

+

for key in metrics:

|

| 35 |

+

metrics[key] = np.concatenate([el.squeeze() for el in metrics[key]])

|

| 36 |

+

df = pd.DataFrame(metrics)

|

| 37 |

+

df.head()

|

| 38 |

+

|

| 39 |

+

# %% load TEDM data

|

| 40 |

+

metrics3 = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[], }

|

| 41 |

+

exp = "TEDM"

|

| 42 |

+

for datasize in [1, 3, 6, 12, 24, 49, 98, 197]:

|

| 43 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize) ))) == 0:

|

| 44 |

+

print(f"Experiment {datasize}")

|

| 45 |

+

output = torch.load(head / exp / str(datasize)/ "JSRT_val_predictions.pt")

|

| 46 |

+

print(f"{output['dice'].mean()}\t{output['dice'].std()}")

|

| 47 |

+

for file in files_needed[1:]:

|

| 48 |

+

output = torch.load(head / exp / str(datasize) / file)

|

| 49 |

+

|

| 50 |

+

metrics_datasize = datasize if datasize is not None else 197

|

| 51 |

+

metrics3["dice"].append(output["dice"].numpy())

|

| 52 |

+

metrics3["precision"].append(output["precision"].numpy())

|

| 53 |

+

metrics3["recall"].append(output["recall"].numpy())

|

| 54 |

+

metrics3["exp"].append(np.array(['TEDM'] * len(output["dice"])))

|

| 55 |

+

metrics3["datasize"].append(np.array([metrics_datasize] * len(output["dice"])))

|

| 56 |

+

metrics3["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 57 |

+

|

| 58 |

+

else:

|

| 59 |

+

print(f"Experiment {datasize} is missing files")

|

| 60 |

+

|

| 61 |

+

for key in metrics3:

|

| 62 |

+

metrics3[key] = np.concatenate(metrics3[key]).squeeze()

|

| 63 |

+

print(key, metrics3[key].shape)

|

| 64 |

+

df3 = pd.DataFrame(metrics3)

|

| 65 |

+

# %% Boxplot of TEDM vs LEDM and baseline

|

| 66 |

+

df4 = pd.concat([df, df3])

|

| 67 |

+

df4.datasize = df4.datasize.astype(int)

|

| 68 |

+

m='dice'

|

| 69 |

+

dataset="JSRT"

|

| 70 |

+

fig, axs = plt.subplots(3, 3, figsize=(20, 20))

|

| 71 |

+

for j, m in enumerate(["dice", "precision", "recall"]):

|

| 72 |

+

#axs[0,j].set_ylim(0.8, 1)

|

| 73 |

+

#axs[0,j].set_ylim(0.6, 1)

|

| 74 |

+

#axs[0,j].set_ylim(0.7, 1)

|

| 75 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 76 |

+

temp_df = df4[(df4.dataset == dataset)]

|

| 77 |

+

#sns.lineplot(data=df[df.dataset == dataset], x="datasize", y=m, hue="exp", ax=axs[i,j])

|

| 78 |

+

sns.boxplot(data=temp_df, x="datasize", y=m, ax=axs[i,j], hue="exp", showfliers=False, saturation=1,

|

| 79 |

+

hue_order=['baseline', 'LEDM', 'TEDM'])

|

| 80 |

+

axs[i,j].set_title(f"{dataset} {m}")

|

| 81 |

+

axs[i,j].set_xlabel("Training dataset size")

|

| 82 |

+

h, l = axs[i,j].get_legend_handles_labels()

|

| 83 |

+

axs[i,j].legend(h, ['Baseline', 'LEDM', 'TEDM (ours)'], title="", loc='lower right')

|

| 84 |

+

plt.tight_layout()

|

| 85 |

+

plt.savefig("results_shared_weights.pdf")

|

| 86 |

+

plt.show()

|

| 87 |

+

# %% Load LEDMe and Step 1

|

| 88 |

+

metrics2 = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[], }

|

| 89 |

+

for exp in ["LEDMe", 'Step_1']:

|

| 90 |

+

for datasize in [1, 3, 6, 12, 24, 49, 98, 197]:

|

| 91 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize) ))) == 0:

|

| 92 |

+

print(f"Experiment {exp} {datasize}")

|

| 93 |

+

output = torch.load(head / exp / str(datasize)/ "JSRT_val_predictions.pt")

|

| 94 |

+

print(f"{output['dice'].mean()}\t{output['dice'].std()}")

|

| 95 |

+

for file in files_needed[1:]:

|

| 96 |

+

output = torch.load(head / exp / str(datasize) / file)

|

| 97 |

+

#print(f"{output['dice'].mean()*100:.3}\t{output['dice'].std()*100:.3}\t{output['precision'].mean()*100:.3}\t{output['precision'].std()*100:.3}\t{output['recall'].mean()*100:.3}\t{output['recall'].std()*100:.3}",

|

| 98 |

+

# end="\n\n\n\n")

|

| 99 |

+

metrics_datasize = 197 if datasize == "None" else datasize

|

| 100 |

+

metrics2["dice"].append(output["dice"].numpy())

|

| 101 |

+

metrics2["precision"].append(output["precision"].numpy())

|

| 102 |

+

metrics2["recall"].append(output["recall"].numpy())

|

| 103 |

+

metrics2["exp"].append(np.array([exp] * len(output["dice"])))

|

| 104 |

+

metrics2["datasize"].append(np.array([int(metrics_datasize)] * len(output["dice"])))

|

| 105 |

+

metrics2["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 106 |

+

else:

|

| 107 |

+

print(f"Experiment {exp} is missing files")

|

| 108 |

+

|

| 109 |

+

for key in metrics2:

|

| 110 |

+

metrics2[key] = np.concatenate(metrics2[key]).squeeze()

|

| 111 |

+

print(key, metrics2[key].shape)

|

| 112 |

+

df2 = pd.DataFrame(metrics2)

|

| 113 |

+

# %% Boxplot of TEDM vs LEDM and baseline, Step 1 and LEDMe

|

| 114 |

+

df4 = pd.concat([df, df3, df2])

|

| 115 |

+

df4.datasize = df4.datasize.astype(int)

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

m='dice'

|

| 119 |

+

dataset="JSRT"

|

| 120 |

+

fig, axs = plt.subplots(3, 3, figsize=(20, 20))

|

| 121 |

+

for j, m in enumerate(["dice", "precision", "recall"]):

|

| 122 |

+

|

| 123 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 124 |

+

temp_df = df4[(df4.dataset == dataset)]

|

| 125 |

+

#sns.lineplot(data=df[df.dataset == dataset], x="datasize", y=m, hue="exp", ax=axs[i,j])

|

| 126 |

+

sns.boxplot(data=temp_df, x="datasize", y=m, ax=axs[i,j], hue="exp", showfliers=False, saturation=1,

|

| 127 |

+

hue_order=['baseline', 'LEDM', 'Step_1', 'LEDMe', 'TEDM', ])

|

| 128 |

+

axs[i,j].set_title(f"{dataset} {m}")

|

| 129 |

+

axs[i,j].set_xlabel("Training dataset size")

|

| 130 |

+

h, l = axs[i,j].get_legend_handles_labels()

|

| 131 |

+

axs[i,j].legend(h, ['Baseline', 'LEDM', 'Step 1', 'LEDMe', 'TEDM'], title="", loc='lower right')

|

| 132 |

+

plt.tight_layout()

|

| 133 |

+

plt.savefig("results_shared_weights.pdf")

|

| 134 |

+

plt.show()

|

| 135 |

+

# %% Load TEDM ablation studies

|

| 136 |

+

metrics4 = {"dice": [], "precision": [], "recall": [], "exp": [], "datasize": [], "dataset":[], }

|

| 137 |

+

exp = "TEDM"

|

| 138 |

+

for datasize in [1, 3, 6, 12, 24, 49, 98, 197]:

|

| 139 |

+

if len(set(files_needed) - set(os.listdir(head / exp / str(datasize)))) == 0:

|

| 140 |

+

print(f"Experiment {datasize} ")

|

| 141 |

+

for step in [1,10,25]:

|

| 142 |

+

for file in files_needed[1:]:

|

| 143 |

+

output = torch.load(head / exp / str(datasize) / file.replace("predictions", f"timestep{step}_predictions"))

|

| 144 |

+

#print(f"{output['dice'].mean()*100:.3}\t{output['dice'].std()*100:.3}\t{output['precision'].mean()*100:.3}\t{output['precision'].std()*100:.3}\t{output['recall'].mean()*100:.3}\t{output['recall'].std()*100:.3}",

|

| 145 |

+

# end="\n\n\n\n")

|

| 146 |

+

metrics_datasize = datasize if datasize is not None else 197

|

| 147 |

+

metrics4["dice"].append(output["dice"].numpy())

|

| 148 |

+

metrics4["precision"].append(output["precision"].numpy())

|

| 149 |

+

metrics4["recall"].append(output["recall"].numpy())

|

| 150 |

+

metrics4["exp"].append(np.array([f'Step {step} (MLP)'] * len(output["dice"])))

|

| 151 |

+

metrics4["datasize"].append(np.array([metrics_datasize] * len(output["dice"])))

|

| 152 |

+

metrics4["dataset"].append(np.array([file.split("_")[0]]*len(output["dice"])))

|

| 153 |

+

#metrics3["timestep"].append(np.array(timestep * len(output["dice"])))

|

| 154 |

+

else:

|

| 155 |

+

print(f"Experiment {datasize} is missing files")

|

| 156 |

+

|

| 157 |

+

for key in metrics3:

|

| 158 |

+

metrics4[key] = np.concatenate(metrics4[key]).squeeze()

|

| 159 |

+

print(key, metrics4[key].shape)

|

| 160 |

+

df4 = pd.DataFrame(metrics4)

|

| 161 |

+

# %% Print inputs to paper table

|

| 162 |

+

df_all = pd.concat([df, df3, df2, df4])

|

| 163 |

+

df_all.datasize = df_all.datasize.astype(int)

|

| 164 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 165 |

+

temp_df = df_all.loc[(df_all.dataset == dataset) & (df_all.datasize.isin([1, 3, 6, 12, 197])), ["exp", "datasize", "dice"]]

|

| 166 |

+

print(dataset)

|

| 167 |

+

mean = temp_df.groupby(["exp", "datasize"]).mean().unstack() * 100

|

| 168 |

+

std = temp_df.groupby(["exp", "datasize"]).std().unstack() * 100

|

| 169 |

+

for exp, exp_name in zip(['baseline', 'LEDM','Step_1', 'Step 1 (MLP)',

|

| 170 |

+

'Step 10 (MLP)','Step 25 (MLP)', 'LEDMe', 'TEDM'],

|

| 171 |

+

['Baseline', 'DatasetDDPM', 'Step 1 (linear)','Step 1 (MLP)', 'Step 10 (MLP)','Step 25 (MLP)','DatasetDDPMe', 'Ours', ]):

|

| 172 |

+

|

| 173 |

+

print(exp_name, end='&\t')

|

| 174 |

+

print(f"{round(mean.loc[exp, ('dice', 1)],2):.3} $\pm$ {round(std.loc[exp, ('dice', 1)],1)}", end='&\t')

|

| 175 |

+

print(f"{round(mean.loc[exp, ('dice', 3)], 2):.3} $\pm$ {round(std.loc[exp, ('dice', 3)],1)}", end='&\t')

|

| 176 |

+

print(f"{round(mean.loc[exp, ('dice', 6)], 2):.3} $\pm$ {round(std.loc[exp, ('dice', 6)],1)}", end='&\t')

|

| 177 |

+

print(f"{round(mean.loc[exp, ('dice', 12)], 2):.3} $\pm$ {round(std.loc[exp, ('dice', 12)],1)}", end='&\t')

|

| 178 |

+

print(f"{round(mean.loc[exp, ('dice', 197)], 2):.3} $\pm$ {round(std.loc[exp, ('dice', 197)],1)}", end="""\\\\""")

|

| 179 |

+

|

| 180 |

+

print()

|

| 181 |

+

|

| 182 |

+

# %% Print inputs to paper appendix table

|

| 183 |

+

for i, dataset in enumerate(["JSRT", "NIH", "Montgomery"]):

|

| 184 |

+

print("\n" + dataset)

|

| 185 |

+

for m in ["precision", "recall"]:

|

| 186 |

+

temp_df = df_all.loc[(df_all.dataset == dataset) & (df_all.datasize.isin([1, 3, 6, 12, 24, 49, 98, 197])), ["exp", "datasize", m]]

|

| 187 |

+

print("\n"+m)

|

| 188 |

+

mean = temp_df.groupby(["exp", "datasize"]).mean().unstack() * 100

|

| 189 |

+

std = temp_df.groupby(["exp", "datasize"]).std().unstack() * 100

|

| 190 |

+

for exp, exp_name in zip(['baseline', 'LEDM','Step_1', 'LEDMe', 'TEDM'],

|

| 191 |

+

['Baseline', 'LEDM', 'Step 1 (linear)','LEDMe', 'TEDM (ours)',]):

|

| 192 |

+

|

| 193 |

+

print(exp_name, end='&\t')

|

| 194 |

+

print(f"{round(mean.loc[exp, (m, 1)],2):.3} $\pm$ {round(std.loc[exp, (m, 1)],1)}", end='&\t')

|

| 195 |

+

print(f"{round(mean.loc[exp, (m, 3)],2):.3} $\pm$ {round(std.loc[exp, (m, 3)],1)}", end='&\t')

|

| 196 |

+

print(f"{round(mean.loc[exp, (m, 6)],2):.3} $\pm$ {round(std.loc[exp, (m, 6)],1)}", end='&\t')

|

| 197 |

+

print(f"{round(mean.loc[exp, (m, 12)],2):.3} $\pm$ {round(std.loc[exp, (m, 12)],1)}", end='&\t')

|

| 198 |

+

print(f"{round(mean.loc[exp, (m, 197)],2):.3} $\pm$ {round(std.loc[exp, (m, 197)],1)}", end='\\\\')

|

| 199 |

+

|

| 200 |

+

|

| 201 |

+

print()

|

| 202 |

+

|

| 203 |

+

# %% Wilcoxon tests - to use interactively

|

| 204 |

+

from scipy.stats import wilcoxon

|

| 205 |

+

m ="precision"

|

| 206 |

+

m='recall'

|

| 207 |

+

dataset ="Montgomery"

|

| 208 |

+

dssize =12

|

| 209 |

+

|

| 210 |

+

exp = "baseline"

|

| 211 |

+

exp = 'Step_1'

|

| 212 |

+

exp = "LEDM"

|

| 213 |

+

exp="TEDM"

|

| 214 |

+

exp_2= 'LEDMe'

|

| 215 |

+

|

| 216 |

+

x = df_all.loc[(df_all.dataset == dataset) & (df_all.exp == exp_2) & (df_all.datasize == dssize), m].to_numpy()

|

| 217 |

+

y = df_all.loc[(df_all.dataset == dataset) & (df_all.exp == exp)& (df_all.datasize == dssize), m].to_numpy()

|

| 218 |

+

print(f"{m} - {dataset} - {dssize} - {exp_2}: {x.mean():.4}+/-{x.std():.3} ")

|

| 219 |

+

print(f"{m} - {dataset} - {dssize} - {exp}: {y.mean():.4}+/-{y.std():.3} ")

|

| 220 |

+

print(f"{m} - {dataset} - {dssize}: {wilcoxon(x, y=y, zero_method='wilcox', correction=False, alternative='two-sided',).pvalue:.3} obs given equal ")

|

| 221 |

+

print(f"{m} - {dataset} - {dssize}: {wilcoxon(x, y=y, zero_method='wilcox', correction=False, alternative='greater',).pvalue:.3} obs given {exp_2} < {exp} ")

|

| 222 |

+

print(f"{m} - {dataset} - {dssize}: {wilcoxon(x, y=y, zero_method='wilcox', correction=False, alternative='less',).pvalue:.3} obs given {exp_2} > {exp} ")

|

auxiliary/notebooks_and_reporting/results_per_timestep.pdf

ADDED

|

Binary file (79.6 kB). View file

|

|

|

auxiliary/notebooks_and_reporting/results_per_timestep_dice.pdf

ADDED

|

Binary file (177 kB). View file

|

|

|

auxiliary/notebooks_and_reporting/results_per_timestep_prec_recall.pdf

ADDED

|

Binary file (197 kB). View file

|

|

|

auxiliary/notebooks_and_reporting/results_shared_weights.pdf

ADDED

|

Binary file (66.2 kB). View file

|

|

|

auxiliary/notebooks_and_reporting/visualisations.pdf

ADDED

|

Binary file (296 kB). View file

|

|

|

auxiliary/notebooks_and_reporting/visualisations.py

ADDED

|

@@ -0,0 +1,162 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|