Updated the architecture descriptions, images and caption text for the display of the architectures

config/architectures.json

CHANGED

|

@@ -2,7 +2,7 @@

|

|

| 2 |

"architectures": [

|

| 3 |

{

|

| 4 |

"name": "1. Baseline LLM",

|

| 5 |

-

"description": "

|

| 6 |

"steps": [

|

| 7 |

{"class": "InputRequestScreener"},

|

| 8 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://yl89ru8gdr1wkbej.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Unmodified Meta Llama 2 chat", "system_prompt": "You are a helpful agent.", "max_new_tokens": 1000}},

|

|

@@ -12,16 +12,17 @@

|

|

| 12 |

},

|

| 13 |

{

|

| 14 |

"name": "2. Fine-tuning Architecture",

|

| 15 |

-

"description": "

|

| 16 |

"steps": [

|

| 17 |

{"class": "InputRequestScreener"},

|

| 18 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 19 |

{"class": "OutputResponseScreener"}

|

| 20 |

-

]

|

|

|

|

| 21 |

},

|

| 22 |

{

|

| 23 |

"name": "3. RAG Architecture",

|

| 24 |

-

"description": "

|

| 25 |

"steps": [

|

| 26 |

{"class": "InputRequestScreener"},

|

| 27 |

{"class": "RetrievalAugmentor", "params": {"vector_store": "02_baseline_products"}},

|

|

@@ -33,47 +34,51 @@

|

|

| 33 |

},

|

| 34 |

{

|

| 35 |

"name": "4a. Fine-tuning Architecture evolution V5",

|

| 36 |

-

"description": "

|

| 37 |

"steps": [

|

| 38 |

{"class": "InputRequestScreener"},

|

| 39 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://pgzu02dvzupp5sml.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 40 |

{"class": "OutputResponseScreener"}

|

| 41 |

-

]

|

|

|

|

| 42 |

},

|

| 43 |

{

|

| 44 |

"name": "4b. Fine-tuning Architecture evolution V6",

|

| 45 |

-

"description": "

|

| 46 |

"steps": [

|

| 47 |

{"class": "InputRequestScreener"},

|

| 48 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://ln8i9z4ecjqora6d.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 49 |

{"class": "OutputResponseScreener"}

|

| 50 |

-

]

|

|

|

|

| 51 |

},

|

| 52 |

{

|

| 53 |

"name": "4c. Fine-tuning Architecture evolution V7",

|

| 54 |

-

"description": "

|

| 55 |

"steps": [

|

| 56 |

{"class": "InputRequestScreener"},

|

| 57 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 58 |

{"class": "OutputResponseScreener"}

|

| 59 |

-

]

|

|

|

|

| 60 |

},

|

| 61 |

{

|

| 62 |

"name": "5a. Performance test (safety off) Fine-tuning",

|

| 63 |

-

"description": "

|

| 64 |

"steps": [

|

| 65 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}}

|

| 66 |

-

]

|

|

|

|

| 67 |

},

|

| 68 |

{

|

| 69 |

"name": "5b. Performance test (safety off) RAG",

|

| 70 |

-

"description": "

|

| 71 |

"steps": [

|

| 72 |

{"class": "RetrievalAugmentor", "params": {"vector_store": "02_baseline_products"}},

|

| 73 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://yl89ru8gdr1wkbej.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Unmodified Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor. Please answer the following customer question, answering only from the facts provided. Answer based on the background provided, do not make things up, and say if you cannot answer.", "max_new_tokens": 1000}},

|

| 74 |

{"class": "ResponseTrimmer", "params": {"regexes": ["^.{0,20}information provided[0-9A-Za-z,]*? ", "^.{0,20}background[0-9A-Za-z,]*? "]}}

|

| 75 |

],

|

| 76 |

-

"img": "

|

| 77 |

}

|

| 78 |

]

|

| 79 |

}

|

|

|

|

| 2 |

"architectures": [

|

| 3 |

{

|

| 4 |

"name": "1. Baseline LLM",

|

| 5 |

+

"description": "This architecture represents a baseline control. It includes safety components checking both the query and the response. The core of the architecture is powered by the unmodified Llama 2 7 billion parameter chat model. This model has never seen any of the private data, so the expectation is it will perform poorly, and it is included just as a comparative control.",

|

| 6 |

"steps": [

|

| 7 |

{"class": "InputRequestScreener"},

|

| 8 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://yl89ru8gdr1wkbej.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Unmodified Meta Llama 2 chat", "system_prompt": "You are a helpful agent.", "max_new_tokens": 1000}},

|

|

|

|

| 12 |

},

|

| 13 |

{

|

| 14 |

"name": "2. Fine-tuning Architecture",

|

| 15 |

+

"description": "This architecture is the final version of a fine-tuned LLM (version 7 of the fine-tuning iterations) based approach. Compared to the baseline architecture, the LLM which is the core of the question answering is replaced by one which has been further trained on questions and answers derived from the private data. It should therefore be able to answer questions about the ElectroHome products.",

|

| 16 |

"steps": [

|

| 17 |

{"class": "InputRequestScreener"},

|

| 18 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 19 |

{"class": "OutputResponseScreener"}

|

| 20 |

+

],

|

| 21 |

+

"img": "architecture_fine_tuned_v7.jpg"

|

| 22 |

},

|

| 23 |

{

|

| 24 |

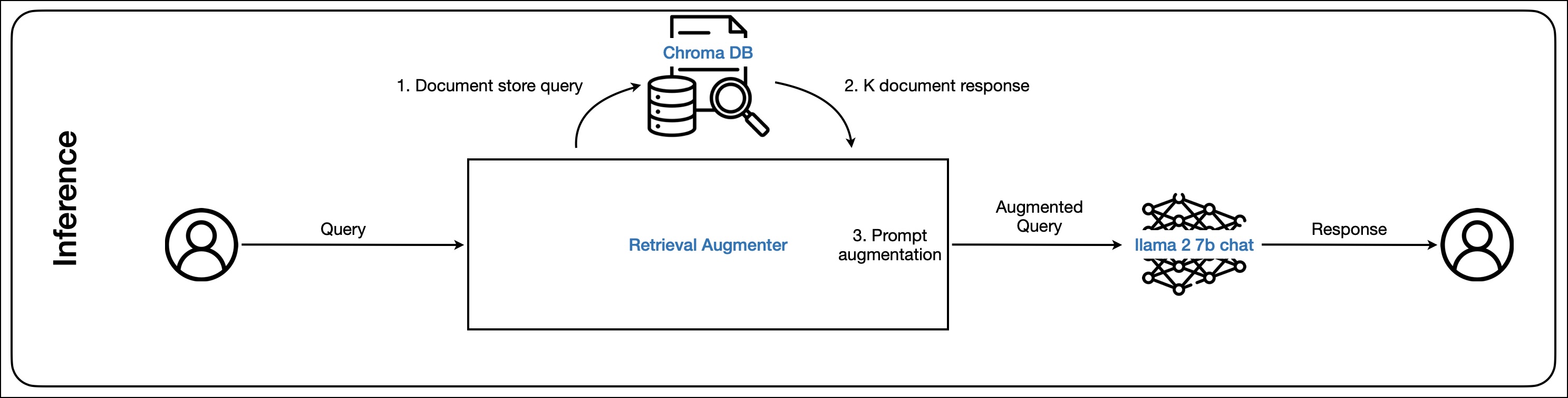

"name": "3. RAG Architecture",

|

| 25 |

+

"description": "This architecture is the RAG based approach. The underlying LLM for this architecture is unchanged from the baseline, but the system also includes a vector database where relevant documents can be retrieved and the LLM prompt augmented. This process gives the LLM more context for the question which should allow it to answer questions about the ElectroHome products.",

|

| 26 |

"steps": [

|

| 27 |

{"class": "InputRequestScreener"},

|

| 28 |

{"class": "RetrievalAugmentor", "params": {"vector_store": "02_baseline_products"}},

|

|

|

|

| 34 |

},

|

| 35 |

{

|

| 36 |

"name": "4a. Fine-tuning Architecture evolution V5",

|

| 37 |

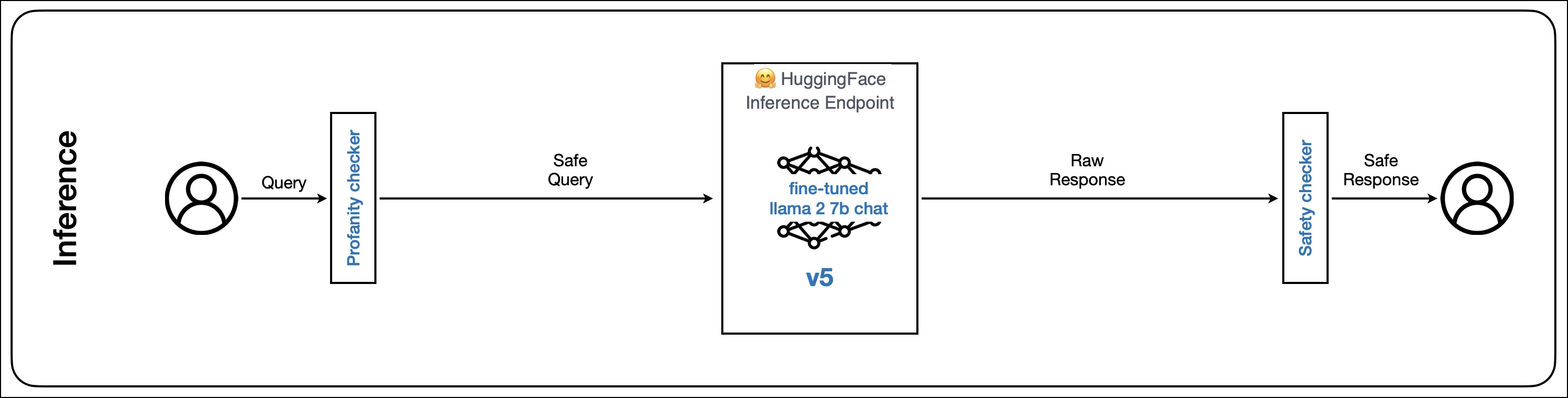

+

"description": "This fine-tuning architecture features version 5 of the fine-tuned LLM, which was subject to ~1.25 hours of training. It is included to allow testing of progressive learning of the LLM over the fine-tuning process.",

|

| 38 |

"steps": [

|

| 39 |

{"class": "InputRequestScreener"},

|

| 40 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://pgzu02dvzupp5sml.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 41 |

{"class": "OutputResponseScreener"}

|

| 42 |

+

],

|

| 43 |

+

"img": "architecture_fine_tuned_v5.jpg"

|

| 44 |

},

|

| 45 |

{

|

| 46 |

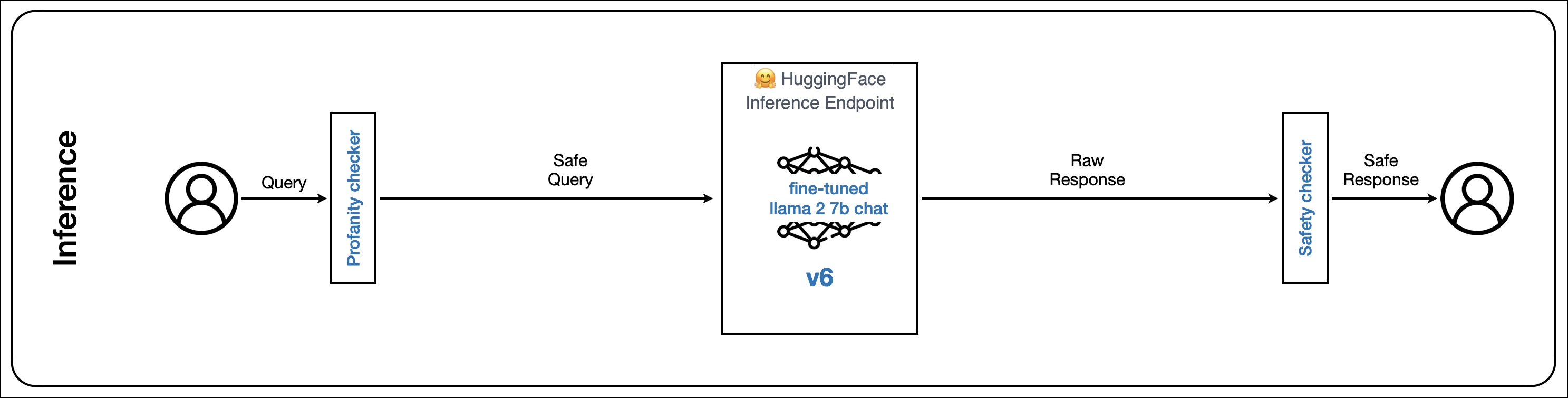

"name": "4b. Fine-tuning Architecture evolution V6",

|

| 47 |

+

"description": "This fine-tuning architecture features version 6 of the fine-tuned LLM, which was subject to ~3 hours of training. It is included to allow testing of progressive learning of the LLM over the fine-tuning process.",

|

| 48 |

"steps": [

|

| 49 |

{"class": "InputRequestScreener"},

|

| 50 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://ln8i9z4ecjqora6d.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 51 |

{"class": "OutputResponseScreener"}

|

| 52 |

+

],

|

| 53 |

+

"img": "architecture_fine_tuned_v6.jpg"

|

| 54 |

},

|

| 55 |

{

|

| 56 |

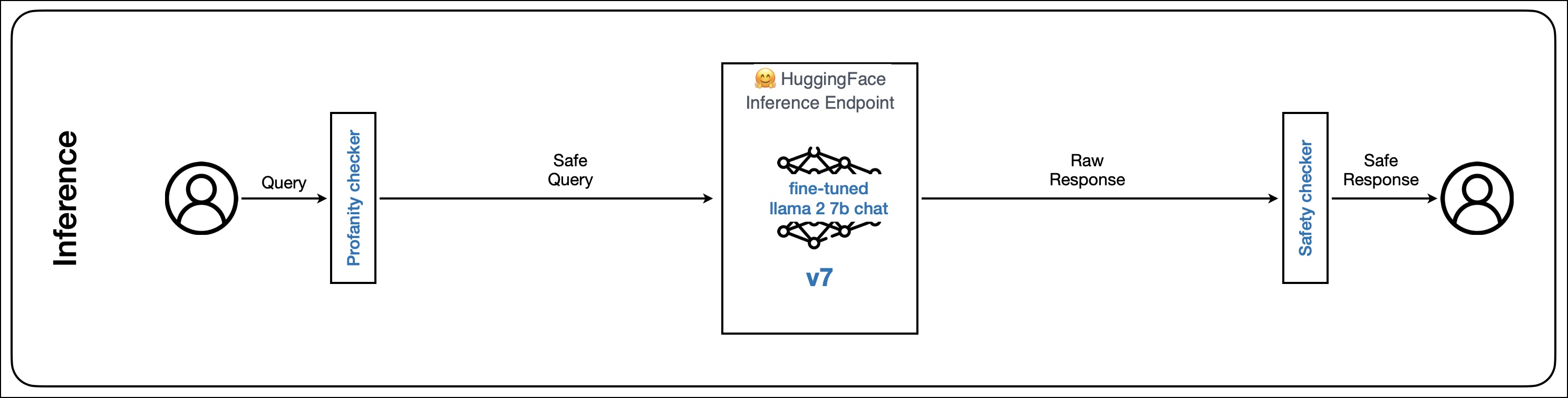

"name": "4c. Fine-tuning Architecture evolution V7",

|

| 57 |

+

"description": "This fine-tuning architecture is identical to architecture 2, and features version 7 of the fine-tuned LLM, which was subject to ~12 hours of training. It is included to allow testing of progressive learning of the LLM over the fine-tuning process.",

|

| 58 |

"steps": [

|

| 59 |

{"class": "InputRequestScreener"},

|

| 60 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}},

|

| 61 |

{"class": "OutputResponseScreener"}

|

| 62 |

+

],

|

| 63 |

+

"img": "architecture_fine_tuned_v7.jpg"

|

| 64 |

},

|

| 65 |

{

|

| 66 |

"name": "5a. Performance test (safety off) Fine-tuning",

|

| 67 |

+

"description": "This architecture is the fine-tuning model (architecture 2) but with the safety components removed. The reason for this is because the safety components also include an LLM call to the baseline model, so for a fair test these are disabled to be able to compare the core differentiating elements of fine-tuning vs RAG.",

|

| 68 |

"steps": [

|

| 69 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://kl6kq9j1yw3hoj4e.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Fine-Tuned Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor for the ElectroHome company. Please answer customer questions and do not mention other brands. Answer succinctly with facts, and if you cannot answer please say so.", "max_new_tokens": 1000, "prompt_style": "multi_line_with_roles"}}

|

| 70 |

+

],

|

| 71 |

+

"img": "architecture_fine_tuned_performance_test.jpg"

|

| 72 |

},

|

| 73 |

{

|

| 74 |

"name": "5b. Performance test (safety off) RAG",

|

| 75 |

+

"description": "This architecture is the RAG model (architecture 3) but with the safety components removed. The reason for this is because the safety components also include an LLM call to the baseline model, so for a fair test these are disabled to be able to compare the core differentiating elements of fine-tuning vs RAG.",

|

| 76 |

"steps": [

|

| 77 |

{"class": "RetrievalAugmentor", "params": {"vector_store": "02_baseline_products"}},

|

| 78 |

{"class": "HFInferenceEndpoint", "params": {"endpoint_url": "https://yl89ru8gdr1wkbej.eu-west-1.aws.endpoints.huggingface.cloud","model_name": "Unmodified Meta Llama 2 chat", "system_prompt": "You are a helpful domestic appliance advisor. Please answer the following customer question, answering only from the facts provided. Answer based on the background provided, do not make things up, and say if you cannot answer.", "max_new_tokens": 1000}},

|

| 79 |

{"class": "ResponseTrimmer", "params": {"regexes": ["^.{0,20}information provided[0-9A-Za-z,]*? ", "^.{0,20}background[0-9A-Za-z,]*? "]}}

|

| 80 |

],

|

| 81 |

+

"img": "architecture_rag_performance_test.jpg"

|

| 82 |

}

|

| 83 |

]

|

| 84 |

}

|
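
Note on the `ResponseTrimmer` step in architecture 5b: the diff shows only its `regexes` parameter, not the class itself. A minimal sketch of what such a step might do, assuming it deletes the first match of each pattern from the start of the response. The function name `trim_response` and the sample sentences are hypothetical; only the two patterns come from the config.

```python
import re

# Patterns copied verbatim from the ResponseTrimmer params in architecture 5b.
REGEXES = [
    r"^.{0,20}information provided[0-9A-Za-z,]*? ",
    r"^.{0,20}background[0-9A-Za-z,]*? ",
]


def trim_response(text, regexes=REGEXES):
    """Remove a leading boilerplate phrase matched by any pattern.

    Assumption: the real ResponseTrimmer may behave differently; this only
    illustrates what the configured patterns match.
    """
    for pattern in regexes:
        # count=1 because each pattern is anchored at the start of the text.
        text = re.sub(pattern, "", text, count=1)
    return text


# Invented sample responses, for illustration only.
print(trim_response("Based on the information provided, the answer is yes."))
print(trim_response("According to the background, it fits."))
```

Both patterns are anchored with `^` and allow at most 20 leading characters, so under this reading only a short preamble at the very start of a response would be removed; the same phrase later in the text is left alone.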

img/architecture_fine_tuned_performance_test.jpg

ADDED

|

img/architecture_fine_tuned_v5.jpg

ADDED

|

img/architecture_fine_tuned_v6.jpg

ADDED

|

img/architecture_fine_tuned_v7.jpg

ADDED

|

img/architecture_rag_performance_test.jpg

ADDED

|
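
A quick consistency check for this commit, sketched under the assumption that the `img` values in `config/architectures.json` are file names resolved inside the `img/` directory. The helper `missing_images` is hypothetical. The two sets list only what is visible in this diff; entries in collapsed hunks (for example, any image for architecture 3) are not covered.

```python
import os

# "img" values visible in the architectures.json hunks of this commit.
REFERENCED_IMAGES = {
    "architecture_fine_tuned_v7.jpg",
    "architecture_fine_tuned_v5.jpg",
    "architecture_fine_tuned_v6.jpg",
    "architecture_fine_tuned_performance_test.jpg",
    "architecture_rag_performance_test.jpg",
}

# Files marked ADDED in this commit.
ADDED_FILES = {
    "img/architecture_fine_tuned_performance_test.jpg",
    "img/architecture_fine_tuned_v5.jpg",
    "img/architecture_fine_tuned_v6.jpg",
    "img/architecture_fine_tuned_v7.jpg",
    "img/architecture_rag_performance_test.jpg",
}


def missing_images(referenced, added_paths):
    """Return referenced image names that have no matching added file."""
    added_names = {os.path.basename(path) for path in added_paths}
    return sorted(referenced - added_names)


print(missing_images(REFERENCED_IMAGES, ADDED_FILES))
```

For the entries listed here the result is an empty list, i.e. every image referenced in the visible hunks is among the added files.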

pages/010_LLM_Architectures.py

CHANGED

|

@@ -87,7 +87,7 @@ def show_architecture(architecture: str) -> None:

|

|

| 87 |

st.write(arch.description)

|

| 88 |

if arch.img is not None:

|

| 89 |

img = os.path.join(img_dir, arch.img)

|

| 90 |

-

st.image(img, caption=f'

|

| 91 |

table_data = []

|

| 92 |

for j, s in enumerate(arch.steps, start=1):

|

| 93 |

table_data.append(

|

|

|

|

| 87 |

st.write(arch.description)

|

| 88 |

if arch.img is not None:

|

| 89 |

img = os.path.join(img_dir, arch.img)

|

| 90 |

+

st.image(img, caption=f'{arch.name} As Built', width=1000)

|

| 91 |

table_data = []

|

| 92 |

for j, s in enumerate(arch.steps, start=1):

|

| 93 |

table_data.append(

|
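
The changed line in `show_architecture` builds the caption from the architecture name and fixes the display width. A small sketch of that logic pulled out into a pure function so it can be checked without running Streamlit. The function name `image_path_and_caption` and the `"img"` directory value are assumptions; the diff does not show how `img_dir` is defined.

```python
import os


def image_path_and_caption(img_dir, name, img):
    """Mirror the changed lines: join the image path and build the caption.

    Returns None when the architecture has no image, matching the
    `if arch.img is not None` guard in the diff.
    """
    if img is None:
        return None
    return os.path.join(img_dir, img), f"{name} As Built"


result = image_path_and_caption(
    "img",  # assumed value of img_dir
    "4a. Fine-tuning Architecture evolution V5",
    "architecture_fine_tuned_v5.jpg",
)
print(result)
```

In the page itself the two values would be passed on as `st.image(path, caption=caption, width=1000)`, as in the added line.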