Runtime error

First commit

- app.py +126 -0

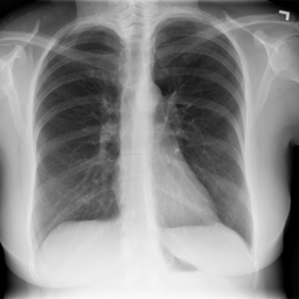

- assets/Examples/Lung_1.png +0 -0

- assets/Examples/Lung_2.png +0 -0

- assets/Examples/Lung_3.png +0 -0

- assets/Examples/Lung_4.png +0 -0

- requirements.txt +5 -0

- utils/metrics.py +26 -0

- utils/model.py +66 -0

- utils/weights/Unet_finetune_v3.h5 +3 -0

app.py

ADDED

```python
import streamlit as st
from PIL import Image
import numpy as np
import cv2
import tensorflow as tf
from utils.model import Unet_3

st.header("Lung segmentation on X-ray images")


st.markdown(
    """
This is a demo for the Platzi class, using a segmentation model developed as part of my thesis.

More information about the model can be found in the following article:

https://www.sciencedirect.com/science/article/pii/S2666827021000694?via%3Dihub

"""
)

## Build the model with TensorFlow and load the fine-tuned weights
model = Unet_3()

model.load_weights("utils/weights/Unet_finetune_v3.h5")

## Let the user upload an image
archivo_imagen = st.file_uploader("Upload your image here.", type=["png", "jpg", "jpeg"])

## If an image has more than one channel, convert it to grayscale (1 channel).
## PIL arrays are in RGB order, hence COLOR_RGB2GRAY.
def convertir_one_channel(img):
    if len(img.shape) > 2:
        img = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
        return img
    else:
        return img


## If an image has a single channel, convert it to RGB (3 channels)
def convertir_rgb(img):
    if len(img.shape) == 2:
        img = cv2.cvtColor(img, cv2.COLOR_GRAY2RGB)
        return img
    else:
        return img


## Set up the interface so that example images can be used
## If the user clicks an example, the model runs on it
ejemplos = ["Lung_1.png", "Lung_2.png", "Lung_3.png", "Lung_4.png"]
path = "assets/Examples/"
## Create four columns, each holding one example image
for i, (col, ejemplo) in enumerate(zip(st.columns(4), ejemplos), start=1):
    with col:
        ## Load the image and show it in the interface
        ex = Image.open(path + ejemplo)
        st.image(ex, width=200)
        ## If the button is pressed, use this example in the model
        if st.button(f"Run example {i}"):
            archivo_imagen = path + ejemplo

## If we have an image to feed the model,
## preprocess it and run it through the model
if archivo_imagen is not None:
    ## Load the image with PIL, display it, and convert it to a NumPy array
    img = Image.open(archivo_imagen)
    st.image(img, width=850)
    img = np.asarray(img)

    # Statistics of the images the model was trained on
    mean_seg = 0.5397013
    std_seg = 0.21162419

    ## Preprocess the image for the model
    img_cv = convertir_one_channel(img).astype(np.float32)
    # The network's input layer is fixed at 224x224x1
    img_cv = cv2.resize(img_cv, (224, 224), interpolation=cv2.INTER_AREA)
    # Normalization to [0, 1]
    maxi = np.max(img_cv)
    mini = np.min(img_cv)
    img_N = (img_cv - mini) / (maxi - mini)
    # Standardization with the training statistics
    img_P = (img_N - mean_seg) / std_seg

    ## Feed the array to the model as a batch of one: (1, 224, 224, 1)
    mask = model.predict(np.expand_dims(np.expand_dims(img_P, axis=-1), axis=0))
    # Drop the batch and channel axes to get a (224, 224) probability map
    mask = np.squeeze(mask)
    predicted = (mask > 0.5).astype(np.float32)

    ## Resize the mask back to the original image size and draw the segmentation contours
    predicted = cv2.resize(
        predicted, (img.shape[1], img.shape[0]), interpolation=cv2.INTER_LANCZOS4
    )
    # Lanczos interpolation can overshoot [0, 1], so clip before converting to uint8
    mask = np.uint8(np.clip(predicted, 0, 1) * 255)
    _, mask = cv2.threshold(
        mask, thresh=0, maxval=255, type=cv2.THRESH_BINARY + cv2.THRESH_OTSU
    )
    kernel = np.ones((5, 5), dtype=np.float32)
    # Opening removes small specks; closing fills small holes
    mask = cv2.morphologyEx(mask, cv2.MORPH_OPEN, kernel, iterations=1)
    mask = cv2.morphologyEx(mask, cv2.MORPH_CLOSE, kernel, iterations=1)
    cnts, hierarchy = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    # Draw the contours in red on an RGB copy of the grayscale image
    output = cv2.drawContours(
        convertir_rgb(convertir_one_channel(img)), cnts, -1, (255, 0, 0), 3
    )

    ## If we obtained a result, show it in the interface
    if output is not None:
        st.subheader("Segmentation:")
        st.write(output.shape)
        st.image(output, width=850)

st.markdown("Thanks for using our demo! See you soon")
```
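The preprocessing step in app.py first min-max normalizes the grayscale image to [0, 1] and then standardizes it with the training-set mean and standard deviation. Below is a minimal pure-Python sketch of that arithmetic on a flat list of pixel values. It assumes the `mean_seg`/`std_seg` constants from app.py. `preprocess` is a hypothetical helper. The constant-image guard is an addition of this sketch; the app itself would divide by zero in that case.

```python
# Training-set statistics copied from app.py (mean_seg, std_seg)
MEAN_SEG = 0.5397013
STD_SEG = 0.21162419


def preprocess(pixels, mean=MEAN_SEG, std=STD_SEG):
    """Min-max normalize to [0, 1], then standardize with the training mean/std.

    Hypothetical helper mirroring the NumPy code in app.py on a flat list.
    """
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # Guard added in this sketch: a constant image would otherwise divide by zero
        normalized = [0.0] * len(pixels)
    else:
        normalized = [(p - lo) / (hi - lo) for p in pixels]
    return [(n - mean) / std for n in normalized]


if __name__ == "__main__":
    # A dark, a mid-gray and a bright pixel
    print([round(v, 3) for v in preprocess([0, 51, 255])])
```

After this step, the darkest pixel maps to `-mean/std` and the brightest to `(1 - mean)/std`, whatever the original bit depth.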

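After resizing, app.py re-binarizes the mask with `cv2.THRESH_OTSU`. Otsu's method picks the threshold that maximizes the between-class variance of the pixel histogram. The pure-Python sketch below illustrates that selection rule only. It is not OpenCV's implementation, and tie-breaking may differ. `otsu_threshold` and `binarize` are hypothetical names, and pixels are assumed to be integers in 0..255, as in the uint8 mask.

```python
def otsu_threshold(pixels):
    """Return the threshold t in 0..255 that maximizes between-class variance.

    Pixels <= t form the first class and pixels > t the second, matching
    cv2.THRESH_BINARY's rule "value > thresh -> maxval".
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of those pixel values
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so t does not split the pixels
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:  # strict '>' keeps the first maximum
            best_t, best_var = t, between
    return best_t


def binarize(pixels, t, maxval=255):
    """Apply the THRESH_BINARY rule with threshold t."""
    return [maxval if p > t else 0 for p in pixels]
```

On a mask that is mostly 0 and 255 with a few interpolated values near the edges, the chosen threshold separates the near-zero pixels from the near-255 ones.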

assets/Examples/Lung_1.png

ADDED


assets/Examples/Lung_2.png

ADDED


assets/Examples/Lung_3.png

ADDED


assets/Examples/Lung_4.png

ADDED


requirements.txt

ADDED

```
tensorflow
numpy
Pillow
scipy
opencv-python-headless
```

utils/metrics.py

ADDED

```python
from tensorflow.keras import backend as K
from tensorflow.keras.losses import binary_crossentropy
import numpy as np
import tensorflow as tf


def dice_coef(y_true, y_pred):
    # Soft Dice with smoothing constant 1. The sums use axis=-1 (the channel
    # axis), so for (batch, 224, 224, 1) masks this is a per-pixel score that
    # is then averaged.
    smooth = 1
    intersection = K.sum(K.abs(y_true * y_pred), axis=-1)
    return (2. * intersection + smooth) / (K.sum(K.square(y_true), -1) + K.sum(K.square(y_pred), -1) + smooth)


def dice_coef_loss(y_true, y_pred):
    return 1 - dice_coef(y_true, y_pred)


def iou(y_true, y_pred):
    # Evaluated in NumPy through tf.numpy_function. The sums run over the whole
    # batch, so this yields a single IoU for all masks in the batch.
    def f(y_true, y_pred):
        intersection = (y_true * y_pred).sum()
        smooth = 1
        union = y_true.sum() + y_pred.sum() - intersection
        x = (intersection + smooth) / (union + smooth)
        x = x.astype(np.float32)
        return x
    return tf.numpy_function(f, [y_true, y_pred], tf.float32)
```
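For intuition, the two overlap metrics in utils/metrics.py can be written in plain Python for flat binary masks, using the same smoothing constant of 1. This is a simplified sketch. Unlike `dice_coef` above, it reduces over the whole mask instead of only the last axis. `dice_flat` and `iou_flat` are hypothetical names.

```python
def dice_flat(y_true, y_pred, smooth=1.0):
    """Soft Dice over a whole flat mask: (2*|A∩B| + s) / (sum A^2 + sum B^2 + s)."""
    inter = sum(abs(t * p) for t, p in zip(y_true, y_pred))
    denom = sum(t * t for t in y_true) + sum(p * p for p in y_pred)
    return (2.0 * inter + smooth) / (denom + smooth)


def iou_flat(y_true, y_pred, smooth=1.0):
    """IoU over a whole flat mask: (|A∩B| + s) / (|A| + |B| - |A∩B| + s)."""
    inter = sum(t * p for t, p in zip(y_true, y_pred))
    union = sum(y_true) + sum(y_pred) - inter
    return (inter + smooth) / (union + smooth)


if __name__ == "__main__":
    truth = [1, 1, 1, 1, 0, 0, 0, 0]
    pred = [1, 1, 0, 0, 1, 0, 0, 0]
    print(dice_flat(truth, pred), iou_flat(truth, pred))
```

With 2 overlapping pixels, 4 true and 3 predicted, Dice is (4 + 1) / (7 + 1) and IoU is (2 + 1) / (5 + 1). Because of the smoothing term, two empty masks score 1.0 instead of dividing by zero.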

utils/model.py

ADDED

```python
import tensorflow as tf
# Package-qualified import: app.py imports this module as utils.model, so a
# bare "from metrics import *" would fail with ModuleNotFoundError
from utils.metrics import dice_coef, dice_coef_loss, iou


# Segmentation model
def Unet_3():
    inputs = tf.keras.layers.Input(shape=(224, 224, 1), name='input')
    #s = tf.keras.layers.Lambda(lambda x: x / 255)(inputs)

    # Contraction path
    c1 = tf.keras.layers.Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(inputs)
    c1 = tf.keras.layers.Dropout(0.1)(c1)
    c1 = tf.keras.layers.Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c1)
    p1 = tf.keras.layers.MaxPooling2D((2, 2))(c1)

    c2 = tf.keras.layers.Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p1)
    c2 = tf.keras.layers.Dropout(0.1)(c2)
    c2 = tf.keras.layers.Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c2)
    p2 = tf.keras.layers.MaxPooling2D((2, 2))(c2)

    c3 = tf.keras.layers.Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p2)
    c3 = tf.keras.layers.Dropout(0.2)(c3)
    c3 = tf.keras.layers.Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c3)
    p3 = tf.keras.layers.MaxPooling2D((2, 2))(c3)

    c4 = tf.keras.layers.Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p3)
    c4 = tf.keras.layers.Dropout(0.2)(c4)
    c4 = tf.keras.layers.Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c4)
    p4 = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(c4)

    c5 = tf.keras.layers.Conv2D(1024, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(p4)
    c5 = tf.keras.layers.Dropout(0.3)(c5)
    c5 = tf.keras.layers.Conv2D(1024, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c5)

    # Expansive path
    u6 = tf.keras.layers.Conv2DTranspose(512, (2, 2), strides=(2, 2), padding='same')(c5)
    u6 = tf.keras.layers.concatenate([u6, c4])
    c6 = tf.keras.layers.Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u6)
    c6 = tf.keras.layers.Dropout(0.2)(c6)
    c6 = tf.keras.layers.Conv2D(512, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c6)

    u7 = tf.keras.layers.Conv2DTranspose(256, (2, 2), strides=(2, 2), padding='same')(c6)
    u7 = tf.keras.layers.concatenate([u7, c3])
    c7 = tf.keras.layers.Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u7)
    c7 = tf.keras.layers.Dropout(0.2)(c7)
    c7 = tf.keras.layers.Conv2D(256, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c7)

    u8 = tf.keras.layers.Conv2DTranspose(128, (2, 2), strides=(2, 2), padding='same')(c7)
    u8 = tf.keras.layers.concatenate([u8, c2])
    c8 = tf.keras.layers.Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u8)
    c8 = tf.keras.layers.Dropout(0.1)(c8)
    c8 = tf.keras.layers.Conv2D(128, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c8)

    u9 = tf.keras.layers.Conv2DTranspose(64, (2, 2), strides=(2, 2), padding='same')(c8)
    u9 = tf.keras.layers.concatenate([u9, c1], axis=3)
    c9 = tf.keras.layers.Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(u9)
    c9 = tf.keras.layers.Dropout(0.1)(c9)
    c9 = tf.keras.layers.Conv2D(64, (3, 3), activation='relu', kernel_initializer='he_normal', padding='same')(c9)

    # 1x1 convolution with a sigmoid: one foreground probability per pixel
    outputs = tf.keras.layers.Conv2D(1, (1, 1), activation='sigmoid')(c9)

    model = tf.keras.Model(inputs=[inputs], outputs=[outputs])
    model.compile(optimizer='adam', loss=dice_coef_loss, metrics=['accuracy', dice_coef, iou])
    print('Model imported successfully')
    return model
```
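`Unet_3` pools four times by 2 and upsamples four times with stride-2 transposed convolutions. Each skip connection therefore lines up only if the input side is divisible by 2^4 = 16, which 224 is. The sketch below traces the spatial size and channel count through the encoder, bottleneck and decoder defined above. It does bookkeeping only and builds no layers. `trace_unet` is a hypothetical helper.

```python
def trace_unet(size=224, base_filters=64, depth=4):
    """List (stage, side length, channels) for a U-Net laid out like Unet_3.

    'same' padding keeps the side length through each Conv2D; each
    MaxPooling2D((2, 2)) halves it and each stride-2 Conv2DTranspose doubles it.
    """
    if size % (2 ** depth) != 0:
        raise ValueError(f"input side {size} must be divisible by {2 ** depth}")
    stages = []
    side, filters = size, base_filters
    for level in range(1, depth + 1):            # encoder blocks c1..c4
        stages.append((f"c{level}", side, filters))
        side //= 2
        filters *= 2
    stages.append((f"c{depth + 1}", side, filters))  # bottleneck c5
    for level in range(depth + 2, 2 * depth + 2):    # decoder blocks c6..c9
        side *= 2
        filters //= 2
        stages.append((f"c{level}", side, filters))
    stages.append(("outputs", side, 1))  # 1x1 conv + sigmoid
    return stages


if __name__ == "__main__":
    for name, side, channels in trace_unet():
        print(f"{name:>7}: {side}x{side}x{channels}")
```

The bottleneck `c5` works on 14x14x1024 feature maps, and the output returns to 224x224x1. That is why app.py resizes inputs to 224x224 before calling `model.predict`.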

utils/weights/Unet_finetune_v3.h5

ADDED

```
version https://git-lfs.github.com/spec/v1
oid sha256:a50e633c4159865871d47b43b3ba4e33f81ed89ef7a57ea802b1e518ba56194e
size 372596200
```
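The weights file is committed as a Git LFS pointer. The repository stores only the spec version, the SHA-256 `oid` and the size in bytes (about 373 MB), while the payload itself lives in LFS storage. The minimal sketch below uses only the standard library to check a downloaded file against such a pointer. `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, not part of Git LFS.

```python
import hashlib
import os


def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(path, pointer_text, chunk_size=1 << 20):
    """Return True if the file at `path` has the pointer's size and sha256 oid."""
    fields = parse_lfs_pointer(pointer_text)
    algo, _, expected = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    if os.path.getsize(path) != int(fields["size"]):
        return False  # cheap size check before hashing hundreds of MB
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Hash in chunks so large weight files are not loaded into memory at once
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected
```

If only the pointer were checked out instead of the real object, `model.load_weights` in app.py would be handed a three-line text file rather than an HDF5 file and would fail.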