Spaces:

Runtime error

Runtime error

Ahsen Khaliq

commited on

Commit

•

47c361d

1

Parent(s):

5a02527

Upload ParallelWaveGAN/README.md

Browse files- ParallelWaveGAN/README.md +461 -0

ParallelWaveGAN/README.md

ADDED

|

@@ -0,0 +1,461 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Parallel WaveGAN implementation with Pytorch

|

| 2 |

+

|

| 3 |

+

[](https://pypi.org/project/parallel-wavegan/)   [](https://colab.research.google.com/github/espnet/notebook/blob/master/espnet2_tts_realtime_demo.ipynb)

|

| 4 |

+

|

| 5 |

+

This repository provides **UNOFFICIAL** pytorch implementations of the following models:

|

| 6 |

+

- [Parallel WaveGAN](https://arxiv.org/abs/1910.11480)

|

| 7 |

+

- [MelGAN](https://arxiv.org/abs/1910.06711)

|

| 8 |

+

- [Multiband-MelGAN](https://arxiv.org/abs/2005.05106)

|

| 9 |

+

- [HiFi-GAN](https://arxiv.org/abs/2010.05646)

|

| 10 |

+

- [StyleMelGAN](https://arxiv.org/abs/2011.01557)

|

| 11 |

+

|

| 12 |

+

You can combine these state-of-the-art non-autoregressive models to build your own great vocoder!

|

| 13 |

+

|

| 14 |

+

Please check our samples in [our demo HP](https://kan-bayashi.github.io/ParallelWaveGAN).

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

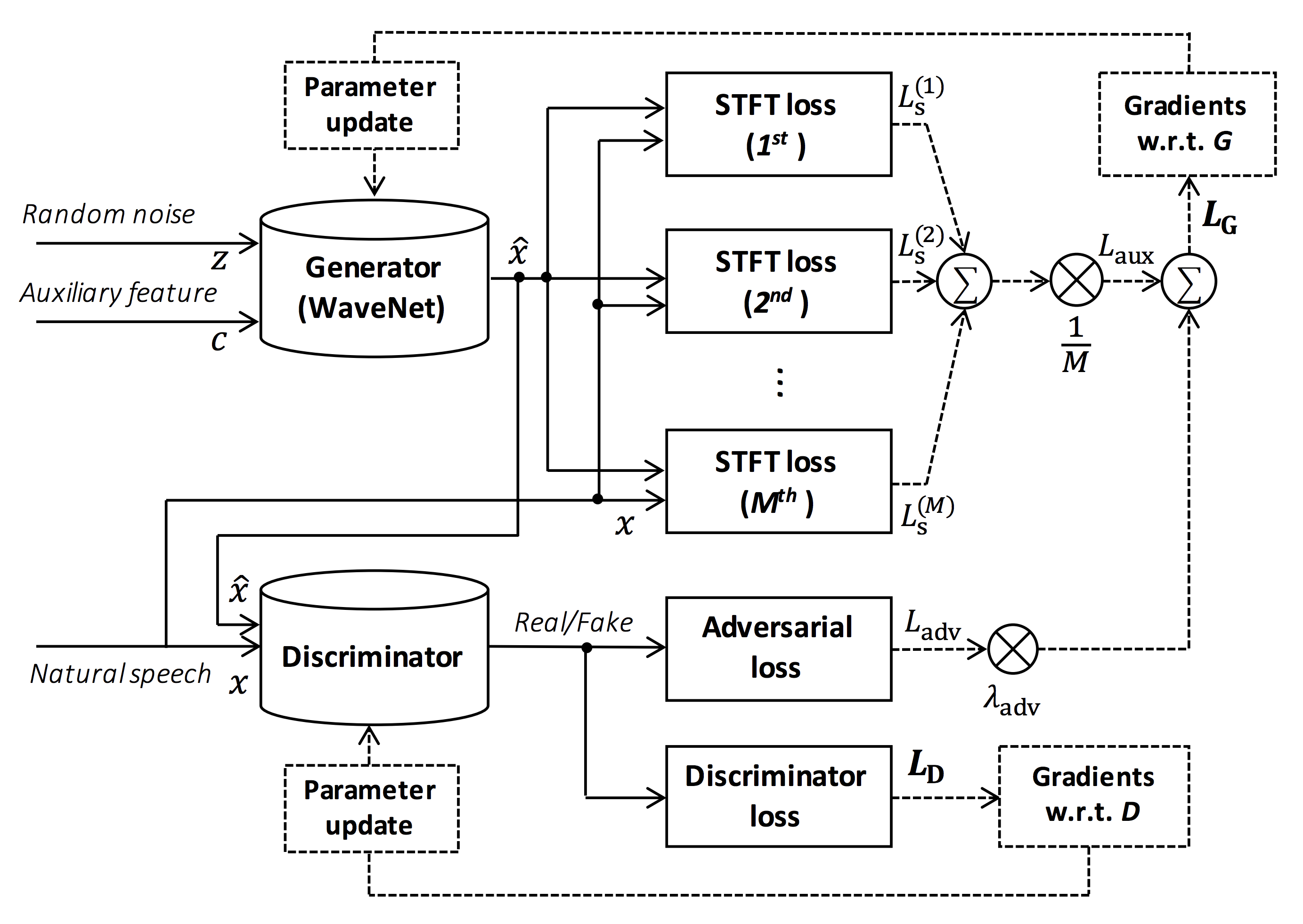

> Source of the figure: https://arxiv.org/pdf/1910.11480.pdf

|

| 19 |

+

|

| 20 |

+

The goal of this repository is to provide real-time neural vocoder, which is compatible with [ESPnet-TTS](https://github.com/espnet/espnet).

|

| 21 |

+

Also, this repository can be combined with [NVIDIA/tacotron2](https://github.com/NVIDIA/tacotron2)-based implementation (See [this comment](https://github.com/kan-bayashi/ParallelWaveGAN/issues/169#issuecomment-649320778)).

|

| 22 |

+

|

| 23 |

+

You can try the real-time end-to-end text-to-speech demonstration in Google Colab!

|

| 24 |

+

- Real-time demonstration with ESPnet2 [](https://colab.research.google.com/github/espnet/notebook/blob/master/espnet2_tts_realtime_demo.ipynb)

|

| 25 |

+

- Real-time demonstration with ESPnet1 [](https://colab.research.google.com/github/espnet/notebook/blob/master/tts_realtime_demo.ipynb)

|

| 26 |

+

|

| 27 |

+

## What's new

|

| 28 |

+

|

| 29 |

+

- 2021/08/24 Add more pretrained models of StyleMelGAN and HiFi-GAN.

|

| 30 |

+

- 2021/08/07 Add initial pretrained models of StyleMelGAN and HiFi-GAN.

|

| 31 |

+

- 2021/08/03 Support [StyleMelGAN](https://arxiv.org/abs/2011.01557) generator and discriminator!

|

| 32 |

+

- 2021/08/02 Support [HiFi-GAN](https://arxiv.org/abs/2010.05646) generator and discriminator!

|

| 33 |

+

- 2020/10/07 [JSSS](https://sites.google.com/site/shinnosuketakamichi/research-topics/jsss_corpus) recipe is available!

|

| 34 |

+

- 2020/08/19 [Real-time demo with ESPnet2](https://colab.research.google.com/github/espnet/notebook/blob/master/espnet2_tts_realtime_demo.ipynb) is available!

|

| 35 |

+

- 2020/05/29 [VCTK, JSUT, and CSMSC multi-band MelGAN pretrained model](#Results) is available!

|

| 36 |

+

- 2020/05/27 [New LJSpeech multi-band MelGAN pretrained model](#Results) is available!

|

| 37 |

+

- 2020/05/24 [LJSpeech full-band MelGAN pretrained model](#Results) is available!

|

| 38 |

+

- 2020/05/22 [LJSpeech multi-band MelGAN pretrained model](#Results) is available!

|

| 39 |

+

- 2020/05/16 [Multi-band MelGAN](https://arxiv.org/abs/2005.05106) is available!

|

| 40 |

+

- 2020/03/25 [LibriTTS pretrained models](#Results) are available!

|

| 41 |

+

- 2020/03/17 [Tensorflow conversion example notebook](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/notebooks/convert_melgan_from_pytorch_to_tensorflow.ipynb) is available (Thanks, [@dathudeptrai](https://github.com/dathudeptrai))!

|

| 42 |

+

- 2020/03/16 [LibriTTS recipe](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1) is available!

|

| 43 |

+

- 2020/03/12 [PWG G + MelGAN D + STFT-loss samples](#Results) are available!

|

| 44 |

+

- 2020/03/12 Multi-speaker English recipe [egs/vctk/voc1](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1) is available!

|

| 45 |

+

- 2020/02/22 [MelGAN G + MelGAN D + STFT-loss samples](#Results) are available!

|

| 46 |

+

- 2020/02/12 Support [MelGAN](https://arxiv.org/abs/1910.06711)'s discriminator!

|

| 47 |

+

- 2020/02/08 Support [MelGAN](https://arxiv.org/abs/1910.06711)'s generator!

|

| 48 |

+

|

| 49 |

+

## Requirements

|

| 50 |

+

|

| 51 |

+

This repository is tested on Ubuntu 20.04 with a GPU Titan V.

|

| 52 |

+

|

| 53 |

+

- Python 3.6+

|

| 54 |

+

- Cuda 10.0+

|

| 55 |

+

- CuDNN 7+

|

| 56 |

+

- NCCL 2+ (for distributed multi-gpu training)

|

| 57 |

+

- libsndfile (you can install via `sudo apt install libsndfile-dev` in ubuntu)

|

| 58 |

+

- jq (you can install via `sudo apt install jq` in ubuntu)

|

| 59 |

+

- sox (you can install via `sudo apt install sox` in ubuntu)

|

| 60 |

+

|

| 61 |

+

Different cuda version should be working but not explicitly tested.

|

| 62 |

+

All of the codes are tested on Pytorch 1.4, 1.5.1, 1.7.1, 1.8.1, and 1.9.

|

| 63 |

+

|

| 64 |

+

Pytorch 1.6 works but there are some issues in cpu mode (See #198).

|

| 65 |

+

|

| 66 |

+

## Setup

|

| 67 |

+

|

| 68 |

+

You can select the installation method from two alternatives.

|

| 69 |

+

|

| 70 |

+

### A. Use pip

|

| 71 |

+

|

| 72 |

+

```bash

|

| 73 |

+

$ git clone https://github.com/kan-bayashi/ParallelWaveGAN.git

|

| 74 |

+

$ cd ParallelWaveGAN

|

| 75 |

+

$ pip install -e .

|

| 76 |

+

# If you want to use distributed training, please install

|

| 77 |

+

# apex manually by following https://github.com/NVIDIA/apex

|

| 78 |

+

$ ...

|

| 79 |

+

```

|

| 80 |

+

Note that your cuda version must be exactly matched with the version used for the pytorch binary to install apex.

|

| 81 |

+

To install pytorch compiled with different cuda version, see `tools/Makefile`.

|

| 82 |

+

|

| 83 |

+

### B. Make virtualenv

|

| 84 |

+

|

| 85 |

+

```bash

|

| 86 |

+

$ git clone https://github.com/kan-bayashi/ParallelWaveGAN.git

|

| 87 |

+

$ cd ParallelWaveGAN/tools

|

| 88 |

+

$ make

|

| 89 |

+

# If you want to use distributed training, please run following

|

| 90 |

+

# command to install apex.

|

| 91 |

+

$ make apex

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

Note that we specify cuda version used to compile pytorch wheel.

|

| 95 |

+

If you want to use different cuda version, please check `tools/Makefile` to change the pytorch wheel to be installed.

|

| 96 |

+

|

| 97 |

+

## Recipe

|

| 98 |

+

|

| 99 |

+

This repository provides [Kaldi](https://github.com/kaldi-asr/kaldi)-style recipes, as the same as [ESPnet](https://github.com/espnet/espnet).

|

| 100 |

+

Currently, the following recipes are supported.

|

| 101 |

+

|

| 102 |

+

- [LJSpeech](https://keithito.com/LJ-Speech-Dataset/): English female speaker

|

| 103 |

+

- [JSUT](https://sites.google.com/site/shinnosuketakamichi/publication/jsut): Japanese female speaker

|

| 104 |

+

- [JSSS](https://sites.google.com/site/shinnosuketakamichi/research-topics/jsss_corpus): Japanese female speaker

|

| 105 |

+

- [CSMSC](https://www.data-baker.com/open_source.html): Mandarin female speaker

|

| 106 |

+

- [CMU Arctic](http://www.festvox.org/cmu_arctic/): English speakers

|

| 107 |

+

- [JNAS](http://research.nii.ac.jp/src/en/JNAS.html): Japanese multi-speaker

|

| 108 |

+

- [VCTK](https://homepages.inf.ed.ac.uk/jyamagis/page3/page58/page58.html): English multi-speaker

|

| 109 |

+

- [LibriTTS](https://arxiv.org/abs/1904.02882): English multi-speaker

|

| 110 |

+

- [YesNo](https://arxiv.org/abs/1904.02882): English speaker (For debugging)

|

| 111 |

+

|

| 112 |

+

To run the recipe, please follow the below instruction.

|

| 113 |

+

|

| 114 |

+

```bash

|

| 115 |

+

# Let us move on the recipe directory

|

| 116 |

+

$ cd egs/ljspeech/voc1

|

| 117 |

+

|

| 118 |

+

# Run the recipe from scratch

|

| 119 |

+

$ ./run.sh

|

| 120 |

+

|

| 121 |

+

# You can change config via command line

|

| 122 |

+

$ ./run.sh --conf <your_customized_yaml_config>

|

| 123 |

+

|

| 124 |

+

# You can select the stage to start and stop

|

| 125 |

+

$ ./run.sh --stage 2 --stop_stage 2

|

| 126 |

+

|

| 127 |

+

# If you want to specify the gpu

|

| 128 |

+

$ CUDA_VISIBLE_DEVICES=1 ./run.sh --stage 2

|

| 129 |

+

|

| 130 |

+

# If you want to resume training from 10000 steps checkpoint

|

| 131 |

+

$ ./run.sh --stage 2 --resume <path>/<to>/checkpoint-10000steps.pkl

|

| 132 |

+

```

|

| 133 |

+

|

| 134 |

+

See more info about the recipes in [this README](./egs/README.md).

|

| 135 |

+

|

| 136 |

+

## Speed

|

| 137 |

+

|

| 138 |

+

The decoding speed is RTF = 0.016 with TITAN V, much faster than the real-time.

|

| 139 |

+

|

| 140 |

+

```bash

|

| 141 |

+

[decode]: 100%|██████████| 250/250 [00:30<00:00, 8.31it/s, RTF=0.0156]

|

| 142 |

+

2019-11-03 09:07:40,480 (decode:127) INFO: finished generation of 250 utterances (RTF = 0.016).

|

| 143 |

+

```

|

| 144 |

+

|

| 145 |

+

Even on the CPU (Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz 16 threads), it can generate less than the real-time.

|

| 146 |

+

|

| 147 |

+

```bash

|

| 148 |

+

[decode]: 100%|██████████| 250/250 [22:16<00:00, 5.35s/it, RTF=0.841]

|

| 149 |

+

2019-11-06 09:04:56,697 (decode:129) INFO: finished generation of 250 utterances (RTF = 0.734).

|

| 150 |

+

```

|

| 151 |

+

|

| 152 |

+

If you use MelGAN's generator, the decoding speed will be further faster.

|

| 153 |

+

|

| 154 |

+

```bash

|

| 155 |

+

# On CPU (Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz 16 threads)

|

| 156 |

+

[decode]: 100%|██████████| 250/250 [04:00<00:00, 1.04it/s, RTF=0.0882]

|

| 157 |

+

2020-02-08 10:45:14,111 (decode:142) INFO: Finished generation of 250 utterances (RTF = 0.137).

|

| 158 |

+

|

| 159 |

+

# On GPU (TITAN V)

|

| 160 |

+

[decode]: 100%|██████████| 250/250 [00:06<00:00, 36.38it/s, RTF=0.00189]

|

| 161 |

+

2020-02-08 05:44:42,231 (decode:142) INFO: Finished generation of 250 utterances (RTF = 0.002).

|

| 162 |

+

```

|

| 163 |

+

|

| 164 |

+

If you use Multi-band MelGAN's generator, the decoding speed will be much further faster.

|

| 165 |

+

|

| 166 |

+

```bash

|

| 167 |

+

# On CPU (Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz 16 threads)

|

| 168 |

+

[decode]: 100%|██████████| 250/250 [01:47<00:00, 2.95it/s, RTF=0.048]

|

| 169 |

+

2020-05-22 15:37:19,771 (decode:151) INFO: Finished generation of 250 utterances (RTF = 0.059).

|

| 170 |

+

|

| 171 |

+

# On GPU (TITAN V)

|

| 172 |

+

[decode]: 100%|██████████| 250/250 [00:05<00:00, 43.67it/s, RTF=0.000928]

|

| 173 |

+

2020-05-22 15:35:13,302 (decode:151) INFO: Finished generation of 250 utterances (RTF = 0.001).

|

| 174 |

+

```

|

| 175 |

+

|

| 176 |

+

If you want to accelerate the inference more, it is worthwhile to try the conversion from pytorch to tensorflow.

|

| 177 |

+

The example of the conversion is available in [the notebook](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/notebooks/convert_melgan_from_pytorch_to_tensorflow.ipynb) (Provided by [@dathudeptrai](https://github.com/dathudeptrai)).

|

| 178 |

+

|

| 179 |

+

## Results

|

| 180 |

+

|

| 181 |

+

Here the results are summarized in the table.

|

| 182 |

+

You can listen to the samples and download pretrained models from the link to our google drive.

|

| 183 |

+

|

| 184 |

+

| Model | Conf | Lang | Fs [Hz] | Mel range [Hz] | FFT / Hop / Win [pt] | # iters |

|

| 185 |

+

| :----------------------------------------------------------------------------------------------------------- | :-------------------------------------------------------------------------------------------------------------------------: | :---: | :-----: | :------------: | :------------------: | :-----: |

|

| 186 |

+

| [ljspeech_parallel_wavegan.v1](https://drive.google.com/open?id=1wdHr1a51TLeo4iKrGErVKHVFyq6D17TU) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/parallel_wavegan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 400k |

|

| 187 |

+

| [ljspeech_parallel_wavegan.v1.long](https://drive.google.com/open?id=1XRn3s_wzPF2fdfGshLwuvNHrbgD0hqVS) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/parallel_wavegan.v1.long.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 188 |

+

| [ljspeech_parallel_wavegan.v1.no_limit](https://drive.google.com/open?id=1NoD3TCmKIDHHtf74YsScX8s59aZFOFJA) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/parallel_wavegan.v1.no_limit.yaml) | EN | 22.05k | None | 1024 / 256 / None | 400k |

|

| 189 |

+

| [ljspeech_parallel_wavegan.v3](https://drive.google.com/open?id=1a5Q2KiJfUQkVFo5Bd1IoYPVicJGnm7EL) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/parallel_wavegan.v3.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 3M |

|

| 190 |

+

| [ljspeech_melgan.v1](https://drive.google.com/open?id=1z0vO1UMFHyeCdCLAmd7Moewi4QgCb07S) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 400k |

|

| 191 |

+

| [ljspeech_melgan.v1.long](https://drive.google.com/open?id=1RqNGcFO7Geb6-4pJtMbC9-ph_WiWA14e) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan.v1.long.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 192 |

+

| [ljspeech_melgan_large.v1](https://drive.google.com/open?id=1KQt-gyxbG6iTZ4aVn9YjQuaGYjAleYs8) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan_large.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 400k |

|

| 193 |

+

| [ljspeech_melgan_large.v1.long](https://drive.google.com/open?id=1ogEx-wiQS7HVtdU0_TmlENURIe4v2erC) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan_large.v1.long.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 194 |

+

| [ljspeech_melgan.v3](https://drive.google.com/open?id=1eXkm_Wf1YVlk5waP4Vgqd0GzMaJtW3y5) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan.v3.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 2M |

|

| 195 |

+

| [ljspeech_melgan.v3.long](https://drive.google.com/open?id=1u1w4RPefjByX8nfsL59OzU2KgEksBhL1) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/melgan.v3.long.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 4M |

|

| 196 |

+

| [ljspeech_full_band_melgan.v1](https://drive.google.com/open?id=1RQqkbnoow0srTDYJNYA7RJ5cDRC5xB-t) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/full_band_melgan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 197 |

+

| [ljspeech_full_band_melgan.v2](https://drive.google.com/open?id=1d9DWOzwOyxT1K5lPnyMqr2nED62vlHaX) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/full_band_melgan.v2.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 198 |

+

| [ljspeech_multi_band_melgan.v1](https://drive.google.com/open?id=1ls_YxCccQD-v6ADbG6qXlZ8f30KrrhLT) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/multi_band_melgan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 199 |

+

| [ljspeech_multi_band_melgan.v2](https://drive.google.com/open?id=1wevYP2HQ7ec2fSixTpZIX0sNBtYZJz_I) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/multi_band_melgan.v2.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1M |

|

| 200 |

+

| [ljspeech_hifigan.v1](https://drive.google.com/open?id=18_R5-pGHDIbIR1QvrtBZwVRHHpBy5xiZ) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/hifigan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 2.5M |

|

| 201 |

+

| [ljspeech_style_melgan.v1](https://drive.google.com/open?id=1WFlVknhyeZhTT5R6HznVJCJ4fwXKtb3B) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/ljspeech/voc1/conf/style_melgan.v1.yaml) | EN | 22.05k | 80-7600 | 1024 / 256 / None | 1.5M |

|

| 202 |

+

| [jsut_parallel_wavegan.v1](https://drive.google.com/open?id=1UDRL0JAovZ8XZhoH0wi9jj_zeCKb-AIA) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/jsut/voc1/conf/parallel_wavegan.v1.yaml) | JP | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 203 |

+

| [jsut_multi_band_melgan.v2](https://drive.google.com/open?id=1E4fe0c5gMLtmSS0Hrzj-9nUbMwzke4PS) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/jsut/voc1/conf/multi_band_melgan.v2.yaml) | JP | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 204 |

+

| [just_hifigan.v1](https://drive.google.com/open?id=1TY88141UWzQTAQXIPa8_g40QshuqVj6Y) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/jsut/voc1/conf/hifigan.v1.yaml) | JP | 24k | 80-7600 | 2048 / 300 / 1200 | 2.5M |

|

| 205 |

+

| [just_style_melgan.v1](https://drive.google.com/open?id=1-qKAC0zLya6iKMngDERbSzBYD4JHmGdh) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/jsut/voc1/conf/style_melgan.v1.yaml) | JP | 24k | 80-7600 | 2048 / 300 / 1200 | 1.5M |

|

| 206 |

+

| [csmsc_parallel_wavegan.v1](https://drive.google.com/open?id=1C2nu9nOFdKcEd-D9xGquQ0bCia0B2v_4) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/csmsc/voc1/conf/parallel_wavegan.v1.yaml) | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 207 |

+

| [csmsc_multi_band_melgan.v2](https://drive.google.com/open?id=1F7FwxGbvSo1Rnb5kp0dhGwimRJstzCrz) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/csmsc/voc1/conf/multi_band_melgan.v2.yaml) | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 208 |

+

| [csmsc_hifigan.v1](https://drive.google.com/open?id=1gTkVloMqteBfSRhTrZGdOBBBRsGd3qt8) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/csmsc/voc1/conf/hifigan.v1.yaml) | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | 2.5M |

|

| 209 |

+

| [csmsc_style_melgan.v1](https://drive.google.com/open?id=1gl4P5W_ST_nnv0vjurs7naVm5UJqkZIn) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/csmsc/voc1/conf/style_melgan.v1.yaml) | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | 1.5M |

|

| 210 |

+

| [arctic_slt_parallel_wavegan.v1](https://drive.google.com/open?id=1xG9CmSED2TzFdklD6fVxzf7kFV2kPQAJ) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/arctic/voc1/conf/parallel_wavegan.v1.yaml) | EN | 16k | 80-7600 | 1024 / 256 / None | 400k |

|

| 211 |

+

| [jnas_parallel_wavegan.v1](https://drive.google.com/open?id=1n_hkxPxryVXbp6oHM1NFm08q0TcoDXz1) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/jnas/voc1/conf/parallel_wavegan.v1.yaml) | JP | 16k | 80-7600 | 1024 / 256 / None | 400k |

|

| 212 |

+

| [vctk_parallel_wavegan.v1](https://drive.google.com/open?id=1dGTu-B7an2P5sEOepLPjpOaasgaSnLpi) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1/conf/parallel_wavegan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 213 |

+

| [vctk_parallel_wavegan.v1.long](https://drive.google.com/open?id=1qoocM-VQZpjbv5B-zVJpdraazGcPL0So) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1/conf/parallel_wavegan.v1.long.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 214 |

+

| [vctk_multi_band_melgan.v2](https://drive.google.com/open?id=17EkB4hSKUEDTYEne-dNHtJT724hdivn4) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1/conf/multi_band_melgan.v2.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 215 |

+

| [vctk_hifigan.v1](https://drive.google.com/open?id=17fu7ukS97m-8StXPc6ltW8a3hr0fsQBP) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1/conf/hifigan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 2.5M |

|

| 216 |

+

| [vctk_style_melgan.v1](https://drive.google.com/open?id=1kfJgzDgrOFYxTfVTNbTHcnyq--cc6plo) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/vctk/voc1/conf/style_melgan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1.5M |

|

| 217 |

+

| [libritts_parallel_wavegan.v1](https://drive.google.com/open?id=1pb18Nd2FCYWnXfStszBAEEIMe_EZUJV0) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/parallel_wavegan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 218 |

+

| [libritts_parallel_wavegan.v1.long](https://drive.google.com/open?id=15ibzv-uTeprVpwT946Hl1XUYDmg5Afwz) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/parallel_wavegan.v1.long.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 219 |

+

| [libritts_multi_band_melgan.v2](https://drive.google.com/open?id=1jfB15igea6tOQ0hZJGIvnpf3QyNhTLnq) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/multi_band_melgan.v2.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1M |

|

| 220 |

+

| [libritts_hifigan.v1](https://drive.google.com/open?id=10jBLsjQT3LvR-3GgPZpRvWIWvpGjzDnM) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/hifigan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 2.5M |

|

| 221 |

+

| [libritts_style_melgan.v1](https://drive.google.com/open?id=1OPpYbrqYOJ_hHNGSQHzUxz_QZWWBwV9r) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/style_melgan.v1.yaml) | EN | 24k | 80-7600 | 2048 / 300 / 1200 | 1.5M |

|

| 222 |

+

| [kss_parallel_wavegan.v1](https://drive.google.com/open?id=1n5kitXZqPHUr-veoUKCyfJvb3p1g0VlY) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/parallel_wavegan.v1.yaml) | KO | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 223 |

+

| [hui_acg_hokuspokus_parallel_wavegan.v1](https://drive.google.com/open?id=1rwzpIwb65xbW5fFPsqPWdforsk4U-vDg) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/parallel_wavegan.v1.yaml) | DE | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 224 |

+

| [ruslan_parallel_wavegan.v1](https://drive.google.com/open?id=1QGuesaRKGful0bUTTaFZdbjqHNhy2LpE) | [link](https://github.com/kan-bayashi/ParallelWaveGAN/blob/master/egs/libritts/voc1/conf/parallel_wavegan.v1.yaml) | RU | 24k | 80-7600 | 2048 / 300 / 1200 | 400k |

|

| 225 |

+

|

| 226 |

+

Please access at [our google drive](https://drive.google.com/open?id=1sd_QzcUNnbiaWq7L0ykMP7Xmk-zOuxTi) to check more results.

|

| 227 |

+

|

| 228 |

+

## How-to-use pretrained models

|

| 229 |

+

|

| 230 |

+

### Analysis-synthesis

|

| 231 |

+

|

| 232 |

+

Here the minimal code is shown to perform analysis-synthesis using the pretrained model.

|

| 233 |

+

|

| 234 |

+

```bash

|

| 235 |

+

# Please make sure you installed `parallel_wavegan`

|

| 236 |

+

# If not, please install via pip

|

| 237 |

+

$ pip install parallel_wavegan

|

| 238 |

+

|

| 239 |

+

# You can download the pretrained model from terminal

|

| 240 |

+

$ python << EOF

|

| 241 |

+

from parallel_wavegan.utils import download_pretrained_model

|

| 242 |

+

download_pretrained_model("<pretrained_model_tag>", "pretrained_model")

|

| 243 |

+

EOF

|

| 244 |

+

|

| 245 |

+

# You can get all of available pretrained models as follows:

|

| 246 |

+

$ python << EOF

|

| 247 |

+

from parallel_wavegan.utils import PRETRAINED_MODEL_LIST

|

| 248 |

+

print(PRETRAINED_MODEL_LIST.keys())

|

| 249 |

+

EOF

|

| 250 |

+

|

| 251 |

+

# Now you can find downloaded pretrained model in `pretrained_model/<pretrain_model_tag>/`

|

| 252 |

+

$ ls pretrain_model/<pretrain_model_tag>

|

| 253 |

+

checkpoint-400000steps.pkl config.yml stats.h5

|

| 254 |

+

|

| 255 |

+

# These files can also be downloaded manually from the above results

|

| 256 |

+

|

| 257 |

+

# Please put an audio file in `sample` directory to perform analysis-synthesis

|

| 258 |

+

$ ls sample/

|

| 259 |

+

sample.wav

|

| 260 |

+

|

| 261 |

+

# Then perform feature extraction -> feature normalization -> synthesis

|

| 262 |

+

$ parallel-wavegan-preprocess \

|

| 263 |

+

--config pretrain_model/<pretrain_model_tag>/config.yml \

|

| 264 |

+

--rootdir sample \

|

| 265 |

+

--dumpdir dump/sample/raw

|

| 266 |

+

100%|████████████████████████████████████████| 1/1 [00:00<00:00, 914.19it/s]

|

| 267 |

+

$ parallel-wavegan-normalize \

|

| 268 |

+

--config pretrain_model/<pretrain_model_tag>/config.yml \

|

| 269 |

+

--rootdir dump/sample/raw \

|

| 270 |

+

--dumpdir dump/sample/norm \

|

| 271 |

+

--stats pretrain_model/<pretrain_model_tag>/stats.h5

|

| 272 |

+

2019-11-13 13:44:29,574 (normalize:87) INFO: the number of files = 1.

|

| 273 |

+

100%|████████████████████████████████████████| 1/1 [00:00<00:00, 513.13it/s]

|

| 274 |

+

$ parallel-wavegan-decode \

|

| 275 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 276 |

+

--dumpdir dump/sample/norm \

|

| 277 |

+

--outdir sample

|

| 278 |

+

2019-11-13 13:44:31,229 (decode:91) INFO: the number of features to be decoded = 1.

|

| 279 |

+

[decode]: 100%|███████████████████| 1/1 [00:00<00:00, 18.33it/s, RTF=0.0146]

|

| 280 |

+

2019-11-13 13:44:37,132 (decode:129) INFO: finished generation of 1 utterances (RTF = 0.015).

|

| 281 |

+

|

| 282 |

+

# You can skip normalization step (on-the-fly normalization, feature extraction -> synthesis)

|

| 283 |

+

$ parallel-wavegan-preprocess \

|

| 284 |

+

--config pretrain_model/<pretrain_model_tag>/config.yml \

|

| 285 |

+

--rootdir sample \

|

| 286 |

+

--dumpdir dump/sample/raw

|

| 287 |

+

100%|████████████████████████████████████████| 1/1 [00:00<00:00, 914.19it/s]

|

| 288 |

+

$ parallel-wavegan-decode \

|

| 289 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 290 |

+

--dumpdir dump/sample/raw \

|

| 291 |

+

--normalize-before \

|

| 292 |

+

--outdir sample

|

| 293 |

+

2019-11-13 13:44:31,229 (decode:91) INFO: the number of features to be decoded = 1.

|

| 294 |

+

[decode]: 100%|███████████████████| 1/1 [00:00<00:00, 18.33it/s, RTF=0.0146]

|

| 295 |

+

2019-11-13 13:44:37,132 (decode:129) INFO: finished generation of 1 utterances (RTF = 0.015).

|

| 296 |

+

|

| 297 |

+

# you can find the generated speech in `sample` directory

|

| 298 |

+

$ ls sample

|

| 299 |

+

sample.wav sample_gen.wav

|

| 300 |

+

```

|

| 301 |

+

|

| 302 |

+

### Decoding with ESPnet-TTS model's features

|

| 303 |

+

|

| 304 |

+

Here, I show the procedure to generate waveforms with features generated by [ESPnet-TTS](https://github.com/espnet/espnet) models.

|

| 305 |

+

|

| 306 |

+

```bash

|

| 307 |

+

# Make sure you already finished running the recipe of ESPnet-TTS.

|

| 308 |

+

# You must use the same feature settings for both Text2Mel and Mel2Wav models.

|

| 309 |

+

# Let us move on "ESPnet" recipe directory

|

| 310 |

+

$ cd /path/to/espnet/egs/<recipe_name>/tts1

|

| 311 |

+

$ pwd

|

| 312 |

+

/path/to/espnet/egs/<recipe_name>/tts1

|

| 313 |

+

|

| 314 |

+

# If you use ESPnet2, move on `egs2/`

|

| 315 |

+

$ cd /path/to/espnet/egs2/<recipe_name>/tts1

|

| 316 |

+

$ pwd

|

| 317 |

+

/path/to/espnet/egs2/<recipe_name>/tts1

|

| 318 |

+

|

| 319 |

+

# Please install this repository in ESPnet conda (or virtualenv) environment

|

| 320 |

+

$ . ./path.sh && pip install -U parallel_wavegan

|

| 321 |

+

|

| 322 |

+

# You can download the pretrained model from terminal

|

| 323 |

+

$ python << EOF

|

| 324 |

+

from parallel_wavegan.utils import download_pretrained_model

|

| 325 |

+

download_pretrained_model("<pretrained_model_tag>", "pretrained_model")

|

| 326 |

+

EOF

|

| 327 |

+

|

| 328 |

+

# You can get all of available pretrained models as follows:

|

| 329 |

+

$ python << EOF

|

| 330 |

+

from parallel_wavegan.utils import PRETRAINED_MODEL_LIST

|

| 331 |

+

print(PRETRAINED_MODEL_LIST.keys())

|

| 332 |

+

EOF

|

| 333 |

+

|

| 334 |

+

# You can find downloaded pretrained model in `pretrained_model/<pretrain_model_tag>/`

|

| 335 |

+

$ ls pretrain_model/<pretrain_model_tag>

|

| 336 |

+

checkpoint-400000steps.pkl config.yml stats.h5

|

| 337 |

+

|

| 338 |

+

# These files can also be downloaded manually from the above results

|

| 339 |

+

```

|

| 340 |

+

|

| 341 |

+

**Case 1**: If you use the same dataset for both Text2Mel and Mel2Wav

|

| 342 |

+

|

| 343 |

+

```bash

|

| 344 |

+

# In this case, you can directly use generated features for decoding.

|

| 345 |

+

# Please specify `feats.scp` path for `--feats-scp`, which is located in

|

| 346 |

+

# exp/<your_model_dir>/outputs_*_decode/<set_name>/feats.scp.

|

| 347 |

+

# Note that do not use outputs_*decode_denorm/<set_name>/feats.scp since

|

| 348 |

+

# it is de-normalized features (the input for PWG is normalized features).

|

| 349 |

+

$ parallel-wavegan-decode \

|

| 350 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 351 |

+

--feats-scp exp/<your_model_dir>/outputs_*_decode/<set_name>/feats.scp \

|

| 352 |

+

--outdir <path_to_outdir>

|

| 353 |

+

|

| 354 |

+

# In the case of ESPnet2, the generated feature can be found in

|

| 355 |

+

# exp/<your_model_dir>/decode_*/<set_name>/norm/feats.scp.

|

| 356 |

+

$ parallel-wavegan-decode \

|

| 357 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 358 |

+

--feats-scp exp/<your_model_dir>/decode_*/<set_name>/norm/feats.scp \

|

| 359 |

+

--outdir <path_to_outdir>

|

| 360 |

+

|

| 361 |

+

# You can find the generated waveforms in <path_to_outdir>/.

|

| 362 |

+

$ ls <path_to_outdir>

|

| 363 |

+

utt_id_1_gen.wav utt_id_2_gen.wav ... utt_id_N_gen.wav

|

| 364 |

+

```

|

| 365 |

+

|

| 366 |

+

**Case 2**: If you use different datasets for Text2Mel and Mel2Wav models

|

| 367 |

+

|

| 368 |

+

```bash

|

| 369 |

+

# In this case, you must provide `--normalize-before` option additionally.

|

| 370 |

+

# And use `feats.scp` of de-normalized generated features.

|

| 371 |

+

|

| 372 |

+

# ESPnet1 case

|

| 373 |

+

$ parallel-wavegan-decode \

|

| 374 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 375 |

+

--feats-scp exp/<your_model_dir>/outputs_*_decode_denorm/<set_name>/feats.scp \

|

| 376 |

+

--outdir <path_to_outdir> \

|

| 377 |

+

--normalize-before

|

| 378 |

+

|

| 379 |

+

# ESPnet2 case

|

| 380 |

+

$ parallel-wavegan-decode \

|

| 381 |

+

--checkpoint pretrain_model/<pretrain_model_tag>/checkpoint-400000steps.pkl \

|

| 382 |

+

--feats-scp exp/<your_model_dir>/decode_*/<set_name>/denorm/feats.scp \

|

| 383 |

+

--outdir <path_to_outdir> \

|

| 384 |

+

--normalize-before

|

| 385 |

+

|

| 386 |

+

# You can find the generated waveforms in <path_to_outdir>/.

|

| 387 |

+

$ ls <path_to_outdir>

|

| 388 |

+

utt_id_1_gen.wav utt_id_2_gen.wav ... utt_id_N_gen.wav

|

| 389 |

+

```

|

| 390 |

+

|

| 391 |

+

If you want to combine these models in python, you can try the real-time demonstration in Google Colab!

|

| 392 |

+

- Real-time demonstration with ESPnet2 [](https://colab.research.google.com/github/espnet/notebook/blob/master/espnet2_tts_realtime_demo.ipynb)

|

| 393 |

+

- Real-time demonstration with ESPnet1 [](https://colab.research.google.com/github/espnet/notebook/blob/master/tts_realtime_demo.ipynb)

|

| 394 |

+

|

| 395 |

+

### Decoding with dumped npy files

|

| 396 |

+

|

| 397 |

+

Sometimes we want to decode with dumped npy files, which are mel-spectrogram generated by TTS models.

|

| 398 |

+

Please make sure you used the same feature extraction settings of the pretrained vocoder (`fs`, `fft_size`, `hop_size`, `win_length`, `fmin`, and `fmax`).

|

| 399 |

+

Only the difference of `log_base` can be changed with some post-processings (we use log 10 instead of natural log as a default).

|

| 400 |

+

See detail in [the comment](https://github.com/kan-bayashi/ParallelWaveGAN/issues/169#issuecomment-649320778).

|

| 401 |

+

|

| 402 |

+

```bash

|

| 403 |

+

# Generate dummy npy file of mel-spectrogram

|

| 404 |

+

$ ipython

|

| 405 |

+

[ins] In [1]: import numpy as np

|

| 406 |

+

[ins] In [2]: x = np.random.randn(512, 80) # (#frames, #mels)

|

| 407 |

+

[ins] In [3]: np.save("dummy_1.npy", x)

|

| 408 |

+

[ins] In [4]: y = np.random.randn(256, 80) # (#frames, #mels)

|

| 409 |

+

[ins] In [5]: np.save("dummy_2.npy", y)

|

| 410 |

+

[ins] In [6]: exit

|

| 411 |

+

|

| 412 |

+

# Make scp file (key-path format)

|

| 413 |

+

$ find -name "*.npy" | awk '{print "dummy_" NR " " $1}' > feats.scp

|

| 414 |

+

|

| 415 |

+

# Check (<utt_id> <path>)

|

| 416 |

+

$ cat feats.scp

|

| 417 |

+

dummy_1 ./dummy_1.npy

|

| 418 |

+

dummy_2 ./dummy_2.npy

|

| 419 |

+

|

| 420 |

+

# Decode without feature normalization

|

| 421 |

+

# This case assumes that the input mel-spectrogram is normalized with the same statistics of the pretrained model.

|

| 422 |

+

$ parallel-wavegan-decode \

|

| 423 |

+

--checkpoint /path/to/checkpoint-400000steps.pkl \

|

| 424 |

+

--feats-scp ./feats.scp \

|

| 425 |

+

--outdir wav

|

| 426 |

+

2021-08-10 09:13:07,624 (decode:140) INFO: The number of features to be decoded = 2.

|

| 427 |

+

[decode]: 100%|████████████████████████████████████████| 2/2 [00:00<00:00, 13.84it/s, RTF=0.00264]

|

| 428 |

+

2021-08-10 09:13:29,660 (decode:174) INFO: Finished generation of 2 utterances (RTF = 0.005).

|

| 429 |

+

|

| 430 |

+

# Decode with feature normalization

|

| 431 |

+

# This case assumes that the input mel-spectrogram is not normalized.

|

| 432 |

+

$ parallel-wavegan-decode \

|

| 433 |

+

--checkpoint /path/to/checkpoint-400000steps.pkl \

|

| 434 |

+

--feats-scp ./feats.scp \

|

| 435 |

+

--normalize-before \

|

| 436 |

+

--outdir wav

|

| 437 |

+

2021-08-10 09:13:07,624 (decode:140) INFO: The number of features to be decoded = 2.

|

| 438 |

+

[decode]: 100%|████████████████████████████████████████| 2/2 [00:00<00:00, 13.84it/s, RTF=0.00264]

|

| 439 |

+

2021-08-10 09:13:29,660 (decode:174) INFO: Finished generation of 2 utterances (RTF = 0.005).

|

| 440 |

+

```

|

| 441 |

+

|

| 442 |

+

## References

|

| 443 |

+

|

| 444 |

+

- [Parallel WaveGAN](https://arxiv.org/abs/1910.11480)

|

| 445 |

+

- [r9y9/wavenet_vocoder](https://github.com/r9y9/wavenet_vocoder)

|

| 446 |

+

- [LiyuanLucasLiu/RAdam](https://github.com/LiyuanLucasLiu/RAdam)

|

| 447 |

+

- [MelGAN](https://arxiv.org/abs/1910.06711)

|

| 448 |

+

- [descriptinc/melgan-neurips](https://github.com/descriptinc/melgan-neurips)

|

| 449 |

+

- [Multi-band MelGAN](https://arxiv.org/abs/2005.05106)

|

| 450 |

+

- [HiFi-GAN](https://arxiv.org/abs/2010.05646)

|

| 451 |

+

- [jik876/hifi-gan](https://github.com/jik876/hifi-gan)

|

| 452 |

+

- [StyleMelGAN](https://arxiv.org/abs/2011.01557)

|

| 453 |

+

|

| 454 |

+

## Acknowledgement

|

| 455 |

+

|

| 456 |

+

The author would like to thank Ryuichi Yamamoto ([@r9y9](https://github.com/r9y9)) for his great repository, paper, and valuable discussions.

|

| 457 |

+

|

| 458 |

+

## Author

|

| 459 |

+

|

| 460 |

+

Tomoki Hayashi ([@kan-bayashi](https://github.com/kan-bayashi))

|

| 461 |

+

E-mail: `hayashi.tomoki<at>g.sp.m.is.nagoya-u.ac.jp`

|