first draft

- .gitattributes +19 -0

- added_tokens.json +1 -0

- app.py +58 -0

- config.json +13 -0

- donut/__init__.py +16 -0

- donut/__pycache__/__init__.cpython-311.pyc +0 -0

- donut/__pycache__/model.cpython-311.pyc +0 -0

- donut/__pycache__/util.cpython-311.pyc +0 -0

- donut/_version.py +6 -0

- donut/model.py +609 -0

- donut/util.py +344 -0

- images/belgium_2.PNG +3 -0

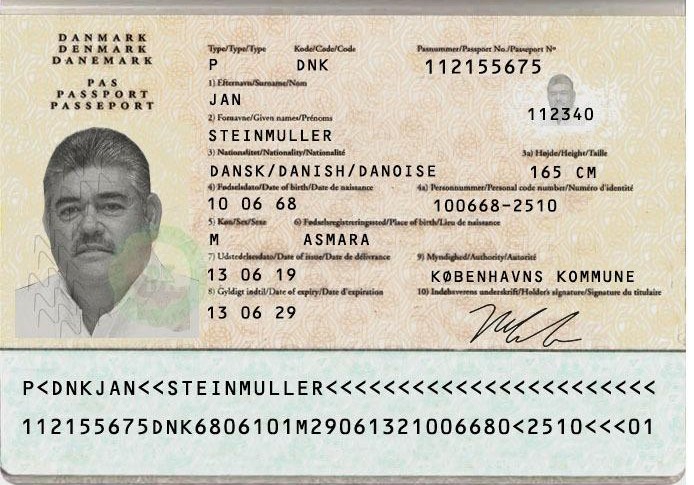

- images/denmark_2.jpeg +0 -0

- images/estonia.PNG +3 -0

- images/guiana.PNG +3 -0

- images/iraq.PNG +3 -0

- images/ireland.PNG +3 -0

- images/mali_2.PNG +3 -0

- images/newzealand_4.PNG +3 -0

- images/poland_3.PNG +3 -0

- images/portugal_3.PNG +3 -0

- images/singapore_3.PNG +3 -0

- images/spain.PNG +3 -0

- images/spain_3.PNG +3 -0

- images/suriname.PNG +3 -0

- images/switzerland_2.PNG +3 -0

- images/switzerland_4.PNG +3 -0

- images/thailand_5.PNG +3 -0

- images/togo_2.PNG +3 -0

- images/uk.PNG +3 -0

- images/uk_3.PNG +3 -0

- pytorch_model.bin +3 -0

- requirements.txt +6 -0

- sentencepiece.bpe.model +3 -0

- special_tokens_map.json +1 -0

- tokenizer_config.json +1 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,22 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+images/belgium_2.PNG filter=lfs diff=lfs merge=lfs -text
+images/estonia.PNG filter=lfs diff=lfs merge=lfs -text
+images/guiana.PNG filter=lfs diff=lfs merge=lfs -text
+images/iraq.PNG filter=lfs diff=lfs merge=lfs -text
+images/ireland.PNG filter=lfs diff=lfs merge=lfs -text
+images/mali_2.PNG filter=lfs diff=lfs merge=lfs -text
+images/newzealand_4.PNG filter=lfs diff=lfs merge=lfs -text
+images/poland_3.PNG filter=lfs diff=lfs merge=lfs -text
+images/portugal_3.PNG filter=lfs diff=lfs merge=lfs -text
+images/singapore_3.PNG filter=lfs diff=lfs merge=lfs -text
+images/spain_3.PNG filter=lfs diff=lfs merge=lfs -text
+images/spain.PNG filter=lfs diff=lfs merge=lfs -text
+images/suriname.PNG filter=lfs diff=lfs merge=lfs -text
+images/switzerland_2.PNG filter=lfs diff=lfs merge=lfs -text
+images/switzerland_4.PNG filter=lfs diff=lfs merge=lfs -text
+images/thailand_5.PNG filter=lfs diff=lfs merge=lfs -text
+images/togo_2.PNG filter=lfs diff=lfs merge=lfs -text
+images/uk_3.PNG filter=lfs diff=lfs merge=lfs -text
+images/uk.PNG filter=lfs diff=lfs merge=lfs -text
```

added_tokens.json

ADDED

@@ -0,0 +1 @@

```json
{"<sep/>": 57522, "<s_iitcdip>": 57523, "<s_synthdog>": 57524, "<-1/>": 57525, "</s_MachineReadableZone>": 57526, "<s_MachineReadableZone>": 57527, "<s_INPUT_data>": 57528}
```
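The added token ids start at 57522. Added tokens are normally appended after the base vocabulary, so this suggests the base tokenizer has 57522 entries, although that is an inference and not something the commit states. The sketch below works only from the JSON above. It checks that the ids form one contiguous block and pairs each `<s_name>` opening tag with its `</s_name>` closing tag. `summarize_added_tokens` is a hypothetical helper name.

```python
import json

# Contents of added_tokens.json from this commit, copied verbatim.
ADDED_TOKENS_JSON = (
    '{"<sep/>": 57522, "<s_iitcdip>": 57523, "<s_synthdog>": 57524, "<-1/>": 57525, '
    '"</s_MachineReadableZone>": 57526, "<s_MachineReadableZone>": 57527, "<s_INPUT_data>": 57528}'
)


def summarize_added_tokens(raw: str) -> dict:
    """Hypothetical helper: check id contiguity and find open/close tag pairs."""
    tokens = json.loads(raw)
    ids = sorted(tokens.values())
    # Added tokens are expected to occupy one contiguous id block.
    contiguous = ids == list(range(ids[0], ids[0] + len(ids)))
    # "<s_name>" -> "name" and "</s_name>" -> "name".
    opened = {t[3:-1] for t in tokens if t.startswith("<s_")}
    closed = {t[4:-1] for t in tokens if t.startswith("</s_")}
    return {
        "first_id": ids[0],
        "contiguous": contiguous,
        "paired_fields": sorted(opened & closed),
        "unpaired_open": sorted(opened - closed),
    }


print(summarize_added_tokens(ADDED_TOKENS_JSON))
```

`MachineReadableZone` turns out to be the only field with both an opening and a closing tag. That closing tag is what `app.py` splits on when it post-processes predictions.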

app.py

ADDED

@@ -0,0 +1,58 @@

```python
import argparse
import gradio as gr
import os
import torch

from donut import DonutModel
from PIL import Image


def demo_process_vqa(input_img, question):
    global pretrained_model, task_prompt, task_name
    input_img = Image.fromarray(input_img)
    user_prompt = task_prompt.replace("{user_input}", question)
    return pretrained_model.inference(input_img, prompt=user_prompt)["predictions"][0]


def demo_process(input_img):
    global pretrained_model, task_prompt, task_name
    input_img = Image.fromarray(input_img)
    best_output = pretrained_model.inference(image=input_img, prompt=task_prompt)["predictions"][0]
    return best_output["text_sequence"].split(" </s_MachineReadableZone>")[0]


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--task", type=str, default="s_passport")
    parser.add_argument("--pretrained_path", type=str, default=os.getcwd())
    parser.add_argument("--port", type=int, default=12345)
    parser.add_argument("--url", type=str, default="0.0.0.0")
    parser.add_argument("--sample_img_path", type=str)
    args, left_argv = parser.parse_known_args()

    task_name = args.task
    if "docvqa" == task_name:
        task_prompt = "<s_docvqa><s_question>{user_input}</s_question><s_answer>"
    else:  # rvlcdip, cord, ...
        task_prompt = f"<s_{task_name}>"

    example_sample = [os.path.join("images", image) for image in os.listdir("images")]
    if args.sample_img_path:
        example_sample.append(args.sample_img_path)

    pretrained_model = DonutModel.from_pretrained(args.pretrained_path)

    if torch.cuda.is_available():
        pretrained_model.half()
        device = torch.device("cuda")
        pretrained_model.to(device)

    pretrained_model.eval()

    gr.Interface(
        fn=demo_process_vqa if task_name == "docvqa" else demo_process,
        inputs=["image", "text"] if task_name == "docvqa" else "image",
        outputs="text",
        title="Demo of MRZ Extraction model based on 🍩 architecture",
        examples=example_sample if example_sample else None
    ).launch()
```
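You can exercise the prompt handling in `app.py` without loading the model. The sketch below restates it as three pure functions: `build_task_prompt`, `fill_question` and `trim_mrz`. These names are hypothetical, and their behaviour is copied from the script above.

```python
def build_task_prompt(task_name: str) -> str:
    """Hypothetical standalone copy of the prompt selection in app.py."""
    if task_name == "docvqa":
        # DocVQA keeps a {user_input} placeholder that is filled per question.
        return "<s_docvqa><s_question>{user_input}</s_question><s_answer>"
    # Every other task (rvlcdip, cord, etc.) is started by a single task token.
    return f"<s_{task_name}>"


def fill_question(task_prompt: str, question: str) -> str:
    # Same substitution as demo_process_vqa.
    return task_prompt.replace("{user_input}", question)


def trim_mrz(text_sequence: str) -> str:
    # Same post-processing as demo_process: keep the text before the first
    # " </s_MachineReadableZone>" (note the leading space); if that exact
    # substring is absent, the whole string is returned unchanged.
    return text_sequence.split(" </s_MachineReadableZone>")[0]


print(build_task_prompt("s_passport"))
print(fill_question(build_task_prompt("docvqa"), "What is the name?"))
print(trim_mrz("ABC<<DEF </s_MachineReadableZone> extra"))
```

With the default `--task s_passport`, the prompt comes out as `<s_s_passport>`, because the f-string adds its own `s_` prefix. The split also matches only when a space comes before the closing tag.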

config.json

ADDED

@@ -0,0 +1,13 @@

```json
{
  "_name_or_path": ".",
  "align_long_axis": false,
  "architectures": [
    "DonutModel"
  ],
  "input_size": [1280,960],
  "max_length": 768,
  "model_type": "donut",
  "torch_dtype": "float32",
  "transformers_version": "4.11.3",
  "window_size": 10
}
```
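The `input_size` and `window_size` values above have to agree with the Swin encoder built in `donut/model.py`. That encoder uses `patch_size=4` and `embed_dim=128`, with four stages and 2x patch merging between stages. The arithmetic below assumes those settings, reads `input_size` as `[height, width]` (which is how `SwinEncoder.prepare_input` indexes it), and assumes the blocks do no internal padding. It computes the token grid at each stage and the length of the sequence the decoder cross-attends to. It also gives the sizes of the relative-position-bias tables that get interpolated when weights are initialised from `swin_base_patch4_window12_384`.

```python
# Assumed encoder settings, taken from SwinEncoder in donut/model.py.
input_size = [1280, 960]  # [height, width]
window_size = 10
patch_size = 4
embed_dim = 128
num_stages = 4

h, w = input_size[0] // patch_size, input_size[1] // patch_size
stages = []
for i in range(num_stages):
    # Each stage's token grid must tile exactly into window_size x window_size windows.
    if h % window_size or w % window_size:
        raise ValueError(f"stage {i}: {h}x{w} grid is not divisible by window {window_size}")
    stages.append((h, w, embed_dim * 2 ** i))
    if i < num_stages - 1:  # patch merging halves the grid and doubles the channels
        h, w = h // 2, w // 2

final_h, final_w, final_dim = stages[-1]
encoder_seq_len = final_h * final_w  # tokens the decoder cross-attends to

# Relative position bias tables have (2 * window - 1) ** 2 rows (one column per head),
# so the pretrained window-12 tables are resized to the window-10 size.
pretrained_table = (2 * 12 - 1) ** 2
new_table = (2 * window_size - 1) ** 2

print(stages)
print(encoder_seq_len, final_dim, pretrained_table, new_table)
```

Under these assumptions the grids are 320x240, 160x120, 80x60 and 40x30, and every one of them divides evenly by a window of 10. The encoder therefore hands 1200 tokens of width 1024 to the decoder. The bias-table interpolation runs only when `name_or_path` is empty.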

donut/__init__.py

ADDED

@@ -0,0 +1,16 @@

```python
"""
Donut
Copyright (c) 2022-present NAVER Corp.
MIT License
"""
from .model import DonutConfig, DonutModel
from .util import DonutDataset, JSONParseEvaluator, load_json, save_json

__all__ = [
    "DonutConfig",
    "DonutModel",
    "DonutDataset",
    "JSONParseEvaluator",
    "load_json",
    "save_json",
]
```

donut/__pycache__/__init__.cpython-311.pyc

ADDED

Binary file (565 Bytes).

donut/__pycache__/model.cpython-311.pyc

ADDED

Binary file (31.3 kB).

donut/__pycache__/util.cpython-311.pyc

ADDED

Binary file (18.1 kB).

donut/_version.py

ADDED

@@ -0,0 +1,6 @@

```python
"""
Donut
Copyright (c) 2022-present NAVER Corp.
MIT License
"""
__version__ = "1.0.9"
```

donut/model.py

ADDED

@@ -0,0 +1,609 @@

```python
"""
Donut
Copyright (c) 2022-present NAVER Corp.
MIT License
"""
import math
import os
import re
from typing import Any, List, Optional, Union

import numpy as np
import PIL
import timm
import torch
import torch.nn as nn
import torch.nn.functional as F
from PIL import ImageOps
from timm.data.constants import IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD
from timm.models.swin_transformer import SwinTransformer
from torchvision import transforms
from torchvision.transforms.functional import resize, rotate
from transformers import MBartConfig, MBartForCausalLM, XLMRobertaTokenizer
from transformers.file_utils import ModelOutput
from transformers.modeling_utils import PretrainedConfig, PreTrainedModel


class SwinEncoder(nn.Module):
    r"""
    Donut encoder based on SwinTransformer
    Set the initial weights and configuration with a pretrained SwinTransformer and then
    modify the detailed configurations as a Donut Encoder

    Args:
        input_size: Input image size (height, width)
        align_long_axis: Whether to rotate the image so that its long axis matches the long axis of the canvas
        window_size: Window size (in tokens) of the SwinTransformer local attention
        encoder_layer: Number of blocks in each SwinTransformer stage
        name_or_path: Name of a pretrained model either registered on huggingface.co or saved locally;
            otherwise, `swin_base_patch4_window12_384` will be set (using `timm`).
    """

    def __init__(
        self,
        input_size: List[int],
        align_long_axis: bool,
        window_size: int,
        encoder_layer: List[int],
        name_or_path: Union[str, bytes, os.PathLike] = None,
    ):
        super().__init__()
        self.input_size = input_size
        self.align_long_axis = align_long_axis
        self.window_size = window_size
        self.encoder_layer = encoder_layer

        self.to_tensor = transforms.Compose(
            [
                transforms.ToTensor(),
                transforms.Normalize(IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD),
            ]
        )

        self.model = SwinTransformer(
            img_size=self.input_size,
            depths=self.encoder_layer,
            window_size=self.window_size,
            patch_size=4,
            embed_dim=128,
            num_heads=[4, 8, 16, 32],
            num_classes=0,
        )

        # weight init with swin
        if not name_or_path:
            swin_state_dict = timm.create_model("swin_base_patch4_window12_384", pretrained=True).state_dict()
            new_swin_state_dict = self.model.state_dict()
            for x in new_swin_state_dict:
                if x.endswith("relative_position_index") or x.endswith("attn_mask"):
                    pass
                elif (
                    x.endswith("relative_position_bias_table")
                    and self.model.layers[0].blocks[0].attn.window_size[0] != 12
                ):
                    pos_bias = swin_state_dict[x].unsqueeze(0)[0]
                    old_len = int(math.sqrt(len(pos_bias)))
                    new_len = int(2 * window_size - 1)
                    pos_bias = pos_bias.reshape(1, old_len, old_len, -1).permute(0, 3, 1, 2)
                    pos_bias = F.interpolate(pos_bias, size=(new_len, new_len), mode="bicubic", align_corners=False)
                    new_swin_state_dict[x] = pos_bias.permute(0, 2, 3, 1).reshape(1, new_len ** 2, -1).squeeze(0)
                else:
                    new_swin_state_dict[x] = swin_state_dict[x]
            self.model.load_state_dict(new_swin_state_dict)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        """
        Args:
            x: (batch_size, num_channels, height, width)
        """
        x = self.model.patch_embed(x)
        x = self.model.pos_drop(x)
        x = self.model.layers(x)
        return x

    def prepare_input(self, img: PIL.Image.Image, random_padding: bool = False) -> torch.Tensor:
        """
        Convert a PIL image to a tensor of the specified input_size using the following steps:
            - rotate (if align_long_axis is True and the image's long axis is not aligned with the canvas's)
            - resize
            - pad
        """
        img = img.convert("RGB")
        if self.align_long_axis and (
            (self.input_size[0] > self.input_size[1] and img.width > img.height)
            or (self.input_size[0] < self.input_size[1] and img.width < img.height)
        ):
            img = rotate(img, angle=-90, expand=True)
        img = resize(img, min(self.input_size))
        img.thumbnail((self.input_size[1], self.input_size[0]))
        delta_width = self.input_size[1] - img.width
        delta_height = self.input_size[0] - img.height
        if random_padding:
            pad_width = np.random.randint(low=0, high=delta_width + 1)
            pad_height = np.random.randint(low=0, high=delta_height + 1)
        else:
            pad_width = delta_width // 2
            pad_height = delta_height // 2
        padding = (
            pad_width,
            pad_height,
            delta_width - pad_width,
            delta_height - pad_height,
        )
        return self.to_tensor(ImageOps.expand(img, padding))
```
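The geometry of `SwinEncoder.prepare_input` can be traced without PIL or torchvision. The sketch below, `fit_and_pad`, is a hypothetical pure-Python approximation of it. It applies the rotation rule, then matches the shorter edge the way `torchvision` `resize` does with an int size, then shrinks to fit the canvas like `thumbnail`, and finally returns the `(left, top, right, bottom)` padding that `ImageOps.expand` receives. PIL's own `thumbnail` rounding is simplified here and may differ by a pixel.

```python
import random
from typing import Optional, Tuple


def fit_and_pad(
    img_w: int,
    img_h: int,
    input_size: Tuple[int, int],
    align_long_axis: bool = False,
    random_padding: bool = False,
    rng: Optional[random.Random] = None,
) -> Tuple[Tuple[int, int], Tuple[int, int, int, int]]:
    """Hypothetical approximation of SwinEncoder.prepare_input geometry.

    input_size is (height, width), matching how prepare_input indexes it.
    Returns the resized (width, height) and the (left, top, right, bottom) padding.
    """
    canvas_h, canvas_w = input_size
    # rotate(angle=-90, expand=True) swaps width and height when the long axes disagree.
    if align_long_axis and (
        (canvas_h > canvas_w and img_w > img_h) or (canvas_h < canvas_w and img_w < img_h)
    ):
        img_w, img_h = img_h, img_w
    # resize(img, int): match the shorter edge, truncate the scaled longer edge.
    short = min(input_size)
    if img_w <= img_h:
        img_w, img_h = short, int(short * img_h / img_w)
    else:
        img_w, img_h = int(short * img_w / img_h), short
    # thumbnail: shrink (never enlarge) to fit the canvas, keeping the aspect ratio.
    scale = min(canvas_w / img_w, canvas_h / img_h, 1.0)
    img_w, img_h = round(img_w * scale), round(img_h * scale)

    delta_w, delta_h = canvas_w - img_w, canvas_h - img_h
    if random_padding:
        rng = rng or random.Random()
        pad_w = rng.randint(0, delta_w)  # same range as np.random.randint(0, delta_w + 1)
        pad_h = rng.randint(0, delta_h)
    else:
        pad_w, pad_h = delta_w // 2, delta_h // 2
    return (img_w, img_h), (pad_w, pad_h, delta_w - pad_w, delta_h - pad_h)


print(fit_and_pad(800, 600, (1280, 960)))
print(fit_and_pad(800, 600, (1280, 960), align_long_axis=True))
```

Take an 800x600 landscape scan on the 1280x960 (height x width) canvas from `config.json`. It is resized to 960x720 and receives 280 pixels of padding above and below. With `align_long_axis=True` the image is rotated first and fills the canvas exactly.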

```python
class BARTDecoder(nn.Module):
    """
    Donut Decoder based on Multilingual BART
    Set the initial weights and configuration with a pretrained multilingual BART model,
    and modify the detailed configurations as a Donut decoder

    Args:
        decoder_layer:
            Number of layers of BARTDecoder
        max_position_embeddings:
            The maximum sequence length to be trained
        name_or_path:
            Name of a pretrained model either registered on huggingface.co or saved locally;
            otherwise, `hyunwoongko/asian-bart-ecjk` will be set (using `transformers`)
    """

    def __init__(
        self, decoder_layer: int, max_position_embeddings: int, name_or_path: Union[str, bytes, os.PathLike] = None
    ):
        super().__init__()
        self.decoder_layer = decoder_layer
        self.max_position_embeddings = max_position_embeddings

        self.tokenizer = XLMRobertaTokenizer.from_pretrained(
            "hyunwoongko/asian-bart-ecjk" if not name_or_path else name_or_path
        )

        self.model = MBartForCausalLM(
            config=MBartConfig(
                is_decoder=True,
                is_encoder_decoder=False,
                add_cross_attention=True,
                decoder_layers=self.decoder_layer,
                max_position_embeddings=self.max_position_embeddings,
                vocab_size=len(self.tokenizer),
                scale_embedding=True,
                add_final_layer_norm=True,
            )
        )
        self.model.forward = self.forward  # to get cross attentions and utilize `generate` function

        self.model.config.is_encoder_decoder = True  # to get cross-attention
        self.add_special_tokens(["<sep/>"])  # <sep/> is used for representing a list in a JSON
        self.model.model.decoder.embed_tokens.padding_idx = self.tokenizer.pad_token_id
        self.model.prepare_inputs_for_generation = self.prepare_inputs_for_inference

        # weight init with asian-bart
        if not name_or_path:
            bart_state_dict = MBartForCausalLM.from_pretrained("hyunwoongko/asian-bart-ecjk").state_dict()
            new_bart_state_dict = self.model.state_dict()
            for x in new_bart_state_dict:
                if x.endswith("embed_positions.weight") and self.max_position_embeddings != 1024:
                    new_bart_state_dict[x] = torch.nn.Parameter(
                        self.resize_bart_abs_pos_emb(
                            bart_state_dict[x],
                            self.max_position_embeddings
                            + 2,  # https://github.com/huggingface/transformers/blob/v4.11.3/src/transformers/models/mbart/modeling_mbart.py#L118-L119
                        )
                    )
                elif x.endswith("embed_tokens.weight") or x.endswith("lm_head.weight"):
                    new_bart_state_dict[x] = bart_state_dict[x][: len(self.tokenizer), :]
                else:
                    new_bart_state_dict[x] = bart_state_dict[x]
            self.model.load_state_dict(new_bart_state_dict)

    def add_special_tokens(self, list_of_tokens: List[str]):
        """
        Add special tokens to tokenizer and resize the token embeddings
        """
        newly_added_num = self.tokenizer.add_special_tokens({"additional_special_tokens": sorted(set(list_of_tokens))})
        if newly_added_num > 0:
            self.model.resize_token_embeddings(len(self.tokenizer))

    def prepare_inputs_for_inference(self, input_ids: torch.Tensor, encoder_outputs: torch.Tensor, past=None, use_cache: bool = None, attention_mask: torch.Tensor = None):
        """
        Args:
            input_ids: (batch_size, sequence_length)
        Returns:
            input_ids: (batch_size, sequence_length)
            attention_mask: (batch_size, sequence_length)
            encoder_hidden_states: (batch_size, sequence_length, embedding_dim)
        """
        attention_mask = input_ids.ne(self.tokenizer.pad_token_id).long()
        if past is not None:
            input_ids = input_ids[:, -1:]
        output = {
            "input_ids": input_ids,
            "attention_mask": attention_mask,
            "past_key_values": past,
            "use_cache": use_cache,
            "encoder_hidden_states": encoder_outputs.last_hidden_state,
        }
        return output
```
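The constructor redirects `prepare_inputs_for_generation` to `prepare_inputs_for_inference`, so `generate` calls it before each decoding step. The sketch below is a hypothetical list-based restatement of that method, `prepare_step_inputs`, with no torch required. The pad id of 1 is an assumption for illustration; the real method reads `tokenizer.pad_token_id`.

```python
from typing import Any, Dict, List, Optional

PAD_ID = 1  # assumption for illustration; the real code reads tokenizer.pad_token_id


def prepare_step_inputs(
    input_ids: List[List[int]], past: Optional[Any] = None, pad_id: int = PAD_ID
) -> Dict[str, Any]:
    """Hypothetical list-based restatement of BARTDecoder.prepare_inputs_for_inference."""
    # The mask is built from the full sequence: 1 for real tokens, 0 for padding.
    attention_mask = [[int(tok != pad_id) for tok in row] for row in input_ids]
    if past is not None:
        # With cached keys/values, only the newest token is fed to the decoder.
        input_ids = [row[-1:] for row in input_ids]
    return {"input_ids": input_ids, "attention_mask": attention_mask, "past_key_values": past}


print(prepare_step_inputs([[0, 5, 7], [0, 9, 1]], past="cache"))
```

After the first step, `input_ids` shrinks to one column but the mask keeps the full length. That is deliberate: the cached keys and values cover the earlier positions, so the decoder's self-attention still needs a mask spanning the past positions plus the current one.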

```python
    def forward(
        self,
        input_ids,
        attention_mask: Optional[torch.Tensor] = None,
        encoder_hidden_states: Optional[torch.Tensor] = None,
        past_key_values: Optional[torch.Tensor] = None,
        labels: Optional[torch.Tensor] = None,
        use_cache: bool = None,
        output_attentions: Optional[torch.Tensor] = None,
        output_hidden_states: Optional[torch.Tensor] = None,
        return_dict: bool = None,
    ):
        """
        A forward function to get cross attentions and utilize `generate` function

        Source:
        https://github.com/huggingface/transformers/blob/v4.11.3/src/transformers/models/mbart/modeling_mbart.py#L1669-L1810

        Args:
            input_ids: (batch_size, sequence_length)
            attention_mask: (batch_size, sequence_length)
            encoder_hidden_states: (batch_size, sequence_length, hidden_size)

        Returns:
            loss: (1, )
            logits: (batch_size, sequence_length, vocab_size)
            hidden_states: (batch_size, sequence_length, hidden_size)
            decoder_attentions: (batch_size, num_heads, sequence_length, sequence_length)
            cross_attentions: (batch_size, num_heads, sequence_length, encoder_sequence_length)
        """
        output_attentions = output_attentions if output_attentions is not None else self.model.config.output_attentions
        output_hidden_states = (
            output_hidden_states if output_hidden_states is not None else self.model.config.output_hidden_states
        )
        return_dict = return_dict if return_dict is not None else self.model.config.use_return_dict
        outputs = self.model.model.decoder(
            input_ids=input_ids,
            attention_mask=attention_mask,
            encoder_hidden_states=encoder_hidden_states,
            past_key_values=past_key_values,
            use_cache=use_cache,
            output_attentions=output_attentions,
            output_hidden_states=output_hidden_states,
            return_dict=return_dict,
        )

        logits = self.model.lm_head(outputs[0])

        loss = None
        if labels is not None:
            loss_fct = nn.CrossEntropyLoss(ignore_index=-100)
            loss = loss_fct(logits.view(-1, self.model.config.vocab_size), labels.view(-1))

        if not return_dict:
            output = (logits,) + outputs[1:]
            return (loss,) + output if loss is not None else output

        return ModelOutput(
            loss=loss,
            logits=logits,
            past_key_values=outputs.past_key_values,
            hidden_states=outputs.hidden_states,
            decoder_attentions=outputs.attentions,
            cross_attentions=outputs.cross_attentions,
        )

    @staticmethod
    def resize_bart_abs_pos_emb(weight: torch.Tensor, max_length: int) -> torch.Tensor:
        """
        Resize position embeddings
        Truncate if sequence length of Bart backbone is greater than given max_length,
        else interpolate to max_length
        """
        if weight.shape[0] > max_length:
            weight = weight[:max_length, ...]
        else:
            weight = (
                F.interpolate(
                    weight.permute(1, 0).unsqueeze(0),
                    size=max_length,
                    mode="linear",
                    align_corners=False,
                )
                .squeeze(0)
                .permute(1, 0)
            )
        return weight
```
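`resize_bart_abs_pos_emb` truncates or linearly interpolates the position table along its first axis. The sketch below, `resize_abs_pos_emb`, is a hypothetical pure-Python version of it. The interpolation branch uses the half-pixel source-index rule that `F.interpolate(mode="linear", align_corners=False)` applies: `src = max((dst + 0.5) * in / out - 0.5, 0)`.

```python
import math
from typing import List


def resize_abs_pos_emb(weight: List[List[float]], max_length: int) -> List[List[float]]:
    """Hypothetical pure-Python sketch of BARTDecoder.resize_bart_abs_pos_emb.

    weight has shape (num_positions, dim). Longer tables are truncated; otherwise
    rows are linearly interpolated along the position axis.
    """
    n = len(weight)
    if n > max_length:
        return [row[:] for row in weight[:max_length]]
    scale = n / max_length
    out = []
    for dst in range(max_length):
        src = max((dst + 0.5) * scale - 0.5, 0.0)  # half-pixel centres, clamped at 0
        i0 = min(int(math.floor(src)), n - 1)
        i1 = min(i0 + 1, n - 1)
        frac = src - i0
        out.append([a * (1.0 - frac) + b * frac for a, b in zip(weight[i0], weight[i1])])
    return out


print(resize_abs_pos_emb([[0.0], [1.0]], 4))
```

With the `config.json` in this commit (`max_length` 768), the decoder requests 768 + 2 = 770 rows, since MBart's learned position embeddings carry an offset of 2 (see the linked transformers lines). The `!= 1024` check suggests the asian-bart checkpoint stores 1024 + 2 rows. If so, the truncation branch is the one taken, and only when the decoder is initialised without `name_or_path`.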

```python
class DonutConfig(PretrainedConfig):
    r"""
    This is the configuration class to store the configuration of a [`DonutModel`]. It is used to
    instantiate a Donut model according to the specified arguments, defining the model architecture

    Args:
        input_size:
            Input image size (canvas size, as [height, width]) of Donut.encoder, SwinTransformer in this codebase
        align_long_axis:
            Whether to rotate the image so that its long axis matches the long axis of the canvas
        window_size:
            Window size of Donut.encoder, SwinTransformer in this codebase
        encoder_layer:
            Depth of each Donut.encoder Encoder layer, SwinTransformer in this codebase
        decoder_layer:
            Number of hidden layers in the Donut.decoder, such as BART
        max_position_embeddings:
            Trained max position embeddings in the Donut decoder;
            if not specified, it will have the same value as max_length
        max_length:
            Max position embeddings (=maximum sequence length) you want to train
        name_or_path:
            Name of a pretrained model either registered on huggingface.co or saved locally
    """

    model_type = "donut"

    def __init__(
        self,
        input_size: List[int] = [2560, 1920],
        align_long_axis: bool = False,
        window_size: int = 10,
        encoder_layer: List[int] = [2, 2, 14, 2],
        decoder_layer: int = 4,
        max_position_embeddings: int = None,
        max_length: int = 1536,
        name_or_path: Union[str, bytes, os.PathLike] = "",
        **kwargs,
    ):
        super().__init__()
        self.input_size = input_size
        self.align_long_axis = align_long_axis
        self.window_size = window_size
        self.encoder_layer = encoder_layer
        self.decoder_layer = decoder_layer
        self.max_position_embeddings = max_length if max_position_embeddings is None else max_position_embeddings
        self.max_length = max_length
        self.name_or_path = name_or_path
```
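The `config.json` in this commit sets only `input_size`, `align_long_axis`, `max_length` and `window_size` among these arguments. The remaining architecture fields appear to fall back to the signature defaults above. The sketch below is a hypothetical dataclass, `DonutConfigSketch`, that mirrors those defaults and the `max_position_embeddings` fallback without importing transformers. It uses `default_factory` for the list fields so that instances do not share one list object, which the plain list defaults in the original signature would do.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DonutConfigSketch:
    """Hypothetical stand-in for DonutConfig's defaults (no transformers import)."""
    input_size: List[int] = field(default_factory=lambda: [2560, 1920])
    align_long_axis: bool = False
    window_size: int = 10
    encoder_layer: List[int] = field(default_factory=lambda: [2, 2, 14, 2])
    decoder_layer: int = 4
    max_position_embeddings: Optional[int] = None
    max_length: int = 1536
    name_or_path: str = ""

    def __post_init__(self):
        # Same fallback as DonutConfig.__init__: reuse max_length when unset.
        if self.max_position_embeddings is None:
            self.max_position_embeddings = self.max_length


# Only the architecture keys present in this commit's config.json are overridden.
cfg = DonutConfigSketch(input_size=[1280, 960], align_long_axis=False, max_length=768, window_size=10)
print(cfg)
```

Under this reading, the checkpoint uses a 4-layer decoder and Swin depths of `[2, 2, 14, 2]`, and its decoder position table is sized for 768 tokens.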

class DonutModel(PreTrainedModel):

|

| 370 |

+

r"""

|

| 371 |

+

Donut: an E2E OCR-free Document Understanding Transformer.

|

| 372 |

+

The encoder maps an input document image into a set of embeddings,

|

| 373 |

+

the decoder predicts a desired token sequence, that can be converted to a structured format,

|

| 374 |

+

given a prompt and the encoder output embeddings

|

| 375 |

+

"""

|

| 376 |

+

config_class = DonutConfig

|

| 377 |

+

base_model_prefix = "donut"

|

| 378 |

+

|

| 379 |

+

def __init__(self, config: DonutConfig):

|

| 380 |

+

super().__init__(config)

|

| 381 |

+

self.config = config

|

| 382 |

+

self.encoder = SwinEncoder(

|

| 383 |

+

input_size=self.config.input_size,

|

| 384 |

+

align_long_axis=self.config.align_long_axis,

|

| 385 |

+

window_size=self.config.window_size,

|

| 386 |

+

encoder_layer=self.config.encoder_layer,

|

| 387 |

+

name_or_path=self.config.name_or_path,

|

| 388 |

+

)

|

| 389 |

+

self.decoder = BARTDecoder(

|

| 390 |

+

max_position_embeddings=self.config.max_position_embeddings,

|

| 391 |

+

decoder_layer=self.config.decoder_layer,

|

| 392 |

+

name_or_path=self.config.name_or_path,

|

| 393 |

+

)

|

| 394 |

+

|

| 395 |

+

```python
    def forward(self, image_tensors: torch.Tensor, decoder_input_ids: torch.Tensor, decoder_labels: torch.Tensor):
        """
        Calculate a loss given an input image and a desired token sequence;
        the model is trained in a teacher-forcing manner.

        Args:
            image_tensors: (batch_size, num_channels, height, width)
            decoder_input_ids: (batch_size, sequence_length)
            decoder_labels: (batch_size, sequence_length)
        """
        encoder_outputs = self.encoder(image_tensors)
        decoder_outputs = self.decoder(
            input_ids=decoder_input_ids,
            encoder_hidden_states=encoder_outputs,
            labels=decoder_labels,
        )
        return decoder_outputs
```
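`forward` receives `decoder_input_ids` and `decoder_labels` ready-made; this excerpt does not show how the training pipeline builds them. A minimal pure-Python sketch of one common teacher-forcing construction follows: the inputs drop the last token, the labels drop the first, and positions that should not contribute to the loss (padding and the remainder of the prompt) are set to `-100`, the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The helper `make_teacher_forcing_pair` and its `prompt_len` argument are hypothetical, and masking the prompt is an assumption rather than something this file confirms.

```python
from typing import List, Tuple

IGNORE_ID = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def make_teacher_forcing_pair(
    token_ids: List[int], prompt_len: int, pad_id: int
) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: shift one target sequence into (decoder_input_ids, decoder_labels)."""
    # At step i the decoder sees token i and must predict token i + 1.
    inputs = token_ids[:-1]
    labels = token_ids[1:]
    # No loss on padding positions.
    labels = [IGNORE_ID if t == pad_id else t for t in labels]
    # Label i predicts token i + 1, so the first (prompt_len - 1) labels are still
    # prompt tokens; mask them so the model is not trained to predict its own prompt.
    for i in range(min(prompt_len - 1, len(labels))):
        labels[i] = IGNORE_ID
    return inputs, labels


inputs, labels = make_teacher_forcing_pair([0, 5, 7, 8, 2, 1, 1], prompt_len=2, pad_id=1)
print(inputs)  # [0, 5, 7, 8, 2, 1]
print(labels)  # [-100, 7, 8, 2, -100, -100]
```

In a real batch the same shift would be applied row-wise to tensors of shape `(batch_size, sequence_length)`.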

```python
    def inference(
        self,
        image: PIL.Image = None,
        prompt: str = None,
        image_tensors: Optional[torch.Tensor] = None,
        prompt_tensors: Optional[torch.Tensor] = None,
        return_json: bool = True,
        return_attentions: bool = False,
    ):
        """
        Generate a token sequence in an auto-regressive manner;
        the generated token sequence is converted into an ordered JSON format.

        Args:
            image: input document image (PIL.Image)
            prompt: task prompt (string) to guide Donut decoder generation
            image_tensors: (1, num_channels, height, width)
                converted from image if image_tensors is not fed
            prompt_tensors: (1, sequence_length)
                converted from prompt if prompt_tensors is not fed
        """
        # prepare backbone inputs (image and prompt)
        if image is None and image_tensors is None:
            raise ValueError("Expected either image or image_tensors")
        if prompt is None and prompt_tensors is None:
            raise ValueError("Expected either prompt or prompt_tensors")

        if image_tensors is None:
            image_tensors = self.encoder.prepare_input(image).unsqueeze(0)

        if self.device.type == "cuda":  # half precision is not supported by the CPU implementation
            image_tensors = image_tensors.half()
            image_tensors = image_tensors.to(self.device)

        if prompt_tensors is None:
            prompt_tensors = self.decoder.tokenizer(prompt, add_special_tokens=False, return_tensors="pt")["input_ids"]

        prompt_tensors = prompt_tensors.to(self.device)

        last_hidden_state = self.encoder(image_tensors)
        if self.device.type != "cuda":
            last_hidden_state = last_hidden_state.to(torch.float32)

        encoder_outputs = ModelOutput(last_hidden_state=last_hidden_state, attentions=None)

        if len(encoder_outputs.last_hidden_state.size()) == 1:
            encoder_outputs.last_hidden_state = encoder_outputs.last_hidden_state.unsqueeze(0)
        if len(prompt_tensors.size()) == 1:
            prompt_tensors = prompt_tensors.unsqueeze(0)

        # get decoder output
        decoder_output = self.decoder.model.generate(
            decoder_input_ids=prompt_tensors,
            encoder_outputs=encoder_outputs,
            max_length=self.config.max_length,
            early_stopping=True,
            pad_token_id=self.decoder.tokenizer.pad_token_id,
            eos_token_id=self.decoder.tokenizer.eos_token_id,
            use_cache=True,
            num_beams=1,
            bad_words_ids=[[self.decoder.tokenizer.unk_token_id]],
            return_dict_in_generate=True,
            output_attentions=return_attentions,
        )

        output = {"predictions": list()}
        for seq in self.decoder.tokenizer.batch_decode(decoder_output.sequences):
            seq = seq.replace(self.decoder.tokenizer.eos_token, "").replace(self.decoder.tokenizer.pad_token, "")
            seq = re.sub(r"<.*?>", "", seq, count=1).strip()  # remove first task start token
            if return_json:
                output["predictions"].append(self.token2json(seq))
            else:
                output["predictions"].append(seq)

        if return_attentions:
            output["attentions"] = {
                "self_attentions": decoder_output.decoder_attentions,
                "cross_attentions": decoder_output.cross_attentions,
            }

        return output
```
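The string post-processing at the end of `inference` can be tried on its own. The sketch below applies the same three steps to a single decoded string: strip end-of-sequence and padding markers, drop only the first `<...>` token (the task start token), and trim whitespace. The function name `clean_generated` and the default token strings `</s>` and `<pad>` are assumptions (typical of mBART-style tokenizers), not values read from this repository's tokenizer files.

```python
import re


def clean_generated(seq: str, eos_token: str = "</s>", pad_token: str = "<pad>") -> str:
    """Hypothetical stand-alone version of the per-sequence clean-up in inference()."""
    # Remove end-of-sequence and padding markers left by batch_decode.
    seq = seq.replace(eos_token, "").replace(pad_token, "")
    # Remove only the first <...> token (the task start token), then trim.
    return re.sub(r"<.*?>", "", seq, count=1).strip()


print(clean_generated("<s_cord-v2><s_total>1.00</s_total></s><pad><pad>"))
# <s_total>1.00</s_total>
```

Note that `</s>` does not match inside `</s_total>`, because the character after `</s` is `_`, not `>`.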

```python
    def json2token(self, obj: Any, update_special_tokens_for_json_key: bool = True, sort_json_key: bool = True):
        """
        Convert an ordered JSON object into a token sequence
        """
        if type(obj) == dict:
            if len(obj) == 1 and "text_sequence" in obj:
                return obj["text_sequence"]
            else:
                output = ""
                if sort_json_key:
                    keys = sorted(obj.keys(), reverse=True)
                else:
                    keys = obj.keys()
                for k in keys:
                    if update_special_tokens_for_json_key:
                        self.decoder.add_special_tokens([fr"<s_{k}>", fr"</s_{k}>"])
                    output += (
                        fr"<s_{k}>"
                        + self.json2token(obj[k], update_special_tokens_for_json_key, sort_json_key)
                        + fr"</s_{k}>"
                    )
                return output
        elif type(obj) == list:
            return r"<sep/>".join(
                [self.json2token(item, update_special_tokens_for_json_key, sort_json_key) for item in obj]
            )
        else:
            obj = str(obj)
            if f"<{obj}/>" in self.decoder.tokenizer.all_special_tokens:
                obj = f"<{obj}/>"  # for categorical special tokens
            return obj
```
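To see the token format that `json2token` produces without loading a tokenizer, here is a tokenizer-free sketch of the same recursion. It is an approximation, not the method itself: it uses `isinstance` instead of `type(...) ==`, does not register `<s_key>` special tokens, and skips the mapping of categorical values to `<value/>` tokens. As in the method, `sort_json_key=True` emits keys in reverse alphabetical order.

```python
from typing import Any


def json2token(obj: Any, sort_json_key: bool = True) -> str:
    """Tokenizer-free sketch of DonutModel.json2token (no special-token registration)."""
    if isinstance(obj, dict):
        # A lone {"text_sequence": ...} is emitted as plain text.
        if len(obj) == 1 and "text_sequence" in obj:
            return obj["text_sequence"]
        keys = sorted(obj.keys(), reverse=True) if sort_json_key else obj.keys()
        # Each key wraps its serialized value in a <s_key> ... </s_key> pair.
        return "".join(f"<s_{k}>" + json2token(obj[k], sort_json_key) + f"</s_{k}>" for k in keys)
    if isinstance(obj, list):
        # List items are separated by the <sep/> token.
        return "<sep/>".join(json2token(item, sort_json_key) for item in obj)
    # Leaves are stringified.
    return str(obj)


receipt = {"menu": [{"nm": "Latte", "price": "4.50"}, {"nm": "Bagel", "price": "2.00"}]}
print(json2token(receipt))
# <s_menu><s_price>4.50</s_price><s_nm>Latte</s_nm><sep/><s_price>2.00</s_price><s_nm>Bagel</s_nm></s_menu>
```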

```python
    def token2json(self, tokens, is_inner_value=False):
        """
        Convert a (generated) token sequence into an ordered JSON format
        """
        output = dict()

        while tokens:
            start_token = re.search(r"<s_(.*?)>", tokens, re.IGNORECASE)
            if start_token is None:
                break
            key = start_token.group(1)
            end_token = re.search(fr"</s_{key}>", tokens, re.IGNORECASE)
            start_token = start_token.group()
            if end_token is None:
                tokens = tokens.replace(start_token, "")
            else:
                end_token = end_token.group()
                start_token_escaped = re.escape(start_token)
                end_token_escaped = re.escape(end_token)
                content = re.search(f"{start_token_escaped}(.*?){end_token_escaped}", tokens, re.IGNORECASE)
                if content is not None:
                    content = content.group(1).strip()
                    if r"<s_" in content and r"</s_" in content:  # non-leaf node
                        value = self.token2json(content, is_inner_value=True)
                        if value:
                            if len(value) == 1:
                                value = value[0]
                            output[key] = value
                    else:  # leaf nodes
                        output[key] = []
                        for leaf in content.split(r"<sep/>"):
                            leaf = leaf.strip()
                            if (
                                leaf in self.decoder.tokenizer.get_added_vocab()
                                and leaf[0] == "<"
                                and leaf[-2:] == "/>"
                            ):
                                leaf = leaf[1:-2]  # for categorical special tokens
                            output[key].append(leaf)
                        if len(output[key]) == 1:
                            output[key] = output[key][0]

                tokens = tokens[tokens.find(end_token) + len(end_token) :].strip()
                if tokens[:6] == r"<sep/>":  # non-leaf nodes
                    return [output] + self.token2json(tokens[6:], is_inner_value=True)

        if len(output):
            return [output] if is_inner_value else output
        else:
            return [] if is_inner_value else {"text_sequence": tokens}
```
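The inverse direction can likewise be exercised without a tokenizer. This sketch follows the parsing loop of `token2json`; the `added_vocab` argument is a hypothetical stand-in for `tokenizer.get_added_vocab()`, and escaping the key with `re.escape` before building the closing-tag pattern is a small deviation from the method.

```python
import re
from typing import Any, FrozenSet


def token2json(tokens: str, is_inner_value: bool = False, added_vocab: FrozenSet[str] = frozenset()) -> Any:
    """Tokenizer-free sketch of DonutModel.token2json (added_vocab replaces tokenizer.get_added_vocab())."""
    output = {}
    while tokens:
        start = re.search(r"<s_(.*?)>", tokens, re.IGNORECASE)
        if start is None:
            break
        key = start.group(1)
        end = re.search(rf"</s_{re.escape(key)}>", tokens, re.IGNORECASE)
        start_token = start.group()
        if end is None:
            # Unclosed key: drop the dangling start token and keep parsing.
            tokens = tokens.replace(start_token, "")
            continue
        end_token = end.group()
        content = re.search(f"{re.escape(start_token)}(.*?){re.escape(end_token)}", tokens, re.IGNORECASE)
        if content is not None:
            content = content.group(1).strip()
            if "<s_" in content and "</s_" in content:  # nested object(s)
                value = token2json(content, is_inner_value=True, added_vocab=added_vocab)
                if value:
                    output[key] = value[0] if len(value) == 1 else value
            else:  # leaf value(s), possibly several joined by <sep/>
                leaves = []
                for leaf in content.split("<sep/>"):
                    leaf = leaf.strip()
                    if leaf in added_vocab and leaf[0] == "<" and leaf[-2:] == "/>":
                        leaf = leaf[1:-2]  # categorical special token such as <yes/>
                    leaves.append(leaf)
                output[key] = leaves[0] if len(leaves) == 1 else leaves
        tokens = tokens[tokens.find(end_token) + len(end_token):].strip()
        if tokens[:6] == "<sep/>":  # another sibling object follows
            return [output] + token2json(tokens[6:], is_inner_value=True, added_vocab=added_vocab)
    if output:
        return [output] if is_inner_value else output
    return [] if is_inner_value else {"text_sequence": tokens}


seq = "<s_menu><s_price>4.50</s_price><s_nm>Latte</s_nm><sep/><s_price>2.00</s_price><s_nm>Bagel</s_nm></s_menu>"
print(token2json(seq))
```

As in the original method, a list with a single element comes back as a scalar because of the `len(...) == 1` collapsing, so a JSON-to-tokens-to-JSON round trip is not always exact.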

```python
    @classmethod
    def from_pretrained(
        cls,
        pretrained_model_name_or_path: Union[str, bytes, os.PathLike],
        *model_args,
        **kwargs,
    ):
        r"""
        Instantiate a pretrained Donut model from a pre-trained model configuration

        Args:
            pretrained_model_name_or_path:
                Name of a pretrained model, either registered on huggingface.co or saved locally,
                e.g., `naver-clova-ix/donut-base`, or `naver-clova-ix/donut-base-finetuned-rvlcdip`
        """
        model = super(DonutModel, cls).from_pretrained(pretrained_model_name_or_path, revision="official", *model_args, **kwargs)

        # truncate or interpolate position embeddings of donut decoder
        max_length = kwargs.get("max_length", model.config.max_position_embeddings)
        if (
            max_length != model.config.max_position_embeddings
        ):  # if max_length of trained model differs from max_length you want to train
            model.decoder.model.model.decoder.embed_positions.weight = torch.nn.Parameter(
                model.decoder.resize_bart_abs_pos_emb(
                    model.decoder.model.model.decoder.embed_positions.weight,
                    max_length
                    + 2,  # https://github.com/huggingface/transformers/blob/v4.11.3/src/transformers/models/mbart/modeling_mbart.py#L118-L119
                )
            )
            model.config.max_position_embeddings = max_length

        return model
```
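`from_pretrained` delegates the resizing to `resize_bart_abs_pos_emb`, which is defined elsewhere in `model.py` and not shown in this excerpt. The pure-Python sketch below illustrates the truncate-or-interpolate idea named in the comment, assuming that growing the table uses linear interpolation along the position axis with half-pixel (`align_corners=False`-style) sampling; the real helper may differ in detail, and `resize_pos_emb` is a hypothetical name.

```python
from typing import List


def resize_pos_emb(weight: List[List[float]], new_len: int) -> List[List[float]]:
    """Hypothetical sketch: truncate, or linearly interpolate, a (num_positions x dim) table."""
    old_len = len(weight)
    if new_len <= old_len:
        # Shrinking: keep the first new_len learned positions unchanged.
        return [row[:] for row in weight[:new_len]]
    dim = len(weight[0])
    scale = old_len / new_len
    out = []
    for i in range(new_len):
        # Source coordinate with half-pixel centers, clamped at the left edge.
        src = max((i + 0.5) * scale - 0.5, 0.0)
        i0 = int(src)
        i1 = min(i0 + 1, old_len - 1)  # clamp at the right edge
        frac = src - i0
        out.append([(1 - frac) * weight[i0][d] + frac * weight[i1][d] for d in range(dim)])
    return out


print(resize_pos_emb([[0.0], [1.0]], 4))  # [[0.0], [0.25], [0.75], [1.0]]
```

The target length passed in the code is `max_length + 2` because mBART's learned positional embedding reserves two extra rows (an offset of 2), which is what the transformers link in the comment points to.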

donut/util.py

ADDED


@@ -0,0 +1,344 @@
