Commit 01f50ae

Parent(s): fb6f6e7

Upload 8 files

- .streamlit/config.toml +3 -0
- Demo.py +123 -0
- Dockerfile +70 -0
- images/Sentiment-Analysis.jpg +0 -0
- images/dataset.png +0 -0
- images/johnsnowlabs-sentiment-output.png +0 -0
- pages/Workflow & Model Overview.py +224 -0
- requirements.txt +5 -0

.streamlit/config.toml

ADDED

@@ -0,0 +1,3 @@

```toml
[theme]
base="light"
primaryColor="#29B4E8"
```

Demo.py

ADDED

@@ -0,0 +1,123 @@

```python
import streamlit as st
import sparknlp
import os
import pandas as pd

from sparknlp.base import *
from sparknlp.annotator import *
from pyspark.ml import Pipeline
from sparknlp.pretrained import PretrainedPipeline

# Page configuration
st.set_page_config(
    layout="wide",
    page_title="Spark NLP Demos App",
    initial_sidebar_state="auto"
)

# CSS for styling
st.markdown("""
<style>
    .main-title {
        font-size: 36px;
        color: #4A90E2;
        font-weight: bold;
        text-align: center;
    }
    .section p, .section ul {
        color: #666666;
    }
</style>
""", unsafe_allow_html=True)

@st.cache_resource
def init_spark():
    return sparknlp.start()

@st.cache_resource
def create_pipeline(model):
    documentAssembler = DocumentAssembler()\
        .setInputCol("text")\
        .setOutputCol("document")

    use = UniversalSentenceEncoder.pretrained("tfhub_use", "en")\
        .setInputCols(["document"])\
        .setOutputCol("sentence_embeddings")


    sentimentdl = ClassifierDLModel.pretrained(model)\
        .setInputCols(["sentence_embeddings"])\
        .setOutputCol("sentiment")

    nlpPipeline = Pipeline(stages = [documentAssembler, use, sentimentdl])

    return nlpPipeline

def fit_data(pipeline, data):
    empty_df = spark.createDataFrame([['']]).toDF('text')
    pipeline_model = pipeline.fit(empty_df)
    model = LightPipeline(pipeline_model)
    results = model.fullAnnotate(data)[0]

    return results['sentiment'][0].result

# Set up the page layout
st.markdown('<div class="main-title">State-of-the-Art Emotion Detector in Tweets with Spark NLP</div>', unsafe_allow_html=True)

# Sidebar content
model = st.sidebar.selectbox(
    "Choose the pretrained model",
    ["classifierdl_use_emotion"],
    help="For more info about the models visit: https://sparknlp.org/models"
)

# Reference notebook link in sidebar
link = """
<a href="https://colab.research.google.com/github/JohnSnowLabs/spark-nlp-workshop/blob/master/tutorials/streamlit_notebooks/SENTIMENT_EN_EMOTION.ipynb">
    <img src="https://colab.research.google.com/assets/colab-badge.svg" style="zoom: 1.3" alt="Open In Colab"/>
</a>
"""
st.sidebar.markdown('Reference notebook:')
st.sidebar.markdown(link, unsafe_allow_html=True)

# Load examples
examples = [
    "I am SO happy the news came out in time for my birthday this weekend! My inner 7-year-old cannot WAIT!",
    "That moment when you see your friend in a commercial. Hahahaha!",
    "My soul has just been pierced by the most evil look from @rickosborneorg. A mini panic attack & chill in bones followed soon after.",
    "For some reason I woke up thinkin it was Friday then I got to school and realized its really Monday -_-",
    "I'd probably explode into a jillion pieces from the inablility to contain all of my if I had a Whataburger patty melt right now. #drool",
    "These are not emotions. They are simply irrational thoughts feeding off of an emotion",
    "Found out im gonna be with sarah bo barah in ny for one day!!! Eggcitement :)",
    "That awkward moment when you find a perfume box full of sensors!",
    "Just home from group celebration - dinner at Trattoria Gianni, then Hershey Felder's performance - AMAZING!!",
    "Nooooo! My dad turned off the internet so I can't listen to band music!"
]

st.subheader("Automatically identify joy, surprise, fear, and sadness in tweets using our pretrained Spark NLP DL classifier.")

selected_text = st.selectbox("Select a sample", examples)
custom_input = st.text_input("Try it for yourself!")

if custom_input:
    selected_text = custom_input
elif selected_text:
    selected_text = selected_text

st.subheader('Selected Text')
st.write(selected_text)

# Initialize Spark and create pipeline
spark = init_spark()
pipeline = create_pipeline(model)
output = fit_data(pipeline, selected_text)

# Display output sentence
if output == 'joy':
    st.markdown("""<h3>This seems like a <span style="color: #f0a412">{}</span> tweet. <span style="font-size:35px;">😂</span></h3>""".format('joyous'), unsafe_allow_html=True)
elif output == 'surprise':
    st.markdown("""<h3>This seems like a <span style="color: #209DDC">{}</span> tweet. <span style="font-size:35px;">😊</span></h3>""".format('surprised'), unsafe_allow_html=True)
elif output == 'sadness':
    st.markdown("""<h3>This seems like a <span style="color: #8F7F6C">{}</span> tweet. <span style="font-size:35px;">😟</span></h3>""".format('sad'), unsafe_allow_html=True)
elif output == 'fear':
    st.markdown("""<h3>This seems like a <span style="color: #B64434">{}</span> tweet. <span style="font-size:35px;">😱</span></h3>""".format('fearful'), unsafe_allow_html=True)
```
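The four `if`/`elif` branches at the end of Demo.py differ only in the adjective, color, and emoji they render for each label of `classifierdl_use_emotion`. A table-driven version is easier to extend and can give an unexpected label a fallback instead of rendering nothing. The following is a minimal, hypothetical refactor in plain Python: `EMOTION_STYLES`, `TEMPLATE`, and `render_emotion_html` are illustrative names that do not appear in the commit, and the fallback message is an assumption.

```python
import html
from typing import Dict, Tuple

# Hypothetical lookup table: label -> (adjective, CSS color, emoji).
# The values are copied from the if/elif chain in Demo.py.
EMOTION_STYLES: Dict[str, Tuple[str, str, str]] = {
    "joy": ("joyous", "#f0a412", "😂"),
    "surprise": ("surprised", "#209DDC", "😊"),
    "sadness": ("sad", "#8F7F6C", "😟"),
    "fear": ("fearful", "#B64434", "😱"),
}

# Same markup that Demo.py builds with str.format in each branch.
TEMPLATE = (
    '<h3>This seems like a <span style="color: {color}">{adjective}</span> tweet. '
    '<span style="font-size:35px;">{emoji}</span></h3>'
)


def render_emotion_html(label: str) -> str:
    """Return the HTML snippet for a predicted emotion label.

    Unknown labels get an escaped fallback message (an assumed behavior;
    the original chain simply renders nothing for them).
    """
    style = EMOTION_STYLES.get(label)
    if style is None:
        return f"<h3>Unrecognized emotion label: {html.escape(label)}</h3>"
    adjective, color, emoji = style
    return TEMPLATE.format(color=color, adjective=adjective, emoji=emoji)


if __name__ == "__main__":
    print(render_emotion_html("joy"))
```

In Demo.py, the branches could then collapse into a single call such as `st.markdown(render_emotion_html(output), unsafe_allow_html=True)`.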

Dockerfile

ADDED

@@ -0,0 +1,70 @@

```dockerfile
# Download base image ubuntu 18.04
FROM ubuntu:18.04

# Set environment variables
ENV NB_USER jovyan
ENV NB_UID 1000
ENV HOME /home/${NB_USER}

# Install required packages
RUN apt-get update && apt-get install -y \
    tar \
    wget \
    bash \
    rsync \
    gcc \
    libfreetype6-dev \
    libhdf5-serial-dev \
    libpng-dev \
    libzmq3-dev \
    python3 \
    python3-dev \
    python3-pip \
    unzip \
    pkg-config \
    software-properties-common \
    graphviz \
    openjdk-8-jdk \
    ant \
    ca-certificates-java \
    && apt-get clean \
    && update-ca-certificates -f;

# Install Python 3.8 and pip
RUN add-apt-repository ppa:deadsnakes/ppa \
    && apt-get update \
    && apt-get install -y python3.8 python3-pip \
    && apt-get clean;

# Set up JAVA_HOME
ENV JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64/
RUN mkdir -p ${HOME} \
    && echo "export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/" >> ${HOME}/.bashrc \
    && chown -R ${NB_UID}:${NB_UID} ${HOME}

# Create a new user named "jovyan" with user ID 1000
RUN useradd -m -u ${NB_UID} ${NB_USER}

# Switch to the "jovyan" user
USER ${NB_USER}

# Set home and path variables for the user
ENV HOME=/home/${NB_USER} \
    PATH=/home/${NB_USER}/.local/bin:$PATH

# Set the working directory to the user's home directory
WORKDIR ${HOME}

# Upgrade pip and install Python dependencies
RUN python3.8 -m pip install --upgrade pip
COPY requirements.txt /tmp/requirements.txt
RUN python3.8 -m pip install -r /tmp/requirements.txt

# Copy the application code into the container at /home/jovyan
COPY --chown=${NB_USER}:${NB_USER} . ${HOME}

# Expose port for Streamlit
EXPOSE 7860

# Define the entry point for the container
ENTRYPOINT ["streamlit", "run", "Demo.py", "--server.port=7860", "--server.address=0.0.0.0"]
```

images/Sentiment-Analysis.jpg

ADDED

images/dataset.png

ADDED

images/johnsnowlabs-sentiment-output.png

ADDED

pages/Workflow & Model Overview.py

ADDED

|

@@ -0,0 +1,224 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

# Custom CSS for better styling

|

| 4 |

+

st.markdown("""

|

| 5 |

+

<style>

|

| 6 |

+

.main-title {

|

| 7 |

+

font-size: 36px;

|

| 8 |

+

color: #4A90E2;

|

| 9 |

+

font-weight: bold;

|

| 10 |

+

text-align: center;

|

| 11 |

+

}

|

| 12 |

+

.sub-title {

|

| 13 |

+

font-size: 24px;

|

| 14 |

+

color: #4A90E2;

|

| 15 |

+

margin-top: 20px;

|

| 16 |

+

}

|

| 17 |

+

.section {

|

| 18 |

+

background-color: #f9f9f9;

|

| 19 |

+

padding: 15px;

|

| 20 |

+

border-radius: 10px;

|

| 21 |

+

margin-top: 20px;

|

| 22 |

+

}

|

| 23 |

+

.section h2 {

|

| 24 |

+

font-size: 22px;

|

| 25 |

+

color: #4A90E2;

|

| 26 |

+

}

|

| 27 |

+

.section p, .section ul {

|

| 28 |

+

color: #666666;

|

| 29 |

+

}

|

| 30 |

+

.link {

|

| 31 |

+

color: #4A90E2;

|

| 32 |

+

text-decoration: none;

|

| 33 |

+

}

|

| 34 |

+

</style>

|

| 35 |

+

""", unsafe_allow_html=True)

|

| 36 |

+

|

| 37 |

+

# Introduction

|

| 38 |

+

st.markdown('<div class="main-title">Sentiment Analysis with Spark NLP</div>', unsafe_allow_html=True)

|

| 39 |

+

|

| 40 |

+

st.markdown("""

|

| 41 |

+

<div class="section">

|

| 42 |

+

<p>Welcome to the Spark NLP Sentiment Analysis Demo App! Sentiment analysis is an automated process capable of understanding the feelings or opinions that underlie a text. This process is considered a text classification and is one of the most interesting subfields of NLP. Using Spark NLP, it is possible to analyze the sentiment in a text with high accuracy.</p>

|

| 43 |

+

<p>This app demonstrates how to use Spark NLP's SentimentDetector to perform sentiment analysis using a rule-based approach.</p>

|

| 44 |

+

</div>

|

| 45 |

+

""", unsafe_allow_html=True)

|

| 46 |

+

|

| 47 |

+

st.image('images/Sentiment-Analysis.jpg',caption="Difference between rule-based and machine learning based sentiment analysis applications", use_column_width='auto')

|

| 48 |

+

|

| 49 |

+

# About Sentiment Analysis

|

| 50 |

+

st.markdown('<div class="sub-title">About Sentiment Analysis</div>', unsafe_allow_html=True)

|

| 51 |

+

st.markdown("""

|

| 52 |

+

<div class="section">

|

| 53 |

+

<p>Sentiment analysis studies the subjective information in an expression, such as opinions, appraisals, emotions, or attitudes towards a topic, person, or entity. Expressions can be classified as positive, negative, or neutral — in some cases, even more detailed.</p>

|

| 54 |

+

<p>Some popular sentiment analysis applications include social media monitoring, customer support management, and analyzing customer feedback.</p>

|

| 55 |

+

</div>

|

| 56 |

+

""", unsafe_allow_html=True)

|

| 57 |

+

|

| 58 |

+

# Using SentimentDetector in Spark NLP

|

| 59 |

+

st.markdown('<div class="sub-title">Using SentimentDetector in Spark NLP</div>', unsafe_allow_html=True)

|

| 60 |

+

st.markdown("""

|

| 61 |

+

<div class="section">

|

| 62 |

+

<p>The SentimentDetector annotator in Spark NLP uses a rule-based approach to analyze the sentiment in text data. This method involves using a set of predefined rules or patterns to classify text as positive, negative, or neutral.</p>

|

| 63 |

+

<p>Spark NLP also provides Machine Learning (ML) and Deep Learning (DL) solutions for sentiment analysis. If you are interested in those approaches, please check the <a class="link" href="https://nlp.johnsnowlabs.com/docs/en/annotators#viveknsentiment" target="_blank" rel="noopener">ViveknSentiment </a> and <a class="link" href="https://nlp.johnsnowlabs.com/docs/en/annotators#sentimentdl" target="_blank" rel="noopener">SentimentDL</a> annotators of Spark NLP.</p>

|

| 64 |

+

</div>

|

| 65 |

+

""", unsafe_allow_html=True)

|

| 66 |

+

|

| 67 |

+

st.markdown('<h2 class="sub-title">Example Usage in Python</h2>', unsafe_allow_html=True)

|

| 68 |

+

st.markdown('<p>Here’s how you can implement sentiment analysis using the SentimentDetector annotator in Spark NLP:</p>', unsafe_allow_html=True)

|

| 69 |

+

|

| 70 |

+

# Setup Instructions

|

| 71 |

+

st.markdown('<div class="sub-title">Setup</div>', unsafe_allow_html=True)

|

| 72 |

+

st.markdown('<p>To install Spark NLP in Python, use your favorite package manager (conda, pip, etc.). For example:</p>', unsafe_allow_html=True)

|

| 73 |

+

st.code("""

|

| 74 |

+

pip install spark-nlp

|

| 75 |

+

pip install pyspark

|

| 76 |

+

""", language="bash")

|

| 77 |

+

|

| 78 |

+

st.markdown("<p>Then, import Spark NLP and start a Spark session:</p>", unsafe_allow_html=True)

|

| 79 |

+

st.code("""

|

| 80 |

+

import sparknlp

|

| 81 |

+

|

| 82 |

+

# Start Spark Session

|

| 83 |

+

spark = sparknlp.start()

|

| 84 |

+

""", language='python')

|

| 85 |

+

|

| 86 |

+

# load data

|

| 87 |

+

st.markdown('<div class="sub-title">Start by loading the Dataset, Lemmas and the Sentiment Dictionary.</div>', unsafe_allow_html=True)

|

| 88 |

+

st.code("""

|

| 89 |

+

!wget -N https://s3.amazonaws.com/auxdata.johnsnowlabs.com/public/resources/en/lemma-corpus-small/lemmas_small.txt -P /tmp

|

| 90 |

+

!wget -N https://s3.amazonaws.com/auxdata.johnsnowlabs.com/public/resources/en/sentiment-corpus/default-sentiment-dict.txt -P /tmp

|

| 91 |

+

""", language="bash")

|

| 92 |

+

|

| 93 |

+

st.image('images/dataset.png', caption="First few lines of the lemmas and sentiment dictionary", use_column_width='auto')

|

| 94 |

+

|

| 95 |

+

# Sentiment Analysis Example

|

| 96 |

+

st.markdown('<div class="sub-title">Example Usage: Sentiment Analysis with SentimentDetector</div>', unsafe_allow_html=True)

|

| 97 |

+

st.code('''

|

| 98 |

+

from sparknlp.base import DocumentAssembler, Pipeline, Finisher

|

| 99 |

+

from sparknlp.annotator import (

|

| 100 |

+

SentenceDetector,

|

| 101 |

+

Tokenizer,

|

| 102 |

+

Lemmatizer,

|

| 103 |

+

SentimentDetector

|

| 104 |

+

)

|

| 105 |

+

import pyspark.sql.functions as F

|

| 106 |

+

|

| 107 |

+

# Step 1: Transforms raw texts to document annotation

|

| 108 |

+

document_assembler = (

|

| 109 |

+

DocumentAssembler()

|

| 110 |

+

.setInputCol("text")

|

| 111 |

+

.setOutputCol("document")

|

| 112 |

+

)

|

| 113 |

+

|

| 114 |

+

# Step 2: Sentence Detection

|

| 115 |

+

sentence_detector = SentenceDetector().setInputCols(["document"]).setOutputCol("sentence")

|

| 116 |

+

|

| 117 |

+

# Step 3: Tokenization

|

| 118 |

+

tokenizer = Tokenizer().setInputCols(["sentence"]).setOutputCol("token")

|

| 119 |

+

|

| 120 |

+

# Step 4: Lemmatization

|

| 121 |

+

lemmatizer = (

|

| 122 |

+

Lemmatizer()

|

| 123 |

+

.setInputCols("token")

|

| 124 |

+

.setOutputCol("lemma")

|

| 125 |

+

.setDictionary("/tmp/lemmas_small.txt", key_delimiter="->", value_delimiter="\\t")

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

# Step 5: Sentiment Detection

|

| 129 |

+

sentiment_detector = (

|

| 130 |

+

SentimentDetector()

|

| 131 |

+

.setInputCols(["lemma", "sentence"])

|

| 132 |

+

.setOutputCol("sentiment_score")

|

| 133 |

+

.setDictionary("/tmp/default-sentiment-dict.txt", ",")

|

| 134 |

+

)

|

| 135 |

+

|

| 136 |

+

# Step 6: Finisher

|

| 137 |

+

finisher = (

|

| 138 |

+

Finisher()

|

| 139 |

+

.setInputCols(["sentiment_score"])

|

| 140 |

+

.setOutputCols(["sentiment"])

|

| 141 |

+

)

|

| 142 |

+

|

| 143 |

+

# Define the pipeline

|

| 144 |

+

pipeline = Pipeline(

|

| 145 |

+

stages=[

|

| 146 |

+

document_assembler,

|

| 147 |

+

sentence_detector,

|

| 148 |

+

tokenizer,

|

| 149 |

+

lemmatizer,

|

| 150 |

+

sentiment_detector,

|

| 151 |

+

finisher,

|

| 152 |

+

]

|

| 153 |

+

)

|

| 154 |

+

|

| 155 |

+

# Create a spark Data Frame with an example sentence

|

| 156 |

+

data = spark.createDataFrame(

|

| 157 |

+

[

|

| 158 |

+

["The restaurant staff is really nice"]

|

| 159 |

+

]

|

| 160 |

+

).toDF("text") # use the column name `text` defined in the pipeline as input

|

| 161 |

+

|

| 162 |

+

# Fit-transform to get predictions

|

| 163 |

+

result = pipeline.fit(data).transform(data).show(truncate=50)

|

| 164 |

+

''', language='python')

|

| 165 |

+

|

| 166 |

+

st.text("""

|

| 167 |

+

+-----------------------------------+----------+

|

| 168 |

+

| text| sentiment|

|

| 169 |

+

+-----------------------------------+----------+

|

| 170 |

+

|The restaurant staff is really nice|[positive]|

|

| 171 |

+

+-----------------------------------+----------+

|

| 172 |

+

""")

|

| 173 |

+

|

| 174 |

+

st.markdown("""

|

| 175 |

+

<p>The code snippet demonstrates how to set up a pipeline in Spark NLP to perform sentiment analysis on text data using the SentimentDetector annotator. The resulting DataFrame contains the sentiment predictions.</p>

|

| 176 |

+

""", unsafe_allow_html=True)

|

| 177 |

+

|

| 178 |

+

# One-liner Alternative

|

| 179 |

+

st.markdown('<div class="sub-title">One-liner Alternative</div>', unsafe_allow_html=True)

|

| 180 |

+

st.markdown("""

|

| 181 |

+

<div class="section">

|

| 182 |

+

<p>In October 2022, John Snow Labs released the open-source <code>johnsnowlabs</code> library that contains all the company products, open-source and licensed, under one common library. This simplified the workflow, especially for users working with more than one of the libraries (e.g., Spark NLP + Healthcare NLP). This new library is a wrapper on all of John Snow Lab’s libraries and can be installed with pip:</p>

|

| 183 |

+

<p><code>pip install johnsnowlabs</code></p>

|

| 184 |

+

</div>

|

| 185 |

+

""", unsafe_allow_html=True)

|

| 186 |

+

|

| 187 |

+

st.markdown('<p>To run sentiment analysis with one line of code, we can simply:</p>', unsafe_allow_html=True)

|

| 188 |

+

st.code("""

|

| 189 |

+

# Import the NLP module which contains Spark NLP and NLU libraries

|

| 190 |

+

from johnsnowlabs import nlp

|

| 191 |

+

|

| 192 |

+

sample_text = "The restaurant staff is really nice"

|

| 193 |

+

|

| 194 |

+

# Returns a pandas DataFrame, we select the desired columns

|

| 195 |

+

nlp.load('en.sentiment').predict(sample_text, output_level='sentence')

|

| 196 |

+

""", language='python')

|

| 197 |

+

|

| 198 |

+

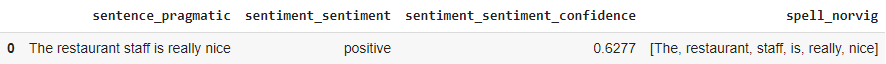

st.image('images/johnsnowlabs-sentiment-output.png', use_column_width='auto')

|

| 199 |

+

|

| 200 |

+

st.markdown("""

|

| 201 |

+

<p>This approach demonstrates how to use the <code>johnsnowlabs</code> library to perform sentiment analysis with a single line of code. The resulting DataFrame contains the sentiment predictions.</p>

|

| 202 |

+

""", unsafe_allow_html=True)

|

| 203 |

+

|

| 204 |

+

# Conclusion

|

| 205 |

+

st.markdown("""

|

| 206 |

+

<div class="section">

|

| 207 |

+

<h2>Conclusion</h2>

|

| 208 |

+

<p>In this app, we demonstrated how to use Spark NLP's SentimentDetector annotator to perform sentiment analysis on text data. These powerful tools enable users to efficiently process large datasets and identify sentiment, providing deeper insights for various applications. By integrating these annotators into your NLP pipelines, you can enhance text understanding, information extraction, and customer sentiment analysis.</p>

|

| 209 |

+

</div>

|

| 210 |

+

""", unsafe_allow_html=True)

|

| 211 |

+

|

| 212 |

+

# References and Additional Information

|

| 213 |

+

st.markdown('<div class="sub-title">For additional information, please check the following references.</div>', unsafe_allow_html=True)

|

| 214 |

+

|

| 215 |

+

st.markdown("""

|

| 216 |

+

<div class="section">

|

| 217 |

+

<ul>

|

| 218 |

+

<li>Documentation : <a href="https://nlp.johnsnowlabs.com/docs/en/transformers#sentiment" target="_blank" rel="noopener">SentimentDetector</a></li>

|

| 219 |

+

<li>Python Docs : <a href="https://nlp.johnsnowlabs.com/api/python/reference/autosummary/sparknlp/annotator/sentiment/index.html#sparknlp.annotator.sentiment.SentimentDetector" target="_blank" rel="noopener">SentimentDetector</a></li>

|

| 220 |

+

<li>Scala Docs : <a href="https://nlp.johnsnowlabs.com/api/com/johnsnowlabs/nlp/annotators/sentiment/SentimentDetector.html" target="_blank" rel="noopener">SentimentDetector</a></li>

|

| 221 |

+

<li>Example Notebook : <a href="https://github.com/JohnSnowLabs/spark-nlp-workshop/blob/master/jupyter/training/english/classification/Sentiment%20Analysis.ipynb" target="_blank" rel="noopener">Sentiment Analysis</a></li>

|

| 222 |

+

</ul>

|

| 223 |

+

</div>

|

| 224 |

+

""", unsafe_allow_html=True)
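The workflow page describes SentimentDetector as rule-based: tokens are lemmatized with a dictionary and then matched against a sentiment dictionary. To make that flow concrete, here is a toy, pure-Python approximation; it is not Spark NLP's implementation. The sample dictionary lines are hypothetical, the `lemma -> form<TAB>form` layout is only inferred from the `key_delimiter="->"` and `value_delimiter="\t"` arguments, and the scoring rule (+1 per positive lemma, -1 per negative lemma, neutral at zero) is a simplifying assumption; the real annotator applies further rules, such as multipliers for intensifying and negating words.

```python
import re
from typing import Dict, List

# Hypothetical sample lines mimicking the two downloaded files (assumed layouts):
#   lemma file:     "<lemma> -> <form>\t<form>..."
#   sentiment file: "<word>,<label>"
LEMMA_LINES = [
    "be -> is\tare\twas\twere",
    "staff -> staff\tstaffs",
]
SENTIMENT_LINES = [
    "nice,positive",
    "rude,negative",
]


def load_lemmas(lines: List[str]) -> Dict[str, str]:
    """Map every inflected form to its lemma."""
    form_to_lemma: Dict[str, str] = {}
    for line in lines:
        lemma, forms = line.split("->", 1)
        for form in forms.strip().split("\t"):
            form_to_lemma[form.strip()] = lemma.strip()
    return form_to_lemma


def load_sentiment(lines: List[str]) -> Dict[str, str]:
    """Map each dictionary word to its label ('positive' or 'negative')."""
    pairs = (line.split(",", 1) for line in lines)
    return {word.strip(): label.strip() for word, label in pairs}


def detect_sentiment(text: str, lemmas: Dict[str, str], sentiment: Dict[str, str]) -> str:
    """Toy rule: +1 per positive lemma, -1 per negative lemma."""
    tokens = re.findall(r"[A-Za-z']+", text.lower())
    score = 0
    for token in tokens:
        lemma = lemmas.get(token, token)  # fall back to the token itself
        label = sentiment.get(lemma)
        if label == "positive":
            score += 1
        elif label == "negative":
            score -= 1
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    lemmas = load_lemmas(LEMMA_LINES)
    sentiment = load_sentiment(SENTIMENT_LINES)
    print(detect_sentiment("The restaurant staff is really nice", lemmas, sentiment))  # positive
```

Running the module prints `positive` for the example sentence used on the page, because "nice" is the only dictionary hit.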

requirements.txt

ADDED

@@ -0,0 +1,5 @@

```text
streamlit
pandas
numpy
spark-nlp
pyspark
```