Spaces:

Runtime error

Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .DS_Store +0 -0

- .env.example +33 -0

- .gitattributes +5 -0

- .github/FUNDING.yml +13 -0

- .github/ISSUE_TEMPLATE/bug_report.md +27 -0

- .github/ISSUE_TEMPLATE/feature_request.md +20 -0

- .github/PULL_REQUEST_TEMPLATE.yml +25 -0

- .github/dependabot.yml +14 -0

- .github/workflows/label.yml +22 -0

- .github/workflows/pylint.yml +23 -0

- .github/workflows/python-publish.yml +32 -0

- .github/workflows/quality.yml +23 -0

- .github/workflows/ruff.yml +8 -0

- .github/workflows/run_test.yml +23 -0

- .github/workflows/stale.yml +27 -0

- .github/workflows/test.yml +49 -0

- .github/workflows/unit-test.yml +45 -0

- .github/workflows/update_space.yml +28 -0

- .gitignore +182 -0

- .readthedocs.yml +13 -0

- =0.3.0 +0 -0

- =3.38.0 +64 -0

- CONTRIBUTING.md +248 -0

- Dockerfile +48 -0

- LICENSE +201 -0

- README.md +306 -8

- api/__init__.py +0 -0

- api/app.py +47 -0

- api/olds/container.py +62 -0

- api/olds/main.py +130 -0

- api/olds/worker.py +44 -0

- apps/discord.py +38 -0

- docs/applications/customer_support.md +42 -0

- docs/applications/enterprise.md +0 -0

- docs/applications/marketing_agencies.md +64 -0

- docs/architecture.md +358 -0

- docs/assets/css/extra.css +7 -0

- docs/assets/img/SwarmsLogoIcon.png +0 -0

- docs/assets/img/swarmsbanner.png +0 -0

- docs/assets/img/tools/output.png +0 -0

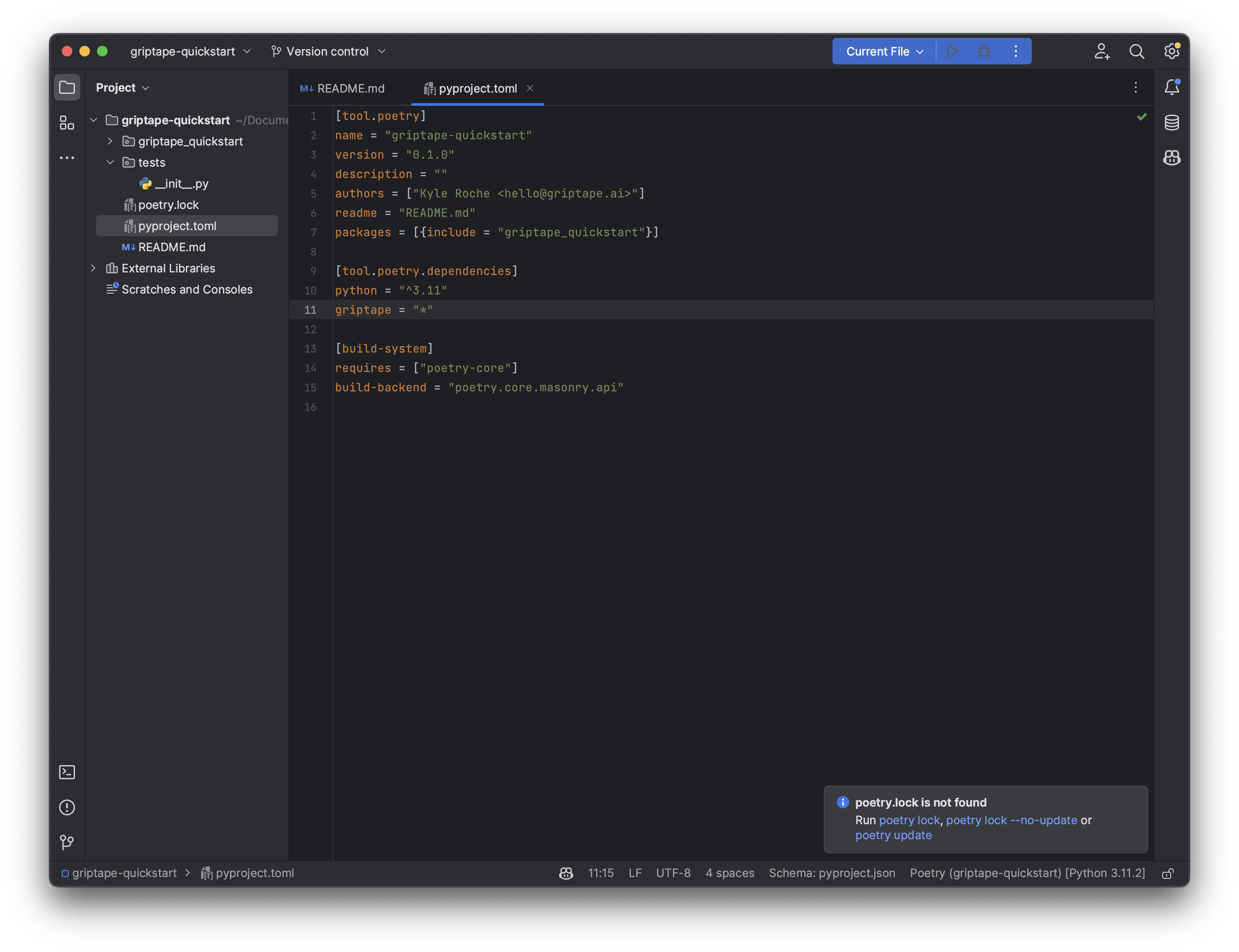

- docs/assets/img/tools/poetry_setup.png +0 -0

- docs/assets/img/tools/toml.png +0 -0

- docs/bounties.md +86 -0

- docs/checklist.md +122 -0

- docs/contributing.md +123 -0

- docs/demos.md +9 -0

- docs/design.md +152 -0

- docs/distribution.md +469 -0

- docs/examples/count-tokens.md +29 -0

- docs/examples/index.md +3 -0

.DS_Store

ADDED

Binary file (8.2 kB).

.env.example

ADDED

@@ -0,0 +1,33 @@

```dotenv
OPENAI_API_KEY="your_openai_api_key_here"
GOOGLE_API_KEY=""
ANTHROPIC_API_KEY=""

WOLFRAM_ALPHA_APPID="your_wolfram_alpha_appid_here"
ZAPIER_NLA_API_KEY="your_zapier_nla_api_key_here"

EVAL_PORT=8000
MODEL_NAME="gpt-4"
CELERY_BROKER_URL="redis://localhost:6379"

SERVER="http://localhost:8000"
USE_GPU=True
PLAYGROUND_DIR="playground"

LOG_LEVEL="INFO"
BOT_NAME="Orca"

WINEDB_HOST="your_winedb_host_here"
WINEDB_PASSWORD="your_winedb_password_here"
BING_SEARCH_URL="your_bing_search_url_here"

BING_SUBSCRIPTION_KEY="your_bing_subscription_key_here"
SERPAPI_API_KEY="your_serpapi_api_key_here"
IFTTTKey="your_iftttkey_here"

BRAVE_API_KEY="your_brave_api_key_here"
SPOONACULAR_KEY="your_spoonacular_key_here"
HF_API_KEY="your_huggingface_api_key_here"


REDIS_HOST=
REDIS_PORT=
```
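The example file is plain `KEY=VALUE` text. As a minimal sketch of how a Python process might consume it, assuming no third-party loader such as python-dotenv is used, the hypothetical helpers `parse_env` and `env_bool` below parse the text, strip one pair of surrounding quotes, and turn the `USE_GPU=True` string into a boolean. Every value arrives as a string, so `EVAL_PORT` has to be converted explicitly, and empty entries such as `REDIS_PORT=` come back as `""`.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines (hypothetical helper, not part of the repo)."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment; ignore it
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def env_bool(values: Dict[str, str], key: str, default: bool = False) -> bool:
    """Interpret common truthy spellings such as True, true, 1, yes."""
    raw = values.get(key, "")
    if raw == "":
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


sample = '''
OPENAI_API_KEY="your_openai_api_key_here"
EVAL_PORT=8000
USE_GPU=True
REDIS_PORT=
'''

settings = parse_env(sample)
print(settings["OPENAI_API_KEY"])      # your_openai_api_key_here
print(int(settings["EVAL_PORT"]))      # 8000
print(env_bool(settings, "USE_GPU"))   # True
print(repr(settings["REDIS_PORT"]))    # ''
```

Keeping the quote stripping to a single matching pair means a value that legitimately contains quotes in the middle is left untouched.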

.gitattributes

CHANGED

```diff
@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+images/Agora-Banner-blend.png filter=lfs diff=lfs merge=lfs -text
+images/swarms_demo.mp4 filter=lfs diff=lfs merge=lfs -text
+swarms/agents/models/segment_anything/assets/masks1.png filter=lfs diff=lfs merge=lfs -text
+swarms/agents/models/segment_anything/assets/minidemo.gif filter=lfs diff=lfs merge=lfs -text
+swarms/agents/models/segment_anything/assets/notebook2.png filter=lfs diff=lfs merge=lfs -text
```
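Each `filter=lfs diff=lfs merge=lfs -text` line routes matching paths to Git LFS. A rough sketch of which paths the patterns visible in this hunk capture (the first 32 lines of the file are not shown) is below. It assumes a simplified reading of the matching rules: a pattern without `/` is compared against the file's basename at any depth, and a pattern containing `/` is compared against the full repository-relative path. `fnmatch` only approximates Git's wildmatch (its `*` also crosses `/`), so `git check-attr filter -- <path>` remains the authoritative check; `tracked_by_lfs` is a hypothetical name.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns visible in the .gitattributes hunk (earlier lines omitted).
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "images/Agora-Banner-blend.png",
    "images/swarms_demo.mp4",
    "swarms/agents/models/segment_anything/assets/masks1.png",
    "swarms/agents/models/segment_anything/assets/minidemo.gif",
    "swarms/agents/models/segment_anything/assets/notebook2.png",
)


def tracked_by_lfs(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: does any pattern mark `path` as an LFS file?"""
    name = PurePosixPath(path).name
    for pattern in patterns:
        if "/" in pattern:
            # Patterns containing a slash are anchored to the repository root.
            if fnmatchcase(path, pattern.lstrip("/")):
                return True
        elif fnmatchcase(name, pattern):
            # Slash-free patterns match the basename in any directory.
            return True
    return False


for p in ["data/archive.zip", "runs/events.out.tfevents.1", "images/swarms_demo.mp4", "README.md"]:
    print(p, tracked_by_lfs(p))
```

The anchoring rule is why `images/swarms_demo.mp4` only covers the top-level `images/` directory, while `*.zip` covers archives anywhere in the tree.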

.github/FUNDING.yml

ADDED

@@ -0,0 +1,13 @@

```yaml
# These are supported funding model platforms

github: [kyegomez]
patreon: # Replace with a single Patreon username
open_collective: # Replace with a single Open Collective username
ko_fi: # Replace with a single Ko-fi username
tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
liberapay: # Replace with a single Liberapay username
issuehunt: # Replace with a single IssueHunt username
otechie: # Replace with a single Otechie username
lfx_crowdfunding: # Replace with a single LFX Crowdfunding project-name e.g., cloud-foundry
custom: #Nothing
```

.github/ISSUE_TEMPLATE/bug_report.md

ADDED

@@ -0,0 +1,27 @@

```markdown
---
name: Bug report
about: Create a report to help us improve
title: "[BUG] "
labels: bug
assignees: kyegomez

---

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Additional context**
Add any other context about the problem here.
```

.github/ISSUE_TEMPLATE/feature_request.md

ADDED

@@ -0,0 +1,20 @@

```markdown
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: ''
assignees: 'kyegomez'

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.
```

.github/PULL_REQUEST_TEMPLATE.yml

ADDED

@@ -0,0 +1,25 @@

```text
<!-- Thank you for contributing to Swarms!

Replace this comment with:
- Description: a description of the change,
- Issue: the issue # it fixes (if applicable),
- Dependencies: any dependencies required for this change,
- Tag maintainer: for a quicker response, tag the relevant maintainer (see below),
- Twitter handle: we announce bigger features on Twitter. If your PR gets announced and you'd like a mention, we'll gladly shout you out!

If you're adding a new integration, please include:
1. a test for the integration, preferably unit tests that do not rely on network access,
2. an example notebook showing its use.

Maintainer responsibilities:
- General / Misc / if you don't know who to tag: kye@apac.ai
- DataLoaders / VectorStores / Retrievers: kye@apac.ai
- Models / Prompts: kye@apac.ai
- Memory: kye@apac.ai
- Agents / Tools / Toolkits: kye@apac.ai
- Tracing / Callbacks: kye@apac.ai
- Async: kye@apac.ai

If no one reviews your PR within a few days, feel free to kye@apac.ai

See contribution guidelines for more information on how to write/run tests, lint, etc: https://github.com/kyegomez/swarms
```

.github/dependabot.yml

ADDED

@@ -0,0 +1,14 @@

```yaml
# https://docs.github.com/en/code-security/supply-chain-security/keeping-your-dependencies-updated-automatically/configuration-options-for-dependency-updates

version: 2
updates:
  - package-ecosystem: "github-actions"
    directory: "/"
    schedule:
      interval: "weekly"

  - package-ecosystem: "pip"
    directory: "/"
    schedule:
      interval: "weekly"
```

.github/workflows/label.yml

ADDED

@@ -0,0 +1,22 @@

```yaml
# This workflow will triage pull requests and apply a label based on the
# paths that are modified in the pull request.
#
# To use this workflow, you will need to set up a .github/labeler.yml
# file with configuration. For more information, see:
# https://github.com/actions/labeler

name: Labeler
on: [pull_request_target]

jobs:
  label:

    runs-on: ubuntu-latest
    permissions:
      contents: read
      pull-requests: write

    steps:
    - uses: actions/labeler@v4
      with:
        repo-token: "${{ secrets.GITHUB_TOKEN }}"
```

.github/workflows/pylint.yml

ADDED

@@ -0,0 +1,23 @@

```yaml
name: Pylint

on: [push]

jobs:
  build:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: ["3.8", "3.9", "3.10"]
    steps:
    - uses: actions/checkout@v4
    - name: Set up Python ${{ matrix.python-version }}
      uses: actions/setup-python@v4
      with:
        python-version: ${{ matrix.python-version }}
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install pylint
    - name: Analysing the code with pylint
      run: |
        pylint $(git ls-files '*.py')
```

.github/workflows/python-publish.yml

ADDED

@@ -0,0 +1,32 @@

```yaml
name: Upload Python Package

on:
  release:
    types: [published]

permissions:
  contents: read

jobs:
  deploy:

    runs-on: ubuntu-latest

    steps:
    - uses: actions/checkout@v4
    - name: Set up Python
      uses: actions/setup-python@v4
      with:
        python-version: '3.x'
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install build
    - name: Build package
      run: python -m build
    - name: Publish package
      uses: pypa/gh-action-pypi-publish@f8c70e705ffc13c3b4d1221169b84f12a75d6ca8
      with:
        user: __token__
        password: ${{ secrets.PYPI_API_TOKEN }}
```

.github/workflows/quality.yml

ADDED

@@ -0,0 +1,23 @@

```yaml
name: Quality

on:
  push:
    branches: [ "main" ]
  pull_request:
    branches: [ "main" ]

jobs:
  lint:
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
    steps:
      - name: Checkout actions
        uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - name: Init environment
        uses: ./.github/actions/init-environment
      - name: Run linter
        run: |
          pylint `git diff --name-only --diff-filter=d origin/main HEAD | grep -E '\.py$' | tr '\n' ' '`
```
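The `Run linter` step lints only the Python files that differ between `origin/main` and `HEAD`. The lowercase `--diff-filter=d` excludes deleted files, which could not be linted anyway, and `fetch-depth: 0` gives the checkout the full history it needs to compare against `origin/main`. A Python sketch of the same selection is below; the function names and sample paths are hypothetical. It separates the filtering from the `git` call so the logic can be checked without a repository, and it returns an empty list when nothing relevant changed, leaving the caller free to skip linting.

```python
import subprocess
from typing import List


def select_python_files(diff_output: str) -> List[str]:
    r"""Mimic `grep -E '\.py$'` over `git diff --name-only` output."""
    return [line for line in diff_output.splitlines() if line.endswith(".py")]


def changed_python_files(base: str = "origin/main", head: str = "HEAD") -> List[str]:
    """Run the same git command as the workflow (requires a git checkout)."""
    result = subprocess.run(
        ["git", "diff", "--name-only", "--diff-filter=d", base, head],
        check=True,
        capture_output=True,
        text=True,
    )
    return select_python_files(result.stdout)


sample = "README.md\nswarms/agents/agent.py\nsetup.cfg\ntests/test_agent.py\n"
print(select_python_files(sample))
```

Passing the file list as arguments to `subprocess.run` (rather than through backtick substitution) also avoids word-splitting surprises with unusual file names.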

.github/workflows/ruff.yml

ADDED

@@ -0,0 +1,8 @@

```yaml
name: Ruff
on: [ push, pull_request ]
jobs:
  ruff:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: chartboost/ruff-action@v1
```

.github/workflows/run_test.yml

ADDED

@@ -0,0 +1,23 @@

```yaml
name: Python application test

on: [push]

jobs:
  build:

    runs-on: ubuntu-latest

    steps:
    - uses: actions/checkout@v4
    - name: Set up Python 3.8
      uses: actions/setup-python@v4
      with:
        python-version: 3.8
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install pytest
        if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
    - name: Run tests with pytest
      run: |
        pytest tests/
```

.github/workflows/stale.yml

ADDED

@@ -0,0 +1,27 @@

```yaml
# This workflow warns and then closes issues and PRs that have had no activity for a specified amount of time.
#
# You can adjust the behavior by modifying this file.
# For more information, see:
# https://github.com/actions/stale
name: Mark stale issues and pull requests

on:
  schedule:
  - cron: '26 12 * * *'

jobs:
  stale:

    runs-on: ubuntu-latest
    permissions:
      issues: write
      pull-requests: write

    steps:
    - uses: actions/stale@v8
      with:
        repo-token: ${{ secrets.GITHUB_TOKEN }}
        stale-issue-message: 'Stale issue message'
        stale-pr-message: 'Stale pull request message'
        stale-issue-label: 'no-issue-activity'
        stale-pr-label: 'no-pr-activity'
```
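The schedule `'26 12 * * *'` fires once a day at minute 26 of hour 12. GitHub Actions evaluates `schedule` cron expressions in UTC, and scheduled runs can start later than the nominal time when runners are busy. The hypothetical helper below computes the next nominal trigger strictly after a given timezone-aware instant for this fixed daily expression only; it is a sketch for reasoning about the timing, not a general cron parser.

```python
from datetime import datetime, timedelta, timezone


def next_daily_run(now: datetime, hour: int = 12, minute: int = 26) -> datetime:
    """Next time strictly after `now` matching `minute hour * * *` in UTC.

    `now` must be timezone-aware; it is converted to UTC first.
    """
    now_utc = now.astimezone(timezone.utc)
    candidate = now_utc.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now_utc:
        # Today's slot has already passed (or is exactly now): use tomorrow's.
        candidate += timedelta(days=1)
    return candidate


print(next_daily_run(datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)))
# 2024-01-01 12:26:00+00:00
print(next_daily_run(datetime(2024, 1, 1, 13, 0, tzinfo=timezone.utc)))
# 2024-01-02 12:26:00+00:00
```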

.github/workflows/test.yml

ADDED

@@ -0,0 +1,49 @@

```yaml
name: test

on:
  push:
    branches: [master]
  pull_request:
  workflow_dispatch:

env:
  POETRY_VERSION: "1.4.2"

jobs:
  build:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version:
          - "3.8"
          - "3.9"
          - "3.10"
          - "3.11"
        test_type:
          - "core"
          - "extended"
    name: Python ${{ matrix.python-version }} ${{ matrix.test_type }}
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python ${{ matrix.python-version }}
        uses: "./.github/actions/poetry_setup"
        with:
          python-version: ${{ matrix.python-version }}
          poetry-version: "1.4.2"
          cache-key: ${{ matrix.test_type }}
          install-command: |
            if [ "${{ matrix.test_type }}" == "core" ]; then
              echo "Running core tests, installing dependencies with poetry..."
              poetry install
            else
              echo "Running extended tests, installing dependencies with poetry..."
              poetry install -E extended_testing
            fi
      - name: Run ${{matrix.test_type}} tests
        run: |
          if [ "${{ matrix.test_type }}" == "core" ]; then
            make test
          else
            make extended_tests
          fi
        shell: bash
```
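The `strategy.matrix` in `test.yml` expands to the cross product of its keys: four Python versions times two `test_type` values gives eight jobs, each named by the `name:` template. The versions are quoted strings in the YAML for a reason: an unquoted `3.10` would be read as the number 3.1. The sketch below reproduces the expansion and the per-job branching from the `install-command` input and the `Run ... tests` step. The function and dictionary keys are illustrative, and it does not model `include`/`exclude` or the exact order in which GitHub schedules the jobs.

```python
from itertools import product
from typing import Dict, List

PYTHON_VERSIONS = ["3.8", "3.9", "3.10", "3.11"]
TEST_TYPES = ["core", "extended"]


def expand_matrix() -> List[Dict[str, str]]:
    """One entry per (python-version, test_type) combination."""
    jobs = []
    for version, test_type in product(PYTHON_VERSIONS, TEST_TYPES):
        core = test_type == "core"
        jobs.append(
            {
                "name": f"Python {version} {test_type}",
                # Mirrors the if/else in the install-command input.
                "install": "poetry install" if core else "poetry install -E extended_testing",
                # Mirrors the if/else in the "Run ... tests" step.
                "make_target": "test" if core else "extended_tests",
            }
        )
    return jobs


for job in expand_matrix():
    print(job["name"], "->", job["install"], "&& make", job["make_target"])
```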

.github/workflows/unit-test.yml

ADDED

@@ -0,0 +1,45 @@

```yaml
name: build

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:

  build:

    runs-on: ubuntu-latest

    steps:
    - uses: actions/checkout@v4

    - name: Setup Python
      uses: actions/setup-python@v4
      with:
        python-version: '3.10'

    - name: Install dependencies
      run: pip install -r requirements.txt

    - name: Run Python unit tests
      run: python3 -m unittest tests/swarms

    - name: Verify that the Docker image for the action builds
      run: docker build . --file Dockerfile

    - name: Integration test 1
      uses: ./
      with:
        input-one: something
        input-two: true

    - name: Integration test 2
      uses: ./
      with:
        input-one: something else
        input-two: false

    - name: Verify integration test results
      run: python3 -m unittest unittesting/swarms
```
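The `Run Python unit tests` step passes a directory, `tests/swarms`, to `python3 -m unittest`. As far as I know, the unittest command line converts only paths to existing `.py` files into module names, so collecting every test module under a directory normally goes through test discovery (`python3 -m unittest discover -s tests/swarms`). The sketch below does the equivalent programmatically against a throwaway directory, assuming the test files follow the default `test*.py` naming; the file name, class name, and `run_discovered` helper are placeholders.

```python
import tempfile
import textwrap
import unittest
from pathlib import Path

# Placeholder test module written into a temporary directory.
SAMPLE_TEST = textwrap.dedent(
    """
    import unittest


    class SampleTest(unittest.TestCase):
        def test_addition(self):
            self.assertEqual(1 + 1, 2)

        def test_upper(self):
            self.assertEqual("swarms".upper(), "SWARMS")
    """
)


def run_discovered(start_dir: str) -> unittest.TestResult:
    """Discover test*.py modules under start_dir and run them."""
    suite = unittest.defaultTestLoader.discover(start_dir, pattern="test*.py")
    runner = unittest.TextTestRunner(verbosity=0)
    return runner.run(suite)


with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "test_swarms_sample.py").write_text(SAMPLE_TEST)
    result = run_discovered(tmp)

print(result.testsRun, result.wasSuccessful())
```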

.github/workflows/update_space.yml

ADDED

@@ -0,0 +1,28 @@

```yaml
name: Run Python script

on:
  push:
    branches:
      - discord-bot

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
    - name: Checkout
      uses: actions/checkout@v2

    - name: Set up Python
      uses: actions/setup-python@v2
      with:
        python-version: '3.9'

    - name: Install Gradio
      run: python -m pip install gradio

    - name: Log in to Hugging Face
      run: python -c 'import huggingface_hub; huggingface_hub.login(token="${{ secrets.hf_token }}")'

    - name: Deploy to Spaces
      run: gradio deploy
```

.gitignore

ADDED

@@ -0,0 +1,182 @@

```gitignore
__pycache__/
.venv/

.env

image/
audio/
video/
dataframe/

static/generated
swarms/__pycache__
venv
.DS_Store

.DS_STORE
swarms/agents/.DS_Store

_build


.DS_STORE
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py
.DS_Store
# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/
```

.readthedocs.yml

ADDED

@@ -0,0 +1,13 @@

```yaml
version: 2

build:
  os: ubuntu-22.04
  tools:
    python: "3.11"

mkdocs:
  configuration: mkdocs.yml

python:
  install:
    - requirements: requirements.txt
```

=0.3.0

ADDED

File without changes

=3.38.0

ADDED

|

@@ -0,0 +1,64 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Defaulting to user installation because normal site-packages is not writeable
Requirement already satisfied: gradio_client in /home/zack/.local/lib/python3.10/site-packages (0.2.5)
Requirement already satisfied: gradio in /home/zack/.local/lib/python3.10/site-packages (3.33.1)
Requirement already satisfied: fsspec in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (2023.5.0)
Requirement already satisfied: httpx in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (0.24.1)
Requirement already satisfied: huggingface-hub>=0.13.0 in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (0.16.4)
Requirement already satisfied: packaging in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (23.2)
Requirement already satisfied: requests in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (2.27.1)
Requirement already satisfied: typing-extensions in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (4.8.0)
Requirement already satisfied: websockets in /home/zack/.local/lib/python3.10/site-packages (from gradio_client) (11.0.3)
Requirement already satisfied: aiofiles in /home/zack/.local/lib/python3.10/site-packages (from gradio) (23.1.0)
Requirement already satisfied: aiohttp in /home/zack/.local/lib/python3.10/site-packages (from gradio) (3.8.4)
Requirement already satisfied: altair>=4.2.0 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (4.2.2)
Requirement already satisfied: fastapi in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.100.1)
Requirement already satisfied: ffmpy in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.3.0)
Requirement already satisfied: jinja2 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (3.1.2)
Requirement already satisfied: markdown-it-py[linkify]>=2.0.0 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (2.2.0)
Requirement already satisfied: markupsafe in /home/zack/.local/lib/python3.10/site-packages (from gradio) (2.1.3)
Requirement already satisfied: matplotlib in /home/zack/.local/lib/python3.10/site-packages (from gradio) (3.1.3)
Requirement already satisfied: mdit-py-plugins<=0.3.3 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.3.3)
Requirement already satisfied: numpy in /home/zack/.local/lib/python3.10/site-packages (from gradio) (1.22.4)
Requirement already satisfied: orjson in /home/zack/.local/lib/python3.10/site-packages (from gradio) (3.9.7)
Requirement already satisfied: pandas in /home/zack/.local/lib/python3.10/site-packages (from gradio) (1.4.2)
Requirement already satisfied: pillow in /home/zack/.local/lib/python3.10/site-packages (from gradio) (9.5.0)
Requirement already satisfied: pydantic in /home/zack/.local/lib/python3.10/site-packages (from gradio) (1.8.2)
Requirement already satisfied: pydub in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.25.1)
Requirement already satisfied: pygments>=2.12.0 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (2.16.1)
Requirement already satisfied: python-multipart in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.0.6)
Requirement already satisfied: pyyaml in /home/zack/.local/lib/python3.10/site-packages (from gradio) (6.0)
Requirement already satisfied: semantic-version in /home/zack/.local/lib/python3.10/site-packages (from gradio) (2.10.0)
Requirement already satisfied: uvicorn>=0.14.0 in /home/zack/.local/lib/python3.10/site-packages (from gradio) (0.18.3)
Requirement already satisfied: entrypoints in /home/zack/.local/lib/python3.10/site-packages (from altair>=4.2.0->gradio) (0.4)
Requirement already satisfied: jsonschema>=3.0 in /home/zack/.local/lib/python3.10/site-packages (from altair>=4.2.0->gradio) (4.19.1)
Requirement already satisfied: toolz in /home/zack/.local/lib/python3.10/site-packages (from altair>=4.2.0->gradio) (0.12.0)
Requirement already satisfied: filelock in /home/zack/.local/lib/python3.10/site-packages (from huggingface-hub>=0.13.0->gradio_client) (3.12.4)
Requirement already satisfied: tqdm>=4.42.1 in /home/zack/.local/lib/python3.10/site-packages (from huggingface-hub>=0.13.0->gradio_client) (4.64.0)
Requirement already satisfied: mdurl~=0.1 in /home/zack/.local/lib/python3.10/site-packages (from markdown-it-py[linkify]>=2.0.0->gradio) (0.1.2)
Requirement already satisfied: linkify-it-py<3,>=1 in /home/zack/.local/lib/python3.10/site-packages (from markdown-it-py[linkify]>=2.0.0->gradio) (2.0.2)
Requirement already satisfied: python-dateutil>=2.8.1 in /home/zack/.local/lib/python3.10/site-packages (from pandas->gradio) (2.8.2)
Requirement already satisfied: pytz>=2020.1 in /usr/lib/python3/dist-packages (from pandas->gradio) (2022.1)
Requirement already satisfied: click>=7.0 in /home/zack/.local/lib/python3.10/site-packages (from uvicorn>=0.14.0->gradio) (8.1.6)
Requirement already satisfied: h11>=0.8 in /home/zack/.local/lib/python3.10/site-packages (from uvicorn>=0.14.0->gradio) (0.14.0)
Requirement already satisfied: attrs>=17.3.0 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (23.1.0)
Requirement already satisfied: charset-normalizer<4.0,>=2.0 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (2.0.12)
Requirement already satisfied: multidict<7.0,>=4.5 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (6.0.4)
Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (4.0.2)
Requirement already satisfied: yarl<2.0,>=1.0 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (1.9.2)
Requirement already satisfied: frozenlist>=1.1.1 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (1.3.3)
Requirement already satisfied: aiosignal>=1.1.2 in /home/zack/.local/lib/python3.10/site-packages (from aiohttp->gradio) (1.3.1)
Requirement already satisfied: starlette<0.28.0,>=0.27.0 in /home/zack/.local/lib/python3.10/site-packages (from fastapi->gradio) (0.27.0)
Requirement already satisfied: certifi in /home/zack/.local/lib/python3.10/site-packages (from httpx->gradio_client) (2023.5.7)
Requirement already satisfied: httpcore<0.18.0,>=0.15.0 in /home/zack/.local/lib/python3.10/site-packages (from httpx->gradio_client) (0.17.0)
Requirement already satisfied: idna in /home/zack/.local/lib/python3.10/site-packages (from httpx->gradio_client) (3.4)
Requirement already satisfied: sniffio in /home/zack/.local/lib/python3.10/site-packages (from httpx->gradio_client) (1.3.0)
Requirement already satisfied: cycler>=0.10 in /usr/lib/python3/dist-packages (from matplotlib->gradio) (0.11.0)
Requirement already satisfied: kiwisolver>=1.0.1 in /usr/lib/python3/dist-packages (from matplotlib->gradio) (1.3.2)
Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /usr/lib/python3/dist-packages (from matplotlib->gradio) (2.4.7)
Requirement already satisfied: urllib3<1.27,>=1.21.1 in /home/zack/.local/lib/python3.10/site-packages (from requests->gradio_client) (1.26.17)
Requirement already satisfied: anyio<5.0,>=3.0 in /home/zack/.local/lib/python3.10/site-packages (from httpcore<0.18.0,>=0.15.0->httpx->gradio_client) (3.6.2)
Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /home/zack/.local/lib/python3.10/site-packages (from jsonschema>=3.0->altair>=4.2.0->gradio) (2023.7.1)
Requirement already satisfied: referencing>=0.28.4 in /home/zack/.local/lib/python3.10/site-packages (from jsonschema>=3.0->altair>=4.2.0->gradio) (0.30.2)
Requirement already satisfied: rpds-py>=0.7.1 in /home/zack/.local/lib/python3.10/site-packages (from jsonschema>=3.0->altair>=4.2.0->gradio) (0.10.3)
Requirement already satisfied: uc-micro-py in /home/zack/.local/lib/python3.10/site-packages (from linkify-it-py<3,>=1->markdown-it-py[linkify]>=2.0.0->gradio) (1.0.2)
Requirement already satisfied: six>=1.5 in /usr/lib/python3/dist-packages (from python-dateutil>=2.8.1->pandas->gradio) (1.16.0)
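The two files named `=0.3.0` and `=3.38.0` look like shell-redirection accidents rather than intentional additions. This is an inference, not something the commit states: if a command such as `pip install gradio_client>=0.3.0 gradio>=3.38.0` (a hypothetical reconstruction) is typed without quotes, a POSIX shell reads each `>` as an output redirect, creates both files, and sends pip's stdout to the last one. That would fit what is shown here: `=0.3.0` is empty, `=3.38.0` holds pip output, and pip reports `gradio_client` 0.2.5 and `gradio` 3.33.1 as already satisfied, versions that would not meet `>=0.3.0` or `>=3.38.0`. The sketch below uses only the standard-library `shlex` module (with `punctuation_chars=True`, which splits out operators like `>` roughly as a shell does) to show the tokenization, and `shlex.quote` to show the quoted form. The helper names `shell_tokens` and `split_redirects` are illustrative.

```python
import shlex

# Hypothetical reconstruction of the command; the commit does not record it.
command = "pip install gradio_client>=0.3.0 gradio>=3.38.0"


def shell_tokens(cmd: str) -> list[str]:
    """Split cmd roughly as a POSIX shell would, keeping operators such as '>' separate."""
    return list(shlex.shlex(cmd, posix=True, punctuation_chars=True))


def split_redirects(tokens: list[str]) -> tuple[list[str], list[str]]:
    """Separate ordinary arguments from the file names that follow each '>'."""
    args: list[str] = []
    targets: list[str] = []
    it = iter(tokens)
    for tok in it:
        if tok == ">":
            targets.append(next(it))  # the word after '>' names the output file
        else:
            args.append(tok)
    return args, targets


args, targets = split_redirects(shell_tokens(command))
print(args)     # ['pip', 'install', 'gradio_client', 'gradio']
print(targets)  # ['=0.3.0', '=3.38.0']

# Quoting each requirement keeps the version specifier inside a single argument.
requirements = ["gradio_client>=0.3.0", "gradio>=3.38.0"]
fixed = "pip install " + " ".join(shlex.quote(r) for r in requirements)
print(fixed)  # pip install 'gradio_client>=0.3.0' 'gradio>=3.38.0'
print(split_redirects(shell_tokens(fixed)))
# (['pip', 'install', 'gradio_client>=0.3.0', 'gradio>=3.38.0'], [])
```

Quoting each requirement, or keeping version pins in a requirements file, stops the shell from treating `>` as a redirect, so pip receives the full specifier and no stray files are created.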

CONTRIBUTING.md

ADDED


@@ -0,0 +1,248 @@


# Contributing to Swarms

Hi there! Thank you for even being interested in contributing to Swarms.
As an open source project in a rapidly developing field, we are extremely open
to contributions, whether they be in the form of new features, improved infra, better documentation, or bug fixes.

## 🗺️ Guidelines

### 👩‍💻 Contributing Code

To contribute to this project, please follow a ["fork and pull request"](https://docs.github.com/en/get-started/quickstart/contributing-to-projects) workflow.
Please do not try to push directly to this repo unless you are a maintainer.

Please follow the checked-in pull request template when opening pull requests. Note related issues and tag relevant
maintainers.

Pull requests cannot land without passing the formatting, linting and testing checks first. See
[Common Tasks](#-common-tasks) for how to run these checks locally.

It's essential that we maintain great documentation and testing. If you:

- Fix a bug
  - Add a relevant unit or integration test when possible. These live in `tests/unit_tests` and `tests/integration_tests`.
- Make an improvement
  - Update any affected example notebooks and documentation. These live in `docs`.
  - Update unit and integration tests when relevant.
- Add a feature
  - Add a demo notebook in `docs/modules`.
  - Add unit and integration tests.
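As a rough illustration of the kind of unit test meant here, the sketch below shows a small pytest-style test module. It assumes pytest as the test runner, which collects functions whose names start with `test_` and treats plain `assert` failures as test failures. The function `count_words` and the path `tests/unit_tests/test_count_words.py` are hypothetical stand-ins, not part of Swarms.

```python
# tests/unit_tests/test_count_words.py  (hypothetical example module)


def count_words(text: str) -> int:
    """Hypothetical function under test: counts whitespace-separated words."""
    return len(text.split())


def test_count_words_basic():
    # A typical input with several words.
    assert count_words("swarms of agents") == 3


def test_count_words_empty_string():
    # Edge case: an empty string contains no words.
    assert count_words("") == 0


def test_count_words_extra_whitespace():
    # Leading, trailing, and repeated whitespace should not produce empty words.
    assert count_words("  a   b  ") == 2
```

In a real contribution the function under test would live in the library and be imported by the test file; keeping one behavior per test function makes a failure point directly at the broken case.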

We're a small, building-oriented team. If there's something you'd like to add or change, opening a pull request is the
best way to get our attention.

### 🚩 GitHub Issues

Our [issues](https://github.com/kyegomez/Swarms/issues) page is kept up to date
with bugs, improvements, and feature requests.

There is a taxonomy of labels to help with sorting and discovery of issues of interest. Please use these to help
organize issues.

If you start working on an issue, please assign it to yourself.

If you are adding an issue, please try to keep it focused on a single, modular bug/improvement/feature.
If two issues are related, or blocking, please link them rather than combining them.

We will try to keep these issues as up to date as possible, though
with the rapid rate of development in this field some may get out of date.
If you notice this happening, please let us know.

### 🙋 Getting Help

Our goal is to have the simplest developer setup possible. Should you experience any difficulty getting set up, please
contact a maintainer! Not only do we want to help get you unblocked, but we also want to make sure that the process is