Spaces:

Runtime error

Runtime error

Commit

•

c41cba9

1

Parent(s):

e3540ce

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .env.example +9 -0

- .gitattributes +4 -0

- .gitignore +162 -0

- CODE_OF_CONDUCT.md +9 -0

- Dockerfile +43 -0

- LICENSE +21 -0

- README.md +165 -7

- SECURITY.md +41 -0

- SUPPORT.md +25 -0

- assets/method2_xyz.png +3 -0

- assets/som_bench_bottom.jpg +0 -0

- assets/som_bench_upper.jpg +0 -0

- assets/som_gpt4v_demo.mp4 +3 -0

- assets/som_logo.png +0 -0

- assets/som_toolbox_interface.jpg +0 -0

- assets/teaser.png +3 -0

- benchmark/README.md +96 -0

- client.py +36 -0

- configs/seem_focall_unicl_lang_v1.yaml +401 -0

- configs/semantic_sam_only_sa-1b_swinL.yaml +524 -0

- demo_gpt4v_som.py +226 -0

- demo_som.py +181 -0

- deploy.py +720 -0

- deploy_requirements.txt +9 -0

- docker-build-ec2.yml.j2 +44 -0

- download_ckpt.sh +3 -0

- entrypoint.sh +10 -0

- examples/gpt-4v-som-example.jpg +0 -0

- examples/ironing_man.jpg +0 -0

- examples/ironing_man_som.png +0 -0

- examples/som_logo.png +0 -0

- gpt4v.py +69 -0

- ops/cuda-repo-ubuntu2004-11-8-local_11.8.0-520.61.05-1_amd64.deb +3 -0

- ops/functions/__init__.py +13 -0

- ops/functions/ms_deform_attn_func.py +72 -0

- ops/make.sh +35 -0

- ops/modules/__init__.py +12 -0

- ops/modules/ms_deform_attn.py +125 -0

- ops/setup.py +78 -0

- ops/src/cpu/ms_deform_attn_cpu.cpp +46 -0

- ops/src/cpu/ms_deform_attn_cpu.h +38 -0

- ops/src/cuda/ms_deform_attn_cuda.cu +158 -0

- ops/src/cuda/ms_deform_attn_cuda.h +35 -0

- ops/src/cuda/ms_deform_im2col_cuda.cuh +1332 -0

- ops/src/ms_deform_attn.h +67 -0

- ops/src/vision.cpp +21 -0

- ops/test.py +92 -0

- sam_vit_h_4b8939.pth +3 -0

- seem_focall_v1.pt +3 -0

- swinl_only_sam_many2many.pth +3 -0

.env.example

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

AWS_ACCESS_KEY_ID=

|

| 2 |

+

AWS_SECRET_ACCESS_KEY=

|

| 3 |

+

AWS_REGION=

|

| 4 |

+

GITHUB_OWNER=

|

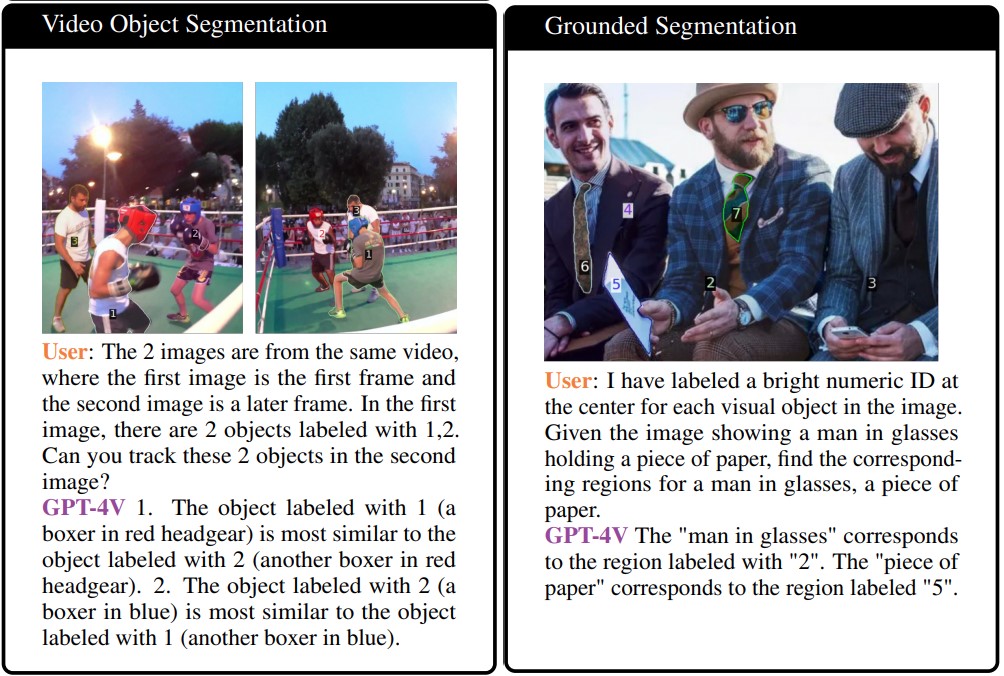

| 5 |

+

GITHUB_REPO=

|

| 6 |

+

GITHUB_TOKEN=

|

| 7 |

+

PROJECT_NAME=

|

| 8 |

+

# optional

|

| 9 |

+

OPENAI_API_KEY=

|

.gitattributes

CHANGED

|

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/method2_xyz.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/som_gpt4v_demo.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/teaser.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

ops/cuda-repo-ubuntu2004-11-8-local_11.8.0-520.61.05-1_amd64.deb filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,162 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# poetry

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 102 |

+

#poetry.lock

|

| 103 |

+

|

| 104 |

+

# pdm

|

| 105 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 106 |

+

#pdm.lock

|

| 107 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 108 |

+

# in version control.

|

| 109 |

+

# https://pdm.fming.dev/#use-with-ide

|

| 110 |

+

.pdm.toml

|

| 111 |

+

|

| 112 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 113 |

+

__pypackages__/

|

| 114 |

+

|

| 115 |

+

# Celery stuff

|

| 116 |

+

celerybeat-schedule

|

| 117 |

+

celerybeat.pid

|

| 118 |

+

|

| 119 |

+

# SageMath parsed files

|

| 120 |

+

*.sage.py

|

| 121 |

+

|

| 122 |

+

# Environments

|

| 123 |

+

.env

|

| 124 |

+

.venv

|

| 125 |

+

env/

|

| 126 |

+

venv/

|

| 127 |

+

ENV/

|

| 128 |

+

env.bak/

|

| 129 |

+

venv.bak/

|

| 130 |

+

|

| 131 |

+

# Spyder project settings

|

| 132 |

+

.spyderproject

|

| 133 |

+

.spyproject

|

| 134 |

+

|

| 135 |

+

# Rope project settings

|

| 136 |

+

.ropeproject

|

| 137 |

+

|

| 138 |

+

# mkdocs documentation

|

| 139 |

+

/site

|

| 140 |

+

|

| 141 |

+

# mypy

|

| 142 |

+

.mypy_cache/

|

| 143 |

+

.dmypy.json

|

| 144 |

+

dmypy.json

|

| 145 |

+

|

| 146 |

+

# Pyre type checker

|

| 147 |

+

.pyre/

|

| 148 |

+

|

| 149 |

+

# pytype static type analyzer

|

| 150 |

+

.pytype/

|

| 151 |

+

|

| 152 |

+

# Cython debug symbols

|

| 153 |

+

cython_debug/

|

| 154 |

+

|

| 155 |

+

# PyCharm

|

| 156 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 157 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 158 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 159 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 160 |

+

#.idea/

|

| 161 |

+

|

| 162 |

+

*.sw[m-p]

|

CODE_OF_CONDUCT.md

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Microsoft Open Source Code of Conduct

|

| 2 |

+

|

| 3 |

+

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

|

| 4 |

+

|

| 5 |

+

Resources:

|

| 6 |

+

|

| 7 |

+

- [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)

|

| 8 |

+

- [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)

|

| 9 |

+

- Contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with questions or concerns

|

Dockerfile

ADDED

|

@@ -0,0 +1,43 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM nvidia/cuda:12.3.1-devel-ubuntu22.04

|

| 2 |

+

|

| 3 |

+

# Install system dependencies

|

| 4 |

+

RUN apt-get update && \

|

| 5 |

+

apt-get install -y \

|

| 6 |

+

python3-pip python3-dev git ninja-build wget \

|

| 7 |

+

ffmpeg libsm6 libxext6 \

|

| 8 |

+

openmpi-bin libopenmpi-dev && \

|

| 9 |

+

ln -sf /usr/bin/python3 /usr/bin/python && \

|

| 10 |

+

ln -sf /usr/bin/pip3 /usr/bin/pip

|

| 11 |

+

|

| 12 |

+

# Set the working directory in the container

|

| 13 |

+

WORKDIR /usr/src/app

|

| 14 |

+

|

| 15 |

+

# Copy the current directory contents into the container at /usr/src/app

|

| 16 |

+

COPY . .

|

| 17 |

+

|

| 18 |

+

ENV FORCE_CUDA=1

|

| 19 |

+

|

| 20 |

+

# Upgrade pip

|

| 21 |

+

RUN python -m pip install --upgrade pip

|

| 22 |

+

|

| 23 |

+

# Install Python dependencies

|

| 24 |

+

RUN pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu123 \

|

| 25 |

+

&& pip install git+https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once.git@33f2c898fdc8d7c95dda014a4b9ebe4e413dbb2b \

|

| 26 |

+

&& pip install git+https://github.com/facebookresearch/segment-anything.git \

|

| 27 |

+

&& pip install git+https://github.com/UX-Decoder/Semantic-SAM.git@package \

|

| 28 |

+

&& cd ops && bash make.sh && cd .. \

|

| 29 |

+

&& pip install mpi4py \

|

| 30 |

+

&& pip install openai \

|

| 31 |

+

&& pip install gradio==4.17.0

|

| 32 |

+

|

| 33 |

+

# Download pretrained models

|

| 34 |

+

RUN sh download_ckpt.sh

|

| 35 |

+

|

| 36 |

+

# Make port 6092 available to the world outside this container

|

| 37 |

+

EXPOSE 6092

|

| 38 |

+

|

| 39 |

+

# Make Gradio server accessible outside 127.0.0.1

|

| 40 |

+

ENV GRADIO_SERVER_NAME="0.0.0.0"

|

| 41 |

+

|

| 42 |

+

RUN chmod +x /usr/src/app/entrypoint.sh

|

| 43 |

+

CMD ["/usr/src/app/entrypoint.sh"]

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Microsoft Corporation.

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE

|

README.md

CHANGED

|

@@ -1,12 +1,170 @@

|

|

| 1 |

---

|

| 2 |

title: SoM

|

| 3 |

-

|

| 4 |

-

colorFrom: green

|

| 5 |

-

colorTo: pink

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 4.

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

title: SoM

|

| 3 |

+

app_file: demo_som.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 4.17.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

# <img src="assets/som_logo.png" alt="Logo" width="40" height="40" align="left"> Set-of-Mark Visual Prompting for GPT-4V

|

| 8 |

|

| 9 |

+

:grapes: \[[Read our arXiv Paper](https://arxiv.org/pdf/2310.11441.pdf)\] :apple: \[[Project Page](https://som-gpt4v.github.io/)\]

|

| 10 |

+

|

| 11 |

+

[Jianwei Yang](https://jwyang.github.io/)\*⚑, [Hao Zhang](https://haozhang534.github.io/)\*, [Feng Li](https://fengli-ust.github.io/)\*, [Xueyan Zou](https://maureenzou.github.io/)\*, [Chunyuan Li](https://chunyuan.li/), [Jianfeng Gao](https://www.microsoft.com/en-us/research/people/jfgao/)

|

| 12 |

+

|

| 13 |

+

\* Core Contributors ⚑ Project Lead

|

| 14 |

+

|

| 15 |

+

### Introduction

|

| 16 |

+

|

| 17 |

+

We present **S**et-**o**f-**M**ark (SoM) prompting, simply overlaying a number of spatial and speakable marks on the images, to unleash the visual grounding abilities in the strongest LMM -- GPT-4V. **Let's using visual prompting for vision**!

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

### GPT-4V + SoM Demo

|

| 23 |

+

|

| 24 |

+

https://github.com/microsoft/SoM/assets/3894247/8f827871-7ebd-4a5e-bef5-861516c4427b

|

| 25 |

+

|

| 26 |

+

### 🔥 News

|

| 27 |

+

|

| 28 |

+

* [11/21] Thanks to Roboflow and @SkalskiP, a [huggingface demo](https://huggingface.co/spaces/Roboflow/SoM) for SoM + GPT-4V is online! Try it out!

|

| 29 |

+

* [11/07] We released the vision benchmark we used to evaluate GPT-4V with SoM prompting! Check out the [benchmark page](https://github.com/microsoft/SoM/tree/main/benchmark)!

|

| 30 |

+

|

| 31 |

+

* [11/07] Now that GPT-4V API has been released, we are releasing a demo integrating SoM into GPT-4V!

|

| 32 |

+

```bash

|

| 33 |

+

export OPENAI_API_KEY=YOUR_API_KEY

|

| 34 |

+

python demo_gpt4v_som.py

|

| 35 |

+

```

|

| 36 |

+

|

| 37 |

+

* [10/23] We released the SoM toolbox code for generating set-of-mark prompts for GPT-4V. Try it out!

|

| 38 |

+

|

| 39 |

+

### 🔗 Fascinating Applications

|

| 40 |

+

|

| 41 |

+

Fascinating applications of SoM in GPT-4V:

|

| 42 |

+

* [11/13/2023] [Smartphone GUI Navigation boosted by Set-of-Mark Prompting](https://github.com/zzxslp/MM-Navigator)

|

| 43 |

+

* [11/05/2023] [Zero-shot Anomaly Detection with GPT-4V and SoM prompting](https://github.com/zhangzjn/GPT-4V-AD)

|

| 44 |

+

* [10/21/2023] [Web UI Navigation Agent inspired by Set-of-Mark Prompting](https://github.com/ddupont808/GPT-4V-Act)

|

| 45 |

+

* [10/20/2023] [Set-of-Mark Prompting Reimplementation by @SkalskiP from Roboflow](https://github.com/SkalskiP/SoM.git)

|

| 46 |

+

|

| 47 |

+

### 🔗 Related Works

|

| 48 |

+

|

| 49 |

+

Our method compiles the following models to generate the set of marks:

|

| 50 |

+

|

| 51 |

+

- [Mask DINO](https://github.com/IDEA-Research/MaskDINO): State-of-the-art closed-set image segmentation model

|

| 52 |

+

- [OpenSeeD](https://github.com/IDEA-Research/OpenSeeD): State-of-the-art open-vocabulary image segmentation model

|

| 53 |

+

- [GroundingDINO](https://github.com/IDEA-Research/GroundingDINO): State-of-the-art open-vocabulary object detection model

|

| 54 |

+

- [SEEM](https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once): Versatile, promptable, interactive and semantic-aware segmentation model

|

| 55 |

+

- [Semantic-SAM](https://github.com/UX-Decoder/Semantic-SAM): Segment and recognize anything at any granularity

|

| 56 |

+

- [Segment Anything](https://github.com/facebookresearch/segment-anything): Segment anything

|

| 57 |

+

|

| 58 |

+

We are standing on the shoulder of the giant GPT-4V ([playground](https://chat.openai.com/))!

|

| 59 |

+

|

| 60 |

+

### :rocket: Quick Start

|

| 61 |

+

|

| 62 |

+

* Install segmentation packages

|

| 63 |

+

|

| 64 |

+

```bash

|

| 65 |

+

# install SEEM

|

| 66 |

+

pip install git+https://github.com/UX-Decoder/Segment-Everything-Everywhere-All-At-Once.git@package

|

| 67 |

+

# install SAM

|

| 68 |

+

pip install git+https://github.com/facebookresearch/segment-anything.git

|

| 69 |

+

# install Semantic-SAM

|

| 70 |

+

pip install git+https://github.com/UX-Decoder/Semantic-SAM.git@package

|

| 71 |

+

# install Deformable Convolution for Semantic-SAM

|

| 72 |

+

cd ops && sh make.sh && cd ..

|

| 73 |

+

|

| 74 |

+

# common error fix:

|

| 75 |

+

python -m pip install 'git+https://github.com/MaureenZOU/detectron2-xyz.git'

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

* Download the pretrained models

|

| 79 |

+

|

| 80 |

+

```bash

|

| 81 |

+

sh download_ckpt.sh

|

| 82 |

+

```

|

| 83 |

+

|

| 84 |

+

* Run the demo

|

| 85 |

+

|

| 86 |

+

```bash

|

| 87 |

+

python demo_som.py

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

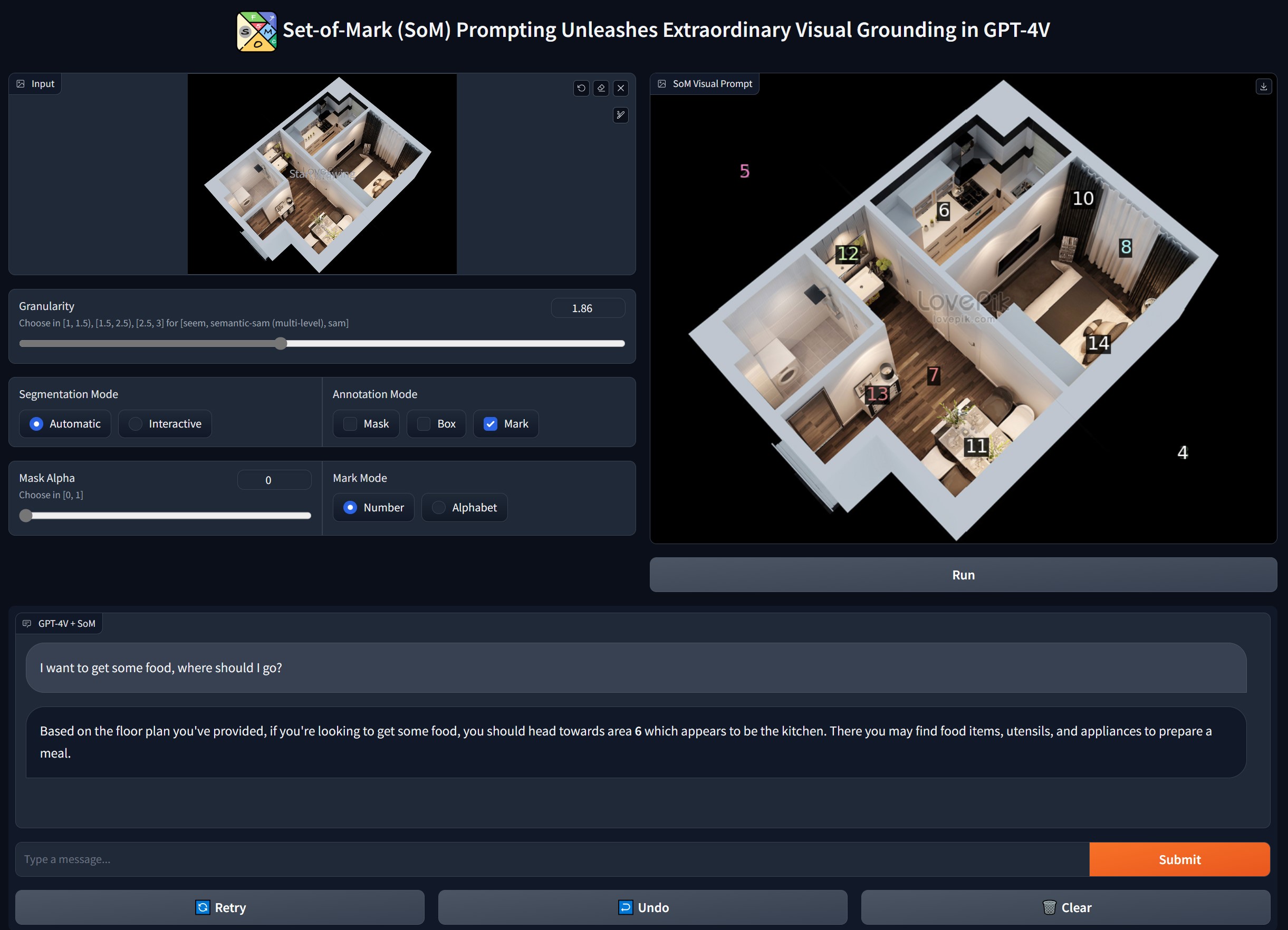

And you will see this interface:

|

| 91 |

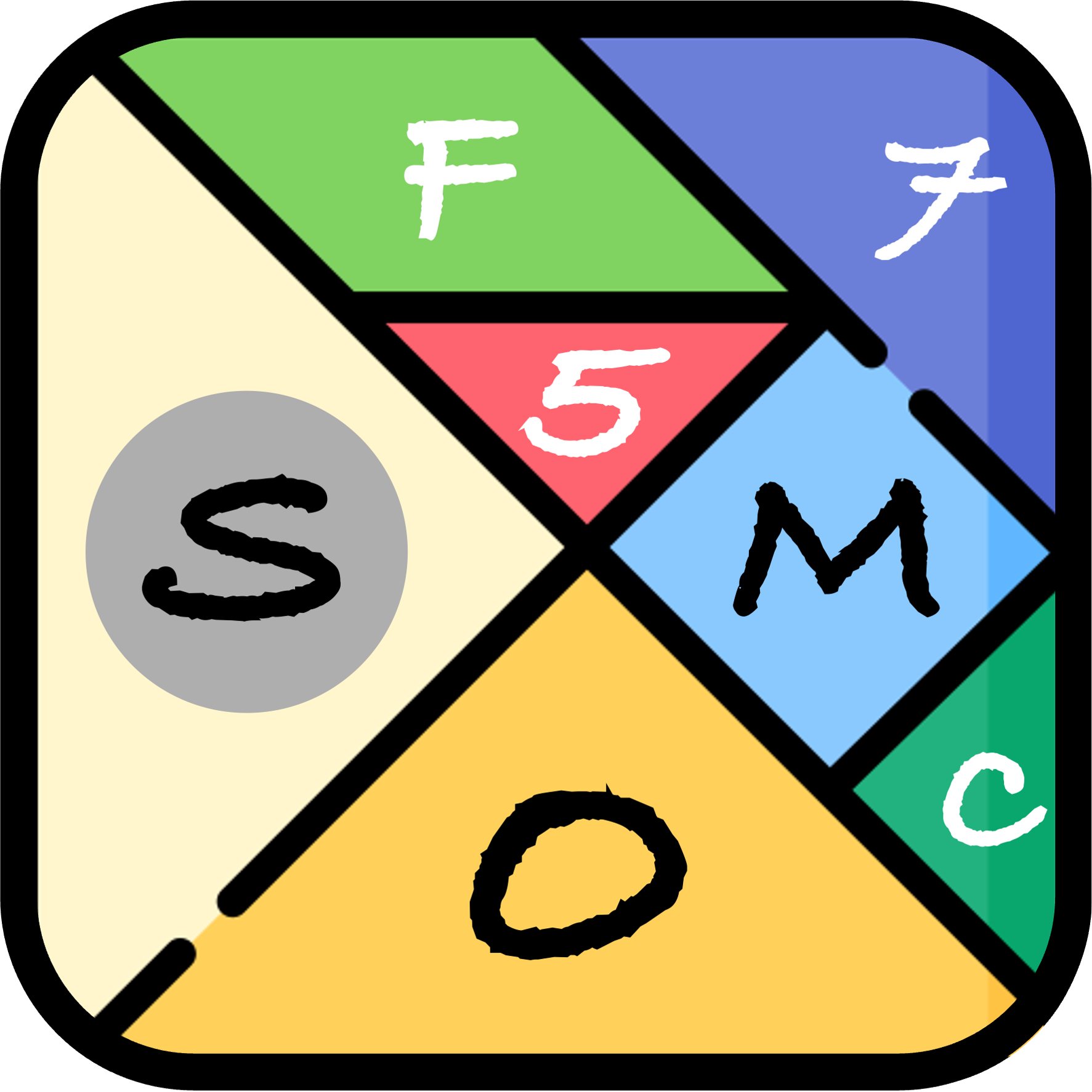

+

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

## Deploy to AWS

|

| 95 |

+

|

| 96 |

+

To deploy SoM to EC2 on AWS via Github Actions:

|

| 97 |

+

|

| 98 |

+

1. Fork this repository and clone your fork to your local machine.

|

| 99 |

+

2. Follow the instructions at the top of `deploy.py`.

|

| 100 |

+

|

| 101 |

+

### :point_right: Comparing standard GPT-4V and its combination with SoM Prompting

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

### :round_pushpin: SoM Toolbox for image partition

|

| 105 |

+

|

| 106 |

+

Users can select which granularity of masks to generate, and which mode to use between automatic (top) and interactive (bottom). A higher alpha blending value (0.4) is used for better visualization.

|

| 107 |

+

### :unicorn: Interleaved Prompt

|

| 108 |

+

SoM enables interleaved prompts which include textual and visual content. The visual content can be represented using the region indices.

|

| 109 |

+

<img width="975" alt="Screenshot 2023-10-18 at 10 06 18" src="https://github.com/microsoft/SoM/assets/34880758/859edfda-ab04-450c-bd28-93762460ac1d">

|

| 110 |

+

|

| 111 |

+

### :medal_military: Mark types used in SoM

|

| 112 |

+

|

| 113 |

+

### :volcano: Evaluation tasks examples

|

| 114 |

+

<img width="946" alt="Screenshot 2023-10-18 at 10 12 18" src="https://github.com/microsoft/SoM/assets/34880758/f5e0c0b0-58de-4b60-bf01-4906dbcb229e">

|

| 115 |

+

|

| 116 |

+

## Use case

|

| 117 |

+

### :tulip: Grounded Reasoning and Cross-Image Reference

|

| 118 |

+

|

| 119 |

+

<img width="972" alt="Screenshot 2023-10-18 at 10 10 41" src="https://github.com/microsoft/SoM/assets/34880758/033cd16c-876c-4c03-961e-590a4189bc9e">

|

| 120 |

+

|

| 121 |

+

In comparison to GPT-4V without SoM, adding marks enables GPT-4V to ground the

|

| 122 |

+

reasoning on detailed contents of the image (Left). Clear object cross-image references are observed

|

| 123 |

+

on the right.

|

| 124 |

+

17

|

| 125 |

+

### :camping: Problem Solving

|

| 126 |

+

<img width="972" alt="Screenshot 2023-10-18 at 10 18 03" src="https://github.com/microsoft/SoM/assets/34880758/8b112126-d164-47d7-b18c-b4b51b903d57">

|

| 127 |

+

|

| 128 |

+

Case study on solving CAPTCHA. GPT-4V gives the wrong answer with a wrong number

|

| 129 |

+

of squares while finding the correct squares with corresponding marks after SoM prompting.

|

| 130 |

+

### :mountain_snow: Knowledge Sharing

|

| 131 |

+

<img width="733" alt="Screenshot 2023-10-18 at 10 18 44" src="https://github.com/microsoft/SoM/assets/34880758/dc753c3f-ada8-47a4-83f1-1576bcfb146a">

|

| 132 |

+

|

| 133 |

+

Case study on an image of dish for GPT-4V. GPT-4V does not produce a grounded answer

|

| 134 |

+

with the original image. Based on SoM prompting, GPT-4V not only speaks out the ingredients but

|

| 135 |

+

also corresponds them to the regions.

|

| 136 |

+

### :mosque: Personalized Suggestion

|

| 137 |

+

<img width="733" alt="Screenshot 2023-10-18 at 10 19 12" src="https://github.com/microsoft/SoM/assets/34880758/88188c90-84f2-49c6-812e-44770b0c2ca5">

|

| 138 |

+

|

| 139 |

+

SoM-pormpted GPT-4V gives very precise suggestions while the original one fails, even

|

| 140 |

+

with hallucinated foods, e.g., soft drinks

|

| 141 |

+

### :blossom: Tool Usage Instruction

|

| 142 |

+

<img width="734" alt="Screenshot 2023-10-18 at 10 19 39" src="https://github.com/microsoft/SoM/assets/34880758/9b35b143-96af-41bd-ad83-9c1f1e0f322f">

|

| 143 |

+

Likewise, GPT4-V with SoM can help to provide thorough tool usage instruction

|

| 144 |

+

, teaching

|

| 145 |

+

users the function of each button on a controller. Note that this image is not fully labeled, while

|

| 146 |

+

GPT-4V can also provide information about the non-labeled buttons.

|

| 147 |

+

|

| 148 |

+

### :sunflower: 2D Game Planning

|

| 149 |

+

<img width="730" alt="Screenshot 2023-10-18 at 10 20 03" src="https://github.com/microsoft/SoM/assets/34880758/0bc86109-5512-4dee-aac9-bab0ef96ed4c">

|

| 150 |

+

|

| 151 |

+

GPT-4V with SoM gives a reasonable suggestion on how to achieve a goal in a gaming

|

| 152 |

+

scenario.

|

| 153 |

+

### :mosque: Simulated Navigation

|

| 154 |

+

<img width="729" alt="Screenshot 2023-10-18 at 10 21 24" src="https://github.com/microsoft/SoM/assets/34880758/7f139250-5350-4790-a35c-444ec2ec883b">

|

| 155 |

+

|

| 156 |

+

### :deciduous_tree: Results

|

| 157 |

+

We conduct experiments on various vision tasks to verify the effectiveness of our SoM. Results show that GPT4V+SoM outperforms specialists on most vision tasks and is comparable to MaskDINO on COCO panoptic segmentation.

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

## :black_nib: Citation

|

| 161 |

+

|

| 162 |

+

If you find our work helpful for your research, please consider citing the following BibTeX entry.

|

| 163 |

+

```bibtex

|

| 164 |

+

@article{yang2023setofmark,

|

| 165 |

+

title={Set-of-Mark Prompting Unleashes Extraordinary Visual Grounding in GPT-4V},

|

| 166 |

+

author={Jianwei Yang and Hao Zhang and Feng Li and Xueyan Zou and Chunyuan Li and Jianfeng Gao},

|

| 167 |

+

journal={arXiv preprint arXiv:2310.11441},

|

| 168 |

+

year={2023},

|

| 169 |

+

}

|

| 170 |

+

```

|

SECURITY.md

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<!-- BEGIN MICROSOFT SECURITY.MD V0.0.9 BLOCK -->

|

| 2 |

+

|

| 3 |

+

## Security

|

| 4 |

+

|

| 5 |

+

Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include [Microsoft](https://github.com/Microsoft), [Azure](https://github.com/Azure), [DotNet](https://github.com/dotnet), [AspNet](https://github.com/aspnet) and [Xamarin](https://github.com/xamarin).

|

| 6 |

+

|

| 7 |

+

If you believe you have found a security vulnerability in any Microsoft-owned repository that meets [Microsoft's definition of a security vulnerability](https://aka.ms/security.md/definition), please report it to us as described below.

|

| 8 |

+

|

| 9 |

+

## Reporting Security Issues

|

| 10 |

+

|

| 11 |

+

**Please do not report security vulnerabilities through public GitHub issues.**

|

| 12 |

+

|

| 13 |

+

Instead, please report them to the Microsoft Security Response Center (MSRC) at [https://msrc.microsoft.com/create-report](https://aka.ms/security.md/msrc/create-report).

|

| 14 |

+

|

| 15 |

+

If you prefer to submit without logging in, send email to [secure@microsoft.com](mailto:secure@microsoft.com). If possible, encrypt your message with our PGP key; please download it from the [Microsoft Security Response Center PGP Key page](https://aka.ms/security.md/msrc/pgp).

|

| 16 |

+

|

| 17 |

+

You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at [microsoft.com/msrc](https://www.microsoft.com/msrc).

|

| 18 |

+

|

| 19 |

+

Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

|

| 20 |

+

|

| 21 |

+

* Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)

|

| 22 |

+

* Full paths of source file(s) related to the manifestation of the issue

|

| 23 |

+

* The location of the affected source code (tag/branch/commit or direct URL)

|

| 24 |

+

* Any special configuration required to reproduce the issue

|

| 25 |

+

* Step-by-step instructions to reproduce the issue

|

| 26 |

+

* Proof-of-concept or exploit code (if possible)

|

| 27 |

+

* Impact of the issue, including how an attacker might exploit the issue

|

| 28 |

+

|

| 29 |

+

This information will help us triage your report more quickly.

|

| 30 |

+

|

| 31 |

+

If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our [Microsoft Bug Bounty Program](https://aka.ms/security.md/msrc/bounty) page for more details about our active programs.

|

| 32 |

+

|

| 33 |

+

## Preferred Languages

|

| 34 |

+

|

| 35 |

+

We prefer all communications to be in English.

|

| 36 |

+

|

| 37 |

+

## Policy

|

| 38 |

+

|

| 39 |

+

Microsoft follows the principle of [Coordinated Vulnerability Disclosure](https://aka.ms/security.md/cvd).

|

| 40 |

+

|

| 41 |

+

<!-- END MICROSOFT SECURITY.MD BLOCK -->

|

SUPPORT.md

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# TODO: The maintainer of this repo has not yet edited this file

|

| 2 |

+

|

| 3 |

+

**REPO OWNER**: Do you want Customer Service & Support (CSS) support for this product/project?

|

| 4 |

+

|

| 5 |

+

- **No CSS support:** Fill out this template with information about how to file issues and get help.

|

| 6 |

+

- **Yes CSS support:** Fill out an intake form at [aka.ms/onboardsupport](https://aka.ms/onboardsupport). CSS will work with/help you to determine next steps.

|

| 7 |

+

- **Not sure?** Fill out an intake as though the answer were "Yes". CSS will help you decide.

|

| 8 |

+

|

| 9 |

+

*Then remove this first heading from this SUPPORT.MD file before publishing your repo.*

|

| 10 |

+

|

| 11 |

+

# Support

|

| 12 |

+

|

| 13 |

+

## How to file issues and get help

|

| 14 |

+

|

| 15 |

+

This project uses GitHub Issues to track bugs and feature requests. Please search the existing

|

| 16 |

+

issues before filing new issues to avoid duplicates. For new issues, file your bug or

|

| 17 |

+

feature request as a new Issue.

|

| 18 |

+

|

| 19 |

+

For help and questions about using this project, please **REPO MAINTAINER: INSERT INSTRUCTIONS HERE

|

| 20 |

+

FOR HOW TO ENGAGE REPO OWNERS OR COMMUNITY FOR HELP. COULD BE A STACK OVERFLOW TAG OR OTHER

|

| 21 |

+

CHANNEL. WHERE WILL YOU HELP PEOPLE?**.

|

| 22 |

+

|

| 23 |

+

## Microsoft Support Policy

|

| 24 |

+

|

| 25 |

+

Support for this **PROJECT or PRODUCT** is limited to the resources listed above.

|

assets/method2_xyz.png

ADDED

|

Git LFS Details

|

assets/som_bench_bottom.jpg

ADDED

|

assets/som_bench_upper.jpg

ADDED

|

assets/som_gpt4v_demo.mp4

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c6a8f8b077dcbe8f7b693b51045a0ded80a1681565c793c2dba3c90d3836b5c4

|

| 3 |

+

size 50609514

|

assets/som_logo.png

ADDED

|

assets/som_toolbox_interface.jpg

ADDED

|

assets/teaser.png

ADDED

|

Git LFS Details

|

benchmark/README.md

ADDED

|

@@ -0,0 +1,96 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

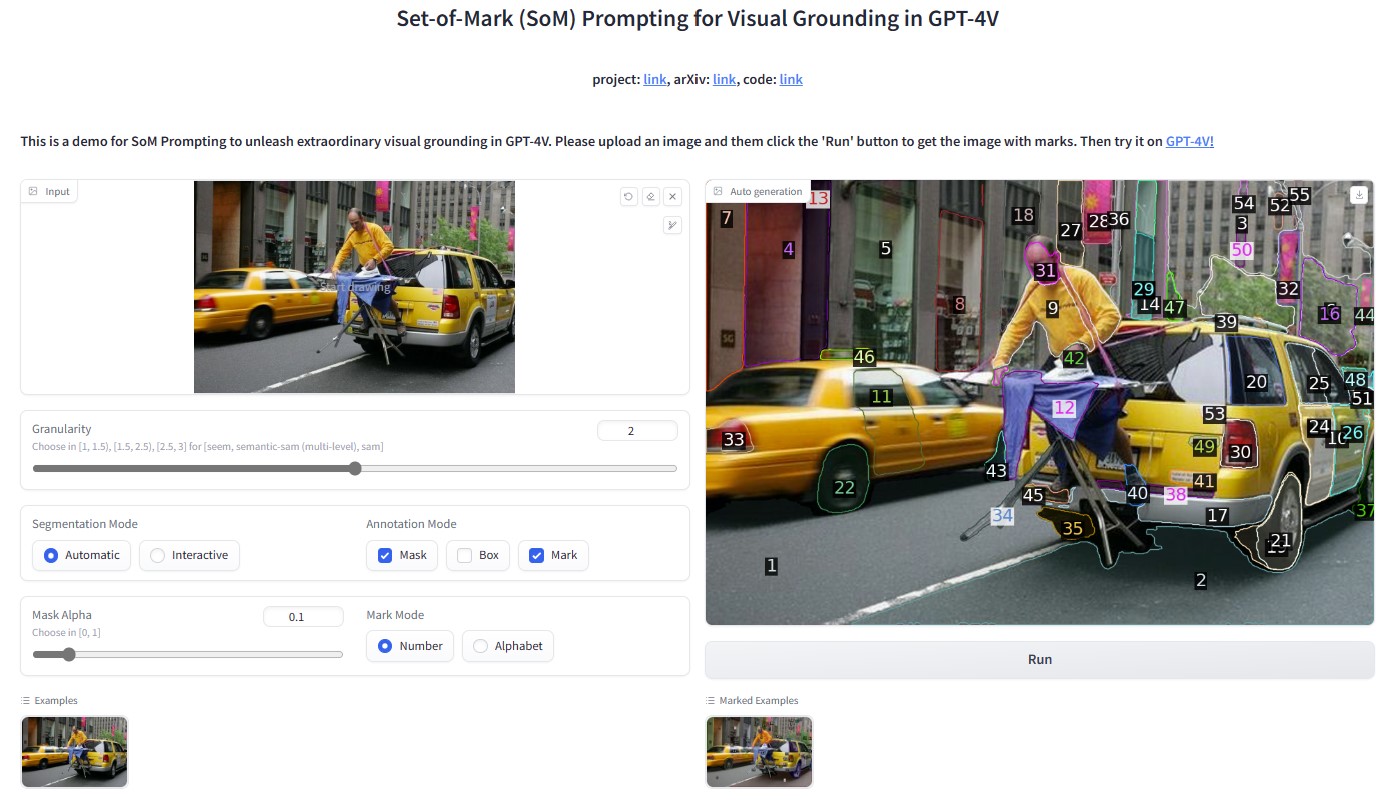

# SoM-Bench: Evaluating Visual Grounding with Visual Prompting

|

| 2 |

+

|

| 3 |

+

We build a new benchmark called SoM-Bench to evaluate the visual grounding capability of LLMs with visual prompting.

|

| 4 |

+

|

| 5 |

+

## Dataset

|

| 6 |

+

|

| 7 |

+

| Vision Taks | Source | #Images | #Instances | Marks | Metric | Data

|

| 8 |

+

| -------- | -------- | -------- | -------- | -------- | -------- | -------- |

|

| 9 |

+

| Open-Vocab Segmentation | [COCO](https://cocodataset.org/#home) | 100 | 567 | Numeric IDs and Masks | Precision | [Download](https://github.com/microsoft/SoM/releases/download/v1.0/coco_ovseg.zip)

|

| 10 |

+

| Open-Vocab Segmentation | [ADE20K](https://groups.csail.mit.edu/vision/datasets/ADE20K/) | 100 | 488 | Numeric IDs and Masks | Precision | [Download](https://github.com/microsoft/SoM/releases/download/v1.0/ade20k_ovseg.zip)

|

| 11 |

+

| Phrase Grounding | [Flickr30K](https://shannon.cs.illinois.edu/DenotationGraph/) | 100 | 274 | Numeric IDs and Masks and Boxes | Recall @ 1 | [Download](https://github.com/microsoft/SoM/releases/download/v1.0/flickr30k_grounding.zip)

|

| 12 |

+

| Referring Comprehension | [RefCOCO](https://github.com/lichengunc/refer) | 100 | 177 | Numeric IDs and Masks | ACC @ 0.5 | [Download](https://github.com/microsoft/SoM/releases/download/v1.0/refcocog_refseg.zip)

|

| 13 |

+

| Referring Segmentation | [RefCOCO](https://github.com/lichengunc/refer) | 100 | 177 | Numeric IDs and Masks | mIoU | [Download](https://github.com/microsoft/SoM/releases/download/v1.0/refcocog_refseg.zip)

|

| 14 |

+

|

| 15 |

+

## Dataset Structure

|

| 16 |

+

|

| 17 |

+

### Open-Vocab Segmentation on COCO

|

| 18 |

+

|

| 19 |

+

We provide COCO in the following structure:

|

| 20 |

+

|

| 21 |

+

```

|

| 22 |

+

coco_ovseg

|

| 23 |

+

├── som_images

|

| 24 |

+

├── 000000000285_0.jpg

|

| 25 |

+

├── 000000000872_0.jpg

|

| 26 |

+

|── 000000000872_5.jpg

|

| 27 |

+

├── ...

|

| 28 |

+

├── 000000002153_5.jpg

|

| 29 |

+

└── 000000002261_0.jpg

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

For some of the samples, the regions are very dense, so we split the regions into multiple groups of size 5,. For example, `000000000872_0.jpg` has 5 regions, and `000000000872_5.jpg` has the other 5 regions. Note that you can use the image_id to track the original image.

|

| 33 |

+

|

| 34 |

+

We used the following language prompt for the task:

|

| 35 |

+

```

|

| 36 |

+

I have labeled a bright numeric ID at the center for each visual object in the image. Please enumerate their names. You must answer by selecting from the following names: [COCO Vocabulary]

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

### Open-Vocab Segmentation on ADE20K

|

| 40 |

+

|

| 41 |

+

```

|

| 42 |

+

ade20k_ovseg

|

| 43 |

+

├── som_images

|

| 44 |

+

├── ADE_val_00000001_0.jpg

|

| 45 |

+

├── ADE_val_00000001_5.jpg

|

| 46 |

+

|── ADE_val_00000011_5.jpg

|

| 47 |

+

├── ...

|

| 48 |

+

├── ADE_val_00000039_5.jpg

|

| 49 |

+

└── ADE_val_00000040_0.jpg

|

| 50 |

+

```

|

| 51 |

+

Similar to COCO, the regions in ADE20K are also very dense, so we split the regions into multiple groups of size 5,. For example, `ADE_val_00000001_0.jpg` has 5 regions, and `ADE_val_00000001_5.jpg` has the other 5 regions. Note that you can use the image_id to track the original image.

|

| 52 |

+

|

| 53 |

+

We used the following language prompt for the task:

|

| 54 |

+

```

|

| 55 |

+

I have labeled a bright numeric ID at the center for each visual object in the image. Please enumerate their names. You must answer by selecting from the following names: [ADE20K Vocabulary]

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

### Phrase Grounding on Flickr30K

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

flickr30k_grounding

|

| 62 |

+

├── som_images

|

| 63 |

+

├── 14868339.jpg

|

| 64 |

+

├── 14868339_wbox.jpg

|

| 65 |

+

|── 14868339.json

|

| 66 |

+

├── ...

|

| 67 |

+

├── 302740416.jpg

|

| 68 |

+

|── 319185571_wbox.jpg

|

| 69 |

+

└── 302740416.json

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

For Flickr30K, we provide the image with numeric IDs and masks, and also the image with additional bounding boxes. The json file containing the ground truth bounding boxes and the corresponding phrases. Note that the bounding boxes are in the format of [x1, y1, x2, y2].

|

| 73 |

+

|

| 74 |

+

We used the following language prompt for the task:

|

| 75 |

+

```

|

| 76 |

+

I have labeled a bright numeric ID at the center for each visual object in the image. Given the image showing a man in glasses holding a piece of paper, find the corresponding regions for a man in glasses, a piece of paper.

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

### Referring Expression Comprehension and Segmentation on RefCOCOg

|

| 80 |

+

|

| 81 |

+

```

|

| 82 |

+

refcocog_refseg

|

| 83 |

+

├── som_images

|

| 84 |

+

├── 000000000795.jpg

|

| 85 |

+

|── 000000000795.json

|

| 86 |

+

├── ...

|

| 87 |

+

|── 000000007852.jpg

|

| 88 |

+

└── 000000007852.json

|

| 89 |

+

```

|

| 90 |

+

|

| 91 |

+

For RefCOCOg, we provide the image with numeric IDs and masks, and also the json file containing the referring expressions and the corresponding referring ids.

|

| 92 |

+

|

| 93 |

+

We used the following language prompt for the task:

|

| 94 |

+

```

|

| 95 |

+

I have labeled a bright numeric ID at the center for each visual object in the image. Please tell me the IDs for: The laptop behind the beer bottle; Laptop turned on.

|

| 96 |

+

```

|

client.py

ADDED

|

@@ -0,0 +1,36 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

This module provides a command-line interface to interact with the SoM server.

|

| 3 |

+

|

| 4 |

+

The server URL is printed during deployment via `python deploy.py run`.

|

| 5 |

+

|

| 6 |

+

Usage:

|

| 7 |

+

python client.py "http://<server_ip>:6092"

|

| 8 |

+

"""

|

| 9 |

+

|

| 10 |

+

import fire

|

| 11 |

+

from gradio_client import Client

|

| 12 |

+

from loguru import logger

|

| 13 |

+

|

| 14 |

+

def predict(server_url: str):

|

| 15 |

+

"""

|

| 16 |

+

Makes a prediction using the Gradio client with the provided IP address.

|

| 17 |

+

|

| 18 |

+

Args:

|

| 19 |

+

server_url (str): The URL of the SoM Gradio server.

|

| 20 |

+

"""

|

| 21 |

+

client = Client(server_url)

|

| 22 |

+

result = client.predict(

|

| 23 |

+

{

|

| 24 |

+

"background": "https://raw.githubusercontent.com/gradio-app/gradio/main/test/test_files/bus.png",

|

| 25 |

+

}, # filepath in 'parameter_1' Image component

|

| 26 |

+

2.5, # float (numeric value between 1 and 3) in 'Granularity' Slider component

|

| 27 |

+

"Automatic", # Literal['Automatic', 'Interactive'] in 'Segmentation Mode' Radio component

|

| 28 |

+

0.5, # float (numeric value between 0 and 1) in 'Mask Alpha' Slider component

|

| 29 |

+

"Number", # Literal['Number', 'Alphabet'] in 'Mark Mode' Radio component

|

| 30 |

+

["Mark"], # List[Literal['Mask', 'Box', 'Mark']] in 'Annotation Mode' Checkboxgroup component

|

| 31 |

+

api_name="/inference"

|

| 32 |

+

)

|

| 33 |

+

logger.info(result)

|

| 34 |

+

|

| 35 |

+

if __name__ == "__main__":

|

| 36 |

+

fire.Fire(predict)

|

configs/seem_focall_unicl_lang_v1.yaml

ADDED

|

@@ -0,0 +1,401 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# --------------------------------------------------------

|

| 2 |

+

# X-Decoder -- Generalized Decoding for Pixel, Image, and Language

|

| 3 |

+

# Copyright (c) 2022 Microsoft

|

| 4 |

+

# Licensed under The MIT License [see LICENSE for details]

|

| 5 |

+

# Written by Xueyan Zou (xueyan@cs.wisc.edu)

|

| 6 |

+

# --------------------------------------------------------

|

| 7 |

+

|

| 8 |

+

# Define Test/Trainer/Saving

|

| 9 |

+

PIPELINE: XDecoderPipeline

|

| 10 |

+

TRAINER: xdecoder

|

| 11 |

+

SAVE_DIR: '../../data/output/test'

|

| 12 |

+

base_path: "./"

|

| 13 |

+

|

| 14 |

+

# Resume Logistic

|

| 15 |

+

RESUME: false

|

| 16 |

+

WEIGHT: false

|

| 17 |

+

RESUME_FROM: ''

|

| 18 |

+

EVAL_AT_START: False

|

| 19 |

+

|

| 20 |

+

# Logging and Debug

|

| 21 |

+

WANDB: False

|

| 22 |

+

LOG_EVERY: 100

|

| 23 |

+

FIND_UNUSED_PARAMETERS: false

|

| 24 |

+

|

| 25 |

+

# Speed up training

|

| 26 |

+

FP16: false

|

| 27 |

+

PORT: '36873'

|

| 28 |

+

|

| 29 |

+

# misc

|

| 30 |

+

LOADER:

|

| 31 |

+

JOINT: False

|

| 32 |

+

KEY_DATASET: 'coco'

|

| 33 |

+

|

| 34 |

+

##################

|

| 35 |

+

# Task settings