This view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +7 -0

- README.md +460 -7

- app.py +97 -0

- app1.py +87 -0

- data/__init__.py +114 -0

- data/base_dataset.py +231 -0

- data/image_folder.py +62 -0

- data/one_dataset.py +40 -0

- data/single_dataset.py +40 -0

- data/unaligned_dataset.py +72 -0

- detect.py +66 -0

- imgs/horse.jpg +0 -0

- imgs/monet.jpg +0 -0

- models/__init__.py +66 -0

- models/base_model.py +213 -0

- models/cycle_gan_model.py +170 -0

- models/networks.py +767 -0

- models/test_model.py +86 -0

- options/__init__.py +4 -0

- options/base_options.py +149 -0

- options/detect_options.py +25 -0

- options/test_options.py +28 -0

- options/train_options.py +42 -0

- requirements.txt +12 -0

- scripts/download_cyclegan_model.sh +11 -0

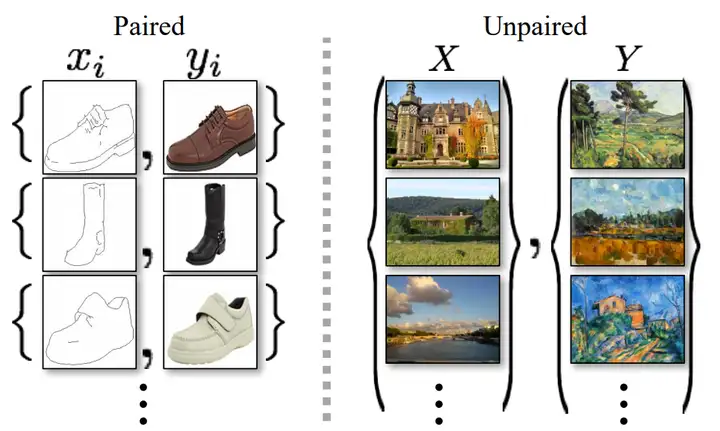

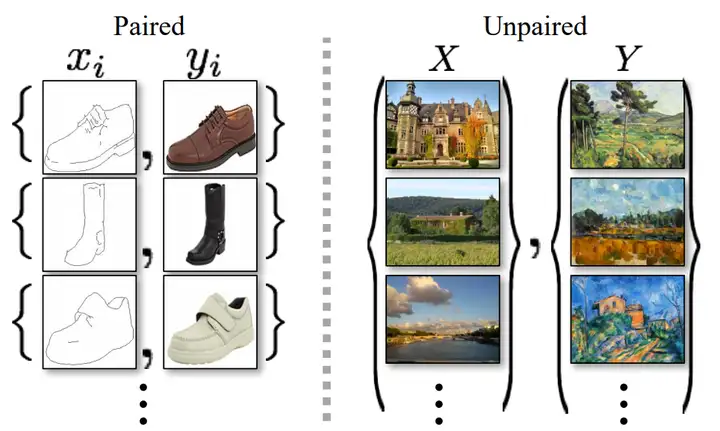

- scripts/test_before_push.py +51 -0

- scripts/test_cyclegan.sh +2 -0

- scripts/test_single.sh +2 -0

- scripts/train_cyclegan.sh +2 -0

- util/__init__.py +2 -0

- util/get_data.py +97 -0

- util/html.py +95 -0

- util/image_pool.py +60 -0

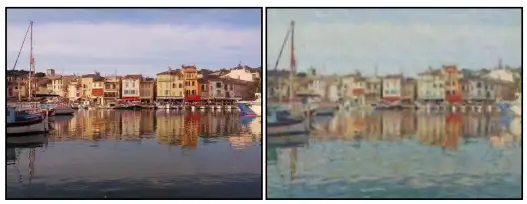

- util/streamlit/css.css +3 -0

- util/tools.py +23 -0

- util/util.py +135 -0

- util/visualizer.py +297 -0

- weights/detect/apple2orange.pth +3 -0

- weights/detect/cityscapes_label2photo.pth +3 -0

- weights/detect/cityscapes_photo2label.pth +3 -0

- weights/detect/facades_label2photo.pth +3 -0

- weights/detect/facades_photo2label.pth +3 -0

- weights/detect/horse2zebra.pth +3 -0

- weights/detect/iphone2dslr_flower_2.pth +3 -0

- weights/detect/latest_net_G_A.pth +3 -0

- weights/detect/latest_net_G_A0.pth +3 -0

- weights/detect/latest_net_G_A1.pth +3 -0

- weights/detect/latest_net_G_B.pth +3 -0

- weights/detect/latest_net_G_B0.pth +3 -0

- weights/detect/latest_net_G_B1.pth +3 -0

.gitignore

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

datasets/

|

| 2 |

+

run/

|

| 3 |

+

checkpoints/

|

| 4 |

+

results/

|

| 5 |

+

!weights/detect/

|

| 6 |

+

start.ipynb

|

| 7 |

+

*.pyc

|

README.md

CHANGED

|

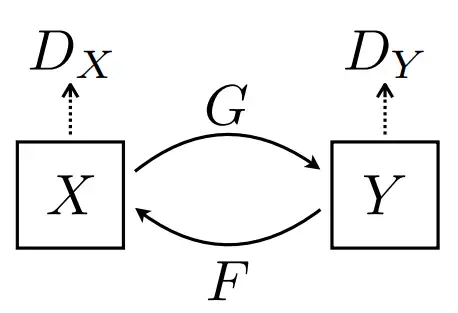

@@ -1,12 +1,465 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

sdk_version: 3.23.0

|

| 8 |

app_file: app.py

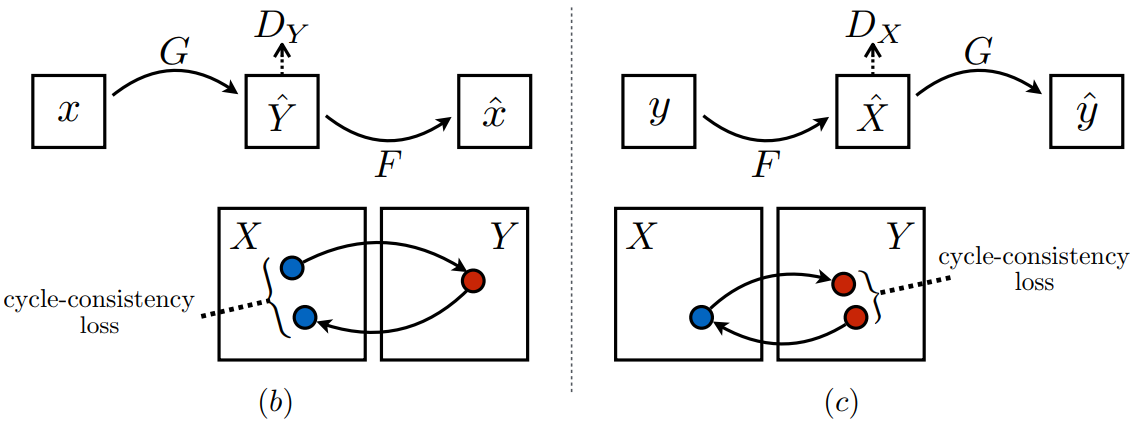

|

| 9 |

pinned: false

|

|

|

|

|

|

|

| 10 |

---

|

| 11 |

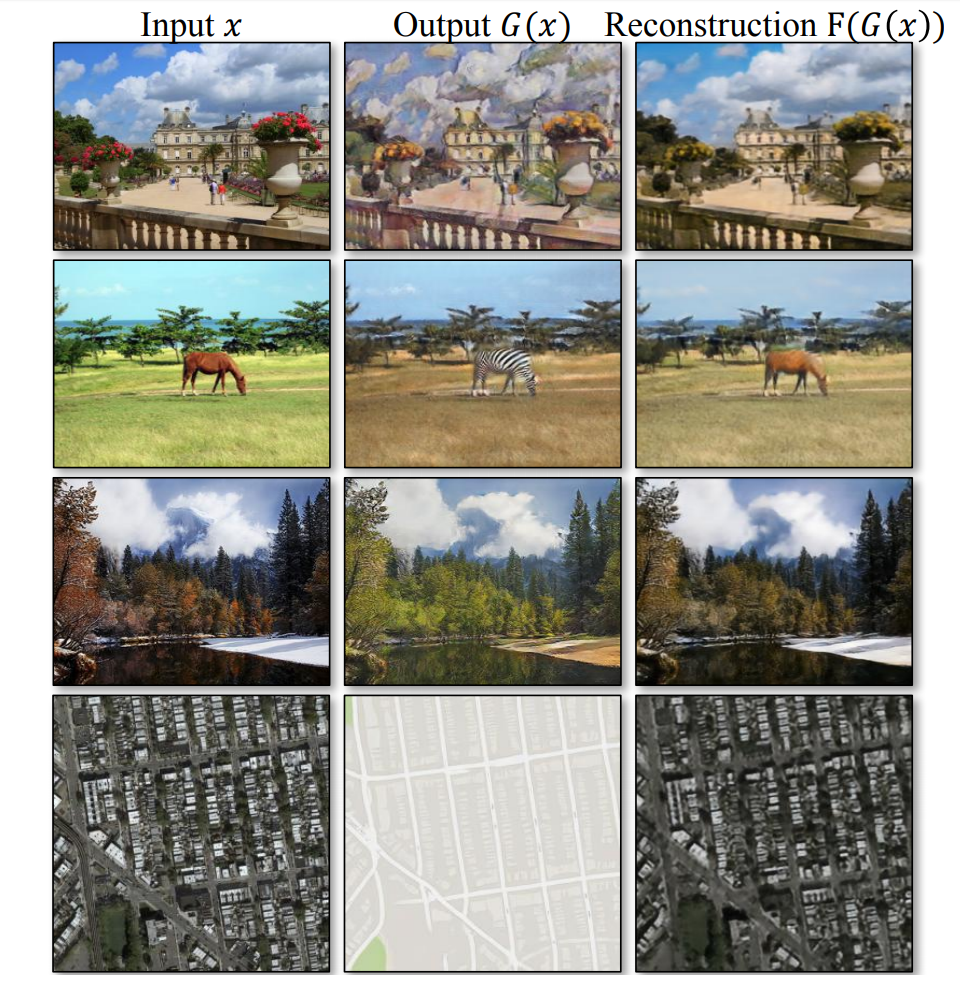

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

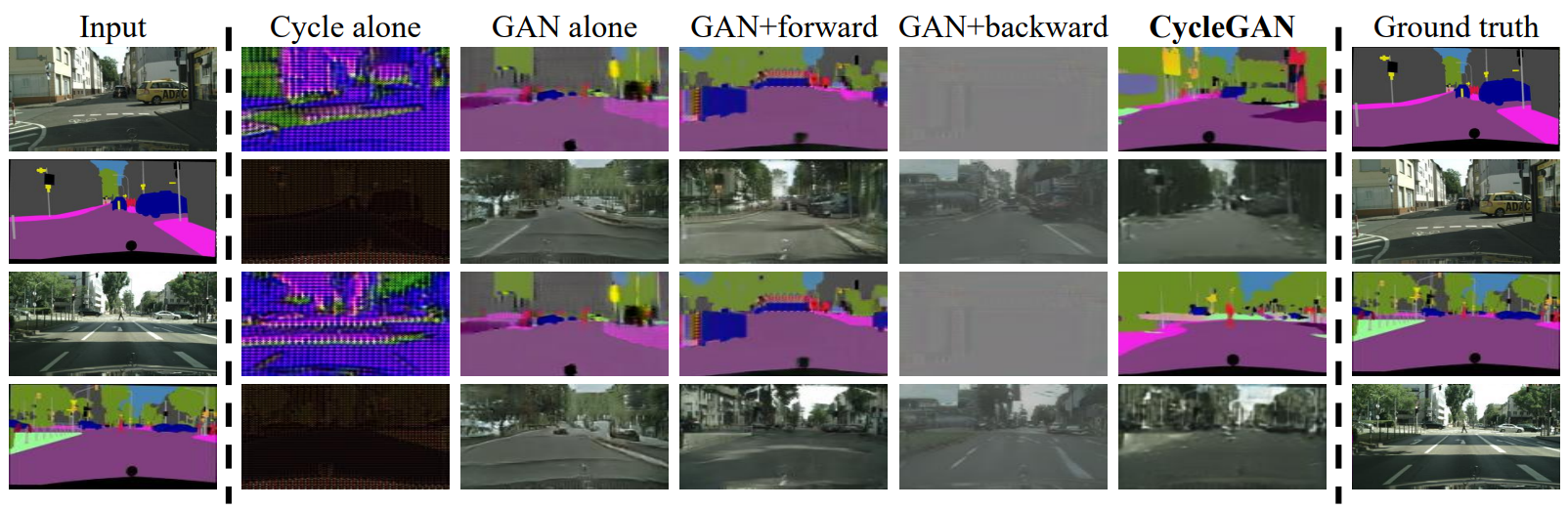

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

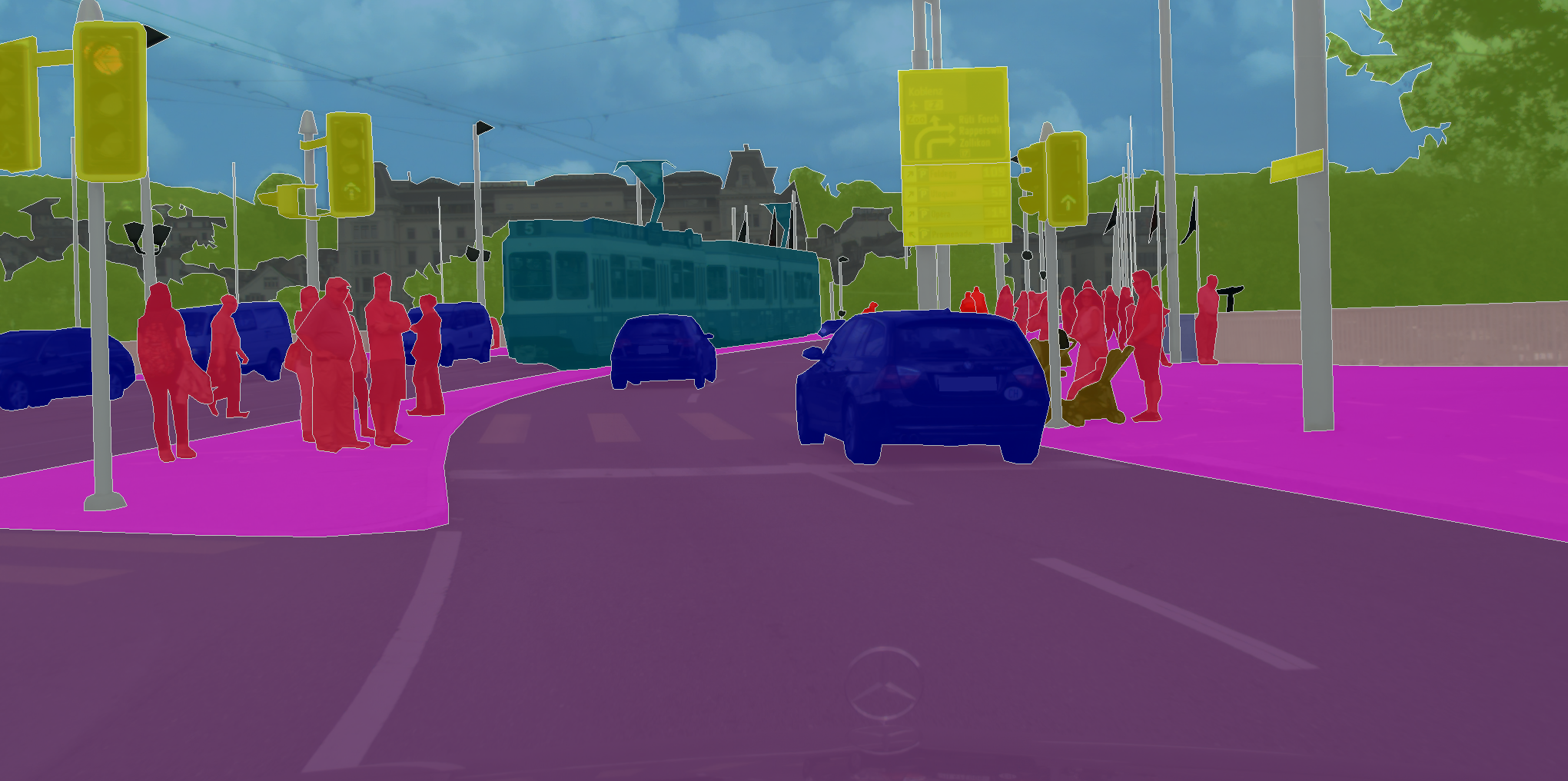

|

|

|

|

|

|

|

|

|

|

|

|

|

|

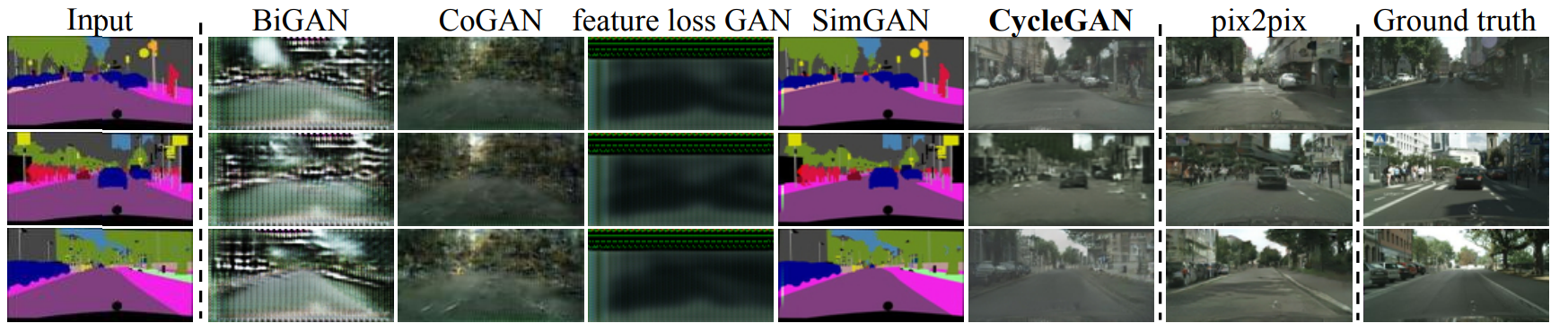

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

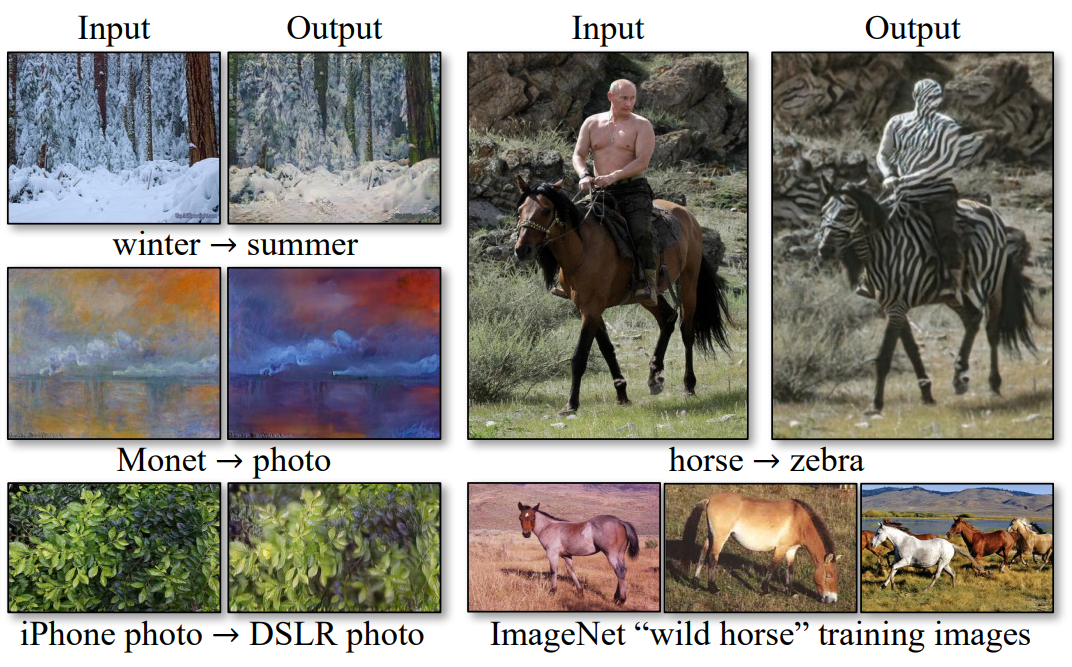

|

|

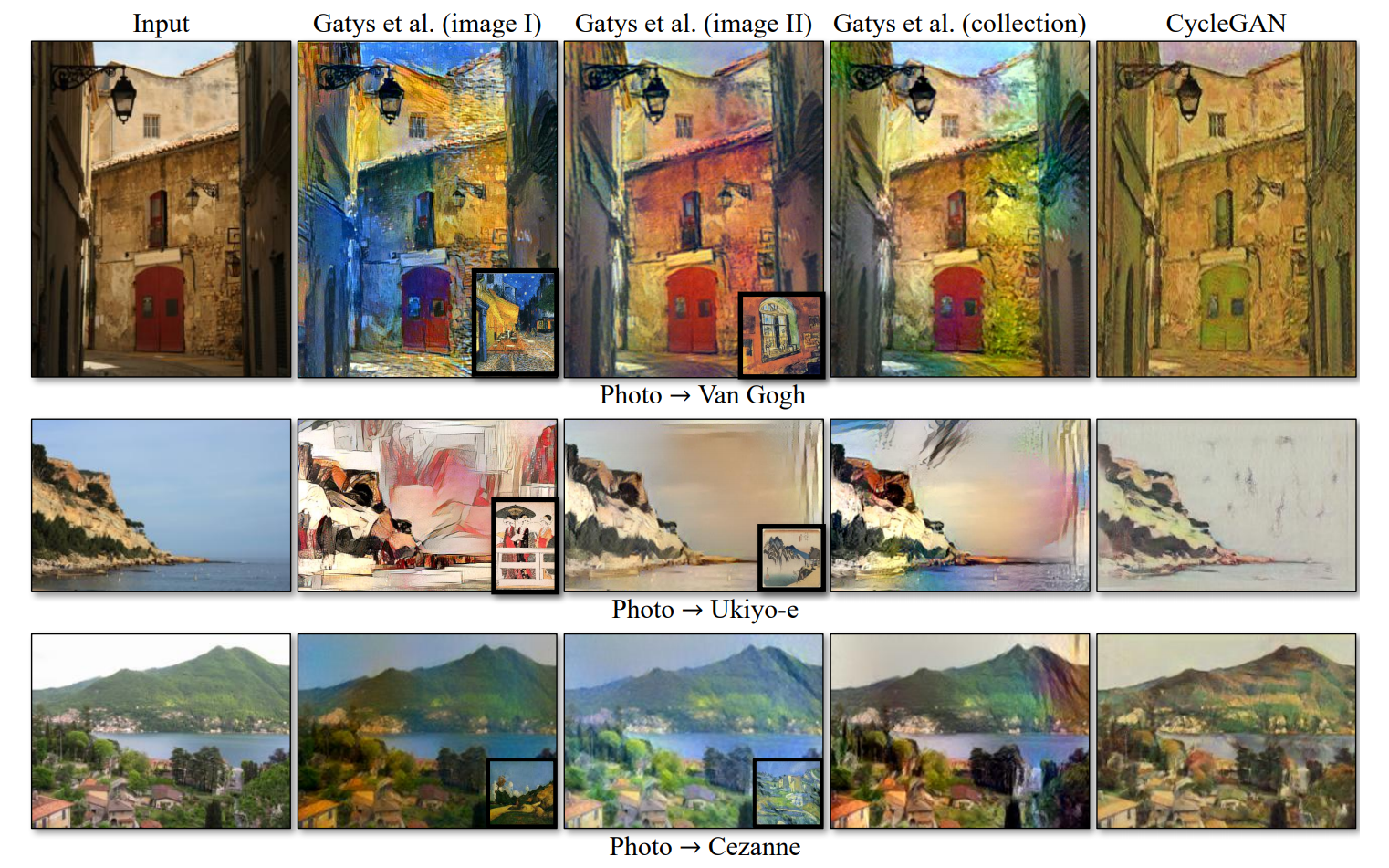

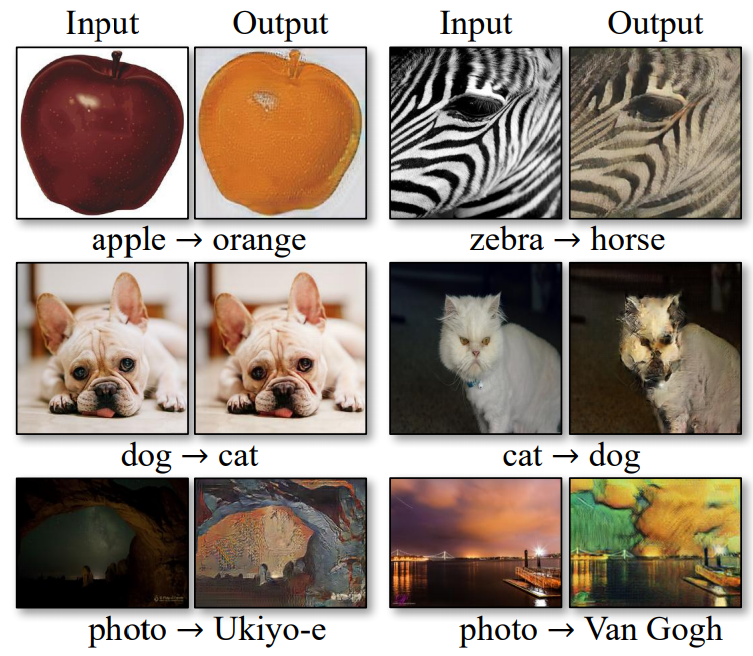

|

|

|

|

|

|

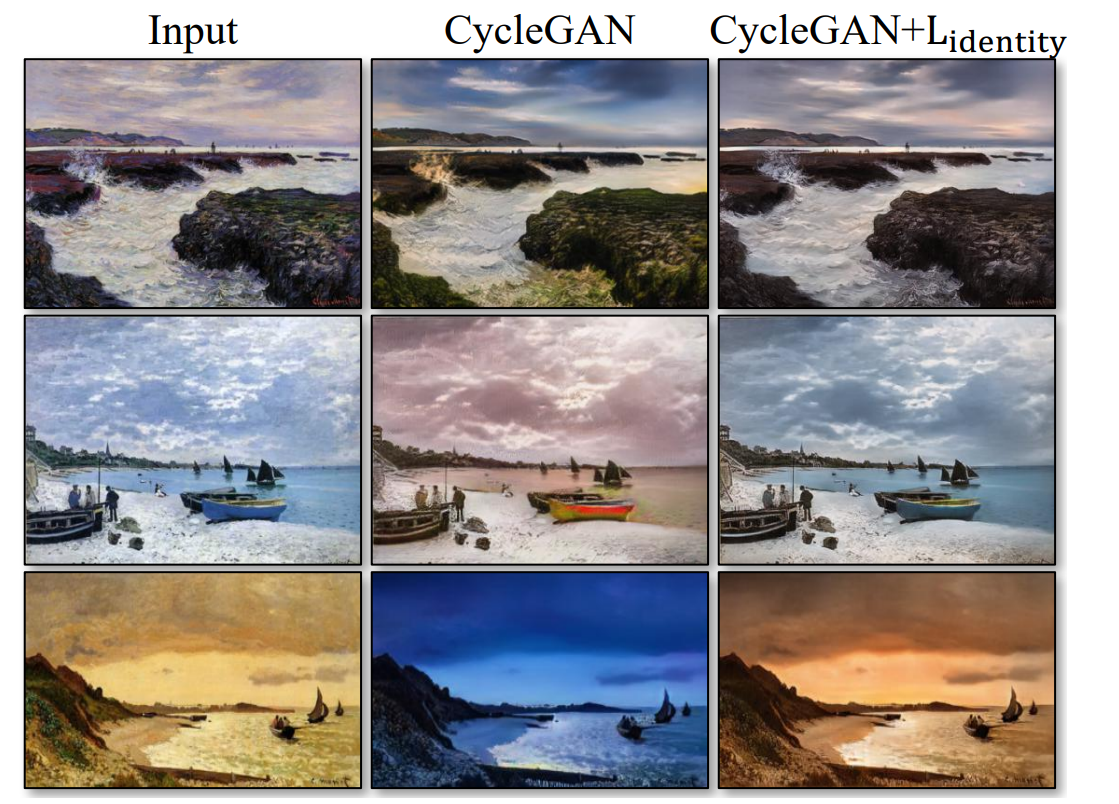

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

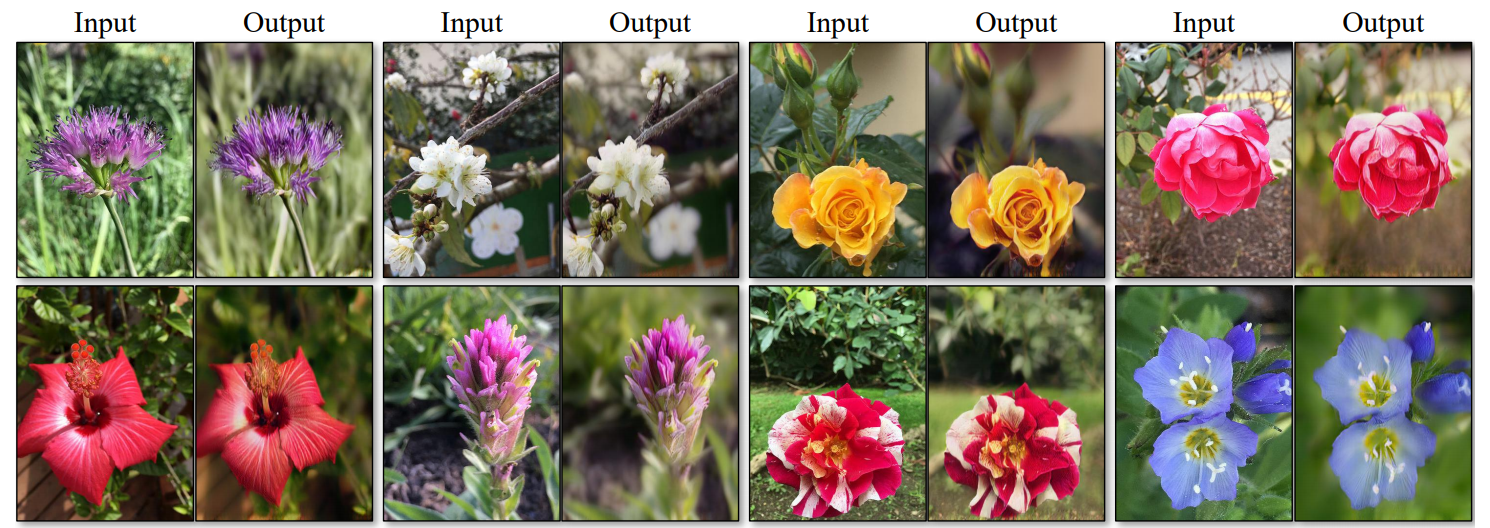

|

|

|

|

|

|

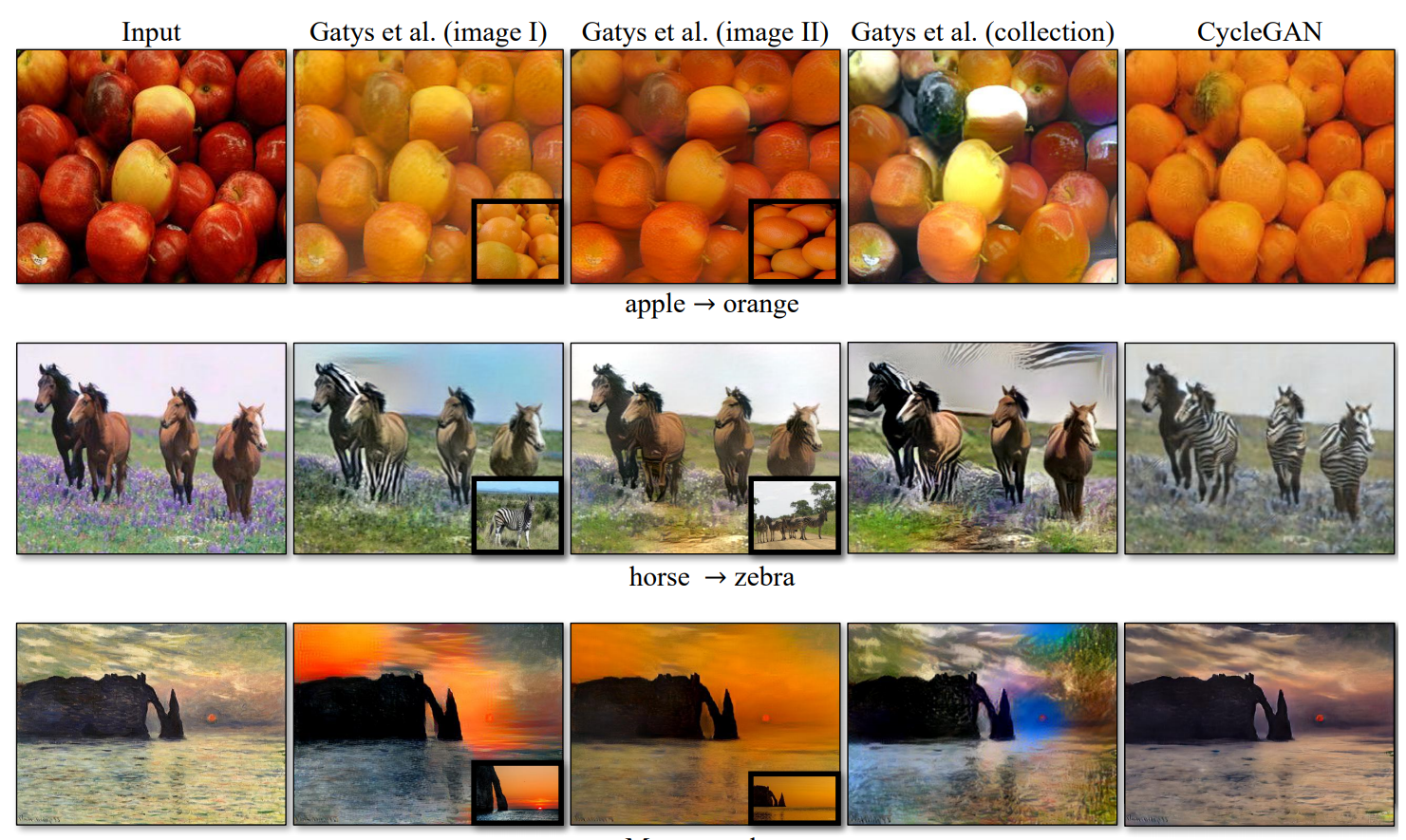

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

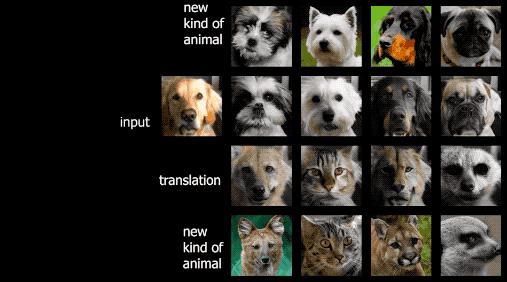

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: README

|

| 3 |

+

aliases:

|

| 4 |

+

emoji: 🖼️

|

| 5 |

+

tags: [CycleGAN]

|

| 6 |

+

date: 2023-02-21

|

|

|

|

| 7 |

app_file: app.py

|

| 8 |

pinned: false

|

| 9 |

+

sdk: streamlit

|

| 10 |

+

sdk_version: 1.17.0

|

| 11 |

---

|

| 12 |

|

| 13 |

+

>[CycleGAN 论文原文 arXiv](https://arxiv.org/pdf/1703.10593.pdf)

|

| 14 |

+

|

| 15 |

+

>

|

| 16 |

+

|

| 17 |

+

>这是文章作者GitHub上的 junyanz

|

| 18 |

+

|

| 19 |

+

> [CycleGAN junyanz,作者自己用lua 在GitHub 上的实现](https://github.com/junyanz/CycleGAN)

|

| 20 |

+

>

|

| 21 |

+

>

|

| 22 |

+

>

|

| 23 |

+

> 这是GitHub上面其他人实现的 LYnnHo

|

| 24 |

+

|

| 25 |

+

# 摘要:

|

| 26 |

+

|

| 27 |

+

图像到**图像**的**翻译 (Image-to-Image translation)** 是一种视觉上和图像上的问题,它的目标是使用成对的图像作为训练集,(让机器)学习从输入图像到输出图像的映射。然而,在很多任务中,成对的训练数据无法得到。

|

| 28 |

+

|

| 29 |

+

我们提出一种在缺少成对数据的情况下,(让机器)学习从源数据域X到目标数据域Y 的方法。我们的目标是使用一个对抗损失函数,学习映射G:X → Y ,使得判别器难以区分图片 G(X) 与 图片Y。因为这样子的映射受到巨大的限制,所以我们为映射G 添加了一个相反的映射F:Y → X,使他们成对,同时加入一个循环一致性损失函数 (cycle consistency loss),以确保 F(G(X)) ≈ X(反之亦然)。

|

| 30 |

+

|

| 31 |

+

在缺少成对训练数据的情况下,我们比较了风格迁移、物体变形、季节转换、照片增强等任务下的定性结果。经过定性比较,我们的方法表现得比先前的方法更好。

|

| 32 |

+

|

| 33 |

+

# 介绍

|

| 34 |

+

|

| 35 |

+

在1873年某个明媚的春日,当莫奈(Claude Monet) 在Argenteuil 的塞纳河畔(the bank of Seine) 放置他的画架时,他究竟看到了什么?如果彩色照片在当时就被发明了,那么这个场景就可以被记录下来——碧蓝的天空倒映在波光粼粼的河面上。莫奈通过他细致的笔触与明亮的色板,将这一场景传达出来。

|

| 36 |

+

|

| 37 |

+

如果莫奈画画的事情发生在 Cassis 小港口的一个凉爽的夏夜,那么会发生什么?

|

| 38 |

+

|

| 39 |

+

漫步在挂满莫奈画作的画廊里,我们可以想象他会如何在画作上呈现出这样的场景:也许是淡雅的夜色,加上惊艳的几笔,还有变化平缓的光影范围。

|

| 40 |

+

|

| 41 |

+

我们可以想象所有的这些东西,尽管从未见过莫奈画作与对应场景的真实照片一对一地放在一起。与此不同的是:我们已经见过许多风景照和莫奈的照片。我们可以推断出这两类图片风格的差异,然后想象出“翻译”后的图像。

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

photo → Monet

|

| 46 |

+

|

| 47 |

+

在这篇文章中,我们提出了一个学习做相同事情的方法:在没有成对图像的情况下,刻画一个图像数据集的特征,并弄清楚如何将这些特征转化为另外一个图像数据集的特征。

|

| 48 |

+

|

| 49 |

+

这个问题可以被描述成概念更加广泛的图像到图像的翻译 (Image-to-Image translation),从给定的场景x 完成一张图像到另一个场景 y 的转换。举例:从灰度图片到彩色图片、从图像到语义标签(semantic labels) 、从轮廓到图片。发展了多年的计算机视觉、图像处理、计算图像图形学(computational photography, and graphics ?) 学界提出了有力的监督学习翻译系统,它需要成对的数据

|

| 50 |

+

|

| 51 |

+

(就像那个 pix2pix 模型一样)。

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

需要成对数据的 pix2pix

|

| 56 |

+

|

| 57 |

+

然而,获取成对的数据比较困难,也耗费资金。例如:只有几个成对的语义分割数据集,并且它们很小。特别是为艺术风格迁移之类的图像任务获取成对的数据就更难了,进行复杂的输出已经很难了,更何况是进行艺术创作。对于许多任务而言,就像物体变形(例如 斑马-马),这一类任务的输出更加不容易定义。

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

wild horse → zebra

|

| 62 |

+

|

| 63 |

+

- 因此我们寻找一种算法可以学习如何在没有成对数据的情况下,在两个场景之间进行转换。

|

| 64 |

+

- 我们假设在两个数据域直接存在某种联系——例如:每中场景中的每幅图片在另一个场景中都有它对应的图像,(我们让机器)去学习这个转换关系。尽管缺乏成对的监督学习样本,我们仍然可以在集合层面使用监督学习:我们在数据域X中给出一组图像,在数据域Y 中给出另外一组图像。我们可以训练一种映射G : X → Y 使得 输出,判别器的功能是将生成样本和真实样本 区分开,我们的实验正是要让 和 无法被判别器区分开。理论上,这一项将包括符合 经验分布的的输出���布(通常这要求映射G 是随机的)。从而存在一个最佳的映射将数据域 翻译为数据域 ,使得 数据域(有相同的分布)。然而,这样的翻译不能保证独立分布的输入 和输出 是有意义的一对——因为有无限多种映射 可以由输入的 导出相同的。此外,在实际中我们发现很难单独地优化判别器:当输出图图片从输入映射到输出的时候,标准的程序经常因为一些众所周知的问题而导致奔溃,使得优化无法继续。

|

| 65 |

+

|

| 66 |

+

为了解决这些问题,我们需要往我们的模型中添加其他结构。因此我们利用 **翻译应该具有“循环稳定性”(translation should be "cycle consistent")** 的这个性质,某种意义上,我们将一个句子从英语翻译到法语,再从法语翻译回英语,那么我们将会得到一相同的句子。从数学上讲,如果我们有一个翻译器 G : X → Y 与另一个翻译器 F : Y → X ,那么 G 与 F 彼此是相反的,这一对映射是双射(bijections) 。

|

| 67 |

+

|

| 68 |

+

于是我们将这个结构应用到 映射 G 和 F 的同步训练中,并且加入一个 循环稳定损失函数(_cycle consistency loss)_ 以确保到达与。将这个损失函数与判别器在数据域X 与数据域Y 的对抗损失函数结合起来,就可以得到非成对图像到图像的目标转换。

|

| 69 |

+

|

| 70 |

+

我们将这个方法应用广泛的领域上,包括风格迁移、物体变形、季节转换、照片增强。与以前的方法比较,以前的方法依既赖过多的人工定义与调节,又依赖于共享的内部参数,在比较中也表明我们的方法要优于这些(合格?)基准线(out method outperforms these baselines )。我们提供了这个模型在 [PyTorch](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) 和 [Torch](https://github.com/junyanz/CycleGAN) 上的实现代码,[点击这个网址](https://junyanz.github.io/CycleGAN/)进行访问。

|

| 71 |

+

|

| 72 |

+

## 相关工作

|

| 73 |

+

|

| 74 |

+

对抗生成网络 Generative Adversarial Networks (GANs) 在图像生成、图像编辑、表征学习(representation learning) 等领域以及取得了令人瞩目的成就。在最近,条件图像的生成的方法也采用了相同的思路,例如 从文本到图像 (text2image)、图像修复(image inpainting)、视频预测(future prediction),与其他领域(视频和三维数据)。

|

| 75 |

+

|

| 76 |

+

对抗生成网络成功的关键是:通过对抗损失(adversarial loss)促使生成器生成的图像在原则上无法与真实图像区分开来。图像生成正是许多计算机图像生成任务的优化目标,这种损失对在这类任务上特别有用。我们采用对抗损失来学习映射,使得翻译得到的图片难以与目标域的图像区分开。

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

需要成对训练图片的 pix2pix

|

| 81 |

+

|

| 82 |

+

**Image-to-Image Translation 图像到图像的翻译**

|

| 83 |

+

|

| 84 |

+

这个想法可以追溯到Hertzmann的 图像类比 (Image Analogies),这个模型在一对输入输出的训练图像上采用了无参数的纹理模型。最近 (2017) 的更多方法使用 输入-输出样例数据集训练卷积神经网络。我们的研究建立在 Isola 的 pix2pix 框架上,这个框架使用了条件对抗生成网络去学习从输入到输出的映射。相似的想法也已经应用在多个不同的任务上,例如:从轮廓、图像属性、布局语义 (semantic layouts) 生成图片。然而,与就如先前的工作不同,我们可以从不成对的训练图片中,学习到这种映射。

|

| 85 |

+

|

| 86 |

+

**Unpaired Image-to-Image Translation 不成对的图像到图像的翻译**

|

| 87 |

+

|

| 88 |

+

其他的几个不同的旨在关联两个数据域 X和Y 的方法,也解决了不成对数据的问题。

|

| 89 |

+

|

| 90 |

+

最近,Rosales 提出了一个贝叶斯框架,通过对原图像以及从多风格图像中得到的似然项 (likelihood term) 进行计算,得到一个基于区块 (patch-based)、基于先验信息的马尔可夫随机场;更近一点的研究,有 CoGAN 和 跨模态场景网络 (corss-modal scene networks) 使用了权重共享策略 去学习跨领域 (across domains) 的共同表示;同时期的研究,有 刘洺堉 用变分自编码器与对抗生成网络结合起来,拓展了原先的网络框架。同时期另一个方向的研究,有 尝试 (encourages) 共享有特定“内容 (content) ”的特征,即便输入和输出的信息有不同的“风格 (style) ”。这些方法也使用了对抗网络,并添加了一些项目,促使输出的内容在预先定义的度量空间内,更加接近于输入,就像 标签分类空间 (class label space) ,图片像素空间 (image pixel space), 以及图片特征空间 (image feature space) 。

|

| 91 |

+

|

| 92 |

+

> 不同于其他方法,我们的设计不依赖于 特定任务 以及 预定义输入输出似然函数,我们也不需要要求输入和输出数据处于一个相同的低纬度嵌入空间 (low-dimensional embedding space) 。因此我们的模型是适用于各种图像任务的通用解决方案。我们直接把本文的方案与先前的、现在的几种方案在第五节进行对比。

|

| 93 |

+

|

| 94 |

+

**Cycle Consitency 循环一致性**

|

| 95 |

+

|

| 96 |

+

把可传递性 (transitivity) 作为结构数据正则化的手段由来已久。近十年来,在视觉追踪 (visual tracking) 任务里,确保简单的前后向传播一致 (simple forward-backward consistency) 已经成为一个标准。在语言处理领域,通过“反向翻译与核对(back translation and reconcilation) ”验证并提高人工翻译的质量,机器翻译也是如此。更近一些的研究,使用到使用高阶循环一致性的有:动作检测、三维目标匹配,协同分割(co-segmentation) ,稠密语义分割校准(desnse semantic alignment),景物深度估计(depth estimation) 。

|

| 97 |

+

|

| 98 |

+

> 下面两篇文章的与我的工作比较相似,他们也使用了循环一致性损失体现传递性,从而监督卷积网络的训练:基于左右眼一致性的单眼景物深度估计 (Unsupervised monocular depth estimation with left-right consistency) —— Godard 通过三维引导的循环一致性学习稠密的对应关系 (Learning dense correspondence via 3d-guided cycle consistency) —— T. Zhou.

|

| 99 |

+

|

| 100 |

+

我们引入了相似的损失使得两个生成器G与F 保持彼此一致。同时期的研究,Z. Yi. 受到机器翻译对偶学习的启发,独立地使用了一个与我们类型的结构,用于不成对的图像到图像的翻译——Dualgan: Unsupervised dual learning for image-to-image translation.

|

| 101 |

+

|

| 102 |

+

**神经网络风格迁移 Neural Style Transfer**

|

| 103 |

+

|

| 104 |

+

神经网络风格迁移是优化 图像到图像翻译 的另外一种方法,通过比较不同风格的两种图像(一张是普通图片,另一张是另外一种风格的图片(一般来讲是绘画作品))并将一幅图像的内容和另一幅的风格组合起来,基于预训练期间对伽马矩阵进行统计从而得到深层次的特征,再对这些特征进行匹配,最终创造新的图像。

|

| 105 |

+

|

| 106 |

+

另一方面,我们主要关注的是:通过刻画更高层级外观结构之间的对应关系,学习两个图像集之间的映射,而不仅是两张特定图片之间的映射。因此,我们的方法可以应用在其他任务上,例如从 绘画 → 图片,物体变形(object transfiguration),等那些单样品转换方法表现不好的地方。我们在 5.2节 比较了这两种方法。

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

## 公式推导

|

| 111 |

+

|

| 112 |

+

我们的目标是学习两个数据域 X 与 Y 之间的映射函数,定义数据集合与数据分布,与模型的两个映射,其中:

|

| 113 |

+

|

| 114 |

+

另外,我们引入了两个判别函数:

|

| 115 |

+

|

| 116 |

+

- 用于区分{x} 与 {F(y)} 的 D_X

|

| 117 |

+

- 用于区分{y} 与 {G(x)} 的 D_Y 。

|

| 118 |

+

|

| 119 |

+

我们的构建的模型包含两类组件(Our objective contains two types of terms):

|

| 120 |

+

|

| 121 |

+

- 对抗损失(adversarial losses),使生成的图片在分布上更接近于目标图片;

|

| 122 |

+

- 循环一致性损失(cycle consistency losses),防止学习到的映射 G与F 相互矛盾。

|

| 123 |

+

|

| 124 |

+

## 对抗损失(adversarial losses)

|

| 125 |

+

|

| 126 |

+

我们为两个映射函数都设置了对抗损失。对于映射函数G 和它的判别器 D_Y ,我们有如下的表达式:

|

| 127 |

+

|

| 128 |

+

$$

|

| 129 |

+

\begin{align} \mathcal{L}_{GAN}(G,D_Y,X,Y) &= \mathbb{E}_{y \sim p_{data}(y)} \big[log (D_Y(Y) ) \big] \\ &+ \mathbb{E}_{x \sim p_{data}(x)} \big[log (1-D_Y(G(x))~) \big] \end{align}

|

| 130 |

+

$$

|

| 131 |

+

|

| 132 |

+

当映射G 试图生成与数据域Y相似的图片 G(x) 的时候,判别器也在试着将生成的图片从原图中区分出来。映射G 希望通过优化减小的项目与 映射F 希望优化增大的项目 相对抗,另一个映射F 也是如此。这两个相互对称的结构用公式表达就是:

|

| 133 |

+

|

| 134 |

+

$$

|

| 135 |

+

min_G \ max_{D_Y} \ \mathcal{L}_{GAN} (G,D_Y,X,Y) \\ min_F \ max_{D_X} \ \mathcal{L}_{GAN} (F,D_X,Y, X)

|

| 136 |

+

$$

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

图3(b), 图3(c)

|

| 141 |

+

|

| 142 |

+

## 循环一致性损失(cycle consistency loss)

|

| 143 |

+

|

| 144 |

+

理论上对抗训练可以学习到 映射G与F,并生成与目标域 Y与X 相似的分布的输出(严格地讲,这要求映射G与F 应该是一个随机函数。然而,当一个网络拥有足够大的容量,那么输入任何随机排列的图片,它都可以映射到与目标图片相匹配的输出分布。因此,不能保证单独依靠对抗损失而学习到的映射可以将每个单独输入的 x_i 映射到期望得到的 y_i 。

|

| 145 |

+

|

| 146 |

+

为了进一步减少函数映射可能的得到的空间大小,我们认为学习的的得到的函数应该具有循环一致性(cycle-consistent): 如图3(b) 所示,数据域X 中的每一张图片x 在循环翻译中,应该可以让x 回到翻译的原点,反之亦然,即 前向、后向循环一致,换言之: x \rightarrow G(x) \rightarrow F(G(x)) \approx x \\ y \rightarrow F(y) \rightarrow G(F(y)) \approx y

|

| 147 |

+

|

| 148 |

+

我们使用循环一致性损失作为激励,于是有:

|

| 149 |

+

|

| 150 |

+

\begin{align} \mathcal{L}_{cyc}(G, F) &= \mathbb{E}_{x \sim p_{data}(x)}[ || F(G(x)) - x ||_1] \\ &+ \mathbb{E}_{y \sim p_{data}(y)}[ || G(F(y)) - y ||_1] \end{align}

|

| 151 |

+

|

| 152 |

+

初步实验中,我们也尝试用F(G(x)) 与x 之间、G(F(Y)) 与y 之间的对抗损失替代上面的L1 范数,但是没有观察到更好的性能。

|

| 153 |

+

|

| 154 |

+

如图4 所示,加入循环一致性损失最终使得模型重构的图像F(G(x)) 与输入的图像x 十分匹配。

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

图4, cycleGAN 的循环一致性

|

| 159 |

+

|

| 160 |

+

## 3.3 完整的模型对象(Full Objective)

|

| 161 |

+

|

| 162 |

+

我们完整的模型对象如下,其中,_λ_ 控制两个对象的相对重要性。

|

| 163 |

+

|

| 164 |

+

\begin{align} \mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{GAN}(G,D_Y, X, Y) \\ &+ \mathcal{L}_{GAN}(F,D_X, Y, X) \\ &+ \lambda \mathcal{L}_{cyc}(G, F) \\ \end{align}

|

| 165 |

+

|

| 166 |

+

我们希望解决映射的学习问题:

|

| 167 |

+

|

| 168 |

+

G^*, \ F^* = \arg \min_{G, F} \max_{D_X, D_Y} \ \mathcal{L}(G, F, D_X, D_Y)

|

| 169 |

+

|

| 170 |

+

请注意,我们的模型可以视为训练了两个自动编码器(auto-encoder):

|

| 171 |

+

|

| 172 |

+

F \circ G: X \rightarrow X \\ G \circ F: Y \rightarrow Y

|

| 173 |

+

|

| 174 |

+

然而,每一个自动编码器都有它特殊的内部结构:它们通过中间介质将图片映射到自身,并且这个中间介质属于另一个数据域。这样的一种配置可以视为是使用了对抗损失训练瓶颈层(bottle-neck layer) 去匹配任意目标分布的“对抗性自动编码器”(advesarial auto-encoders)。在我们的例子中,目标分布是中间介质Yi 分布于数据域Y 的自动编码器X_i \rightarrow Y_i \rightarrow X_i 。

|

| 175 |

+

|

| 176 |

+

在 5.1.4节,我们将我们的方法与消去了 完整对象的模型 (ablations of the full objective) 进行比较,包括只包含对抗损失 \mathcal{L}_{GAN} 、只包含循环一致性损失\mathcal{L}_{cyc},根据我们的经验,在模型中加入这两个对象,对获得高质量的结果而言十分重要。我们也对单向的循环损失模型进行评估,它的结果表明:单向的循环对这个问题的约束不够充分,因而不足以使训练获得足够的正则化。(a single cycle is not sufficient to regularize teh training for this under-constrained problem)

|

| 177 |

+

|

| 178 |

+

## 4. 实现(Implementation)

|

| 179 |

+

|

| 180 |

+

**网络结构**

|

| 181 |

+

|

| 182 |

+

我们采用了**J. Johnson文章**中的生成网络架构。这个网络包含两个步长为2 的卷积层,几个残差模块,两个步长为1/2 的**转置卷积层**(transposed concolution; 原文是fractionally-strided convolutions)。我们使用了6个模块去处理 128x128 的图片,以及9个模块去处理256x256的高分辨率训练图片。与Johnson 的方法类似,我们对每个实例使用了正则化(instance normalization)。我们使用了 70x70 的PatchGANs 作为我的判别器网络,这个网络用来判断图片覆盖的70x70补丁是否来自于原图。比起全图的鉴别器,这样的补丁层级的鉴别器有更少的参数,并且可以以完全卷积的方式处理任意尺寸的图像。

|

| 183 |

+

|

| 184 |

+

> **J. Johnson文章**:指的是李飞飞他们的那篇文章:感知损失在 实时风格迁移 与 超分辨率 上的应用 (Perceptual losses for real-time style transfer and super-resolution)

|

| 185 |

+

>

|

| 186 |

+

> 分数步长卷积 (fractionally-strided convolution):也就是 转置卷积层 Transposed Convolution,也有人叫 反卷积、逆卷积(deconvolution),不过这个过程不是卷积的逆过程,所以我建议用 **转置卷积层**称呼它。

|

| 187 |

+

>

|

| 188 |

+

> PatchGANs:使用条件GAN 实现图片到图片的翻译 Image-to-Image Translation with Conditional Adversarial Networks ——本文作者是这篇文章的第二作者

|

| 189 |

+

|

| 190 |

+

**训练细节**

|

| 191 |

+

|

| 192 |

+

我们把学界近期的两个技术拿来用做我们的模型里,用于稳定模型的训练。

|

| 193 |

+

|

| 194 |

+

第一,对于 \mathcal{L}_{GAN} ,我们使用**最小二乘损失**(least squares loss) 取代 原来的负对数似然损失(就是LeCun 的那个)。在训练时,这个损失函数有更好的稳定性,并且可以生成更高质量的结果。实际上,对于GAN的损失函数,我们为两个映射 G(X) 与D(X) 各自训练了一个损失函数。

|

| 195 |

+

|

| 196 |

+

\mathcal{L}_{GAN}(G,D,X,Y) \\ G.minimize \big( \mathbb{E}_{x \sim p_{data}(x)}[ || G(F(y)) - y ||_1] \big) \\ D.minimize \big( \mathbb{E}_{y \sim p_{data}(y)}[ || F(G(x)) - x ||_1] \big)

|

| 197 |

+

|

| 198 |

+

第二,为了减小模型训练时候的震荡(oscillation),我们遵循Shrivastava 的策略——在更新判别器的时候,使用生成的图片历史,而不是生成器最新一次生成的图片。我们把最近50次生成的图片保存为缓存。

|

| 199 |

+

|

| 200 |

+

在每一次实验中,在计算损失函数的时候,我们都把下面公式内的 \lambda 设置为10,我们使用Adam 进行批次大小为1 的更新。所有的网络都是把学习率设置为0.0002 后,从头开始训练的。在前100次训练中,我们保持相同的学习率,并且在100次训练后,我们保持学习率向0的方向线性减少。第七节(附录7)记录了关于数据集、模型结构、训练程序的更多细节

|

| 201 |

+

|

| 202 |

+

\begin{align} \mathcal{L}_{GAN}(G, F, D_X, D_Y) &= \mathcal{L}_{GAN}(G,D_Y, X, Y) \\ &+ \mathcal{L}_{GAN}(F,D_X, Y, X) \\ &+ \mathcal{L}_{GAN}(G, F) \cdot \lambda \\ \end{align}

|

| 203 |

+

|

| 204 |

+

> **最小二乘损失**:来自于——LSGANs, Least squares generative adversarial networks

|

| 205 |

+

|

| 206 |

+

## 5.结果

|

| 207 |

+

|

| 208 |

+

首先,我们将我们的方法与最近的 训练图片不成对的图片翻译 方法对比,并且使用的是成对的数据集,评估的时候使用的是 标记正确的成对图片。然后我们将我们的方案与方案的几种变体一同比较,研究了 对抗损失 与 循环一致性损失 的重要性。最后,在只有不成对图片存在的情况下,我们在更大的范围内,展示了我们算法的泛化性能。

|

| 209 |

+

|

| 210 |

+

为了简洁起见,我们把这个模型称为 CycleGAN。在[我们的网站](https://junyanz.github.io/CycleGAN/)上,你可以找到所有的研究成果,包括[PyTorch](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) 和 [Torch](https://github.com/junyanz/CycleGAN) 的实现代码。

|

| 211 |

+

|

| 212 |

+

## 5.1 评估

|

| 213 |

+

|

| 214 |

+

我们使用与pix2pix 相同的评估数据集,与现行的几个基线(baseline) 进行了定量与定性的比较。这些任务包括了 在城市景观数据集(Cityscapes dataset) 上的 语义标签↔照片,在谷歌地图上获取的 地图↔卫星图片。我们在整个损失函数上进行了模型简化测试(ablation study 模型消融研究 )。

|

| 215 |

+

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

完整的cycleGAN 与 模型简化测试(ablation study 模型消融研究)

|

| 219 |

+

|

| 220 |

+

## **5.1.1 评估指标 (Evalution Metrics)**

|

| 221 |

+

|

| 222 |

+

**AMT感知研究(AMT perceptual studies)** 在 地图↔卫星图片的任务中,我们在亚马逊 **真 · 人工 智能平台**(Amazon Mechanical Turk) 上,建立了“图片真伪判别”的任务,来评估我们的输出的图片的真实性。我们遵循Isola 等人相同的感知研究方法,不同的是:我每个算法收集了25个实验参与者 (participant) 的结果 。会有一系列成对的真伪图片 展示给实验参与者观看,参与者需要点击选择他认为是真实图片的那一张,(另外一张伪造的图片是由我们的算法生成的,或者是其他基线算法(baselines) 生成的)。每一轮的前10个试验用来练习,并将他们答案的正误 反馈给试验参与者。每一轮只会测试一种算法,每一个试验参与者只允许参与测试一轮。

|

| 223 |

+

|

| 224 |

+

|

| 225 |

+

|

| 226 |

+

亚马逊 真 · 人工 智能平台(Amazon Mechanical Turk)

|

| 227 |

+

|

| 228 |

+

请注意,我们报告中的数字,不能直接与其他文章中报告的数字进行比较,因为我们的正确标定图片与他们有轻微的不同,并且实验的参与人员也有可能不同(由于实验室在不同的时间进行的)。因此,我们报告中的数字,用来在这篇文章内部进行比较。

|

| 229 |

+

|

| 230 |

+

**FCN score (全卷积网络得分 full-convolutional network score )** 虽然感知研究可能是评估图像真实性的黄金准则,但是我们也在寻求不需要人类经验的 自动质量检测方法。为此,我们采用**Isola 的 "FCN score"** 来进行 城市景观标签↔照片 转换任务的评估。全卷积网络的根据现成的语义分割模型 来测量评估图片的可解释性。全卷积从一幅图片 预测出整张图片的语义标签地图,然后使用标准的语义分割模型,各自生成图片与输入图片 的标签地图,然后使用它们用来描述的标准语义进行比较。直觉上,我们从标签地图得到“路上的车”的生成图片,然后把生成的图片输入全卷积网络,如果可以从全卷积网络上也得到“路上的车”的标签地图,那么就是我们恢复成功了。

|

| 231 |

+

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

城市景观语义分割 Cityscapes dataset

|

| 235 |

+

|

| 236 |

+

**语义分割指标(Semantic segmentation metrics )** 为了评估 图片→标签 的性能,我们使用了城市景观的标准指标(standard metrics from the CItyscapes benchmark ),包括了每一个像素的准确率,每个等级的准确率,类别交并比(Class IoU, class Intersection-Over-Union) 平均值。

|

| 237 |

+

|

| 238 |

+

> AMT:亚马逊的 **真 · 人工 智能平台**(Amazon Mechanical Turk),这个平台就是Amazon 在网站上发布任务,由系统分发任务给人类领取,然后按照自己内部的算法支付酬劳,是真的 人工的 智能平台。其实应该叫 人力手动 智能平台。 AMT perceptual studies AMT感知研究,指的就是把自己的结果让人力手动地 去评估。

|

| 239 |

+

>

|

| 240 |

+

> Isola 等人的研究:用条件对抗网络进行图像到图像的翻译 Imageto-image translation with conditional adversarial networks. In CVPR, 2017. 包括了用 FCN score 对图片进行评估。

|

| 241 |

+

|

| 242 |

+

## **5.1.2 基线模型(baselines)**

|

| 243 |

+

|

| 244 |

+

**CoGAN(Coupled GAN)** 这个模型训练一个对抗生成网络,两个生成网络分别生成数据域X 与 数据域Y,前几层都进行权重绑定,并共享对数据潜在的表达。从X 到Y 的翻译可以通过寻找相同的潜在表达 来生成图片X,然后把这个潜在的表达翻译成风格Y 。

|

| 245 |

+

|

| 246 |

+

**SimGAN** 与我们的方法类似,用对抗损失训练了一个从X 到Y 的翻译,正则项 ||x-G(x)||_1 用来惩罚图片在像素层级上过大的改动。

|

| 247 |

+

|

| 248 |

+

**Feature loss + GAN** 我们还测试了SimGAN的变体——使用的预训练网络(VGG-16, relu4_2 ) 在图像深度特征(deep image features) 上计算L1 损失,而不是在RGB值上计算。像这样在深度特征空间上计算距离,有时候也被称为是使用“感知损失(perceptual loss )”。

|

| 249 |

+

|

| 250 |

+

**BiGAN/ ALI** 无条件约束GAN 训练了生成器G: Z→X ,将一个随机噪声映射为图片x 。BiGAN 和ALI 也建议学习逆向的映射F: X→Z 。虽然它们一开始的设计是 学习将潜在的向量z 映射为图片x ,我们实现了相同的组件,将原始图片x 映射到 目标图片y 。

|

| 251 |

+

|

| 252 |

+

**pix2pix** 我们也比较了在成对数据上训练的pix2pix模型,想看看在不使用成对训练数据的情况下,我们能够如何接近这个天花板。

|

| 253 |

+

|

| 254 |

+

为了公平起见,我们使用了与我们的方法 相同的架构和细节实现了这些基线模型,除了CoGAN,CoGAN建立在共享 潜在表达 而输出图片的生成器上。因此我们使用了CoGAN实现的公共版本。

|

| 255 |

+

|

| 256 |

+

> **BiGAN** V. Dumoulin. Adversarially learned inference. In ICLR, 2017.

|

| 257 |

+

> **ALI** ?? J. Donahue. Adversarial feature learning. In ICLR, 2017

|

| 258 |

+

> **pix2pix** P.Isola Imageto-image translation with conditional adversarial networks. In CVPR, 2017.

|

| 259 |

+

|

| 260 |

+

|

| 261 |

+

|

| 262 |

+

可以接受不成对训练数据的cycleGAN 和 需要成对数据的pix2pix

|

| 263 |

+

|

| 264 |

+

5.1.3 与基线模型相比较

|

| 265 |

+

|

| 266 |

+

5.1.4 对损失函数的分析

|

| 267 |

+

|

| 268 |

+

5.1.5 图片重构质量

|

| 269 |

+

|

| 270 |

+

5.1.6 成对数据集的其他结果

|

| 271 |

+

|

| 272 |

+

## 5.2 应用(Applications)

|

| 273 |

+

|

| 274 |

+

我们演示了 成对的训练数据不存在时 cycleGAN 的几种应用方法。可以看第七节 附录,以获取更多关于数据集的细节。我们观察到训练集上的翻译 比测试上的更有吸引力,应用的所有训练与测试的数据都可以在[我们项目的网站](https://junyanz.github.io/CycleGAN/)上看到。

|

| 275 |

+

|

| 276 |

+

**风格迁移 (Collection style transfer)** 我们用Flickr 和 WikiArt 上下载的风景图片,训练了一个模型。注意,与最近的“**神经网络风格迁移(neural style transfer)**” 不同,我们的方法学习的是 对整个艺术作品数据集的仿造。因此,我们可以学习 以梵高的风格生成图片,而不仅是学习 星夜(Starry Night) 这一幅画的风格。我们对 塞尚(Cezanne),莫奈(Monet),梵高(Van Gogh) 以及日本浮世绘 这每个艺术风格 都构建了一个数据集,他们的数量大小分别是 526,1073,400,563 张。

|

| 277 |

+

|

| 278 |

+

**物件变形(Object transfiguration)** 训练这个模型用来将ImageNet 上的一个类别的物件 转化为另外一个类型的物物件(每个类型包含了约1000张的训练图片)。Turmukhambetov 提出了一个子空间的模型将一类物件转变为同一类别的另外一个物件。而我们的方法侧重于两个(来自于不同类别)而视觉上相似的的物件之间的变形。

|

| 279 |

+

|

| 280 |

+

**季节转换(Season transfer)** 这个模型在 从Flickr上下载的Yosemite风景照上训练,其中包括 854张冬季(冰雪) 和 1273张 夏季的图片。

|

| 281 |

+

|

| 282 |

+

从画作中生成照片(Photo generation from paintings) 为了建立映射 画作→照片,我们发现:添加一个鼓励颜色成分保留的损失 对映射的学习有帮助。特别是采用了Taigman 的技术后,当提供目标域的真实样本 作为生成器的输入时,对生成器进行正则化以接近恒等映射(identity mapping)

|

| 283 |

+

|

| 284 |

+

\begin{align} \mathcal{L}_{GAN}(G, F) &= \mathbb{E}_{y \sim p_{data}}[||G(y)-y||_1] \\ &+ \mathbb{E}_{x \sim p_{data}}[||F(x)-x||_1] \end{align}

|

| 285 |

+

|

| 286 |

+

在不使用 \mathcal{L}_{identity} 时,生成器G与F 可以自由地改变输入图像的色调,而这是不必要的。例如,当学习莫奈的画作和Flicker 的照片时,生成器经常将白天的画作映射到 黄昏时拍的照片,因为这样的映射可能在 对抗损失和循环一致性损失 的启用下同样有效。这种恒等映射在图9 中可以看到。

|

| 287 |

+

|

| 288 |

+

|

| 289 |

+

|

| 290 |

+

恒等映射(identity mapping)

|

| 291 |

+

|

| 292 |

+

> **神经网络风格迁移 neural style transfer** L. A. Gatys. Image style transfer using convolutional neural networks. CVPR, 2016

|

| 293 |

+

>

|

| 294 |

+

> 使用 条件纹理成分分析 为物体的外观建模 D. **Turmukhambetov**. Modeling object appearance using context-conditioned component analysis. In CVPR, 2015.

|

| 295 |

+

|

| 296 |

+

图12 中,展示的是将莫奈的画作翻译成照片,图12 与 图9 显示的是 包含了训练集的结果,而本文中的其他实验,我们仅显示测试集的结果。因为训练集不包含成对的图片,所以为测试集提供合理的翻译结果是一项非凡的任务(也许可以复活莫奈让他对着相同的景色画一张?——括号内��话是我自己加的)确实,自从莫奈再也不能创作新的绘画作品后,泛化无法看到原画作的测试集并不是一个迫切的问题。(Indeed, since Monet is no longer able to create new paintings, generalization to unseen, “test set”, paintings is not a pressing problem感谢

|

| 297 |

+

|

| 298 |

+

[@LIEBE](https://www.zhihu.com/people/a078ee299d4a8f2b598c6d1d1e83b4b1)

|

| 299 |

+

|

| 300 |

+

在评论区给出的翻译)

|

| 301 |

+

|

| 302 |

+

**图片增强(Photo enhancement)** 图14 中,我们的方法可以用在生成景深较浅的照片上。我们从Flickr下载花朵的照片,用来训练模型。数据源由智能手机拍摄的花朵图片组成,因为光圈小,所以通常具有较浅的景深。目标域包含了由 拥有更大光圈的单反相机拍摄的图片。我们的模型 用智能手机拍摄的浅光圈图片,成功地生成有大光圈拍摄效果的图片。

|

| 303 |

+

|

| 304 |

+

|

| 305 |

+

|

| 306 |

+

景深增强

|

| 307 |

+

|

| 308 |

+

**与神经风格迁移相比(Comparison with Neural style transfer)** 我们与神经风格迁移在照片风格化任务上相比,我们的图片可以产生出 具备整个数据集风格 的图片。为了在整个风格数据集上,把我们的方法与神经风格迁移相比较,我们在目标域上。计算了平均Gram 矩阵,并且使用这个矩阵转移 神经风格迁移的“平均风格”。

|

| 309 |

+

|

| 310 |

+

图16 演示了在其他转移任务上 相似的比较,我们发现 神经风格迁移需要寻找一个尽可能与所需输出 相贴近的目标风格图像,但是依然无法产生足够真实的照片,而我们的方法成功地生成与目标域相似,并且比较自然的结果。

|

| 311 |

+

|

| 312 |

+

|

| 313 |

+

|

| 314 |

+

cycleGAN 与Gatys 的神经风格迁移相比(Comparison with Neural style transfer)

|

| 315 |

+

|

| 316 |

+

|

| 317 |

+

|

| 318 |

+

cycleGAN 与Gatys 的神经风格迁移相比,这个更明显

|

| 319 |

+

|

| 320 |

+

## 6. 局限与讨论

|

| 321 |

+

|

| 322 |

+

虽然我们的方法在多种案例下,取得了令人信服的结果,但是这些结果并不都是一直那么好。图17 就展示了几个典型的失败案例。在包括了涉及颜色和纹理变形的任务上,与上面的许多报告提及的一样,我们的方法经常是成功的。我们还探索了需要几何变换的任务,但是收效甚微(limit success)。举例说明:狗→猫 转换的任务,对翻译的学习 退化为对输入的图片进行最小限度的转换。这可能是由于我们对生成器结构的选择造成的,我们生成器的架构是为了在外观更改上的任务上拥有更好的性能而量身定制的。处理更多和更加极端的变化,尤其是几何变换,是未来工作的重点问题。

|

| 323 |

+

|

| 324 |

+

训练集的分布特征也会造成一些案例的失败。例如,我们的方法在转换 马→斑马 的时候发生了错乱,因为我们的模型只在ImageNet 上训练了 野马和斑马 这两个类别,而没有包括人类骑马的图片。所以普京骑马的那一张,把普京变成斑马人了。

|

| 325 |

+

|

| 326 |

+

|

| 327 |

+

|

| 328 |

+

把普京变成斑马人,等

|

| 329 |

+

|

| 330 |

+

|

| 331 |

+

|

| 332 |

+

猫 → 狗 ,苹果 → 橙子 ,形状的变换不足

|

| 333 |

+

|

| 334 |

+

我们也发现在成对图片训练 和 非成对训练 之间存在无法消弭的差距。在一些案例里面,这个差距似乎特别难以消除,甚至不可能消除。为了消除(模型对数据理解上的)歧义,模型可能需要一些弱语义监督。集成的弱监督或者半监督数据也许能够造就更有力的翻译器,这些数据依然只会占完全监督系统中的一小部分。

|

| 335 |

+

|

| 336 |

+

尽管如此,在多种情况下,完全使用不成对的数据依然是足够可行的,我们应该使用。这篇论文拓展了“无监督”配置可能使用范围。

|

| 337 |

+

|

| 338 |

+

> 论文原文中的部分图表,我没有给出

|

| 339 |

+

> **致谢部分,我用的是谷歌翻译2018-10-25 16:27:43**

|

| 340 |

+

> **第七节的附录,我用的是谷歌翻译2018-10-25 16:27:43**

|

| 341 |

+

|

| 342 |

+

**!!!下面都是谷歌翻译!!!**

|

| 343 |

+

|

| 344 |

+

**致谢:**我们感谢Aaron Hertzmann,Shiry Ginosar,Deepak Pathak,Bryan Russell,Eli Shechtman,Richard Zhang和Tinghui Zhou的许多有益评论。这项工作部分得到了NSF SMA1514512,NSF IIS-1633310,Google Research Award,Intel Corp以及NVIDIA的硬件捐赠的支持。JYZ由Facebook Graduate Fellowship支持,TP由三星奖学金支持。用于风格转移的照片由AE拍摄,主要在法国拍摄。

|

| 345 |

+

|

| 346 |

+

## **7.附录7.1。**

|

| 347 |

+

|

| 348 |

+

训练细节所有网络(边缘除外)均从头开始训练,学习率为0.0002。在实践中,我们将目标除以2,同时优化D,这减慢了D学习的速率,相对于G的速率。我们保持前100个时期的相同学习速率并将速率线性衰减到零。接下来的100个时代。权重从高斯分布初始化,均值为0,标准差为0.02。

|

| 349 |

+

|

| 350 |

+

Cityscapes标签↔Photo2975训练图像来自Cityscapes训练集[4],��像大小为128×128。我们使用Cityscapes val集进行测试。

|

| 351 |

+

|

| 352 |

+

Maps↔aerial照片1096个训练图像是从谷歌地图[22]中删除的,图像大小为256×256。图像来自纽约市内及周边地区。然后将数据分成火车并测试采样区域的中位数纬度(添加缓冲区以确保测试集中没有出现训练像素)。建筑立面标签照片来自CMP Facade数据库的400张训练图像[40]。边缘→鞋子来自UT Zappos50K数据集的大约50,000个训练图像[60]。该模型经过5个时期的训练。

|

| 353 |

+

|

| 354 |

+

Horse↔Zebra和Apple↔Orange我们使用关键字“野马”,“斑马”,“苹果”和“脐橙”从ImageNet [5]下载图像。图像缩放为256×256像素。每个班级的训练集大小为马:939,斑马:1177,苹果:996,橙色:1020.

|

| 355 |

+

|

| 356 |

+

Summer↔WinterYosemite使用带有标签yosemite和datetaken字段的Flickr API下载图像。修剪了黑白照片。图像缩放为256×256像素。每个班级的培训规模为夏季:1273,冬季:854。照片艺术风格转移艺术图像从[http://Wikiart.org](http://Wikiart.org)下载。一些素描或**过于淫秽的艺术品**都是手工修剪过的。这些照片是使用标签横向和横向摄影的组合从Flickr下载的。黑白照片被修剪。图像缩放为256×256像素。每个班级的训练集大小为Monet:1074,Cezanne:584,Van Gogh:401,Ukiyo-e:1433,照片:6853。Monet数据集被特别修剪为仅包括风景画,而Van Gogh仅包括他的后期作品代表了他最知名的艺术风格。

|

| 357 |

+

|

| 358 |

+

Photo↔Art风格转移艺术图像从[http://Wikiart.org](http://Wikiart.org)下载。一些素描或过于淫秽的艺术品都是手工修剪过的。这些照片是使用标签横向和横向摄影的组合从Flickr下载的。黑白照片被修剪。图像缩放为256×256像素。每个班级的训练集大小为Monet:1074,Cezanne:584,Van Gogh:401,Ukiyo-e:1433,照片:6853。

|

| 359 |

+

|

| 360 |

+

Monet数据集被特别修剪为仅包括风景画,而Van Gogh仅包括他的后期作品代表了他最知名的艺术风格。莫奈的画作→照片为了在保存记忆的同时实现高分辨率,我们使用矩形图像的随机方形作物进行训练。为了生成结果,我们将具有正确宽高比的宽度为512像素的图像作为输入传递给生成器网络。身份映射损失的权重为0.5λ,其中λ是周期一致性损失的权重,我们设置λ= 10.

|

| 361 |

+

|

| 362 |

+

花卉照片增强智能手机拍摄的花卉图像是通过搜索Apple iPhone 5拍摄的照片从Flickr下载的,5s或6,搜索文本花。具有浅DoF的DSLR图像也通过搜索标签flower,dof从Flickr下载。将图像按比例缩放到360像素宽。使用重量0.5λ的同一性映射损失。智能手机和DSLR数据集的训练集大小分别为1813和3326。

|

| 363 |

+

|

| 364 |

+

7.2。网络架构

|

| 365 |

+

|

| 366 |

+

我们提供PyTorch和Torch实现。

|

| 367 |

+

|

| 368 |

+

**发电机架构** 我们采用Johnson等人的架构。[23]。我们使用6个块用于128×128个训练图像,9个块用于256×256或更高分辨率的训练图像。下面,我们遵循Johnson等人的Github存储库中使用的命名约定。

|

| 369 |

+

|

| 370 |

+

令c7s1-k表示具有k个滤波器和步幅1的7×7Convolution-InstanceNormReLU层.dk表示具有k个滤波器和步幅2的3×3卷积 - 实例范数 - ReLU层。反射填充用于减少伪像。Rk表示包含两个3×3卷积层的残余块,在两个层上具有相同数量的滤波器。uk表示具有k个滤波器和步幅1 2的3×3分数跨度-ConvolutionInstanceNorm-ReLU层。

|

| 371 |

+

|

| 372 |

+

具有6个块的网络包括:c7s1-32,d64,d128,R128,R128,R128,R128,R128,R128,u64,u32,c7s1-3

|

| 373 |

+

|

| 374 |

+

具有9个块的网络包括:c7s1-32,d64,d128,R128,R128,R128,R128,R128,R128,R128,R128,R128,u64 u32,c7s1-3

|

| 375 |

+

|

| 376 |

+

**鉴别器架构** 对于鉴别器网络,我们使用70×70 PatchGAN [22]。设Ck表示具有k个滤波器和步幅2的4×4卷积 - 实例范数 - LeakyReLU层。在最后一层之后,我们应用卷积来产生1维输出。我们不将InstanceNorm用于第一个C64层。我们使用泄漏的ReLU,斜率为0.2。鉴别器架构是:C64-C128-C256-C512

|

| 377 |

+

|

| 378 |

+

## **欢迎讨论 ^_^**

|

| 379 |

+

|

| 380 |

+

## 对评论区的回复:

|

| 381 |

+

|

| 382 |

+

- 几个关于翻译的建议

|

| 383 |

+

- 如猫狗转换这种一对多的转换,有其他模型能学到吗?有,但是受应用场景限制

|

| 384 |

+

- CycleGAN如何保证不发生交叉映射?在满足双射(bijections)的情况下,保持循环一致

|

| 385 |

+

|

| 386 |

+

> [@LIEBE](https://www.zhihu.com/people/a078ee299d4a8f2b598c6d1d1e83b4b1)

|

| 387 |

+

>

|

| 388 |

+

>

|

| 389 |

+

> 这里有几个小小的(关于翻译的)建议,不知道我理解得正不正确:

|

| 390 |

+

|

| 391 |

+

采用并在正文改正:upper bound:天花板×,上限√。作者 写**光圈小,景深深(大)**是正确的,谢谢提醒,我自己弄错了。

|

| 392 |

+

|

| 393 |

+

1.translation可以翻成转化?(“图像间的转化”读起来更直白一点?)我坚持把translation直接翻译成「翻译」,理由如下:论文原文有“a sentence from English to French, and then translate it back from French to English”, CycleGAN 的灵感是从「**语言翻译**」中来的,**英语→法语→英语,图像域A→图像域B→图像域A**。而[Pix2Pix - Image-to-Image Translation with Conditional Adversarial Networks - 2018](https://zhuanlan.zhihu.com/p/45394148/h%3Cb%3Ettps://arxiv.org/pdf/1611.%3C/b%3E07004.pdf)的论文原文也有“. Just as a concept may be expressed in either **English or French**, a scene may be rendered as an RGB image, ... ...”,因此我没有翻成**转化**。

|

| 394 |

+

|

| 395 |

+

4.第5.1节 的评估那里是还没翻译完吗?考不考虑手动翻译一遍7.附录呢?不打算翻译,我认为这部分内容不看不会影响我们对CycleGAN的理解。

|

| 396 |

+

|

| 397 |

+

> [@小厮](https://www.zhihu.com/people/071d206346dc5dcf22339f44bfd324c2)

|

| 398 |

+

>

|

| 399 |

+

> 2019-08-14

|

| 400 |

+

> cyclegan的话,更强调循环一致,猫狗转换这种,对于一只猫转换为一只狗,一只狗转换为一只猫,存在很多**1-多的关系**。因为不存在明显一一对应关系,那么目标就变成学习一个足够真实(和源分布尽可能相似)的分布。**对于猫狗转换这种,别的gan可以学习学到嘛?**

|

| 401 |

+

|

| 402 |

+

我的回答:有,但是受应用场景限制。例如:

|

| 403 |

+

|

| 404 |

+

小样本无监督图片翻译 [Few-Shot Unsupervised Image-to-Image Translation - 英伟达 2019-05 论文pdf](https://arxiv.org/pdf/1905.01723.pdf) (从视频上看,做的非常好,但是我还没有机会去复现它)

|

| 405 |

+

|

| 406 |

+

知乎相关介绍 [英伟达最新图像转换器火了!万物皆可换脸,试玩开放 - 新智元](https://zhuanlan.zhihu.com/p/65297812)

|

| 407 |

+

|

| 408 |

+

|

| 409 |

+

|

| 410 |

+

让各种食物变成炒面,仔细观察可以看到图片翻译前后,其结构是高度对应的

|

| 411 |

+

|

| 412 |

+

|

| 413 |

+

|

| 414 |

+

让狗变成其他动物,上面是以上是张嘴、歪头的动图

|

| 415 |

+

|

| 416 |

+

另一个,人类的转换,人脸到人脸转换,有 DeepFace等开源工具可以实现。人脸到其他脸,有它可以实现→ ,论文 [Landmark Assisted CycleGAN for Cartoon Face Generation - 香港中文 - 贾佳亚](https://arxiv.org/abs/1907.01424) 。

|

| 417 |

+

|

| 418 |

+

知乎相关介绍: [用于卡通人脸生成的关键点辅助CycleGAN](https://zhuanlan.zhihu.com/p/73995207)

|

| 419 |

+

|

| 420 |

+

|

| 421 |

+

|

| 422 |

+

> [@信息门下走狗](https://www.zhihu.com/people/d67ee185c975baea76cf8d401876f9d3)

|

| 423 |

+

>

|

| 424 |

+

> 2018-11-02

|

| 425 |

+

> 极端的例子,假定就是马和斑马的变换,马与斑马都分两种姿态,一类是站着,一类是趴着,那么对于系统设计的代价函数而言,我完全可以把所有站着的马都映射为趴着的斑马,趴着的马变成站着的斑马,然后逆向映射把趴着的斑马映射回站着的马。这个映射的代价函数和正常保持马的姿态的映射是一样的。那么系统是如何保证**不发生这种交叉映射**的呢

|

| 426 |

+

|

| 427 |

+

我的回答:在满足双射(bijections)的情况下,保持循环一致性。

|

| 428 |

+

|

| 429 |

+

文章第二节的**Cycle Consitency 循环一致性**提到:加入循环一致性损失 (cycle consistency loss),可以保证了映射的正确,在语言处理领域也是如此。

|

| 430 |

+

文章第一节的介绍部分,提到:从数学上讲,如果我们有一个翻译器 G : X → Y 与另一个翻译器 F : Y → X ,那么 G 与 F 彼此是相反的,这一对映射是双射(bijections) 。系统通过**Cycle Consitency 循环一致性**,保证不发生**趴着的野马**,**站着的斑马**这种交叉映射,从数学上讲,原理是[双射(wikipedia: bijections)](https://en.wikipedia.org/wiki/Bijection),即参与映射的两个集合,里面的元素必然是一一对应的。

|

| 431 |

+

|

| 432 |

+

野马集合 斑马集合

|

| 433 |

+

|

| 434 |

+

- 站姿1的野马 ⇋ 站姿1的斑马

|

| 435 |

+

- 站姿2的野马 ⇋ 站姿2的斑马

|

| 436 |

+

- ...

|

| 437 |

+

- 跪姿1的野马 ⇋ 跪姿1的斑马

|

| 438 |

+

- ...

|

| 439 |

+

|

| 440 |

+

↑符合循环一致性的双射

|

| 441 |

+

|

| 442 |

+

假如在某一次随机重启后,cycleGAN恰好学到了 站姿⇋跪姿 的转化,我们**不期望的交叉映射**发生了,有如下:

|

| 443 |

+

|

| 444 |

+

野马集合 斑马集合

|

| 445 |

+

|

| 446 |

+

- 站姿1的野马 ⇋ 跪姿1的斑马

|

| 447 |

+

- 站姿2的野马 ⇋ 跪姿2的斑马

|

| 448 |

+

- ...

|

| 449 |

+

- 跪姿1的野马 ⇋ 站姿1的斑马

|

| 450 |

+

- ...

|

| 451 |

+

|

| 452 |

+

其实经过分析,我们会发现**无法证明 对于站姿n,有稳定的跪姿n 与其一一对应**,**所以这种交叉映射是不符合双射的。**不符合双射的两个映射,不满足**循环一致性**。然而,由于野马斑马外形差别不大,导致站姿n的野马 到 站姿n斑马 总是存在稳定的一一对应的姿态可以相互转换,所以,可以预见到,在执行图片到图片的翻译任务时,当训练到收敛的时候,循环稳定性可以得到正确的结果,避免交叉映射。

|

| 453 |

+

|

| 454 |

+

根据文章中提到的:使用cycleGAN 可以进行 野马 ⇋斑马 的转换,但是无法转化形态差异比较大的 猫 ⇋ 狗。 因为从 猫 ⇋ 狗 之间的转化,**不能严格满足 双射 的要求**,当存在许多差异明显的猫狗时,因为循环一致性并不能指导模型,导致��多的交叉映射破坏循环一致性,从而无法训练得到满意的模型。

|

| 455 |

+

|

| 456 |

+

|

| 457 |

+

|

| 458 |

+

文章中提及的 失败案例(形态差异大的) 猫 ⇋ 狗

|

| 459 |

+

|

| 460 |

+

所以,我的结论:

|

| 461 |

+

|

| 462 |

+

- 野马 ⇋ 斑马:满足双射,可以避免交叉映射,符合循环一致性,训练效果好

|

| 463 |

+

- 猫 ⇋ 狗:不能严格满足双射,无法完全避免交叉映射,破坏了循环一致性,训练效果不佳

|

| 464 |

+

|

| 465 |

+

cyclegan的话,更强调循环一致,猫狗转换这种,对于一只猫转换为一只狗,一只狗转换为一只猫,存在很多1-多的关系。因为不存在明显一一对应关系,那么目标就变成学习一个足够真实(和源分布尽可能相似)的分布。对于猫狗转换这种,别的gan可以学习学到嘛?

|

app.py

ADDED

|

@@ -0,0 +1,97 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

实现web界面

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

|

| 7 |

+

import gradio as gr

|

| 8 |

+

from detect import detect

|

| 9 |

+

from util import get_all_weights

|

| 10 |

+

|

| 11 |

+

app_introduce = """

|

| 12 |

+

# CycleGAN

|

| 13 |

+

功能:上传本地文件、选择转换风格

|

| 14 |

+

"""

|

| 15 |

+

|

| 16 |

+

css = """

|

| 17 |

+

# :root{

|

| 18 |

+

# --block-background-fill: #f3f3f3;

|

| 19 |

+

# }

|

| 20 |

+

footer{

|

| 21 |

+

display:none!important;

|

| 22 |

+

}

|

| 23 |

+

"""

|

| 24 |

+

|

| 25 |

+

demo = gr.Blocks(

|

| 26 |

+

# theme=gr.themes.Soft(),

|

| 27 |

+

css=css

|

| 28 |

+

)

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

def add_img(img):

|

| 32 |

+

imgs = []

|

| 33 |

+

for style in get_all_weights():

|

| 34 |

+

fake_img = detect(img, style=style)

|

| 35 |

+

imgs.append(fake_img)

|

| 36 |

+

return imgs

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

def tab1(label="上传单张图片"):

|

| 40 |

+

default_img_paths = [

|

| 41 |

+

[Path.cwd().joinpath("./imgs/horse.jpg"), "horse2zebra"],

|

| 42 |

+

[Path.cwd().joinpath("./imgs/monet.jpg"), "monet2photo"],

|

| 43 |

+

]

|

| 44 |

+

|

| 45 |

+

with gr.Tab(label):

|

| 46 |

+

with gr.Row():

|

| 47 |

+

with gr.Column():

|

| 48 |

+

img = gr.Image(type="pil", label="选择需要进行风格转换的图片")

|

| 49 |

+

style = gr.Dropdown(choices=get_all_weights(), label="转换的风格")

|

| 50 |

+

detect_btn = gr.Button("♻️风格转换")

|

| 51 |

+

with gr.Column():

|

| 52 |

+

out_img = gr.Image(label="风格图")

|

| 53 |

+

detect_btn.click(fn=detect, inputs=[img, style], outputs=[out_img])

|

| 54 |

+

gr.Examples(default_img_paths, inputs=[img, style])

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

def tab2(label="单图多风格试转换"):

|

| 58 |

+

with gr.Tab(label):

|

| 59 |

+

with gr.Row():

|

| 60 |

+

with gr.Column(scale=1):

|

| 61 |

+

img = gr.Image(type="pil", label="选择需要进行风格转换的图片")

|

| 62 |

+

gr.Markdown("上传一张图片,会将所有风格推理一遍。")

|

| 63 |

+

btn = gr.Button("♻️风格转换")

|

| 64 |

+

with gr.Column(scale=2):

|

| 65 |

+

gallery = gr.Gallery(

|

| 66 |

+

label="风格图",

|

| 67 |

+

elem_id="gallery",

|

| 68 |

+

).style(grid=[3], height="auto")

|

| 69 |

+

btn.click(fn=add_img, inputs=[img], outputs=gallery)

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

def tab3(label="参数设置"):

|

| 73 |

+

from detect import opt

|

| 74 |

+

|

| 75 |

+

with gr.Tab(label):

|

| 76 |

+

with gr.Column():

|

| 77 |

+

for k, v in sorted(vars(opt).items()):

|

| 78 |

+

if type(v) == bool:

|

| 79 |

+

gr.Checkbox(label=k, value=v)

|

| 80 |

+

elif type(v) == (int or float):

|

| 81 |

+

gr.Number(label=k, value=v)

|

| 82 |

+

elif type(v) == list:

|

| 83 |

+

gr.CheckboxGroup(label=k, value=v)

|

| 84 |

+

else:

|

| 85 |

+

gr.Textbox(label=k, value=v)

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

with demo:

|

| 89 |

+

gr.Markdown(app_introduce)

|

| 90 |

+

tab1()

|

| 91 |

+

tab2()

|

| 92 |

+

tab3()

|

| 93 |

+

if __name__ == "__main__":

|

| 94 |

+

demo.launch(share=True)

|

| 95 |

+

# 如果不以`demo`命名,`gradio app.py`会报错`Error loading ASGI app. Attribute "demo.app" not found in module "app".`

|

| 96 |

+

# 注意gradio库的reload.py的头信息 $ gradio app.py my_demo, to use variable names other than "demo"

|

| 97 |

+

# my_demo 是定义的变量。离谱o(╥﹏╥)o

|

app1.py

ADDED

|

@@ -0,0 +1,87 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 2023年2月23日

|

| 2 |

+

"""

|

| 3 |

+

实现web界面

|

| 4 |

+

|

| 5 |

+

>>> streamlit run app.py

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

from io import BytesIO

|

| 9 |

+

from pathlib import Path

|

| 10 |

+

|

| 11 |

+

import streamlit as st

|

| 12 |

+

from detect import detect, opt

|

| 13 |

+

from PIL import Image

|

| 14 |

+

from util import get_all_weights

|

| 15 |

+

|

| 16 |

+

"""

|

| 17 |

+

# CycleGAN

|

| 18 |

+

功能:上传本地文件、选择转换风格

|

| 19 |

+

"""

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def load_css(css_path="./util/streamlit/css.css"):

|

| 23 |

+

"""

|

| 24 |

+

加载CSS文件

|

| 25 |

+

:param css_path: CSS文件路径

|

| 26 |

+

"""

|

| 27 |

+

if Path(css_path).exists():

|

| 28 |

+

with open(css_path) as f:

|

| 29 |

+

# 将CSS文件内容插入到HTML中

|

| 30 |

+

st.markdown(

|

| 31 |

+

f"""<style>{f.read()}</style>""",

|

| 32 |

+

unsafe_allow_html=True,

|

| 33 |

+

)

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

def load_img_file(file):

|

| 37 |

+

"""读取图片文件"""

|

| 38 |

+

img = Image.open(BytesIO(file.read()))

|

| 39 |

+

st.image(img, use_column_width=True) # 显示图片

|

| 40 |

+

return img

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

def set_style_options(label: str, frame=st):

|

| 44 |

+

"""风格选项"""

|

| 45 |

+

style_options = get_all_weights()

|

| 46 |

+

options = [None] + style_options # 默认空

|

| 47 |

+

style_param = frame.selectbox(label=label, options=options)

|

| 48 |

+

return style_param

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

# load_css()

|

| 52 |

+

tab_mul2mul, tab_mul2one, tab_set = st.tabs(["多图多风格转换", "多图同风格转换", "参数"])

|

| 53 |

+

|

| 54 |

+

with tab_mul2mul:

|

| 55 |

+

uploaded_files = st.file_uploader(label="选择本地图片", accept_multiple_files=True, key=1)

|

| 56 |

+

if uploaded_files:

|

| 57 |

+

for idx, uploaded_file in enumerate(uploaded_files):

|

| 58 |

+

colL, colR = st.columns(2)

|

| 59 |

+

with colL:

|

| 60 |

+

img = load_img_file(uploaded_file)

|

| 61 |

+

style = set_style_options(label=str(uploaded_file), frame=st)

|

| 62 |

+

with colR:

|

| 63 |

+

if style:

|

| 64 |

+

fake_img = detect(img=img, style=style)

|

| 65 |

+

st.image(fake_img, caption="", use_column_width=True)

|

| 66 |

+

|

| 67 |

+

with tab_set:

|

| 68 |

+

colL, colR = st.columns([1, 3])

|

| 69 |

+

for k, v in sorted(vars(opt).items()):

|

| 70 |

+

st.text_input(label=k, value=v, disabled=True)

|

| 71 |

+

# st.selectbox("ss", options=opt.parse_args())

|

| 72 |

+

confidence_threshold = st.slider("Confidence threshold", 0.0, 1.0, 0.5, 0.01)

|

| 73 |

+

opt.no_dropout = st.radio("no_droput", [True, False])

|

| 74 |

+

|

| 75 |

+

with tab_mul2one:

|

| 76 |

+

uploaded_files = st.file_uploader(label="选择本地图片", accept_multiple_files=True, key=2)

|

| 77 |

+

if uploaded_files:

|

| 78 |

+

colL, colR = st.columns(2)

|

| 79 |

+

with colL:

|

| 80 |

+

imgs = [load_img_file(ii) for ii in uploaded_files]

|

| 81 |

+

with colR:

|

| 82 |

+

style = set_style_options(label="选择风格", frame=st)

|

| 83 |

+

if style:

|

| 84 |

+

if st.button("♻️风格转换", use_container_width=True):

|

| 85 |

+

for img in imgs:

|

| 86 |

+

fake_img = detect(img, style)

|

| 87 |

+

st.image(fake_img, caption="", use_column_width=True)

|

data/__init__.py

ADDED

|

@@ -0,0 +1,114 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""不同的模型使用不同的数据集

|

| 2 |

+

|

| 3 |

+

比如有监督模型使用的都是成对的训练数据、无监督模型使用的数据集不必使用成对的数据

|

| 4 |

+

This package includes all the modules related to data loading and preprocessing

|

| 5 |

+

|

| 6 |

+

To add a custom dataset class called 'dummy', you need to add a file called 'dummy_dataset.py' and define a subclass 'DummyDataset' inherited from BaseDataset.

|

| 7 |

+

You need to implement four functions:

|

| 8 |

+

-- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

|

| 9 |

+

-- <__len__>: return the size of dataset.

|

| 10 |

+

-- <__getitem__>: get a data point from data loader.

|

| 11 |

+

-- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

|

| 12 |

+

|

| 13 |

+

Now you can use the dataset class by specifying a flag '--dataset_mode dummy'.

|

| 14 |

+

See our template dataset class 'template_dataset.py' for more details.

|

| 15 |

+

"""

|

| 16 |

+

|

| 17 |

+

import pickle

|

| 18 |

+

import importlib

|

| 19 |

+

import torch.utils.data

|

| 20 |

+

from .base_dataset import BaseDataset

|

| 21 |

+

from .one_dataset import *

|

| 22 |

+

|

| 23 |

+

__all__ = [OneDataset]

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def find_dataset_by_name(dataset_name: str):

|

| 27 |

+

"""按照数据集名称来寻找所对应的dataset类进行动态导入

|

| 28 |

+

Import the module "data/[dataset_name]_dataset.py".

|

| 29 |

+

|

| 30 |

+

In the file, the class called DatasetNameDataset() will

|

| 31 |

+

be instantiated. It has to be a subclass of BaseDataset,

|

| 32 |

+

and it is case-insensitive.

|

| 33 |

+

"""

|

| 34 |

+

dataset_filename = "data." + dataset_name + "_dataset"

|

| 35 |

+

datasetlib = importlib.import_module(dataset_filename)

|

| 36 |

+

|

| 37 |

+

dataset = None

|

| 38 |

+

target_dataset_name = dataset_name.replace("_", "") + "dataset"

|

| 39 |

+

for name, cls in datasetlib.__dict__.items():

|

| 40 |

+

if name.lower() == target_dataset_name.lower() and issubclass(cls, BaseDataset):

|

| 41 |

+

dataset = cls

|

| 42 |

+

|

| 43 |

+

if dataset is None:

|

| 44 |

+

raise NotImplementedError(f"In {dataset_filename}.py, there should be a subclass of BaseDataset with class " f"name that matches {target_dataset_name} in lowercase.")

|

| 45 |

+

return dataset

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

def get_option_setter(dataset_name):

|

| 49 |

+

"""Return the static method <modify_commandline_options> of the dataset class."""

|

| 50 |

+

dataset_class = find_dataset_by_name(dataset_name)

|

| 51 |

+

return dataset_class.modify_commandline_options

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def create_dataset(opt):

|

| 55 |

+

"""Create a dataset given the option.

|

| 56 |

+

|

| 57 |

+

This function wraps the class CustomDatasetDataLoader.

|

| 58 |

+

This is the main interface between this package and 'train.py'/'test.py'

|

| 59 |

+

|

| 60 |

+

Example:

|

| 61 |

+

>>> from data import create_dataset

|

| 62 |

+

>>> dataset = create_dataset(opt)

|

| 63 |

+

"""

|

| 64 |

+

data_loader = CustomDatasetDataLoader(opt)

|

| 65 |

+

dataset = data_loader.load_data()

|

| 66 |

+

return dataset

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

class CustomDatasetDataLoader:

|

| 70 |

+

"""Wrapper class of Dataset class that performs multi-threading data loading"""

|

| 71 |

+

|

| 72 |

+

def __init__(self, opt):

|

| 73 |

+

"""Initialize this class

|

| 74 |

+

|

| 75 |

+

Step 1: create a dataset instance given the name [dataset_mode]

|

| 76 |

+

Step 2: create a multi-threading data loader.

|

| 77 |

+

"""

|

| 78 |

+

self.opt = opt

|

| 79 |

+

dataset_file = f"datasets/{opt.name}.pkl"

|

| 80 |

+

if not Path(dataset_file).exists():

|

| 81 |

+

# 判断数据集类型(成对/不成对),得到相应的类包

|

| 82 |

+

dataset_class = find_dataset_by_name(opt.dataset_mode)

|

| 83 |

+

# 传入数据集路径到类包中,得到数据集

|

| 84 |

+

self.dataset = dataset_class(opt)

|

| 85 |

+

# 打包下次直接使用

|

| 86 |

+

# 打包后文件也很大,暂时就这样

|

| 87 |

+

print("pickle dump dataset...")

|

| 88 |

+

pickle.dump(self.dataset, open(dataset_file, 'wb'))

|

| 89 |

+

else:

|

| 90 |

+

print("pickle load dataset...")

|

| 91 |

+

self.dataset = pickle.load(open(dataset_file, 'rb'))

|

| 92 |

+

print("dataset [%s] was created" % type(self.dataset).__name__)

|

| 93 |

+

|

| 94 |

+

self.dataloader = torch.utils.data.DataLoader(

|

| 95 |

+

self.dataset,

|

| 96 |

+

batch_size=opt.batch_size,

|

| 97 |

+

shuffle=not opt.serial_batches,

|

| 98 |

+

num_workers=int(opt.num_threads),

|

| 99 |

+

)

|

| 100 |

+

|

| 101 |

+

def load_data(self):

|

| 102 |

+

print(f"The number of training images = {len(self)}")

|

| 103 |

+

return self

|

| 104 |

+

|

| 105 |

+

def __iter__(self):

|

| 106 |

+

"""Return a batch of data"""

|

| 107 |

+

for i, data in enumerate(self.dataloader):

|

| 108 |

+

if i * self.opt.batch_size >= self.opt.max_dataset_size:

|

| 109 |

+

break

|

| 110 |

+

yield data

|

| 111 |

+

|

| 112 |

+

def __len__(self):

|

| 113 |

+

"""Return the number of data in the dataset"""

|

| 114 |

+

return min(len(self.dataset), self.opt.max_dataset_size)

|

data/base_dataset.py

ADDED

|

@@ -0,0 +1,231 @@

|