Upload 82 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +4 -0

- chains/__pycache__/local_doc_qa.cpython-310.pyc +0 -0

- chains/dialogue_answering/__init__.py +7 -0

- chains/dialogue_answering/__main__.py +36 -0

- chains/dialogue_answering/base.py +99 -0

- chains/dialogue_answering/prompts.py +22 -0

- chains/local_doc_qa.py +347 -0

- chains/text_load.py +52 -0

- configs/__pycache__/model_config.cpython-310.pyc +0 -0

- configs/model_config - 副本.py +269 -0

- configs/model_config.py +202 -0

- docs/API.md +1042 -0

- docs/CHANGELOG.md +32 -0

- docs/FAQ.md +179 -0

- docs/INSTALL.md +55 -0

- docs/Issue-with-Installing-Packages-Using-pip-in-Anaconda.md +114 -0

- docs/StartOption.md +76 -0

- docs/cli.md +49 -0

- docs/fastchat.md +24 -0

- docs/启动API服务.md +37 -0

- docs/在Anaconda中使用pip安装包无效问题.md +125 -0

- flagged/component 2/tmp1x130c0q.json +1 -0

- flagged/log.csv +2 -0

- img/docker_logs.png +0 -0

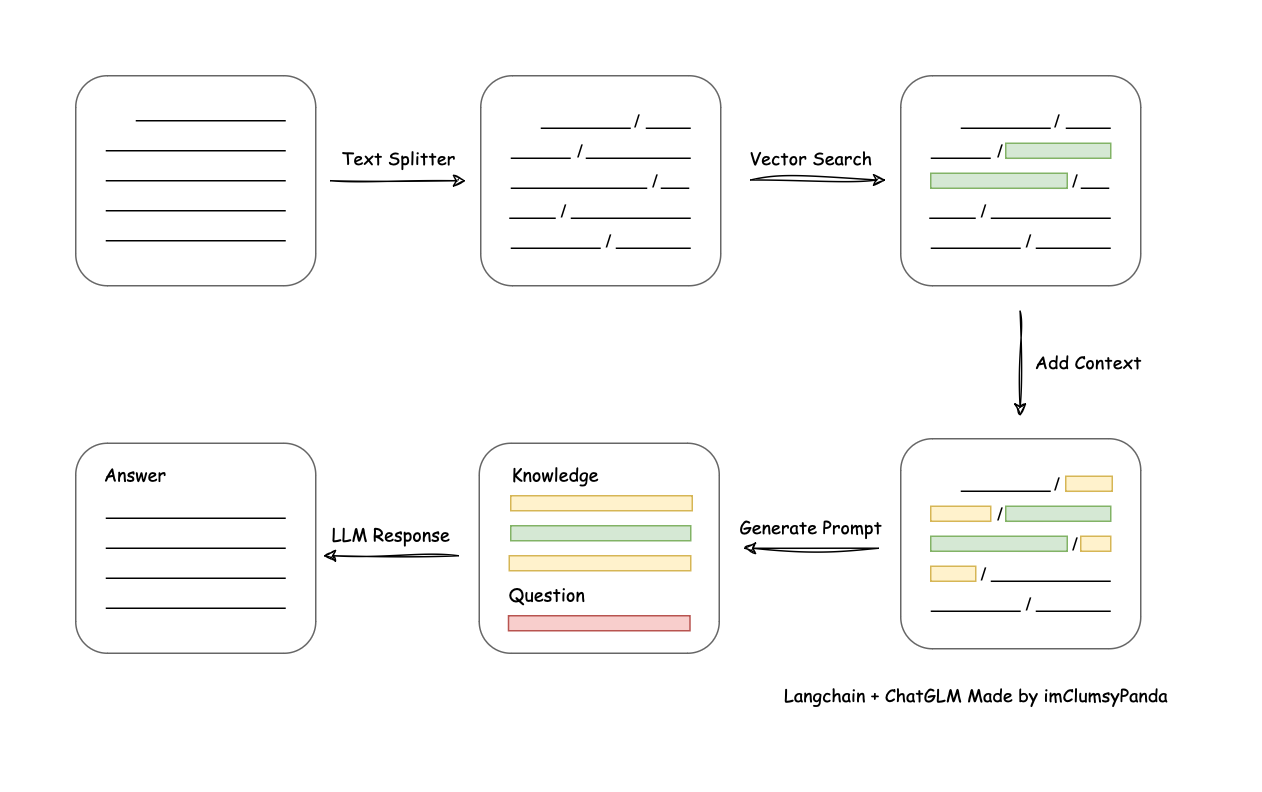

- img/langchain+chatglm.png +3 -0

- img/langchain+chatglm2.png +0 -0

- img/qr_code_43.jpg +0 -0

- img/qr_code_44.jpg +0 -0

- img/qr_code_45.jpg +0 -0

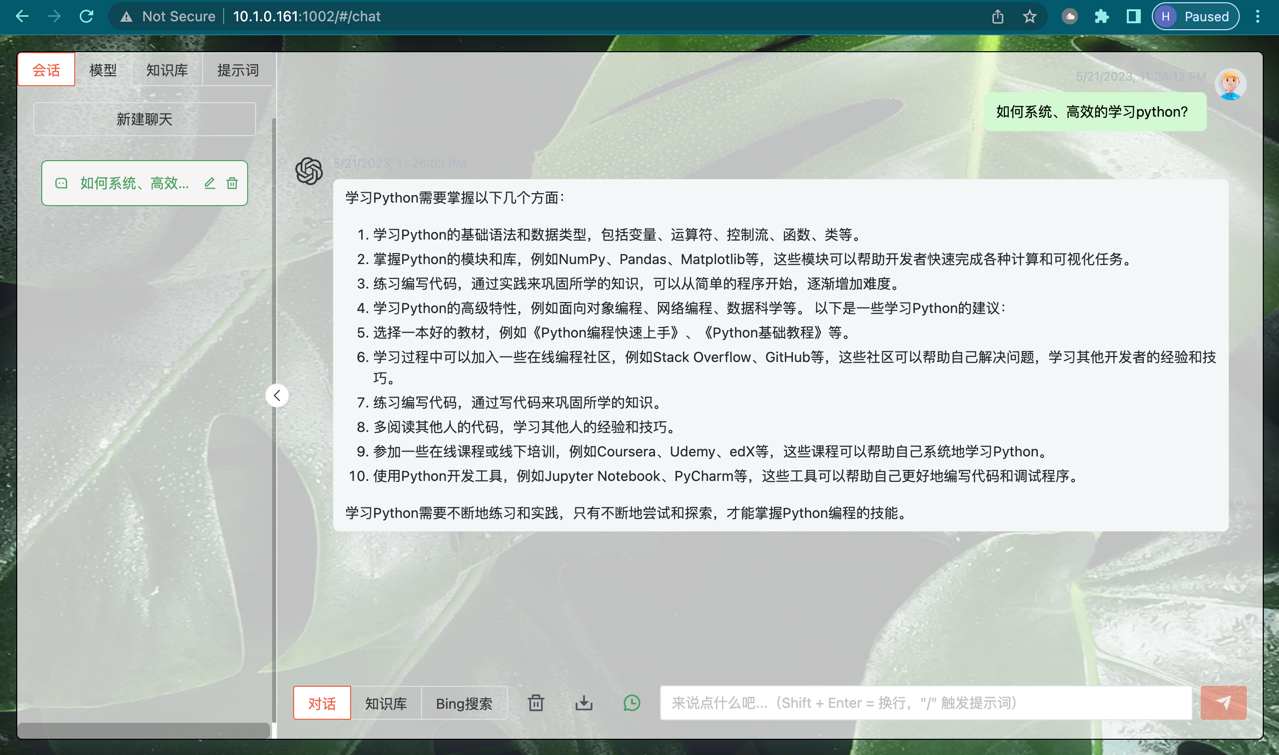

- img/vue_0521_0.png +0 -0

- img/vue_0521_1.png +3 -0

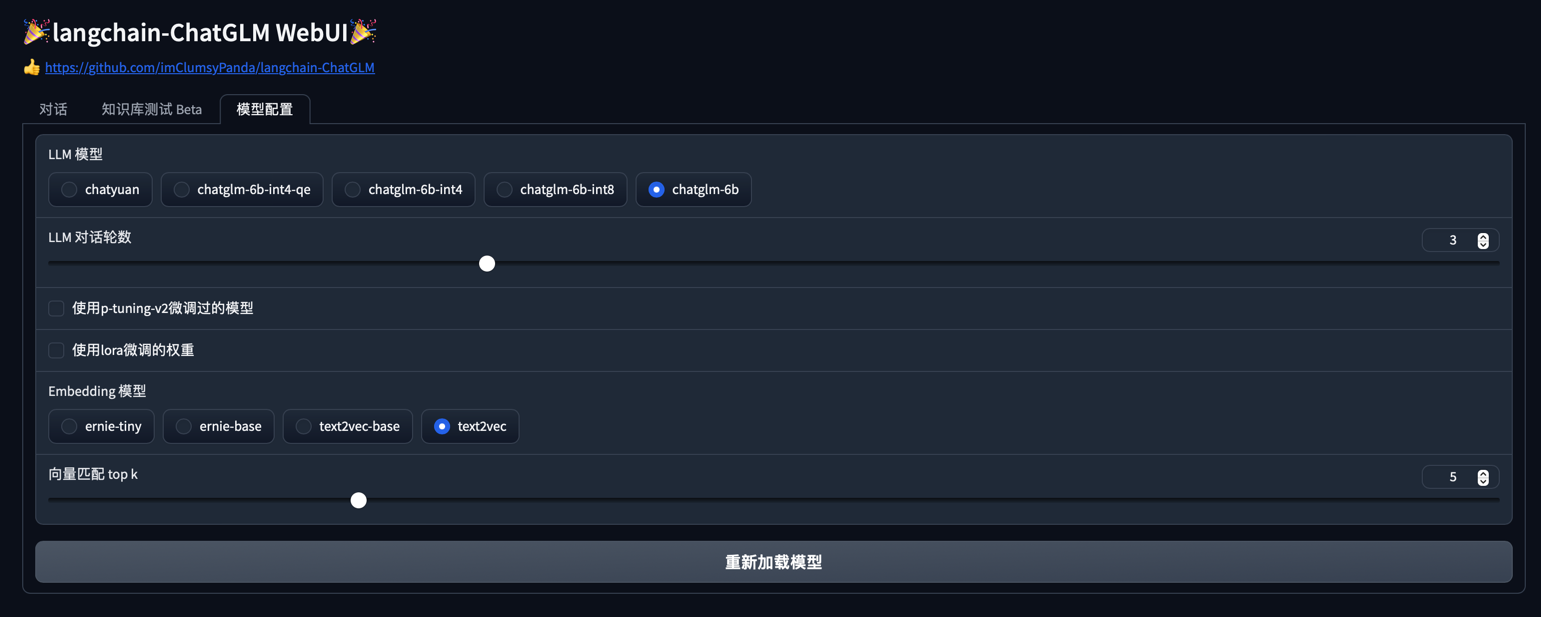

- img/vue_0521_2.png +3 -0

- img/webui_0419.png +0 -0

- img/webui_0510_0.png +0 -0

- img/webui_0510_1.png +0 -0

- img/webui_0510_2.png +0 -0

- img/webui_0521_0.png +0 -0

- knowledge_base/samples/content/README.md +212 -0

- knowledge_base/samples/content/test.jpg +0 -0

- knowledge_base/samples/content/test.pdf +0 -0

- knowledge_base/samples/content/test.txt +835 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_closed.csv +173 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_closed.jsonl +172 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_closed.xlsx +0 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_open.csv +324 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_open.jsonl +323 -0

- knowledge_base/samples/isssues_merge/langchain-ChatGLM_open.xlsx +0 -0

- knowledge_base/samples/vector_store/index.faiss +3 -0

- knowledge_base/samples/vector_store/index.pkl +3 -0

- loader/RSS_loader.py +54 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+img/langchain+chatglm.png filter=lfs diff=lfs merge=lfs -text
+img/vue_0521_1.png filter=lfs diff=lfs merge=lfs -text
+img/vue_0521_2.png filter=lfs diff=lfs merge=lfs -text
+knowledge_base/samples/vector_store/index.faiss filter=lfs diff=lfs merge=lfs -text
```

chains/__pycache__/local_doc_qa.cpython-310.pyc

ADDED

Binary file (11.3 kB).

chains/dialogue_answering/__init__.py

ADDED

@@ -0,0 +1,7 @@

```python
from .base import (
    DialogueWithSharedMemoryChains
)

__all__ = [
    "DialogueWithSharedMemoryChains"
]
```

chains/dialogue_answering/__main__.py

ADDED

@@ -0,0 +1,36 @@

```python
import sys
import os
import argparse
import asyncio
from argparse import Namespace

# Make the repository root importable when this package is run with `python -m`.
sys.path.append(os.path.dirname(os.path.abspath(__file__)) + '/../../')
from chains.dialogue_answering import *
from langchain.llms import OpenAI
from models.base import (BaseAnswer,
                         AnswerResult)
import models.shared as shared
from models.loader.args import parser
from models.loader import LoaderCheckPoint


async def dispatch(args: Namespace):

    args_dict = vars(args)
    # Load the local LLM checkpoint described by the command-line arguments.
    shared.loaderCheckPoint = LoaderCheckPoint(args_dict)
    llm_model_ins = shared.loaderLLM()
    if not os.path.isfile(args.dialogue_path):
        raise FileNotFoundError(f'Invalid dialogue file path for demo mode: "{args.dialogue_path}"')
    # The OpenAI model drives the zero-shot ReAct agent; the local model answers the tool queries.
    llm = OpenAI(temperature=0)
    dialogue_instance = DialogueWithSharedMemoryChains(zero_shot_react_llm=llm, ask_llm=llm_model_ins, params=args_dict)

    dialogue_instance.agent_chain.run(input="What did David say before, summarize it")


if __name__ == '__main__':

    parser.add_argument('--dialogue-path', default='', type=str, help='path to the dialogue log file')
    parser.add_argument('--embedding-model', default='', type=str, help='embedding model name or local path')
    args = parser.parse_args(['--dialogue-path', '/home/dmeck/Downloads/log.txt',
                              '--embedding-model', '/media/checkpoint/text2vec-large-chinese/'])
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    loop.run_until_complete(dispatch(args))
```
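The demo registers `--dialogue-path` and `--embedding-model` on the project's shared `parser` and then calls `parse_args` with an explicit argument list instead of reading `sys.argv`. The standalone sketch below reproduces that pattern with a fresh `argparse.ArgumentParser` (an assumption: the shared `models.loader.args.parser` carries many more options that are not shown here). It also shows that argparse's default `allow_abbrev=True` accepts an unambiguous prefix, so a shortened flag such as `--embedding-mode` still fills `embedding_model`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical stand-in for the project's shared parser; only the two demo options are registered.
    parser = argparse.ArgumentParser(description="dialogue_answering demo (sketch)")
    parser.add_argument('--dialogue-path', default='', type=str, help='path to the dialogue log file')
    parser.add_argument('--embedding-model', default='', type=str, help='embedding model name or local path')
    return parser


def parse_demo_args(argv):
    # Dashes in option names become underscores in the resulting Namespace attributes.
    return build_parser().parse_args(argv)


if __name__ == "__main__":
    full = parse_demo_args(['--dialogue-path', 'log.txt', '--embedding-model', 'text2vec'])
    # An unambiguous prefix of a long option is accepted because allow_abbrev defaults to True.
    short = parse_demo_args(['--dialogue-path', 'log.txt', '--embedding-mode', 'text2vec'])
    print(vars(full))
    print(vars(short))
```

Passing an explicit list keeps the demo reproducible, but relying on prefix matching is fragile: adding another option that starts with `--embedding-mode` would make the short form ambiguous.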

chains/dialogue_answering/base.py

ADDED

@@ -0,0 +1,99 @@

```python
from langchain.base_language import BaseLanguageModel
from langchain.agents import ZeroShotAgent, Tool, AgentExecutor
from langchain.memory import ConversationBufferMemory, ReadOnlySharedMemory
from langchain.chains import LLMChain, RetrievalQA
from langchain.embeddings.huggingface import HuggingFaceEmbeddings
from langchain.prompts import PromptTemplate
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import Chroma

from loader import DialogueLoader
from chains.dialogue_answering.prompts import (
    DIALOGUE_PREFIX,
    DIALOGUE_SUFFIX,
    SUMMARY_PROMPT
)


class DialogueWithSharedMemoryChains:
    zero_shot_react_llm: BaseLanguageModel = None
    ask_llm: BaseLanguageModel = None
    embeddings: HuggingFaceEmbeddings = None
    embedding_model: str = None
    vector_search_top_k: int = 6
    dialogue_path: str = None
    dialogue_loader: DialogueLoader = None
    device: str = None

    def __init__(self, zero_shot_react_llm: BaseLanguageModel = None, ask_llm: BaseLanguageModel = None,
                 params: dict = None):
        self.zero_shot_react_llm = zero_shot_react_llm
        self.ask_llm = ask_llm
        params = params or {}
        self.embedding_model = params.get('embedding_model', 'GanymedeNil/text2vec-large-chinese')
        self.vector_search_top_k = params.get('vector_search_top_k', 6)
        self.dialogue_path = params.get('dialogue_path', '')
        self.device = 'cuda' if params.get('use_cuda', False) else 'cpu'

        self.dialogue_loader = DialogueLoader(self.dialogue_path)
        self._init_cfg()
        self._init_state_of_history()
        self.memory_chain, self.memory = self._agents_answer()
        self.agent_chain = self._create_agent_chain()

    def _init_cfg(self):
        model_kwargs = {
            'device': self.device
        }
        self.embeddings = HuggingFaceEmbeddings(model_name=self.embedding_model, model_kwargs=model_kwargs)

    def _init_state_of_history(self):
        # Split the loaded dialogue, embed the chunks into a Chroma collection,
        # and answer questions over them with a "stuff" RetrievalQA chain.
        documents = self.dialogue_loader.load()
        text_splitter = CharacterTextSplitter(chunk_size=3, chunk_overlap=1)
        texts = text_splitter.split_documents(documents)
        docsearch = Chroma.from_documents(texts, self.embeddings, collection_name="state-of-history")
        self.state_of_history = RetrievalQA.from_chain_type(llm=self.ask_llm, chain_type="stuff",
                                                            retriever=docsearch.as_retriever())

    def _agents_answer(self):

        memory = ConversationBufferMemory(memory_key="chat_history")
        readonly_memory = ReadOnlySharedMemory(memory=memory)
        memory_chain = LLMChain(
            llm=self.ask_llm,
            prompt=SUMMARY_PROMPT,
            verbose=True,
            memory=readonly_memory,  # use the read-only memory to prevent the tool from modifying the memory
        )
        return memory_chain, memory

    def _create_agent_chain(self):
        dialogue_participants = self.dialogue_loader.dialogue.participants_to_export()
        tools = [
            Tool(
                name="State of Dialogue History System",
                func=self.state_of_history.run,
                description=f"Dialogue with {dialogue_participants} - The answers in this section are very useful "
                            f"when searching for chat content between {dialogue_participants}. Input should be a "
                            f"complete question. "
            ),
            Tool(
                name="Summary",
                func=self.memory_chain.run,
                description="useful for when you summarize a conversation. The input to this tool should be a string, "
                            "representing who will read this summary. "
            )
        ]

        prompt = ZeroShotAgent.create_prompt(
            tools,
            prefix=DIALOGUE_PREFIX,
            suffix=DIALOGUE_SUFFIX,
            input_variables=["input", "chat_history", "agent_scratchpad"]
        )

        # The agent writes to the shared memory; the Summary tool only reads it.
        llm_chain = LLMChain(llm=self.zero_shot_react_llm, prompt=prompt)
        agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
        agent_chain = AgentExecutor.from_agent_and_tools(agent=agent, tools=tools, verbose=True, memory=self.memory)

        return agent_chain
```
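`DialogueWithSharedMemoryChains` gives the agent a writable `ConversationBufferMemory` and hands the Summary tool a `ReadOnlySharedMemory` view of the same buffer, so the tool sees the chat history but cannot append to it. The plain-Python sketch below illustrates that sharing pattern without LangChain; the classes `BufferMemory` and `ReadOnlyView` are hypothetical and are not LangChain's implementation.

```python
from typing import Dict, List


class BufferMemory:
    """Hypothetical writable chat buffer keyed by `memory_key`."""

    def __init__(self, memory_key: str = "chat_history"):
        self.memory_key = memory_key
        self.lines: List[str] = []

    def load_memory_variables(self) -> Dict[str, str]:
        return {self.memory_key: "\n".join(self.lines)}

    def save_context(self, human: str, ai: str) -> None:
        self.lines.append(f"Human: {human}")
        self.lines.append(f"AI: {ai}")


class ReadOnlyView:
    """Hypothetical read-only wrapper: reads delegate to the shared buffer, writes are dropped."""

    def __init__(self, memory: BufferMemory):
        self.memory = memory

    def load_memory_variables(self) -> Dict[str, str]:
        return self.memory.load_memory_variables()

    def save_context(self, human: str, ai: str) -> None:
        # Deliberately a no-op so a tool cannot modify the agent's history.
        pass


if __name__ == "__main__":
    memory = BufferMemory()
    readonly = ReadOnlyView(memory)
    memory.save_context("What did David say?", "He asked about the release date.")
    readonly.save_context("ignored", "ignored")
    print(readonly.load_memory_variables()["chat_history"])
```

Because both objects point at the same buffer, every turn the agent records is immediately visible to the tool, while the tool's own calls leave the history untouched.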

chains/dialogue_answering/prompts.py

ADDED

@@ -0,0 +1,22 @@

```python
from langchain.prompts.prompt import PromptTemplate


SUMMARY_TEMPLATE = """This is a conversation between a human and a bot:

{chat_history}

Write a summary of the conversation for {input}:
"""

SUMMARY_PROMPT = PromptTemplate(
    input_variables=["input", "chat_history"],
    template=SUMMARY_TEMPLATE
)

DIALOGUE_PREFIX = """Have a conversation with a human. Analyze the content of the conversation.
You have access to the following tools: """
DIALOGUE_SUFFIX = """Begin!

{chat_history}
Question: {input}
{agent_scratchpad}"""
```
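`SUMMARY_PROMPT` declares two input variables, `chat_history` and `input`. As a rough illustration of the text the Summary tool sends to the model, the sketch below fills the same template string with Python's `str.format`, assuming the default f-string-style template format in which `{name}` placeholders are substituted by keyword. The sample history and audience are made up.

```python
SUMMARY_TEMPLATE = """This is a conversation between a human and a bot:

{chat_history}

Write a summary of the conversation for {input}:
"""


def render_summary_prompt(chat_history: str, audience: str) -> str:
    # Substitute both placeholders by keyword; `input` names who will read the summary.
    return SUMMARY_TEMPLATE.format(chat_history=chat_history, input=audience)


if __name__ == "__main__":
    history = "Human: What did David say before?\nAI: He proposed moving the meeting to Friday."
    print(render_summary_prompt(history, "the project manager"))
```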

chains/local_doc_qa.py

ADDED

|

@@ -0,0 +1,347 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from langchain.embeddings.huggingface import HuggingFaceEmbeddings

|

| 2 |

+

from vectorstores import MyFAISS

|

| 3 |

+

from langchain.document_loaders import UnstructuredFileLoader, TextLoader, CSVLoader

|

| 4 |

+

from configs.model_config import *

|

| 5 |

+

import datetime

|

| 6 |

+

from textsplitter import ChineseTextSplitter

|

| 7 |

+

from typing import List

|

| 8 |

+

from utils import torch_gc

|

| 9 |

+

from tqdm import tqdm

|

| 10 |

+

from pypinyin import lazy_pinyin

|

| 11 |

+

from loader import UnstructuredPaddleImageLoader, UnstructuredPaddlePDFLoader

|

| 12 |

+

from models.base import (BaseAnswer,

|

| 13 |

+

AnswerResult)

|

| 14 |

+

from models.loader.args import parser

|

| 15 |

+

from models.loader import LoaderCheckPoint

|

| 16 |

+

import models.shared as shared

|

| 17 |

+

from agent import bing_search

|

| 18 |

+

from langchain.docstore.document import Document

|

| 19 |

+

from functools import lru_cache

|

| 20 |

+

from textsplitter.zh_title_enhance import zh_title_enhance

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

# patch HuggingFaceEmbeddings to make it hashable

|

| 24 |

+

def _embeddings_hash(self):

|

| 25 |

+

return hash(self.model_name)

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

HuggingFaceEmbeddings.__hash__ = _embeddings_hash

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

# will keep CACHED_VS_NUM of vector store caches

|

| 32 |

+

@lru_cache(CACHED_VS_NUM)

|

| 33 |

+

def load_vector_store(vs_path, embeddings):

|

| 34 |

+

return MyFAISS.load_local(vs_path, embeddings)

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def tree(filepath, ignore_dir_names=None, ignore_file_names=None):

|

| 38 |

+

"""返回两个列表,第一个列表为 filepath 下全部文件的完整路径, 第二个为对应的文件名"""

|

| 39 |

+

if ignore_dir_names is None:

|

| 40 |

+

ignore_dir_names = []

|

| 41 |

+

if ignore_file_names is None:

|

| 42 |

+

ignore_file_names = []

|

| 43 |

+

ret_list = []

|

| 44 |

+

if isinstance(filepath, str):

|

| 45 |

+

if not os.path.exists(filepath):

|

| 46 |

+

print("路径不存在")

|

| 47 |

+

return None, None

|

| 48 |

+

elif os.path.isfile(filepath) and os.path.basename(filepath) not in ignore_file_names:

|

| 49 |

+

return [filepath], [os.path.basename(filepath)]

|

| 50 |

+

elif os.path.isdir(filepath) and os.path.basename(filepath) not in ignore_dir_names:

|

| 51 |

+

for file in os.listdir(filepath):

|

| 52 |

+

fullfilepath = os.path.join(filepath, file)

|

| 53 |

+

if os.path.isfile(fullfilepath) and os.path.basename(fullfilepath) not in ignore_file_names:

|

| 54 |

+

ret_list.append(fullfilepath)

|

| 55 |

+

if os.path.isdir(fullfilepath) and os.path.basename(fullfilepath) not in ignore_dir_names:

|

| 56 |

+

ret_list.extend(tree(fullfilepath, ignore_dir_names, ignore_file_names)[0])

|

| 57 |

+

return ret_list, [os.path.basename(p) for p in ret_list]

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

def load_file(filepath, sentence_size=SENTENCE_SIZE, using_zh_title_enhance=ZH_TITLE_ENHANCE):

|

| 61 |

+

if filepath.lower().endswith(".md"):

|

| 62 |

+

loader = UnstructuredFileLoader(filepath, mode="elements")

|

| 63 |

+

docs = loader.load()

|

| 64 |

+

elif filepath.lower().endswith(".txt"):

|

| 65 |

+

loader = TextLoader(filepath, autodetect_encoding=True)

|

| 66 |

+

textsplitter = ChineseTextSplitter(pdf=False, sentence_size=sentence_size)

|

| 67 |

+

docs = loader.load_and_split(textsplitter)

|

| 68 |

+

elif filepath.lower().endswith(".pdf"):

|

| 69 |

+

loader = UnstructuredPaddlePDFLoader(filepath)

|

| 70 |

+

textsplitter = ChineseTextSplitter(pdf=True, sentence_size=sentence_size)

|

| 71 |

+

docs = loader.load_and_split(textsplitter)

|

| 72 |

+

elif filepath.lower().endswith(".jpg") or filepath.lower().endswith(".png"):

|

| 73 |

+

loader = UnstructuredPaddleImageLoader(filepath, mode="elements")

|

| 74 |

+

textsplitter = ChineseTextSplitter(pdf=False, sentence_size=sentence_size)

|

| 75 |

+

docs = loader.load_and_split(text_splitter=textsplitter)

|

| 76 |

+

elif filepath.lower().endswith(".csv"):

|

| 77 |

+

loader = CSVLoader(filepath)

|

| 78 |

+

docs = loader.load()

|

| 79 |

+

else:

|

| 80 |

+

loader = UnstructuredFileLoader(filepath, mode="elements")

|

| 81 |

+

textsplitter = ChineseTextSplitter(pdf=False, sentence_size=sentence_size)

|

| 82 |

+

docs = loader.load_and_split(text_splitter=textsplitter)

|

| 83 |

+

if using_zh_title_enhance:

|

| 84 |

+

docs = zh_title_enhance(docs)

|

| 85 |

+

write_check_file(filepath, docs)

|

| 86 |

+

return docs

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

def write_check_file(filepath, docs):

|

| 90 |

+

folder_path = os.path.join(os.path.dirname(filepath), "tmp_files")

|

| 91 |

+

if not os.path.exists(folder_path):

|

| 92 |

+

os.makedirs(folder_path)

|

| 93 |

+

fp = os.path.join(folder_path, 'load_file.txt')

|

| 94 |

+

with open(fp, 'a+', encoding='utf-8') as fout:

|

| 95 |

+

fout.write("filepath=%s,len=%s" % (filepath, len(docs)))

|

| 96 |

+

fout.write('\n')

|

| 97 |

+

for i in docs:

|

| 98 |

+

fout.write(str(i))

|

| 99 |

+

fout.write('\n')

|

| 100 |

+

fout.close()

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

def generate_prompt(related_docs: List[str],

|

| 104 |

+

query: str,

|

| 105 |

+

prompt_template: str = PROMPT_TEMPLATE, ) -> str:

|

| 106 |

+

context = "\n".join([doc.page_content for doc in related_docs])

|

| 107 |

+

prompt = prompt_template.replace("{question}", query).replace("{context}", context)

|

| 108 |

+

return prompt

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

def search_result2docs(search_results):

|

| 112 |

+

docs = []

|

| 113 |

+

for result in search_results:

|

| 114 |

+

doc = Document(page_content=result["snippet"] if "snippet" in result.keys() else "",

|

| 115 |

+

metadata={"source": result["link"] if "link" in result.keys() else "",

|

| 116 |

+

"filename": result["title"] if "title" in result.keys() else ""})

|

| 117 |

+

docs.append(doc)

|

| 118 |

+

return docs

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

class LocalDocQA:

|

| 122 |

+

llm: BaseAnswer = None

|

| 123 |

+

embeddings: object = None

|

| 124 |

+

top_k: int = VECTOR_SEARCH_TOP_K

|

| 125 |

+

chunk_size: int = CHUNK_SIZE

|

| 126 |

+

chunk_conent: bool = True

|

| 127 |

+

score_threshold: int = VECTOR_SEARCH_SCORE_THRESHOLD

|

| 128 |

+

|

| 129 |

+

def init_cfg(self,

|

| 130 |

+

embedding_model: str = EMBEDDING_MODEL,

|

| 131 |

+

embedding_device=EMBEDDING_DEVICE,

|

| 132 |

+

llm_model: BaseAnswer = None,

|

| 133 |

+

top_k=VECTOR_SEARCH_TOP_K,

|

| 134 |

+

):

|

| 135 |

+

self.llm = llm_model

|

| 136 |

+

self.embeddings = HuggingFaceEmbeddings(model_name="C:/Users/Administrator/text2vec-large-chinese",

|

| 137 |

+

model_kwargs={'device': embedding_device})

|

| 138 |

+

# self.embeddings = HuggingFaceEmbeddings(model_name=embedding_model_dict[embedding_model],

|

| 139 |

+

# model_kwargs={'device': embedding_device})

|

| 140 |

+

|

| 141 |

+

self.top_k = top_k

|

| 142 |

+

|

| 143 |

+

def init_knowledge_vector_store(self,

|

| 144 |

+

filepath: str or List[str],

|

| 145 |

+

vs_path: str or os.PathLike = None,

|

| 146 |

+

sentence_size=SENTENCE_SIZE):

|

| 147 |

+

loaded_files = []

|

| 148 |

+

failed_files = []

|

| 149 |

+

if isinstance(filepath, str):

|

| 150 |

+

if not os.path.exists(filepath):

|

| 151 |

+

print("路径不存在")

|

| 152 |

+

return None

|

| 153 |

+

elif os.path.isfile(filepath):

|

| 154 |

+

file = os.path.split(filepath)[-1]

|

| 155 |

+

try:

|

| 156 |

+

docs = load_file(filepath, sentence_size)

|

| 157 |

+

logger.info(f"{file} 已成功加载")

|

| 158 |

+

loaded_files.append(filepath)

|

| 159 |

+

except Exception as e:

|

| 160 |

+

logger.error(e)

|

| 161 |

+

logger.info(f"{file} 未能成功加载")

|

| 162 |

+

return None

|

| 163 |

+

elif os.path.isdir(filepath):

|

| 164 |

+

docs = []

|

| 165 |

+

for fullfilepath, file in tqdm(zip(*tree(filepath, ignore_dir_names=['tmp_files'])), desc="加载文件"):

|

| 166 |

+

try:

|

| 167 |

+

docs += load_file(fullfilepath, sentence_size)

|

| 168 |

+

loaded_files.append(fullfilepath)

|

| 169 |

+

except Exception as e:

|

| 170 |

+

logger.error(e)

|

| 171 |

+

failed_files.append(file)

|

| 172 |

+

|

| 173 |

+

if len(failed_files) > 0:

|

| 174 |

+

logger.info("以下文件未能成功加载:")

|

| 175 |

+

for file in failed_files:

|

| 176 |

+

logger.info(f"{file}\n")

|

| 177 |

+

|

| 178 |

+

else:

|

| 179 |

+

docs = []

|

| 180 |

+

for file in filepath:

|

| 181 |

+

try:

|

| 182 |

+

docs += load_file(file)

|

| 183 |

+

logger.info(f"{file} 已成功加载")

|

| 184 |

+

loaded_files.append(file)

|

| 185 |

+

except Exception as e:

|

| 186 |

+

logger.error(e)

|

| 187 |

+

logger.info(f"{file} 未能成功加载")

|

| 188 |

+

if len(docs) > 0:

|

| 189 |

+

logger.info("文件加载完毕,正在生成向量库")

|

| 190 |

+

if vs_path and os.path.isdir(vs_path) and "index.faiss" in os.listdir(vs_path):

|

| 191 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 192 |

+

vector_store.add_documents(docs)

|

| 193 |

+

torch_gc()

|

| 194 |

+

else:

|

| 195 |

+

if not vs_path:

|

| 196 |

+

vs_path = os.path.join(KB_ROOT_PATH,

|

| 197 |

+

f"""{"".join(lazy_pinyin(os.path.splitext(file)[0]))}_FAISS_{datetime.datetime.now().strftime("%Y%m%d_%H%M%S")}""",

|

| 198 |

+

"vector_store")

|

| 199 |

+

vector_store = MyFAISS.from_documents(docs, self.embeddings) # docs 为Document列表

|

| 200 |

+

torch_gc()

|

| 201 |

+

|

| 202 |

+

vector_store.save_local(vs_path)

|

| 203 |

+

return vs_path, loaded_files

|

| 204 |

+

else:

|

| 205 |

+

logger.info("文件均未成功加载,请检查依赖包或替换为其他文件再次上传。")

|

| 206 |

+

return None, loaded_files

|

| 207 |

+

|

| 208 |

+

def one_knowledge_add(self, vs_path, one_title, one_conent, one_content_segmentation, sentence_size):

|

| 209 |

+

try:

|

| 210 |

+

if not vs_path or not one_title or not one_conent:

|

| 211 |

+

logger.info("知识库添加错误,请确认知识库名字、标题、内容是否正确!")

|

| 212 |

+

return None, [one_title]

|

| 213 |

+

docs = [Document(page_content=one_conent + "\n", metadata={"source": one_title})]

|

| 214 |

+

if not one_content_segmentation:

|

| 215 |

+

text_splitter = ChineseTextSplitter(pdf=False, sentence_size=sentence_size)

|

| 216 |

+

docs = text_splitter.split_documents(docs)

|

| 217 |

+

if os.path.isdir(vs_path) and os.path.isfile(vs_path + "/index.faiss"):

|

| 218 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 219 |

+

vector_store.add_documents(docs)

|

| 220 |

+

else:

|

| 221 |

+

vector_store = MyFAISS.from_documents(docs, self.embeddings) ##docs 为Document列表

|

| 222 |

+

torch_gc()

|

| 223 |

+

vector_store.save_local(vs_path)

|

| 224 |

+

return vs_path, [one_title]

|

| 225 |

+

except Exception as e:

|

| 226 |

+

logger.error(e)

|

| 227 |

+

return None, [one_title]

|

| 228 |

+

|

| 229 |

+

def get_knowledge_based_answer(self, query, vs_path, chat_history=[], streaming: bool = STREAMING):

|

| 230 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 231 |

+

vector_store.chunk_size = self.chunk_size

|

| 232 |

+

vector_store.chunk_conent = self.chunk_conent

|

| 233 |

+

vector_store.score_threshold = self.score_threshold

|

| 234 |

+

related_docs_with_score = vector_store.similarity_search_with_score(query, k=self.top_k)

|

| 235 |

+

torch_gc()

|

| 236 |

+

if len(related_docs_with_score) > 0:

|

| 237 |

+

prompt = generate_prompt(related_docs_with_score, query)

|

| 238 |

+

else:

|

| 239 |

+

prompt = query

|

| 240 |

+

|

| 241 |

+

for answer_result in self.llm.generatorAnswer(prompt=prompt, history=chat_history,

|

| 242 |

+

streaming=streaming):

|

| 243 |

+

resp = answer_result.llm_output["answer"]

|

| 244 |

+

history = answer_result.history

|

| 245 |

+

history[-1][0] = query

|

| 246 |

+

response = {"query": query,

|

| 247 |

+

"result": resp,

|

| 248 |

+

"source_documents": related_docs_with_score}

|

| 249 |

+

yield response, history

|

| 250 |

+

|

| 251 |

+

# query 查询内容

|

| 252 |

+

# vs_path 知识库路径

|

| 253 |

+

# chunk_conent 是否启用上下文关联

|

| 254 |

+

# score_threshold 搜索匹配score阈值

|

| 255 |

+

# vector_search_top_k 搜索知识库内容条数,默认搜索5条结果

|

| 256 |

+

# chunk_sizes 匹配单段内容的连接上下文长度

|

| 257 |

+

def get_knowledge_based_conent_test(self, query, vs_path, chunk_conent,

|

| 258 |

+

score_threshold=VECTOR_SEARCH_SCORE_THRESHOLD,

|

| 259 |

+

vector_search_top_k=VECTOR_SEARCH_TOP_K, chunk_size=CHUNK_SIZE):

|

| 260 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 261 |

+

# FAISS.similarity_search_with_score_by_vector = similarity_search_with_score_by_vector

|

| 262 |

+

vector_store.chunk_conent = chunk_conent

|

| 263 |

+

vector_store.score_threshold = score_threshold

|

| 264 |

+

vector_store.chunk_size = chunk_size

|

| 265 |

+

related_docs_with_score = vector_store.similarity_search_with_score(query, k=vector_search_top_k)

|

| 266 |

+

if not related_docs_with_score:

|

| 267 |

+

response = {"query": query,

|

| 268 |

+

"source_documents": []}

|

| 269 |

+

return response, ""

|

| 270 |

+

torch_gc()

|

| 271 |

+

prompt = "\n".join([doc.page_content for doc in related_docs_with_score])

|

| 272 |

+

response = {"query": query,

|

| 273 |

+

"source_documents": related_docs_with_score}

|

| 274 |

+

return response, prompt

|

| 275 |

+

|

| 276 |

+

def get_search_result_based_answer(self, query, chat_history=[], streaming: bool = STREAMING):

|

| 277 |

+

results = bing_search(query)

|

| 278 |

+

result_docs = search_result2docs(results)

|

| 279 |

+

prompt = generate_prompt(result_docs, query)

|

| 280 |

+

|

| 281 |

+

for answer_result in self.llm.generatorAnswer(prompt=prompt, history=chat_history,

|

| 282 |

+

streaming=streaming):

|

| 283 |

+

resp = answer_result.llm_output["answer"]

|

| 284 |

+

history = answer_result.history

|

| 285 |

+

history[-1][0] = query

|

| 286 |

+

response = {"query": query,

|

| 287 |

+

"result": resp,

|

| 288 |

+

"source_documents": result_docs}

|

| 289 |

+

yield response, history

|

| 290 |

+

|

| 291 |

+

def delete_file_from_vector_store(self,

|

| 292 |

+

filepath: str or List[str],

|

| 293 |

+

vs_path):

|

| 294 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 295 |

+

status = vector_store.delete_doc(filepath)

|

| 296 |

+

return status

|

| 297 |

+

|

| 298 |

+

def update_file_from_vector_store(self,

|

| 299 |

+

filepath: str or List[str],

|

| 300 |

+

vs_path,

|

| 301 |

+

docs: List[Document],):

|

| 302 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 303 |

+

status = vector_store.update_doc(filepath, docs)

|

| 304 |

+

return status

|

| 305 |

+

|

| 306 |

+

def list_file_from_vector_store(self,

|

| 307 |

+

vs_path,

|

| 308 |

+

fullpath=False):

|

| 309 |

+

vector_store = load_vector_store(vs_path, self.embeddings)

|

| 310 |

+

docs = vector_store.list_docs()

|

| 311 |

+

if fullpath:

|

| 312 |

+

return docs

|

| 313 |

+

else:

|

| 314 |

+

return [os.path.split(doc)[-1] for doc in docs]

|

| 315 |

+

|

| 316 |

+

|

if __name__ == "__main__":
    # Initialization
    args = None
    args = parser.parse_args(args=['--model-dir', '/media/checkpoint/', '--model', 'chatglm-6b', '--no-remote-model'])

    args_dict = vars(args)
    shared.loaderCheckPoint = LoaderCheckPoint(args_dict)
    llm_model_ins = shared.loaderLLM()
    llm_model_ins.set_history_len(LLM_HISTORY_LEN)

    local_doc_qa = LocalDocQA()
    local_doc_qa.init_cfg(llm_model=llm_model_ins)
    query = "本项目使用的embedding模型是什么,消耗多少显存"  # "Which embedding model does this project use, and how much GPU memory does it consume?"
    vs_path = "/media/gpt4-pdf-chatbot-langchain/dev-langchain-ChatGLM/vector_store/test"
    last_print_len = 0
    # for resp, history in local_doc_qa.get_knowledge_based_answer(query=query,
    #                                                              vs_path=vs_path,
    #                                                              chat_history=[],
    #                                                              streaming=True):
    for resp, history in local_doc_qa.get_search_result_based_answer(query=query,
                                                                     chat_history=[],
                                                                     streaming=True):
        # Each response carries the full answer so far; print only the new suffix
        print(resp["result"][last_print_len:], end="", flush=True)
        last_print_len = len(resp["result"])
    # Format each source as "出处 [n] <source>:" (出处 = "source"); URLs are kept as-is, file paths are shortened to the file name
    source_text = [f"""出处 [{inum + 1}] {doc.metadata['source'] if doc.metadata['source'].startswith("http")
    else os.path.split(doc.metadata['source'])[-1]}:\n\n{doc.page_content}\n\n"""
                   # uncomment to append the relevance score (相关度 = "relevance"): f"""相关度:{doc.metadata['score']}\n\n"""
                   for inum, doc in
                   enumerate(resp["source_documents"])]
    logger.info("\n\n" + "\n\n".join(source_text))
    pass
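The `__main__` block of `chains/local_doc_qa.py` prints a streamed answer incrementally: each yielded `resp["result"]` appears to hold the whole answer generated so far, so only the slice past `last_print_len` is new. The sketch below isolates that pattern under that assumption; `fake_stream` is a hypothetical stand-in for `get_search_result_based_answer` and is not part of the project.

```python
from typing import Iterator, List


def fake_stream(answer: str, step: int = 4) -> Iterator[dict]:
    """Hypothetical stand-in for a streaming QA generator.

    Yields dicts whose "result" is the cumulative answer so far,
    mirroring how the loop in __main__ consumes resp["result"].
    """
    for end in range(step, len(answer) + step, step):
        yield {"result": answer[:end]}


def stream_deltas(responses: Iterator[dict]) -> List[str]:
    """Return only the newly generated text from each cumulative response."""
    deltas = []
    last_print_len = 0
    for resp in responses:
        text = resp["result"]
        deltas.append(text[last_print_len:])  # the part not printed yet
        last_print_len = len(text)
    return deltas


if __name__ == "__main__":
    for delta in stream_deltas(fake_stream("Embeddings come from text2vec.")):
        print(delta, end="", flush=True)
    print()
```

Tracking a length instead of re-printing the whole string keeps the console output identical to the final answer while still showing tokens as they arrive.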

chains/text_load.py

ADDED

@@ -0,0 +1,52 @@

import os
import pinecone
from tqdm import tqdm
from langchain.llms import OpenAI
from langchain.text_splitter import SpacyTextSplitter
from langchain.document_loaders import TextLoader
from langchain.document_loaders import DirectoryLoader
from langchain.indexes import VectorstoreIndexCreator
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import Pinecone


# Configuration
openai_key = "YOUR_OPENAI_KEY"  # obtained after registering at openai.com
pinecone_key = "YOUR_PINECONE_KEY"  # obtained after registering at app.pinecone.io
pinecone_index = "YOUR_INDEX_NAME"  # from app.pinecone.io
pinecone_environment = "YOUR_ENVIRONMENT"  # after logging in to Pinecone, see "Environment" on the Indexes page
pinecone_namespace = "YOUR_NAMESPACE"  # created automatically if it does not exist

# Proxy settings (needed where OpenAI and Pinecone are not directly reachable)
os.environ['HTTP_PROXY'] = 'http://127.0.0.1:7890'
os.environ['HTTPS_PROXY'] = 'http://127.0.0.1:7890'


# Initialize Pinecone
pinecone.init(
    api_key=pinecone_key,
    environment=pinecone_environment
)
index = pinecone.Index(pinecone_index)

# Initialize OpenAI embeddings
embeddings = OpenAIEmbeddings(openai_api_key=openai_key)

# Initialize the text splitter
text_splitter = SpacyTextSplitter(pipeline='zh_core_web_sm', chunk_size=1000, chunk_overlap=200)

# Load every file with a .txt suffix under the directory
loader = DirectoryLoader('../docs', glob="**/*.txt", loader_cls=TextLoader)

# Read the text files
documents = loader.load()

# Split the documents with text_splitter
split_text = text_splitter.split_documents(documents)
try:
    for document in tqdm(split_text):
        # Embed the chunk and store the vector in Pinecone (in the configured namespace)
        Pinecone.from_documents([document], embeddings, index_name=pinecone_index,
                                namespace=pinecone_namespace)
except Exception as e:
    print(f"Error: {e}")
    raise SystemExit(1)  # exit with a non-zero status so the failure is visible


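In `chains/text_load.py`, `SpacyTextSplitter(pipeline='zh_core_web_sm', chunk_size=1000, chunk_overlap=200)` limits each chunk to roughly 1000 characters and lets neighbouring chunks share up to about 200 characters, so context near a boundary is available to both. The sketch below is a simplified fixed-window character splitter written only to show how the two parameters interact; it is not how `SpacyTextSplitter` works internally (that splitter first segments the text into sentences with spaCy and then merges sentences up to the size limit), and `split_with_overlap` is a hypothetical helper.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into fixed-size windows that overlap by chunk_overlap characters.

    Simplified illustration only: unlike SpacyTextSplitter, it ignores
    sentence boundaries entirely.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    stride = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), stride):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    demo = "abcdefghijklmnopqrstuvwxyz"
    for chunk in split_with_overlap(demo, chunk_size=10, chunk_overlap=3):
        print(chunk)
```

With the script's settings (1000/200), each window in this sketch would advance by 800 characters; a larger overlap improves recall at the cost of storing more, partly redundant vectors.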

configs/__pycache__/model_config.cpython-310.pyc

ADDED

Binary file (2.96 kB).

configs/model_config - 副本.py

ADDED

@@ -0,0 +1,269 @@

import torch.cuda
import torch.backends
import os
import logging
import uuid

LOG_FORMAT = "%(levelname) -5s %(asctime)s" "-1d: %(message)s"
logger = logging.getLogger()
logger.setLevel(logging.INFO)
logging.basicConfig(format=LOG_FORMAT)


# Change the values in the dictionary below to point to locally stored embedding models,
# e.g. change "text2vec": "GanymedeNil/text2vec-large-chinese" to "text2vec": "User/Downloads/text2vec-large-chinese".
# Use absolute paths here.
embedding_model_dict = {
    "ernie-tiny": "nghuyong/ernie-3.0-nano-zh",
    "ernie-base": "nghuyong/ernie-3.0-base-zh",
    "text2vec-base": "shibing624/text2vec-base-chinese",
    "text2vec": "GanymedeNil/text2vec-large-chinese",
    "m3e-small": "moka-ai/m3e-small",
    "m3e-base": "moka-ai/m3e-base",
}

# Embedding model name
EMBEDDING_MODEL = "text2vec"

# Embedding running device
EMBEDDING_DEVICE = "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"


# supported LLM models
# llm_model_dict handles some of the loader's preset behavior, such as the load location, the model name and the model handler instance.
# Change the values in the dictionary below to point to locally stored LLM models,
# e.g. change "local_model_path" of "chatglm-6b" from None to "User/Downloads/chatglm-6b".
# Use absolute paths here.
llm_model_dict = {
    "chatglm-6b-int4-qe": {
        "name": "chatglm-6b-int4-qe",
        "pretrained_model_name": "THUDM/chatglm-6b-int4-qe",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm-6b-int4": {
        "name": "chatglm-6b-int4",
        "pretrained_model_name": "THUDM/chatglm-6b-int4",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm-6b-int8": {
        "name": "chatglm-6b-int8",
        "pretrained_model_name": "THUDM/chatglm-6b-int8",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm-6b": {
        "name": "chatglm-6b",
        "pretrained_model_name": "THUDM/chatglm-6b",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm2-6b": {
        "name": "chatglm2-6b",
        "pretrained_model_name": "THUDM/chatglm2-6b",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm2-6b-int4": {
        "name": "chatglm2-6b-int4",
        "pretrained_model_name": "THUDM/chatglm2-6b-int4",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatglm2-6b-int8": {
        "name": "chatglm2-6b-int8",
        "pretrained_model_name": "THUDM/chatglm2-6b-int8",
        "local_model_path": None,
        "provides": "ChatGLMLLMChain"
    },
    "chatyuan": {
        "name": "chatyuan",
        "pretrained_model_name": "ClueAI/ChatYuan-large-v2",
        "local_model_path": None,
        "provides": "MOSSLLMChain"
    },
    "moss": {
        "name": "moss",
        "pretrained_model_name": "fnlp/moss-moon-003-sft",
        "local_model_path": None,
        "provides": "MOSSLLMChain"
    },
    "vicuna-13b-hf": {
        "name": "vicuna-13b-hf",
        "pretrained_model_name": "vicuna-13b-hf",
        "local_model_path": None,
        "provides": "LLamaLLMChain"
    },

    "vicuna-7b-hf": {
        "name": "vicuna-7b-hf",
        "pretrained_model_name": "vicuna-7b-hf",
        "local_model_path": None,
        "provides": "LLamaLLMChain"
    },

    # Calling the model directly raises requests.exceptions.ConnectionError; download it with the
    # snapshot_download function from the huggingface_hub package instead. If snapshot_download still
    # returns a network error, retry a few times; it usually succeeds eventually.
    # If it still fails, the network is probably behind a firewall (common on servers), and the only option
    # is to download the model on another machine and then transfer it to the target machine.
    "bloomz-7b1": {
        "name": "bloomz-7b1",
        "pretrained_model_name": "bigscience/bloomz-7b1",
        "local_model_path": None,
        "provides": "MOSSLLMChain"

    },
    # In testing, loading bigscience/bloom-3b took about 170 seconds; the cause of the slowness is unclear,
    # but it is probably related to loading its special tokens.
    "bloom-3b": {
        "name": "bloom-3b",
        "pretrained_model_name": "bigscience/bloom-3b",
        "local_model_path": None,
        "provides": "MOSSLLMChain"

    },
    "baichuan-7b": {
        "name": "baichuan-7b",
        "pretrained_model_name": "baichuan-inc/baichuan-7B",
        "local_model_path": None,
        "provides": "MOSSLLMChain"
    },
    # For llama-cpp model compatibility issues, see https://github.com/abetlen/llama-cpp-python/issues/204
    "ggml-vicuna-13b-1.1-q5": {
        "name": "ggml-vicuna-13b-1.1-q5",
        "pretrained_model_name": "lmsys/vicuna-13b-delta-v1.1",
        # This must be the path of the downloaded model. With the default download location, the model is stored in
        # /.cache/huggingface/hub/models--vicuna--ggml-vicuna-13b-1.1/ under the user's home directory.
        # Because this project loads models fairly strictly, after downloading you still need to rename the
        # model file manually so that it matches the file name on the Hugging Face Hub.
        # Also, ggml formats from different periods are not compatible with each other, so each ggml version needs a
        # matching llama-cpp-python release; in testing, `pip install` did not work, so download the matching wheel
        # manually from https://github.com/abetlen/llama-cpp-python/releases/tag/ and install it.
        # In testing, v0.1.63 is compatible with this model's vicuna/ggml-vicuna-13b-1.1/ggml-vic13b-q5_1.bin.
        "local_model_path": f'''{"/".join(os.path.abspath(__file__).split("/")[:3])}/.cache/huggingface/hub/models--vicuna--ggml-vicuna-13b-1.1/blobs/''',
        "provides": "LLamaLLMChain"
    },

    # For models served through fastchat, follow the format below
    "fastchat-chatglm-6b": {
        "name": "chatglm-6b",  # set "name" to the "model_name" of the fastchat service
        "pretrained_model_name": "chatglm-6b",
        "local_model_path": None,
        "provides": "FastChatOpenAILLMChain",  # when using the fastchat API, "provides" must be "FastChatOpenAILLMChain"
        "api_base_url": "http://localhost:8000/v1",  # set "api_base_url" to the "api_base_url" of the fastchat service
        "api_key": "EMPTY"
    },
    "fastchat-chatglm2-6b": {
        "name": "chatglm2-6b",  # set "name" to the "model_name" of the fastchat service
        "pretrained_model_name": "chatglm2-6b",
        "local_model_path": None,
        "provides": "FastChatOpenAILLMChain",  # when using the fastchat API, "provides" must be "FastChatOpenAILLMChain"
        "api_base_url": "http://localhost:8000/v1"  # set "api_base_url" to the "api_base_url" of the fastchat service
    },

    # For models served through fastchat, follow the format below
    "fastchat-vicuna-13b-hf": {
        "name": "vicuna-13b-hf",  # set "name" to the "model_name" of the fastchat service
        "pretrained_model_name": "vicuna-13b-hf",
        "local_model_path": None,
        "provides": "FastChatOpenAILLMChain",  # when using the fastchat API, "provides" must be "FastChatOpenAILLMChain"
        "api_base_url": "http://localhost:8000/v1",  # set "api_base_url" to the "api_base_url" of the fastchat service
        "api_key": "EMPTY"
    },
    # If calling ChatGPT raises: urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='api.openai.com', port=443):
    # Max retries exceeded with url: /v1/chat/completions
    # then change the urllib3 version to 1.25.11.

    # If it raises: raise NewConnectionError(
    # urllib3.exceptions.NewConnectionError: <urllib3.connection.HTTPSConnection object at 0x000001FE4BDB85E0>:
    # Failed to establish a new connection: [WinError 10060]
    # then the cause is that OpenAI blocks IP addresses from mainland China and Hong Kong; switch to a proxy in another region, such as Japan or Singapore.

    "openai-chatgpt-3.5": {
        "name": "gpt-3.5-turbo",
        "pretrained_model_name": "gpt-3.5-turbo",
        "provides": "FastChatOpenAILLMChain",
        "local_model_path": None,
        "api_base_url": "https://api.openai.com/v1",
        "api_key": ""
    },

}

# LLM name
LLM_MODEL = "chatglm-6b"
# Load the model quantized to 8 bit