Upload 62 files

This view is limited to 50 files because it contains too many changes.

- .env.example +59 -0

- .gitattributes +0 -1

- .gitignore +6 -0

- .vscode/settings.json +2 -0

- README.md +40 -10

- docker/huggingface/Dockerfile +11 -0

- docker/render/Dockerfile +27 -0

- docs/deploy-huggingface.md +95 -0

- docs/deploy-render.md +51 -0

- docs/huggingface-createspace.png +0 -0

- docs/huggingface-dockerfile.png +0 -0

- docs/huggingface-savedockerfile.png +0 -0

- docs/logging-sheets.md +61 -0

- docs/openapi-admin-users.yaml +204 -0

- docs/user-management.md +65 -0

- package-lock.json +0 -0

- package.json +49 -0

- render.yaml +10 -0

- src/admin/routes.ts +36 -0

- src/admin/users.ts +114 -0

- src/config.ts +425 -0

- src/info-page.ts +267 -0

- src/key-management/anthropic/provider.ts +212 -0

- src/key-management/index.ts +68 -0

- src/key-management/key-pool.ts +106 -0

- src/key-management/openai/checker.ts +278 -0

- src/key-management/openai/provider.ts +360 -0

- src/logger.ts +6 -0

- src/prompt-logging/backends/index.ts +1 -0

- src/prompt-logging/backends/sheets.ts +426 -0

- src/prompt-logging/index.ts +21 -0

- src/prompt-logging/log-queue.ts +116 -0

- src/proxy/anthropic.ts +196 -0

- src/proxy/auth/gatekeeper.ts +77 -0

- src/proxy/auth/user-store.ts +212 -0

- src/proxy/check-origin.ts +46 -0

- src/proxy/kobold.ts +112 -0

- src/proxy/middleware/common.ts +143 -0

- src/proxy/middleware/request/add-anthropic-preamble.ts +32 -0

- src/proxy/middleware/request/add-key.ts +67 -0

- src/proxy/middleware/request/finalize-body.ts +14 -0

- src/proxy/middleware/request/index.ts +47 -0

- src/proxy/middleware/request/language-filter.ts +51 -0

- src/proxy/middleware/request/limit-completions.ts +16 -0

- src/proxy/middleware/request/limit-output-tokens.ts +60 -0

- src/proxy/middleware/request/md-request.ts +93 -0

- src/proxy/middleware/request/milk-zoomers.ts +49 -0

- src/proxy/middleware/request/preprocess.ts +30 -0

- src/proxy/middleware/request/privilege-check.ts +56 -0

- src/proxy/middleware/request/set-api-format.ts +13 -0

.env.example (ADDED)

@@ -0,0 +1,59 @@
+# Copy this file to .env and fill in the values you wish to change. Most already
+# have sensible defaults. See config.ts for more details.
+
+# PORT=7860
+# SERVER_TITLE=Coom Tunnel
+# MODEL_RATE_LIMIT=4
+# MAX_OUTPUT_TOKENS_OPENAI=300
+# MAX_OUTPUT_TOKENS_ANTHROPIC=900
+# LOG_LEVEL=info
+# REJECT_DISALLOWED=false
+# REJECT_MESSAGE="This content violates /aicg/'s acceptable use policy."
+# CHECK_KEYS=true
+# QUOTA_DISPLAY_MODE=full
+# QUEUE_MODE=fair
+# BLOCKED_ORIGINS=reddit.com,9gag.com
+# BLOCK_MESSAGE="You must be over the age of majority in your country to use this service."
+# BLOCK_REDIRECT="https://roblox.com/"
+
+# Note: CHECK_KEYS is disabled by default in local development mode, but enabled
+# by default in production mode.
+
+# Optional settings for user management. See docs/user-management.md.
+# GATEKEEPER=none
+# GATEKEEPER_STORE=memory
+# MAX_IPS_PER_USER=20
+
+# Optional settings for prompt logging. See docs/logging-sheets.md.
+# PROMPT_LOGGING=false
+
+# ------------------------------------------------------------------------------
+# The values below are secret -- make sure they are set securely.
+# For Huggingface, set them via the Secrets section in your Space's config UI.
+# For Render, create a "secret file" called .env using the Environment tab.
+
+# You can add multiple keys by separating them with a comma.
+OPENAI_KEY=sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
+ANTHROPIC_KEY=sk-ant-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
+
+# TEMPORARY: This will eventually be replaced by a more robust system.
+# You can adjust the models used when sending OpenAI prompts to /anthropic.
+# Refer to Anthropic's docs for more info (note that they don't list older
+# versions of the models, but they still work).
+# CLAUDE_SMALL_MODEL=claude-v1.2
+# CLAUDE_BIG_MODEL=claude-v1-100k
+
+# You can require a Bearer token for requests when using proxy_token gatekeeper.
+# PROXY_KEY=your-secret-key
+
+# You can set an admin key for user management when using user_token gatekeeper.
+# ADMIN_KEY=your-very-secret-key
+
+# These are used for various persistence features. Refer to the docs for more
+# info.
+# FIREBASE_KEY=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
+# FIREBASE_RTDB_URL=https://xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx.firebaseio.com
+
+# This is only relevant if you want to use the prompt logging feature.
+# GOOGLE_SHEETS_SPREADSHEET_ID=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
+# GOOGLE_SHEETS_KEY=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
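Two of the abuse-prevention settings in `.env.example` can be illustrated briefly: `MODEL_RATE_LIMIT` and `BLOCKED_ORIGINS`. The TypeScript below is a hypothetical sketch. It is not the code in `src/proxy/check-origin.ts` or the proxy's actual rate limiter, and it rests on two assumptions:

- `MODEL_RATE_LIMIT` means requests per minute per IP address, which is how `docs/deploy-huggingface.md` describes it.
- `BLOCKED_ORIGINS` is matched against the hostname of the request's `Origin` or `Referer` header, including subdomains.

The names `SlidingWindowLimiter`, `parseDomainList` and `isBlockedOrigin` are illustrative.

```typescript
// Hypothetical sketch; names and matching rules are assumptions,
// not the proxy's actual implementation.

/** Per-IP sliding-window limiter: at most `limit` requests per `windowMs`. */
export class SlidingWindowLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly hits = new Map<string, number[]>();

  constructor(limit: number, windowMs = 60_000) {
    this.limit = limit; // e.g. MODEL_RATE_LIMIT=4
    this.windowMs = windowMs; // one minute by default
  }

  /** Timestamps for `ip` that still fall inside the window ending at `now`. */
  private recent(ip: string, now: number): number[] {
    const cutoff = now - this.windowMs;
    const kept = (this.hits.get(ip) ?? []).filter((t) => t > cutoff);
    this.hits.set(ip, kept);
    return kept;
  }

  /** Records a request from `ip` if allowed; returns false when over the limit. */
  tryConsume(ip: string, now: number = Date.now()): boolean {
    const kept = this.recent(ip, now);
    if (kept.length >= this.limit) return false;
    kept.push(now); // same array instance that is stored in the map
    return true;
  }

  /** Milliseconds until `ip` may send another request (0 if it may now). */
  retryAfterMs(ip: string, now: number = Date.now()): number {
    const kept = this.recent(ip, now);
    if (kept.length < this.limit) return 0;
    return (kept[0] ?? now) + this.windowMs - now;
  }
}

/** Splits a setting such as "reddit.com,9gag.com" into lowercase domains. */
export function parseDomainList(value: string | undefined): string[] {
  return (value ?? "")
    .split(",")
    .map((d) => d.trim().toLowerCase())
    .filter((d) => d.length > 0);
}

/** True if the Origin/Referer URL's host is a blocked domain or one of its subdomains. */
export function isBlockedOrigin(
  headerValue: string | undefined,
  blocked: string[],
): boolean {
  if (!headerValue) return false;
  let host: string;
  try {
    host = new URL(headerValue).hostname.toLowerCase();
  } catch {
    return false; // not an absolute URL, so there is nothing to match
  }
  return blocked.some((d) => host === d || host.endsWith(`.${d}`));
}
```

The limiter keeps timestamps per IP in memory. A long-running instance would therefore also want to evict idle entries. A fixed-window counter is a cheaper alternative if approximate limits are acceptable.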

.gitattributes (CHANGED)

@@ -25,7 +25,6 @@
 *.safetensors filter=lfs diff=lfs merge=lfs -text
 saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tar filter=lfs diff=lfs merge=lfs -text
 *.tflite filter=lfs diff=lfs merge=lfs -text
 *.tgz filter=lfs diff=lfs merge=lfs -text
 *.wasm filter=lfs diff=lfs merge=lfs -text

.gitignore (ADDED)

@@ -0,0 +1,6 @@
+.env
+.venv
+.vscode
+build
+greeting.md
+node_modules

.vscode/settings.json (ADDED)

@@ -0,0 +1,2 @@
+{
+}

README.md (CHANGED)

@@ -1,12 +1,42 @@
+# OAI Reverse Proxy
+
+Reverse proxy server for the OpenAI and Anthropic APIs. Forwards text generation requests while rejecting administrative/billing requests. Includes optional rate limiting and prompt filtering to prevent abuse.
+
+### Table of Contents
+- [What is this?](#what-is-this)
+- [Why?](#why)
+- [Usage Instructions](#usage-instructions)
+- [Deploy to Huggingface (Recommended)](#deploy-to-huggingface-recommended)
+- [Deploy to Render](#deploy-to-render)
+- [Local Development](#local-development)
+
+## What is this?
+If you would like to give a friend access to an API via keys you own, you can use this to keep your keys safe while still allowing them to generate text with the API. You can also use this if you'd like to build a client-side application which uses the OpenAI or Anthropic APIs, but don't want to build your own backend. You should never embed your real API keys in a client-side application. Instead, you can have your frontend connect to this reverse proxy and forward requests to the downstream service.
+
+This keeps your keys safe and allows you to use the rate limiting and prompt filtering features of the proxy to prevent abuse.
+
+## Why?
+OpenAI keys have full account permissions. They can revoke themselves, generate new keys, modify spend quotas, etc. **You absolutely should not share them, post them publicly, or embed them in client-side applications, as they can be easily stolen.**
+
+This proxy only forwards text generation requests to the downstream service and rejects requests which would otherwise modify your account.
+
 ---
-title: WORKALRSGDJHX
-emoji: 🐨
-colorFrom: purple
-colorTo: yellow
-sdk: streamlit
-sdk_version: 1.21.0
-app_file: app.py
-pinned: false
----
 
-
+## Usage Instructions
+If you'd like to run your own instance of this proxy, you'll need to deploy it somewhere and configure it with your API keys. A few easy options are provided below, though you can also deploy it to any other service you'd like.
+
+### Deploy to Huggingface (Recommended)
+[See here for instructions on how to deploy to a Huggingface Space.](./docs/deploy-huggingface.md)
+
+### Deploy to Render
+[See here for instructions on how to deploy to Render.com.](./docs/deploy-render.md)
+
+## Local Development
+To run the proxy locally for development or testing, install Node.js >= 18.0.0 and follow the steps below.
+
+1. Clone the repo
+2. Install dependencies with `npm install`
+3. Create a `.env` file in the root of the project and add your API keys. See the [.env.example](./.env.example) file for an example.
+4. Start the server in development mode with `npm run start:dev`.
+
+You can also use `npm run start:dev:tsc` to enable project-wide type checking at the cost of slower startup times. `npm run type-check` can be used to run type checking without starting the server.
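The README says the proxy forwards text generation requests while rejecting administrative and billing requests. That behaviour amounts to an allowlist. Below is a minimal TypeScript sketch of the idea. The specific routes are assumptions based on OpenAI's public `/v1/models`, `/v1/completions` and `/v1/chat/completions` endpoints, and the real routing in `src/proxy/` may allow a different set. `isForwardable` is a hypothetical name.

```typescript
// Hypothetical allowlist; the routes are assumptions, not the proxy's real table.
const ALLOWED_ROUTES: ReadonlyArray<{ method: string; path: string }> = [
  { method: "GET", path: "/v1/models" },
  { method: "POST", path: "/v1/completions" },
  { method: "POST", path: "/v1/chat/completions" },
];

/** True only for requests that should be forwarded to the upstream API. */
export function isForwardable(method: string, url: string): boolean {
  // Resolve against a dummy base so relative URLs parse; this also drops the
  // query string and normalizes "." and ".." path segments.
  const { pathname } = new URL(url, "http://proxy.invalid");
  const path = pathname.replace(/\/+$/, ""); // ignore trailing slashes
  const verb = method.toUpperCase();
  return ALLOWED_ROUTES.some((r) => r.method === verb && r.path === path);
}
```

Denying everything that is not explicitly listed is safer than trying to enumerate dangerous routes. New administrative or billing endpoints are then rejected by default.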

docker/huggingface/Dockerfile (ADDED)

@@ -0,0 +1,11 @@
+FROM node:18-bullseye-slim
+RUN apt-get update && \
+    apt-get install -y git
+RUN git clone https://gitgud.io/khanon/oai-reverse-proxy.git /app
+WORKDIR /app
+RUN npm install
+COPY Dockerfile greeting.md* .env* ./
+RUN npm run build
+EXPOSE 7860
+ENV NODE_ENV=production
+CMD [ "npm", "start" ]

docker/render/Dockerfile (ADDED)

@@ -0,0 +1,27 @@
+# syntax = docker/dockerfile:1.2
+
+FROM node:18-bullseye-slim
+RUN apt-get update && \
+    apt-get install -y curl
+
+# Unlike Huggingface, Render can only deploy straight from a git repo and
+# doesn't allow you to create or modify arbitrary files via the web UI.
+# To use a greeting file, set `GREETING_URL` to a URL that points to a raw
+# text file containing your greeting, such as a GitHub Gist.
+
+# You may need to clear the build cache if you change the greeting, otherwise
+# Render will use the cached layer from the previous build.
+
+WORKDIR /app
+ARG GREETING_URL
+RUN if [ -n "$GREETING_URL" ]; then \
+      curl -sL "$GREETING_URL" > greeting.md; \
+    fi
+COPY package*.json greeting.md* ./
+RUN npm install
+COPY . .
+RUN npm run build
+RUN --mount=type=secret,id=_env,dst=/etc/secrets/.env cat /etc/secrets/.env >> .env
+EXPOSE 10000
+ENV NODE_ENV=production
+CMD [ "npm", "start" ]

docs/deploy-huggingface.md

ADDED

|

@@ -0,0 +1,95 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

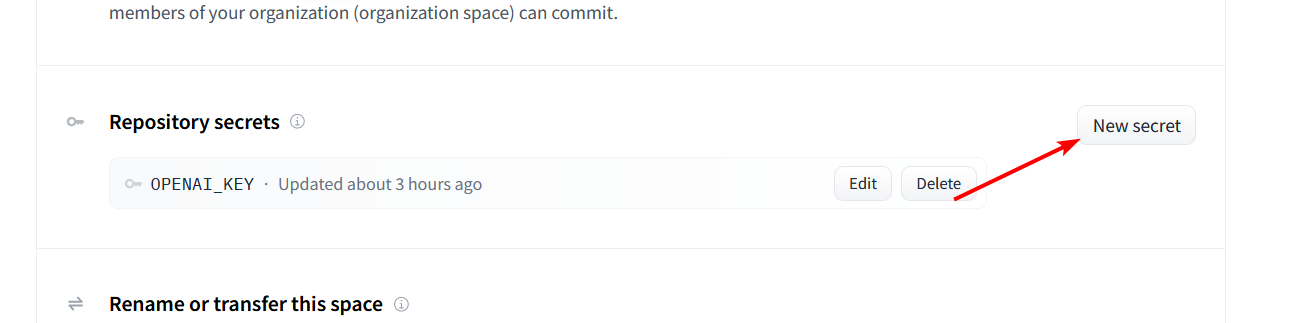

| 1 |

+

# Deploy to Huggingface Space

|

| 2 |

+

|

| 3 |

+

This repository can be deployed to a [Huggingface Space](https://huggingface.co/spaces). This is a free service that allows you to run a simple server in the cloud. You can use it to safely share your OpenAI API key with a friend.

|

| 4 |

+

|

| 5 |

+

### 1. Get an API key

|

| 6 |

+

- Go to [OpenAI](https://openai.com/) and sign up for an account. You can use a free trial key for this as long as you provide SMS verification.

|

| 7 |

+

- Claude is not publicly available yet, but if you have access to it via the [Anthropic](https://www.anthropic.com/) closed beta, you can also use that key with the proxy.

|

| 8 |

+

|

| 9 |

+

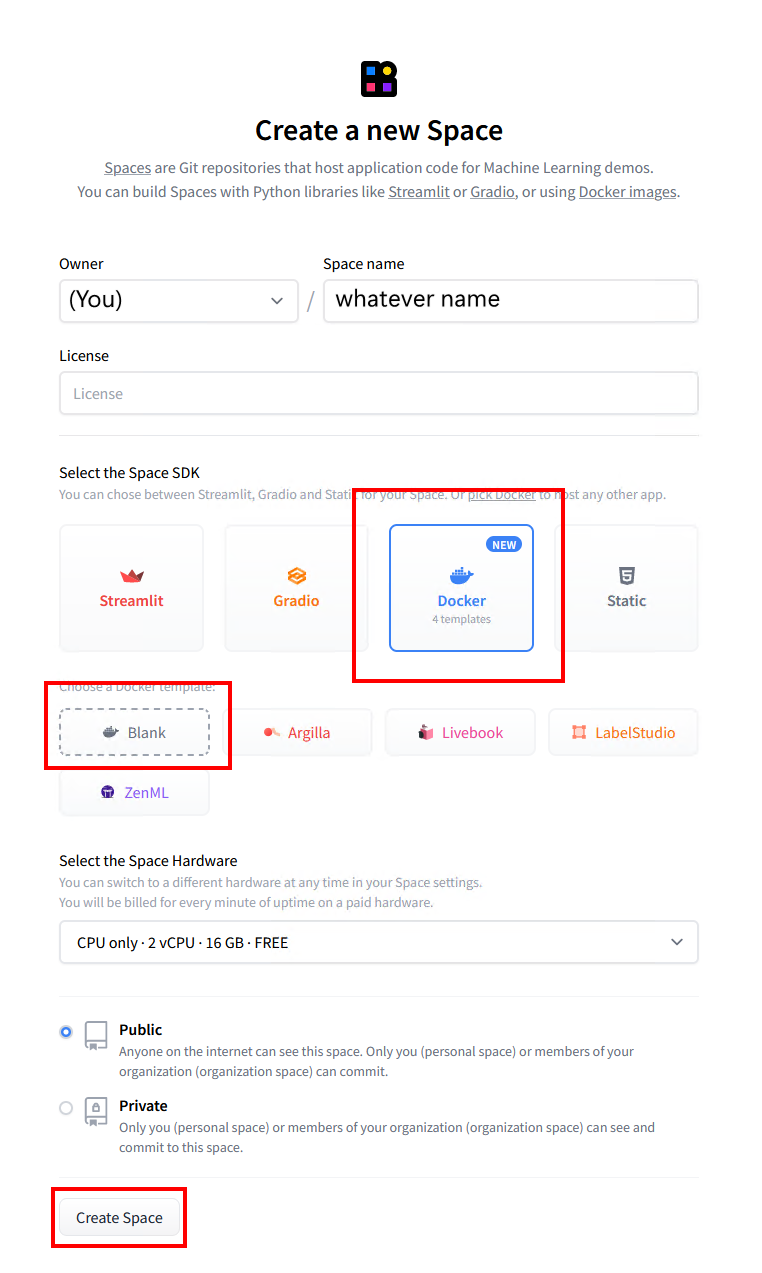

### 2. Create an empty Huggingface Space

|

| 10 |

+

- Go to [Huggingface](https://huggingface.co/) and sign up for an account.

|

| 11 |

+

- Once logged in, [create a new Space](https://huggingface.co/new-space).

|

| 12 |

+

- Provide a name for your Space and select "Docker" as the SDK. Select "Blank" for the template.

|

| 13 |

+

- Click "Create Space" and wait for the Space to be created.

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

### 3. Create an empty Dockerfile

|

| 18 |

+

- Once your Space is created, you'll see an option to "Create the Dockerfile in your browser". Click that link.

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

- Paste the following into the text editor and click "Save".

|

| 22 |

+

```dockerfile

|

| 23 |

+

FROM node:18-bullseye-slim

|

| 24 |

+

RUN apt-get update && \

|

| 25 |

+

apt-get install -y git

|

| 26 |

+

RUN git clone https://gitgud.io/khanon/oai-reverse-proxy.git /app

|

| 27 |

+

WORKDIR /app

|

| 28 |

+

RUN npm install

|

| 29 |

+

COPY Dockerfile greeting.md* .env* ./

|

| 30 |

+

RUN npm run build

|

| 31 |

+

EXPOSE 7860

|

| 32 |

+

ENV NODE_ENV=production

|

| 33 |

+

CMD [ "npm", "start" ]

|

| 34 |

+

```

|

| 35 |

+

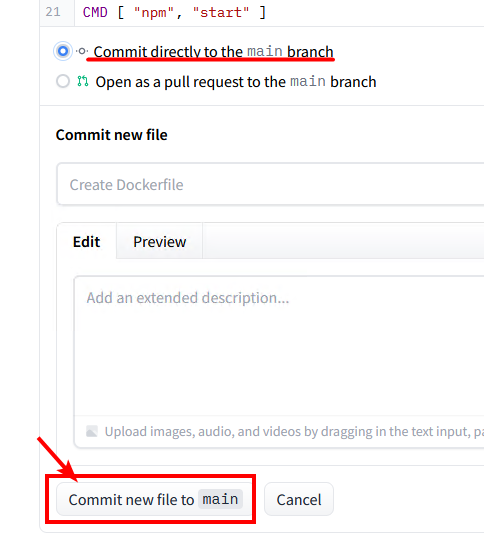

- Click "Commit new file to `main`" to save the Dockerfile.

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

### 4. Set your API key as a secret

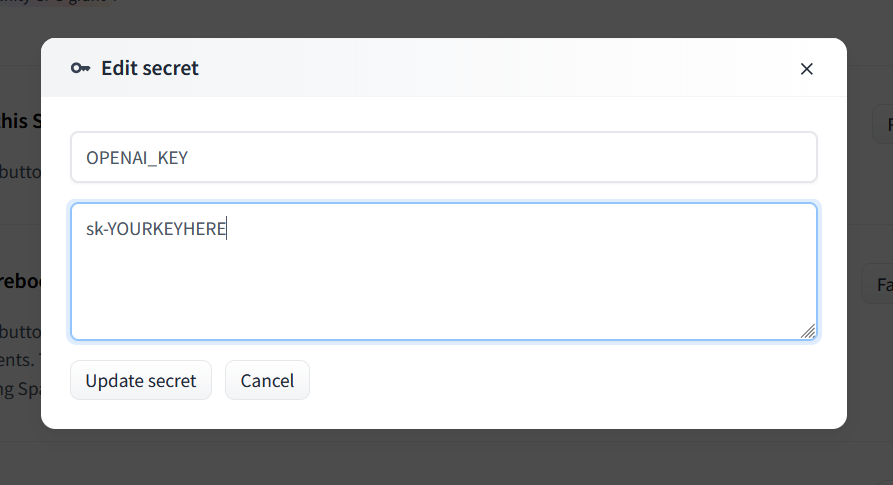

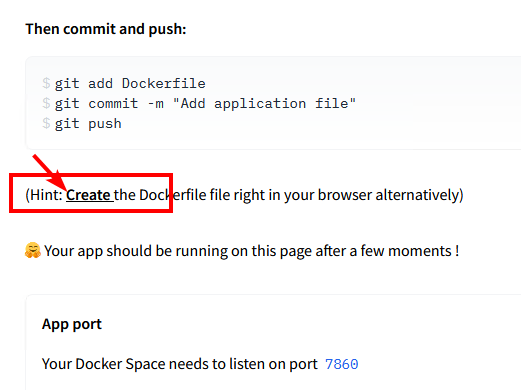

|

| 40 |

+

- Click the Settings button in the top right corner of your repository.

|

| 41 |

+

- Scroll down to the `Repository Secrets` section and click `New Secret`.

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

- Enter `OPENAI_KEY` as the name and your OpenAI API key as the value.

|

| 46 |

+

- For Claude, set `ANTHROPIC_KEY` instead.

|

| 47 |

+

- You can use both types of keys at the same time if you want.

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

### 5. Deploy the server

|

| 52 |

+

- Your server should automatically deploy when you add the secret, but if not you can select `Factory Reboot` from that same Settings menu.

|

| 53 |

+

|

| 54 |

+

### 6. Share the link

|

| 55 |

+

- The Service Info section below should show the URL for your server. You can share this with anyone to safely give them access to your API key.

|

| 56 |

+

- Your friend doesn't need any API key of their own, they just need your link.

|

| 57 |

+

|

| 58 |

+

# Optional

|

| 59 |

+

|

| 60 |

+

## Updating the server

|

| 61 |

+

|

| 62 |

+

To update your server, go to the Settings menu and select `Factory Reboot`. This will pull the latest version of the code from GitHub and restart the server.

|

| 63 |

+

|

| 64 |

+

Note that if you just perform a regular Restart, the server will be restarted with the same code that was running before.

|

| 65 |

+

|

| 66 |

+

## Adding a greeting message

|

| 67 |

+

|

| 68 |

+

You can create a Markdown file called `greeting.md` to display a message on the Server Info page. This is a good place to put instructions for how to use the server.

|

| 69 |

+

|

| 70 |

+

## Customizing the server

|

| 71 |

+

|

| 72 |

+

The server will be started with some default configuration, but you can override it by adding a `.env` file to your Space. You can use Huggingface's web editor to create a new `.env` file alongside your Dockerfile. Huggingface will restart your server automatically when you save the file.

|

| 73 |

+

|

| 74 |

+

Here are some example settings:

|

| 75 |

+

```shell

|

| 76 |

+

# Requests per minute per IP address

|

| 77 |

+

MODEL_RATE_LIMIT=4

|

| 78 |

+

# Max tokens to request from OpenAI

|

| 79 |

+

MAX_OUTPUT_TOKENS_OPENAI=256

|

| 80 |

+

# Max tokens to request from Anthropic (Claude)

|

| 81 |

+

MAX_OUTPUT_TOKENS_ANTHROPIC=512

|

| 82 |

+

# Block prompts containing disallowed characters

|

| 83 |

+

REJECT_DISALLOWED=false

|

| 84 |

+

REJECT_MESSAGE="This content violates /aicg/'s acceptable use policy."

|

| 85 |

+

# Show exact quota usage on the Server Info page

|

| 86 |

+

QUOTA_DISPLAY_MODE=full

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

See `.env.example` for a full list of available settings, or check `config.ts` for details on what each setting does.

|

| 90 |

+

|

| 91 |

+

## Restricting access to the server

|

| 92 |

+

|

| 93 |

+

If you want to restrict access to the server, you can set a `PROXY_KEY` secret. This key will need to be passed in the Authentication header of every request to the server, just like an OpenAI API key.

|

| 94 |

+

|

| 95 |

+

Add this using the same method as the OPENAI_KEY secret above. Don't add this to your `.env` file because that file is public and anyone can see it.
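For a client, passing `PROXY_KEY` "just like an OpenAI API key" means sending an `Authorization: Bearer <PROXY_KEY>` header. Below is a minimal sketch of a matching server-side check, assuming the key arrives in exactly that form. `hasValidProxyKey` and `proxyHeaders` are hypothetical names, not functions from `src/proxy/auth/gatekeeper.ts`.

```typescript
import { Buffer } from "node:buffer";
import { timingSafeEqual } from "node:crypto";

// Hypothetical sketch of a PROXY_KEY check; not the proxy's gatekeeper code.

/** True when `authorization` is exactly `Bearer <proxyKey>`. */
export function hasValidProxyKey(
  authorization: string | undefined,
  proxyKey: string,
): boolean {
  if (!authorization || proxyKey.length === 0) return false;
  const token = /^Bearer\s+(\S+)$/i.exec(authorization.trim())?.[1];
  if (token === undefined) return false;
  const given = Buffer.from(token, "utf8");
  const expected = Buffer.from(proxyKey, "utf8");
  // timingSafeEqual throws on a length mismatch, so compare lengths first;
  // the constant-time comparison avoids leaking how many leading bytes matched.
  return given.length === expected.length && timingSafeEqual(given, expected);
}

/** Client side: the proxy key goes where an OpenAI key normally would. */
export function proxyHeaders(proxyKey: string): Record<string, string> {
  return {
    Authorization: `Bearer ${proxyKey}`,
    "Content-Type": "application/json",
  };
}
```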

docs/deploy-render.md (ADDED)

@@ -0,0 +1,51 @@
+# Deploy to Render.com
+Render.com offers a free tier that includes 750 hours of compute time per month. This is enough to run a single proxy instance 24/7. Instances shut down after 15 minutes without traffic but start up again automatically when a request is received.
+
+### 1. Create account
+- [Sign up for Render.com](https://render.com/) to create an account and access the dashboard.
+
+### 2. Create a service using a Blueprint
+Render allows you to deploy and automatically configure a repository containing a [render.yaml](../render.yaml) file using its Blueprints feature. This is the easiest way to get started.
+
+- Click the **Blueprints** tab at the top of the dashboard.
+- Click **New Blueprint Instance**.
+- Under **Public Git repository**, enter `https://gitlab.com/khanon/oai-proxy`.
+  - Note that this is not the GitGud repository, but a mirror on GitLab.
+- Click **Continue**.
+- Under **Blueprint Name**, enter a name.
+- Under **Branch**, enter `main`.
+- Click **Apply**.
+
+The service will be created according to the instructions in the `render.yaml` file. Don't wait for it to complete, as it will fail due to missing environment variables. Instead, proceed to the next step.
+
+### 3. Set environment variables
+- Return to the **Dashboard** tab.
+- Click the name of the service you just created, which may show as "Deploy failed".
+- Click the **Environment** tab.
+- Click **Add Secret File**.
+- Under **Filename**, enter `.env`.
+- Under **Contents**, enter all of your environment variables, one per line, in the format `NAME=value`.
+  - For example, `OPENAI_KEY=sk-abc123`.
+- Click **Save Changes**.
+
+The service will automatically rebuild and deploy with the new environment variables. This will take a few minutes. The link to your deployed proxy will appear at the top of the page.
+
+If you want to change the URL, go to the **Settings** tab of your Web Service and click the **Edit** button next to **Name**. You can also set a custom domain, though I haven't tried this yet.
+
+# Optional
+
+## Updating the server
+
+To update your server, go to the page for your Web Service and click **Manual Deploy** > **Deploy latest commit**. This will pull the latest version of the code and redeploy the server.
+
+_If you have trouble with this, you can also try selecting **Clear build cache & deploy** instead from the same menu._
+
+## Adding a greeting message
+
+To show a greeting message on the Server Info page, set the `GREETING_URL` environment variable within Render to the URL of a Markdown file. This URL should point to a raw text file, not an HTML page. You can use a public GitHub Gist or GitLab Snippet for this. For example: `GREETING_URL=https://gitlab.com/-/snippets/2542011/raw/main/greeting.md`. You can change the title of the page by setting the `SERVER_TITLE` environment variable.
+
+Don't set `GREETING_URL` in the `.env` secret file you created earlier; it must be set in Render's environment variables section for it to work correctly.
+
+## Customizing the server
+
+You can customize the server by editing the `.env` configuration you created earlier. Refer to [.env.example](../.env.example) for a list of all available configuration options. Further information can be found in the [config.ts](../src/config.ts) file.
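The secret file from step 3 is a plain list of `NAME=value` lines, and `.env.example` notes that multiple keys can be given as a comma-separated list. The sketch below shows one way such a file could be read. It is hypothetical: the proxy most likely relies on an existing dotenv loader. `parseEnv` and `splitKeys` are made-up names.

```typescript
// Hypothetical sketch of reading NAME=value lines; not the proxy's loader.

/** Parses `NAME=value` lines, skipping blanks, comments and malformed lines. */
export function parseEnv(text: string): Record<string, string> {
  const env: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("="); // split on the FIRST "=" only
    if (eq <= 0) continue; // no name, or no "=" at all
    const name = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    const quote = value.charAt(0);
    // Strip one pair of matching surrounding quotes, as in REJECT_MESSAGE="...".
    if (value.length >= 2 && (quote === '"' || quote === "'") && value.endsWith(quote)) {
      value = value.slice(1, -1);
    }
    env[name] = value;
  }
  return env;
}

/** Splits a comma-separated key list such as OPENAI_KEY into individual keys. */
export function splitKeys(value: string | undefined): string[] {
  return (value ?? "")
    .split(",")
    .map((key) => key.trim())
    .filter((key) => key.length > 0);
}
```

Splitting on the first `=` matters in practice. Base64 values such as `GOOGLE_SHEETS_KEY` can end in `=` padding.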

docs/huggingface-createspace.png (ADDED)

docs/huggingface-dockerfile.png (ADDED)

docs/huggingface-savedockerfile.png (ADDED)

docs/logging-sheets.md (ADDED)

@@ -0,0 +1,61 @@
+# Warning
+**I strongly advise against using this feature with a Google account that you care about.** Depending on the content of the prompts people submit, Google may flag the spreadsheet as containing inappropriate content. This seems to prevent you from sharing that spreadsheet _or any others on the account_. This happened with my throwaway account during testing; the existing shared spreadsheet continues to work but even completely new spreadsheets are flagged and cannot be shared.
+
+I'll be looking into alternative storage backends but you should not use this implementation with a Google account you care about, or even one remotely connected to your main accounts (as Google has a history of linking accounts together via IPs/browser fingerprinting). Use a VPN and completely isolated VM to be safe.
+
+# Configuring Google Sheets Prompt Logging
+This proxy can log incoming prompts and model responses to Google Sheets. Some configuration on the Google side is required to enable this feature. The APIs used are free, but you will need a Google account and a Google Cloud Platform project.
+
+NOTE: Concurrency is not supported. Don't connect two instances of the server to the same spreadsheet or bad things will happen.
+
+## Prerequisites
+- A Google account
+  - **USE A THROWAWAY ACCOUNT!**
+- A Google Cloud Platform project
+
+### 0. Create a Google Cloud Platform Project
+_A Google Cloud Platform project is required to enable programmatic access to Google Sheets. If you already have a project, skip to the next step. You can also see the [Google Cloud Platform documentation](https://developers.google.com/workspace/guides/create-project) for more information._
+
+- Go to the Google Cloud Platform Console and [create a new project](https://console.cloud.google.com/projectcreate).
+
+### 1. Enable the Google Sheets API
+_The Google Sheets API must be enabled for your project. You can also see the [Google Sheets API documentation](https://developers.google.com/sheets/api/quickstart/nodejs) for more information._
+
+- Go to the [Google Sheets API page](https://console.cloud.google.com/apis/library/sheets.googleapis.com) and click **Enable**, then fill in the form to enable the Google Sheets API for your project.
+<!-- TODO: Add screenshot of Enable page and describe filling out the form -->
+
+### 2. Create a Service Account
+_A service account is required to authenticate the proxy to Google Sheets._
+
+- Once the Google Sheets API is enabled, click the **Credentials** tab on the Google Sheets API page.
+- Click **Create credentials** and select **Service account**.
+- Provide a name for the service account and click **Done** (the second and third steps can be skipped).
+
+### 3. Download the Service Account Key
+_Once your service account is created, you'll need to download the key file and include it in the proxy's secrets configuration._
+
+- Click the Service Account you just created in the list of service accounts for the API.
+- Click the **Keys** tab and click **Add key**, then select **Create new key**.
+- Select **JSON** as the key type and click **Create**.
+
+The JSON file will be downloaded to your computer.
+
+### 4. Set the Service Account key as a Secret
+_The JSON key file must be set as a secret in the proxy's configuration. Because files cannot be included in the secrets configuration, you'll need to base64 encode the file's contents and paste the encoded string as the value of the `GOOGLE_SHEETS_KEY` secret._
+
+- Open the JSON key file in a text editor and copy the contents.
+- Visit the [base64 encode/decode tool](https://www.base64encode.org/) and paste the contents into the box, then click **Encode**.
+- Copy the encoded string and paste it as the value of the `GOOGLE_SHEETS_KEY` secret in the deployment's secrets configuration.
+- **WARNING:** Don't reveal this string publicly. The `.env` file is NOT private -- unless you're running the proxy locally, you should not use it to store secrets!
+
+### 5. Create a new spreadsheet and share it with the service account
+_The service account must be given permission to access the logging spreadsheet. Each service account has a unique email address, which can be found in the JSON key file; share the spreadsheet with that email address just as you would share it with another user._
+
+- Open the JSON key file in a text editor and copy the value of the `client_email` field.
+- Open the spreadsheet you want to log to, or create a new one, and click **File > Share**.
+- Paste the service account's email address into the **Add people or groups** field. Ensure the service account has **Editor** permissions, then click **Done**.
+
+### 6. Set the spreadsheet ID as a Secret
+_The spreadsheet ID must be set as a secret in the proxy's configuration. The spreadsheet ID can be found in the URL of the spreadsheet. For example, the spreadsheet ID for `https://docs.google.com/spreadsheets/d/1X2Y3Z/edit#gid=0` is `1X2Y3Z`. The ID isn't necessarily a sensitive value if you intend for the spreadsheet to be public, but it's still recommended to set it as a secret._
+
+- Copy the spreadsheet ID and paste it as the value of the `GOOGLE_SHEETS_SPREADSHEET_ID` secret in the deployment's secrets configuration.
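Steps 4 to 6 can also be done locally, which avoids pasting a private key into a third-party website. The Node.js sketch below does three things:

- prints the address to share the sheet with;
- prints the base64 value for `GOOGLE_SHEETS_KEY`;
- extracts the spreadsheet ID from a URL in the format given in step 6.

The function names are hypothetical, and the sketch assumes the key file contains the `client_email` field mentioned in step 5.

```typescript
import { Buffer } from "node:buffer";
import { readFileSync } from "node:fs";

// Hypothetical helper for steps 4-6; names are illustrative only.

/** Base64-encodes the service-account JSON for the GOOGLE_SHEETS_KEY secret. */
export function encodeKeyJson(json: string): string {
  JSON.parse(json); // fail early if this is not valid JSON
  return Buffer.from(json, "utf8").toString("base64");
}

/** Returns the service account's email address (step 5). */
export function clientEmailOf(json: string): string {
  const parsed = JSON.parse(json) as { client_email?: unknown };
  if (typeof parsed.client_email !== "string") {
    throw new Error("key file has no client_email field");
  }
  return parsed.client_email;
}

/** Extracts the ID from a URL like https://docs.google.com/spreadsheets/d/<ID>/edit#gid=0. */
export function spreadsheetIdFromUrl(url: string): string | null {
  return /\/spreadsheets\/d\/([A-Za-z0-9_-]+)/.exec(url)?.[1] ?? null;
}

// CLI usage: pass the downloaded key file as the first argument.
const keyPath = process.argv[2];
if (keyPath) {
  const json = readFileSync(keyPath, "utf8");
  console.log(`Share the spreadsheet with: ${clientEmailOf(json)}`);
  console.log(`GOOGLE_SHEETS_KEY=${encodeKeyJson(json)}`);
}
```

Run it with a TypeScript runner, for example `npx tsx encode-key.ts path/to/key.json` (the file name is arbitrary). The printed value is as sensitive as the key file itself.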

|

docs/openapi-admin-users.yaml

ADDED

|

@@ -0,0 +1,204 @@


```yaml
# Shat out by GPT-4, I did not check for correctness beyond a cursory glance
openapi: 3.0.0
info:
  version: 1.0.0
  title: User Management API
paths:
  /admin/users:
    get:
      summary: List all users
      operationId: getUsers
      responses:
        "200":
          description: A list of users
          content:
            application/json:
              schema:
                type: object
                properties:
                  users:
                    type: array
                    items:
                      $ref: "#/components/schemas/User"
                  count:
                    type: integer
                    format: int32
    post:
      summary: Create a new user
      operationId: createUser
      responses:
        "200":
          description: The created user's token
          content:
            application/json:
              schema:
                type: object
                properties:
                  token:
                    type: string
    put:
      summary: Bulk upsert users
      operationId: bulkUpsertUsers
      requestBody:
        content:
          application/json:
            schema:
              type: object
              properties:
                users:
                  type: array
                  items:
                    $ref: "#/components/schemas/User"
      responses:
        "200":
          description: The upserted users
          content:
            application/json:
              schema:
                type: object
                properties:
                  upserted_users:
                    type: array
                    items:
                      $ref: "#/components/schemas/User"
                  count:
                    type: integer
                    format: int32
        "400":
          description: Bad request
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string

  /admin/users/{token}:
    get:
      summary: Get a user by token
      operationId: getUser
      parameters:
        - name: token
          in: path
          required: true
          schema:
            type: string
      responses:
        "200":
          description: A user
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/User"
        "404":
          description: Not found
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
    put:
      summary: Update a user by token
      operationId: upsertUser
      parameters:
        - name: token
          in: path
          required: true
          schema:
            type: string
      requestBody:
        content:
          application/json:
            schema:
              $ref: "#/components/schemas/User"
      responses:
        "200":
          description: The updated user
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/User"
        "400":
          description: Bad request
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
    delete:
      summary: Disables the user with the given token
      description: Optionally accepts a `disabledReason` query parameter. Returns the disabled user.
      parameters:
        - in: path
          name: token
          required: true
          schema:
            type: string
          description: The token of the user to disable
        - in: query
          name: disabledReason
          required: false
          schema:
            type: string
          description: The reason for disabling the user
      responses:
        '200':
          description: The disabled user
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/User'
        '400':
          description: Bad request
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
        '404':
          description: Not found
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
components:
  schemas:
    User:
      type: object
      properties:
        token:
          type: string
        ip:
          type: array
          items:
            type: string
        type:
          type: string
          enum: ["normal", "special"]
        promptCount:
          type: integer
          format: int32
        tokenCount:
          type: integer
          format: int32
        createdAt:
          type: integer
          format: int64
        lastUsedAt:
          type: integer
          format: int64
        disabledAt:
          type: integer
          format: int64
        disabledReason:
          type: string
```

docs/user-management.md

ADDED


@@ -0,0 +1,65 @@


# User Management

The proxy supports several different user management strategies. You can choose the one that best fits your needs by setting the `GATEKEEPER` environment variable.

Several of these features require you to set secrets in your environment. If you deploy on Hugging Face Spaces, do not set these in your `.env` file, because that file is public and anyone can see it.

## Table of Contents

- [No user management](#no-user-management-gatekeepernone)
- [Single-password authentication](#single-password-authentication-gatekeeperproxy_key)
- [Per-user authentication](#per-user-authentication-gatekeeperuser_token)
  - [Memory](#memory)
  - [Firebase Realtime Database](#firebase-realtime-database)
    - [Firebase setup instructions](#firebase-setup-instructions)

## No user management (`GATEKEEPER=none`)

This is the default mode. The proxy does not require any authentication to access the server and offers basic IP-based rate limiting and anti-abuse features.

## Single-password authentication (`GATEKEEPER=proxy_key`)

This mode lets you set a single password that must be sent as a bearer token in the `Authorization` header of every request to the server. This is useful if you want to restrict access to the server but don't want to create a separate account for every user.

To set the password, create a `PROXY_KEY` secret in your environment.
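
The sketch below shows the header a client would send in this mode. It assumes the client uses the standard `Authorization: Bearer` scheme described above. The helper name is hypothetical, and `endpointUrl` stands in for whichever proxy endpoint your client targets.

```typescript
// Hypothetical helper: headers for a request to a proxy running with
// GATEKEEPER=proxy_key. `proxyKey` must match the PROXY_KEY secret.
function proxyKeyHeaders(proxyKey: string): Record<string, string> {
  return {
    Authorization: `Bearer ${proxyKey}`,
    "Content-Type": "application/json",
  };
}

// Usage with Node 18's built-in fetch (endpointUrl is a placeholder):
// await fetch(endpointUrl, { method: "POST", headers: proxyKeyHeaders(key), body });
```

Most OpenAI-compatible clients already send the API key they are given in exactly this header. In practice, configuring such a client with `PROXY_KEY` as its API key is usually all that is required.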

## Per-user authentication (`GATEKEEPER=user_token`)

This mode lets you provision a separate bearer token for each user. You can manage users via the `/admin/users` REST API, which itself requires an admin bearer token.

To begin, set the `ADMIN_KEY` secret to a value of your choosing. It is used to authenticate requests to the `/admin/users` REST API.

[You can find an OpenAPI specification for the /admin/users REST API here.](openapi-admin-users.yaml)
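
As an illustration of calling the admin API, the sketch below builds the request for disabling a user via `DELETE /admin/users/{token}` with the optional `disabledReason` query parameter. The route and parameter are as described in the OpenAPI spec. The helper and interface names are hypothetical. The sketch also assumes the proxy is served from the root of `baseUrl`: a leading `/` in the path discards any base path.

```typescript
// Hypothetical types/helper: URL and fetch options for disabling a user.
interface AdminRequest {
  url: string;
  init: { method: string; headers: Record<string, string> };
}

function buildDisableUserRequest(
  baseUrl: string,
  adminKey: string,
  userToken: string,
  disabledReason?: string
): AdminRequest {
  // Encode the token so characters like "/" cannot change the route.
  const url = new URL(`/admin/users/${encodeURIComponent(userToken)}`, baseUrl);
  if (disabledReason !== undefined) {
    url.searchParams.set("disabledReason", disabledReason);
  }
  return {
    url: url.toString(),
    // The admin API expects ADMIN_KEY as a bearer token.
    init: { method: "DELETE", headers: { Authorization: `Bearer ${adminKey}` } },
  };
}

// Usage (Node 18+):
// const { url, init } = buildDisableUserRequest(baseUrl, adminKey, token, "abuse");
// const disabledUser = await (await fetch(url, init)).json();
```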

By default, the proxy stores user data in memory, which means user data is lost when the proxy restarts. You can use the bulk user import/export feature to save and restore user data manually or via a script. The proxy also supports persisting user data to an external data store with some additional configuration.

Below are the supported data stores and their configuration options.

### Memory

This is the default data store (`GATEKEEPER_STORE=memory`). User data is stored in memory and is lost when the proxy restarts. If you want to persist it, you are responsible for downloading and re-uploading user data via the REST API.
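
One way to script that download and re-upload is to save the body returned by `GET /admin/users`, then send its `users` array back as a `PUT /admin/users` bulk upsert. This sketch assumes both shapes in the OpenAPI spec are accurate; the spec notes it was only cursorily checked. The `User` interface mirrors the spec's `User` schema, and the helper names are hypothetical.

```typescript
// Mirrors the User schema in openapi-admin-users.yaml. The spec marks no
// fields as required, so every field is optional here.
interface User {
  token?: string;
  ip?: string[];
  type?: "normal" | "special";
  promptCount?: number;
  tokenCount?: number;
  createdAt?: number;
  lastUsedAt?: number;
  disabledAt?: number;
  disabledReason?: string;
}

// Response body of GET /admin/users, per the spec.
interface ListUsersResponse {
  users: User[];
  count: number;
}

// Turns a saved GET /admin/users response into a PUT /admin/users body,
// so a backup taken before a restart can be re-uploaded afterwards.
function toBulkUpsertBody(backup: ListUsersResponse): string {
  return JSON.stringify({ users: backup.users });
}

// Usage sketch (Node 18+; baseUrl and adminKey are placeholders):
// const headers = { Authorization: `Bearer ${adminKey}`, "Content-Type": "application/json" };
// const backup = (await (await fetch(`${baseUrl}/admin/users`, { headers })).json()) as ListUsersResponse;
// ...restart the proxy...
// await fetch(`${baseUrl}/admin/users`, { method: "PUT", headers, body: toBulkUpsertBody(backup) });
```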

### Firebase Realtime Database

To use Firebase Realtime Database to persist user data, set the following environment variables:

- `GATEKEEPER_STORE`: Set this to `firebase_rtdb`.
- **Secret** `FIREBASE_RTDB_URL`: The URL of your Firebase Realtime Database, e.g. `https://my-project-default-rtdb.firebaseio.com`.
- **Secret** `FIREBASE_KEY`: A base64-encoded service account key for your Firebase project. Refer to the instructions below for how to create this key.

#### Firebase setup instructions

1. Go to the [Firebase console](https://console.firebase.google.com/) and click **Add project**, then follow the prompts to create a new project.
2. From the **Project Overview** page, click **All products** in the left sidebar, then click **Realtime Database**.
3. Click **Create database** and choose **Start in test mode**. Click **Enable**.
   - Test mode rules let anyone who knows the database URL read and write data until the rules expire, so consider tightening them. The proxy authenticates with a service account key, and service account access through the Firebase Admin SDK bypasses security rules, so restrictive rules (or choosing **Start in locked mode** instead) should not affect it.
   - The reference URL for the database is displayed on the page. You will need it later.
4. Click the gear icon next to **Project Overview** in the left sidebar, then click **Project settings**.
5. Click the **Service accounts** tab, then click **Generate new private key**.
6. The downloaded file contains your key. Encode it as base64 and set it as the `FIREBASE_KEY` secret in your environment.
7. Set `FIREBASE_RTDB_URL` to the reference URL of your Firebase Realtime Database, e.g. `https://my-project-default-rtdb.firebaseio.com`.
8. Set `GATEKEEPER_STORE` to `firebase_rtdb` in your environment if you haven't already.
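
For step 6, the sketch below produces the base64 value using Node's `Buffer` API, plus the reverse operation for checking what a secret contains. The helper names are hypothetical. The sketch assumes the proxy decodes `FIREBASE_KEY` as plain (non-URL-safe) base64 of the JSON file. If you use the GNU `base64` command-line tool instead, pass `-w 0` so the output is not wrapped across lines.

```typescript
// Hypothetical helper: converts the downloaded service account key file's
// contents into a base64 string for the FIREBASE_KEY secret.
function encodeFirebaseKey(keyFileContents: string): string {
  // Parse first so a truncated or wrong file fails here, not at proxy startup.
  JSON.parse(keyFileContents);
  return Buffer.from(keyFileContents, "utf8").toString("base64");
}

// Reverse operation, handy for verifying an existing secret's contents.
function decodeFirebaseKey(encoded: string): string {
  return Buffer.from(encoded, "base64").toString("utf8");
}

// Usage: print the value to paste into the FIREBASE_KEY secret.
// import { readFileSync } from "node:fs";
// console.log(encodeFirebaseKey(readFileSync("serviceAccountKey.json", "utf8")));
```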

The proxy attempts to connect to your Firebase Realtime Database at startup and throws an error if it cannot connect. If you see this error, check that your `FIREBASE_RTDB_URL` and `FIREBASE_KEY` secrets are set correctly.

---

Users are loaded from the database, and changes are flushed to it periodically. You can use the `PUT /admin/users` API to bulk import users and force a flush to the database.

package-lock.json

ADDED


The diff for this file is too large to render.


package.json

ADDED


@@ -0,0 +1,49 @@


```json
{
  "name": "oai-reverse-proxy",
  "version": "1.0.0",
  "description": "Reverse proxy for the OpenAI API",
  "scripts": {
    "build:watch": "esbuild src/server.ts --outfile=build/server.js --platform=node --target=es2020 --format=cjs --bundle --sourcemap --watch",
    "build": "tsc",
    "start:dev": "concurrently \"npm run build:watch\" \"npm run start:watch\"",
    "start:dev:tsc": "nodemon --watch src --exec ts-node --transpile-only src/server.ts",
    "start:watch": "nodemon --require source-map-support/register build/server.js",
    "start:replit": "tsc && node build/server.js",
    "start": "node build/server.js",
    "type-check": "tsc --noEmit"
  },
  "engines": {
    "node": ">=18.0.0"
  },
  "author": "",
  "license": "MIT",
  "dependencies": {
    "axios": "^1.3.5",
    "cors": "^2.8.5",
    "dotenv": "^16.0.3",
    "express": "^4.18.2",
    "firebase-admin": "^11.8.0",
    "googleapis": "^117.0.0",
    "http-proxy-middleware": "^3.0.0-beta.1",
    "openai": "^3.2.1",
    "pino": "^8.11.0",
    "pino-http": "^8.3.3",
    "showdown": "^2.1.0",
    "uuid": "^9.0.0",
    "zlib": "^1.0.5",
    "zod": "^3.21.4"
  },
  "devDependencies": {
    "@types/cors": "^2.8.13",
    "@types/express": "^4.17.17",
    "@types/showdown": "^2.0.0",
    "@types/uuid": "^9.0.1",
    "concurrently": "^8.0.1",
    "esbuild": "^0.17.16",
    "esbuild-register": "^3.4.2",
    "nodemon": "^2.0.22",
    "source-map-support": "^0.5.21",
    "ts-node": "^10.9.1",
    "typescript": "^5.0.4"
  }
}
```

render.yaml

ADDED


@@ -0,0 +1,10 @@


```yaml
services:
  - type: web
    name: oai-proxy
    env: docker
    repo: https://gitlab.com/khanon/oai-proxy.git
    region: oregon
    plan: free
    branch: main
    healthCheckPath: /health
    dockerfilePath: ./docker/render/Dockerfile
```

src/admin/routes.ts

ADDED


@@ -0,0 +1,36 @@


```typescript
import { RequestHandler, Router } from "express";
import { config } from "../config";
import { usersRouter } from "./users";

const ADMIN_KEY = config.adminKey;
const failedAttempts = new Map<string, number>();

const adminRouter = Router();

const auth: RequestHandler = (req, res, next) => {
  const token = req.headers.authorization?.slice("Bearer ".length);
  const attempts = failedAttempts.get(req.ip) ?? 0;
  if (attempts > 5) {
    req.log.warn(
      { ip: req.ip, token },
      `Blocked request to admin API due to too many failed attempts`
    );
    return res.status(401).json({ error: "Too many attempts" });
  }

  if (token !== ADMIN_KEY) {
    const newAttempts = attempts + 1;
    failedAttempts.set(req.ip, newAttempts);
    req.log.warn(
      { ip: req.ip, attempts: newAttempts, token },
      `Attempted admin API request with invalid token`
    );
```