Upload 12 files

Browse files- LICENSE +400 -0

- README.md +191 -8

- app (1).py +122 -0

- config.py +59 -0

- dataset.py +224 -0

- gitattributes +5 -0

- index.html +139 -0

- inference_onnx.py +63 -0

- loss.py +145 -0

- main.py +131 -0

- requirements (1).txt +18 -0

- sample.wav +0 -0

LICENSE

ADDED

|

@@ -0,0 +1,400 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

Attribution-NonCommercial 4.0 International

|

| 3 |

+

|

| 4 |

+

=======================================================================

|

| 5 |

+

|

| 6 |

+

Creative Commons Corporation ("Creative Commons") is not a law firm and

|

| 7 |

+

does not provide legal services or legal advice. Distribution of

|

| 8 |

+

Creative Commons public licenses does not create a lawyer-client or

|

| 9 |

+

other relationship. Creative Commons makes its licenses and related

|

| 10 |

+

information available on an "as-is" basis. Creative Commons gives no

|

| 11 |

+

warranties regarding its licenses, any material licensed under their

|

| 12 |

+

terms and conditions, or any related information. Creative Commons

|

| 13 |

+

disclaims all liability for damages resulting from their use to the

|

| 14 |

+

fullest extent possible.

|

| 15 |

+

|

| 16 |

+

Using Creative Commons Public Licenses

|

| 17 |

+

|

| 18 |

+

Creative Commons public licenses provide a standard set of terms and

|

| 19 |

+

conditions that creators and other rights holders may use to share

|

| 20 |

+

original works of authorship and other material subject to copyright

|

| 21 |

+

and certain other rights specified in the public license below. The

|

| 22 |

+

following considerations are for informational purposes only, are not

|

| 23 |

+

exhaustive, and do not form part of our licenses.

|

| 24 |

+

|

| 25 |

+

Considerations for licensors: Our public licenses are

|

| 26 |

+

intended for use by those authorized to give the public

|

| 27 |

+

permission to use material in ways otherwise restricted by

|

| 28 |

+

copyright and certain other rights. Our licenses are

|

| 29 |

+

irrevocable. Licensors should read and understand the terms

|

| 30 |

+

and conditions of the license they choose before applying it.

|

| 31 |

+

Licensors should also secure all rights necessary before

|

| 32 |

+

applying our licenses so that the public can reuse the

|

| 33 |

+

material as expected. Licensors should clearly mark any

|

| 34 |

+

material not subject to the license. This includes other CC-

|

| 35 |

+

licensed material, or material used under an exception or

|

| 36 |

+

limitation to copyright. More considerations for licensors:

|

| 37 |

+

wiki.creativecommons.org/Considerations_for_licensors

|

| 38 |

+

|

| 39 |

+

Considerations for the public: By using one of our public

|

| 40 |

+

licenses, a licensor grants the public permission to use the

|

| 41 |

+

licensed material under specified terms and conditions. If

|

| 42 |

+

the licensor's permission is not necessary for any reason--for

|

| 43 |

+

example, because of any applicable exception or limitation to

|

| 44 |

+

copyright--then that use is not regulated by the license. Our

|

| 45 |

+

licenses grant only permissions under copyright and certain

|

| 46 |

+

other rights that a licensor has authority to grant. Use of

|

| 47 |

+

the licensed material may still be restricted for other

|

| 48 |

+

reasons, including because others have copyright or other

|

| 49 |

+

rights in the material. A licensor may make special requests,

|

| 50 |

+

such as asking that all changes be marked or described.

|

| 51 |

+

Although not required by our licenses, you are encouraged to

|

| 52 |

+

respect those requests where reasonable. More_considerations

|

| 53 |

+

for the public:

|

| 54 |

+

wiki.creativecommons.org/Considerations_for_licensees

|

| 55 |

+

|

| 56 |

+

=======================================================================

|

| 57 |

+

|

| 58 |

+

Creative Commons Attribution-NonCommercial 4.0 International Public

|

| 59 |

+

License

|

| 60 |

+

|

| 61 |

+

By exercising the Licensed Rights (defined below), You accept and agree

|

| 62 |

+

to be bound by the terms and conditions of this Creative Commons

|

| 63 |

+

Attribution-NonCommercial 4.0 International Public License ("Public

|

| 64 |

+

License"). To the extent this Public License may be interpreted as a

|

| 65 |

+

contract, You are granted the Licensed Rights in consideration of Your

|

| 66 |

+

acceptance of these terms and conditions, and the Licensor grants You

|

| 67 |

+

such rights in consideration of benefits the Licensor receives from

|

| 68 |

+

making the Licensed Material available under these terms and

|

| 69 |

+

conditions.

|

| 70 |

+

|

| 71 |

+

Section 1 -- Definitions.

|

| 72 |

+

|

| 73 |

+

a. Adapted Material means material subject to Copyright and Similar

|

| 74 |

+

Rights that is derived from or based upon the Licensed Material

|

| 75 |

+

and in which the Licensed Material is translated, altered,

|

| 76 |

+

arranged, transformed, or otherwise modified in a manner requiring

|

| 77 |

+

permission under the Copyright and Similar Rights held by the

|

| 78 |

+

Licensor. For purposes of this Public License, where the Licensed

|

| 79 |

+

Material is a musical work, performance, or sound recording,

|

| 80 |

+

Adapted Material is always produced where the Licensed Material is

|

| 81 |

+

synched in timed relation with a moving image.

|

| 82 |

+

|

| 83 |

+

b. Adapter's License means the license You apply to Your Copyright

|

| 84 |

+

and Similar Rights in Your contributions to Adapted Material in

|

| 85 |

+

accordance with the terms and conditions of this Public License.

|

| 86 |

+

|

| 87 |

+

c. Copyright and Similar Rights means copyright and/or similar rights

|

| 88 |

+

closely related to copyright including, without limitation,

|

| 89 |

+

performance, broadcast, sound recording, and Sui Generis Database

|

| 90 |

+

Rights, without regard to how the rights are labeled or

|

| 91 |

+

categorized. For purposes of this Public License, the rights

|

| 92 |

+

specified in Section 2(b)(1)-(2) are not Copyright and Similar

|

| 93 |

+

Rights.

|

| 94 |

+

d. Effective Technological Measures means those measures that, in the

|

| 95 |

+

absence of proper authority, may not be circumvented under laws

|

| 96 |

+

fulfilling obligations under Article 11 of the WIPO Copyright

|

| 97 |

+

Treaty adopted on December 20, 1996, and/or similar international

|

| 98 |

+

agreements.

|

| 99 |

+

|

| 100 |

+

e. Exceptions and Limitations means fair use, fair dealing, and/or

|

| 101 |

+

any other exception or limitation to Copyright and Similar Rights

|

| 102 |

+

that applies to Your use of the Licensed Material.

|

| 103 |

+

|

| 104 |

+

f. Licensed Material means the artistic or literary work, database,

|

| 105 |

+

or other material to which the Licensor applied this Public

|

| 106 |

+

License.

|

| 107 |

+

|

| 108 |

+

g. Licensed Rights means the rights granted to You subject to the

|

| 109 |

+

terms and conditions of this Public License, which are limited to

|

| 110 |

+

all Copyright and Similar Rights that apply to Your use of the

|

| 111 |

+

Licensed Material and that the Licensor has authority to license.

|

| 112 |

+

|

| 113 |

+

h. Licensor means the individual(s) or entity(ies) granting rights

|

| 114 |

+

under this Public License.

|

| 115 |

+

|

| 116 |

+

i. NonCommercial means not primarily intended for or directed towards

|

| 117 |

+

commercial advantage or monetary compensation. For purposes of

|

| 118 |

+

this Public License, the exchange of the Licensed Material for

|

| 119 |

+

other material subject to Copyright and Similar Rights by digital

|

| 120 |

+

file-sharing or similar means is NonCommercial provided there is

|

| 121 |

+

no payment of monetary compensation in connection with the

|

| 122 |

+

exchange.

|

| 123 |

+

|

| 124 |

+

j. Share means to provide material to the public by any means or

|

| 125 |

+

process that requires permission under the Licensed Rights, such

|

| 126 |

+

as reproduction, public display, public performance, distribution,

|

| 127 |

+

dissemination, communication, or importation, and to make material

|

| 128 |

+

available to the public including in ways that members of the

|

| 129 |

+

public may access the material from a place and at a time

|

| 130 |

+

individually chosen by them.

|

| 131 |

+

|

| 132 |

+

k. Sui Generis Database Rights means rights other than copyright

|

| 133 |

+

resulting from Directive 96/9/EC of the European Parliament and of

|

| 134 |

+

the Council of 11 March 1996 on the legal protection of databases,

|

| 135 |

+

as amended and/or succeeded, as well as other essentially

|

| 136 |

+

equivalent rights anywhere in the world.

|

| 137 |

+

|

| 138 |

+

l. You means the individual or entity exercising the Licensed Rights

|

| 139 |

+

under this Public License. Your has a corresponding meaning.

|

| 140 |

+

|

| 141 |

+

Section 2 -- Scope.

|

| 142 |

+

|

| 143 |

+

a. License grant.

|

| 144 |

+

|

| 145 |

+

1. Subject to the terms and conditions of this Public License,

|

| 146 |

+

the Licensor hereby grants You a worldwide, royalty-free,

|

| 147 |

+

non-sublicensable, non-exclusive, irrevocable license to

|

| 148 |

+

exercise the Licensed Rights in the Licensed Material to:

|

| 149 |

+

|

| 150 |

+

a. reproduce and Share the Licensed Material, in whole or

|

| 151 |

+

in part, for NonCommercial purposes only; and

|

| 152 |

+

|

| 153 |

+

b. produce, reproduce, and Share Adapted Material for

|

| 154 |

+

NonCommercial purposes only.

|

| 155 |

+

|

| 156 |

+

2. Exceptions and Limitations. For the avoidance of doubt, where

|

| 157 |

+

Exceptions and Limitations apply to Your use, this Public

|

| 158 |

+

License does not apply, and You do not need to comply with

|

| 159 |

+

its terms and conditions.

|

| 160 |

+

|

| 161 |

+

3. Term. The term of this Public License is specified in Section

|

| 162 |

+

6(a).

|

| 163 |

+

|

| 164 |

+

4. Media and formats; technical modifications allowed. The

|

| 165 |

+

Licensor authorizes You to exercise the Licensed Rights in

|

| 166 |

+

all media and formats whether now known or hereafter created,

|

| 167 |

+

and to make technical modifications necessary to do so. The

|

| 168 |

+

Licensor waives and/or agrees not to assert any right or

|

| 169 |

+

authority to forbid You from making technical modifications

|

| 170 |

+

necessary to exercise the Licensed Rights, including

|

| 171 |

+

technical modifications necessary to circumvent Effective

|

| 172 |

+

Technological Measures. For purposes of this Public License,

|

| 173 |

+

simply making modifications authorized by this Section 2(a)

|

| 174 |

+

(4) never produces Adapted Material.

|

| 175 |

+

|

| 176 |

+

5. Downstream recipients.

|

| 177 |

+

|

| 178 |

+

a. Offer from the Licensor -- Licensed Material. Every

|

| 179 |

+

recipient of the Licensed Material automatically

|

| 180 |

+

receives an offer from the Licensor to exercise the

|

| 181 |

+

Licensed Rights under the terms and conditions of this

|

| 182 |

+

Public License.

|

| 183 |

+

|

| 184 |

+

b. No downstream restrictions. You may not offer or impose

|

| 185 |

+

any additional or different terms or conditions on, or

|

| 186 |

+

apply any Effective Technological Measures to, the

|

| 187 |

+

Licensed Material if doing so restricts exercise of the

|

| 188 |

+

Licensed Rights by any recipient of the Licensed

|

| 189 |

+

Material.

|

| 190 |

+

|

| 191 |

+

6. No endorsement. Nothing in this Public License constitutes or

|

| 192 |

+

may be construed as permission to assert or imply that You

|

| 193 |

+

are, or that Your use of the Licensed Material is, connected

|

| 194 |

+

with, or sponsored, endorsed, or granted official status by,

|

| 195 |

+

the Licensor or others designated to receive attribution as

|

| 196 |

+

provided in Section 3(a)(1)(A)(i).

|

| 197 |

+

|

| 198 |

+

b. Other rights.

|

| 199 |

+

|

| 200 |

+

1. Moral rights, such as the right of integrity, are not

|

| 201 |

+

licensed under this Public License, nor are publicity,

|

| 202 |

+

privacy, and/or other similar personality rights; however, to

|

| 203 |

+

the extent possible, the Licensor waives and/or agrees not to

|

| 204 |

+

assert any such rights held by the Licensor to the limited

|

| 205 |

+

extent necessary to allow You to exercise the Licensed

|

| 206 |

+

Rights, but not otherwise.

|

| 207 |

+

|

| 208 |

+

2. Patent and trademark rights are not licensed under this

|

| 209 |

+

Public License.

|

| 210 |

+

|

| 211 |

+

3. To the extent possible, the Licensor waives any right to

|

| 212 |

+

collect royalties from You for the exercise of the Licensed

|

| 213 |

+

Rights, whether directly or through a collecting society

|

| 214 |

+

under any voluntary or waivable statutory or compulsory

|

| 215 |

+

licensing scheme. In all other cases the Licensor expressly

|

| 216 |

+

reserves any right to collect such royalties, including when

|

| 217 |

+

the Licensed Material is used other than for NonCommercial

|

| 218 |

+

purposes.

|

| 219 |

+

|

| 220 |

+

Section 3 -- License Conditions.

|

| 221 |

+

|

| 222 |

+

Your exercise of the Licensed Rights is expressly made subject to the

|

| 223 |

+

following conditions.

|

| 224 |

+

|

| 225 |

+

a. Attribution.

|

| 226 |

+

|

| 227 |

+

1. If You Share the Licensed Material (including in modified

|

| 228 |

+

form), You must:

|

| 229 |

+

|

| 230 |

+

a. retain the following if it is supplied by the Licensor

|

| 231 |

+

with the Licensed Material:

|

| 232 |

+

|

| 233 |

+

i. identification of the creator(s) of the Licensed

|

| 234 |

+

Material and any others designated to receive

|

| 235 |

+

attribution, in any reasonable manner requested by

|

| 236 |

+

the Licensor (including by pseudonym if

|

| 237 |

+

designated);

|

| 238 |

+

|

| 239 |

+

ii. a copyright notice;

|

| 240 |

+

|

| 241 |

+

iii. a notice that refers to this Public License;

|

| 242 |

+

|

| 243 |

+

iv. a notice that refers to the disclaimer of

|

| 244 |

+

warranties;

|

| 245 |

+

|

| 246 |

+

v. a URI or hyperlink to the Licensed Material to the

|

| 247 |

+

extent reasonably practicable;

|

| 248 |

+

|

| 249 |

+

b. indicate if You modified the Licensed Material and

|

| 250 |

+

retain an indication of any previous modifications; and

|

| 251 |

+

|

| 252 |

+

c. indicate the Licensed Material is licensed under this

|

| 253 |

+

Public License, and include the text of, or the URI or

|

| 254 |

+

hyperlink to, this Public License.

|

| 255 |

+

|

| 256 |

+

2. You may satisfy the conditions in Section 3(a)(1) in any

|

| 257 |

+

reasonable manner based on the medium, means, and context in

|

| 258 |

+

which You Share the Licensed Material. For example, it may be

|

| 259 |

+

reasonable to satisfy the conditions by providing a URI or

|

| 260 |

+

hyperlink to a resource that includes the required

|

| 261 |

+

information.

|

| 262 |

+

|

| 263 |

+

3. If requested by the Licensor, You must remove any of the

|

| 264 |

+

information required by Section 3(a)(1)(A) to the extent

|

| 265 |

+

reasonably practicable.

|

| 266 |

+

|

| 267 |

+

4. If You Share Adapted Material You produce, the Adapter's

|

| 268 |

+

License You apply must not prevent recipients of the Adapted

|

| 269 |

+

Material from complying with this Public License.

|

| 270 |

+

|

| 271 |

+

Section 4 -- Sui Generis Database Rights.

|

| 272 |

+

|

| 273 |

+

Where the Licensed Rights include Sui Generis Database Rights that

|

| 274 |

+

apply to Your use of the Licensed Material:

|

| 275 |

+

|

| 276 |

+

a. for the avoidance of doubt, Section 2(a)(1) grants You the right

|

| 277 |

+

to extract, reuse, reproduce, and Share all or a substantial

|

| 278 |

+

portion of the contents of the database for NonCommercial purposes

|

| 279 |

+

only;

|

| 280 |

+

|

| 281 |

+

b. if You include all or a substantial portion of the database

|

| 282 |

+

contents in a database in which You have Sui Generis Database

|

| 283 |

+

Rights, then the database in which You have Sui Generis Database

|

| 284 |

+

Rights (but not its individual contents) is Adapted Material; and

|

| 285 |

+

|

| 286 |

+

c. You must comply with the conditions in Section 3(a) if You Share

|

| 287 |

+

all or a substantial portion of the contents of the database.

|

| 288 |

+

|

| 289 |

+

For the avoidance of doubt, this Section 4 supplements and does not

|

| 290 |

+

replace Your obligations under this Public License where the Licensed

|

| 291 |

+

Rights include other Copyright and Similar Rights.

|

| 292 |

+

|

| 293 |

+

Section 5 -- Disclaimer of Warranties and Limitation of Liability.

|

| 294 |

+

|

| 295 |

+

a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE

|

| 296 |

+

EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS

|

| 297 |

+

AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF

|

| 298 |

+

ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,

|

| 299 |

+

IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,

|

| 300 |

+

WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR

|

| 301 |

+

PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,

|

| 302 |

+

ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT

|

| 303 |

+

KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT

|

| 304 |

+

ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.

|

| 305 |

+

|

| 306 |

+

b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE

|

| 307 |

+

TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,

|

| 308 |

+

NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,

|

| 309 |

+

INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,

|

| 310 |

+

COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR

|

| 311 |

+

USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN

|

| 312 |

+

ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR

|

| 313 |

+

DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR

|

| 314 |

+

IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.

|

| 315 |

+

|

| 316 |

+

c. The disclaimer of warranties and limitation of liability provided

|

| 317 |

+

above shall be interpreted in a manner that, to the extent

|

| 318 |

+

possible, most closely approximates an absolute disclaimer and

|

| 319 |

+

waiver of all liability.

|

| 320 |

+

|

| 321 |

+

Section 6 -- Term and Termination.

|

| 322 |

+

|

| 323 |

+

a. This Public License applies for the term of the Copyright and

|

| 324 |

+

Similar Rights licensed here. However, if You fail to comply with

|

| 325 |

+

this Public License, then Your rights under this Public License

|

| 326 |

+

terminate automatically.

|

| 327 |

+

|

| 328 |

+

b. Where Your right to use the Licensed Material has terminated under

|

| 329 |

+

Section 6(a), it reinstates:

|

| 330 |

+

|

| 331 |

+

1. automatically as of the date the violation is cured, provided

|

| 332 |

+

it is cured within 30 days of Your discovery of the

|

| 333 |

+

violation; or

|

| 334 |

+

|

| 335 |

+

2. upon express reinstatement by the Licensor.

|

| 336 |

+

|

| 337 |

+

For the avoidance of doubt, this Section 6(b) does not affect any

|

| 338 |

+

right the Licensor may have to seek remedies for Your violations

|

| 339 |

+

of this Public License.

|

| 340 |

+

|

| 341 |

+

c. For the avoidance of doubt, the Licensor may also offer the

|

| 342 |

+

Licensed Material under separate terms or conditions or stop

|

| 343 |

+

distributing the Licensed Material at any time; however, doing so

|

| 344 |

+

will not terminate this Public License.

|

| 345 |

+

|

| 346 |

+

d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

|

| 347 |

+

License.

|

| 348 |

+

|

| 349 |

+

Section 7 -- Other Terms and Conditions.

|

| 350 |

+

|

| 351 |

+

a. The Licensor shall not be bound by any additional or different

|

| 352 |

+

terms or conditions communicated by You unless expressly agreed.

|

| 353 |

+

|

| 354 |

+

b. Any arrangements, understandings, or agreements regarding the

|

| 355 |

+

Licensed Material not stated herein are separate from and

|

| 356 |

+

independent of the terms and conditions of this Public License.

|

| 357 |

+

|

| 358 |

+

Section 8 -- Interpretation.

|

| 359 |

+

|

| 360 |

+

a. For the avoidance of doubt, this Public License does not, and

|

| 361 |

+

shall not be interpreted to, reduce, limit, restrict, or impose

|

| 362 |

+

conditions on any use of the Licensed Material that could lawfully

|

| 363 |

+

be made without permission under this Public License.

|

| 364 |

+

|

| 365 |

+

b. To the extent possible, if any provision of this Public License is

|

| 366 |

+

deemed unenforceable, it shall be automatically reformed to the

|

| 367 |

+

minimum extent necessary to make it enforceable. If the provision

|

| 368 |

+

cannot be reformed, it shall be severed from this Public License

|

| 369 |

+

without affecting the enforceability of the remaining terms and

|

| 370 |

+

conditions.

|

| 371 |

+

|

| 372 |

+

c. No term or condition of this Public License will be waived and no

|

| 373 |

+

failure to comply consented to unless expressly agreed to by the

|

| 374 |

+

Licensor.

|

| 375 |

+

|

| 376 |

+

d. Nothing in this Public License constitutes or may be interpreted

|

| 377 |

+

as a limitation upon, or waiver of, any privileges and immunities

|

| 378 |

+

that apply to the Licensor or You, including from the legal

|

| 379 |

+

processes of any jurisdiction or authority.

|

| 380 |

+

|

| 381 |

+

=======================================================================

|

| 382 |

+

|

| 383 |

+

Creative Commons is not a party to its public

|

| 384 |

+

licenses. Notwithstanding, Creative Commons may elect to apply one of

|

| 385 |

+

its public licenses to material it publishes and in those instances

|

| 386 |

+

will be considered the “Licensor.” The text of the Creative Commons

|

| 387 |

+

public licenses is dedicated to the public domain under the CC0 Public

|

| 388 |

+

Domain Dedication. Except for the limited purpose of indicating that

|

| 389 |

+

material is shared under a Creative Commons public license or as

|

| 390 |

+

otherwise permitted by the Creative Commons policies published at

|

| 391 |

+

creativecommons.org/policies, Creative Commons does not authorize the

|

| 392 |

+

use of the trademark "Creative Commons" or any other trademark or logo

|

| 393 |

+

of Creative Commons without its prior written consent including,

|

| 394 |

+

without limitation, in connection with any unauthorized modifications

|

| 395 |

+

to any of its public licenses or any other arrangements,

|

| 396 |

+

understandings, or agreements concerning use of licensed material. For

|

| 397 |

+

the avoidance of doubt, this paragraph does not form part of the

|

| 398 |

+

public licenses.

|

| 399 |

+

|

| 400 |

+

Creative Commons may be contacted at creativecommons.org.

|

README.md

CHANGED

|

@@ -1,13 +1,196 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: streamlit

|

| 7 |

-

|

| 8 |

app_file: app.py

|

| 9 |

-

|

| 10 |

-

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

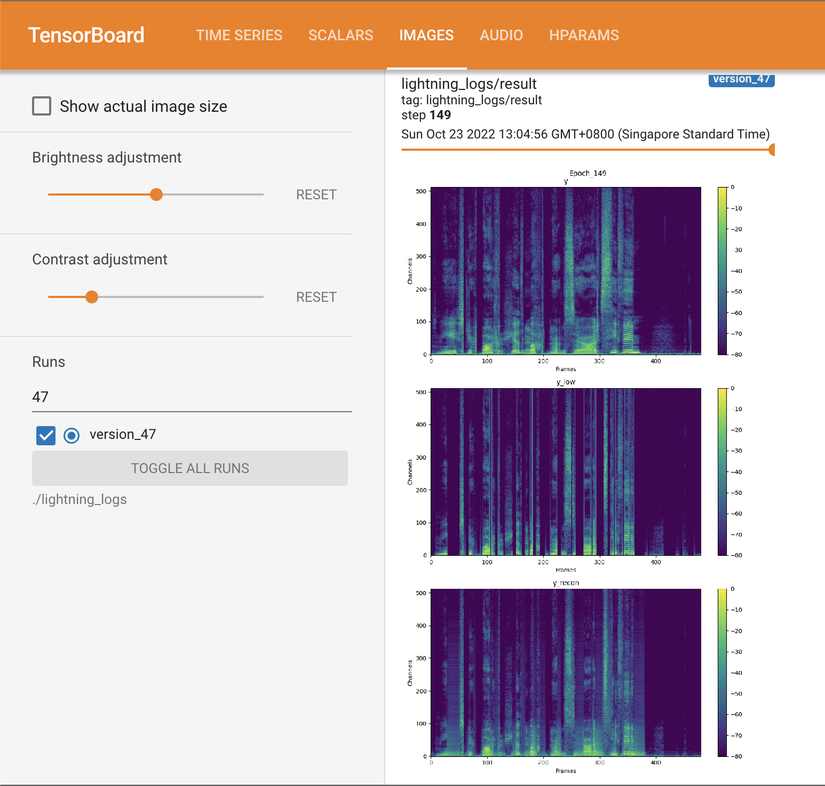

| 2 |

+

title: FRN

|

| 3 |

+

emoji: 📉

|

| 4 |

+

colorFrom: gray

|

| 5 |

+

colorTo: red

|

| 6 |

sdk: streamlit

|

| 7 |

+

pinned: true

|

| 8 |

app_file: app.py

|

| 9 |

+

sdk_version: 1.10.0

|

| 10 |

+

python_version: 3.8

|

| 11 |

---

|

| 12 |

|

| 13 |

+

# FRN - Full-band Recurrent Network Official Implementation

|

| 14 |

+

|

| 15 |

+

**Improving performance of real-time full-band blind packet-loss concealment with predictive network - ICASSP 2023**

|

| 16 |

+

|

| 17 |

+

[](https://arxiv.org/abs/2211.04071)

|

| 18 |

+

[](https://github.com/Crystalsound/FRN/)

|

| 19 |

+

[](https://github.com/Crystalsound/FRN/commits)

|

| 20 |

+

|

| 21 |

+

## License and citation

|

| 22 |

+

|

| 23 |

+

This repository is released under the CC-BY-NC 4.0. license as found in the LICENSE file.

|

| 24 |

+

|

| 25 |

+

If you use our software, please cite as below.

|

| 26 |

+

For future queries, please contact [anh.nguyen@namitech.io](mailto:anh.nguyen@namitech.io).

|

| 27 |

+

|

| 28 |

+

Copyright © 2022 NAMI TECHNOLOGY JSC, Inc. All rights reserved.

|

| 29 |

+

|

| 30 |

+

```

|

| 31 |

+

@misc{Nguyen2022ImprovingPO,

|

| 32 |

+

title={Improving performance of real-time full-band blind packet-loss concealment with predictive network},

|

| 33 |

+

author={Viet-Anh Nguyen and Anh H. T. Nguyen and Andy W. H. Khong},

|

| 34 |

+

year={2022},

|

| 35 |

+

eprint={2211.04071},

|

| 36 |

+

archivePrefix={arXiv},

|

| 37 |

+

primaryClass={cs.LG}

|

| 38 |

+

}

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

# 1. Results

|

| 42 |

+

|

| 43 |

+

Our model achieved a significant gain over baselines. Here, we include the predicted packet loss concealment

|

| 44 |

+

mean-opinion-score (PLCMOS) using Microsoft's [PLCMOS](https://github.com/microsoft/PLC-Challenge/tree/main/PLCMOS)

|

| 45 |

+

service. Please refer to our paper for more benchmarks.

|

| 46 |

+

|

| 47 |

+

| Model | PLCMOS |

|

| 48 |

+

|---------|-----------|

|

| 49 |

+

| Input | 3.517 |

|

| 50 |

+

| tPLC | 3.463 |

|

| 51 |

+

| TFGAN | 3.645 |

|

| 52 |

+

| **FRN** | **3.655** |

|

| 53 |

+

|

| 54 |

+

We also provide several audio samples in [https://crystalsound.github.io/FRN/](https://crystalsound.github.io/FRN/) for

|

| 55 |

+

comparison.

|

| 56 |

+

|

| 57 |

+

# 2. Installation

|

| 58 |

+

|

| 59 |

+

## Setup

|

| 60 |

+

|

| 61 |

+

### Clone the repo

|

| 62 |

+

|

| 63 |

+

```

|

| 64 |

+

$ git clone https://github.com/Crystalsound/FRN.git

|

| 65 |

+

$ cd FRN

|

| 66 |

+

```

|

| 67 |

+

|

| 68 |

+

### Install dependencies

|

| 69 |

+

|

| 70 |

+

* Our implementation requires the `libsndfile` libraries for the Python packages `soundfile`. On Ubuntu, they can be

|

| 71 |

+

easily installed using `apt-get`:

|

| 72 |

+

```

|

| 73 |

+

$ apt-get update && apt-get install libsndfile-dev

|

| 74 |

+

```

|

| 75 |

+

* Create a Python 3.8 environment. Conda is recommended:

|

| 76 |

+

```

|

| 77 |

+

$ conda create -n frn python=3.8

|

| 78 |

+

$ conda activate frn

|

| 79 |

+

```

|

| 80 |

+

|

| 81 |

+

* Install the requirements:

|

| 82 |

+

```

|

| 83 |

+

$ pip install -r requirements.txt

|

| 84 |

+

```

|

| 85 |

+

|

| 86 |

+

# 3. Data preparation

|

| 87 |

+

|

| 88 |

+

In our paper, we conduct experiments on the [VCTK](https://datashare.ed.ac.uk/handle/10283/3443) dataset.

|

| 89 |

+

|

| 90 |

+

* Download and extract the datasets:

|

| 91 |

+

```

|

| 92 |

+

$ wget http://www.udialogue.org/download/VCTK-Corpus.tar.gz -O data/vctk/VCTK-Corpus.tar.gz

|

| 93 |

+

$ tar -zxvf data/vctk/VCTK-Corpus.tar.gz -C data/vctk/ --strip-components=1

|

| 94 |

+

```

|

| 95 |

+

|

| 96 |

+

After extracting the datasets, your `./data` directory should look like this:

|

| 97 |

+

|

| 98 |

+

```

|

| 99 |

+

.

|

| 100 |

+

|--data

|

| 101 |

+

|--vctk

|

| 102 |

+

|--wav48

|

| 103 |

+

|--p225

|

| 104 |

+

|--p225_001.wav

|

| 105 |

+

...

|

| 106 |

+

|--train.txt

|

| 107 |

+

|--test.txt

|

| 108 |

+

```

|

| 109 |

+

* In order to load the datasets, text files that contain training and testing audio paths are required. We have

|

| 110 |

+

prepared `train.txt` and `test.txt` files in `./data/vctk` directory.

|

| 111 |

+

|

| 112 |

+

# 4. Run the code

|

| 113 |

+

|

| 114 |

+

## Configuration

|

| 115 |

+

|

| 116 |

+

`config.py` is the most important file. Here, you can find all the configurations related to experiment setups,

|

| 117 |

+

datasets, models, training, testing, etc. Although the config file has been explained thoroughly, we recommend reading

|

| 118 |

+

our paper to fully understand each parameter.

|

| 119 |

+

|

| 120 |

+

## Training

|

| 121 |

+

|

| 122 |

+

* Adjust training hyperparameters in `config.py`. We provide the pretrained predictor in `lightning_logs/predictor` as stated in our paper. The FRN model can be trained entirely from scratch and will work as well. In this case, initiate `PLCModel(..., pred_ckpt_path=None)`.

|

| 123 |

+

|

| 124 |

+

* Run `main.py`:

|

| 125 |

+

```

|

| 126 |

+

$ python main.py --mode train

|

| 127 |

+

```

|

| 128 |

+

* Each run will create a version in `./lightning_logs`, where the model checkpoint and hyperparameters are saved. In

|

| 129 |

+

case you want to continue training from one of these versions, just set the argument `--version` of the above command

|

| 130 |

+

to your desired version number. For example:

|

| 131 |

+

```

|

| 132 |

+

# resume from version 0

|

| 133 |

+

$ python main.py --mode train --version 0

|

| 134 |

+

```

|

| 135 |

+

* To monitor the training curves as well as inspect model output visualization, run the tensorboard:

|

| 136 |

+

```

|

| 137 |

+

$ tensorboard --logdir=./lightning_logs --bind_all

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

## Evaluation

|

| 142 |

+

|

| 143 |

+

In our paper, we evaluated with 2 masking methods: simulation using Markov Chain and employing real traces in PLC

|

| 144 |

+

Challenge.

|

| 145 |

+

|

| 146 |

+

* Get the blind test set with loss traces:

|

| 147 |

+

```

|

| 148 |

+

$ wget http://plcchallenge2022pub.blob.core.windows.net/plcchallengearchive/blind.tar.gz

|

| 149 |

+

$ tar -xvf blind.tar.gz -C test_samples

|

| 150 |

+

```

|

| 151 |

+

* Modify `config.py` to change evaluation setup if necessary.

|

| 152 |

+

* Run `main.py` with a version number to be evaluated:

|

| 153 |

+

```

|

| 154 |

+

$ python main.py --mode eval --version 0

|

| 155 |

+

```

|

| 156 |

+

During the evaluation, several output samples are saved to `CONFIG.LOG.sample_path` for sanity testing.

|

| 157 |

+

|

| 158 |

+

## Configure a new dataset

|

| 159 |

+

|

| 160 |

+

Our implementation currently works with the VCTK dataset but can be easily extensible to a new one.

|

| 161 |

+

|

| 162 |

+

* Firstly, you need to prepare `train.txt` and `test.txt`. See `./data/vctk/train.txt` and `./data/vctk/test.txt` for

|

| 163 |

+

example.

|

| 164 |

+

* Secondly, add a new dictionary to `CONFIG.DATA.data_dir`:

|

| 165 |

+

```

|

| 166 |

+

{

|

| 167 |

+

'root': 'path/to/data/directory',

|

| 168 |

+

'train': 'path/to/train.txt',

|

| 169 |

+

'test': 'path/to/test.txt'

|

| 170 |

+

}

|

| 171 |

+

```

|

| 172 |

+

**Important:** Make sure each line in `train.txt` and `test.txt` joining with `'root'` is a valid path to its

|

| 173 |

+

corresponding audio file.

|

| 174 |

+

|

| 175 |

+

# 5. Audio generation

|

| 176 |

+

|

| 177 |

+

* In order to generate output audios, you need to modify `CONFIG.TEST.in_dir` to your input directory.

|

| 178 |

+

* Run `main.py`:

|

| 179 |

+

```

|

| 180 |

+

python main.py --mode test --version 0

|

| 181 |

+

```

|

| 182 |

+

The generated audios are saved to `CONFIG.TEST.out_dir`.

|

| 183 |

+

|

| 184 |

+

## ONNX inferencing

|

| 185 |

+

We provide ONNX inferencing scripts and the best ONNX model (converted from the best checkpoint)

|

| 186 |

+

at `lightning_logs/best_model.onnx`.

|

| 187 |

+

* Convert a checkpoint to an ONNX model:

|

| 188 |

+

```

|

| 189 |

+

python main.py --mode onnx --version 0

|

| 190 |

+

```

|

| 191 |

+

The converted ONNX model will be saved to `lightning_logs/version_0/checkpoints`.

|

| 192 |

+

* Put test audios in `test_samples` and inference with the converted ONNX model (see `inference_onnx.py` for more

|

| 193 |

+

details):

|

| 194 |

+

```

|

| 195 |

+

python inference_onnx.py --onnx_path lightning_logs/version_0/frn.onnx

|

| 196 |

+

```

|

app (1).py

ADDED

|

@@ -0,0 +1,122 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

import librosa

|

| 3 |

+

import soundfile as sf

|

| 4 |

+

import librosa.display

|

| 5 |

+

from config import CONFIG

|

| 6 |

+

import torch

|

| 7 |

+

from dataset import MaskGenerator

|

| 8 |

+

import onnxruntime, onnx

|

| 9 |

+

import matplotlib.pyplot as plt

|

| 10 |

+

import numpy as np

|

| 11 |

+

from matplotlib.backends.backend_agg import FigureCanvasAgg as FigureCanvas

|

| 12 |

+

|

| 13 |

+

@st.cache

|

| 14 |

+

def load_model():

|

| 15 |

+

path = 'lightning_logs/version_0/checkpoints/frn.onnx'

|

| 16 |

+

onnx_model = onnx.load(path)

|

| 17 |

+

options = onnxruntime.SessionOptions()

|

| 18 |

+

options.intra_op_num_threads = 2

|

| 19 |

+

options.graph_optimization_level = onnxruntime.GraphOptimizationLevel.ORT_ENABLE_ALL

|

| 20 |

+

session = onnxruntime.InferenceSession(path, options)

|

| 21 |

+

input_names = [x.name for x in session.get_inputs()]

|

| 22 |

+

output_names = [x.name for x in session.get_outputs()]

|

| 23 |

+

return session, onnx_model, input_names, output_names

|

| 24 |

+

|

| 25 |

+

def inference(re_im, session, onnx_model, input_names, output_names):

|

| 26 |

+

inputs = {input_names[i]: np.zeros([d.dim_value for d in _input.type.tensor_type.shape.dim],

|

| 27 |

+

dtype=np.float32)

|

| 28 |

+

for i, _input in enumerate(onnx_model.graph.input)

|

| 29 |

+

}

|

| 30 |

+

|

| 31 |

+

output_audio = []

|

| 32 |

+

for t in range(re_im.shape[0]):

|

| 33 |

+

inputs[input_names[0]] = re_im[t]

|

| 34 |

+

out, prev_mag, predictor_state, mlp_state = session.run(output_names, inputs)

|

| 35 |

+

inputs[input_names[1]] = prev_mag

|

| 36 |

+

inputs[input_names[2]] = predictor_state

|

| 37 |

+

inputs[input_names[3]] = mlp_state

|

| 38 |

+

output_audio.append(out)

|

| 39 |

+

|

| 40 |

+

output_audio = torch.tensor(np.concatenate(output_audio, 0))

|

| 41 |

+

output_audio = output_audio.permute(1, 0, 2).contiguous()

|

| 42 |

+

output_audio = torch.view_as_complex(output_audio)

|

| 43 |

+

output_audio = torch.istft(output_audio, window, stride, window=hann)

|

| 44 |

+

return output_audio.numpy()

|

| 45 |

+

|

| 46 |

+

def visualize(hr, lr, recon):

|

| 47 |

+

sr = CONFIG.DATA.sr

|

| 48 |

+

window_size = 1024

|

| 49 |

+

window = np.hanning(window_size)

|

| 50 |

+

|

| 51 |

+

stft_hr = librosa.core.spectrum.stft(hr, n_fft=window_size, hop_length=512, window=window)

|

| 52 |

+

stft_hr = 2 * np.abs(stft_hr) / np.sum(window)

|

| 53 |

+

|

| 54 |

+

stft_lr = librosa.core.spectrum.stft(lr, n_fft=window_size, hop_length=512, window=window)

|

| 55 |

+

stft_lr = 2 * np.abs(stft_lr) / np.sum(window)

|

| 56 |

+

|

| 57 |

+

stft_recon = librosa.core.spectrum.stft(recon, n_fft=window_size, hop_length=512, window=window)

|

| 58 |

+

stft_recon = 2 * np.abs(stft_recon) / np.sum(window)

|

| 59 |

+

|

| 60 |

+

fig, (ax1, ax2, ax3) = plt.subplots(3, 1, sharey=True, sharex=True, figsize=(16, 10))

|

| 61 |

+

ax1.title.set_text('Target signal')

|

| 62 |

+

ax2.title.set_text('Lossy signal')

|

| 63 |

+

ax3.title.set_text('Enhanced signal')

|

| 64 |

+

|

| 65 |

+

canvas = FigureCanvas(fig)

|

| 66 |

+

p = librosa.display.specshow(librosa.amplitude_to_db(stft_hr), ax=ax1, y_axis='linear', x_axis='time', sr=sr)

|

| 67 |

+

p = librosa.display.specshow(librosa.amplitude_to_db(stft_lr), ax=ax2, y_axis='linear', x_axis='time', sr=sr)

|

| 68 |

+

p = librosa.display.specshow(librosa.amplitude_to_db(stft_recon), ax=ax3, y_axis='linear', x_axis='time', sr=sr)

|

| 69 |

+

return fig

|

| 70 |

+

|

| 71 |

+

packet_size = CONFIG.DATA.EVAL.packet_size

|

| 72 |

+

window = CONFIG.DATA.window_size

|

| 73 |

+

stride = CONFIG.DATA.stride

|

| 74 |

+

|

| 75 |

+

title = 'Packet Loss Concealment'

|

| 76 |

+

st.set_page_config(page_title=title, page_icon=":sound:")

|

| 77 |

+

st.title(title)

|

| 78 |

+

|

| 79 |

+

st.subheader('Upload audio')

|

| 80 |

+

uploaded_file = st.file_uploader("Upload your audio file (.wav) at 48 kHz sampling rate")

|

| 81 |

+

|

| 82 |

+

is_file_uploaded = uploaded_file is not None

|

| 83 |

+

if not is_file_uploaded:

|

| 84 |

+

uploaded_file = 'sample.wav'

|

| 85 |

+

|

| 86 |

+

target, sr = librosa.load(uploaded_file, sr=48000)

|

| 87 |

+

target = target[:packet_size * (len(target) // packet_size)]

|

| 88 |

+

|

| 89 |

+

st.text('Audio sample')

|

| 90 |

+

st.audio(uploaded_file)

|

| 91 |

+

|

| 92 |

+

st.subheader('Choose expected packet loss rate')

|

| 93 |

+

slider = [st.slider("Expected loss rate for Markov Chain loss generator", 0, 100, step=1)]

|

| 94 |

+

loss_percent = float(slider[0])/100

|

| 95 |

+

mask_gen = MaskGenerator(is_train=False, probs=[(1 - loss_percent, loss_percent)])

|

| 96 |

+

lossy_input = target.copy().reshape(-1, packet_size)

|

| 97 |

+

mask = mask_gen.gen_mask(len(lossy_input), seed=0)[:, np.newaxis]

|

| 98 |

+

lossy_input *= mask

|

| 99 |

+

lossy_input = lossy_input.reshape(-1)

|

| 100 |

+

hann = torch.sqrt(torch.hann_window(window))

|

| 101 |

+

lossy_input_tensor = torch.tensor(lossy_input)

|

| 102 |

+

re_im = torch.stft(lossy_input_tensor, window, stride, window=hann, return_complex=False).permute(1, 0, 2).unsqueeze(

|

| 103 |

+

1).numpy().astype(np.float32)

|

| 104 |

+

session, onnx_model, input_names, output_names = load_model()

|

| 105 |

+

|

| 106 |

+

if st.button('Conceal lossy audio!'):

|

| 107 |

+

with st.spinner('Please wait for completion'):

|

| 108 |

+

output = inference(re_im, session, onnx_model, input_names, output_names)

|

| 109 |

+

|

| 110 |

+

st.subheader('Visualization')

|

| 111 |

+

fig = visualize(target, lossy_input, output)

|

| 112 |

+

st.pyplot(fig)

|

| 113 |

+

st.success('Done!')

|

| 114 |

+

sf.write('target.wav', target, sr)

|

| 115 |

+

sf.write('lossy.wav', lossy_input, sr)

|

| 116 |

+

sf.write('enhanced.wav', output, sr)

|

| 117 |

+

st.text('Original audio')

|

| 118 |

+

st.audio('target.wav')

|

| 119 |

+

st.text('Lossy audio')

|

| 120 |

+

st.audio('lossy.wav')

|

| 121 |

+

st.text('Enhanced audio')

|

| 122 |

+

st.audio('enhanced.wav')

|

config.py

ADDED

|

@@ -0,0 +1,59 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

class CONFIG:

|

| 2 |

+

gpus = "0,1" # List of gpu devices

|

| 3 |

+

|

| 4 |

+

class TRAIN:

|

| 5 |

+

batch_size = 90 # number of audio files per batch

|

| 6 |

+

lr = 1e-4 # learning rate

|

| 7 |

+

epochs = 150 # max training epochs

|

| 8 |

+

workers = 12 # number of dataloader workers

|

| 9 |

+

val_split = 0.1 # validation set proportion

|

| 10 |

+

clipping_val = 1.0 # gradient clipping value

|

| 11 |

+

patience = 3 # learning rate scheduler's patience

|

| 12 |

+

factor = 0.5 # learning rate reduction factor

|

| 13 |

+

|

| 14 |

+

# Model config

|

| 15 |

+

class MODEL:

|

| 16 |

+

enc_layers = 4 # number of MLP blocks in the encoder

|

| 17 |

+

enc_in_dim = 384 # dimension of the input projection layer in the encoder

|

| 18 |

+

enc_dim = 768 # dimension of the MLP blocks

|

| 19 |

+

pred_dim = 512 # dimension of the LSTM in the predictor

|

| 20 |

+

pred_layers = 1 # number of LSTM layers in the predictor

|

| 21 |

+

|

| 22 |

+

# Dataset config

|

| 23 |

+

class DATA:

|

| 24 |

+

dataset = 'vctk' # dataset to use

|

| 25 |

+

'''

|

| 26 |

+

Dictionary that specifies paths to root directories and train/test text files of each datasets.

|

| 27 |

+

'root' is the path to the dataset and each line of the train.txt/test.txt files should contains the path to an

|

| 28 |

+

audio file from 'root'.

|

| 29 |

+

'''

|

| 30 |

+

data_dir = {'vctk': {'root': 'data/vctk/wav48',

|

| 31 |

+

'train': "data/vctk/train.txt",

|

| 32 |

+

'test': "data/vctk/test.txt"},

|

| 33 |

+

}

|

| 34 |

+

|

| 35 |

+

assert dataset in data_dir.keys(), 'Unknown dataset.'

|

| 36 |

+

sr = 48000 # audio sampling rate

|

| 37 |

+

audio_chunk_len = 122880 # size of chunk taken in each audio files

|

| 38 |

+

window_size = 960 # window size of the STFT operation, equivalent to packet size

|

| 39 |

+

stride = 480 # stride of the STFT operation

|

| 40 |

+

|

| 41 |

+

class TRAIN:

|

| 42 |

+

packet_sizes = [256, 512, 768, 960, 1024,

|

| 43 |

+

1536] # packet sizes for training. All sizes should be divisible by 'audio_chunk_len'

|

| 44 |

+

transition_probs = ((0.9, 0.1), (0.5, 0.1), (0.5, 0.5)) # list of trainsition probs for Markow Chain

|

| 45 |

+

|

| 46 |

+

class EVAL:

|

| 47 |

+

packet_size = 960 # 20ms

|

| 48 |

+

transition_probs = [(0.9, 0.1)] # (0.9, 0.1) ~ 10%; (0.8, 0.2) ~ 20%; (0.6, 0.4) ~ 40%

|

| 49 |

+

masking = 'gen' # whether using simulation or real traces from Microsoft to generate masks

|

| 50 |

+

assert masking in ['gen', 'real']

|

| 51 |

+

trace_path = 'test_samples/blind/lossy_singals' # must be clarified if masking = 'real'

|

| 52 |