Upload 253 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +4 -0

- langchain-ChatGLM-master/.github/ISSUE_TEMPLATE/bug_report.md +35 -0

- langchain-ChatGLM-master/.github/ISSUE_TEMPLATE/feature_request.md +23 -0

- langchain-ChatGLM-master/.gitignore +177 -0

- langchain-ChatGLM-master/CONTRIBUTING.md +22 -0

- langchain-ChatGLM-master/Dockerfile +36 -0

- langchain-ChatGLM-master/Dockerfile-cuda +14 -0

- langchain-ChatGLM-master/LICENSE +201 -0

- langchain-ChatGLM-master/README.md +235 -0

- langchain-ChatGLM-master/README_en.md +247 -0

- langchain-ChatGLM-master/agent/__init__.py +1 -0

- langchain-ChatGLM-master/agent/agent模式实验.ipynb +747 -0

- langchain-ChatGLM-master/agent/agent模式测试.ipynb +557 -0

- langchain-ChatGLM-master/agent/bing_search.py +19 -0

- langchain-ChatGLM-master/agent/custom_agent.py +128 -0

- langchain-ChatGLM-master/agent/custom_search.py +46 -0

- langchain-ChatGLM-master/api.py +465 -0

- langchain-ChatGLM-master/chains/dialogue_answering/__init__.py +7 -0

- langchain-ChatGLM-master/chains/dialogue_answering/__main__.py +36 -0

- langchain-ChatGLM-master/chains/dialogue_answering/base.py +99 -0

- langchain-ChatGLM-master/chains/dialogue_answering/prompts.py +22 -0

- langchain-ChatGLM-master/chains/local_doc_qa.py +341 -0

- langchain-ChatGLM-master/chains/modules/embeddings.py +34 -0

- langchain-ChatGLM-master/chains/modules/vectorstores.py +121 -0

- langchain-ChatGLM-master/chains/text_load.py +52 -0

- langchain-ChatGLM-master/cli.bat +2 -0

- langchain-ChatGLM-master/cli.py +86 -0

- langchain-ChatGLM-master/cli.sh +2 -0

- langchain-ChatGLM-master/cli_demo.py +66 -0

- langchain-ChatGLM-master/configs/model_config.py +176 -0

- langchain-ChatGLM-master/docs/API.md +1042 -0

- langchain-ChatGLM-master/docs/CHANGELOG.md +32 -0

- langchain-ChatGLM-master/docs/FAQ.md +179 -0

- langchain-ChatGLM-master/docs/INSTALL.md +47 -0

- langchain-ChatGLM-master/docs/Issue-with-Installing-Packages-Using-pip-in-Anaconda.md +114 -0

- langchain-ChatGLM-master/docs/StartOption.md +76 -0

- langchain-ChatGLM-master/docs/cli.md +49 -0

- langchain-ChatGLM-master/docs/fastchat.md +24 -0

- langchain-ChatGLM-master/docs/在Anaconda中使用pip安装包无效问题.md +125 -0

- langchain-ChatGLM-master/img/langchain+chatglm.png +3 -0

- langchain-ChatGLM-master/img/langchain+chatglm2.png +0 -0

- langchain-ChatGLM-master/img/qr_code_32.jpg +0 -0

- langchain-ChatGLM-master/img/vue_0521_0.png +0 -0

- langchain-ChatGLM-master/img/vue_0521_1.png +3 -0

- langchain-ChatGLM-master/img/vue_0521_2.png +3 -0

- langchain-ChatGLM-master/img/webui_0419.png +0 -0

- langchain-ChatGLM-master/img/webui_0510_0.png +0 -0

- langchain-ChatGLM-master/img/webui_0510_1.png +0 -0

- langchain-ChatGLM-master/img/webui_0510_2.png +0 -0

- langchain-ChatGLM-master/img/webui_0521_0.png +0 -0

.gitattributes

CHANGED

    @@ -32,3 +32,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +langchain-ChatGLM-master/img/langchain+chatglm.png filter=lfs diff=lfs merge=lfs -text
    +langchain-ChatGLM-master/img/vue_0521_1.png filter=lfs diff=lfs merge=lfs -text
    +langchain-ChatGLM-master/img/vue_0521_2.png filter=lfs diff=lfs merge=lfs -text
    +langchain-ChatGLM-master/knowledge_base/samples/vector_store/index.faiss filter=lfs diff=lfs merge=lfs -text
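The four added rules route three images and one FAISS index through Git LFS. The file list above shows exactly those three images as `+3 -0`, which is consistent with three-line LFS pointer files (version, oid, size) being committed in place of the image bytes. The sketch below is a rough, stdlib-only way to check which paths the rules in this hunk would cover. It is a simplification and not git's matcher: a pattern without `/` is compared with the basename, a pattern with `/` with the whole repository-relative path, and `fnmatch`'s `*` can cross `/`, unlike git's. `LFS_PATTERNS` and `is_lfs_tracked` are illustrative names, not part of the repository.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath
from typing import Sequence

# Only the rules visible in the .gitattributes hunk above (saved_model/**/* is
# left out because fnmatch does not reproduce git's "**" semantics).
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "langchain-ChatGLM-master/img/langchain+chatglm.png",
    "langchain-ChatGLM-master/img/vue_0521_1.png",
    "langchain-ChatGLM-master/img/vue_0521_2.png",
    "langchain-ChatGLM-master/knowledge_base/samples/vector_store/index.faiss",
)


def is_lfs_tracked(path: str, patterns: Sequence[str] = LFS_PATTERNS) -> bool:
    """Approximate gitattributes matching for a repository-relative POSIX path.

    Assumption: patterns without "/" match the basename at any depth; patterns
    with "/" match the full path from the repository root. Matching is
    case-sensitive, as git is by default on Linux.
    """
    basename = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else basename
        if fnmatchcase(target, pattern):
            return True
    return False


if __name__ == "__main__":
    for candidate in (
        "langchain-ChatGLM-master/img/vue_0521_1.png",
        "langchain-ChatGLM-master/img/vue_0521_0.png",
        "runs/events.out.tfevents.1680000000",
    ):
        print(candidate, is_lfs_tracked(candidate))
```

Inside an actual clone, `git check-attr filter -- <path>` reports the attribute git itself resolves and is the authoritative check.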

langchain-ChatGLM-master/.github/ISSUE_TEMPLATE/bug_report.md

ADDED

    @@ -0,0 +1,35 @@
    +---
    +name: Bug 报告 / Bug Report
    +about: 报告项目中的错误或问题 / Report errors or issues in the project
    +title: "[BUG] 简洁阐述问题 / Concise description of the issue"
    +labels: bug
    +assignees: ''
    +
    +---
    +
    +**问题描述 / Problem Description**
    +用简洁明了的语言描述这个问题 / Describe the problem in a clear and concise manner.
    +
    +**复现问题的步骤 / Steps to Reproduce**
    +1. 执行 '...' / Run '...'
    +2. 点击 '...' / Click '...'
    +3. 滚动到 '...' / Scroll to '...'
    +4. 问题出现 / Problem occurs
    +
    +**预期的结果 / Expected Result**
    +描述应该出现的结果 / Describe the expected result.
    +
    +**实际结果 / Actual Result**
    +描述实际发生的结果 / Describe the actual result.
    +
    +**环境信息 / Environment Information**
    +- langchain-ChatGLM 版本/commit 号:(例如:v1.0.0 或 commit 123456) / langchain-ChatGLM version/commit number: (e.g., v1.0.0 or commit 123456)
    +- 是否使用 Docker 部署(是/否):是 / Is Docker deployment used (yes/no): yes
    +- 使用的模型(ChatGLM-6B / ClueAI/ChatYuan-large-v2 等):ChatGLM-6B / Model used (ChatGLM-6B / ClueAI/ChatYuan-large-v2, etc.): ChatGLM-6B
    +- 使用的 Embedding 模型(GanymedeNil/text2vec-large-chinese 等):GanymedeNil/text2vec-large-chinese / Embedding model used (GanymedeNil/text2vec-large-chinese, etc.): GanymedeNil/text2vec-large-chinese
    +- 操作系统及版本 / Operating system and version:
    +- Python 版本 / Python version:
    +- 其他相关环境信息 / Other relevant environment information:
    +
    +**附加信息 / Additional Information**
    +添加与问题相关的任何其他信息 / Add any other information related to the issue.
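The template's "Environment Information" section asks for the operating system, the Python version, and other relevant details. A minimal sketch of a helper a reporter could run to fill those three bullets from the standard library; it is not shipped with the repository, and the names `installed_versions`, `environment_lines`, and the default package list (`langchain`, `torch`, `transformers`) are illustrative assumptions. Model names, the embedding model, and the Docker answer still have to be filled in by hand.

```python
import platform
from importlib import metadata
from typing import Dict, Iterable, List

# Packages to report are an illustrative assumption; adjust to what the bug involves.
DEFAULT_PACKAGES = ("langchain", "torch", "transformers")


def installed_versions(packages: Iterable[str]) -> Dict[str, str]:
    """Map each package name to its installed version, or "not installed"."""
    versions: Dict[str, str] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return versions


def environment_lines(packages: Iterable[str] = DEFAULT_PACKAGES) -> List[str]:
    """Build the OS / Python / other-environment bullets of the bug report template."""
    extras = ", ".join(f"{name} {ver}" for name, ver in installed_versions(packages).items())
    return [
        f"- Operating system and version: {platform.platform()}",
        f"- Python version: {platform.python_version()}",
        f"- Other relevant environment information: {extras}",
    ]


if __name__ == "__main__":
    print("\n".join(environment_lines()))
```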

langchain-ChatGLM-master/.github/ISSUE_TEMPLATE/feature_request.md

ADDED

    @@ -0,0 +1,23 @@
    +---
    +name: 功能请求 / Feature Request
    +about: 为项目提出新功能或建议 / Propose new features or suggestions for the project
    +title: "[FEATURE] 简洁阐述功能 / Concise description of the feature"
    +labels: enhancement
    +assignees: ''
    +
    +---
    +
    +**功能描述 / Feature Description**
    +用简洁明了的语言描述所需的功能 / Describe the desired feature in a clear and concise manner.
    +
    +**解决的问题 / Problem Solved**
    +解释此功能如何解决现有问题或改进项目 / Explain how this feature solves existing problems or improves the project.
    +
    +**实现建议 / Implementation Suggestions**
    +如果可能,请提供关于如何实现此功能的建议 / If possible, provide suggestions on how to implement this feature.
    +
    +**替代方案 / Alternative Solutions**
    +描述您考虑过的替代方案 / Describe alternative solutions you have considered.
    +
    +**其他信息 / Additional Information**
    +添加与功能请求相关的任何其他信息 / Add any other information related to the feature request.
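Both issue templates open with a front-matter block between two `---` lines (`name`, `about`, `title`, `labels`, `assignees`) that GitHub reads when listing the templates. A rough, stdlib-only sketch for sanity-checking such a block before committing a new template. It is not a YAML parser: it assumes one flat `key: value` per line, as in these two files, and treating `name` and `about` as the required keys is our assumption. `parse_front_matter`, `missing_keys`, and the fixture are illustrative names.

```python
from typing import Dict, Iterable, List

# First lines of feature_request.md, copied from the hunk above as a test fixture.
FEATURE_TEMPLATE = """---
name: 功能请求 / Feature Request
about: 为项目提出新功能或建议 / Propose new features or suggestions for the project
title: "[FEATURE] 简洁阐述功能 / Concise description of the feature"
labels: enhancement
assignees: ''

---

**功能描述 / Feature Description**
"""


def parse_front_matter(text: str) -> Dict[str, str]:
    """Extract flat `key: value` pairs between the opening and closing '---' lines."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("template does not start with a '---' line")
    fields: Dict[str, str] = {}
    for line in lines[1:]:
        stripped = line.strip()
        if stripped == "---":
            return fields
        if not stripped:
            continue  # blank lines inside the front matter are skipped
        key, sep, value = stripped.partition(":")
        if not sep:
            raise ValueError(f"unexpected front-matter line: {line!r}")
        value = value.strip()
        # Drop one pair of matching single or double quotes around the value.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        fields[key.strip()] = value
    raise ValueError("front matter is not closed by a second '---' line")


def missing_keys(fields: Dict[str, str], required: Iterable[str] = ("name", "about")) -> List[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not fields.get(key)]


if __name__ == "__main__":
    parsed = parse_front_matter(FEATURE_TEMPLATE)
    print(parsed["labels"], missing_keys(parsed))
```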

langchain-ChatGLM-master/.gitignore

ADDED

    @@ -0,0 +1,177 @@
    +# Byte-compiled / optimized / DLL files
    +__pycache__/
    +*/**/__pycache__/
    +*.py[cod]
    +*$py.class
    +
    +# C extensions
    +*.so
    +
    +# Distribution / packaging
    +.Python
    +build/
    +develop-eggs/
    +dist/
    +downloads/
    +eggs/
    +.eggs/
    +lib/
    +lib64/
    +parts/
    +sdist/
    +var/
    +wheels/
    +share/python-wheels/
    +*.egg-info/
    +.installed.cfg
    +*.egg
    +MANIFEST
    +
    +# PyInstaller
    +# Usually these files are written by a python script from a template
    +# before PyInstaller builds the exe, so as to inject date/other infos into it.
    +*.manifest
    +*.spec
    +
    +# Installer logs
    +pip-log.txt
    +pip-delete-this-directory.txt
    +
    +# Unit test / coverage reports
    +htmlcov/
    +.tox/
    +.nox/
    +.coverage
    +.coverage.*
    +.cache
    +nosetests.xml
    +coverage.xml
    +*.cover
    +*.py,cover
    +.hypothesis/
    +.pytest_cache/
    +cover/
    +
    +# Translations
    +*.mo
    +*.pot
    +
    +# Django stuff:
    +*.log
    +local_settings.py
    +db.sqlite3
    +db.sqlite3-journal
    +
    +# Flask stuff:
    +instance/
    +.webassets-cache
    +
    +# Scrapy stuff:
    +.scrapy
    +
    +# Sphinx documentation
    +docs/_build/
    +
    +# PyBuilder
    +.pybuilder/
    +target/
    +
    +# Jupyter Notebook
    +.ipynb_checkpoints
    +
    +# IPython
    +profile_default/
    +ipython_config.py
    +
    +# pyenv
    +# For a library or package, you might want to ignore these files since the code is
    +# intended to run in multiple environments; otherwise, check them in:
    +# .python-version
    +
    +# pipenv
    +# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
    +# However, in case of collaboration, if having platform-specific dependencies or dependencies
    +# having no cross-platform support, pipenv may install dependencies that don't work, or not
    +# install all needed dependencies.
    +#Pipfile.lock
    +
    +# poetry
    +# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
    +# This is especially recommended for binary packages to ensure reproducibility, and is more
    +# commonly ignored for libraries.
    +# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
    +#poetry.lock
    +
    +# pdm
    +# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
    +#pdm.lock
    +# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
    +# in version control.
    +# https://pdm.fming.dev/#use-with-ide
    +.pdm.toml
    +
    +# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
    +__pypackages__/
    +
    +# Celery stuff
    +celerybeat-schedule
    +celerybeat.pid
    +
    +# SageMath parsed files
    +*.sage.py
    +
    +# Environments
    +.env
    +.venv
    +env/
    +venv/
    +ENV/
    +env.bak/
    +venv.bak/
    +
    +# Spyder project settings
    +.spyderproject
    +.spyproject
    +
    +# Rope project settings
    +.ropeproject
    +
    +# mkdocs documentation
    +/site
    +
    +# mypy
    +.mypy_cache/
    +.dmypy.json
    +dmypy.json
    +
    +# Pyre type checker
    +.pyre/
    +
    +# pytype static type analyzer
    +.pytype/
    +
    +# Cython debug symbols
    +cython_debug/
    +
    +# PyCharm
    +# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
    +# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
    +# and can be added to the global gitignore or merged into this file. For a more nuclear
    +# option (not recommended) you can uncomment the following to ignore the entire idea folder.
    +.idea/
    +
    +# Other files
    +output/*
    +log/*
    +.chroma
    +vector_store/*
    +content/*
    +api_content/*
    +knowledge_base/*
    +
    +llm/*
    +embedding/*
    +
    +pyrightconfig.json
    +loader/tmp_files
    +flagged/*

langchain-ChatGLM-master/CONTRIBUTING.md

ADDED

    @@ -0,0 +1,22 @@
    +# 贡献指南
    +
    +欢迎!我们是一个非常友好的社区,非常高兴您想要帮助我们让这个应用程序变得更好。但是,请您遵循一些通用准则以保持组织有序。
    +
    +1. 确保为您要修复的错误或要添加的功能创建了一个[问题](https://github.com/imClumsyPanda/langchain-ChatGLM/issues),尽可能保持它们小。
    +2. 请使用 `git pull --rebase` 来拉取和衍合上游的更新。
    +3. 将提交合并为格式良好的提交。在提交说明中单独一行提到要解决的问题,如`Fix #<bug>`(有关更多可以使用的关键字,请参见[将拉取请求链接到问题](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue))。
    +4. 推送到`dev`。在说明中提到正在解决的问题。
    +
    +---
    +
    +# Contribution Guide
    +
    +Welcome! We're a pretty friendly community, and we're thrilled that you want to help make this app even better. However, we ask that you follow some general guidelines to keep things organized around here.
    +
    +1. Make sure an [issue](https://github.com/imClumsyPanda/langchain-ChatGLM/issues) is created for the bug you're about to fix, or feature you're about to add. Keep them as small as possible.
    +
    +2. Please use `git pull --rebase` to fetch and merge updates from the upstream.
    +
    +3. Rebase commits into well-formatted commits. Mention the issue being resolved in the commit message on a line all by itself like `Fixes #<bug>` (refer to [Linking a pull request to an issue](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue) for more keywords you can use).
    +
    +4. Push into `dev`. Mention which bug is being resolved in the description.
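Step 3 asks for the resolved issue on a line of its own, such as `Fixes #<bug>` (the Chinese version uses `Fix #<bug>`). GitHub's linking documentation lists the keywords close/closes/closed, fix/fixes/fixed and resolve/resolves/resolved. A small sketch of a check that could back this rule as a local `commit-msg` hook; the repository does not ship such a hook, the function name `referenced_issues` is illustrative, and case-insensitive matching is our assumption.

```python
#!/usr/bin/env python3
import re
import sys
from typing import List

# Closing keywords from GitHub's "Linking a pull request to an issue" docs.
_KEYWORDS = r"(?:close[sd]?|fix(?:e[sd])?|resolve[sd]?)"
# A whole line consisting only of "<keyword> #<number>" (case-insensitive here).
_ISSUE_LINE = re.compile(rf"^\s*{_KEYWORDS}\s+#(\d+)\s*$", re.IGNORECASE)


def referenced_issues(message: str) -> List[int]:
    """Return issue numbers that appear on their own line after a closing keyword."""
    issues = []
    for line in message.splitlines():
        match = _ISSUE_LINE.match(line)
        if match:
            issues.append(int(match.group(1)))
    return issues


if __name__ == "__main__" and len(sys.argv) > 1:
    # git calls a commit-msg hook with the path of the message file as argv[1].
    with open(sys.argv[1], encoding="utf-8") as fh:
        if not referenced_issues(fh.read()):
            sys.exit("commit message needs a line like 'Fixes #123'")
```

Saved as `.git/hooks/commit-msg` and marked executable, git would run it before each commit and abort when no closing-keyword line is present.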

langchain-ChatGLM-master/Dockerfile

ADDED

    @@ -0,0 +1,36 @@
    +FROM python:3.8
    +
    +MAINTAINER "chatGLM"
    +
    +COPY agent /chatGLM/agent
    +
    +COPY chains /chatGLM/chains
    +
    +COPY configs /chatGLM/configs
    +
    +COPY content /chatGLM/content
    +
    +COPY models /chatGLM/models
    +
    +COPY nltk_data /chatGLM/content
    +
    +COPY requirements.txt /chatGLM/
    +
    +COPY cli_demo.py /chatGLM/
    +
    +COPY textsplitter /chatGLM/
    +
    +COPY webui.py /chatGLM/
    +
    +WORKDIR /chatGLM
    +
    +RUN pip install --user torch torchvision tensorboard cython -i https://pypi.tuna.tsinghua.edu.cn/simple
    +# RUN pip install --user 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'
    +
    +# RUN pip install --user 'git+https://github.com/facebookresearch/fvcore'
    +# install detectron2
    +# RUN git clone https://github.com/facebookresearch/detectron2
    +
    +RUN pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple/ --trusted-host pypi.tuna.tsinghua.edu.cn
    +
    +CMD ["python","-u", "webui.py"]

langchain-ChatGLM-master/Dockerfile-cuda

ADDED

    @@ -0,0 +1,14 @@
    +FROM nvidia/cuda:12.1.0-runtime-ubuntu20.04
    +LABEL MAINTAINER="chatGLM"
    +
    +COPY . /chatGLM/
    +
    +WORKDIR /chatGLM
    +
    +RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone
    +RUN apt-get update -y && apt-get install python3 python3-pip curl libgl1 libglib2.0-0 -y && apt-get clean
    +RUN curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && python3 get-pip.py
    +
    +RUN pip3 install -r requirements.txt -i https://pypi.mirrors.ustc.edu.cn/simple/ && rm -rf `pip3 cache dir`
    +
    +CMD ["python3","-u", "webui.py"]

langchain-ChatGLM-master/LICENSE

ADDED

    @@ -0,0 +1,201 @@
    +Apache License
    +Version 2.0, January 2004
    +http://www.apache.org/licenses/
    +
    +TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
    +
    +1. Definitions.
    +
    +"License" shall mean the terms and conditions for use, reproduction,
    +and distribution as defined by Sections 1 through 9 of this document.
    +
    +"Licensor" shall mean the copyright owner or entity authorized by
    +the copyright owner that is granting the License.
    +
    +"Legal Entity" shall mean the union of the acting entity and all
    +other entities that control, are controlled by, or are under common
    +control with that entity. For the purposes of this definition,
    +"control" means (i) the power, direct or indirect, to cause the
    +direction or management of such entity, whether by contract or
    +otherwise, or (ii) ownership of fifty percent (50%) or more of the
    +outstanding shares, or (iii) beneficial ownership of such entity.
    +
    +"You" (or "Your") shall mean an individual or Legal Entity
    +exercising permissions granted by this License.
    +
    +"Source" form shall mean the preferred form for making modifications,
    +including but not limited to software source code, documentation
    +source, and configuration files.
    +
    +"Object" form shall mean any form resulting from mechanical
    +transformation or translation of a Source form, including but
    +not limited to compiled object code, generated documentation,
    +and conversions to other media types.
    +
    +"Work" shall mean the work of authorship, whether in Source or
    +Object form, made available under the License, as indicated by a
    +copyright notice that is included in or attached to the work
    +(an example is provided in the Appendix below).
    +
    +"Derivative Works" shall mean any work, whether in Source or Object
    +form, that is based on (or derived from) the Work and for which the
    +editorial revisions, annotations, elaborations, or other modifications
    +represent, as a whole, an original work of authorship. For the purposes
    +of this License, Derivative Works shall not include works that remain
    +separable from, or merely link (or bind by name) to the interfaces of,
    +the Work and Derivative Works thereof.
    +
    +"Contribution" shall mean any work of authorship, including
    +the original version of the Work and any modifications or additions
    +to that Work or Derivative Works thereof, that is intentionally
    +submitted to Licensor for inclusion in the Work by the copyright owner
    +or by an individual or Legal Entity authorized to submit on behalf of
    +the copyright owner. For the purposes of this definition, "submitted"
    +means any form of electronic, verbal, or written communication sent
    +to the Licensor or its representatives, including but not limited to
    +communication on electronic mailing lists, source code control systems,
    +and issue tracking systems that are managed by, or on behalf of, the
    +Licensor for the purpose of discussing and improving the Work, but
    +excluding communication that is conspicuously marked or otherwise
    +designated in writing by the copyright owner as "Not a Contribution."
    +
    +"Contributor" shall mean Licensor and any individual or Legal Entity
    +on behalf of whom a Contribution has been received by Licensor and
    +subsequently incorporated within the Work.
    +
    +2. Grant of Copyright License. Subject to the terms and conditions of
    +this License, each Contributor hereby grants to You a perpetual,
    +worldwide, non-exclusive, no-charge, royalty-free, irrevocable
    +copyright license to reproduce, prepare Derivative Works of,
    +publicly display, publicly perform, sublicense, and distribute the
    +Work and such Derivative Works in Source or Object form.
    +
    +3. Grant of Patent License. Subject to the terms and conditions of
    +this License, each Contributor hereby grants to You a perpetual,
    +worldwide, non-exclusive, no-charge, royalty-free, irrevocable
    +(except as stated in this section) patent license to make, have made,
    +use, offer to sell, sell, import, and otherwise transfer the Work,
    +where such license applies only to those patent claims licensable
    +by such Contributor that are necessarily infringed by their
    +Contribution(s) alone or by combination of their Contribution(s)
    +with the Work to which such Contribution(s) was submitted. If You
    +institute patent litigation against any entity (including a
    +cross-claim or counterclaim in a lawsuit) alleging that the Work
    +or a Contribution incorporated within the Work constitutes direct
    +or contributory patent infringement, then any patent licenses
    +granted to You under this License for that Work shall terminate
    +as of the date such litigation is filed.
    +
    +4. Redistribution. You may reproduce and distribute copies of the
    +Work or Derivative Works thereof in any medium, with or without
    +modifications, and in Source or Object form, provided that You
    +meet the following conditions:
    +
    +(a) You must give any other recipients of the Work or
    +Derivative Works a copy of this License; and
    +
    +(b) You must cause any modified files to carry prominent notices
    +stating that You changed the files; and
    +
    +(c) You must retain, in the Source form of any Derivative Works
    +that You distribute, all copyright, patent, trademark, and
    +attribution notices from the Source form of the Work,
    +excluding those notices that do not pertain to any part of
    +the Derivative Works; and
    +
    +(d) If the Work includes a "NOTICE" text file as part of its
    +distribution, then any Derivative Works that You distribute must
    +include a readable copy of the attribution notices contained
    +within such NOTICE file, excluding those notices that do not
    +pertain to any part of the Derivative Works, in at least one
    +of the following places: within a NOTICE text file distributed
    +as part of the Derivative Works; within the Source form or
    +documentation, if provided along with the Derivative Works; or,
    +within a display generated by the Derivative Works, if and
    +wherever such third-party notices normally appear. The contents
    +of the NOTICE file are for informational purposes only and
    +do not modify the License. You may add Your own attribution
    +notices within Derivative Works that You distribute, alongside
    +or as an addendum to the NOTICE text from the Work, provided
    +that such additional attribution notices cannot be construed
    +as modifying the License.
    +
    +You may add Your own copyright statement to Your modifications and
    +may provide additional or different license terms and conditions
    +for use, reproduction, or distribution of Your modifications, or
    +for any such Derivative Works as a whole, provided Your use,
    +reproduction, and distribution of the Work otherwise complies with
    +the conditions stated in this License.
    +
    +5. Submission of Contributions. Unless You explicitly state otherwise,
    +any Contribution intentionally submitted for inclusion in the Work
    +by You to the Licensor shall be under the terms and conditions of
    +this License, without any additional terms or conditions.
    +Notwithstanding the above, nothing herein shall supersede or modify
    +the terms of any separate license agreement you may have executed
    +with Licensor regarding such Contributions.
    +
    +6. Trademarks. This License does not grant permission to use the trade
    +names, trademarks, service marks, or product names of the Licensor,
    +except as required for reasonable and customary use in describing the
    +origin of the Work and reproducing the content of the NOTICE file.
    +
    +7. Disclaimer of Warranty. Unless required by applicable law or
    +agreed to in writing, Licensor provides the Work (and each
    +Contributor provides its Contributions) on an "AS IS" BASIS,
    +WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
    +implied, including, without limitation, any warranties or conditions
    +of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
    +PARTICULAR PURPOSE. You are solely responsible for determining the
    +appropriateness of using or redistributing the Work and assume any
    +risks associated with Your exercise of permissions under this License.
    +
    +8. Limitation of Liability. In no event and under no legal theory,
    +whether in tort (including negligence), contract, or otherwise,
    +unless required by applicable law (such as deliberate and grossly
    +negligent acts) or agreed to in writing, shall any Contributor be
    +liable to You for damages, including any direct, indirect, special,
    +incidental, or consequential damages of any character arising as a
    +result of this License or out of the use or inability to use the
    +Work (including but not limited to damages for loss of goodwill,
    +work stoppage, computer failure or malfunction, or any and all
    +other commercial damages or losses), even if such Contributor
    +has been advised of the possibility of such damages.
    +
    +9. Accepting Warranty or Additional Liability. While redistributing
    +the Work or Derivative Works thereof, You may choose to offer,
    +and charge a fee for, acceptance of support, warranty, indemnity,
    +or other liability obligations and/or rights consistent with this
    +License. However, in accepting such obligations, You may act only
    +on Your own behalf and on Your sole responsibility, not on behalf
    +of any other Contributor, and only if You agree to indemnify,
    +defend, and hold each Contributor harmless for any liability
    +incurred by, or claims asserted against, such Contributor by reason
    +of your accepting any such warranty or additional liability.
    +
    +END OF TERMS AND CONDITIONS
    +
    +APPENDIX: How to apply the Apache License to your work.
    +
    +To apply the Apache License to your work, attach the following
    +boilerplate notice, with the fields enclosed by brackets "[]"
    +replaced with your own identifying information. (Don't include
    +the brackets!) The text should be enclosed in the appropriate
    +comment syntax for the file format. We also recommend that a
    +file or class name and description of purpose be included on the
    +same "printed page" as the copyright notice for easier

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

langchain-ChatGLM-master/README.md

ADDED


@@ -0,0 +1,235 @@


# 基于本地知识库的 ChatGLM 等大语言模型应用实现

|

| 2 |

+

|

| 3 |

+

## 介绍

|

| 4 |

+

|

| 5 |

+

🌍 [_READ THIS IN ENGLISH_](README_en.md)

|

| 6 |

+

|

| 7 |

+

🤖️ 一种利用 [langchain](https://github.com/hwchase17/langchain) 思想实现的基于本地知识库的问答应用,目标期望建立一套对中文场景与开源模型支持友好、可离线运行的知识库问答解决方案。

|

| 8 |

+

|

| 9 |

+

💡 受 [GanymedeNil](https://github.com/GanymedeNil) 的项目 [document.ai](https://github.com/GanymedeNil/document.ai) 和 [AlexZhangji](https://github.com/AlexZhangji) 创建的 [ChatGLM-6B Pull Request](https://github.com/THUDM/ChatGLM-6B/pull/216) 启发,建立了全流程可使用开源模型实现的本地知识库问答应用。现已支持使用 [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B) 等大语言模型直接接入,或通过 [fastchat](https://github.com/lm-sys/FastChat) api 形式接入 Vicuna, Alpaca, LLaMA, Koala, RWKV 等模型。

|

| 10 |

+

|

| 11 |

+

✅ 本项目中 Embedding 默认选用的是 [GanymedeNil/text2vec-large-chinese](https://huggingface.co/GanymedeNil/text2vec-large-chinese/tree/main),LLM 默认选用的是 [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B)。依托上述模型,本项目可实现全部使用**开源**模型**离线私有部署**。

|

| 12 |

+

|

| 13 |

+

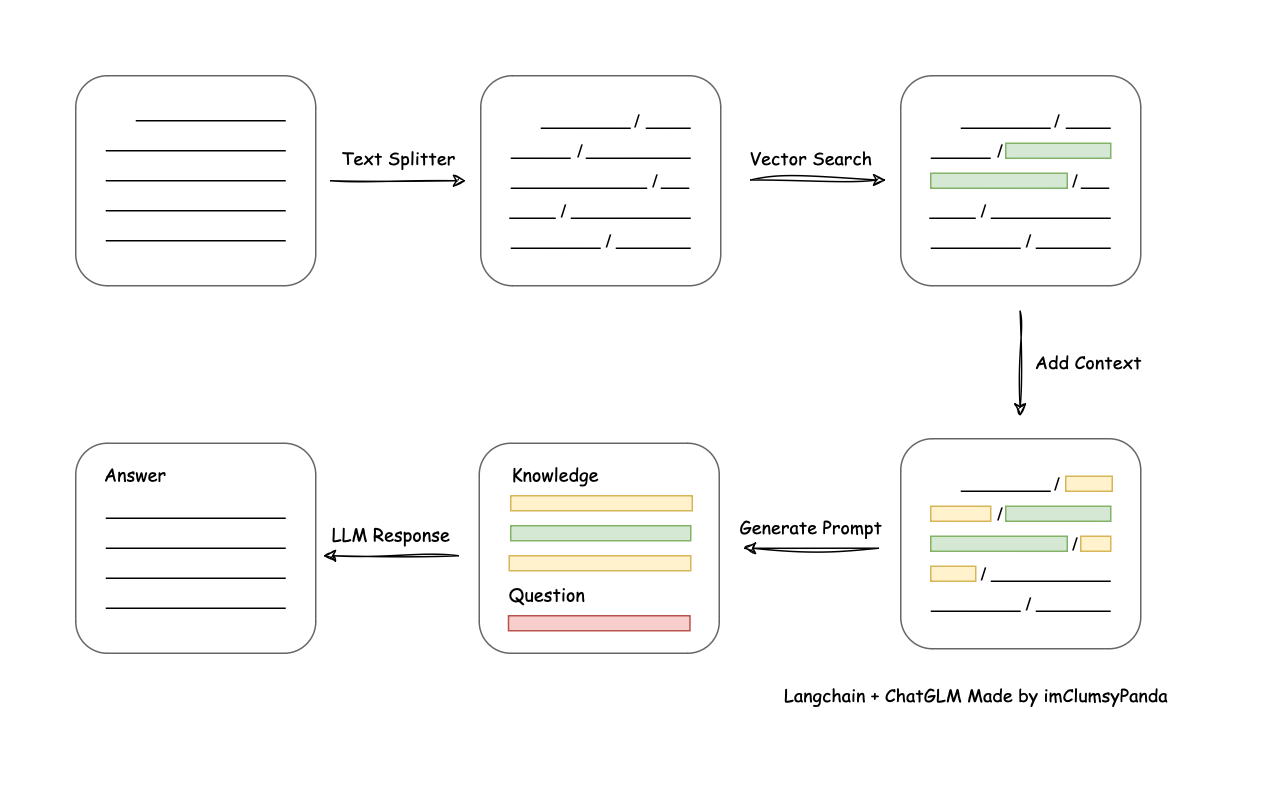

⛓️ 本项目实现原理如下图所示,过程包括加载文件 -> 读取文本 -> 文本分割 -> 文本向量化 -> 问句向量化 -> 在文本向量中匹配出与问句向量最相似的`top k`个 -> 匹配出的文本作为上下文和问题一起添加到`prompt`中 -> 提交给`LLM`生成回答。
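The retrieval steps above (vectorize the chunks and the question, match the `top k` most similar chunks, fill them into the `prompt`) can be illustrated with a minimal, dependency-free Python sketch. It is not this project's implementation: `embed` is a toy bag-of-characters stand-in for a real embedding model such as text2vec-large-chinese, the wording of `PROMPT_TEMPLATE` is hypothetical, and the final call to the LLM is left out.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    # Toy "vector": character counts, ignoring whitespace.
    # A real deployment would call an embedding model here instead.
    return Counter(ch for ch in text if not ch.isspace())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_chunks(chunks: List[str], question: str, k: int = 3) -> List[str]:
    q_vec = embed(question)                  # vectorize the question
    doc_vecs = [embed(c) for c in chunks]    # vectorize each text chunk
    scores = [cosine(q_vec, v) for v in doc_vecs]
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)
    return [chunks[i] for i in ranked[:k]]   # the k most similar chunks


# Hypothetical prompt wording; the project's real template may differ.
PROMPT_TEMPLATE = (
    "Answer the question using the known information below.\n"
    "Known information:\n{context}\n\n"
    "Question:\n{question}"
)


def build_prompt(chunks: List[str], question: str, k: int = 3) -> str:
    context = "\n".join(top_k_chunks(chunks, question, k))
    return PROMPT_TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    docs = [
        "ChatGLM-6B 是一个开源的对话语言模型。",
        "Docker 部署需要安装 NVIDIA Container Toolkit。",
        "INT4 量化后推理最低需要 6 GB 显存。",
    ]
    print(build_prompt(docs, "INT4 量化需要多少显存?", k=1))
```

Swapping `embed` for a real embedding model and the sorted list for a vector index (for example FAISS) keeps the same structure.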

📺 [Video introducing the principle](https://www.bilibili.com/video/BV13M4y1e7cN/?share_source=copy_web&vd_source=e6c5aafe684f30fbe41925d61ca6d514)

From a document-processing perspective, the workflow is as follows:
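For the text-splitting step, one common approach for Chinese text is to cut at sentence-ending punctuation and then pack consecutive sentences into chunks of bounded length. The sketch below assumes that approach; `split_sentences`, `chunk_text`, and the default `chunk_size` are hypothetical names and values, not the project's own splitter.

```python
import re
from typing import List

# Sentence-ending punctuation: full-width U+3002, U+FF01, U+FF1F, U+FF1B,
# their ASCII counterparts, and newline. The capture group keeps each
# delimiter so it can stay attached to its sentence.
_SENTENCE_END = re.compile("([\u3002\uff01\uff1f\uff1b!?;\n])")


def split_sentences(text: str) -> List[str]:
    parts = _SENTENCE_END.split(text)
    sentences = []
    # re.split with one capture group yields [text, delim, text, delim, ..., text].
    for i in range(0, len(parts), 2):
        delim = parts[i + 1] if i + 1 < len(parts) else ""
        sentence = (parts[i] + delim).strip()
        if sentence:
            sentences.append(sentence)
    return sentences


def chunk_text(text: str, chunk_size: int = 250) -> List[str]:
    chunks: List[str] = []
    current = ""
    for sentence in split_sentences(text):
        # A sentence longer than chunk_size is cut into fixed-size pieces.
        for start in range(0, len(sentence), chunk_size):
            piece = sentence[start:start + chunk_size]
            # Close the current chunk if adding this piece would overflow it.
            if current and len(current) + len(piece) > chunk_size:
                chunks.append(current)
                current = ""
            current += piece
    if current:
        chunks.append(current)
    return chunks
```

Keeping whole sentences together where possible tends to give the retriever more coherent passages than fixed-width cuts.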

🚩 This project does not involve fine-tuning or training, but fine-tuning or training can be used to further improve its results.

🌐 [AutoDL image](https://www.codewithgpu.com/i/imClumsyPanda/langchain-ChatGLM/langchain-ChatGLM)

📓 [Run the project online on ModelWhale](https://www.heywhale.com/mw/project/643977aa446c45f4592a1e59)

## Changelog

See the [changelog](docs/CHANGELOG.md).

## Hardware Requirements

- ChatGLM-6B model hardware requirements

Note: If the model has not been downloaded locally, check the free space in the `$HOME/.cache/huggingface/` folder before running; downloading the model files requires 15 GB of storage.

Note: For other optional startup options, see [Project Startup Options](docs/StartOption.md).

For how to download the model, see Q8 in the [FAQ](docs/FAQ.md).

| **Quantization level** | **Minimum GPU memory** (inference) | **Minimum GPU memory** (parameter-efficient fine-tuning) |
| ---------------------- | ---------------------------------- | -------------------------------------------------------- |
| FP16 (no quantization) | 13 GB | 14 GB |
| INT8 | 8 GB | 9 GB |
| INT4 | 6 GB | 7 GB |
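As a rough sanity check on the table, the model weights alone need about (number of parameters) × (bytes per parameter). The sketch below assumes ChatGLM-6B has about 6.2 billion parameters, as its upstream README states; the table values are higher, presumably because inference also needs memory for activations and the KV cache, and because quantized checkpoints may keep some layers at higher precision.

```python
# Assumption: ChatGLM-6B has roughly 6.2 billion parameters (per its upstream README).
CHATGLM_6B_PARAMS = 6.2e9

# Storage cost of one weight at each quantization level, in bytes.
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}


def weight_memory_gb(num_params: float, level: str) -> float:
    """Memory for the weights alone, in GB (10**9 bytes); excludes activations and KV cache."""
    return num_params * BYTES_PER_PARAM[level] / 1e9


if __name__ == "__main__":
    for level in BYTES_PER_PARAM:
        print(f"{level}: ~{weight_memory_gb(CHATGLM_6B_PARAMS, level):.1f} GB of weights")
```

This prints about 12.4, 6.2, and 3.1 GB, each below the corresponding inference figure in the table.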

- MOSS model hardware requirements

Note: If the model has not been downloaded locally, check the free space in the `$HOME/.cache/huggingface/` folder before running; downloading the model files requires 70 GB of storage.

For how to download the model, see Q8 in the [FAQ](docs/FAQ.md).

| **Quantization level** | **Minimum GPU memory** (inference) | **Minimum GPU memory** (parameter-efficient fine-tuning) |
| ---------------------- | ---------------------------------- | -------------------------------------------------------- |
| FP16 (no quantization) | 68 GB | - |
| INT8 | 20 GB | - |

- Embedding model hardware requirements

The default embedding model in this project, [GanymedeNil/text2vec-large-chinese](https://huggingface.co/GanymedeNil/text2vec-large-chinese/tree/main), uses about 3 GB of GPU memory; it can also be configured to run on the CPU.

## Docker Deployment

To let containers use the host's GPU resources, install the [NVIDIA Container Toolkit](https://github.com/NVIDIA/nvidia-container-toolkit) on the host. The installation steps are as follows:

```shell
sudo apt-get update
sudo apt-get install -y nvidia-container-toolkit-base
sudo systemctl daemon-reload
sudo systemctl restart docker
```

After installation, build the image and start the container with the following commands:

```shell
docker build -f Dockerfile-cuda -t chatglm-cuda:latest .
docker run --gpus all -d --name chatglm -p 7860:7860 chatglm-cuda:latest

# To use offline models, configure the model paths first, then mount this repo into the container.
# Both commands use --name chatglm, so remove an existing container first (docker rm -f chatglm).
docker run --gpus all -d --name chatglm -p 7860:7860 -v ~/github/langchain-ChatGLM:/chatGLM chatglm-cuda:latest
```

## Development Deployment

### Software Requirements

This project has been tested with Python 3.8.1 - 3.10 and CUDA 11.7, on Windows, ARM-based macOS, and Linux.

The Vue frontend requires a Node.js 18 environment.

### Loading the Model Locally

See [Loading the model locally in THUDM/ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B#从本地加载模型).

### 1. Install the Environment

See the [installation guide](docs/INSTALL.md).

### 2. Set Default Model Parameters

Before running the Web UI or the command-line interface, check that the model parameters in [configs/model_config.py](configs/model_config.py) meet your needs.

To call the LLM as an API through fastchat, see [fastchat integration](docs/fastchat.md).

### 3. Run Scripts to Try the Web UI or Command-Line Interaction

> Note: Because problems may arise during environment setup, we recommend testing the command-line script first and running the Web UI only after the command-line script works.

Run the [cli_demo.py](cli_demo.py) script to try **command-line interaction**:

```shell
$ python cli_demo.py
```

Or run the [webui.py](webui.py) script to try **web interaction**:

```shell
$ python webui.py
```

Or run [api.py](api.py) to deploy an API with fastapi:

```shell
$ python api.py
```

Or, after the API has been deployed successfully, run the following commands to try the Vue-based frontend:

```shell
$ cd views
$ pnpm i
$ npm run dev
```

|

| 130 |

+

|

| 131 |

+

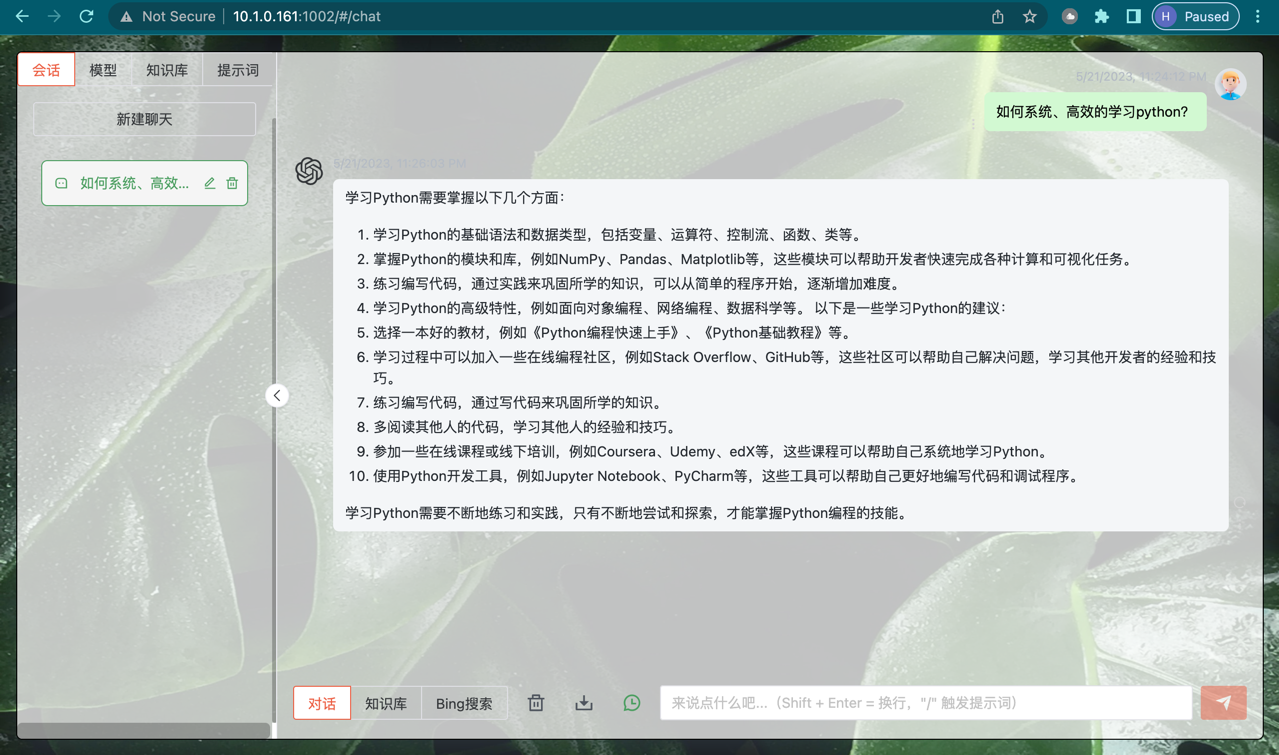

VUE 前端界面如下图所示:

|

| 132 |

+

1. `对话` 界面

|

| 133 |

+

|

| 134 |

+

2. `知识库问答` 界面

|

| 135 |

+

|

| 136 |

+

3. `Bing搜索` 界面

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

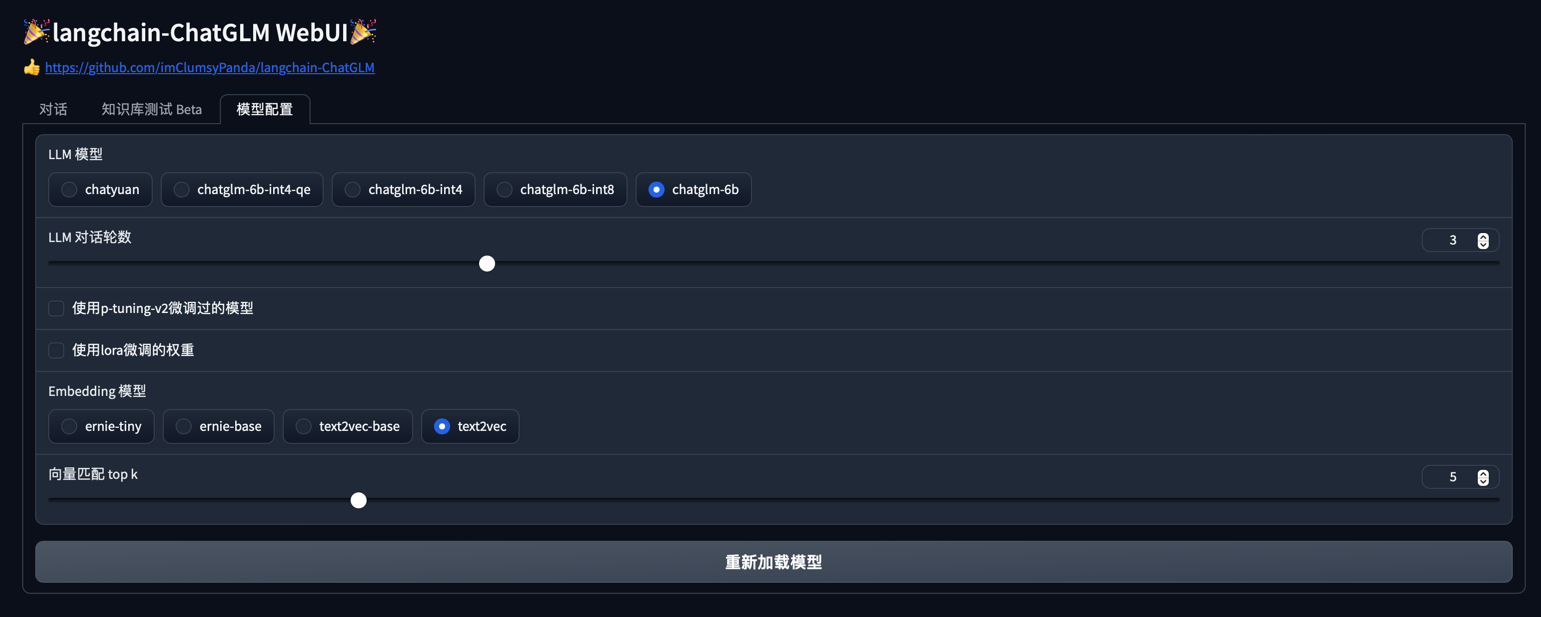

WebUI 界面如下图所示:

|

| 140 |

+

1. `对话` Tab 界面

|

| 141 |

+

|

| 142 |

+

2. `知识库测试 Beta` Tab 界面

|

| 143 |

+

|

| 144 |

+

3. `模型配置` Tab 界面

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

Web UI 可以实现如下功能:

|

| 148 |

+

|

| 149 |

+

1. 运行前自动读取`configs/model_config.py`中`LLM`及`Embedding`模型枚举及默认模型设置运行模型,如需重新加载模型,可在 `模型配置` Tab 重新选择后点击 `重新加载模型` 进行模型加载;

|

| 150 |

+

2. 可手动调节保留对话历史长度、匹配知识库文段数量,可根据显存大小自行调节;

|

| 151 |

+

3. `对话` Tab 具备模式选择功能,可选择 `LLM对话` 与 `知识库问答` 模式进行对话,支持流式对话;

|

| 152 |

+

4. 添加 `配置知识库` 功能,支持选择已有知识库或新建知识库,并可向知识库中**新增**上传文件/文件夹,使用文件上传组件选择好文件后点击 `上传文件并加载知识库`,会将所选上传文档数据加载至知识库中,并基于更新后知识库进行问答;

|

| 153 |

+

5. 新增 `知识库测试 Beta` Tab,可用于测试不同文本切分方法与检索相关度阈值设置,暂不支持将测试参数作为 `对话` Tab 设置参数。

|

| 154 |

+

6. 后续版本中将会增加对知识库的修改或删除,及知识库中已导入文件的查看。
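The history-length, passage-count, and relevance-threshold controls described above can be sketched as two small filters. This is an illustration under assumptions, not the Web UI's code: the parameter names `history_len`, `top_k`, and `score_threshold` are hypothetical, and scores are assumed to be similarities where higher means more relevant (a distance-based vector store would invert the comparison).

```python
from typing import List, Sequence, Tuple


def trim_history(history: Sequence[Tuple[str, str]], history_len: int) -> List[Tuple[str, str]]:
    """Keep only the most recent `history_len` (question, answer) turns."""
    if history_len <= 0:
        return []  # history[-0:] would return everything, so handle 0 explicitly
    return list(history[-history_len:])


def select_passages(
    scored: Sequence[Tuple[str, float]], top_k: int, score_threshold: float
) -> List[str]:
    """Return at most `top_k` passages whose score is >= `score_threshold`, best first.

    Assumes a higher score means a more relevant passage (e.g. cosine similarity).
    """
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    return [text for text, score in ranked if score >= score_threshold][:top_k]
```

Shorter history and fewer passages both shrink the prompt, which is why these settings can be tuned to the available GPU memory.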

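### FAQ

See the [FAQ](docs/FAQ.md).

## Demo

Take the question `chatglm-6b 的局限性具体体现在哪里,如何实现改进` ("What exactly are the limitations of chatglm-6b, and how can they be improved?") as an example.

Without using langchain to connect local documents:

> ChatGLM-6B is a chatbot based on GPT-3. Its limitations are mainly reflected in the following aspects:
> 1. Accuracy of the language model: GPT-3 is a large language model with relatively high accuracy, but it still has some limitations. For example, it may not accurately understand certain dialects, accents, or complex sentence structures.
> 2. Completeness of the knowledge graph: GPT-3 can generate some knowledge graphs, but their completeness and accuracy may be insufficient. For example, it may not accurately understand the specialized knowledge and terminology of certain domains.
> 3. Coherence of dialogue generation: GPT-3 can generate some coherent dialogue, but the dialogue it generates may not be natural enough. For example, it may generate false or incoherent dialogue, or misunderstand the user's intent.
>
> To improve ChatGLM-6B, the following aspects can be considered:
> 1. Improve the accuracy of the language model: add training data, use a better language model architecture, optimize the training process, and so on.
> 2. Enhance the completeness of the knowledge graph: increase the dimensions of the knowledge graph, use better knowledge-graph construction methods, optimize the knowledge-graph matching algorithm, and so on.
> 3. Improve the coherence of dialogue generation: give the dialogue generation model more context information, use better context-extraction methods, optimize the dialogue generation algorithm, and so on.
> 4. Introduce more human feedback: human feedback can help ChatGLM-6B better understand users' intents and needs, improving the quality and accuracy of the generated dialogue. More human feedback can be introduced by adding more human customer-service agents, using human-feedback collectors, and so on.

ChatGLM's answer after using langchain to connect the README.md file of the ChatGLM-6B project:

> ChatGLM-6B is a deep-learning-based natural language processing model that performs well at answering questions. However, it also has some limitations, as follows:
> 1. Limited language understanding: ChatGLM-6B has learned only Chinese natural language, so its understanding of other languages may be limited.
> 2. Insufficient knowledge: ChatGLM-6B's training dataset contains only Chinese corpora, so it may be unable to answer some non-Chinese questions or questions in certain specific domains.
> 3. Limited data volume: ChatGLM-6B's training dataset has only a few million records, so it may be unable to answer some very specific or complex questions.
>
> To improve ChatGLM-6B, the following aspects can be considered:
> 1. Learn more language knowledge: learn natural language processing techniques for other languages to broaden the range of language understanding.
> 2. Expand the knowledge base: collect more Chinese corpora, or use datasets in other languages to expand its knowledge.
> 3. Increase the data volume: train ChatGLM-6B on a larger dataset to improve its performance.
> 4. Introduce more evaluation metrics: use more evaluation metrics to assess the model's performance and uncover ChatGLM-6B's shortcomings and limitations.
> 5. Improve the model architecture: improve ChatGLM-6B's model architecture to increase its performance, for example by using a larger neural network or an improved convolutional neural network structure.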

## Roadmap

- [ ] Langchain applications
  - [x] Unstructured document ingestion (md, pdf, docx, and txt files are supported)
  - [x] OCR text recognition for jpg and png images
  - [x] Search engine integration
  - [ ] Local web page ingestion
  - [ ] Structured data ingestion (e.g. csv, Excel, SQL)
  - [ ] Knowledge graph / graph database integration
  - [ ] Agent implementation
- [x] Support for more LLMs
  - [x] [THUDM/chatglm-6b](https://huggingface.co/THUDM/chatglm-6b)
  - [x] [THUDM/chatglm-6b-int8](https://huggingface.co/THUDM/chatglm-6b-int8)
  - [x] [THUDM/chatglm-6b-int4](https://huggingface.co/THUDM/chatglm-6b-int4)
  - [x] [THUDM/chatglm-6b-int4-qe](https://huggingface.co/THUDM/chatglm-6b-int4-qe)
  - [x] [ClueAI/ChatYuan-large-v2](https://huggingface.co/ClueAI/ChatYuan-large-v2)
  - [x] [fnlp/moss-moon-003-sft](https://huggingface.co/fnlp/moss-moon-003-sft)
  - [x] Calling LLMs through the [fastchat](https://github.com/lm-sys/FastChat) API
- [x] Support for more embedding models
  - [x] [nghuyong/ernie-3.0-nano-zh](https://huggingface.co/nghuyong/ernie-3.0-nano-zh)
  - [x] [nghuyong/ernie-3.0-base-zh](https://huggingface.co/nghuyong/ernie-3.0-base-zh)
  - [x] [shibing624/text2vec-base-chinese](https://huggingface.co/shibing624/text2vec-base-chinese)
  - [x] [GanymedeNil/text2vec-large-chinese](https://huggingface.co/GanymedeNil/text2vec-large-chinese)
  - [x] [moka-ai/m3e-small](https://huggingface.co/moka-ai/m3e-small)
  - [x] [moka-ai/m3e-base](https://huggingface.co/moka-ai/m3e-base)
- [ ] Web UI
  - [x] Web UI demo based on gradio
  - [x] Web UI demo based on streamlit
  - [x] Output content and error messages
  - [x] Citation annotations
  - [ ] Knowledge base management
    - [x] Select a knowledge base to start QA
    - [x] Upload files/folders to a knowledge base
    - [x] Knowledge base testing
    - [ ] Delete files from a knowledge base
  - [x] Search-engine-based QA
- [ ] API support
  - [x] API deployment with fastapi
  - [ ] Web UI demo that calls the API
  - [x] VUE frontend

## Project Discussion Group

<img src="img/qr_code_32.jpg" alt="二维码" width="300" height="300" />

🎉 This is the WeChat discussion group for the langchain-ChatGLM project. If you are interested in the project, you are welcome to join the group chat and take part in the discussion.

langchain-ChatGLM-master/README_en.md

ADDED


@@ -0,0 +1,247 @@
