
Dev (#9)

* streamlit service

streamlit run main.py

* draft with model for emotion recognition (minixception)

* streamlit with polarbar, videoplayer, table with timelines

added VIDEO_PATH

video length check

* add::bar_chart

* change main -> app

* import pandas

* Update pyproject.toml

added libs to run without the AI model

* seconds_to_time

* make new folder in root and save video

VIDEO_PATH

* draw readme

* delete readme

* show ENG readme homepage

show eng readme with img

* ....

* VideoEmotionRecognizer class with _analyze and emotions_summary methods implemented

* implement emotions_timestamps method

* correct linters settings

* update poetry lock

* add comments

* add neutral emotion filtering and refactor period_length constant

* add progress logs for video processing

* paths fixed

* streamlit added

* fix video reading stop

* works, 3 min max video

no progress bar, no demo inside button

* video max time 3 min

* add Development section

* add Installation section

* add Dockerfile

* add requirements.txt

* add huggingface configuration; fix streamlit version

* put video under git lfs

* remove video from usual git

---------

Co-authored-by: Misha <krz.m@ya.ru>

Co-authored-by: Muzafarov Danil <muzafarov.danil@mail.ru>

Co-authored-by: Muzafarov Danil <118075209+muzafarovdan@users.noreply.github.com>

Co-authored-by: AshimIsha <30750212+AshimIsha@users.noreply.github.com>

- .gitattributes +1 -0

- Dockerfile +15 -0

- README.md +145 -1

- Readme_for_main_page.txt +44 -0

- __pycache__/emrec_model.cpython-311.pyc +0 -0

- app.py +56 -0

- emrec_model.py +125 -0

- img/2.png +0 -0

- img/3.png +0 -0

- img/boy.png +0 -0

- img/girl.png +0 -0

- plotlycharts/__pycache__/charts.cpython-311.pyc +0 -0

- plotlycharts/charts.py +45 -0

- poetry.lock +0 -0

- pyproject.toml +27 -1

- requirements.txt +94 -0

- sidebars/__pycache__/video_analyse.cpython-311.pyc +0 -0

- sidebars/video_analyse.py +159 -0

.gitattributes

@@ -0,0 +1 @@
+Videos/tomp3_cc_Zoom_Class_Meeting_Downing_Sociology_220_10222020_1080p.mp4 filter=lfs diff=lfs merge=lfs -text

Dockerfile

@@ -0,0 +1,15 @@
+FROM python:3.10
+
+RUN apt-get update && apt-get install ffmpeg libsm6 libxext6 -y
+
+RUN mkdir /app
+COPY . /app
+
+WORKDIR /app
+
+RUN pip install -r requirements.txt
+
+EXPOSE 8501
+HEALTHCHECK CMD curl --fail http://localhost:8501/_stcore/health
+
+ENTRYPOINT ["streamlit", "run", "app.py", "--server.port=8501", "--server.address=0.0.0.0"]
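The `HEALTHCHECK` instruction above uses `curl` to probe Streamlit's `/_stcore/health` endpoint. The same probe can be written with Python's standard library, for example so a script can wait until the container is ready before using it. This is a minimal sketch. The function name `is_streamlit_healthy` is hypothetical and is not part of this commit.

```python
import urllib.error
import urllib.request


def is_streamlit_healthy(base_url: str, timeout: float = 2.0) -> bool:
    """Return True if GET <base_url>/_stcore/health answers with HTTP 200."""
    url = base_url.rstrip("/") + "/_stcore/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # connection refused, timeout, DNS failure or an HTTP error status
        return False
```

A caller could poll it in a loop with a short `time.sleep` between attempts until it returns `True`.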

|

|

@@ -1,3 +1,147 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

# EdControl

|

| 2 |

|

| 3 |

-

Automated analytics service that tracks students' emotional responses during online classes.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: EdControl

|

| 3 |

+

emoji: 👨🏼🎓

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: green

|

| 6 |

+

sdk: streamlit

|

| 7 |

+

sdk_version: 1.25.0

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

# EdControl

|

| 13 |

|

| 14 |

+

Automated analytics service that tracks students' emotional responses during online classes.

|

| 15 |

+

|

| 16 |

+

## Problem

|

| 17 |

+

На сегодняшний день, существует большое количество платформ для онлайн-обучения. Существуют как крупные онлайн-школы, так и более малые по количеству учащихся. И из-за большого потока клиентов необходимо набирать преподавателей для проведения занятий. И менеджеры не всегда могут понять на сколько преподаватель компетентен в области soft skills при подаче материала. Следовательно, необходимо вовремя определять агрессивных и не умеющих контролировать свои эмоции преподавателей, для сохранения репутации компании.

|

| 18 |

+

|

| 19 |

+

Today, there are a large number of platforms for online learning. There are both large online schools and smaller ones in terms of the number of students. And because of the large flow of clients, it is necessary to recruit teachers to conduct classes. And managers can not always understand how much the teacher is competent in the field of soft skills when submitting material. Therefore, it is necessary to identify aggressive and unable to control their emotions teachers in time to preserve the reputation of the company.

|

| 20 |

+

|

| 21 |

+

## Solving the problem

|

| 22 |

+

Наш продукт предлагает решение данной проблемы оценки образования, помогая онлайн платформам экономить время менеджеров при ручном просмотре видео-уроков преподавателей и повышать бизнес метрики компании, выявляя на ранней стадии не компетентных преподавателей. Проблема решается путем распознавания негативных эмоций клиента во время онлайн-урока с преподавателем. Вы загружаете запись видео-урока в наш сервис и получаете dashboard с информацией и аналитикой по всему уроку. Также на данном dash-board, при выявлении каких-либо негативных ситуаций, можно увидеть конкретные timestamp, когда была замечена эмоция и на сколько она велика.

|

| 23 |

+

|

| 24 |

+

Our product offers a solution to this problem of education assessment, helping online platforms save managers' time when manually viewing teachers' video lessons and improve the company's business metrics by identifying non-competent teachers at an early stage. The problem is solved by recognizing the client's negative emotions during an online lesson with a teacher. You upload a video lesson recording to our service and get a dashboard with information and analytics throughout the lesson. Also on this dashboard, when identifying any negative situations, you can see the specific timestamp when the emotion was noticed and how big it is.

|

| 25 |

+

|

| 26 |

+

## Implementation

|

| 27 |

+

На данный момент реализованы:

|

| 28 |

+

- Эмоциональное оценка состояния человека

|

| 29 |

+

- Аналитика и визуализация данных для удобного анализа видео-урока

|

| 30 |

+

- Рекомендации преподавателю для проведения последующих уроков, при обнаружении каких-либо проблем

|

| 31 |

+

|

| 32 |

+

Модель с помощью CV определяет эмоцию, которую испытывает человек в данный момент времени и отображает глубину эмоции в шкале от 0 до 100:

|

| 33 |

+

|

| 34 |

+

Currently implemented:

|

| 35 |

+

- Emotional assessment of a person's condition

|

| 36 |

+

- Data analytics and visualization for convenient video lesson analysis

|

| 37 |

+

- Recommendations to the teacher for subsequent lessons, if any problems are found

|

| 38 |

+

|

| 39 |

+

The CV model determines the emotion that a person is experiencing at a given time and displays the depth of emotion on a scale from 0 to 100:

|

| 40 |

+

|

| 41 |

+

<p align="center"><img src="img/girl.png" alt="girl" width=40%>

|

| 42 |

+

<img src="img/boy.png" alt="boy" width=40%>

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

## The appearance of the service

|

| 47 |

+

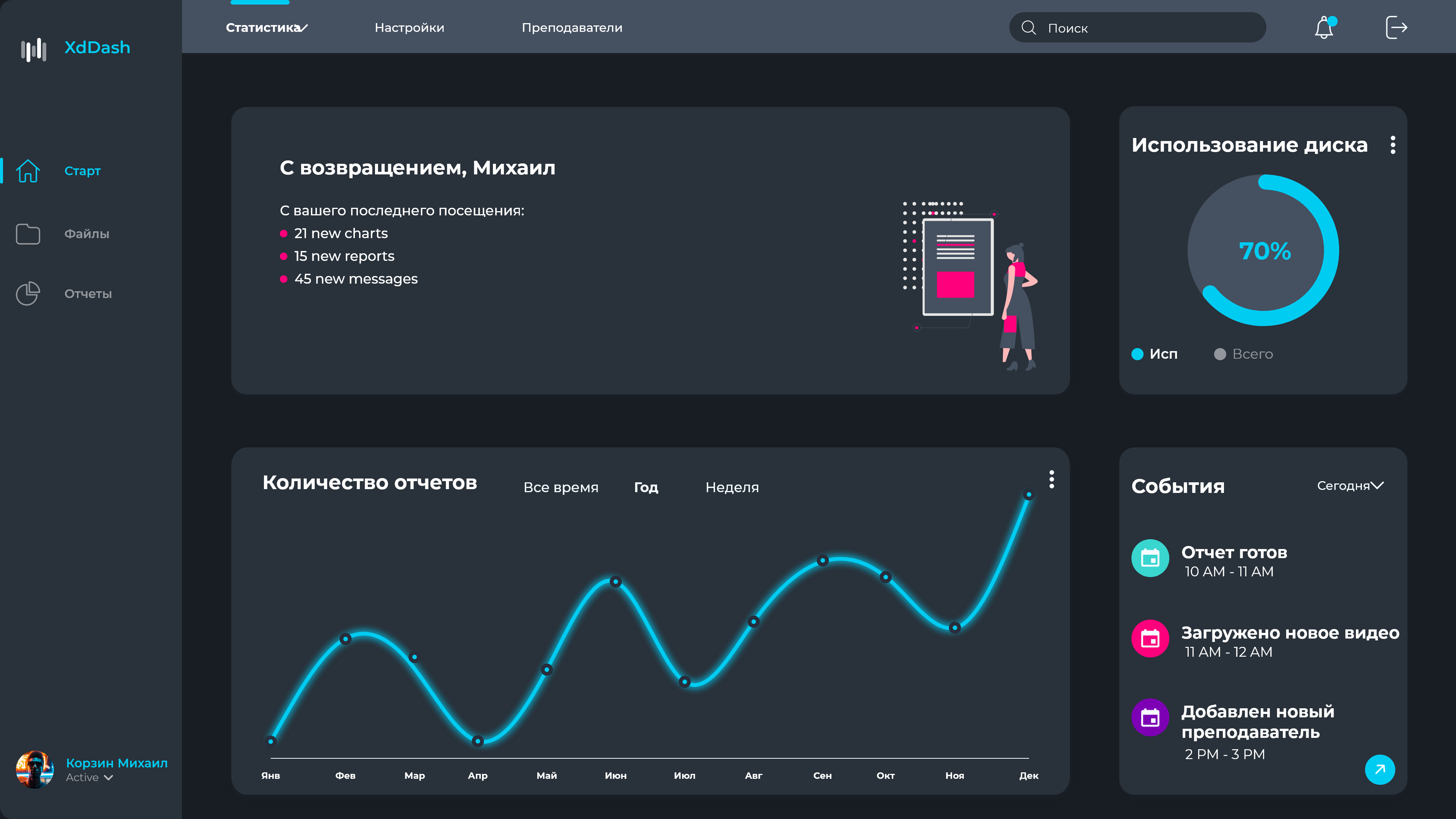

Внешний вид сервиса интуитивно понятен для пользователей и его главная страница выглядит так:

|

| 48 |

+

|

| 49 |

+

The appearance of the service is intuitive for users and its main page looks like this:

|

| 50 |

+

<p align="center"><img src="/img/3.png" width=90% alt="Main page"></p>

|

| 51 |

+

|

| 52 |

+

После успешной обработки загруженного видео вы можете получить аналитику и рекомендации:

|

| 53 |

+

|

| 54 |

+

After successfully processing the uploaded video, you can get analytics and recommendations:

|

| 55 |

+

<p align="center"><img src="/img/1.png" width=90% alt="Uploaded page"></p>

|

| 56 |

+

|

| 57 |

+

## Installation

|

| 58 |

+

|

| 59 |

+

1\. Install [git](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) and [docker engine](https://docs.docker.com/engine/install/)

|

| 60 |

+

|

| 61 |

+

2\. Clone the project:

|

| 62 |

+

|

| 63 |

+

```bash

|

| 64 |

+

git clone https://github.com/Wander1ngW1nd/EdControl

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

3\. Build an image:

|

| 68 |

+

|

| 69 |

+

```bash

|

| 70 |

+

docker build -t edcontrol_image EdControl

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

4\. Run application container:

|

| 74 |

+

|

| 75 |

+

```bash

|

| 76 |

+

docker run --name edcontrol_app -dp 8501 edcontrol_image

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

5\. Figure out which port was assigned to your application:

|

| 80 |

+

|

| 81 |

+

```bash

|

| 82 |

+

docker port edcontrol_app

|

| 83 |

+

```

|

| 84 |

+

You will see the similar output:

|

| 85 |

+

|

| 86 |

+

```

|

| 87 |

+

8501/tcp -> 0.0.0.0:<your_port_number>

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

6\. Go to:

|

| 91 |

+

```

|

| 92 |

+

http://0.0.0.0:<your_port_number>

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

Now you can use the app!

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

## Development

|

| 99 |

+

|

| 100 |

+

### Dependencies Management

|

| 101 |

+

|

| 102 |

+

Project’s dependencies are managed by [poetry](https://python-poetry.org/). So, all the dependencies and configuration parameters are listed in [pyproject.toml](pyproject.toml).

|

| 103 |

+

|

| 104 |

+

To install the dependencies, follow these steps:

|

| 105 |

+

|

| 106 |

+

1\. Install [git](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) and [poetry](https://python-poetry.org/docs/#installation)

|

| 107 |

+

|

| 108 |

+

2\. Clone the project and go to the corresponding directory:

|

| 109 |

+

|

| 110 |

+

```bash

|

| 111 |

+

git clone https://github.com/Wander1ngW1nd/EdControl

|

| 112 |

+

cd EdControl

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

3\. (Optional) If your python version does not match the requirements specified in [pyproject.toml](pyproject.toml), [install one of the matching versions](https://realpython.com/installing-python)

|

| 116 |

+

|

| 117 |

+

4\. Create virtual environment and activate it

|

| 118 |

+

|

| 119 |

+

```bash

|

| 120 |

+

poetry shell

|

| 121 |

+

```

|

| 122 |

+

|

| 123 |

+

5\. Install dependencies

|

| 124 |

+

|

| 125 |

+

```bash

|

| 126 |

+

poetry lock --no-update

|

| 127 |

+

poetry install

|

| 128 |

+

```

|

| 129 |

+

|

| 130 |

+

## Road Map

|

| 131 |

+

На данный момент продукт находится в рабочем состоянии и готов к использованию. Наша команда EdControl видит перспективы и дальнейший путь развития продукта, добавление новых функций и расширение целевой аудитории.

|

| 132 |

+

|

| 133 |

+

- Вырезание окна с обучающимся

|

| 134 |

+

- Добавление распознавания речи (текст) и интонации (аудио) для повышения точности определения эмоционального состояния

|

| 135 |

+

- Добавление распознования бан слов и жестов

|

| 136 |

+

- Добавление функции идентификации по лицу

|

| 137 |

+

- Добавление возможности распознования эмоционального состояния в групповых звонках и конференциях

|

| 138 |

+

- Интеграция в LMS системы различных платформ

|

| 139 |

+

|

| 140 |

+

At the moment, the product is in working condition and ready for use. Our EdControl team sees prospects and the further path of product development, the addition of new features and the expansion of the target audience.

|

| 141 |

+

|

| 142 |

+

- Cutting out a window with students

|

| 143 |

+

- Adding speech recognition (text) and intonation (audio) to improve the accuracy of determining the emotional state

|

| 144 |

+

- Adding recognition of ban words and gestures

|

| 145 |

+

- Adding face identification function

|

| 146 |

+

- Adding the ability to recognize the emotional state in group calls and conferences

|

| 147 |

+

- Integration into LMS systems of various platforms
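Steps 5 and 6 of the Installation section are manual: you read the host port from `docker port`, then open it in the browser. The lookup can be automated in Python. This is a small sketch that assumes the `<container_port>/tcp -> <host>:<port>` line format shown in step 5. `url_from_docker_port` is a hypothetical helper and is not part of the repository.

```python
import re


def url_from_docker_port(output: str, container_port: int = 8501) -> str:
    """Turn `docker port` output such as '8501/tcp -> 0.0.0.0:49153' into a browser URL."""
    pattern = rf"^{container_port}/tcp -> \S*:(\d+)$"
    for line in output.splitlines():
        match = re.match(pattern, line.strip())
        if match:
            # the container listens on all interfaces; browse to it via localhost
            return f"http://localhost:{match.group(1)}"
    raise ValueError(f"no host mapping found for {container_port}/tcp")
```

When Docker publishes the port on both IPv4 and IPv6, `docker port` may print two lines. The sketch returns the first matching one.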

Readme_for_main_page.txt

@@ -0,0 +1,44 @@
+# EdControl
+
+Automated analytics service that tracks students' emotional responses during online classes.
+
+## Problem
+
+Today there are many platforms for online learning, from large online schools to smaller ones with fewer students. Because of the large flow of clients, these platforms have to recruit many teachers to conduct classes, and managers cannot always judge how competent a teacher's soft skills are when presenting material. It is therefore necessary to identify, in good time, teachers who are aggressive or unable to control their emotions, in order to protect the company's reputation.
+
+## Solving the problem
+
+Our product addresses this education-assessment problem. It saves the time managers spend manually reviewing teachers' video lessons and improves the company's business metrics by identifying incompetent teachers at an early stage. It works by recognizing the client's negative emotions during an online lesson with a teacher. You upload a recording of a video lesson to the service and get a dashboard with information and analytics for the whole lesson. When negative situations are detected, the dashboard also shows the specific timestamps at which an emotion was noticed and how intense it was.
+
+## Implementation
+
+Currently implemented:
+- Assessment of a person's emotional state
+- Data analytics and visualization for convenient analysis of video lessons
+- Recommendations to the teacher for subsequent lessons if any problems are found
+
+The CV model determines the emotion a person is experiencing at a given moment and displays its intensity on a scale from 0 to 100:
+
+girl.png
+boy.png
+
+
+
+## The appearance of the service
+
+The service's interface is intuitive, and its main page looks like this:
+3.png
+
+Once the uploaded video has been processed, you can view analytics and recommendations:
+1.png
+
+## Road Map
+
+The product is currently in working condition and ready for use. The EdControl team sees prospects for its further development, including new features and an expanded target audience:
+
+- Cropping out the window that shows the student
+- Adding speech recognition (text) and intonation analysis (audio) to improve the accuracy of emotional state detection
+- Recognizing banned words and gestures
+- Face identification
+- Recognizing emotional states in group calls and conferences
+- Integration into the LMSs of various platforms

__pycache__/emrec_model.cpython-311.pyc

Binary file (7.65 kB).

app.py

@@ -0,0 +1,56 @@
+# -*- coding: utf-8 -*-
+"""
+Created on Tue Sep 5 23:28:00 2023
+
+@author: M
+"""
+
+import streamlit as st
+import os
+
+from sidebars import video_analyse
+
+
+VIDEO_PATH = "videos"
+
+def main():
+    st.set_page_config(
+        page_title="EdControl",
+        page_icon="👁🗨",
+        layout="wide",
+        initial_sidebar_state="expanded",
+    )
+
+    column1, column2 = st.columns([1, 10])
+
+    # on page refresh, show the home page by default
+    if "sidebars" not in st.session_state:
+        st.session_state.sidebars = 0
+
+    with st.sidebar:
+        home = st.button("🏠")
+        st.title("Преподаватели")  # "Teachers"
+
+        analyse = st.button("Преподаватель 1")  # "Teacher 1"
+
+    # handle button clicks
+    if home:
+        st.session_state.sidebars = 0
+    elif analyse:
+        st.session_state.sidebars = 1
+
+    if st.session_state.sidebars == 0:
+        #column2.header("EdControl")
+        video_analyse.draw_readme(column2)
+    elif st.session_state.sidebars == 1:
+        if not os.path.exists(VIDEO_PATH):
+            os.mkdir(VIDEO_PATH)
+        video_analyse.view_side_bar("Преподаватель 1", column2, VIDEO_PATH)
+    else:
+        video_analyse.view_side_bar("Преподаватель 1", column2, VIDEO_PATH)
+
+if __name__ == "__main__":
+    main()
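Streamlit reruns the whole script on every interaction, and `st.button` returns `True` only on the rerun triggered by its own click. The page choice in `main()` therefore persists only through `st.session_state.sidebars`. This is a sketch of that decision as a pure function that can be tested without Streamlit. `resolve_page`, `HOME_PAGE` and `ANALYSIS_PAGE` are hypothetical names. The plain dict `state` stands in for `st.session_state`.

```python
HOME_PAGE = 0      # value app.py stores for the README/home view
ANALYSIS_PAGE = 1  # value app.py stores for the "Teacher 1" analysis view


def resolve_page(current_page: int, home_clicked: bool, analyse_clicked: bool) -> int:
    """Return the page to render on this rerun, mirroring the if/elif in main()."""
    if home_clicked:
        return HOME_PAGE
    if analyse_clicked:
        return ANALYSIS_PAGE
    # no sidebar button was clicked on this rerun: keep the stored page
    return current_page


# simulate a few reruns
state: dict = {}
state.setdefault("sidebars", HOME_PAGE)                            # first load -> home
state["sidebars"] = resolve_page(state["sidebars"], False, True)   # "Teacher 1" clicked
state["sidebars"] = resolve_page(state["sidebars"], False, False)  # rerun caused by another widget
```

Since no button is pressed on the last rerun, the analysis page stays selected. This is why `main()` stores the choice in session state instead of reading the buttons alone.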

emrec_model.py

@@ -0,0 +1,125 @@
+import os
+
+os.environ["TF_ENABLE_ONEDNN_OPTS"] = "0"
+os.environ["TF_CPP_MIN_LOG_LEVEL"] = "3"
+
+
+import cv2
+import numpy as np
+import polars as pl
+from attrs import define, field
+from deepface import DeepFace
+from tqdm import tqdm
+
+_SIGNIFICANT_EMOTION_PERIOD_LENGTH_IN_SECONDS: float = 5
+
+
+class VideoInputException(IOError):
+    pass
+
+
+@define(slots=True, auto_attribs=True)
+class VideoEmotionRecognizer:
+    filepath: str
+    _analyzed_frames: pl.DataFrame = field(init=False)
+
+    def __attrs_post_init__(self):
+        print("Start processing video...")
+        self._analyzed_frames = self._analyze()
+        print("Video processed")
+
+    def _analyze(self) -> pl.DataFrame:
+        # open video file
+        cap: cv2.VideoCapture = cv2.VideoCapture(self.filepath)
+        if not cap.isOpened():
+            raise VideoInputException("Video opening error")
+
+        # collect timestamps and emotion probabilities for every frame
+        analyzed_frames_data: dict = {"timestamp": [], "emotion": [], "probability": []}
+        # guard against containers that report a frame count of 0
+        total_frame_count: int = max(int(cap.get(cv2.CAP_PROP_FRAME_COUNT)), 1)
+        with tqdm(total=100, bar_format="{desc}: {percentage:.3f}% | {elapsed} < {remaining}") as pbar:
+            while cap.isOpened():
+                return_flag: bool
+                frame: np.ndarray
+                return_flag, frame = cap.read()
+                if not return_flag:
+                    break
+
+                result = DeepFace.analyze(frame, actions="emotion", enforce_detection=False, silent=True)[0]
+                analyzed_frames_data["timestamp"] += [cap.get(cv2.CAP_PROP_POS_MSEC) / 1000] * len(
+                    result["emotion"].keys()
+                )
+                analyzed_frames_data["emotion"] += list(map(str, result["emotion"].keys()))
+                analyzed_frames_data["probability"] += list(map(float, result["emotion"].values()))
+
+                pbar_update_value = 100 / total_frame_count
+                pbar.update(pbar_update_value)
+
+        # release the video file handle
+        cap.release()
+        return pl.DataFrame(analyzed_frames_data)
+
+    def emotions_summary(self) -> dict:
+        # sum probabilities of every emotion by frames
+        emotions_summary: pl.DataFrame = (
+            self._analyzed_frames.groupby("emotion")
+            .agg(pl.col("probability").sum())
+            .sort("probability", descending=True)
+        )
+
+        # normalize probabilities over all emotions, then drop the neutral one
+        emotions_summary = emotions_summary.with_columns(
+            (pl.col("probability") / pl.sum("probability")).alias("probability")
+        ).filter(pl.col("emotion") != "neutral")
+
+        # return emotion probabilities in form of dict {emotion: probability}
+        output: dict = dict(
+            zip(
+                emotions_summary["emotion"].to_list(),
+                emotions_summary["probability"].to_list(),
+            )
+        )
+
+        return output
+
+    def emotions_timestamps(self) -> dict:
+        # keep only most probable emotion in every frame
+        emotions_timestamps: pl.DataFrame = (
+            self._analyzed_frames.sort("probability", descending=True)
+            .groupby("timestamp")
+            .first()
+            .sort(by="timestamp", descending=False)
+        )
+
+        # number consecutive runs of the same emotion, then get the start and finish of every run
+        emotions_timestamps = emotions_timestamps.with_columns(
+            (pl.col("emotion") != pl.col("emotion").shift_and_fill(pl.col("emotion").backward_fill(), periods=1))
+            .cumsum()
+            .alias("emotion_group")
+        )
+        emotions_timestamps = (
+            emotions_timestamps.groupby(["emotion", "emotion_group"])
+            .agg(
+                pl.col("timestamp").min().alias("emotion_start_timestamp"),
+                pl.col("timestamp").max().alias("emotion_finish_timestamp"),
+            )
+            .drop("emotion_group")
+            .sort(by="emotion_start_timestamp", descending=False)
+        )
+
+        # keep only non-neutral emotion periods longer than the significance threshold
+        emotions_timestamps = (
+            emotions_timestamps.with_columns(
+                (pl.col("emotion_finish_timestamp") - pl.col("emotion_start_timestamp")).alias("duration")
+            )
+            .filter(pl.col("emotion") != "neutral")
+            .filter(pl.col("duration") > _SIGNIFICANT_EMOTION_PERIOD_LENGTH_IN_SECONDS)
+        )
+
+        # return start timestamps of significant emotion periods in form of dict {emotion: start_timestamp};
+        # if an emotion has several such periods, only the latest start is kept
+        output: dict = dict(
+            zip(
+                emotions_timestamps["emotion"].to_list(),
+                emotions_timestamps["emotion_start_timestamp"].to_list(),
+            )
+        )
+
+        return output
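The pure-Python sketch below reproduces the two steps of `emotions_summary` and `emotions_timestamps`. This makes their data flow easier to follow and lets you test the logic without a video, DeepFace or polars. It assumes each analyzed frame is a `(timestamp, {emotion: score})` pair, with one DeepFace `result["emotion"]` dict per frame. All names here are hypothetical.

One difference is deliberate. `significant_periods` returns a list of `(emotion, start, finish)` tuples rather than a dict keyed by emotion. That way two separate periods of the same emotion are both kept, instead of the later one overwriting the earlier.

```python
from collections import defaultdict
from itertools import groupby

# mirrors _SIGNIFICANT_EMOTION_PERIOD_LENGTH_IN_SECONDS in emrec_model.py
SIGNIFICANT_PERIOD_SECONDS = 5.0

# one analyzed frame: (timestamp in seconds, {emotion: score})
Frame = tuple[float, dict[str, float]]


def emotions_summary(frames: list[Frame]) -> dict[str, float]:
    """Share of each non-neutral emotion in the summed scores, largest first."""
    totals: dict[str, float] = defaultdict(float)
    for _, scores in frames:
        for emotion, score in scores.items():
            totals[emotion] += score
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    # normalize over all emotions (neutral included), then drop neutral
    shares = {emotion: total / grand_total for emotion, total in totals.items() if emotion != "neutral"}
    return dict(sorted(shares.items(), key=lambda item: item[1], reverse=True))


def significant_periods(frames: list[Frame]) -> list[tuple[str, float, float]]:
    """(emotion, start, finish) for every non-neutral run longer than the threshold."""
    # dominant emotion of each frame, in time order
    dominant = [(ts, max(scores, key=scores.__getitem__)) for ts, scores in sorted(frames, key=lambda f: f[0])]
    periods = []
    # groupby merges consecutive frames with the same dominant emotion into one run
    for emotion, run in groupby(dominant, key=lambda item: item[1]):
        timestamps = [ts for ts, _ in run]
        start, finish = timestamps[0], timestamps[-1]
        if emotion != "neutral" and finish - start > SIGNIFICANT_PERIOD_SECONDS:
            periods.append((emotion, start, finish))
    return periods
```

`itertools.groupby` only groups adjacent items. It plays the same role as the `shift_and_fill(...) != ...` / `cumsum()` run numbering in the polars version.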

plotlycharts/__pycache__/charts.cpython-311.pyc

Binary file (1.87 kB).

plotlycharts/charts.py

@@ -0,0 +1,45 @@
+# -*- coding: utf-8 -*-
+"""
+Created on Wed Sep 6 16:12:00 2023
+
+@author: PC
+"""
+
+import plotly.express as px
+import pandas as pd
+
+
+def radio_chart(data):
+
+    rData=list(data.values())
+    thetaData = list(data.keys())
+
+    fig = px.line_polar(
+        r = rData,
+        theta = thetaData,
+        line_close=True,
+        color_discrete_sequence=px.colors.sequential.Plasma_r,
+        template="plotly_dark")
+    fig.update_layout(
+        autosize=False,
+        width=400,
+        height=300,
+        paper_bgcolor="Black")
+
+
+    return fig
+
+def bar_chart(data):
+
+    #df = pd.DataFrame(dict(
+    #    x = [1, 5, 2, 2, 3, 2],
+    #    y = ["Anger", "Disgust","Fear",\
+    #         "Happiness","Sadness","Surprise"]))
+    xData=list(data.values())
+    yData = list(data.keys())
+    fig = px.bar(x = xData, y =yData, barmode = 'group', labels={'x': '', 'y':''}, width=500, height=300)
+    #fig.update_layout(showlegend=False)
+    fig.update_traces(marker_color = ['#f5800d','#f2ce4d','#047e79','#a69565','#cfc1af','#574c31'], marker_line_color = 'black',
+                      marker_line_width = 2, opacity = 1)
+    return fig
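`bar_chart` passes a fixed list of six colors to `update_traces`. That matches the six non-neutral emotions `emotions_summary` returns (DeepFace has seven emotion classes, and neutral is dropped). If the number of bars ever changed, though, the list would no longer line up one-to-one with the bars. Below is a minimal sketch of a hypothetical helper, not part of the commit, that always yields exactly one color per bar.

```python
from itertools import cycle, islice

# the six colors hard-coded in bar_chart
PALETTE = ["#f5800d", "#f2ce4d", "#047e79", "#a69565", "#cfc1af", "#574c31"]


def bar_colors(labels: list[str], palette: list[str] = PALETTE) -> list[str]:
    """Return exactly one color per bar, repeating the palette if there are more bars than colors."""
    return list(islice(cycle(palette), len(labels)))
```

Inside `bar_chart` it could replace the literal list, e.g. `fig.update_traces(marker_color=bar_colors(yData), ...)`.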

poetry.lock

The diff for this file is too large to render.

pyproject.toml

@@ -6,7 +6,33 @@ authors = []
 readme = "README.md"

 [tool.poetry.dependencies]
-python = ">=3.10,<3.
+python = ">=3.10,<3.11"
+tensorflow = "^2.14.0rc1"
+opencv-python = "^4.8.0.76"
+numpy = "^1.25.2"
+deepface = "^0.0.79"
+polars = "^0.19.2"
+attrs = "^23.1.0"
+plotly = "5.16.1"
+pandas = "2.1.0"
+tqdm = "^4.66.1"
+streamlit = "^1.25.0"
+
+[tool.poetry.group.linters.dependencies]
+black = "^23.7.0"
+isort = "^5.12.0"
+mypy = "^1.5.1"
+
+[tool.black]
+line-length = 120
+
+
+[tool.isort]
+line_length = 120
+
+
+[tool.mypy]
+ignore_missing_imports = true


 [build-system]

@@ -0,0 +1,94 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

+absl-py==1.4.0 ; python_version >= "3.10" and python_version < "3.11"
+altair==5.1.1 ; python_version >= "3.10" and python_version < "3.11"
+astunparse==1.6.3 ; python_version >= "3.10" and python_version < "3.11"
+attrs==23.1.0 ; python_version >= "3.10" and python_version < "3.11"
+beautifulsoup4==4.12.2 ; python_version >= "3.10" and python_version < "3.11"
+blinker==1.6.2 ; python_version >= "3.10" and python_version < "3.11"
+cachetools==5.3.1 ; python_version >= "3.10" and python_version < "3.11"
+certifi==2023.7.22 ; python_version >= "3.10" and python_version < "3.11"
+charset-normalizer==3.2.0 ; python_version >= "3.10" and python_version < "3.11"
+click==8.1.7 ; python_version >= "3.10" and python_version < "3.11"
+colorama==0.4.6 ; python_version >= "3.10" and python_version < "3.11" and platform_system == "Windows"
+deepface==0.0.79 ; python_version >= "3.10" and python_version < "3.11"
+filelock==3.12.2 ; python_version >= "3.10" and python_version < "3.11"
+fire==0.5.0 ; python_version >= "3.10" and python_version < "3.11"
+flask==2.3.3 ; python_version >= "3.10" and python_version < "3.11"
+flatbuffers==23.5.26 ; python_version >= "3.10" and python_version < "3.11"
+gast==0.4.0 ; python_version >= "3.10" and python_version < "3.11"
+gdown==4.7.1 ; python_version >= "3.10" and python_version < "3.11"
+gitdb==4.0.10 ; python_version >= "3.10" and python_version < "3.11"
+gitpython==3.1.35 ; python_version >= "3.10" and python_version < "3.11"
+google-auth-oauthlib==1.0.0 ; python_version >= "3.10" and python_version < "3.11"
+google-auth==2.22.0 ; python_version >= "3.10" and python_version < "3.11"
+google-pasta==0.2.0 ; python_version >= "3.10" and python_version < "3.11"
+grpcio==1.58.0 ; python_version >= "3.10" and python_version < "3.11"
+gunicorn==21.2.0 ; python_version >= "3.10" and python_version < "3.11"
+h5py==3.9.0 ; python_version >= "3.10" and python_version < "3.11"
+idna==3.4 ; python_version >= "3.10" and python_version < "3.11"
+importlib-metadata==6.8.0 ; python_version >= "3.10" and python_version < "3.11"
+itsdangerous==2.1.2 ; python_version >= "3.10" and python_version < "3.11"
+jinja2==3.1.2 ; python_version >= "3.10" and python_version < "3.11"
+jsonschema-specifications==2023.7.1 ; python_version >= "3.10" and python_version < "3.11"
+jsonschema==4.19.0 ; python_version >= "3.10" and python_version < "3.11"
+keras==2.14.0rc0 ; python_version >= "3.10" and python_version < "3.11"
+libclang==16.0.6 ; python_version >= "3.10" and python_version < "3.11"
+markdown-it-py==3.0.0 ; python_version >= "3.10" and python_version < "3.11"
+markdown==3.4.4 ; python_version >= "3.10" and python_version < "3.11"
+markupsafe==2.1.3 ; python_version >= "3.10" and python_version < "3.11"
+mdurl==0.1.2 ; python_version >= "3.10" and python_version < "3.11"
+ml-dtypes==0.2.0 ; python_version >= "3.10" and python_version < "3.11"
+mtcnn==0.1.1 ; python_version >= "3.10" and python_version < "3.11"
+numpy==1.25.2 ; python_version >= "3.10" and python_version < "3.11"
+oauthlib==3.2.2 ; python_version >= "3.10" and python_version < "3.11"
+opencv-python==4.8.0.76 ; python_version >= "3.10" and python_version < "3.11"
+opt-einsum==3.3.0 ; python_version >= "3.10" and python_version < "3.11"
+packaging==23.1 ; python_version >= "3.10" and python_version < "3.11"
+pandas==2.1.0 ; python_version >= "3.10" and python_version < "3.11"
+pillow==9.5.0 ; python_version >= "3.10" and python_version < "3.11"
+plotly==5.16.1 ; python_version >= "3.10" and python_version < "3.11"
+polars==0.19.2 ; python_version >= "3.10" and python_version < "3.11"
+protobuf==4.24.3 ; python_version >= "3.10" and python_version < "3.11"
+pyarrow==13.0.0 ; python_version >= "3.10" and python_version < "3.11"
+pyasn1-modules==0.3.0 ; python_version >= "3.10" and python_version < "3.11"
+pyasn1==0.5.0 ; python_version >= "3.10" and python_version < "3.11"
+pydeck==0.8.0 ; python_version >= "3.10" and python_version < "3.11"
+pygments==2.16.1 ; python_version >= "3.10" and python_version < "3.11"
+pympler==1.0.1 ; python_version >= "3.10" and python_version < "3.11"
+pysocks==1.7.1 ; python_version >= "3.10" and python_version < "3.11"
+python-dateutil==2.8.2 ; python_version >= "3.10" and python_version < "3.11"
+pytz-deprecation-shim==0.1.0.post0 ; python_version >= "3.10" and python_version < "3.11"
+pytz==2023.3.post1 ; python_version >= "3.10" and python_version < "3.11"
+referencing==0.30.2 ; python_version >= "3.10" and python_version < "3.11"
+requests-oauthlib==1.3.1 ; python_version >= "3.10" and python_version < "3.11"
+requests==2.31.0 ; python_version >= "3.10" and python_version < "3.11"
+requests[socks]==2.31.0 ; python_version >= "3.10" and python_version < "3.11"
+retina-face==0.0.13 ; python_version >= "3.10" and python_version < "3.11"
+rich==13.5.2 ; python_version >= "3.10" and python_version < "3.11"
+rpds-py==0.10.2 ; python_version >= "3.10" and python_version < "3.11"
+rsa==4.9 ; python_version >= "3.10" and python_version < "3.11"
+setuptools==68.2.0 ; python_version >= "3.10" and python_version < "3.11"
+six==1.16.0 ; python_version >= "3.10" and python_version < "3.11"
+smmap==5.0.0 ; python_version >= "3.10" and python_version < "3.11"
+soupsieve==2.5 ; python_version >= "3.10" and python_version < "3.11"
+streamlit==1.26.0 ; python_version >= "3.10" and python_version < "3.11"
+tenacity==8.2.3 ; python_version >= "3.10" and python_version < "3.11"
+tensorboard-data-server==0.7.1 ; python_version >= "3.10" and python_version < "3.11"
+tensorboard==2.14.0 ; python_version >= "3.10" and python_version < "3.11"
+tensorflow-estimator==2.14.0rc0 ; python_version >= "3.10" and python_version < "3.11"
+tensorflow-io-gcs-filesystem==0.33.0 ; python_version >= "3.10" and python_version < "3.11" and platform_machine != "arm64" or python_version >= "3.10" and python_version < "3.11" and platform_system != "Darwin"
+tensorflow==2.14.0rc1 ; python_version >= "3.10" and python_version < "3.11"
+termcolor==2.3.0 ; python_version >= "3.10" and python_version < "3.11"
+toml==0.10.2 ; python_version >= "3.10" and python_version < "3.11"
+toolz==0.12.0 ; python_version >= "3.10" and python_version < "3.11"
+tornado==6.3.3 ; python_version >= "3.10" and python_version < "3.11"
+tqdm==4.66.1 ; python_version >= "3.10" and python_version < "3.11"
+typing-extensions==4.5.0 ; python_version >= "3.10" and python_version < "3.11"
+tzdata==2023.3 ; python_version >= "3.10" and python_version < "3.11"
+tzlocal==4.3.1 ; python_version >= "3.10" and python_version < "3.11"
+urllib3==1.26.16 ; python_version >= "3.10" and python_version < "3.11"
+validators==0.22.0 ; python_version >= "3.10" and python_version < "3.11"
+watchdog==3.0.0 ; python_version >= "3.10" and python_version < "3.11" and platform_system != "Darwin"
+werkzeug==2.3.7 ; python_version >= "3.10" and python_version < "3.11"
+wheel==0.41.2 ; python_version >= "3.10" and python_version < "3.11"
+wrapt==1.14.1 ; python_version >= "3.10" and python_version < "3.11"
+zipp==3.16.2 ; python_version >= "3.10" and python_version < "3.11"
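Every pinned line in this requirements file has the shape `name==version ; marker`, where the PEP 508 environment marker restricts the pin to Python 3.10.x (a few lines add a platform condition, e.g. `colorama` only on Windows). A minimal stdlib-only sketch of splitting such a line into its parts, assuming exact `==` pins like the ones above; `split_pinned_requirement` is a hypothetical helper, not part of the project, and it does not handle other specifiers or the full PEP 508 grammar:

```python
def split_pinned_requirement(line):
    """Split 'name==version ; marker' into (name, version, marker).

    Assumes an exact '==' pin. The marker is optional and returned verbatim
    (it is NOT evaluated); None is returned when there is no marker.
    """
    requirement, _, marker = line.partition(";")
    name, sep, version = requirement.partition("==")
    if not sep:
        raise ValueError(f"not an exact '==' pin: {line!r}")
    return name.strip(), version.strip(), marker.strip() or None


line = 'requests[socks]==2.31.0 ; python_version >= "3.10" and python_version < "3.11"'
print(split_pinned_requirement(line))
```

Splitting on `;` before `==` matters because markers such as `platform_system == "Windows"` contain `==` themselves. Actually evaluating a marker against the running interpreter is better left to `packaging.markers.Marker(...).evaluate()` from the `packaging` distribution pinned above.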

Binary file (9.58 kB).

@@ -0,0 +1,159 @@
+# -*- coding: utf-8 -*-
+"""
+Created on Tue Sep 5 23:45:21 2023
+
+@author: M
+"""
+import cv2
+import emrec_model as EM
+import glob
+import streamlit as st
+import os
+
+from plotlycharts import charts
+
+
+#VIDEO_PATH = "C:\\Users\\ПК\\Videos"
+
+README_IMG_FOLDER = "img"
+README_FILE_PATH = "Readme_for_main_page.txt"
+
+EMOTIONS_RU = {"angry":"Злость", "disgust":"Отвращение", "fear":"Страх",
+               "happy":"Счастье", "sad":"Грусть", "surprise":"Удивление"}  # model label -> Russian UI label
+
+def draw_readme(column):
+    print(os.listdir("."))  # debug: list the working directory
+    with open(README_FILE_PATH, 'r') as f:
+        readme_line = f.readlines()
+    buffer = []
+    resourses = [os.path.basename(x) for x in glob.glob(os.path.join(README_IMG_FOLDER, "*"))]
+    for line in readme_line:
+        buffer.append(line)
+        for imgFolder in resourses:
+            if imgFolder in line:
+                column.markdown(''.join(buffer[:-1]))
+                column.image(os.path.join(README_IMG_FOLDER, imgFolder), use_column_width = True)
+                buffer.clear()
+    column.markdown(''.join(buffer))
+
+def seconds_to_time(seconds):
+
+    seconds = seconds % (24 * 3600)
+    hour = seconds // 3600
+    seconds %= 3600
+    minutes = seconds // 60
+    seconds %= 60
+    time = "%d:%02d:%02d" % (hour, minutes, seconds)
+
+    return time
+
+def count_video_length(path, name):
+
+    path_to_video = os.path.join(path, name)
+    cap = cv2.VideoCapture(path_to_video)
+    fps = cap.get(cv2.CAP_PROP_FPS)
+    frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
+    duration = frame_count/fps
+    length = duration / 60 # duration in minutes
+    cap.release()
+    return length
+
+# Renders the expanders shown before a video is uploaded; the argument is the column everything is rendered in
+def default_expanders(teacher):
+
+    with teacher.expander(":red[ФИО_дата_время1.mp4]"):  # placeholder: "FullName_date_time1.mp4"
+        st.write("...")
+    with teacher.expander(":green[ФИО_дата_время1.mp4]"):
+        st.write("...")
+    with teacher.expander(":orange[ФИО_дата_время1.mp4]"):
+        st.write("...")
+
+# Renders the expanders shown after a video is uploaded; arguments: the column, the uploaded video file, the directory it was saved to, and the per-emotion summary
+def add_expander(teacher, video, path, data):
+
+
+    with teacher.expander(":green[загруженное видео]"):  # "uploaded video"
+        st.write("Аналитика")  # "Analytics"
+        info, timeStamps = st.columns([4, 6])
+
+        # read the saved video back from disk
+        with open(os.path.join(path, video.name), 'rb') as video_file:
+            video_bytes = video_file.read()
+        #info.write("videoplayer")
+        info.video(video_bytes)
+
+        timeStamps.text("Временные отметки ⬇")  # "Timestamps"
+
+
+
+        stampData = [{"время": "5:10", "Эмоция":"Удивление"},  # placeholder rows: "time", "emotion"
+                     {"время": "8:09", "Эмоция":"Грусть"},
+                     {"время": "11:14", "Эмоция":"Счастье"}]
+
+        timeStamps.data_editor(stampData, disabled = True)
+
+
+        # chart-type selectbox (not implemented yet; both charts are drawn below)
+
+
+
+        radio = charts.radio_chart(data)
+        info.plotly_chart(radio, use_container_width=True)
+
+        bar = charts.bar_chart(data)
+        info.plotly_chart(bar, use_container_width=True)
+
+
+    with teacher.expander(":red[ФИО_дата_время1.mp4]"):
+        st.write("...")
+    with teacher.expander(":green[ФИО_дата_время1.mp4]"):
+        st.write("...")
+    with teacher.expander(":orange[ФИО_дата_время1.mp4]"):
+        st.write("...")
+
+# View shown when a teacher is selected
+def view_side_bar(name, teacher, path):
+    teacher.header("📸"+name)
+
+    rating, info = teacher.columns([3,5])
+
+    rating.header("Рейтинг")  # "Rating"
+    rating.text("⭐⭐⭐")
+
+    info.header("Информация")  # "Information"
+    info.text("email, phone")
+
+    # File upload
+    videoFile = teacher.file_uploader("Загрузить видео", type='mp4',  # "Upload video"
+                                      accept_multiple_files=False)
+
+
+    # If a video was uploaded, save it, analyse it and render the extra expanders
+    if videoFile is not None:
+
+
+        # write the uploaded file to the target directory
+        with open(os.path.join(path, videoFile.name),"wb") as f:
+            f.write(videoFile.getbuffer())
+        videoLength = count_video_length(path, videoFile.name)
+        if 0.01 <= videoLength <= 3:  # accepted length: 0.01 to 3 minutes
+            with teacher.status("Обработка"):  # "Processing"
+                model = EM.VideoEmotionRecognizer(os.path.join(path, videoFile.name))
+                outputSummary = model.emotions_summary()
+
+            ruData = {}
+            for key, value in outputSummary.items():
+                ruData[EMOTIONS_RU[key]] = value
+
+            teacher.header("Выбор видео")  # "Choose a video"
+            add_expander(teacher, videoFile, path, ruData)
+        else:
+            teacher.error("Длинное видео")  # "Video too long" (also shown for clips under 0.01 min)
+            teacher.header("Выбор урока")  # "Choose a lesson"
+            default_expanders(teacher)
+    # Otherwise render the default set of expanders
+    else:
+
+        teacher.header("Выбор видео")
+
+        default_expanders(teacher)
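`draw_readme` walks the README line by line and, whenever a line mentions the file name of one of the images in `img/`, flushes the Markdown collected so far (dropping that line itself, since it only references the image) and renders the image in its place. The same splitting logic can be sketched as a pure function that is testable without Streamlit; `split_readme` and its `("markdown", ...)` / `("image", ...)` segment tuples are hypothetical names, not part of the project. Unlike the original, the sketch skips empty Markdown segments instead of calling `column.markdown("")`:

```python
def split_readme(lines, image_names):
    """Split README lines into ("markdown", text) and ("image", name) segments.

    A line that mentions an image file name is treated as that image's
    placeholder: the text collected before it becomes one Markdown segment,
    the line itself is dropped, and an image segment follows.
    """
    segments = []
    buffer = []
    for line in lines:
        buffer.append(line)
        for name in image_names:
            if name in line:
                text = "".join(buffer[:-1])  # everything before the image line
                if text:
                    segments.append(("markdown", text))
                segments.append(("image", name))
                buffer.clear()
    tail = "".join(buffer)  # text after the last image
    if tail:
        segments.append(("markdown", tail))
    return segments
```

In the app, each segment would then map to `column.markdown(text)` or `column.image(os.path.join(README_IMG_FOLDER, name), use_column_width=True)`.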

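The timestamps table in `add_expander` is filled with hard-coded placeholder rows, and `view_side_bar` translates labels with `EMOTIONS_RU[key]`, which raises `KeyError` for any label missing from the dict. A sketch of building the same `{"время": ..., "Эмоция": ...}` rows ("time", "emotion") from `(seconds, label)` pairs with `seconds_to_time`; the input format and the name `timestamps_to_rows` are assumptions, since the return value of the model's `emotions_timestamps` method is not shown in this diff:

```python
EMOTIONS_RU = {"angry": "Злость", "disgust": "Отвращение", "fear": "Страх",
               "happy": "Счастье", "sad": "Грусть", "surprise": "Удивление"}


def seconds_to_time(seconds):
    """H:MM:SS formatting, same arithmetic as in video_analyse.py (wraps at 24 h)."""
    seconds = seconds % (24 * 3600)
    hour = seconds // 3600
    seconds %= 3600
    minutes = seconds // 60
    seconds %= 60
    return "%d:%02d:%02d" % (hour, minutes, seconds)


def timestamps_to_rows(timestamps):
    """Turn hypothetical (seconds, emotion_label) pairs into data_editor rows.

    Labels missing from EMOTIONS_RU (e.g. "neutral") are passed through
    untranslated instead of raising KeyError.
    """
    return [{"время": seconds_to_time(sec), "Эмоция": EMOTIONS_RU.get(label, label)}
            for sec, label in timestamps]
```

The rows could then replace `stampData` in `timeStamps.data_editor(..., disabled=True)`. Note that `seconds_to_time` produces `H:MM:SS`, whereas the placeholder values look like `M:SS`.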
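`count_video_length` divides the frame count by `CAP_PROP_FPS`; OpenCV can report 0 for these properties when it fails to open or parse a file, which would raise `ZeroDivisionError`. A defensive sketch that keeps the arithmetic in a pure helper (testable without OpenCV), imports `cv2` only when a file is actually probed, and always releases the capture. `duration_minutes`, `probe_video_minutes` and `is_allowed_length` are hypothetical names; the 0.01 to 3 minute bounds are copied from `view_side_bar`:

```python
def duration_minutes(frame_count, fps):
    """Return the duration in minutes, or None if the metadata is unusable."""
    if fps <= 0 or frame_count <= 0:
        return None
    return frame_count / fps / 60


def probe_video_minutes(path_to_video):
    """Read frame count and FPS with OpenCV; the capture is always released."""
    import cv2  # imported lazily so duration_minutes() works without OpenCV

    cap = cv2.VideoCapture(path_to_video)
    try:
        if not cap.isOpened():
            return None
        fps = cap.get(cv2.CAP_PROP_FPS)
        frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        return duration_minutes(frame_count, fps)
    finally:
        cap.release()


def is_allowed_length(minutes, min_minutes=0.01, max_minutes=3):
    """Same bounds as view_side_bar; None (unreadable video) is rejected."""
    return minutes is not None and min_minutes <= minutes <= max_minutes
```

Returning `None` for unreadable files lets the caller show a dedicated message instead of the "Длинное видео" ("Video too long") error, which the original also shows for clips shorter than 0.01 minutes.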