Commit 4d1ebf3 (parent: 663e9a6)

track-anything --version 1

This view is limited to 50 files because it contains too many changes.

- .gitattributes +3 -0

- LICENSE +21 -0

- README.md +47 -13

- XMem-s012.pth +3 -0

- app.py +362 -0

- app_save.py +381 -0

- app_test.py +23 -0

- assets/demo_version_1.MP4 +3 -0

- assets/inpainting.gif +3 -0

- assets/poster_demo_version_1.png +0 -0

- assets/qingming.mp4 +3 -0

- demo.py +87 -0

- images/groceries.jpg +0 -0

- images/mask_painter.png +0 -0

- images/painter_input_image.jpg +0 -0

- images/painter_input_mask.jpg +0 -0

- images/painter_output_image.png +0 -0

- images/painter_output_image__.png +0 -0

- images/point_painter.png +0 -0

- images/point_painter_1.png +0 -0

- images/point_painter_2.png +0 -0

- images/truck.jpg +0 -0

- images/truck_both.jpg +0 -0

- images/truck_mask.jpg +0 -0

- images/truck_point.jpg +0 -0

- inpainter/.DS_Store +0 -0

- inpainter/base_inpainter.py +160 -0

- inpainter/config/config.yaml +4 -0

- inpainter/model/e2fgvi.py +350 -0

- inpainter/model/e2fgvi_hq.py +350 -0

- inpainter/model/modules/feat_prop.py +149 -0

- inpainter/model/modules/flow_comp.py +450 -0

- inpainter/model/modules/spectral_norm.py +288 -0

- inpainter/model/modules/tfocal_transformer.py +536 -0

- inpainter/model/modules/tfocal_transformer_hq.py +565 -0

- inpainter/util/__init__.py +0 -0

- inpainter/util/tensor_util.py +24 -0

- requirements.txt +17 -0

- sam_vit_h_4b8939.pth +3 -0

- template.html +27 -0

- templates/index.html +50 -0

- text_server.py +72 -0

- tools/__init__.py +0 -0

- tools/base_segmenter.py +129 -0

- tools/interact_tools.py +265 -0

- tools/mask_painter.py +288 -0

- tools/painter.py +215 -0

- track_anything.py +93 -0

- tracker/.DS_Store +0 -0

- tracker/base_tracker.py +233 -0

.gitattributes

CHANGED

```diff
@@ -32,3 +32,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/demo_version_1.MP4 filter=lfs diff=lfs merge=lfs -text
+assets/inpainting.gif filter=lfs diff=lfs merge=lfs -text
+assets/qingming.mp4 filter=lfs diff=lfs merge=lfs -text
```
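These patterns decide which files are stored as LFS pointers. The sketch below approximates the matching for the six patterns visible in this hunk only; lines 1 to 31 of the file are not shown here, so other rules (for example whichever one turns the `.pth` checkpoints into pointers) are missing. It is an approximation, not git's own matcher: `fnmatch` lets `*` cross `/` boundaries, which git does not. For an authoritative answer, `git check-attr filter -- <path>` asks git directly. The helper name `is_lfs_tracked` is hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Only the LFS patterns visible in the .gitattributes hunk above.
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "assets/demo_version_1.MP4",
    "assets/inpainting.gif",
    "assets/qingming.mp4",
)


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Rough gitattributes-style check for a repo-relative POSIX path.

    A pattern without '/' is matched against the basename at any depth;
    a pattern containing '/' is matched against the whole path.
    """
    name = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatchcase(target, pattern):
            return True
    return False
```

For example, `is_lfs_tracked("logs/events.out.tfevents.1")` is true through `*tfevents*`, while `assets/poster_demo_version_1.png` matches none of these patterns, consistent with it appearing as a plain `+0 -0` binary in the file list.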

LICENSE

ADDED

@@ -0,0 +1,21 @@

```text
MIT License

Copyright (c) 2023 Mingqi Gao

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

README.md

CHANGED

|

@@ -1,13 +1,47 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Track-Anything

|

| 2 |

+

|

| 3 |

+

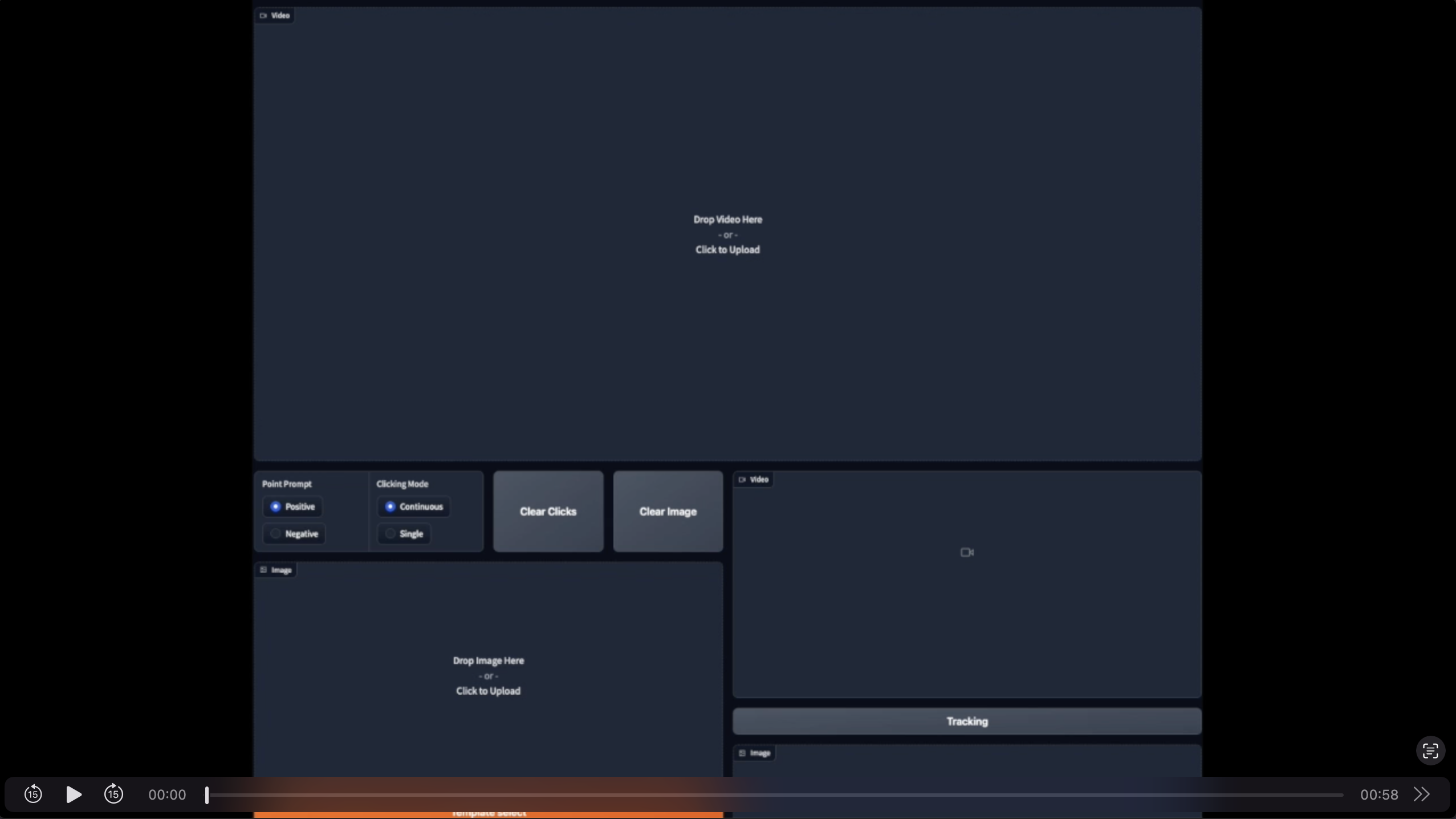

***Track-Anything*** is a flexible and interactive tool for video object tracking and segmentation. It is developed upon [Segment Anything](https://github.com/facebookresearch/segment-anything), can specify anything to track and segment via user clicks only. During tracking, users can flexibly change the objects they wanna track or correct the region of interest if there are any ambiguities. These characteristics enable ***Track-Anything*** to be suitable for:

|

| 4 |

+

- Video object tracking and segmentation with shot changes.

|

| 5 |

+

- Data annnotation for video object tracking and segmentation.

|

| 6 |

+

- Object-centric downstream video tasks, such as video inpainting and editing.

|

| 7 |

+

|

| 8 |

+

## Demo

|

| 9 |

+

|

| 10 |

+

https://user-images.githubusercontent.com/28050374/232842703-8395af24-b13e-4b8e-aafb-e94b61e6c449.MP4

|

| 11 |

+

|

| 12 |

+

### Multiple Object Tracking and Segmentation (with [XMem](https://github.com/hkchengrex/XMem))

|

| 13 |

+

|

| 14 |

+

https://user-images.githubusercontent.com/39208339/233035206-0a151004-6461-4deb-b782-d1dbfe691493.mp4

|

| 15 |

+

|

| 16 |

+

### Video Object Tracking and Segmentation with Shot Changes (with [XMem](https://github.com/hkchengrex/XMem))

|

| 17 |

+

|

| 18 |

+

https://user-images.githubusercontent.com/30309970/232848349-f5e29e71-2ea4-4529-ac9a-94b9ca1e7055.mp4

|

| 19 |

+

|

| 20 |

+

### Video Inpainting (with [E2FGVI](https://github.com/MCG-NKU/E2FGVI))

|

| 21 |

+

|

| 22 |

+

https://user-images.githubusercontent.com/28050374/232959816-07f2826f-d267-4dda-8ae5-a5132173b8f4.mp4

|

| 23 |

+

|

| 24 |

+

## Get Started

|

| 25 |

+

#### Linux

|

| 26 |

+

```bash

|

| 27 |

+

# Clone the repository:

|

| 28 |

+

git clone https://github.com/gaomingqi/Track-Anything.git

|

| 29 |

+

cd Track-Anything

|

| 30 |

+

|

| 31 |

+

# Install dependencies:

|

| 32 |

+

pip install -r requirements.txt

|

| 33 |

+

|

| 34 |

+

# Install dependencies for inpainting:

|

| 35 |

+

pip install -U openmim

|

| 36 |

+

mim install mmcv

|

| 37 |

+

|

| 38 |

+

# Install dependencies for editing

|

| 39 |

+

pip install madgrad

|

| 40 |

+

|

| 41 |

+

# Run the Track-Anything gradio demo.

|

| 42 |

+

python app.py --device cuda:0 --sam_model_type vit_h --port 12212

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

## Acknowledgements

|

| 46 |

+

|

| 47 |

+

The project is based on [Segment Anything](https://github.com/facebookresearch/segment-anything), [XMem](https://github.com/hkchengrex/XMem), and [E2FGVI](https://github.com/MCG-NKU/E2FGVI). Thanks for the authors for their efforts.

|

XMem-s012.pth

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:16205ad04bfc55b442bd4d7af894382e09868b35e10721c5afc09a24ea8d72d9
size 249026057
```
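The committed `.pth` file is a three-line Git LFS pointer, not the weights themselves: `oid` is the SHA-256 of the real blob and `size` its byte count (249,026,057 bytes, roughly 237 MiB). A minimal sketch, assuming only the `key value` line layout shown above, of parsing such a pointer and checking a fetched blob against it; `parse_lfs_pointer` and `matches_pointer` are hypothetical helper names, not part of this commit.

```python
import hashlib
import os
import tempfile


def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"oid_algo": algo, "oid": digest, "size": int(fields["size"])}


def matches_pointer(path: str, info: dict) -> bool:
    """Check that a blob on disk has the size and SHA-256 digest the pointer promises."""
    digest = hashlib.sha256()
    size = 0
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so large checkpoints are never fully loaded into memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
            size += len(chunk)
    return size == info["size"] and digest.hexdigest() == info["oid"]


POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:16205ad04bfc55b442bd4d7af894382e09868b35e10721c5afc09a24ea8d72d9
size 249026057
"""
info = parse_lfs_pointer(POINTER)

# Self-check of matches_pointer on a tiny temporary file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello")
demo_ok = matches_pointer(tmp.name, {"oid": hashlib.sha256(b"hello").hexdigest(), "size": 5})
os.remove(tmp.name)
```

Running `matches_pointer("XMem-s012.pth", info)` after an LFS fetch would be a quick way to confirm the checkpoint was not left as a pointer stub.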

app.py

ADDED

@@ -0,0 +1,362 @@

```python
import gradio as gr
from demo import automask_image_app, automask_video_app, sahi_autoseg_app
import argparse
import cv2
import time
from PIL import Image
import numpy as np
import os
import sys
sys.path.append(sys.path[0]+"/tracker")
sys.path.append(sys.path[0]+"/tracker/model")
from track_anything import TrackingAnything
from track_anything import parse_augment
import requests
import json
import torchvision
import torch
import concurrent.futures
import queue

# download checkpoints
def download_checkpoint(url, folder, filename):
    os.makedirs(folder, exist_ok=True)
    filepath = os.path.join(folder, filename)

    if not os.path.exists(filepath):
        print("download checkpoints ......")
        response = requests.get(url, stream=True)
        # fail on HTTP errors instead of saving an error page as the checkpoint
        response.raise_for_status()
        with open(filepath, "wb") as f:
            for chunk in response.iter_content(chunk_size=8192):
                if chunk:
                    f.write(chunk)

        print("downloaded successfully!")

    return filepath
```
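One weakness of the check above is that an interrupted download leaves a partial file at `filepath`, which the next run's `os.path.exists` test accepts as a finished checkpoint. A minimal sketch of an alternative, assuming the standard library is acceptable in place of `requests`: stream into a `.part` file and rename it only once the copy completes. This is an illustration, not the code in this commit.

```python
import os
import shutil
import tempfile
import urllib.request
from pathlib import Path


def download_checkpoint(url: str, folder: str, filename: str, chunk_size: int = 8192) -> str:
    """Fetch url into folder/filename unless that file already exists."""
    os.makedirs(folder, exist_ok=True)
    filepath = os.path.join(folder, filename)
    if os.path.exists(filepath):
        return filepath

    partial = filepath + ".part"
    try:
        # urlopen raises HTTPError on 4xx/5xx responses, so an error page is never saved.
        with urllib.request.urlopen(url) as response, open(partial, "wb") as f:
            shutil.copyfileobj(response, f, chunk_size)
    except BaseException:
        if os.path.exists(partial):
            os.remove(partial)
        raise
    # Only a fully written file receives the final name the exists-check looks for.
    os.replace(partial, filepath)
    return filepath


# Usage with a local file:// URL so the sketch runs offline.
workdir = tempfile.mkdtemp()
source = Path(workdir, "weights.bin")
source.write_bytes(b"fake-checkpoint-bytes")
path = download_checkpoint(source.as_uri(), os.path.join(workdir, "checkpoints"), "weights.bin")
```

`os.replace` is atomic when source and destination are on the same filesystem, which holds here because the `.part` file sits next to its final path.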

```python
# convert points input to prompt state
def get_prompt(click_state, click_input):
    inputs = json.loads(click_input)
    points = click_state[0]
    labels = click_state[1]
    for click in inputs:
        points.append(click[:2])
        labels.append(click[2])
    click_state[0] = points
    click_state[1] = labels
    prompt = {
        "prompt_type": ["click"],
        "input_point": click_state[0],
        "input_label": click_state[1],
        "multimask_output": True,
    }
    return prompt

# extract frames from upload video
def get_frames_from_video(video_input, video_state):
    """
    Args:
        video_input: path to the uploaded video file
        video_state: previous video state (replaced by a freshly initialized one)
    Return:
        video_state: dict holding the decoded RGB frames and empty per-frame mask/logit slots
        a gr.update that shows the frame-selection slider, sized to the number of frames
    """
    video_path = video_input
    frames = []
    fps = 30  # fallback if the frame rate cannot be read
    try:
        cap = cv2.VideoCapture(video_path)
        fps = cap.get(cv2.CAP_PROP_FPS) or fps
        while cap.isOpened():
            ret, frame = cap.read()
            if ret:
                frames.append(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            else:
                break
        cap.release()
    except (OSError, TypeError, ValueError, KeyError, SyntaxError) as e:
        print("read_frame_source:{} error. {}\n".format(video_path, str(e)))

    # initialize video_state
    video_state = {
        "video_name": os.path.split(video_path)[-1],
        "origin_images": frames,
        "painted_images": frames.copy(),
        "masks": [None]*len(frames),
        "logits": [None]*len(frames),
        "select_frame_number": 0,
        "fps": fps
    }
    return video_state, gr.update(visible=True, maximum=len(frames), value=1)
```
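`get_prompt` never resets anything: the two lists inside `click_state` are mutated in place, so each new click is appended to every earlier one and SAM always sees the full click history for the template frame. That is why the UI needs a separate "Clear Clicks" button that restores `[[], []]`. A standalone sketch of the same accumulation, assuming clicks arrive as a JSON string of `[x, y, label]` triples as in `sam_refine`:

```python
import json


def get_prompt(click_state, click_input):
    """Append clicks encoded as '[[x, y, label], ...]' to click_state and build the prompt dict."""
    points, labels = click_state  # the same list objects, so appends persist between calls
    for x, y, label in json.loads(click_input):
        points.append([x, y])
        labels.append(label)
    return {
        "prompt_type": ["click"],
        "input_point": points,
        "input_label": labels,
        "multimask_output": True,
    }


click_state = [[], []]
get_prompt(click_state, "[[120,80,1]]")            # positive click
prompt = get_prompt(click_state, "[[300,210,0]]")  # negative click
```

After the second call the prompt carries both clicks, `[[120, 80], [300, 210]]` with labels `[1, 0]`, and `click_state` holds the same data.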

```python
# get the select frame from gradio slider
def select_template(image_selection_slider, video_state):

    # the slider is 1-based, frame indices are 0-based
    image_selection_slider -= 1
    video_state["select_frame_number"] = image_selection_slider

    # once select a new template frame, set the image in sam
    model.samcontroler.sam_controler.reset_image()
    model.samcontroler.sam_controler.set_image(video_state["origin_images"][image_selection_slider])

    return video_state["painted_images"][image_selection_slider], video_state

# use sam to get the mask
def sam_refine(video_state, point_prompt, click_state, interactive_state, evt:gr.SelectData):
    """
    Args:
        video_state: current video state (frames, masks, selected frame number)
        point_prompt: "Positive" or "Negative", from the point-prompt radio button
        click_state: [[points], [labels]]
        interactive_state: click and inference counters
        evt: gradio SelectData; evt.index holds the clicked pixel coordinates
    """
    if point_prompt == "Positive":
        coordinate = "[[{},{},1]]".format(evt.index[0], evt.index[1])
        interactive_state["positive_click_times"] += 1
    else:
        coordinate = "[[{},{},0]]".format(evt.index[0], evt.index[1])
        interactive_state["negative_click_times"] += 1

    # prompt for sam model
    prompt = get_prompt(click_state=click_state, click_input=coordinate)

    mask, logit, painted_image = model.first_frame_click(
        image=video_state["origin_images"][video_state["select_frame_number"]],
        points=np.array(prompt["input_point"]),
        labels=np.array(prompt["input_label"]),
        multimask=prompt["multimask_output"],
    )
    video_state["masks"][video_state["select_frame_number"]] = mask
    video_state["logits"][video_state["select_frame_number"]] = logit
    video_state["painted_images"][video_state["select_frame_number"]] = painted_image

    return painted_image, video_state, interactive_state
```
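`sam_refine` hand-builds a JSON string from the click position and the radio-button value: "Positive" becomes label 1 (include this region) and anything else label 0 (exclude it), and the matching counter is bumped. A minimal sketch of that encoding step in isolation; `encode_click` is a hypothetical name, and it uses `json.dumps` rather than `str.format` so the string is valid JSON by construction.

```python
import json


def encode_click(index, point_prompt, interactive_state):
    """Encode a clicked (x, y) position as the '[[x, y, label]]' string get_prompt expects."""
    label = 1 if point_prompt == "Positive" else 0
    key = "positive_click_times" if label == 1 else "negative_click_times"
    interactive_state[key] += 1
    return json.dumps([[int(index[0]), int(index[1]), label]])


state = {"positive_click_times": 0, "negative_click_times": 0}
first = encode_click((120, 80), "Positive", state)
second = encode_click((300, 210), "Negative", state)
```

The counters only feed the diagnostic `print` in `vos_tracking_video`; they do not influence the masks.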

```python
# tracking vos
def vos_tracking_video(video_state, interactive_state):
    model.xmem.clear_memory()
    following_frames = video_state["origin_images"][video_state["select_frame_number"]:]
    template_mask = video_state["masks"][video_state["select_frame_number"]]
    fps = video_state["fps"]
    masks, logits, painted_images = model.generator(images=following_frames, template_mask=template_mask)

    video_state["masks"][video_state["select_frame_number"]:] = masks
    video_state["logits"][video_state["select_frame_number"]:] = logits
    video_state["painted_images"][video_state["select_frame_number"]:] = painted_images

    # name the output video after the input video
    video_output = generate_video_from_frames(video_state["painted_images"], output_path="./result/{}".format(video_state["video_name"]), fps=fps)
    interactive_state["inference_times"] += 1

    print("For generating this tracking result, inference times: {}, click times: {}, positive: {}, negative: {}".format(
        interactive_state["inference_times"],
        interactive_state["positive_click_times"] + interactive_state["negative_click_times"],
        interactive_state["positive_click_times"],
        interactive_state["negative_click_times"]))

    #### shanggao code for mask save
    if interactive_state["mask_save"]:
        mask_dir = './result/mask/{}'.format(video_state["video_name"].split('.')[0])
        os.makedirs(mask_dir, exist_ok=True)
        print("save mask")
        for i, mask in enumerate(video_state["masks"]):
            np.save(os.path.join(mask_dir, '{:05d}.npy'.format(i)), mask)
        # save_mask(video_state["masks"], video_state["video_name"])
    #### shanggao code for mask save
    return video_output, video_state, interactive_state

# generate video after vos inference
def generate_video_from_frames(frames, output_path, fps=30):
    """
    Generates a video from a list of frames.

    Args:
        frames (list of numpy arrays): The frames to include in the video, each H x W x 3 uint8 RGB.
        output_path (str): The path to save the generated video.
        fps (int, optional): The frame rate of the output video. Defaults to 30.

    Returns:
        str: output_path
    """
    frames = torch.from_numpy(np.asarray(frames))  # shape (T, H, W, C)
    os.makedirs(os.path.dirname(output_path), exist_ok=True)
    torchvision.io.write_video(output_path, frames, fps=fps, video_codec="libx264")
    return output_path
```
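Two details of `vos_tracking_video` are easy to miss. First, the tracker's outputs are written back with slice assignment from the template frame onward, so frames before the selected template keep their earlier results; Python silently resizes the list if the tracker returned a different number of items, which a length check would catch. Second, the mask-save branch names files `00000.npy`, `00001.npy`, ... under a folder derived from the video name. A minimal sketch of both, with hypothetical helper names; note that `split(".")[0]` truncates names such as `my.clip.mp4` to `my`, where `os.path.splitext` would keep `my.clip`.

```python
import os


def merge_tracking_results(per_frame, start, tracked):
    """Overwrite per_frame[start:] with tracker outputs, leaving earlier frames untouched."""
    if len(tracked) != len(per_frame) - start:
        raise ValueError("tracker must return exactly one result per following frame")
    per_frame[start:] = tracked
    return per_frame


def mask_paths(video_name, count, root="./result/mask"):
    """Per-frame .npy paths laid out the way the mask-save branch writes them."""
    folder = os.path.join(root, video_name.split(".")[0])
    return [os.path.join(folder, "{:05d}.npy".format(i)) for i in range(count)]


masks = [None] * 5
merge_tracking_results(masks, 2, ["m2", "m3", "m4"])
paths = mask_paths("qingming.mp4", len(masks))
```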

```python
# check and download checkpoints if needed
SAM_checkpoint = "sam_vit_h_4b8939.pth"
sam_checkpoint_url = "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth"
xmem_checkpoint = "XMem-s012.pth"
xmem_checkpoint_url = "https://github.com/hkchengrex/XMem/releases/download/v1.0/XMem-s012.pth"
folder = "./checkpoints"
SAM_checkpoint = download_checkpoint(sam_checkpoint_url, folder, SAM_checkpoint)
xmem_checkpoint = download_checkpoint(xmem_checkpoint_url, folder, xmem_checkpoint)

# args, defined in track_anything.py
args = parse_augment()
# args.port = 12212
# args.device = "cuda:4"
# args.mask_save = True

model = TrackingAnything(SAM_checkpoint, xmem_checkpoint, args)
```
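`parse_augment()` lives in `track_anything.py`, which is not shown here. From the README command line (`--device`, `--sam_model_type`, `--port`) and the `args.mask_save` attribute read in this file, a compatible parser could look like the hypothetical sketch below; the function name, defaults, and the `store_true` behavior of `--mask_save` are all assumptions, not the project's definition.

```python
import argparse


def parse_args(argv=None):
    """Hypothetical stand-in for parse_augment(); flag names follow the README, defaults are guesses."""
    parser = argparse.ArgumentParser(description="Track-Anything gradio demo")
    parser.add_argument("--device", default="cuda:0", help="torch device, e.g. cuda:0 or cpu")
    parser.add_argument("--sam_model_type", default="vit_h", help="SAM backbone variant")
    parser.add_argument("--port", type=int, default=12212, help="gradio server port")
    parser.add_argument("--mask_save", action="store_true", help="save per-frame masks as .npy")
    return parser.parse_args(argv)


args = parse_args(["--device", "cuda:0", "--sam_model_type", "vit_h", "--port", "12212"])
```

Passing an explicit list to `parse_args` makes the parser testable without touching `sys.argv`.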

```python
with gr.Blocks() as iface:
    # per-session state: clicks, interaction counters, and the loaded video
    click_state = gr.State([[],[]])
    interactive_state = gr.State({
        "inference_times": 0,
        "negative_click_times" : 0,
        "positive_click_times": 0,
        "mask_save": args.mask_save
    })
    video_state = gr.State(
        {
        "video_name": "",
        "origin_images": None,
        "painted_images": None,
        "masks": None,
        "logits": None,
        "select_frame_number": 0,
        "fps": 30
        }
    )

    with gr.Row():

        # for user video input
        with gr.Column(scale=1.0):
            video_input = gr.Video().style(height=360)

            with gr.Row(scale=1):
                # put the template frame under the radio button
                with gr.Column(scale=0.5):
                    # extract frames
                    with gr.Column():
                        extract_frames_button = gr.Button(value="Get video info", interactive=True, variant="primary")

                    # click points settings, negative or positive, mode continuous or single
                    with gr.Row():
                        with gr.Row(scale=0.5):
                            point_prompt = gr.Radio(
                                choices=["Positive", "Negative"],
                                value="Positive",
                                label="Point Prompt",
                                interactive=True)
                            click_mode = gr.Radio(
                                choices=["Continuous", "Single"],
                                value="Continuous",
                                label="Clicking Mode",
                                interactive=True)
                        with gr.Row(scale=0.5):
                            clear_button_clike = gr.Button(value="Clear Clicks", interactive=True).style(height=160)
                            clear_button_image = gr.Button(value="Clear Image", interactive=True)
                    template_frame = gr.Image(type="pil", interactive=True, elem_id="template_frame").style(height=360)
                    # hidden until get_frames_from_video makes it visible and sets its maximum
                    image_selection_slider = gr.Slider(minimum=1, maximum=100, step=1, value=1, label="Image Selection", visible=False)

                with gr.Column(scale=0.5):
                    video_output = gr.Video().style(height=360)
                    tracking_video_predict_button = gr.Button(value="Tracking")

    # first step: get the video information
    extract_frames_button.click(
        fn=get_frames_from_video,
        inputs=[
            video_input, video_state
        ],
        outputs=[video_state, image_selection_slider],
    )

    # second step: select images from slider
    image_selection_slider.release(fn=select_template,
                                   inputs=[image_selection_slider, video_state],
                                   outputs=[template_frame, video_state], api_name="select_image")

    template_frame.select(
        fn=sam_refine,
        inputs=[video_state, point_prompt, click_state, interactive_state],
        outputs=[template_frame, video_state, interactive_state]
    )

    tracking_video_predict_button.click(
        fn=vos_tracking_video,
        inputs=[video_state, interactive_state],
        outputs=[video_output, video_state, interactive_state]
    )

    # clear input
    video_input.clear(
        lambda: (
            {
                "video_name": "",
                "origin_images": None,
                "painted_images": None,
                "masks": None,
                "logits": None,
                "select_frame_number": 0,
                "fps": 30
            },
            {
                "inference_times": 0,
                "negative_click_times" : 0,
                "positive_click_times": 0,
                "mask_save": args.mask_save
            },
            [[],[]]
        ),
        [],
        [
            video_state,
            interactive_state,
            click_state,
        ],
        queue=False,
        show_progress=False
    )
    clear_button_image.click(
        lambda: (
            {
                "video_name": "",
                "origin_images": None,
                "painted_images": None,
                "masks": None,
                "logits": None,
                "select_frame_number": 0,
                "fps": 30
            },
            {
                "inference_times": 0,
                "negative_click_times" : 0,
                "positive_click_times": 0,
                "mask_save": args.mask_save
            },
            [[],[]]
        ),
        [],
        [
            video_state,
            interactive_state,
            click_state,
        ],
        queue=False,
        show_progress=False
    )
    clear_button_clike.click(
        lambda: ([[],[]]),
        [],
        [click_state],
        queue=False,
        show_progress=False
    )
iface.queue(concurrency_count=1)
iface.launch(debug=True, enable_queue=True, server_port=args.port, server_name="0.0.0.0")
```
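The same default dictionaries are spelled out three times above (the initial `gr.State` values and two reset lambdas), which makes it easy for one copy to drift from the others. A minimal sketch of a single factory that returns fresh, unshared values on every call; `fresh_states` and `DEFAULT_VIDEO_STATE` are hypothetical names, not part of this commit.

```python
import copy

DEFAULT_VIDEO_STATE = {
    "video_name": "",
    "origin_images": None,
    "painted_images": None,
    "masks": None,
    "logits": None,
    "select_frame_number": 0,
    "fps": 30,
}


def fresh_states(mask_save=False):
    """Return new (video_state, interactive_state, click_state) values for a reset.

    deepcopy ensures no dict or list is shared between calls, so one
    session's mutations can never leak into another's defaults.
    """
    video_state = copy.deepcopy(DEFAULT_VIDEO_STATE)
    interactive_state = {
        "inference_times": 0,
        "negative_click_times": 0,
        "positive_click_times": 0,
        "mask_save": mask_save,
    }
    click_state = [[], []]
    return video_state, interactive_state, click_state


a = fresh_states()
b = fresh_states(mask_save=True)
```

Under that assumption, both reset handlers could pass `lambda: fresh_states(args.mask_save)` as their function while keeping the same outputs list `[video_state, interactive_state, click_state]`.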

app_save.py

ADDED

@@ -0,0 +1,381 @@

```python
import gradio as gr
from demo import automask_image_app, automask_video_app, sahi_autoseg_app
import argparse
import cv2
import time
from PIL import Image
import numpy as np
import os
import sys
sys.path.append(sys.path[0]+"/tracker")
sys.path.append(sys.path[0]+"/tracker/model")
from track_anything import TrackingAnything
from track_anything import parse_augment
import requests
import json
import torchvision
import torch
import concurrent.futures
import queue

def download_checkpoint(url, folder, filename):
    os.makedirs(folder, exist_ok=True)
    filepath = os.path.join(folder, filename)

    if not os.path.exists(filepath):
        print("download checkpoints ......")
        response = requests.get(url, stream=True)
        # fail on HTTP errors instead of saving an error page as the checkpoint
        response.raise_for_status()
        with open(filepath, "wb") as f:
            for chunk in response.iter_content(chunk_size=8192):
                if chunk:
                    f.write(chunk)

        print("downloaded successfully!")

    return filepath
```

```python
def pause_video(play_state):
    print("user pause_video")
    play_state.append(time.time())
    return play_state

def play_video(play_state):
    print("user play_video")
    play_state.append(time.time())
    return play_state
```
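`play_video` and `pause_video` only record wall-clock times; `get_frames_from_video` in this file then treats `play_state[1] - play_state[0]` as the playback position and multiplies by fps to pick a key frame. That is an approximation: it ignores seeking and buffering, and a long pause can produce an index past the last frame. A minimal sketch of the mapping with clamping; `split_at_playback_position` is a hypothetical name, and the list of integers stands in for decoded frames.

```python
def split_at_playback_position(frames, play_state, fps):
    """Split frames at the position implied by recorded play/pause times.

    Returns (frames before the key frame, frames from it on, the key frame).
    """
    if not frames:
        raise ValueError("no frames decoded")
    try:
        elapsed = play_state[1] - play_state[0]
    except (IndexError, TypeError):
        elapsed = 0.0  # fewer than two timestamps: fall back to the first frame
    # Clamp into [0, len(frames) - 1] so rounding or a long pause cannot overrun.
    index = min(max(int(elapsed * fps), 0), len(frames) - 1)
    return frames[:index], frames[index:], frames[index]


frames = list(range(90))  # stand-in for 3 s of frames at 30 fps
before, after, key = split_at_playback_position(frames, [100.0, 101.5], fps=30)
```

Here 1.5 s at 30 fps selects frame 45, so the split yields 45 frames before it and 45 from it onward.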

# convert points input to prompt state

|

| 48 |

+

def get_prompt(click_state, click_input):

|

| 49 |

+

inputs = json.loads(click_input)

|

| 50 |

+

points = click_state[0]

|

| 51 |

+

labels = click_state[1]

|

| 52 |

+

for input in inputs:

|

| 53 |

+

points.append(input[:2])

|

| 54 |

+

labels.append(input[2])

|

| 55 |

+

click_state[0] = points

|

| 56 |

+

click_state[1] = labels

|

| 57 |

+

prompt = {

|

| 58 |

+

"prompt_type":["click"],

|

| 59 |

+

"input_point":click_state[0],

|

| 60 |

+

"input_label":click_state[1],

|

| 61 |

+

"multimask_output":"True",

|

| 62 |

+

}

|

| 63 |

+

return prompt

|

| 64 |

+

|
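get_prompt parses a JSON list of [x, y, label] triples, where label 1 marks a positive click and 0 a negative one, and appends them to click_state in place, so successive clicks on the same frame build up a single prompt. The sketch below restates that behaviour as a standalone function with a short usage check; add_clicks is a hypothetical name, and multimask_output is written as a boolean.

```python
import json
from typing import Dict, List


def add_clicks(click_state: List[list], click_input: str) -> Dict[str, object]:
    """Append clicks encoded as JSON "[[x, y, label], ...]" to click_state.

    click_state is [[points], [labels]] and is mutated in place, so every
    call extends the same accumulated prompt rather than starting over.
    """
    points, labels = click_state
    for x, y, label in json.loads(click_input):
        points.append([x, y])   # pixel coordinates of the click
        labels.append(label)    # 1 = positive (foreground), 0 = negative
    return {
        "prompt_type": ["click"],
        "input_point": points,
        "input_label": labels,
        "multimask_output": True,
    }


# Usage: a positive click followed by a negative click on the same frame.
state = [[], []]
add_clicks(state, "[[10,20,1]]")
prompt = add_clicks(state, "[[30,40,0]]")
```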

def get_frames_from_video(video_input, play_state):
    """
    Args:
        video_input: str, path of the uploaded video
        play_state: list of time.time() stamps appended by play_video and pause_video
    Return:
        [frames[:key_frame_index], frames[key_frame_index:], nearest_frame], nearest_frame, nearest_frame, fps
    """
    video_path = video_input
    # video_name = video_path.split('/')[-1]

    # the key frame is where the user paused: seconds elapsed between the first play and the first pause
    try:
        timestamp = play_state[1] - play_state[0]
    except IndexError:
        timestamp = 0
    frames = []
    try:
        cap = cv2.VideoCapture(video_path)
        fps = cap.get(cv2.CAP_PROP_FPS)
        while cap.isOpened():
            ret, frame = cap.read()
            if ret:
                frames.append(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            else:
                break
        cap.release()
    except (OSError, TypeError, ValueError, KeyError, SyntaxError) as e:
        print("read_frame_source:{} error. {}\n".format(video_path, str(e)))

    # for index, frame in enumerate(frames):
    #     frames[index] = np.asarray(Image.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)))

    # clamp so that a pause recorded after the last frame still selects a valid frame
    key_frame_index = min(int(timestamp * fps), len(frames) - 1)
    nearest_frame = frames[key_frame_index]
    frames_split = [frames[:key_frame_index], frames[key_frame_index:], nearest_frame]
    # output_path='./seperate.mp4'
    # torchvision.io.write_video(output_path, frames[1], fps=fps, video_codec="libx264")

    # set the image in SAM once the template frame is selected
    model.samcontroler.sam_controler.set_image(nearest_frame)
    return frames_split, nearest_frame, nearest_frame, fps

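get_frames_from_video picks the template frame from wall-clock time: play_state collects time.time() stamps from play_video and pause_video, the gap between the first two is taken as seconds of playback, and int(timestamp * fps) becomes the frame index. Because the stamps are recorded when the callbacks run rather than read from the player, the index should be kept inside the frame list. A minimal sketch of that selection and of the three-way split, with hypothetical helper names:

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def key_frame_index(play_state: Sequence[float], fps: float, num_frames: int) -> int:
    """Frame shown at the first pause, clamped to [0, num_frames - 1].

    play_state holds time.time() stamps: [first play, first pause, ...].
    Only the first play/pause pair is used, as in get_frames_from_video.
    """
    if num_frames <= 0:
        raise ValueError("video has no frames")
    if len(play_state) < 2:
        return 0  # never played and paused: fall back to the first frame
    elapsed = play_state[1] - play_state[0]  # wall-clock seconds of playback
    return max(0, min(int(elapsed * fps), num_frames - 1))


def split_at(frames: Sequence[T], index: int) -> Tuple[List[T], List[T], T]:
    """Same shape as frames_split: (frames before, frames from index on, key frame)."""
    return list(frames[:index]), list(frames[index:]), frames[index]
```

For example, a pause 2.5 s after play in a 30 fps video selects frame 75, and the tracker then runs on the second element of the split.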

def generate_video_from_frames(frames, output_path, fps=30):
    """
    Generates a video from a list of frames.

    Args:
        frames (list of numpy arrays): The frames to include in the video.
        output_path (str): The path to save the generated video.
        fps (int, optional): The frame rate of the output video. Defaults to 30.

    Returns:
        str: output_path, the path of the written video.
    """
    # height, width, layers = frames[0].shape
    # fourcc = cv2.VideoWriter_fourcc(*"mp4v")
    # video = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    # for frame in frames:
    #     video.write(frame)

    # video.release()
    # torchvision.io.write_video expects a uint8 tensor of shape (T, H, W, C)
    frames = torch.from_numpy(np.asarray(frames))
    torchvision.io.write_video(output_path, frames, fps=fps, video_codec="libx264")
    return output_path


def model_reset():
    model.xmem.clear_memory()
    return None


def sam_refine(origin_frame, point_prompt, click_state, logit, evt:gr.SelectData):
    """
    Args:
        origin_frame: the unpainted template frame (RGB numpy array)
        point_prompt: "Positive" or "Negative", from the Point Prompt radio button
        click_state: [[points], [labels]]
        logit: logits state returned by the previous call (currently not passed to first_frame_click)
        evt: gr.SelectData whose index holds the clicked pixel coordinates
    """
    if point_prompt == "Positive":
        coordinate = "[[{},{},1]]".format(evt.index[0], evt.index[1])
    else:
        coordinate = "[[{},{},0]]".format(evt.index[0], evt.index[1])

    # prompt for sam model
    prompt = get_prompt(click_state=click_state, click_input=coordinate)

    # default value
    # points = np.array([[evt.index[0],evt.index[1]]])
    # labels= np.array([1])
    if len(logit)==0:
        logit = None

    mask, logit, painted_image = model.first_frame_click(
        image=origin_frame,
        points=np.array(prompt["input_point"]),
        labels=np.array(prompt["input_label"]),
        multimask=prompt["multimask_output"],
    )
    return painted_image, click_state, logit, mask



def vos_tracking_video(video_state, template_mask, fps, video_input):

    masks, logits, painted_images = model.generator(images=video_state[1], template_mask=template_mask)
    video_output = generate_video_from_frames(painted_images, output_path="./output.mp4", fps=fps)
    # image_selection_slider = gr.Slider(minimum=1, maximum=len(video_state[1]), value=1, label="Image Selection", interactive=True)
    video_name = video_input.split('/')[-1].split('.')[0]
    # save one mask per frame as ./results/<video name>/00000.npy, 00001.npy, ...
    result_path = os.path.join('./results', video_name)
    os.makedirs(result_path, exist_ok=True)
    for i, mask in enumerate(masks):
        np.save(os.path.join(result_path, '{:05}.npy'.format(i)), mask)
    return video_output, painted_images, masks, logits

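vos_tracking_video and correct_track store one mask per frame as <results folder>/<video name>/NNNNN.npy with a five-digit zero-padded index. The padding means a plain lexicographic sort of the file names returns frames in order (up to 100000 frames), which matters when the masks are read back with sorted(os.listdir(...)). A sketch of the path scheme with a configurable root; mask_output_paths and results_root are hypothetical:

```python
import os
from typing import List


def mask_output_paths(video_path: str, num_frames: int, results_root: str = "./results") -> List[str]:
    """Return one .npy path per frame under results_root/<video name>/.

    Frame i maps to "{i:05}.npy", so sorting the names gives frame order.
    """
    # Video name: last path component, text before the first dot.
    video_name = os.path.basename(video_path).split(".")[0]
    result_dir = os.path.join(results_root, video_name)
    return [os.path.join(result_dir, "{:05}.npy".format(i)) for i in range(num_frames)]
```

Saving would then be os.makedirs on the result folder with exist_ok=True, followed by np.save(path, mask) for each pair from zip(paths, masks).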

def vos_tracking_image(image_selection_slider, painted_images):

    # images = video_state[1]
    percentage = image_selection_slider / 100
    # clamp: at the slider's maximum (100) the product would index one past the last frame
    select_frame_num = min(int(percentage * len(painted_images)), len(painted_images) - 1)
    return painted_images[select_frame_num], select_frame_num

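vos_tracking_image converts the 0 to 100 "Image Selection" slider into a frame index as int(percentage * len(painted_images)). At the slider's maximum that product equals len(painted_images), one past the last valid index. A minimal clamped mapping; slider_to_frame_index is a hypothetical helper:

```python
def slider_to_frame_index(slider_value: float, num_frames: int) -> int:
    """Map a 0-100 slider position to a valid index in [0, num_frames - 1]."""
    if num_frames <= 0:
        raise ValueError("no tracked frames to select from")
    index = int(slider_value / 100 * num_frames)
    # Without this clamp, slider_value == 100 would give index == num_frames.
    return min(index, num_frames - 1)
```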

def interactive_correction(video_state, point_prompt, click_state, select_correction_frame, evt: gr.SelectData):
    """
    Args:
        video_state: [frames before the key frame, frames from the key frame on, key frame]
        point_prompt: "Positive" or "Negative", from the Point Prompt radio button
        click_state: [[points], [labels]]
        select_correction_frame: index into video_state[1] of the frame to correct
        evt: gr.SelectData whose index holds the clicked pixel coordinates
    """
    refine_image = video_state[1][select_correction_frame]
    if point_prompt == "Positive":
        coordinate = "[[{},{},1]]".format(evt.index[0], evt.index[1])
    else:
        coordinate = "[[{},{},0]]".format(evt.index[0], evt.index[1])

    # prompt for sam model
    prompt = get_prompt(click_state=click_state, click_input=coordinate)
    model.samcontroler.seg_again(refine_image)
    corrected_mask, corrected_logit, corrected_painted_image = model.first_frame_click(
        image=refine_image,
        points=np.array(prompt["input_point"]),
        labels=np.array(prompt["input_label"]),
        multimask=prompt["multimask_output"],
    )
    return corrected_painted_image, [corrected_mask, corrected_logit, corrected_painted_image]


def correct_track(video_state, select_correction_frame, corrected_state, masks, logits, painted_images, fps, video_input):
    model.xmem.clear_memory()
    # re-run tracking from the corrected frame onward, with the corrected mask as the new template
    following_images = video_state[1][select_correction_frame:]
    corrected_masks, corrected_logits, corrected_painted_images = model.generator(images=following_images, template_mask=corrected_state[0])
    masks = masks[:select_correction_frame] + corrected_masks
    logits = logits[:select_correction_frame] + corrected_logits
    painted_images = painted_images[:select_correction_frame] + corrected_painted_images
    video_output = generate_video_from_frames(painted_images, output_path="./output.mp4", fps=fps)

    video_name = video_input.split('/')[-1].split('.')[0]
    # save one mask per frame as ./results/<video name>/00000.npy, 00001.npy, ...
    result_path = os.path.join('./results', video_name)
    os.makedirs(result_path, exist_ok=True)
    for i, mask in enumerate(masks):
        np.save(os.path.join(result_path, '{:05}.npy'.format(i)), mask)
    return video_output, painted_images, logits, masks

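correct_track clears XMem's memory, re-runs model.generator from the corrected frame using the corrected mask as the new template, and splices the new results onto the untouched prefix. The sketch below isolates that splice with an explicit bounds check; splice_corrected is hypothetical, and it assumes, as the concatenation in correct_track does, that the generator returns one result per input frame so the spliced list keeps the original length.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def splice_corrected(original: Sequence[T], corrected: Sequence[T], start: int) -> List[T]:
    """Keep original[:start] and replace everything from start on with corrected."""
    if not 0 <= start <= len(original):
        raise IndexError("correction frame {} outside 0..{}".format(start, len(original)))
    return list(original[:start]) + list(corrected)
```

The same call would be applied to masks, logits and painted images so that the three lists stay aligned frame by frame.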

# check and download checkpoints if needed
SAM_checkpoint = "sam_vit_h_4b8939.pth"
sam_checkpoint_url = "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth"
xmem_checkpoint = "XMem-s012.pth"
xmem_checkpoint_url = "https://github.com/hkchengrex/XMem/releases/download/v1.0/XMem-s012.pth"
folder = "./checkpoints"
SAM_checkpoint = download_checkpoint(sam_checkpoint_url, folder, SAM_checkpoint)
xmem_checkpoint = download_checkpoint(xmem_checkpoint_url, folder, xmem_checkpoint)


# args, defined in track_anything.py
args = parse_augment()
args.port = 12207
args.device = "cuda:5"  # hard-coded GPU index; set this to a device that exists on the host

model = TrackingAnything(SAM_checkpoint, xmem_checkpoint, args)


with gr.Blocks() as iface:
    # per-session state shared by the event callbacks
    state = gr.State([])
    play_state = gr.State([])
    video_state = gr.State([[],[],[]])
    click_state = gr.State([[],[]])
    logits = gr.State([])
    masks = gr.State([])
    painted_images = gr.State([])
    origin_image = gr.State(None)
    template_mask = gr.State(None)
    select_correction_frame = gr.State(None)
    corrected_state = gr.State([[],[],[]])
    fps = gr.State([])
    # video_name = gr.State([])
    # queue value for image refresh, origin image, mask, logits, painted image


    with gr.Row():

        # for user video input
        with gr.Column(scale=1.0):
            video_input = gr.Video().style(height=720)

            # listen for the user's play and pause actions on the input video
            video_input.play(fn=play_video, inputs=play_state, outputs=play_state, scroll_to_output=True, show_progress=True)
            video_input.pause(fn=pause_video, inputs=play_state, outputs=play_state)

            with gr.Row(scale=1):
                # put the template frame under the radio buttons
                with gr.Column(scale=0.5):
                    # click-point settings: positive or negative prompt, continuous or single clicking mode
                    with gr.Row():
                        with gr.Row(scale=0.5):
                            point_prompt = gr.Radio(
                                choices=["Positive", "Negative"],
                                value="Positive",
                                label="Point Prompt",
                                interactive=True)
                            click_mode = gr.Radio(
                                choices=["Continuous", "Single"],
                                value="Continuous",
                                label="Clicking Mode",
                                interactive=True)
                        with gr.Row(scale=0.5):
                            clear_button_clike = gr.Button(value="Clear Clicks", interactive=True).style(height=160)
                            clear_button_image = gr.Button(value="Clear Image", interactive=True)
                    template_frame = gr.Image(type="pil",interactive=True, elem_id="template_frame").style(height=360)
                    with gr.Column():
                        template_select_button = gr.Button(value="Template select", interactive=True, variant="primary")

                with gr.Column(scale=0.5):

                    # for checking and correcting intermediate results
                    # intermedia_image = gr.Image(type="pil", interactive=True, elem_id="intermedia_frame").style(height=360)
                    video_output = gr.Video().style(height=360)
                    tracking_video_predict_button = gr.Button(value="Tracking")

                    image_output = gr.Image(type="pil", interactive=True, elem_id="image_output").style(height=360)
                    image_selection_slider = gr.Slider(minimum=0, maximum=100, step=0.1, value=0, label="Image Selection", interactive=True)
                    correct_track_button = gr.Button(value="Interactive Correction")


    template_frame.select(
        fn=sam_refine,
        inputs=[
            origin_image, point_prompt, click_state, logits
        ],
        outputs=[
            template_frame, click_state, logits, template_mask
        ]
    )

    template_select_button.click(
        fn=get_frames_from_video,
        inputs=[
            video_input,
            play_state
        ],
        # outputs=[video_state, template_frame, origin_image, fps, video_name],
        outputs=[video_state, template_frame, origin_image, fps],
    )

    tracking_video_predict_button.click(
        fn=vos_tracking_video,
        inputs=[video_state, template_mask, fps, video_input],
        outputs=[video_output, painted_images, masks, logits]
    )
    image_selection_slider.release(fn=vos_tracking_image,
                                   inputs=[image_selection_slider, painted_images], outputs=[image_output, select_correction_frame], api_name="select_image")
    # correction
    image_output.select(
        fn=interactive_correction,
        inputs=[video_state, point_prompt, click_state, select_correction_frame],
        outputs=[image_output, corrected_state]
    )
    correct_track_button.click(
        fn=correct_track,
        inputs=[video_state, select_correction_frame, corrected_state, masks, logits, painted_images, fps, video_input],
        outputs=[video_output, painted_images, logits, masks]
    )


    # clear input
    video_input.clear(
        lambda: ([], [], [[], [], []],
                 None, "", "", "", "", "", "", "", [[],[]],
                 None),
        [],
        [ state, play_state, video_state,