update model and gradio version

- app.py +19 -49

- examples/chemistry.png +0 -0

- examples/example_inputs.jsonl +6 -6

- examples/guicai.jpeg +0 -0

- examples/poem.jpeg +0 -0

- examples/sota.jpeg +0 -0

- examples/triangle.jpeg +0 -0

- examples/watermelon.jpeg +0 -0

- requirements.txt +1 -1

app.py

CHANGED

|

@@ -8,16 +8,12 @@ import time

|

|

| 8 |

from concurrent.futures import ThreadPoolExecutor

|

| 9 |

from utils import is_chinese, process_image_without_resize, parse_response, templates_agent_cogagent, template_grounding_cogvlm, postprocess_text

|

| 10 |

|

| 11 |

-

DESCRIPTION = '''<h2 style='text-align: center'> <a href="https://github.com/THUDM/CogVLM2"> CogVLM2 </a

|

| 12 |

|

| 13 |

-

NOTES = 'This app is adapted from <a href="https://github.com/THUDM/CogVLM">https://github.com/THUDM/CogVLM2</a>

|

| 14 |

|

| 15 |

MAINTENANCE_NOTICE1 = 'Hint 1: If the app report "Something went wrong, connection error out", please turn off your proxy and retry.<br>Hint 2: If you upload a large size of image like 10MB, it may take some time to upload and process. Please be patient and wait.'

|

| 16 |

|

| 17 |

-

GROUNDING_NOTICE = 'Hint: When you check "Grounding", please use the <a href="https://github.com/THUDM/CogVLM/blob/main/utils/utils/template.py#L344">corresponding prompt</a> or the examples below.'

|

| 18 |

-

|

| 19 |

-

AGENT_NOTICE = 'Hint: When you check "CogAgent", please use the <a href="https://github.com/THUDM/CogVLM/blob/main/utils/utils/template.py#L761C1-L761C17">corresponding prompt</a> or the examples below.'

|

| 20 |

-

|

| 21 |

|

| 22 |

default_chatbox = [("", "Hi, What do you want to know about this image?")]

|

| 23 |

|

|

@@ -36,10 +32,7 @@ def post(

|

|

| 36 |

image_prompt,

|

| 37 |

result_previous,

|

| 38 |

hidden_image,

|

| 39 |

-

|

| 40 |

-

cogagent,

|

| 41 |

-

grounding_template,

|

| 42 |

-

agent_template

|

| 43 |

):

|

| 44 |

result_text = [(ele[0], ele[1]) for ele in result_previous]

|

| 45 |

for i in range(len(result_text)-1, -1, -1):

|

|

@@ -47,7 +40,7 @@ def post(

|

|

| 47 |

del result_text[i]

|

| 48 |

print(f"history {result_text}")

|

| 49 |

|

| 50 |

-

is_zh =

|

| 51 |

|

| 52 |

if image_prompt is None:

|

| 53 |

print("Image empty")

|

|

@@ -77,24 +70,13 @@ def post(

|

|

| 77 |

encoded_img = None

|

| 78 |

|

| 79 |

model_use = "vlm_chat"

|

| 80 |

-

if not

|

| 81 |

-

model_use = "

|

| 82 |

-

if grounding_template:

|

| 83 |

-

input_text = postprocess_text(grounding_template, input_text)

|

| 84 |

-

elif cogagent:

|

| 85 |

-

model_use = "agent_chat"

|

| 86 |

-

if agent_template is not None and agent_template != "do not use template":

|

| 87 |

-

input_text = postprocess_text(agent_template, input_text)

|

| 88 |

-

|

| 89 |

prompt = input_text

|

| 90 |

|

| 91 |

-

|

| 92 |

-

prompt += "(with grounding)"

|

| 93 |

-

|

| 94 |

-

print(f'request {model_use} model... with prompt {prompt}, grounding_template {grounding_template}, agent_template {agent_template}')

|

| 95 |

data = json.dumps({

|

| 96 |

'model_use': model_use,

|

| 97 |

-

'is_grounding': grounding,

|

| 98 |

'text': prompt,

|

| 99 |

'history': result_text,

|

| 100 |

'image': encoded_img,

|

|

@@ -121,13 +103,7 @@ def post(

|

|

| 121 |

# response = {'result':input_text}

|

| 122 |

|

| 123 |

answer = str(response['result'])

|

| 124 |

-

|

| 125 |

-

parse_response(pil_img, answer, image_path_grounding)

|

| 126 |

-

new_answer = answer.replace(input_text, "")

|

| 127 |

-

result_text.append((input_text, new_answer))

|

| 128 |

-

result_text.append((None, (image_path_grounding,)))

|

| 129 |

-

else:

|

| 130 |

-

result_text.append((input_text, answer))

|

| 131 |

print(result_text)

|

| 132 |

print('finished')

|

| 133 |

return "", result_text, hidden_image

|

|

@@ -164,34 +140,28 @@ def main():

|

|

| 164 |

|

| 165 |

image_prompt = gr.Image(type="filepath", label="Image Prompt", value=None)

|

| 166 |

with gr.Row():

|

| 167 |

-

|

| 168 |

-

|

| 169 |

-

with gr.Row():

|

| 170 |

-

# grounding_notice = gr.Markdown(GROUNDING_NOTICE)

|

| 171 |

-

grounding_template = gr.Dropdown(choices=template_grounding_cogvlm, label="Grounding Template", value=template_grounding_cogvlm[0])

|

| 172 |

-

# agent_notice = gr.Markdown(AGENT_NOTICE)

|

| 173 |

-

agent_template = gr.Dropdown(choices=templates_agent_cogagent, label="Agent Template", value=templates_agent_cogagent[0])

|

| 174 |

-

|

| 175 |

with gr.Row():

|

| 176 |

-

temperature = gr.Slider(maximum=1, value=0.

|

| 177 |

-

top_p = gr.Slider(maximum=1, value=0.

|

| 178 |

-

top_k = gr.Slider(maximum=50, value=

|

| 179 |

|

| 180 |

with gr.Column(scale=5.5):

|

| 181 |

result_text = gr.components.Chatbot(label='Multi-round conversation History', value=[("", "Hi, What do you want to know about this image?")], height=550)

|

| 182 |

hidden_image_hash = gr.Textbox(visible=False)

|

| 183 |

|

| 184 |

-

gr_examples = gr.Examples(examples=[[example["text"], example["image"], example["

|

| 185 |

-

inputs=[input_text, image_prompt,

|

| 186 |

label="Example Inputs (Click to insert an examplet into the input box)",

|

| 187 |

examples_per_page=6)

|

| 188 |

|

| 189 |

gr.Markdown(MAINTENANCE_NOTICE1)

|

| 190 |

|

| 191 |

print(gr.__version__)

|

| 192 |

-

run_button.click(fn=post,inputs=[input_text, temperature, top_p, top_k, image_prompt, result_text, hidden_image_hash,

|

| 193 |

outputs=[input_text, result_text, hidden_image_hash])

|

| 194 |

-

input_text.submit(fn=post,inputs=[input_text, temperature, top_p, top_k, image_prompt, result_text, hidden_image_hash,

|

| 195 |

outputs=[input_text, result_text, hidden_image_hash])

|

| 196 |

clear_button.click(fn=clear_fn, inputs=clear_button, outputs=[input_text, result_text, image_prompt])

|

| 197 |

image_prompt.upload(fn=clear_fn2, inputs=clear_button, outputs=[result_text])

|

|

@@ -199,8 +169,8 @@ def main():

|

|

| 199 |

|

| 200 |

print(gr.__version__)

|

| 201 |

|

| 202 |

-

demo.

|

| 203 |

-

|

| 204 |

|

| 205 |

if __name__ == '__main__':

|

| 206 |

main()

|

|

|

|

| 8 |

from concurrent.futures import ThreadPoolExecutor

|

| 9 |

from utils import is_chinese, process_image_without_resize, parse_response, templates_agent_cogagent, template_grounding_cogvlm, postprocess_text

|

| 10 |

|

| 11 |

+

DESCRIPTION = '''<h2 style='text-align: center'> <a href="https://github.com/THUDM/CogVLM2"> CogVLM2 </a></h2>'''

|

| 12 |

|

| 13 |

+

NOTES = 'This app is adapted from <a href="https://github.com/THUDM/CogVLM">https://github.com/THUDM/CogVLM2</a> . It would be recommended to check out the repo if you want to see the detail of our model.\n\n该demo仅作为测试使用,不支持批量请求。如有大批量需求,欢迎联系[智谱AI](mailto:business@zhipuai.cn)。\n<a href="http://36.103.203.44:7861/">备用链接</a>'

|

| 14 |

|

| 15 |

MAINTENANCE_NOTICE1 = 'Hint 1: If the app report "Something went wrong, connection error out", please turn off your proxy and retry.<br>Hint 2: If you upload a large size of image like 10MB, it may take some time to upload and process. Please be patient and wait.'

|

| 16 |

|

| 17 |

|

| 18 |

default_chatbox = [("", "Hi, What do you want to know about this image?")]

|

| 19 |

|

|

|

|

| 32 |

image_prompt,

|

| 33 |

result_previous,

|

| 34 |

hidden_image,

|

| 35 |

+

is_english,

|

| 36 |

):

|

| 37 |

result_text = [(ele[0], ele[1]) for ele in result_previous]

|

| 38 |

for i in range(len(result_text)-1, -1, -1):

|

|

|

|

| 40 |

del result_text[i]

|

| 41 |

print(f"history {result_text}")

|

| 42 |

|

| 43 |

+

is_zh = not is_english

|

| 44 |

|

| 45 |

if image_prompt is None:

|

| 46 |

print("Image empty")

|

|

|

|

| 70 |

encoded_img = None

|

| 71 |

|

| 72 |

model_use = "vlm_chat"

|

| 73 |

+

if not is_english:

|

| 74 |

+

model_use = "vlm_chat_zh"

|

| 75 |

prompt = input_text

|

| 76 |

|

| 77 |

+

print(f'request {model_use} model... with prompt {prompt}')

|

| 78 |

data = json.dumps({

|

| 79 |

'model_use': model_use,

|

|

|

|

| 80 |

'text': prompt,

|

| 81 |

'history': result_text,

|

| 82 |

'image': encoded_img,

|

|

|

|

| 103 |

# response = {'result':input_text}

|

| 104 |

|

| 105 |

answer = str(response['result'])

|

| 106 |

+

result_text.append((input_text, answer))

|

| 107 |

print(result_text)

|

| 108 |

print('finished')

|

| 109 |

return "", result_text, hidden_image

|

|

|

|

| 140 |

|

| 141 |

image_prompt = gr.Image(type="filepath", label="Image Prompt", value=None)

|

| 142 |

with gr.Row():

|

| 143 |

+

is_english = gr.Checkbox(label="Use English Model")

|

| 144 |

+

|

| 145 |

with gr.Row():

|

| 146 |

+

temperature = gr.Slider(maximum=1, value=0.8, minimum=0, label='Temperature')

|

| 147 |

+

top_p = gr.Slider(maximum=1, value=0.4, minimum=0, label='Top P')

|

| 148 |

+

top_k = gr.Slider(maximum=50, value=1, minimum=1, step=1, label='Top K')

|

| 149 |

|

| 150 |

with gr.Column(scale=5.5):

|

| 151 |

result_text = gr.components.Chatbot(label='Multi-round conversation History', value=[("", "Hi, What do you want to know about this image?")], height=550)

|

| 152 |

hidden_image_hash = gr.Textbox(visible=False)

|

| 153 |

|

| 154 |

+

gr_examples = gr.Examples(examples=[[example["text"], example["image"], example["is_english"]] for example in examples],

|

| 155 |

+

inputs=[input_text, image_prompt, is_english],

|

| 156 |

label="Example Inputs (Click to insert an examplet into the input box)",

|

| 157 |

examples_per_page=6)

|

| 158 |

|

| 159 |

gr.Markdown(MAINTENANCE_NOTICE1)

|

| 160 |

|

| 161 |

print(gr.__version__)

|

| 162 |

+

run_button.click(fn=post,inputs=[input_text, temperature, top_p, top_k, image_prompt, result_text, hidden_image_hash, is_english],

|

| 163 |

outputs=[input_text, result_text, hidden_image_hash])

|

| 164 |

+

input_text.submit(fn=post,inputs=[input_text, temperature, top_p, top_k, image_prompt, result_text, hidden_image_hash, is_english],

|

| 165 |

outputs=[input_text, result_text, hidden_image_hash])

|

| 166 |

clear_button.click(fn=clear_fn, inputs=clear_button, outputs=[input_text, result_text, image_prompt])

|

| 167 |

image_prompt.upload(fn=clear_fn2, inputs=clear_button, outputs=[result_text])

|

|

|

|

| 169 |

|

| 170 |

print(gr.__version__)

|

| 171 |

|

| 172 |

+

demo.launch(max_threads=10)

|

| 173 |

+

|

| 174 |

|

| 175 |

if __name__ == '__main__':

|

| 176 |

main()

|
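The model-selection branch added to `post()` in app.py can be read in isolation. The following is a minimal sketch, not the app's actual code: `select_model` and `build_payload` are hypothetical helper names, and the payload contains only the keys visible in this diff (`model_use`, `text`, `history`, `image`); the real request may carry more fields.

```python
import json


def select_model(is_english: bool) -> str:
    """Mirror the branch in the diff: default to "vlm_chat",
    switch to "vlm_chat_zh" when the checkbox is not ticked."""
    model_use = "vlm_chat"
    if not is_english:
        model_use = "vlm_chat_zh"
    return model_use


def build_payload(input_text, history, encoded_img, is_english):
    """Hypothetical helper: serialize the request body.

    Only the keys shown in the diff are included here.
    """
    return json.dumps({
        "model_use": select_model(is_english),
        "text": input_text,
        "history": history,
        "image": encoded_img,
    })


if __name__ == "__main__":
    # With the checkbox unticked, the Chinese model name is selected.
    print(build_payload("hello", [], None, is_english=False))
```

Because the `gr.Checkbox` is created without a default value, it starts unticked, so under this reading the request would use `vlm_chat_zh` unless the user opts into the English model.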

examples/chemistry.png

ADDED

|

examples/example_inputs.jsonl

CHANGED

|

@@ -1,6 +1,6 @@

|

|

| 1 |

-

{"id":1, "text": "

|

| 2 |

-

{"id":2, "text": "

|

| 3 |

-

{"id":3, "text": "

|

| 4 |

-

{"id":4, "text": "

|

| 5 |

-

{"id":5, "text": "

|

| 6 |

-

{"id":6, "text": "

|

|

|

|

| 1 |

+

{"id":1, "text": "请详细描述这张图片。", "image": "examples/guicai.jpeg", "is_english": false}

|

| 2 |

+

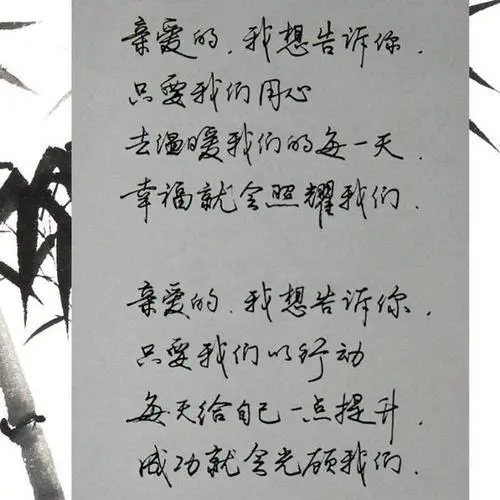

{"id":2, "text": "输出这段文本。", "image": "examples/poem.jpeg", "is_english": false}

|

| 3 |

+

{"id":3, "text": "用列表形式描述图中的关键步骤。", "image": "examples/chemistry.png", "is_english": false}

|

| 4 |

+

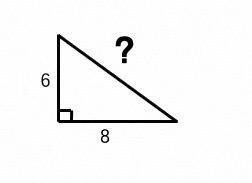

{"id":4, "text": "这道题怎么做?step by step.", "image": "examples/triangle.jpeg", "is_english": false}

|

| 5 |

+

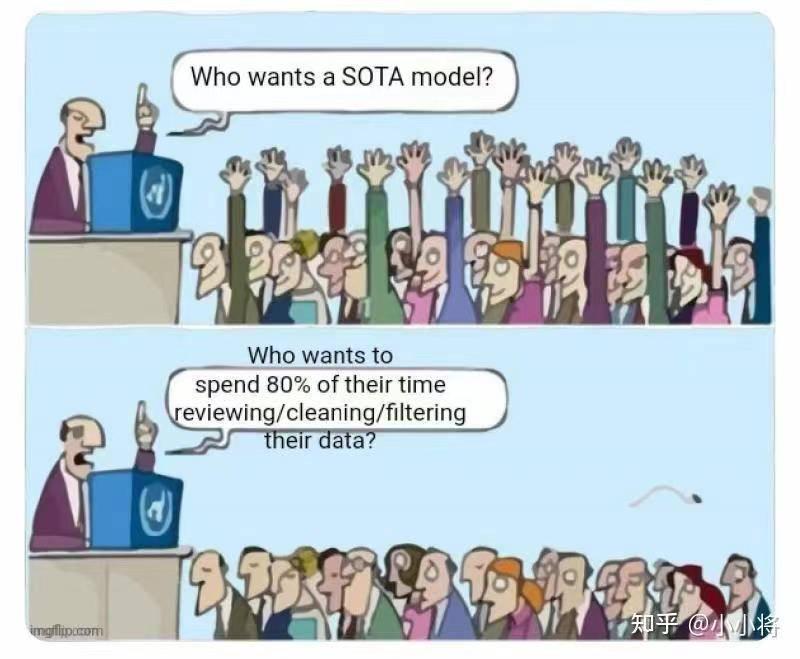

{"id":5, "text": "Please analyze this image and its meaning in detail.", "image": "examples/sota.jpeg", "is_english": true}

|

| 6 |

+

{"id":6, "text": "Which watermelon is the ripest? Provide reasons.", "image": "examples/watermelon.jpeg", "is_english": true}

|
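Each record in examples/example_inputs.jsonl now carries an `is_english` flag, which app.py passes to `gr.Examples` as the third column. How the app loads the file is not shown in this diff, so the following is only a sketch: `parse_examples` is a hypothetical helper that turns JSONL lines into the `[text, image, is_english]` rows used in the list comprehension above.

```python
import json


def parse_examples(lines):
    """Hypothetical helper: convert JSONL records into
    [text, image, is_english] rows, skipping blank lines."""
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        example = json.loads(line)
        rows.append([example["text"], example["image"], example["is_english"]])
    return rows


# Two records copied from the new version of the file.
sample = [
    '{"id":5, "text": "Please analyze this image and its meaning in detail.", "image": "examples/sota.jpeg", "is_english": true}',
    '{"id":6, "text": "Which watermelon is the ripest? Provide reasons.", "image": "examples/watermelon.jpeg", "is_english": true}',
]
rows = parse_examples(sample)
```

The JSON literals `true` and `false` decode to Python booleans, which matches what a checkbox component expects as its value.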

examples/guicai.jpeg

ADDED

|

examples/poem.jpeg

ADDED

|

examples/sota.jpeg

ADDED

|

examples/triangle.jpeg

ADDED

|

examples/watermelon.jpeg

ADDED

|

requirements.txt

CHANGED

|

@@ -1,4 +1,4 @@

|

|

| 1 |

-

gradio

|

| 2 |

seaborn

|

| 3 |

Pillow

|

| 4 |

matplotlib

|

|

|

|

| 1 |

+

gradio==4.29.0

|

| 2 |

seaborn

|

| 3 |

Pillow

|

| 4 |

matplotlib

|