Spaces:

Build error

Build error

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .DS_Store +0 -0

- .cache/huggingface/.gitignore +1 -0

- .cache/huggingface/download/openbiollm-llama3-8b.Q5_K_M.gguf.lock +0 -0

- .cache/huggingface/download/openbiollm-llama3-8b.Q5_K_M.gguf.metadata +3 -0

- .env +4 -0

- .gitattributes +155 -0

- .github/workflows/update_space.yml +28 -0

- .gitignore +7 -0

- .streamlit/secrets.toml +3 -0

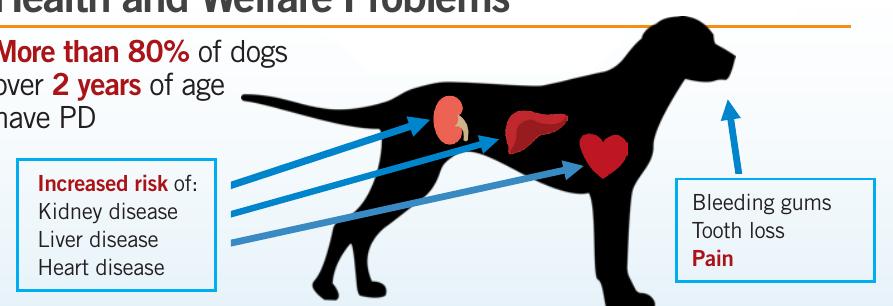

- Data/AC-Aids-for-Dogs_Canine-Periodontal-Disease.pdf +0 -0

- Data/cancer_and_cure__a_critical_analysis.27.pdf +0 -0

- Data/medical_oncology_handbook_june_2020_edition.pdf +0 -0

- DockerFile +20 -0

- MultimodalRAG.ipynb +0 -0

- MultimodalRAGUpdatedVersion.ipynb +0 -0

- README.md +125 -8

- Streaming.py +223 -0

- Streamingnewversion.py +244 -0

- __pycache__/app.cpython-310.pyc +0 -0

- __pycache__/clip_helpers.cpython-310.pyc +0 -0

- __pycache__/combinedmultimodal.cpython-310.pyc +0 -0

- __pycache__/imagebind.cpython-310.pyc +0 -0

- __pycache__/images.cpython-310.pyc +0 -0

- __pycache__/ingest.cpython-310.pyc +0 -0

- app.py +83 -0

- app1.py +119 -0

- combinedmultimodal.py +621 -0

- freeze +0 -0

- images.py +12 -0

- images/architecture.png +0 -0

- images/figure-1-1.jpg +0 -0

- images/figure-1-10.jpg +0 -0

- images/figure-1-11.jpg +0 -0

- images/figure-1-2.jpg +0 -0

- images/figure-1-3.jpg +0 -0

- images/figure-1-4.jpg +0 -0

- images/figure-1-5.jpg +0 -0

- images/figure-1-6.jpg +0 -0

- images/figure-1-7.jpg +0 -0

- images/figure-1-8.jpg +0 -0

- images/figure-1-9.jpg +0 -0

- images/multimodal.png +3 -0

- images1/figure-1-1.jpg +0 -0

- images1/figure-1-10.jpg +0 -0

- images1/figure-1-11.jpg +0 -0

- images1/figure-1-2.jpg +0 -0

- images1/figure-1-3.jpg +0 -0

- images1/figure-1-4.jpg +0 -0

- images1/figure-1-5.jpg +0 -0

- images1/figure-1-6.jpg +0 -0

.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

.cache/huggingface/.gitignore

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

*

|

.cache/huggingface/download/openbiollm-llama3-8b.Q5_K_M.gguf.lock

ADDED

|

File without changes

|

.cache/huggingface/download/openbiollm-llama3-8b.Q5_K_M.gguf.metadata

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

d1248c48f0ade670847d05fb2cb356a75df4db3a

|

| 2 |

+

1753c629bf99c261e8b92498d813f382f811e903cdc0e685a11d1689612b34ce

|

| 3 |

+

1723860909.403446

|

.env

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

QDRANT_URL=https://f1e9a70a-afb9-498d-b66d-cb248e0d5557.us-east4-0.gcp.cloud.qdrant.io:6333

|

| 2 |

+

QDRANT_API_KEY=REXlX_PeDvCoXeS9uKCzC--e3-LQV0lw3_jBTdcLZ7P5_F6EOdwklA

|

| 3 |

+

NVIDIA_API_KEY=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

|

| 4 |

+

GEMINI_API_KEY=AIzaSyCXGnm-n6aF962jeorkjo2IsMCwxDwj4bo

|

.gitattributes

CHANGED

|

@@ -33,3 +33,158 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

images/multimodal.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

multimodal.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

myenv/bin/python filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

myenv/bin/python3 filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

myenv/bin/python3.10 filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

myenv/bin/ruff filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

myenv/lib/python3.10/site-packages/Cython/Compiler/Code.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

myenv/lib/python3.10/site-packages/PIL/.dylibs/libfreetype.6.dylib filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

myenv/lib/python3.10/site-packages/PIL/.dylibs/libharfbuzz.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

myenv/lib/python3.10/site-packages/_soundfile_data/libsndfile_x86_64.dylib filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

myenv/lib/python3.10/site-packages/altair/vegalite/v5/schema/__pycache__/channels.cpython-310.pyc filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

myenv/lib/python3.10/site-packages/altair/vegalite/v5/schema/__pycache__/core.cpython-310.pyc filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libaom.3.2.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libavcodec.60.31.102.dylib filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libavfilter.9.12.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libavformat.60.16.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libdav1d.7.dylib filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libfreetype.6.dylib filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libharfbuzz.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libswscale.7.5.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libvpx.9.dylib filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libx264.164.dylib filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libx265.199.dylib filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

myenv/lib/python3.10/site-packages/av/.dylibs/libxml2.2.dylib filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

myenv/lib/python3.10/site-packages/cmake/data/bin/ccmake filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

myenv/lib/python3.10/site-packages/cmake/data/bin/cmake filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

myenv/lib/python3.10/site-packages/cmake/data/bin/cpack filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

myenv/lib/python3.10/site-packages/cmake/data/bin/ctest filter=lfs diff=lfs merge=lfs -text

|

| 64 |

+

myenv/lib/python3.10/site-packages/cmake/data/doc/cmake/CMake.qch filter=lfs diff=lfs merge=lfs -text

|

| 65 |

+

myenv/lib/python3.10/site-packages/cryptography/hazmat/bindings/_rust.abi3.so filter=lfs diff=lfs merge=lfs -text

|

| 66 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx/ctransformers.dll filter=lfs diff=lfs merge=lfs -text

|

| 67 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx/libctransformers.dylib filter=lfs diff=lfs merge=lfs -text

|

| 68 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx/libctransformers.so filter=lfs diff=lfs merge=lfs -text

|

| 69 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx2/ctransformers.dll filter=lfs diff=lfs merge=lfs -text

|

| 70 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx2/libctransformers.dylib filter=lfs diff=lfs merge=lfs -text

|

| 71 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/avx2/libctransformers.so filter=lfs diff=lfs merge=lfs -text

|

| 72 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/basic/ctransformers.dll filter=lfs diff=lfs merge=lfs -text

|

| 73 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/basic/libctransformers.dylib filter=lfs diff=lfs merge=lfs -text

|

| 74 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/basic/libctransformers.so filter=lfs diff=lfs merge=lfs -text

|

| 75 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/cuda/ctransformers.dll filter=lfs diff=lfs merge=lfs -text

|

| 76 |

+

myenv/lib/python3.10/site-packages/ctransformers/lib/cuda/libctransformers.so filter=lfs diff=lfs merge=lfs -text

|

| 77 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libSvtAv1Enc.1.8.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 78 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libX11.6.dylib filter=lfs diff=lfs merge=lfs -text

|

| 79 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libaom.3.8.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 80 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libavcodec.60.31.102.dylib filter=lfs diff=lfs merge=lfs -text

|

| 81 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libavformat.60.16.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 82 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libcrypto.3.dylib filter=lfs diff=lfs merge=lfs -text

|

| 83 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libdav1d.7.dylib filter=lfs diff=lfs merge=lfs -text

|

| 84 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libgnutls.30.dylib filter=lfs diff=lfs merge=lfs -text

|

| 85 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libjxl.0.9.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 86 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libp11-kit.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 87 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/librav1e.0.6.6.dylib filter=lfs diff=lfs merge=lfs -text

|

| 88 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libunistring.5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 89 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libvpx.8.dylib filter=lfs diff=lfs merge=lfs -text

|

| 90 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libx264.164.dylib filter=lfs diff=lfs merge=lfs -text

|

| 91 |

+

myenv/lib/python3.10/site-packages/cv2/.dylibs/libx265.199.dylib filter=lfs diff=lfs merge=lfs -text

|

| 92 |

+

myenv/lib/python3.10/site-packages/cv2/cv2.abi3.so filter=lfs diff=lfs merge=lfs -text

|

| 93 |

+

myenv/lib/python3.10/site-packages/decord/.dylibs/libavcodec.58.35.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 94 |

+

myenv/lib/python3.10/site-packages/decord/.dylibs/libavfilter.7.40.101.dylib filter=lfs diff=lfs merge=lfs -text

|

| 95 |

+

myenv/lib/python3.10/site-packages/decord/.dylibs/libavformat.58.20.100.dylib filter=lfs diff=lfs merge=lfs -text

|

| 96 |

+

myenv/lib/python3.10/site-packages/decord/.dylibs/libvpx.8.dylib filter=lfs diff=lfs merge=lfs -text

|

| 97 |

+

myenv/lib/python3.10/site-packages/decord/.dylibs/libx264.164.dylib filter=lfs diff=lfs merge=lfs -text

|

| 98 |

+

myenv/lib/python3.10/site-packages/decord/libdecord.dylib filter=lfs diff=lfs merge=lfs -text

|

| 99 |

+

myenv/lib/python3.10/site-packages/emoji/unicode_codes/__pycache__/data_dict.cpython-310.pyc filter=lfs diff=lfs merge=lfs -text

|

| 100 |

+

myenv/lib/python3.10/site-packages/gradio/frpc_darwin_amd64_v0.2 filter=lfs diff=lfs merge=lfs -text

|

| 101 |

+

myenv/lib/python3.10/site-packages/grpc/_cython/cygrpc.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 102 |

+

myenv/lib/python3.10/site-packages/grpc_tools/_protoc_compiler.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 103 |

+

myenv/lib/python3.10/site-packages/layoutparser/misc/NotoSerifCJKjp-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 104 |

+

myenv/lib/python3.10/site-packages/lib/libllama.dylib filter=lfs diff=lfs merge=lfs -text

|

| 105 |

+

myenv/lib/python3.10/site-packages/llama_cpp/libllama.dylib filter=lfs diff=lfs merge=lfs -text

|

| 106 |

+

myenv/lib/python3.10/site-packages/lxml/etree.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 107 |

+

myenv/lib/python3.10/site-packages/lxml/objectify.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 108 |

+

myenv/lib/python3.10/site-packages/magic/libmagic/magic.mgc filter=lfs diff=lfs merge=lfs -text

|

| 109 |

+

myenv/lib/python3.10/site-packages/minijinja/_lowlevel.abi3.so filter=lfs diff=lfs merge=lfs -text

|

| 110 |

+

myenv/lib/python3.10/site-packages/numpy/.dylibs/libgfortran.5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 111 |

+

myenv/lib/python3.10/site-packages/numpy/.dylibs/libopenblas64_.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 112 |

+

myenv/lib/python3.10/site-packages/numpy/core/_multiarray_umath.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 113 |

+

myenv/lib/python3.10/site-packages/numpy/core/_simd.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 114 |

+

myenv/lib/python3.10/site-packages/onnx/onnx_cpp2py_export.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 115 |

+

myenv/lib/python3.10/site-packages/onnxruntime/capi/onnxruntime_pybind11_state.so filter=lfs diff=lfs merge=lfs -text

|

| 116 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/algos.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 117 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/groupby.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 118 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/hashtable.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 119 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/interval.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 120 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/join.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 121 |

+

myenv/lib/python3.10/site-packages/pandas/_libs/tslibs/offsets.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 122 |

+

myenv/lib/python3.10/site-packages/pikepdf/.dylibs/libgnutls.30.dylib filter=lfs diff=lfs merge=lfs -text

|

| 123 |

+

myenv/lib/python3.10/site-packages/pikepdf/.dylibs/libp11-kit.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 124 |

+

myenv/lib/python3.10/site-packages/pikepdf/.dylibs/libqpdf.29.8.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 125 |

+

myenv/lib/python3.10/site-packages/pikepdf/.dylibs/libunistring.5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 126 |

+

myenv/lib/python3.10/site-packages/pikepdf/_core.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 127 |

+

myenv/lib/python3.10/site-packages/pillow_heif/.dylibs/libaom.3.8.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 128 |

+

myenv/lib/python3.10/site-packages/pillow_heif/.dylibs/libjxl.0.8.2.dylib filter=lfs diff=lfs merge=lfs -text

|

| 129 |

+

myenv/lib/python3.10/site-packages/pillow_heif/.dylibs/libx265.199.dylib filter=lfs diff=lfs merge=lfs -text

|

| 130 |

+

myenv/lib/python3.10/site-packages/pyarrow/_compute.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 131 |

+

myenv/lib/python3.10/site-packages/pyarrow/_dataset.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 132 |

+

myenv/lib/python3.10/site-packages/pyarrow/_flight.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 133 |

+

myenv/lib/python3.10/site-packages/pyarrow/lib.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 134 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 135 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow_acero.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 136 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow_dataset.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 137 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow_flight.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 138 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow_python.dylib filter=lfs diff=lfs merge=lfs -text

|

| 139 |

+

myenv/lib/python3.10/site-packages/pyarrow/libarrow_substrait.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 140 |

+

myenv/lib/python3.10/site-packages/pyarrow/libparquet.1601.dylib filter=lfs diff=lfs merge=lfs -text

|

| 141 |

+

myenv/lib/python3.10/site-packages/pydantic_core/_pydantic_core.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 142 |

+

myenv/lib/python3.10/site-packages/pydeck/nbextension/static/index.js.map filter=lfs diff=lfs merge=lfs -text

|

| 143 |

+

myenv/lib/python3.10/site-packages/pypdf/_codecs/__pycache__/adobe_glyphs.cpython-310.pyc filter=lfs diff=lfs merge=lfs -text

|

| 144 |

+

myenv/lib/python3.10/site-packages/pypdfium2_raw/libpdfium.dylib filter=lfs diff=lfs merge=lfs -text

|

| 145 |

+

myenv/lib/python3.10/site-packages/rapidfuzz/distance/metrics_cpp.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 146 |

+

myenv/lib/python3.10/site-packages/rapidfuzz/distance/metrics_cpp_avx2.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 147 |

+

myenv/lib/python3.10/site-packages/rapidfuzz/fuzz_cpp.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 148 |

+

myenv/lib/python3.10/site-packages/rapidfuzz/fuzz_cpp_avx2.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 149 |

+

myenv/lib/python3.10/site-packages/safetensors/_safetensors_rust.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 150 |

+

myenv/lib/python3.10/site-packages/scipy/.dylibs/libgfortran.5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 151 |

+

myenv/lib/python3.10/site-packages/scipy/.dylibs/libopenblas.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 152 |

+

myenv/lib/python3.10/site-packages/scipy/fft/_pocketfft/pypocketfft.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 153 |

+

myenv/lib/python3.10/site-packages/scipy/io/_fast_matrix_market/_fmm_core.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 154 |

+

myenv/lib/python3.10/site-packages/scipy/linalg/_flapack.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 155 |

+

myenv/lib/python3.10/site-packages/scipy/misc/face.dat filter=lfs diff=lfs merge=lfs -text

|

| 156 |

+

myenv/lib/python3.10/site-packages/scipy/optimize/_highs/_highs_wrapper.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 157 |

+

myenv/lib/python3.10/site-packages/scipy/sparse/_sparsetools.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 158 |

+

myenv/lib/python3.10/site-packages/scipy/spatial/_qhull.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 159 |

+

myenv/lib/python3.10/site-packages/scipy/special/_ufuncs.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 160 |

+

myenv/lib/python3.10/site-packages/scipy/special/cython_special.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 161 |

+

myenv/lib/python3.10/site-packages/scipy/stats/_unuran/unuran_wrapper.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 162 |

+

myenv/lib/python3.10/site-packages/sentencepiece/_sentencepiece.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 163 |

+

myenv/lib/python3.10/site-packages/skimage/filters/rank/generic_cy.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 164 |

+

myenv/lib/python3.10/site-packages/sklearn/_loss/_loss.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 165 |

+

myenv/lib/python3.10/site-packages/tiktoken/_tiktoken.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 166 |

+

myenv/lib/python3.10/site-packages/tokenizers/tokenizers.cpython-310-darwin.so filter=lfs diff=lfs merge=lfs -text

|

| 167 |

+

myenv/lib/python3.10/site-packages/torch/.dylibs/libiomp5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 168 |

+

myenv/lib/python3.10/site-packages/torch/bin/protoc filter=lfs diff=lfs merge=lfs -text

|

| 169 |

+

myenv/lib/python3.10/site-packages/torch/bin/protoc-3.13.0.0 filter=lfs diff=lfs merge=lfs -text

|

| 170 |

+

myenv/lib/python3.10/site-packages/torch/lib/libiomp5.dylib filter=lfs diff=lfs merge=lfs -text

|

| 171 |

+

myenv/lib/python3.10/site-packages/torch/lib/libtorch_cpu.dylib filter=lfs diff=lfs merge=lfs -text

|

| 172 |

+

myenv/lib/python3.10/site-packages/torch/lib/libtorch_python.dylib filter=lfs diff=lfs merge=lfs -text

|

| 173 |

+

myenv/lib/python3.10/site-packages/torchaudio/_torchaudio.so filter=lfs diff=lfs merge=lfs -text

|

| 174 |

+

myenv/lib/python3.10/site-packages/torchaudio/lib/libflashlight-text.so filter=lfs diff=lfs merge=lfs -text

|

| 175 |

+

myenv/lib/python3.10/site-packages/torchaudio/lib/libtorchaudio.so filter=lfs diff=lfs merge=lfs -text

|

| 176 |

+

myenv/lib/python3.10/site-packages/torchvision/.dylibs/libc++.1.0.dylib filter=lfs diff=lfs merge=lfs -text

|

| 177 |

+

myenv/lib/python3.10/site-packages/unicorn/lib/libunicorn.2.dylib filter=lfs diff=lfs merge=lfs -text

|

| 178 |

+

myenv/lib/python3.10/site-packages/unicorn/lib/libunicorn.a filter=lfs diff=lfs merge=lfs -text

|

| 179 |

+

myenv/share/jupyter/nbextensions/pydeck/index.js.map filter=lfs diff=lfs merge=lfs -text

|

| 180 |

+

openbiollm-llama3-8b.Q5_K_M.gguf filter=lfs diff=lfs merge=lfs -text

|

| 181 |

+

path/to/data/collections/image_data/0/wal/open-1 filter=lfs diff=lfs merge=lfs -text

|

| 182 |

+

path/to/data/collections/image_data/0/wal/open-2 filter=lfs diff=lfs merge=lfs -text

|

| 183 |

+

path/to/data/collections/medical_img/0/wal/open-1 filter=lfs diff=lfs merge=lfs -text

|

| 184 |

+

path/to/data/collections/medical_img/0/wal/open-2 filter=lfs diff=lfs merge=lfs -text

|

| 185 |

+

qdrant_data/collections/vector_db/0/wal/open-1 filter=lfs diff=lfs merge=lfs -text

|

| 186 |

+

qdrant_data/collections/vector_db/0/wal/open-2 filter=lfs diff=lfs merge=lfs -text

|

| 187 |

+

qdrant_storage/collections/medical_img/0/wal/open-1 filter=lfs diff=lfs merge=lfs -text

|

| 188 |

+

qdrant_storage/collections/medical_img/0/wal/open-2 filter=lfs diff=lfs merge=lfs -text

|

| 189 |

+

qdrant_storage/collections/vector_db/0/wal/open-1 filter=lfs diff=lfs merge=lfs -text

|

| 190 |

+

qdrant_storage/collections/vector_db/0/wal/open-2 filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/update_space.yml

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Run Python script

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- surbhi

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

build:

|

| 10 |

+

runs-on: ubuntu-latest

|

| 11 |

+

|

| 12 |

+

steps:

|

| 13 |

+

- name: Checkout

|

| 14 |

+

uses: actions/checkout@v2

|

| 15 |

+

|

| 16 |

+

- name: Set up Python

|

| 17 |

+

uses: actions/setup-python@v2

|

| 18 |

+

with:

|

| 19 |

+

python-version: '3.9'

|

| 20 |

+

|

| 21 |

+

- name: Install Gradio

|

| 22 |

+

run: python -m pip install gradio

|

| 23 |

+

|

| 24 |

+

- name: Log in to Hugging Face

|

| 25 |

+

run: python -c 'import huggingface_hub; huggingface_hub.login(token="${{ secrets.hf_token }}")'

|

| 26 |

+

|

| 27 |

+

- name: Deploy to Spaces

|

| 28 |

+

run: gradio deploy

|

.gitignore

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

qdrant_data

|

| 2 |

+

myenv

|

| 3 |

+

openbiollm-llama3-8b.Q5_K_M.gguf

|

| 4 |

+

__pycache__

|

| 5 |

+

secrets.toml

|

| 6 |

+

.streamlit/

|

| 7 |

+

.env

|

.streamlit/secrets.toml

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# .streamlit/secrets.toml

|

| 2 |

+

QDRANT_URL = "https://f1e9a70a-afb9-498d-b66d-cb248e0d5557.us-east4-0.gcp.cloud.qdrant.io:6333"

|

| 3 |

+

QDRANT_API_KEY = "REXlX_PeDvCoXeS9uKCzC--e3-LQV0lw3_jBTdcLZ7P5_F6EOdwklA"

|

Data/AC-Aids-for-Dogs_Canine-Periodontal-Disease.pdf

ADDED

|

Binary file (485 kB). View file

|

|

|

Data/cancer_and_cure__a_critical_analysis.27.pdf

ADDED

|

Binary file (226 kB). View file

|

|

|

Data/medical_oncology_handbook_june_2020_edition.pdf

ADDED

|

Binary file (818 kB). View file

|

|

|

DockerFile

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Use the official Python image from the Docker Hub

|

| 2 |

+

FROM python:3.10

|

| 3 |

+

|

| 4 |

+

# Set the working directory in the container

|

| 5 |

+

WORKDIR /app

|

| 6 |

+

|

| 7 |

+

# Copy the requirements file into the container at /app

|

| 8 |

+

COPY requirements.txt .

|

| 9 |

+

|

| 10 |

+

# Install the required libraries

|

| 11 |

+

RUN pip install --no-cache-dir -r requirements.txt

|

| 12 |

+

|

| 13 |

+

# Copy the rest of the application code into the container

|

| 14 |

+

COPY . .

|

| 15 |

+

|

| 16 |

+

# Expose the port the app runs on

|

| 17 |

+

EXPOSE 8501

|

| 18 |

+

|

| 19 |

+

# Command to run the application

|

| 20 |

+

CMD ["streamlit", "run", "stream.py", "--server.port=8501", "--server.address=0.0.0.0"]

|

MultimodalRAG.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

MultimodalRAGUpdatedVersion.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

README.md

CHANGED

|

@@ -1,12 +1,129 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: gray

|

| 5 |

-

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 4.

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: Medical_RAG

|

| 3 |

+

app_file: combinedmultimodal.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 4.41.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

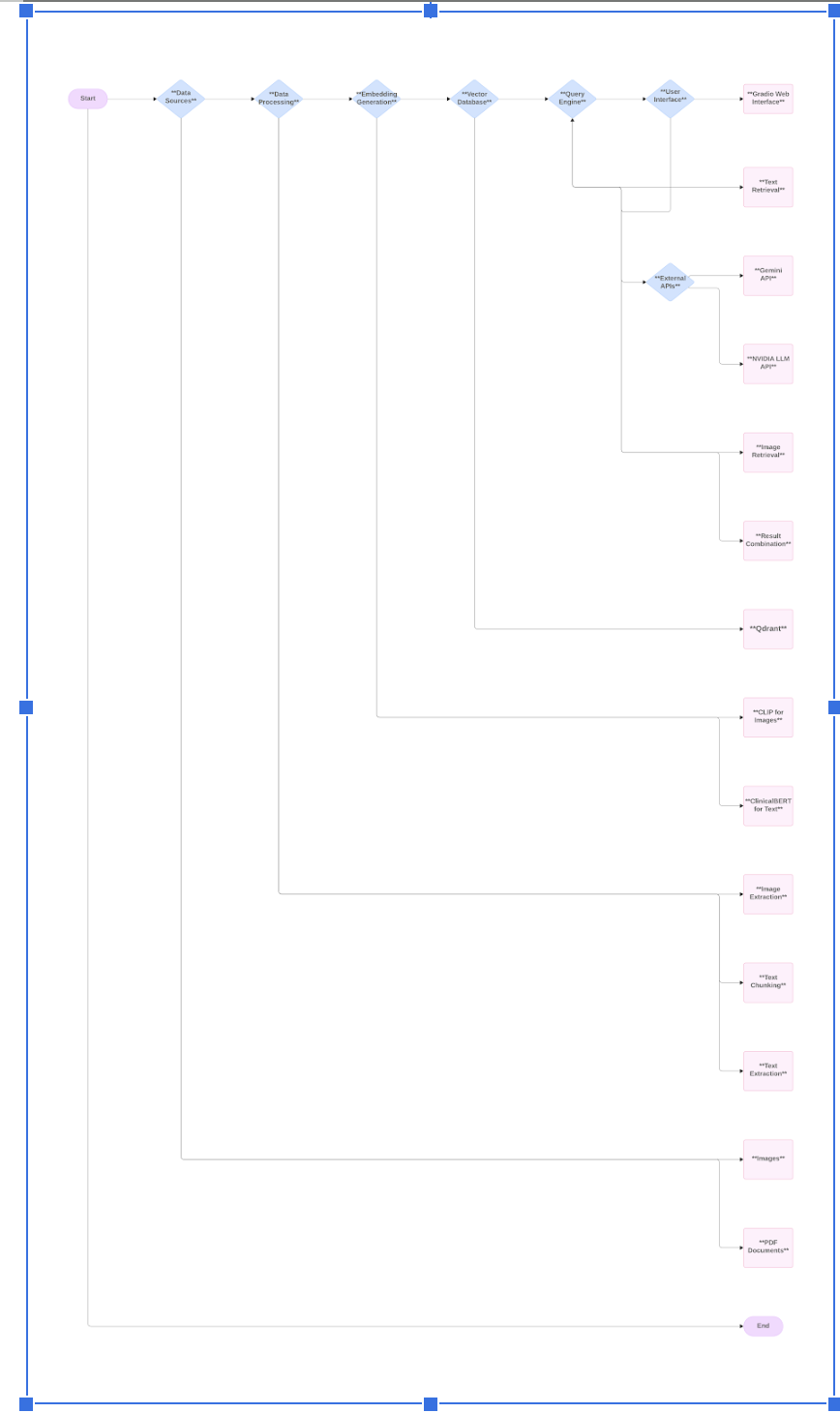

+

# Advancing Text Searching with Advanced Indexing Techniques in Healthcare Applications(In Progress)

|

| 8 |

|

| 9 |

+

Welcome to the project repository for advancing text searching with advanced indexing techniques in healthcare applications. This project implements a powerful Retrieval-Augmented Generation (RAG) system using cutting-edge AI technologies, specifically designed to enhance text searching capabilities within the healthcare domain.I have also implemented Multimodal Text Searching for Medical Documents.

|

| 10 |

+

|

| 11 |

+

## 🚀 Features For Text Based Medical Query Based System

|

| 12 |

+

|

| 13 |

+

- **BioLLM 8B**: Advanced language model for generating and processing medical text.

|

| 14 |

+

- **ClinicalBert**: State-of-the-art embedding model for accurate representation of medical texts.

|

| 15 |

+

- **Qdrant**: Self-hosted Vector Database (Vector DB) for efficient storage and retrieval of embeddings.

|

| 16 |

+

- **Langchain & Llama CPP**: Orchestration frameworks for seamless integration and workflow management.

|

| 17 |

+

|

| 18 |

+

# Medical Knowledge Base Query System

|

| 19 |

+

|

| 20 |

+

A multimodal medical information retrieval system combining text and image-based querying for comprehensive medical knowledge access.

|

| 21 |

+

|

| 22 |

+

## Features For Multimodality Medical Query Based System:

|

| 23 |

+

[Watch the video on YouTube](https://youtu.be/pNy7RqfRUrc?si=1HQgq54oHT6YoR0B)

|

| 24 |

+

|

| 25 |

+

### 🧠 Multimodal Medical Information Retrieval

|

| 26 |

+

- Combines text and image-based querying for comprehensive medical knowledge access

|

| 27 |

+

- Uses Qdrant vector database to store and retrieve both text and image embeddings

|

| 28 |

+

|

| 29 |

+

### 🔤 Advanced Natural Language Processing

|

| 30 |

+

- Utilizes ClinicalBERT for domain-specific text embeddings

|

| 31 |

+

- Implements NVIDIA's Palmyra-med-70b model for medical language understanding fast Inference time.

|

| 32 |

+

|

| 33 |

+

### 🖼️ Image Analysis Capabilities

|

| 34 |

+

- Incorporates CLIP (Contrastive Language-Image Pre-training) for image feature extraction

|

| 35 |

+

- Generates image summaries using Google's Gemini 1.5 Flash model

|

| 36 |

+

|

| 37 |

+

### 📄 PDF Processing

|

| 38 |

+

- Extracts text and images from medical PDF documents

|

| 39 |

+

- Implements intelligent chunking strategies for text processing

|

| 40 |

+

|

| 41 |

+

### 🔍 Vector Search

|

| 42 |

+

- Uses Qdrant for efficient similarity search on both text and image vectors

|

| 43 |

+

- Implements hybrid search combining CLIP-based image similarity and text-based summary similarity

|

| 44 |

+

|

| 45 |

+

### 🖥️ Interactive User Interface

|

| 46 |

+

- Gradio-based web interface for easy querying and result visualization

|

| 47 |

+

- Displays relevant text responses alongside related medical images

|

| 48 |

+

|

| 49 |

+

### 🧩 Extensible Architecture

|

| 50 |

+

- Modular design allowing for easy integration of new models or data sources

|

| 51 |

+

- Supports both local and cloud-based model deployment

|

| 52 |

+

The high level architectural framework for this application is given as follows:

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

### ⚡ Performance Optimization

|

| 56 |

+

- Implements batching and multi-threading for efficient processing of large document sets

|

| 57 |

+

- Utilizes GPU acceleration where available

|

| 58 |

+

|

| 59 |

+

### 🎛️ Customizable Retrieval

|

| 60 |

+

- Adjustable similarity thresholds for image retrieval

|

| 61 |

+

- Configurable number of top-k results for both text and image queries

|

| 62 |

+

|

| 63 |

+

### 📊 Comprehensive Visualization

|

| 64 |

+

- Displays query results with both textual information and related images

|

| 65 |

+

- Provides a gallery view of all extracted images from the knowledge base

|

| 66 |

+

|

| 67 |

+

### 🔐 Environment Management

|

| 68 |

+

- Uses .env file for secure API key management

|

| 69 |

+

- Supports both CPU and GPU environments

|

| 70 |

+

|

| 71 |

+

### DEMO SCREENSHOT

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

## 🎥 Video Demonstration

|

| 75 |

+

|

| 76 |

+

Explore the capabilities of our project with our detailed [YouTube video](https://youtu.be/nKCKUcnQ390).

|

| 77 |

+

|

| 78 |

+

## Installation

|

| 79 |

+

|

| 80 |

+

To get started with this project, follow these steps:

|

| 81 |

+

|

| 82 |

+

1. **Install Dependencies**:

|

| 83 |

+

```bash

|

| 84 |

+

pip install -r requirements.txt

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

2. **Set up Qdrant**:

|

| 88 |

+

- Follow the [Qdrant Installation Guide](https://qdrant.tech/documentation/quick_start/) to install and configure Qdrant.

|

| 89 |

+

|

| 90 |

+

3. **Configure the Application**:

|

| 91 |

+

- Ensure configuration files for BioLLM, ClinicalBert, Langchain, and Llama CPP are correctly set up.

|

| 92 |

+

|

| 93 |

+

4. **Run the Application**:

|

| 94 |

+

if you want to run the text reterival application in Flask mode

|

| 95 |

+

```bash

|

| 96 |

+

uvicorn app:app

|

| 97 |

+

```

|

| 98 |

+

if you want to run the text reterival application through Streamlit

|

| 99 |

+

``bash

|

| 100 |

+

streamlit run Streaming.py

|

| 101 |

+

```

|

| 102 |

+

|

| 103 |

+

if you want to run the multimodal application run it through Gradio Interface

|

| 104 |

+

```bash

|

| 105 |

+

python combinedmultimodal.py

|

| 106 |

+

```

|

| 107 |

+

|

| 108 |

+

## 💡 Usage

|

| 109 |

+

|

| 110 |

+

- **Querying the System**: Input medical queries via the application's interface for detailed information retrieval.

|

| 111 |

+

- **Text Generation**: Utilize BioLLM 8B to generate comprehensive medical responses.

|

| 112 |

+

|

| 113 |

+

## 👥 Contributing

|

| 114 |

+

|

| 115 |

+

We welcome contributions to enhance this project! Here's how you can contribute:

|

| 116 |

+

|

| 117 |

+

1. Fork the repository.

|

| 118 |

+

2. Create a new branch (`git checkout -b feature-name`).

|

| 119 |

+

3. Commit your changes (`git commit -am 'Add feature'`).

|

| 120 |

+

4. Push to the branch (`git push origin feature-name`).

|

| 121 |

+

5. Open a Pull Request with detailed information about your changes.

|

| 122 |

+

|

| 123 |

+

## 📜 License

|

| 124 |

+

|

| 125 |

+

This project is licensed under the MIT License. See the [LICENSE](LICENSE) file for details.

|

| 126 |

+

|

| 127 |

+

## 📞 Contact

|

| 128 |

+

|

| 129 |

+

For questions or suggestions, please open an issue or contact the repository owner at [surbhisharma9099@gmail.com](mailto:surbhisharma9099@gmail.com).

|

Streaming.py

ADDED

|

@@ -0,0 +1,223 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from langchain.text_splitter import RecursiveCharacterTextSplitter

|

| 3 |

+

from langchain_community.document_loaders import DirectoryLoader, UnstructuredFileLoader, PDFMinerLoader

|

| 4 |

+

from langchain_community.vectorstores import Qdrant

|

| 5 |

+

from langchain_community.embeddings import SentenceTransformerEmbeddings

|

| 6 |

+

from langchain_community.retrievers import BM25Retriever

|

| 7 |

+

from qdrant_client import QdrantClient

|

| 8 |

+

from qdrant_client.http.exceptions import ResponseHandlingException

|

| 9 |

+

from glob import glob

|

| 10 |

+

from llama_index.vector_stores.qdrant import QdrantVectorStore

|

| 11 |

+

from langchain.chains import RetrievalQA

|

| 12 |

+

from transformers import AutoTokenizer, AutoModel

|

| 13 |

+

from sentence_transformers import models, SentenceTransformer

|

| 14 |

+

from langchain.embeddings.base import Embeddings

|

| 15 |

+

from qdrant_client.models import VectorParams

|

| 16 |

+

import torch

|

| 17 |

+

import base64

|

| 18 |

+

from langchain_community.llms import LlamaCpp

|

| 19 |

+

from langchain_core.prompts import PromptTemplate

|

| 20 |

+

from huggingface_hub import hf_hub_download

|

| 21 |

+

from tempfile import NamedTemporaryFile

|

| 22 |

+

from langchain.retrievers import EnsembleRetriever

|

| 23 |

+

|

| 24 |

+

# Set page configuration

|

| 25 |

+

st.set_page_config(layout="wide")

|

| 26 |

+

st.markdown("""

|

| 27 |

+

<meta http-equiv="Content-Security-Policy"

|

| 28 |

+

content="default-src 'self'; object-src 'self'; frame-src 'self' data:;

|

| 29 |

+

script-src 'self' 'unsafe-inline' 'unsafe-eval'; style-src 'self' 'unsafe-inline';">

|

| 30 |

+

""", unsafe_allow_html=True)

|

| 31 |

+

# Streamlit secrets

|

| 32 |

+

qdrant_url = st.secrets["QDRANT_URL"]

|

| 33 |

+

qdrant_api_key = st.secrets["QDRANT_API_KEY"]

|

| 34 |

+

|

| 35 |

+

# For debugging only - remove or comment out these lines after verification

|

| 36 |

+

#st.write(f"QDRANT_URL: {qdrant_url}")

|

| 37 |

+

#st.write(f"QDRANT_API_KEY: {qdrant_api_key}")

|

| 38 |

+

|

| 39 |

+

class ClinicalBertEmbeddings(Embeddings):

|

| 40 |

+

def __init__(self, model_name: str = "medicalai/ClinicalBERT"):

|

| 41 |

+

self.tokenizer = AutoTokenizer.from_pretrained(model_name)

|

| 42 |

+

self.model = AutoModel.from_pretrained(model_name)

|

| 43 |

+

self.model.eval()

|

| 44 |

+

|

| 45 |

+

def embed(self, text: str):

|

| 46 |

+

inputs = self.tokenizer(text, return_tensors="pt", padding=True, truncation=True, max_length=512)

|

| 47 |

+

with torch.no_grad():

|

| 48 |

+

outputs = self.model(**inputs)

|

| 49 |

+

embeddings = self.mean_pooling(outputs, inputs['attention_mask'])

|

| 50 |

+

return embeddings.squeeze().numpy()

|

| 51 |

+

|

| 52 |

+

def mean_pooling(self, model_output, attention_mask):

|

| 53 |

+

token_embeddings = model_output[0] # First element of model_output contains all token embeddings

|

| 54 |

+

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

|

| 55 |

+

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

|

| 56 |

+

|

| 57 |

+

def embed_documents(self, texts):

|

| 58 |

+

return [self.embed(text) for text in texts]

|

| 59 |

+

|

| 60 |

+

def embed_query(self, text):

|

| 61 |

+

return self.embed(text)

|

| 62 |

+

|

| 63 |

+

@st.cache_resource

|

| 64 |

+

def load_model():

|

| 65 |

+

model_name = "aaditya/OpenBioLLM-Llama3-8B-GGUF"

|

| 66 |

+

model_file = "openbiollm-llama3-8b.Q5_K_M.gguf"

|

| 67 |

+

model_path = hf_hub_download(model_name, filename=model_file, local_dir='./')

|

| 68 |

+

return LlamaCpp(

|

| 69 |

+

model_path=model_path,

|

| 70 |

+

temperature=0.3,

|

| 71 |

+

n_ctx=2048,

|

| 72 |

+

top_p=1

|

| 73 |

+

)

|

| 74 |

+

|

| 75 |

+

# Initialize embeddings

|

| 76 |

+

@st.cache_resource

|

| 77 |

+

def load_embeddings():

|

| 78 |

+

return ClinicalBertEmbeddings(model_name="medicalai/ClinicalBERT")

|

| 79 |

+

|

| 80 |

+

# Initialize database

|

| 81 |

+

@st.cache_resource

|

| 82 |

+

def setup_qdrant():

|

| 83 |

+

try:

|

| 84 |

+

if not qdrant_url or not qdrant_api_key:

|

| 85 |

+

raise ValueError("QDRANT_URL or QDRANT_API_KEY not set in environment variables.")

|

| 86 |

+

|

| 87 |

+

# Initialize Qdrant client

|

| 88 |

+

client = QdrantClient(

|

| 89 |

+

url=qdrant_url,

|

| 90 |

+

api_key=qdrant_api_key,

|

| 91 |

+

port=443, # Assuming HTTPS should use port 443

|

| 92 |

+

)

|

| 93 |

+

st.write("Qdrant client initialized successfully.")

|

| 94 |

+

|

| 95 |

+

# Create or recreate collection

|

| 96 |

+

collection_name = "vector_db"

|

| 97 |

+

try:

|

| 98 |

+

collection_info = client.get_collection(collection_name=collection_name)

|

| 99 |

+

st.write(f"Collection '{collection_name}' already exists.")

|

| 100 |

+

except ResponseHandlingException:

|

| 101 |

+

st.write(f"Collection '{collection_name}' does not exist. Creating a new one.")

|

| 102 |

+

client.recreate_collection(

|

| 103 |

+

collection_name=collection_name,

|

| 104 |

+

vectors_config=VectorParams(size=768, distance="Cosine")

|

| 105 |

+

)

|

| 106 |

+

st.write(f"Collection '{collection_name}' created successfully.")

|

| 107 |

+

|

| 108 |

+

embeddings = load_embeddings()

|

| 109 |

+

st.write("Embeddings model loaded successfully.")

|

| 110 |

+

|

| 111 |

+

return Qdrant(client=client, embeddings=embeddings, collection_name=collection_name)

|

| 112 |

+

|

| 113 |

+

except Exception as e:

|

| 114 |

+

st.error(f"Failed to initialize Qdrant: {e}")

|

| 115 |

+

return None

|

| 116 |

+

|

| 117 |

+

# Initialize database

|

| 118 |

+

db = setup_qdrant()

|

| 119 |

+

|

| 120 |

+

if db is None:

|

| 121 |

+

st.error("Qdrant setup failed, exiting.")

|

| 122 |

+

else:

|

| 123 |

+

st.success("Qdrant setup successful.")

|

| 124 |

+

|

| 125 |

+

# Load models

|

| 126 |

+

llm = load_model()

|

| 127 |

+

embeddings = load_embeddings()

|

| 128 |

+

|

| 129 |

+

# Define prompt template

|

| 130 |

+

prompt_template = """Use the following pieces of information to answer the user's question.

|

| 131 |

+

If you don't know the answer, just say that you don't know, don't try to make up an answer.

|

| 132 |

+

|

| 133 |

+

Context: {context}

|

| 134 |

+

Question: {question}

|

| 135 |

+

|

| 136 |

+

Only return the helpful answer. Answer must be detailed and well explained.

|

| 137 |

+

Helpful answer:

|

| 138 |

+

"""

|

| 139 |

+

prompt = PromptTemplate(template=prompt_template, input_variables=['context', 'question'])

|

| 140 |

+

# Define retriever

|

| 141 |

+

|

| 142 |

+

# Define Streamlit app

|

| 143 |

+

|

| 144 |

+

def process_answer(query):

|

| 145 |

+

chain_type_kwargs = {"prompt": prompt}

|

| 146 |

+

global ensemble_retriever

|

| 147 |

+

qa = RetrievalQA.from_chain_type(

|

| 148 |

+

llm=llm,

|

| 149 |

+

chain_type="stuff",

|

| 150 |

+

retriever=ensemble_retriever,

|

| 151 |

+

return_source_documents=True,

|

| 152 |

+

chain_type_kwargs=chain_type_kwargs,

|

| 153 |

+

verbose=True

|

| 154 |

+

)

|

| 155 |

+

response = qa(query)

|

| 156 |

+

answer = response['result']

|

| 157 |

+

source_document = response['source_documents'][0].page_content

|

| 158 |

+

doc = response['source_documents'][0].metadata['source']

|

| 159 |

+

return answer, source_document, doc

|

| 160 |

+

|

| 161 |

+

def display_pdf(file):

|

| 162 |

+

with open(file, "rb") as f:

|

| 163 |

+

base64_pdf = base64.b64encode(f.read()).decode('utf-8')

|

| 164 |

+

pdf_display = f'<iframe src="data:application/pdf;base64,{base64_pdf}" width="100%" height="600" type="application/pdf"></iframe>'

|

| 165 |

+

st.markdown(pdf_display, unsafe_allow_html=True)

|

| 166 |

+

|

| 167 |

+

def main():

|

| 168 |

+

st.title("PDF Question Answering System")

|

| 169 |

+

|

| 170 |

+

uploaded_file = st.file_uploader("Upload your PDF", type=["pdf"])

|

| 171 |

+

|

| 172 |

+

if uploaded_file is not None:

|

| 173 |

+

# Save uploaded PDF

|

| 174 |

+

with NamedTemporaryFile(delete=False, suffix=".pdf") as temp_file:

|

| 175 |

+

temp_file.write(uploaded_file.read())

|

| 176 |

+

temp_file_path = temp_file.name

|

| 177 |

+

|

| 178 |

+

# Display PDF

|

| 179 |

+

st.subheader("PDF Preview")

|

| 180 |

+

display_pdf(temp_file_path)

|

| 181 |

+

|

| 182 |

+

# Load and process PDF

|

| 183 |

+

loader = PDFMinerLoader(temp_file_path)

|

| 184 |

+

documents = loader.load()

|

| 185 |

+

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)

|

| 186 |

+

texts = text_splitter.split_documents(documents)

|

| 187 |

+

|

| 188 |

+

# Update the Qdrant database with the new PDF content

|

| 189 |

+

|

| 190 |

+

try:

|

| 191 |

+

db.add_documents(texts)

|

| 192 |

+

st.success("PDF processed and vector database updated!")

|

| 193 |

+

global ensemble_retriever

|

| 194 |

+

# Initialize retriever after documents are added

|

| 195 |

+

bm25_retriever = BM25Retriever.from_documents(documents=texts)

|

| 196 |

+

bm25_retriever.k = 3

|

| 197 |

+

qdrant_retriever = db.as_retriever(search_kwargs={"k":1})

|

| 198 |

+

# Combine both retrievers using EnsembleRetriever

|

| 199 |

+

ensemble_retriever = EnsembleRetriever(

|

| 200 |

+

retrievers=[qdrant_retriever, bm25_retriever],

|

| 201 |

+

weights=[0.5, 0.5] # Adjust weights based on desired contribution

|

| 202 |

+

)

|

| 203 |

+

|

| 204 |

+

except Exception as e:

|

| 205 |

+

st.error(f"Error updating database: {e}")

|

| 206 |

+

|

| 207 |

+

st.subheader("Ask a question about the PDF")

|

| 208 |

+

user_input = st.text_input("Your question:")

|

| 209 |

+

|

| 210 |

+

if st.button('Get Response'):

|

| 211 |

+

if user_input:

|

| 212 |

+

try:

|

| 213 |

+

answer, source_document, doc = process_answer(user_input)

|

| 214 |

+

st.write("*Answer:*", answer)

|

| 215 |

+

st.write("*Source Document:*", source_document)

|

| 216 |

+

st.write("*Document Source:*", doc)

|

| 217 |

+

except Exception as e:

|

| 218 |

+

st.error(f"Error processing query: {e}")

|

| 219 |

+

else:

|

| 220 |

+

st.warning("Please enter a query.")

|

| 221 |

+

|

| 222 |

+

if __name__ == "__main__":

|

| 223 |

+

main()

|

Streamingnewversion.py

ADDED

|

@@ -0,0 +1,244 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from langchain.text_splitter import RecursiveCharacterTextSplitter

|

| 3 |

+

from langchain_community.document_loaders import DirectoryLoader, UnstructuredFileLoader, PDFMinerLoader

|

| 4 |

+

from langchain_community.vectorstores import Qdrant

|

| 5 |

+

from langchain_community.embeddings import SentenceTransformerEmbeddings

|

| 6 |

+

from langchain_community.retrievers import BM25Retriever

|

| 7 |

+

from qdrant_client import QdrantClient

|

| 8 |

+

from qdrant_client.http.exceptions import ResponseHandlingException

|

| 9 |

+

from glob import glob

|

| 10 |

+

from llama_index.vector_stores.qdrant import QdrantVectorStore

|

| 11 |

+

from langchain.chains import RetrievalQA

|

| 12 |

+

from transformers import AutoTokenizer, AutoModel

|

| 13 |

+

from sentence_transformers import models, SentenceTransformer

|

| 14 |

+

from langchain.embeddings.base import Embeddings

|

| 15 |

+

from qdrant_client.models import VectorParams

|

| 16 |

+

import torch

|

| 17 |

+