Use streamlit_option_menu

- Home.py +13 -15

- __pycache__/multipage.cpython-37.pyc +0 -0

- app_pages/__pycache__/about.cpython-37.pyc +0 -0

- app_pages/__pycache__/home.cpython-37.pyc +0 -0

- app_pages/__pycache__/ocr_comparator.cpython-37.pyc +0 -0

- app_pages/about.py +37 -0

- app_pages/home.py +19 -0

- app_pages/img_demo_1.jpg +0 -0

- app_pages/img_demo_2.jpg +0 -0

- app_pages/ocr.png +0 -0

- pages/App.py → app_pages/ocr_comparator.py +457 -466

- multipage.py +68 -0

- pages/About.py +0 -37

- requirements.txt +2 -1

Home.py

CHANGED

|

@@ -1,19 +1,17 @@

|

|

| 1 |

import streamlit as st

|

|

|

|

|

|

|

| 2 |

|

| 3 |

-

|

| 4 |

-

st.

|

|

|

|

|

|

|

|

|

|

| 5 |

|

| 6 |

-

|

|

|

|

|

|

|

|

|

|

| 7 |

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

st.write("")

|

| 11 |

-

st.write("")

|

| 12 |

-

|

| 13 |

-

st.markdown("##### This app allows you to compare, from a given image, the results of different solutions:")

|

| 14 |

-

st.markdown("##### *EasyOcr, PaddleOCR, MMOCR, Tesseract*")

|

| 15 |

-

st.write("")

|

| 16 |

-

st.write("")

|

| 17 |

-

st.markdown("👈 Select the **About** page from the sidebar for information on how the app works")

|

| 18 |

-

|

| 19 |

-

st.markdown("👈 or directly select the **App** page")

|

|

|

|

| 1 |

import streamlit as st

|

| 2 |

+

from multipage import MultiPage

|

| 3 |

+

from app_pages import home, about, ocr_comparator

|

| 4 |

|

| 5 |

+

app = MultiPage()

|

| 6 |

+

st.set_page_config(

|

| 7 |

+

page_title='OCR Comparator', layout ="wide",

|

| 8 |

+

initial_sidebar_state="expanded",

|

| 9 |

+

)

|

| 10 |

|

| 11 |

+

# Add all your application here

|

| 12 |

+

app.add_page("Home", "house", home.app)

|

| 13 |

+

app.add_page("About", "info-circle", about.app)

|

| 14 |

+

app.add_page("App", "cast", ocr_comparator.app)

|

| 15 |

|

| 16 |

+

# The main app

|

| 17 |

+

app.run()

|
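The contents of `multipage.py` are not shown in this diff, so the following is only a minimal sketch of a page registry that would satisfy the `add_page(title, icon, func)` and `run()` calls above. The `Page` tuple and the `select` callback are hypothetical; in the Space, the selection presumably comes from the `streamlit_option_menu` widget named in the commit title.

```python
from typing import Callable, List, NamedTuple, Optional


class Page(NamedTuple):
    title: str                  # label shown in the menu
    icon: str                   # icon name, e.g. "house"
    func: Callable[[], None]    # function that renders the page


class MultiPage:
    """Hypothetical registry mirroring the calls made in Home.py."""

    def __init__(self) -> None:
        self.pages: List[Page] = []

    def add_page(self, title: str, icon: str, func: Callable[[], None]) -> None:
        self.pages.append(Page(title, icon, func))

    def run(
        self,
        select: Optional[Callable[[List[str], List[str]], str]] = None,
    ) -> str:
        if not self.pages:
            raise ValueError("no pages registered")
        titles = [p.title for p in self.pages]
        icons = [p.icon for p in self.pages]
        # Assumption: `select` stands in for the menu widget and returns
        # the chosen title; without it, the first page is rendered.
        chosen = select(titles, icons) if select is not None else titles[0]
        for page in self.pages:
            if page.title == chosen:
                page.func()
                break
        return chosen
```

Keeping the selector injectable lets the registry be exercised without a running Streamlit session.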


__pycache__/multipage.cpython-37.pyc

ADDED

|

Binary file (2.65 kB).

|

|

|

app_pages/__pycache__/about.cpython-37.pyc

ADDED

|

Binary file (2.02 kB).

|

|

|

app_pages/__pycache__/home.cpython-37.pyc

ADDED

|

Binary file (889 Bytes).

|

|

|

app_pages/__pycache__/ocr_comparator.cpython-37.pyc

ADDED

|

Binary file (46.5 kB).

|

|

|

app_pages/about.py

ADDED

|

@@ -0,0 +1,37 @@

|


| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

def app():

|

| 4 |

+

st.title("OCR solutions comparator")

|

| 5 |

+

|

| 6 |

+

st.write("")

|

| 7 |

+

st.write("")

|

| 8 |

+

st.write("")

|

| 9 |

+

|

| 10 |

+

st.markdown("##### This app allows you to compare, from a given picture, the results of different solutions:")

|

| 11 |

+

st.markdown("##### *EasyOcr, PaddleOCR, MMOCR, Tesseract*")

|

| 12 |

+

st.write("")

|

| 13 |

+

st.write("")

|

| 14 |

+

|

| 15 |

+

st.markdown(''' The 1st step is to choose the language for the text recognition (not all solutions \

|

| 16 |

+

support the same languages), and then choose the picture to consider. It is possible to upload a file, \

|

| 17 |

+

to take a picture, or to use a demo file. \

|

| 18 |

+

It is then possible to change the default values for the text area detection process, \

|

| 19 |

+

before launching the detection task for each solution.''')

|

| 20 |

+

st.write("")

|

| 21 |

+

|

| 22 |

+

st.markdown(''' The different results are then presented. The 2nd step is to choose one of these \

|

| 23 |

+

detection results, in order to carry out the text recognition process there. It is also possible to change \

|

| 24 |

+

the default settings for each solution.''')

|

| 25 |

+

st.write("")

|

| 26 |

+

|

| 27 |

+

st.markdown("###### The recognition results appear in 2 formats:")

|

| 28 |

+

st.markdown(''' - a visual format resumes the initial image, replacing the detected areas with \

|

| 29 |

+

the recognized text. The background is + or - strongly colored in green according to the \

|

| 30 |

+

confidence level of the recognition.

|

| 31 |

+

A slider allows you to change the font size, another \

|

| 32 |

+

allows you to modify the confidence threshold above which the text color changes: if it is at \

|

| 33 |

+

70% for example, then all the texts with a confidence threshold higher or equal to 70 will appear \

|

| 34 |

+

in white, in black otherwise.''')

|

| 35 |

+

|

| 36 |

+

st.markdown(" - a detailed format presents the results in a table, for each text box detected. \

|

| 37 |

+

It is possible to download these results in a local csv file.")

|
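The About text describes two display rules: the text turns white at or above a confidence threshold (black otherwise), and the background is more or less strongly green depending on the confidence. A minimal sketch of those rules follows; the function names and the linear white-to-green mapping are assumptions, not the app's actual colour scale.

```python
from typing import Tuple


def text_color(confidence: float, threshold: float = 70.0) -> str:
    """White at or above the threshold, black otherwise (as described in About)."""
    return "white" if confidence >= threshold else "black"


def background_green(confidence: float) -> Tuple[int, int, int]:
    """Map a confidence in [0, 100] to an RGB background.

    Assumption: a linear fade from white (0 %) to pure green (100 %).
    """
    level = max(0.0, min(100.0, confidence)) / 100.0
    fade = round(255 * (1.0 - level))
    return (fade, 255, fade)
```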

app_pages/home.py

ADDED

|

@@ -0,0 +1,19 @@

|


| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

def app():

|

| 4 |

+

st.image('ocr.png')

|

| 5 |

+

|

| 6 |

+

st.write("")

|

| 7 |

+

|

| 8 |

+

st.markdown('''#### OCR, or Optical Character Recognition, is a computer vision task, \

|

| 9 |

+

which includes the detection of text areas, and the recognition of characters.''')

|

| 10 |

+

st.write("")

|

| 11 |

+

st.write("")

|

| 12 |

+

|

| 13 |

+

st.markdown("##### This app allows you to compare, from a given image, the results of different solutions:")

|

| 14 |

+

st.markdown("##### *EasyOcr, PaddleOCR, MMOCR, Tesseract*")

|

| 15 |

+

st.write("")

|

| 16 |

+

st.write("")

|

| 17 |

+

st.markdown("👈 Select the **About** page from the sidebar for information on how the app works")

|

| 18 |

+

|

| 19 |

+

st.markdown("👈 or directly select the **App** page")

|

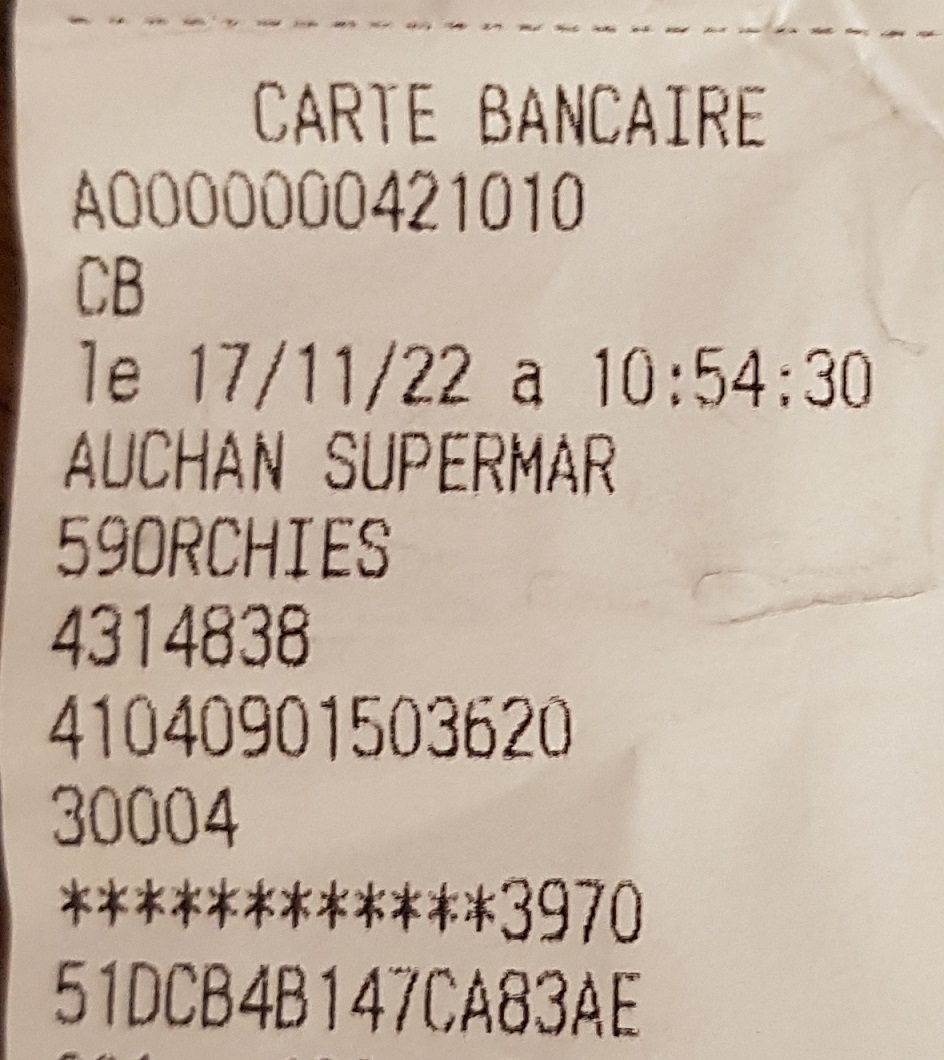

app_pages/img_demo_1.jpg

ADDED

|

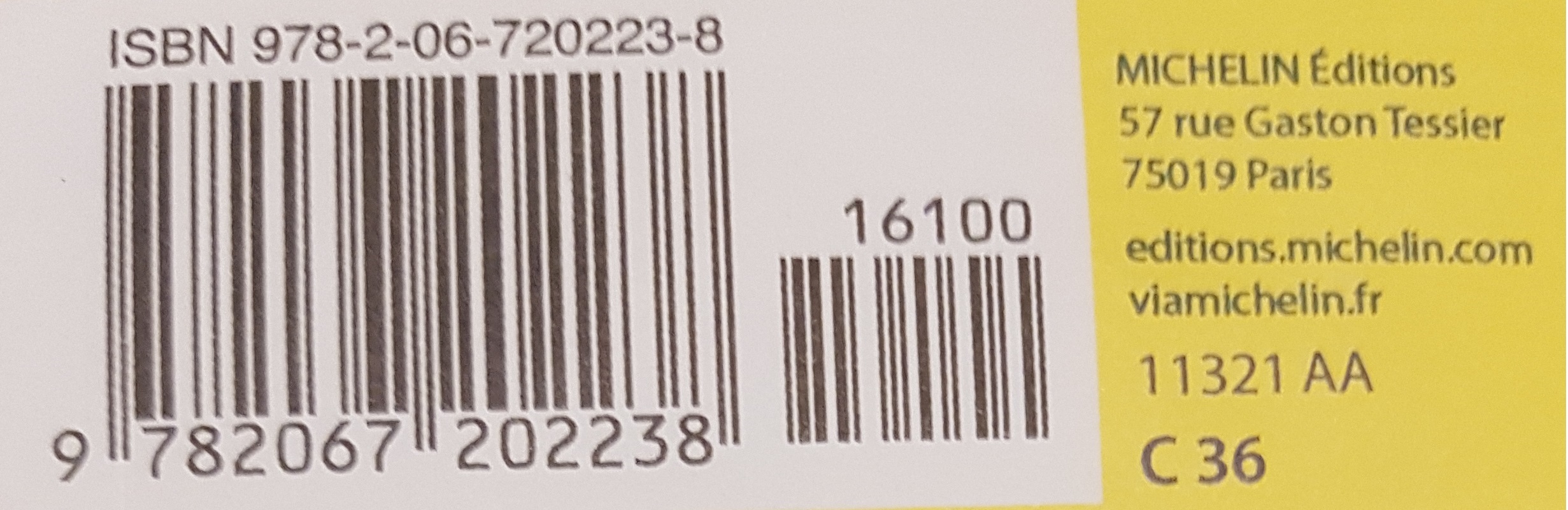

app_pages/img_demo_2.jpg

ADDED

|

app_pages/ocr.png

ADDED

|

pages/App.py → app_pages/ocr_comparator.py

RENAMED

|

@@ -929,491 +929,482 @@ def raz():

|

|

| 929 |

###################################################################################################

|

| 930 |

## MAIN

|

| 931 |

###################################################################################################

|


| 932 |

|

| 933 |

-

##-----------

|

| 934 |

-

|

| 935 |

-

|

| 936 |

-

|

| 937 |

-

<style>

|

| 938 |

-

section[data-testid="stSidebar"] {

|

| 939 |

-

width: 5rem;

|

| 940 |

-

}

|

| 941 |

-

</style>

|

| 942 |

-

""",unsafe_allow_html=True)

|

| 943 |

-

|

| 944 |

-

|

| 945 |

-

st.title("OCR solutions comparator")

|

| 946 |

-

st.markdown("##### *EasyOCR, PPOCR, MMOCR, Tesseract*")

|

| 947 |

-

#st.markdown("#### PID : " + str(os.getpid()))

|

| 948 |

-

|

| 949 |

-

# Initializations

|

| 950 |

-

with st.spinner("Initializations in progress ..."):

|

| 951 |

-

reader_type_list, reader_type_dict, list_dict_lang, \

|

| 952 |

-

cols_size, dict_back_colors, fig_colorscale = initializations()

|

| 953 |

-

img_demo_1, img_demo_2 = get_demo()

|

| 954 |

-

|

| 955 |

-

##----------- Choose language & image -------------------------------------------------------------

|

| 956 |

-

st.markdown("#### Choose languages for the text recognition:")

|

| 957 |

-

lang_col = st.columns(4)

|

| 958 |

-

easyocr_key_lang = lang_col[0].selectbox(reader_type_list[0]+" :", list_dict_lang[0].keys(), 26)

|

| 959 |

-

easyocr_lang = list_dict_lang[0][easyocr_key_lang]

|

| 960 |

-

ppocr_key_lang = lang_col[1].selectbox(reader_type_list[1]+" :", list_dict_lang[1].keys(), 22)

|

| 961 |

-

ppocr_lang = list_dict_lang[1][ppocr_key_lang]

|

| 962 |

-

mmocr_key_lang = lang_col[2].selectbox(reader_type_list[2]+" :", list_dict_lang[2].keys(), 0)

|

| 963 |

-

mmocr_lang = list_dict_lang[2][mmocr_key_lang]

|

| 964 |

-

tesserocr_key_lang = lang_col[3].selectbox(reader_type_list[3]+" :", list_dict_lang[3].keys(), 35)

|

| 965 |

-

tesserocr_lang = list_dict_lang[3][tesserocr_key_lang]

|

| 966 |

-

|

| 967 |

-

st.markdown("#### Choose picture:")

|

| 968 |

-

cols_pict = st.columns([1, 2])

|

| 969 |

-

img_typ = cols_pict[0].radio("", ['Upload file', 'Take a picture', 'Use a demo file'], \

|

| 970 |

-

index=0, on_change=raz)

|

| 971 |

-

|

| 972 |

-

if img_typ == 'Upload file':

|

| 973 |

-

image_file = cols_pict[1].file_uploader("Upload a file:", type=["jpg","jpeg"], on_change=raz)

|

| 974 |

-

if img_typ == 'Take a picture':

|

| 975 |

-

image_file = cols_pict[1].camera_input("Take a picture:", on_change=raz)

|

| 976 |

-

if img_typ == 'Use a demo file':

|

| 977 |

-

with st.expander('Choose a demo file:', expanded=True):

|

| 978 |

-

demo_used = st.radio('', ['File 1', 'File 2'], index=0, \

|

| 979 |

-

horizontal=True, on_change=raz)

|

| 980 |

-

cols_demo = st.columns([1, 2])

|

| 981 |

-

cols_demo[0].markdown('###### File 1')

|

| 982 |

-

cols_demo[0].image(img_demo_1, width=150)

|

| 983 |

-

cols_demo[1].markdown('###### File 2')

|

| 984 |

-

cols_demo[1].image(img_demo_2, width=300)

|

| 985 |

-

if demo_used == 'File 1':

|

| 986 |

-

image_file = 'img_demo_1.jpg'

|

| 987 |

-

else:

|

| 988 |

-

image_file = 'img_demo_2.jpg'

|

| 989 |

-

|

| 990 |

-

##----------- Process input image -----------------------------------------------------------------

|

| 991 |

-

if image_file is not None:

|

| 992 |

-

image_path, image_orig, image_cv2 = load_image(image_file)

|

| 993 |

-

list_images = [image_orig, image_cv2]

|

| 994 |

-

|

| 995 |

-

##----------- Form with original image & hyperparameters for detectors ----------------------------

|

| 996 |

-

with st.form("form1"):

|

| 997 |

-

col1, col2 = st.columns(2, ) #gap="medium")

|

| 998 |

-

col1.markdown("##### Original image")

|

| 999 |

-

col1.image(list_images[0], width=500, use_column_width=True)

|

| 1000 |

-

col2.markdown("##### Hyperparameters values for detection")

|

| 1001 |

-

|

| 1002 |

-

with col2.expander("Choose detection hyperparameters for " + reader_type_list[0], \

|

| 1003 |

-

expanded=False):

|

| 1004 |

-

t0_min_size = st.slider("min_size", 1, 20, 10, step=1, \

|

| 1005 |

-

help="min_size (int, default = 10) - Filter text box smaller than \

|

| 1006 |

-

minimum value in pixel")

|

| 1007 |

-

t0_text_threshold = st.slider("text_threshold", 0.1, 1., 0.7, step=0.1, \

|

| 1008 |

-

help="text_threshold (float, default = 0.7) - Text confidence threshold")

|

| 1009 |

-

t0_low_text = st.slider("low_text", 0.1, 1., 0.4, step=0.1, \

|

| 1010 |

-

help="low_text (float, default = 0.4) - Text low-bound score")

|

| 1011 |

-

t0_link_threshold = st.slider("link_threshold", 0.1, 1., 0.4, step=0.1, \

|

| 1012 |

-

help="link_threshold (float, default = 0.4) - Link confidence threshold")

|

| 1013 |

-

t0_canvas_size = st.slider("canvas_size", 2000, 5000, 2560, step=10, \

|

| 1014 |

-

help='''canvas_size (int, default = 2560) \n

|

| 1015 |

-

Maximum image size. Image bigger than this value will be resized down''')

|

| 1016 |

-

t0_mag_ratio = st.slider("mag_ratio", 0.1, 5., 1., step=0.1, \

|

| 1017 |

-

help="mag_ratio (float, default = 1) - Image magnification ratio")

|

| 1018 |

-

t0_slope_ths = st.slider("slope_ths", 0.01, 1., 0.1, step=0.01, \

|

| 1019 |

-

help='''slope_ths (float, default = 0.1) - Maximum slope \

|

| 1020 |

-

(delta y/delta x) to considered merging. \n

|

| 1021 |

-

Low value means tiled boxes will not be merged.''')

|

| 1022 |

-

t0_ycenter_ths = st.slider("ycenter_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1023 |

-

help='''ycenter_ths (float, default = 0.5) - Maximum shift in y direction. \n

|

| 1024 |

-

Boxes with different level should not be merged.''')

|

| 1025 |

-

t0_height_ths = st.slider("height_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1026 |

-

help='''height_ths (float, default = 0.5) - Maximum different in box height. \n

|

| 1027 |

-

Boxes with very different text size should not be merged.''')

|

| 1028 |

-

t0_width_ths = st.slider("width_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1029 |

-

help="width_ths (float, default = 0.5) - Maximum horizontal \

|

| 1030 |

-

distance to merge boxes.")

|

| 1031 |

-

t0_add_margin = st.slider("add_margin", 0.1, 1., 0.1, step=0.1, \

|

| 1032 |

-

help='''add_margin (float, default = 0.1) - \

|

| 1033 |

-

Extend bounding boxes in all direction by certain value. \n

|

| 1034 |

-

This is important for language with complex script (E.g. Thai).''')

|

| 1035 |

-

t0_optimal_num_chars = st.slider("optimal_num_chars", None, 100, None, step=10, \

|

| 1036 |

-

help="optimal_num_chars (int, default = None) - If specified, bounding boxes \

|

| 1037 |

-

with estimated number of characters near this value are returned first.")

|

| 1038 |

-

|

| 1039 |

-

with col2.expander("Choose detection hyperparameters for " + reader_type_list[1], \

|

| 1040 |

-

expanded=False):

|

| 1041 |

-

t1_det_algorithm = st.selectbox('det_algorithm', ['DB'], \

|

| 1042 |

-

help='Type of detection algorithm selected. (default = DB)')

|

| 1043 |

-

t1_det_max_side_len = st.slider('det_max_side_len', 500, 2000, 960, step=10, \

|

| 1044 |

-

help='''The maximum size of the long side of the image. (default = 960)\n

|

| 1045 |

-

Limit the maximum image height and width.\n

|

| 1046 |

-

When the long side exceeds this value, the long side will be resized to this size, and the short side \

|

| 1047 |

-

will be scaled proportionally.''')

|

| 1048 |

-

t1_det_db_thresh = st.slider('det_db_thresh', 0.1, 1., 0.3, step=0.1, \

|

| 1049 |

-

help='''Binarization threshold value of DB output map. (default = 0.3) \n

|

| 1050 |

-

Used to filter the binarized image of DB prediction, setting 0.-0.3 has no obvious effect on the result.''')

|

| 1051 |

-

t1_det_db_box_thresh = st.slider('det_db_box_thresh', 0.1, 1., 0.6, step=0.1, \

|

| 1052 |

-

help='''The threshold value of the DB output box. (default = 0.6) \n

|

| 1053 |

-

DB post-processing filter box threshold, if there is a missing box detected, it can be reduced as appropriate. \n

|

| 1054 |

-

Boxes score lower than this value will be discard.''')

|

| 1055 |

-

t1_det_db_unclip_ratio = st.slider('det_db_unclip_ratio', 1., 3.0, 1.6, step=0.1, \

|

| 1056 |

-

help='''The expanded ratio of DB output box. (default = 1.6) \n

|

| 1057 |

-

Indicates the compactness of the text box, the smaller the value, the closer the text box to the text.''')

|

| 1058 |

-

t1_det_east_score_thresh = st.slider('det_east_cover_thresh', 0.1, 1., 0.8, step=0.1, \

|

| 1059 |

-

help="Binarization threshold value of EAST output map. (default = 0.8)")

|

| 1060 |

-

t1_det_east_cover_thresh = st.slider('det_east_cover_thresh', 0.1, 1., 0.1, step=0.1, \

|

| 1061 |

-

help='''The threshold value of the EAST output box. (default = 0.1) \n

|

| 1062 |

-

Boxes score lower than this value will be discarded.''')

|

| 1063 |

-

t1_det_east_nms_thresh = st.slider('det_east_nms_thresh', 0.1, 1., 0.2, step=0.1, \

|

| 1064 |

-

help="The NMS threshold value of EAST model output box. (default = 0.2)")

|

| 1065 |

-

t1_det_db_score_mode = st.selectbox('det_db_score_mode', ['fast', 'slow'], \

|

| 1066 |

-

help='''slow: use polygon box to calculate bbox score, fast: use rectangle box \

|

| 1067 |

-

to calculate. (default = fast) \n

|

| 1068 |

-

Use rectangular box to calculate faster, and polygonal box more accurate for curved text area.''')

|

| 1069 |

-

|

| 1070 |

-

with col2.expander("Choose detection hyperparameters for " + reader_type_list[2], \

|

| 1071 |

-

expanded=False):

|

| 1072 |

-

t2_det = st.selectbox('det', ['DB_r18','DB_r50','DBPP_r50','DRRG','FCE_IC15', \

|

| 1073 |

-

'FCE_CTW_DCNv2','MaskRCNN_CTW','MaskRCNN_IC15', \

|

| 1074 |

-

'MaskRCNN_IC17', 'PANet_CTW','PANet_IC15','PS_CTW',\

|

| 1075 |

-

'PS_IC15','Tesseract','TextSnake'], 10, \

|

| 1076 |

-

help='Text detection algorithm. (default = PANet_IC15)')

|

| 1077 |

-

st.write("###### *More about text detection models* 👉 \

|

| 1078 |

-

[here](https://mmocr.readthedocs.io/en/latest/textdet_models.html)")

|

| 1079 |

-

t2_merge_xdist = st.slider('merge_xdist', 1, 50, 20, step=1, \

|

| 1080 |

-

help='The maximum x-axis distance to merge boxes. (default=20)')

|

| 1081 |

-

|

| 1082 |

-

with col2.expander("Choose detection hyperparameters for " + reader_type_list[3], \

|

| 1083 |

-

expanded=False):

|

| 1084 |

-

t3_psm = st.selectbox('Page segmentation mode (psm)', \

|

| 1085 |

-

[' - Default', \

|

| 1086 |

-

' 4 Assume a single column of text of variable sizes', \

|

| 1087 |

-

' 5 Assume a single uniform block of vertically aligned text', \

|

| 1088 |

-

' 6 Assume a single uniform block of text', \

|

| 1089 |

-

' 7 Treat the image as a single text line', \

|

| 1090 |

-

' 8 Treat the image as a single word', \

|

| 1091 |

-

' 9 Treat the image as a single word in a circle', \

|

| 1092 |

-

'10 Treat the image as a single character', \

|

| 1093 |

-

'11 Sparse text. Find as much text as possible in no \

|

| 1094 |

-

particular order', \

|

| 1095 |

-

'13 Raw line. Treat the image as a single text line, \

|

| 1096 |

-

bypassing hacks that are Tesseract-specific'])

|

| 1097 |

-

t3_oem = st.selectbox('OCR engine mode', ['0 Legacy engine only', \

|

| 1098 |

-

'1 Neural nets LSTM engine only', \

|

| 1099 |

-

'2 Legacy + LSTM engines', \

|

| 1100 |

-

'3 Default, based on what is available'], 3)

|

| 1101 |

-

t3_whitelist = st.text_input('Limit tesseract to recognize only this characters :', \

|

| 1102 |

-

placeholder='Limit tesseract to recognize only this characters', \

|

| 1103 |

-

help='Example for numbers only : 0123456789')

|

| 1104 |

-

|

| 1105 |

-

color_hex = col2.color_picker('Set a color for box outlines:', '#004C99')

|

| 1106 |

-

color_part = color_hex.lstrip('#')

|

| 1107 |

-

color = tuple(int(color_part[i:i+2], 16) for i in (0, 2, 4))

|
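The two lines above convert the colour picker's hex string into an RGB tuple for the box outlines. The same logic as a standalone function, shown here only as an illustration (the name `hex_to_rgb` is hypothetical):

```python
from typing import Tuple


def hex_to_rgb(color_hex: str) -> Tuple[int, int, int]:
    """Convert '#RRGGBB' to an (R, G, B) tuple of ints in 0..255."""
    color_part = color_hex.lstrip('#')
    if len(color_part) != 6:
        raise ValueError("expected a colour of the form '#RRGGBB'")
    # Each pair of hex digits is one channel: red, green, blue.
    r, g, b = (int(color_part[i:i + 2], 16) for i in (0, 2, 4))
    return (r, g, b)
```

With the picker's default value, `hex_to_rgb('#004C99')` gives `(0, 76, 153)`.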

| 1108 |

-

|

| 1109 |

-

submit_detect = st.form_submit_button("Launch detection")

|

| 1110 |

-

|

| 1111 |

-

##----------- Process text detection --------------------------------------------------------------

|

| 1112 |

-

if submit_detect:

|

| 1113 |

-

# Process text detection

|

| 1114 |

-

|

| 1115 |

-

if t0_optimal_num_chars == 0:

|

| 1116 |

-

t0_optimal_num_chars = None

|

| 1117 |

-

|

| 1118 |

-

# Construct the config Tesseract parameter

|

| 1119 |

-

t3_config = ''

|

| 1120 |

-

psm = t3_psm[:2]

|

| 1121 |

-

if psm != ' -':

|

| 1122 |

-

t3_config += '--psm ' + psm.strip()

|

| 1123 |

-

oem = t3_oem[:1]

|

| 1124 |

-

if oem != '3':

|

| 1125 |

-

t3_config += ' --oem ' + oem

|

| 1126 |

-

if t3_whitelist != '':

|

| 1127 |

-

t3_config += ' -c tessedit_char_whitelist=' + t3_whitelist

|
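The block above assembles the Tesseract `config` string from the three form widgets. A sketch of the same steps as a pure function, which makes the behaviour easy to check; the function name is hypothetical, and the final `strip()` is an addition that drops the leading space left when only `--oem` or the whitelist is set.

```python
def build_tesseract_config(psm_choice: str, oem_choice: str, whitelist: str = "") -> str:
    """Build a Tesseract config string from the selectbox labels used in the form."""
    config = ""
    psm = psm_choice[:2]            # labels start with a 2-character mode, ' -' = default
    if psm != " -":
        config += "--psm " + psm.strip()
    oem = oem_choice[:1]            # labels start with a 1-character engine mode
    if oem != "3":                  # 3 is the default engine mode, so it is omitted
        config += " --oem " + oem
    if whitelist != "":
        config += " -c tessedit_char_whitelist=" + whitelist
    return config.strip()           # addition: avoid a leading space
```

For example, choosing mode 6, the LSTM engine and a digits-only whitelist yields `--psm 6 --oem 1 -c tessedit_char_whitelist=0123456789`.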

| 1128 |

-

|

| 1129 |

-

list_params_det = \

|

| 1130 |

-

[[easyocr_lang, \

|

| 1131 |

-

{'min_size': t0_min_size, 'text_threshold': t0_text_threshold, \

|

| 1132 |

-

'low_text': t0_low_text, 'link_threshold': t0_link_threshold, \

|

| 1133 |

-

'canvas_size': t0_canvas_size, 'mag_ratio': t0_mag_ratio, \

|

| 1134 |

-

'slope_ths': t0_slope_ths, 'ycenter_ths': t0_ycenter_ths, \

|

| 1135 |

-

'height_ths': t0_height_ths, 'width_ths': t0_width_ths, \

|

| 1136 |

-

'add_margin': t0_add_margin, 'optimal_num_chars': t0_optimal_num_chars \

|

| 1137 |

-

}], \

|

| 1138 |

-

[ppocr_lang, \

|

| 1139 |

-

{'det_algorithm': t1_det_algorithm, 'det_max_side_len': t1_det_max_side_len, \

|

| 1140 |

-

'det_db_thresh': t1_det_db_thresh, 'det_db_box_thresh': t1_det_db_box_thresh, \

|

| 1141 |

-

'det_db_unclip_ratio': t1_det_db_unclip_ratio, \

|

| 1142 |

-

'det_east_score_thresh': t1_det_east_score_thresh, \

|

| 1143 |

-

'det_east_cover_thresh': t1_det_east_cover_thresh, \

|

| 1144 |

-

'det_east_nms_thresh': t1_det_east_nms_thresh, \

|

| 1145 |

-

'det_db_score_mode': t1_det_db_score_mode}],

|

| 1146 |

-

[mmocr_lang, {'det': t2_det, 'merge_xdist': t2_merge_xdist}],

|

| 1147 |

-

[tesserocr_lang, {'lang': tesserocr_lang, 'config': t3_config}]

|

| 1148 |

-

]

|

| 1149 |

-

|

| 1150 |

-

show_info1 = st.empty()

|

| 1151 |

-

show_info1.info("Readers initializations in progress (it may take a while) ...")

|

| 1152 |

-

list_readers = init_readers(list_params_det)

|

| 1153 |

-

|

| 1154 |

-

show_info1.info("Text detection in progress ...")

|

| 1155 |

-

list_images, list_coordinates = process_detect(image_path, list_images, list_readers, \

|

| 1156 |

-

list_params_det, color)

|

| 1157 |

-

show_info1.empty()

|

| 1158 |

-

|

| 1159 |

-

# Clear previous recognition results

|

| 1160 |

-

st.session_state.df_results = pd.DataFrame([])

|

| 1161 |

-

|

| 1162 |

-

st.session_state.list_readers = list_readers

|

| 1163 |

-

st.session_state.list_coordinates = list_coordinates

|

| 1164 |

-

st.session_state.list_images = list_images

|

| 1165 |

-

st.session_state.list_params_det = list_params_det

|

| 1166 |

-

|

| 1167 |

-

if 'columns_size' not in st.session_state:

|

| 1168 |

-

st.session_state.columns_size = [2] + [1 for x in reader_type_list[1:]]

|

| 1169 |

-

if 'column_width' not in st.session_state:

|

| 1170 |

-

st.session_state.column_width = [500] + [400 for x in reader_type_list[1:]]

|

| 1171 |

-

if 'columns_color' not in st.session_state:

|

| 1172 |

-

st.session_state.columns_color = ["rgb(228,26,28)"] + \

|

| 1173 |

-

["rgb(0,0,0)" for x in reader_type_list[1:]]

|
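The three `if ... not in st.session_state` checks above set display defaults only once, so later reruns keep whatever the user has changed. A minimal sketch of that pattern, with a plain dict standing in for `st.session_state`; the helper name and the reader list in the usage note are assumptions for illustration.

```python
from typing import Any, List, MutableMapping


def init_display_state(state: MutableMapping[str, Any], reader_types: List[str]) -> None:
    """Set per-column display defaults without overwriting existing values."""
    others = reader_types[1:]
    # The first column is emphasised: wider, and titled in red.
    state.setdefault("columns_size", [2] + [1 for _ in others])
    state.setdefault("column_width", [500] + [400 for _ in others])
    state.setdefault("columns_color",
                     ["rgb(228,26,28)"] + ["rgb(0,0,0)" for _ in others])
```

Called with four readers, for instance `["EasyOCR", "PPOCR", "MMOCR", "Tesseract"]`, this produces `columns_size == [2, 1, 1, 1]` on the first call and leaves the values untouched afterwards.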

| 1174 |

-

|

| 1175 |

-

if st.session_state.list_coordinates:

|

| 1176 |

-

list_coordinates = st.session_state.list_coordinates

|

| 1177 |

-

list_images = st.session_state.list_images

|

| 1178 |

-

list_readers = st.session_state.list_readers

|

| 1179 |

-

list_params_det = st.session_state.list_params_det

|

| 1180 |

-

|

| 1181 |

-

##----------- Text detection results --------------------------------------------------------------

|

| 1182 |

-

st.subheader("Text detection")

|

| 1183 |

-

show_detect = st.empty()

|

| 1184 |

-

list_ok_detect = []

|

| 1185 |

-

with show_detect.container():

|

| 1186 |

-

columns = st.columns(st.session_state.columns_size, ) #gap='medium')

|

| 1187 |

-

for no_col, col in enumerate(columns):

|

| 1188 |

-

column_title = '<p style="font-size: 20px;color:' + \

|

| 1189 |

-

st.session_state.columns_color[no_col] + \

|

| 1190 |

-

';">Detection with ' + reader_type_list[no_col]+ '</p>'

|

| 1191 |

-

col.markdown(column_title, unsafe_allow_html=True)

|

| 1192 |

-

if isinstance(list_images[no_col+2], PIL.Image.Image):

|

| 1193 |

-

col.image(list_images[no_col+2], width=st.session_state.column_width[no_col], \

|

| 1194 |

-

use_column_width=True)

|

| 1195 |

-

list_ok_detect.append(reader_type_list[no_col])

|

| 1196 |

-

else:

|

| 1197 |

-

col.write(list_images[no_col+2], use_column_width=True)

|

| 1198 |

-

|

| 1199 |

-

st.subheader("Text recognition")

|

| 1200 |

|

| 1201 |

-

|

| 1202 |

-

st.

|

| 1203 |

-

|

|

|

|

|

|

|

|

|

|

| 1204 |

|

| 1205 |

-

|

| 1206 |

-

st.markdown("##### Hyperparameters values for recognition:")

|

| 1207 |

-

with st.form("form2"):

|

| 1208 |

-

with st.expander("Choose recognition hyperparameters for " + reader_type_list[0], \

|

| 1209 |

expanded=False):

|

| 1210 |

-

|

| 1211 |

-

|

| 1212 |

-

|

| 1213 |

-

|

| 1214 |

-

|

| 1215 |

-

|

| 1216 |

-

|

| 1217 |

-

|

| 1218 |

-

|

| 1219 |

-

|

| 1220 |

-

|

| 1221 |

-

|

| 1222 |

-

|

| 1223 |

-

|

| 1224 |

-

|

| 1225 |

-

|

| 1226 |

-

|

| 1227 |

-

|

| 1228 |

-

|

| 1229 |

-

|

| 1230 |

-

|

| 1231 |

-

|

| 1232 |

-

|

| 1233 |

-

|

| 1234 |

-

|

| 1235 |

-

|

| 1236 |

-

|

| 1237 |

-

|

| 1238 |

-

|

| 1239 |

-

|

| 1240 |

-

|

| 1241 |

-

|

| 1242 |

-

|

|

|

|

|

|

|

|

|

|

| 1243 |

expanded=False):

|

| 1244 |

-

|

| 1245 |

-

|

| 1246 |

-

|

| 1247 |

-

|

| 1248 |

-

|

| 1249 |

-

|

| 1250 |

-

|

| 1251 |

-

|

| 1252 |

-

|

| 1253 |

-

|

| 1254 |

-

|

| 1255 |

-

|

| 1256 |

-

|

| 1257 |

-

|

| 1258 |

-

|


| 1259 |

expanded=False):

|

| 1260 |

-

|

| 1261 |

-

|

| 1262 |

-

|

| 1263 |

-

|

| 1264 |

-

|

| 1265 |

-

|

| 1266 |

-

|

| 1267 |

-

|

|

|

|

|

|

|

|

|

|

| 1268 |

expanded=False):

|

| 1269 |

-

|

| 1270 |

-

|

| 1271 |

-

|

| 1272 |

-

|

| 1273 |

-

|

| 1274 |

-

|

| 1275 |

-

|

| 1276 |

-

|

| 1277 |

-

|

| 1278 |

-

|

| 1279 |

-

'11 Sparse text. Find as much text as possible in no \

|

| 1280 |

particular order', \

|

| 1281 |

-

|

| 1282 |

bypassing hacks that are Tesseract-specific'])

|

| 1283 |

-

|

| 1284 |

-

|

| 1285 |

-

|

| 1286 |

-

|

| 1287 |

-

|

| 1288 |

-

|

| 1289 |

-

|

| 1290 |

-

|

| 1291 |

-

|

| 1292 |

-

|

| 1293 |

-

|

| 1294 |

-

|

| 1295 |

-

|

| 1296 |

-

|

| 1297 |

-

|

| 1298 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1299 |

|

| 1300 |

# Construct the config Tesseract parameter

|

| 1301 |

-

|

| 1302 |

-

psm =

|

| 1303 |

if psm != ' -':

|

| 1304 |

-

|

| 1305 |

-

oem =

|

| 1306 |

if oem != '3':

|

| 1307 |

-

|

| 1308 |

-

if

|

| 1309 |

-

|

| 1310 |

-

|

| 1311 |

-

|

| 1312 |

-

[

|

| 1313 |

-

|

| 1314 |

-

|

| 1315 |

-

|

| 1316 |

-

|

| 1317 |

-

|

| 1318 |

-

|

| 1319 |

-

|

| 1320 |

-

|

| 1321 |

-

|

| 1322 |

-

|

| 1323 |

-

|

| 1324 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1325 |

]

|

| 1326 |

|

| 1327 |

-

|

| 1328 |

-

|

| 1329 |

-

|

| 1330 |

-

|

| 1331 |

-

|

| 1332 |

-

|

| 1333 |

-

|

| 1334 |

-

|

| 1335 |

-

|

| 1336 |

-

|

| 1337 |

-

st.session_state.

|

| 1338 |

-

|

| 1339 |

-

|

| 1340 |

-

|

| 1341 |

-

|

| 1342 |

-

|

| 1343 |

-

|

| 1344 |

-

|

| 1345 |

-

|

| 1346 |

-

|

| 1347 |

-

|

| 1348 |

-

|

| 1349 |

-

|

| 1350 |

-

|

| 1351 |

-

|

| 1352 |

-

|

| 1353 |

-

|

| 1354 |

-

|

| 1355 |

-

|

| 1356 |

-

|

| 1357 |

-

|

| 1358 |

-

|

| 1359 |

-

|

| 1360 |

-

|

| 1361 |

-

|

| 1362 |

-

|

| 1363 |

-

|

| 1364 |

-

|

| 1365 |

-

|

| 1366 |

-

|

| 1367 |

-

|

| 1368 |

-

|

| 1369 |

-

|

| 1370 |

-

|

| 1371 |

-

|

| 1372 |

-

|

| 1373 |

-

|

| 1374 |

-

|

| 1375 |

-

|

| 1376 |

-

|

| 1377 |

-

|

| 1378 |

-

|

| 1379 |

-

|

| 1380 |

-

|

| 1381 |

-

|

| 1382 |

-

|

| 1383 |

-

|

| 1384 |

-

|

| 1385 |

-

|

| 1386 |

-

|

| 1387 |

-

|


| 1388 |

cols = st.columns(cols_size)

|

| 1389 |

-

cols[0].image(row.cropped_image, width=150)

|

| 1390 |

-

for ind_col in range(1, len(cols), 2):

|

| 1391 |

-

cols[ind_col].write(getattr(row, results_cols[ind_col]))

|

| 1392 |

-

cols[ind_col+1].write("("+str( \

|

| 1393 |

-

getattr(row, results_cols[ind_col+1]))+"%)")

|

| 1394 |

-

|

| 1395 |

-

st.download_button(

|

| 1396 |

-

label="Download results as CSV file",

|

| 1397 |

-

data=convert_df(st.session_state.df_results),

|

| 1398 |

-

file_name='OCR_comparator_results.csv',

|

| 1399 |

-

mime='text/csv',

|

| 1400 |

-

)

|

| 1401 |

-

|

| 1402 |

-

if not st.session_state.df_results_tesseract.empty:

|

| 1403 |

-

with st.expander("Detailed areas for Tesseract", expanded=False):

|

| 1404 |

-

cols = st.columns([2,2,1])

|

| 1405 |

cols[0].markdown('#### Detected area')

|

| 1406 |

-

|

| 1407 |

-

|

| 1408 |

-

|

| 1409 |

-

|

| 1410 |

-

cols

|

| 1411 |

-

cols

|

| 1412 |

-

cols[

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1413 |

|

| 1414 |

st.download_button(

|

| 1415 |

-

label="Download

|

| 1416 |

data=convert_df(st.session_state.df_results),

|

| 1417 |

-

file_name='

|

| 1418 |

mime='text/csv',

|

| 1419 |

)

|


| 929 |

###################################################################################################

|

| 930 |

## MAIN

|

| 931 |

###################################################################################################

|

| 932 |

+

def app():

|

| 933 |

+

##----------- Initializations ---------------------------------------------------------------------

|

| 934 |

+

#print("PID : ", os.getpid())

|

| 935 |

+

|

| 936 |

+

st.title("OCR solutions comparator")

|

| 937 |

+

st.markdown("##### *EasyOCR, PPOCR, MMOCR, Tesseract*")

|

| 938 |

+

#st.markdown("#### PID : " + str(os.getpid()))

|

| 939 |

+

|

| 940 |

+

# Initializations

|

| 941 |

+

with st.spinner("Initializations in progress ..."):

|

| 942 |

+

reader_type_list, reader_type_dict, list_dict_lang, \

|

| 943 |

+

cols_size, dict_back_colors, fig_colorscale = initializations()

|

| 944 |

+

img_demo_1, img_demo_2 = get_demo()

|

| 945 |

+

|

| 946 |

+

##----------- Choose language & image -------------------------------------------------------------

|

| 947 |

+

st.markdown("#### Choose languages for the text recognition:")

|

| 948 |

+

lang_col = st.columns(4)

|

| 949 |

+

easyocr_key_lang = lang_col[0].selectbox(reader_type_list[0]+" :", list_dict_lang[0].keys(), 26)

|

| 950 |

+

easyocr_lang = list_dict_lang[0][easyocr_key_lang]

|

| 951 |

+

ppocr_key_lang = lang_col[1].selectbox(reader_type_list[1]+" :", list_dict_lang[1].keys(), 22)

|

| 952 |

+

ppocr_lang = list_dict_lang[1][ppocr_key_lang]

|

| 953 |

+

mmocr_key_lang = lang_col[2].selectbox(reader_type_list[2]+" :", list_dict_lang[2].keys(), 0)

|

| 954 |

+

mmocr_lang = list_dict_lang[2][mmocr_key_lang]

|

| 955 |

+

tesserocr_key_lang = lang_col[3].selectbox(reader_type_list[3]+" :", list_dict_lang[3].keys(), 35)

|

| 956 |

+

tesserocr_lang = list_dict_lang[3][tesserocr_key_lang]

|

| 957 |

+

|

| 958 |

+

st.markdown("#### Choose picture:")

|

| 959 |

+

cols_pict = st.columns([1, 2])

|

| 960 |

+

img_typ = cols_pict[0].radio("", ['Upload file', 'Take a picture', 'Use a demo file'], \

|

| 961 |

+

index=0, on_change=raz)

|

| 962 |

+

|

| 963 |

+

if img_typ == 'Upload file':

|

| 964 |

+

image_file = cols_pict[1].file_uploader("Upload a file:", type=["jpg","jpeg"], on_change=raz)

|

| 965 |

+

if img_typ == 'Take a picture':

|

| 966 |

+

image_file = cols_pict[1].camera_input("Take a picture:", on_change=raz)

|

| 967 |

+

if img_typ == 'Use a demo file':

|

| 968 |

+

with st.expander('Choose a demo file:', expanded=True):

|

| 969 |

+

demo_used = st.radio('', ['File 1', 'File 2'], index=0, \

|

| 970 |

+

horizontal=True, on_change=raz)

|

| 971 |

+

cols_demo = st.columns([1, 2])

|

| 972 |

+

cols_demo[0].markdown('###### File 1')

|

| 973 |

+

cols_demo[0].image(img_demo_1, width=150)

|

| 974 |

+

cols_demo[1].markdown('###### File 2')

|

| 975 |

+

cols_demo[1].image(img_demo_2, width=300)

|

| 976 |

+

if demo_used == 'File 1':

|

| 977 |

+

image_file = 'img_demo_1.jpg'

|

| 978 |

+

else:

|

| 979 |

+

image_file = 'img_demo_2.jpg'

|

| 980 |

|

| 981 |

+

##----------- Process input image -----------------------------------------------------------------

|

| 982 |

+

if image_file is not None:

|

| 983 |

+

image_path, image_orig, image_cv2 = load_image(image_file)

|

| 984 |

+

list_images = [image_orig, image_cv2]

|


| 985 |

|

| 986 |

+

##----------- Form with original image & hyperparameters for detectors ----------------------------

|

| 987 |

+

with st.form("form1"):

|

| 988 |

+

col1, col2 = st.columns(2, ) #gap="medium")

|

| 989 |

+

col1.markdown("##### Original image")

|

| 990 |

+

col1.image(list_images[0], width=500, use_column_width=True)

|

| 991 |

+

col2.markdown("##### Hyperparameters values for detection")

|

| 992 |

|

| 993 |

+

with col2.expander("Choose detection hyperparameters for " + reader_type_list[0], \

|


| 994 |

expanded=False):

|

| 995 |

+

t0_min_size = st.slider("min_size", 1, 20, 10, step=1, \

|

| 996 |

+

help="min_size (int, default = 10) - Filter text box smaller than \

|

| 997 |

+

minimum value in pixel")

|

| 998 |

+

t0_text_threshold = st.slider("text_threshold", 0.1, 1., 0.7, step=0.1, \

|

| 999 |

+

help="text_threshold (float, default = 0.7) - Text confidence threshold")

|

| 1000 |

+

t0_low_text = st.slider("low_text", 0.1, 1., 0.4, step=0.1, \

|

| 1001 |

+

help="low_text (float, default = 0.4) - Text low-bound score")

|

| 1002 |

+

t0_link_threshold = st.slider("link_threshold", 0.1, 1., 0.4, step=0.1, \

|

| 1003 |

+

help="link_threshold (float, default = 0.4) - Link confidence threshold")

|

| 1004 |

+

t0_canvas_size = st.slider("canvas_size", 2000, 5000, 2560, step=10, \

|

| 1005 |

+

help='''canvas_size (int, default = 2560) \n

|

| 1006 |

+

Maximum image size. Image bigger than this value will be resized down''')

|

| 1007 |

+

t0_mag_ratio = st.slider("mag_ratio", 0.1, 5., 1., step=0.1, \

|

| 1008 |

+

help="mag_ratio (float, default = 1) - Image magnification ratio")

|

| 1009 |

+

t0_slope_ths = st.slider("slope_ths", 0.01, 1., 0.1, step=0.01, \

|

| 1010 |

+

help='''slope_ths (float, default = 0.1) - Maximum slope \

|

| 1011 |

+

(delta y/delta x) to be considered for merging. \n

|

| 1012 |

+

Low value means tilted boxes will not be merged.''')

|

| 1013 |

+

t0_ycenter_ths = st.slider("ycenter_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1014 |

+

help='''ycenter_ths (float, default = 0.5) - Maximum shift in y direction. \n

|

| 1015 |

+

Boxes with different level should not be merged.''')

|

| 1016 |

+

t0_height_ths = st.slider("height_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1017 |

+

help='''height_ths (float, default = 0.5) - Maximum difference in box height. \n

|

| 1018 |

+

Boxes with very different text size should not be merged.''')

|

| 1019 |

+

t0_width_ths = st.slider("width_ths", 0.1, 1., 0.5, step=0.1, \

|

| 1020 |

+

help="width_ths (float, default = 0.5) - Maximum horizontal \

|

| 1021 |

+

distance to merge boxes.")

|

| 1022 |

+

t0_add_margin = st.slider("add_margin", 0.1, 1., 0.1, step=0.1, \

|

| 1023 |

+

help='''add_margin (float, default = 0.1) - \

|

| 1024 |

+

Extend bounding boxes in all direction by certain value. \n

|

| 1025 |

+

This is important for language with complex script (E.g. Thai).''')

|

| 1026 |

+

t0_optimal_num_chars = st.slider("optimal_num_chars", None, 100, None, step=10, \

|

| 1027 |

+

help="optimal_num_chars (int, default = None) - If specified, bounding boxes \

|

| 1028 |

+

with estimated number of characters near this value are returned first.")

|

| 1029 |

+

|

| 1030 |

+

with col2.expander("Choose detection hyperparameters for " + reader_type_list[1], \

|

| 1031 |

expanded=False):

|

| 1032 |

+

t1_det_algorithm = st.selectbox('det_algorithm', ['DB'], \

|

| 1033 |

+

help='Type of detection algorithm selected. (default = DB)')

|

| 1034 |

+

t1_det_max_side_len = st.slider('det_max_side_len', 500, 2000, 960, step=10, \

|

| 1035 |

+

help='''The maximum size of the long side of the image. (default = 960)\n

|

| 1036 |

+

Limit the maximum image height and width.\n

|

| 1037 |

+

When the long side exceeds this value, the long side will be resized to this size, and the short side \

|

| 1038 |

+

will be scaled proportionally.''')

|

| 1039 |

+

t1_det_db_thresh = st.slider('det_db_thresh', 0.1, 1., 0.3, step=0.1, \

|

| 1040 |

+

help='''Binarization threshold value of DB output map. (default = 0.3) \n

|

| 1041 |

+

Used to filter the binarized image of DB prediction, setting 0.-0.3 has no obvious effect on the result.''')

|

| 1042 |

+

t1_det_db_box_thresh = st.slider('det_db_box_thresh', 0.1, 1., 0.6, step=0.1, \

|

| 1043 |

+

help='''The threshold value of the DB output box. (default = 0.6) \n

|

| 1044 |

+

DB post-processing filter box threshold, if there is a missing box detected, it can be reduced as appropriate. \n

|

| 1045 |

+

Boxes score lower than this value will be discarded.''')

|

| 1046 |

+

t1_det_db_unclip_ratio = st.slider('det_db_unclip_ratio', 1., 3.0, 1.6, step=0.1, \

|

| 1047 |

+

help='''The expanded ratio of DB output box. (default = 1.6) \n

|

| 1048 |

+

Indicates the compactness of the text box, the smaller the value, the closer the text box to the text.''')

|

| 1049 |

+

t1_det_east_score_thresh = st.slider('det_east_score_thresh', 0.1, 1., 0.8, step=0.1, \

|

| 1050 |

+

help="Binarization threshold value of EAST output map. (default = 0.8)")

|

| 1051 |

+

t1_det_east_cover_thresh = st.slider('det_east_cover_thresh', 0.1, 1., 0.1, step=0.1, \

|

| 1052 |

+

help='''The threshold value of the EAST output box. (default = 0.1) \n

|

| 1053 |

+

Boxes score lower than this value will be discarded.''')

|

| 1054 |

+

t1_det_east_nms_thresh = st.slider('det_east_nms_thresh', 0.1, 1., 0.2, step=0.1, \

|

| 1055 |

+

help="The NMS threshold value of EAST model output box. (default = 0.2)")

|

| 1056 |

+

t1_det_db_score_mode = st.selectbox('det_db_score_mode', ['fast', 'slow'], \

|

| 1057 |

+

help='''slow: use polygon box to calculate bbox score, fast: use rectangle box \

|

| 1058 |

+

to calculate. (default = fast) \n

|

| 1059 |

+

Use rectangular box to calculate faster, and polygonal box more accurate for curved text area.''')

|

| 1060 |

+

|

| 1061 |

+

with col2.expander("Choose detection hyperparameters for " + reader_type_list[2], \

|

| 1062 |

expanded=False):

|

| 1063 |

+

t2_det = st.selectbox('det', ['DB_r18','DB_r50','DBPP_r50','DRRG','FCE_IC15', \

|

| 1064 |

+

'FCE_CTW_DCNv2','MaskRCNN_CTW','MaskRCNN_IC15', \

|

| 1065 |

+

'MaskRCNN_IC17', 'PANet_CTW','PANet_IC15','PS_CTW',\

|

| 1066 |

+

'PS_IC15','Tesseract','TextSnake'], 10, \

|

| 1067 |

+

help='Text detection algorithm. (default = PANet_IC15)')

|

| 1068 |

+

st.write("###### *More about text detection models* 👉 \

|

| 1069 |

+

[here](https://mmocr.readthedocs.io/en/latest/textdet_models.html)")

|

| 1070 |

+

t2_merge_xdist = st.slider('merge_xdist', 1, 50, 20, step=1, \

|

| 1071 |

+

help='The maximum x-axis distance to merge boxes. (default = 20)')

|

| 1072 |

+

|

| 1073 |

+

with col2.expander("Choose detection hyperparameters for " + reader_type_list[3], \

|

| 1074 |

expanded=False):

|

| 1075 |

+

t3_psm = st.selectbox('Page segmentation mode (psm)', \

|

| 1076 |

+

[' - Default', \

|

| 1077 |

+

' 4 Assume a single column of text of variable sizes', \

|

| 1078 |

+

' 5 Assume a single uniform block of vertically aligned text', \

|

| 1079 |

+

' 6 Assume a single uniform block of text', \

|

| 1080 |

+

' 7 Treat the image as a single text line', \

|

| 1081 |

+

' 8 Treat the image as a single word', \

|

| 1082 |

+

' 9 Treat the image as a single word in a circle', \

|

| 1083 |

+

'10 Treat the image as a single character', \

|

| 1084 |

+

'11 Sparse text. Find as much text as possible in no \

|


| 1085 |

particular order', \

|

| 1086 |

+

'13 Raw line. Treat the image as a single text line, \

|

| 1087 |

bypassing hacks that are Tesseract-specific'])

|

| 1088 |

+

t3_oem = st.selectbox('OCR engine mode', ['0 Legacy engine only', \

|

| 1089 |

+

'1 Neural nets LSTM engine only', \

|

| 1090 |

+

'2 Legacy + LSTM engines', \

|

| 1091 |

+

'3 Default, based on what is available'], 3)

|

| 1092 |

+

t3_whitelist = st.text_input('Limit Tesseract to recognize only these characters:', \

|

| 1093 |

+

placeholder='Limit Tesseract to recognize only these characters', \

|

| 1094 |

+

help='Example for numbers only : 0123456789')

|

| 1095 |

+

|

| 1096 |

+

color_hex = col2.color_picker('Set a color for box outlines:', '#004C99')

|

| 1097 |

+

color_part = color_hex.lstrip('#')

|

| 1098 |

+

color = tuple(int(color_part[i:i+2], 16) for i in (0, 2, 4))

|

| 1099 |

+

|

| 1100 |

+

submit_detect = st.form_submit_button("Launch detection")

|

| 1101 |

+

|

| 1102 |

+

##----------- Process text detection --------------------------------------------------------------

|

| 1103 |

+

if submit_detect:

|

| 1104 |

+

# Process text detection

|

| 1105 |

+

|

| 1106 |

+

if t0_optimal_num_chars == 0:

|

| 1107 |

+

t0_optimal_num_chars = None

|

| 1108 |

|

| 1109 |

# Construct the config Tesseract parameter

|

| 1110 |

+

t3_config = ''

|

| 1111 |

+

psm = t3_psm[:2]

|

| 1112 |

if psm != ' -':

|

| 1113 |

+

t3_config += '--psm ' + psm.strip()

|

| 1114 |

+

oem = t3_oem[:1]

|

| 1115 |

if oem != '3':

|

| 1116 |

+

t3_config += ' --oem ' + oem

|

| 1117 |

+

if t3_whitelist != '':

|

| 1118 |

+

t3_config += ' -c tessedit_char_whitelist=' + t3_whitelist

|

| 1119 |

+

|

| 1120 |

+

list_params_det = \

|

| 1121 |

+

[[easyocr_lang, \

|

| 1122 |

+

{'min_size': t0_min_size, 'text_threshold': t0_text_threshold, \

|

| 1123 |

+

'low_text': t0_low_text, 'link_threshold': t0_link_threshold, \

|

| 1124 |

+

'canvas_size': t0_canvas_size, 'mag_ratio': t0_mag_ratio, \

|

| 1125 |

+

'slope_ths': t0_slope_ths, 'ycenter_ths': t0_ycenter_ths, \

|

| 1126 |

+

'height_ths': t0_height_ths, 'width_ths': t0_width_ths, \

|

| 1127 |

+

'add_margin': t0_add_margin, 'optimal_num_chars': t0_optimal_num_chars \

|

| 1128 |

+

}], \

|

| 1129 |

+

[ppocr_lang, \

|

| 1130 |

+

{'det_algorithm': t1_det_algorithm, 'det_max_side_len': t1_det_max_side_len, \

|

| 1131 |

+

'det_db_thresh': t1_det_db_thresh, 'det_db_box_thresh': t1_det_db_box_thresh, \

|

| 1132 |

+

'det_db_unclip_ratio': t1_det_db_unclip_ratio, \

|

| 1133 |

+

'det_east_score_thresh': t1_det_east_score_thresh, \

|

| 1134 |

+

'det_east_cover_thresh': t1_det_east_cover_thresh, \

|

| 1135 |

+

'det_east_nms_thresh': t1_det_east_nms_thresh, \

|

| 1136 |

+

'det_db_score_mode': t1_det_db_score_mode}],

|

| 1137 |

+

[mmocr_lang, {'det': t2_det, 'merge_xdist': t2_merge_xdist}],

|

| 1138 |

+

[tesserocr_lang, {'lang': tesserocr_lang, 'config': t3_config}]

|

| 1139 |

]

|

| 1140 |

|

| 1141 |

+

show_info1 = st.empty()

|

| 1142 |

+

show_info1.info("Readers initializations in progress (it may take a while) ...")

|

| 1143 |

+

list_readers = init_readers(list_params_det)

|

| 1144 |

+

|

| 1145 |

+

show_info1.info("Text detection in progress ...")

|

| 1146 |

+

list_images, list_coordinates = process_detect(image_path, list_images, list_readers, \

|

| 1147 |

+

list_params_det, color)

|

| 1148 |

+

show_info1.empty()

|

| 1149 |

+

|

| 1150 |

+

# Clear previous recognition results

|

| 1151 |

+

st.session_state.df_results = pd.DataFrame([])

|

| 1152 |

+

|

| 1153 |

+

st.session_state.list_readers = list_readers

|

| 1154 |

+

st.session_state.list_coordinates = list_coordinates

|

| 1155 |

+

st.session_state.list_images = list_images

|

| 1156 |

+

st.session_state.list_params_det = list_params_det

|

| 1157 |

+

|

| 1158 |

+

if 'columns_size' not in st.session_state:

|

| 1159 |

+

st.session_state.columns_size = [2] + [1 for x in reader_type_list[1:]]

|

| 1160 |

+

if 'column_width' not in st.session_state:

|

| 1161 |

+

st.session_state.column_width = [500] + [400 for x in reader_type_list[1:]]

|

| 1162 |

+

if 'columns_color' not in st.session_state:

|

| 1163 |

+

st.session_state.columns_color = ["rgb(228,26,28)"] + \

|

| 1164 |

+

["rgb(0,0,0)" for x in reader_type_list[1:]]

|

| 1165 |

+

|

| 1166 |

+

if st.session_state.list_coordinates:

|

| 1167 |

+

list_coordinates = st.session_state.list_coordinates

|

| 1168 |

+

list_images = st.session_state.list_images

|

| 1169 |

+

list_readers = st.session_state.list_readers

|

| 1170 |

+

list_params_det = st.session_state.list_params_det

|

| 1171 |

+

|

| 1172 |

+

##----------- Text detection results --------------------------------------------------------------

|

| 1173 |

+

st.subheader("Text detection")

|

| 1174 |

+

show_detect = st.empty()

|

| 1175 |

+

list_ok_detect = []

|

| 1176 |

+

with show_detect.container():

|

| 1177 |

+

columns = st.columns(st.session_state.columns_size, ) #gap='medium')

|

| 1178 |

+

for no_col, col in enumerate(columns):

|

| 1179 |

+

column_title = '<p style="font-size: 20px;color:' + \

|

| 1180 |

+

st.session_state.columns_color[no_col] + \

|

| 1181 |

+

';">Detection with ' + reader_type_list[no_col]+ '</p>'

|

| 1182 |

+

col.markdown(column_title, unsafe_allow_html=True)

|

| 1183 |

+

if isinstance(list_images[no_col+2], PIL.Image.Image):

|

| 1184 |

+

col.image(list_images[no_col+2], width=st.session_state.column_width[no_col], \

|

| 1185 |

+

use_column_width=True)

|

| 1186 |

+

list_ok_detect.append(reader_type_list[no_col])

|

| 1187 |

+

else:

|

| 1188 |

+

col.write(list_images[no_col+2], use_column_width=True)

|

| 1189 |

+

|

| 1190 |

+

st.subheader("Text recognition")

|

| 1191 |

+

|

| 1192 |

+

st.markdown("##### Using detection performed above by:")

|

| 1193 |

+

st.radio('Choose the detector:', list_ok_detect, key='detect_reader', \

|

| 1194 |

+

horizontal=True, on_change=highlight)

|

| 1195 |

+

|

| 1196 |

+

##----------- Form with hyperparameters for recognition -----------------------

|

| 1197 |

+

st.markdown("##### Hyperparameters values for recognition:")

|

| 1198 |

+

with st.form("form2"):

|

| 1199 |

+

with st.expander("Choose recognition hyperparameters for " + reader_type_list[0], \

|

| 1200 |

+

expanded=False):

|

| 1201 |

+