Commit 7cbaeb9

Parent(s): 559bee8

New Release

- LICENSE +437 -0

- README.md +7 -5

- app.py +357 -0

- code/bezier.py +122 -0

- code/config/base.yaml +50 -0

- code/data/fonts/Bell MT.ttf +0 -0

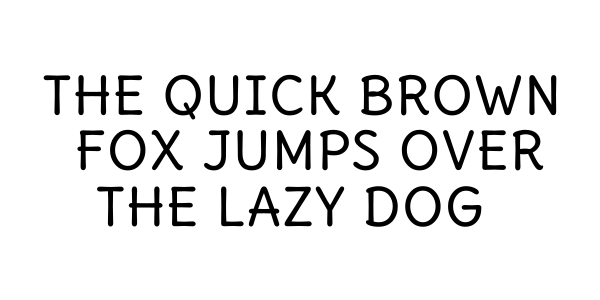

- code/data/fonts/DeliusUnicase-Regular.ttf +0 -0

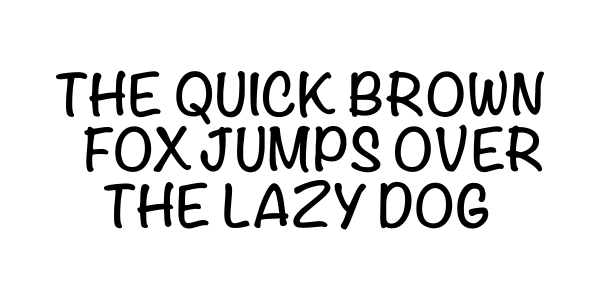

- code/data/fonts/HobeauxRococeaux-Sherman.ttf +0 -0

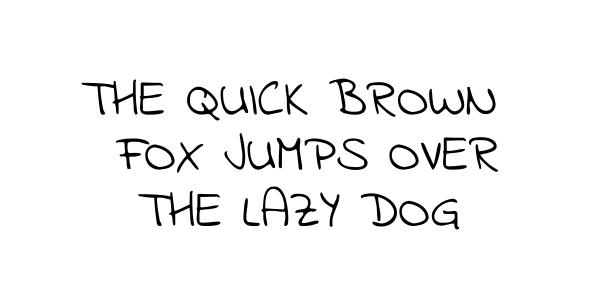

- code/data/fonts/IndieFlower-Regular.ttf +0 -0

- code/data/fonts/JosefinSans-Light.ttf +0 -0

- code/data/fonts/KaushanScript-Regular.ttf +0 -0

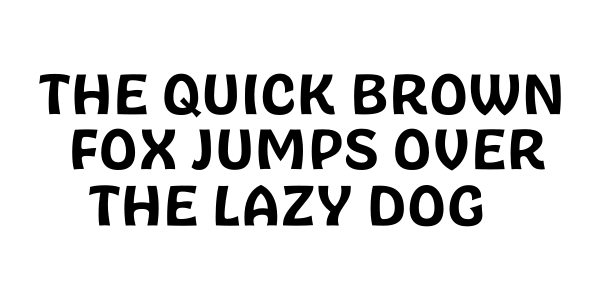

- code/data/fonts/LuckiestGuy-Regular.ttf +0 -0

- code/data/fonts/Noteworthy-Bold.ttf +0 -0

- code/data/fonts/Quicksand.ttf +0 -0

- code/data/fonts/Saira-Regular.ttf +0 -0

- code/losses.py +180 -0

- code/save_svg.py +155 -0

- code/ttf.py +264 -0

- code/utils.py +221 -0

- images/DeliusUnicase-Regular.png +0 -0

- images/HobeauxRococeaux-Sherman.png +0 -0

- images/IndieFlower-Regular.png +0 -0

- images/KaushanScript-Regular.png +0 -0

- images/LuckiestGuy-Regular.png +0 -0

- images/Noteworthy-Bold.png +0 -0

- images/Quicksand.png +0 -0

- images/Saira-Regular.png +0 -0

- packages.txt +1 -0

- requirements.txt +27 -0

LICENSE

ADDED

@@ -0,0 +1,437 @@

+Attribution-NonCommercial-ShareAlike 4.0 International
+
+=======================================================================
+
+Creative Commons Corporation ("Creative Commons") is not a law firm and
+does not provide legal services or legal advice. Distribution of
+Creative Commons public licenses does not create a lawyer-client or
+other relationship. Creative Commons makes its licenses and related
+information available on an "as-is" basis. Creative Commons gives no
+warranties regarding its licenses, any material licensed under their
+terms and conditions, or any related information. Creative Commons
+disclaims all liability for damages resulting from their use to the
+fullest extent possible.
+
+Using Creative Commons Public Licenses
+
+Creative Commons public licenses provide a standard set of terms and
+conditions that creators and other rights holders may use to share
+original works of authorship and other material subject to copyright
+and certain other rights specified in the public license below. The
+following considerations are for informational purposes only, are not
+exhaustive, and do not form part of our licenses.
+
+Considerations for licensors: Our public licenses are
+intended for use by those authorized to give the public
+permission to use material in ways otherwise restricted by
+copyright and certain other rights. Our licenses are
+irrevocable. Licensors should read and understand the terms
+and conditions of the license they choose before applying it.
+Licensors should also secure all rights necessary before
+applying our licenses so that the public can reuse the
+material as expected. Licensors should clearly mark any
+material not subject to the license. This includes other CC-
+licensed material, or material used under an exception or
+limitation to copyright. More considerations for licensors:
+wiki.creativecommons.org/Considerations_for_licensors
+
+Considerations for the public: By using one of our public
+licenses, a licensor grants the public permission to use the
+licensed material under specified terms and conditions. If
+the licensor's permission is not necessary for any reason--for
+example, because of any applicable exception or limitation to
+copyright--then that use is not regulated by the license. Our
+licenses grant only permissions under copyright and certain
+other rights that a licensor has authority to grant. Use of
+the licensed material may still be restricted for other
+reasons, including because others have copyright or other
+rights in the material. A licensor may make special requests,
+such as asking that all changes be marked or described.
+Although not required by our licenses, you are encouraged to
+respect those requests where reasonable. More considerations
+for the public:
+wiki.creativecommons.org/Considerations_for_licensees
+
+=======================================================================
+
+Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International
+Public License
+
+By exercising the Licensed Rights (defined below), You accept and agree
+to be bound by the terms and conditions of this Creative Commons
+Attribution-NonCommercial-ShareAlike 4.0 International Public License
+("Public License"). To the extent this Public License may be
+interpreted as a contract, You are granted the Licensed Rights in
+consideration of Your acceptance of these terms and conditions, and the
+Licensor grants You such rights in consideration of benefits the
+Licensor receives from making the Licensed Material available under
+these terms and conditions.
+
+
+Section 1 -- Definitions.
+
+a. Adapted Material means material subject to Copyright and Similar
+Rights that is derived from or based upon the Licensed Material
+and in which the Licensed Material is translated, altered,
+arranged, transformed, or otherwise modified in a manner requiring
+permission under the Copyright and Similar Rights held by the
+Licensor. For purposes of this Public License, where the Licensed
+Material is a musical work, performance, or sound recording,
+Adapted Material is always produced where the Licensed Material is
+synched in timed relation with a moving image.
+
+b. Adapter's License means the license You apply to Your Copyright
+and Similar Rights in Your contributions to Adapted Material in
+accordance with the terms and conditions of this Public License.
+
+c. BY-NC-SA Compatible License means a license listed at
+creativecommons.org/compatiblelicenses, approved by Creative
+Commons as essentially the equivalent of this Public License.
+
+d. Copyright and Similar Rights means copyright and/or similar rights
+closely related to copyright including, without limitation,
+performance, broadcast, sound recording, and Sui Generis Database
+Rights, without regard to how the rights are labeled or
+categorized. For purposes of this Public License, the rights
+specified in Section 2(b)(1)-(2) are not Copyright and Similar
+Rights.
+
+e. Effective Technological Measures means those measures that, in the
+absence of proper authority, may not be circumvented under laws
+fulfilling obligations under Article 11 of the WIPO Copyright
+Treaty adopted on December 20, 1996, and/or similar international
+agreements.
+
+f. Exceptions and Limitations means fair use, fair dealing, and/or
+any other exception or limitation to Copyright and Similar Rights
+that applies to Your use of the Licensed Material.
+
+g. License Elements means the license attributes listed in the name
+of a Creative Commons Public License. The License Elements of this
+Public License are Attribution, NonCommercial, and ShareAlike.
+
+h. Licensed Material means the artistic or literary work, database,
+or other material to which the Licensor applied this Public
+License.
+
+i. Licensed Rights means the rights granted to You subject to the
+terms and conditions of this Public License, which are limited to
+all Copyright and Similar Rights that apply to Your use of the
+Licensed Material and that the Licensor has authority to license.
+
+j. Licensor means the individual(s) or entity(ies) granting rights
+under this Public License.
+
+k. NonCommercial means not primarily intended for or directed towards
+commercial advantage or monetary compensation. For purposes of
+this Public License, the exchange of the Licensed Material for
+other material subject to Copyright and Similar Rights by digital
+file-sharing or similar means is NonCommercial provided there is
+no payment of monetary compensation in connection with the
+exchange.
+
+l. Share means to provide material to the public by any means or
+process that requires permission under the Licensed Rights, such
+as reproduction, public display, public performance, distribution,
+dissemination, communication, or importation, and to make material
+available to the public including in ways that members of the
+public may access the material from a place and at a time
+individually chosen by them.
+
+m. Sui Generis Database Rights means rights other than copyright
+resulting from Directive 96/9/EC of the European Parliament and of
+the Council of 11 March 1996 on the legal protection of databases,
+as amended and/or succeeded, as well as other essentially
+equivalent rights anywhere in the world.
+
+n. You means the individual or entity exercising the Licensed Rights
+under this Public License. Your has a corresponding meaning.
+
+
+Section 2 -- Scope.
+
+a. License grant.
+
+1. Subject to the terms and conditions of this Public License,
+the Licensor hereby grants You a worldwide, royalty-free,
+non-sublicensable, non-exclusive, irrevocable license to
+exercise the Licensed Rights in the Licensed Material to:
+
+a. reproduce and Share the Licensed Material, in whole or
+in part, for NonCommercial purposes only; and
+
+b. produce, reproduce, and Share Adapted Material for
+NonCommercial purposes only.
+
+2. Exceptions and Limitations. For the avoidance of doubt, where
+Exceptions and Limitations apply to Your use, this Public
+License does not apply, and You do not need to comply with
+its terms and conditions.
+
+3. Term. The term of this Public License is specified in Section
+6(a).
+
+4. Media and formats; technical modifications allowed. The
+Licensor authorizes You to exercise the Licensed Rights in
+all media and formats whether now known or hereafter created,
+and to make technical modifications necessary to do so. The
+Licensor waives and/or agrees not to assert any right or
+authority to forbid You from making technical modifications
+necessary to exercise the Licensed Rights, including
+technical modifications necessary to circumvent Effective
+Technological Measures. For purposes of this Public License,
+simply making modifications authorized by this Section 2(a)
+(4) never produces Adapted Material.
+
+5. Downstream recipients.
+
+a. Offer from the Licensor -- Licensed Material. Every
+recipient of the Licensed Material automatically
+receives an offer from the Licensor to exercise the
+Licensed Rights under the terms and conditions of this
+Public License.
+
+b. Additional offer from the Licensor -- Adapted Material.
+Every recipient of Adapted Material from You
+automatically receives an offer from the Licensor to
+exercise the Licensed Rights in the Adapted Material
+under the conditions of the Adapter's License You apply.
+
+c. No downstream restrictions. You may not offer or impose
+any additional or different terms or conditions on, or
+apply any Effective Technological Measures to, the
+Licensed Material if doing so restricts exercise of the
+Licensed Rights by any recipient of the Licensed
+Material.
+
+6. No endorsement. Nothing in this Public License constitutes or
+may be construed as permission to assert or imply that You
+are, or that Your use of the Licensed Material is, connected
+with, or sponsored, endorsed, or granted official status by,
+the Licensor or others designated to receive attribution as
+provided in Section 3(a)(1)(A)(i).
+
+b. Other rights.
+
+1. Moral rights, such as the right of integrity, are not
+licensed under this Public License, nor are publicity,
+privacy, and/or other similar personality rights; however, to
+the extent possible, the Licensor waives and/or agrees not to
+assert any such rights held by the Licensor to the limited
+extent necessary to allow You to exercise the Licensed
+Rights, but not otherwise.
+
+2. Patent and trademark rights are not licensed under this
+Public License.
+
+3. To the extent possible, the Licensor waives any right to
+collect royalties from You for the exercise of the Licensed
+Rights, whether directly or through a collecting society
+under any voluntary or waivable statutory or compulsory
+licensing scheme. In all other cases the Licensor expressly
+reserves any right to collect such royalties, including when
+the Licensed Material is used other than for NonCommercial
+purposes.
+
+
+Section 3 -- License Conditions.
+
+Your exercise of the Licensed Rights is expressly made subject to the
+following conditions.
+
+a. Attribution.
+
+1. If You Share the Licensed Material (including in modified
+form), You must:
+
+a. retain the following if it is supplied by the Licensor
+with the Licensed Material:
+
+i. identification of the creator(s) of the Licensed
+Material and any others designated to receive
+attribution, in any reasonable manner requested by
+the Licensor (including by pseudonym if
+designated);
+
+ii. a copyright notice;
+
+iii. a notice that refers to this Public License;
+
+iv. a notice that refers to the disclaimer of
+warranties;
+
+v. a URI or hyperlink to the Licensed Material to the
+extent reasonably practicable;
+
+b. indicate if You modified the Licensed Material and
+retain an indication of any previous modifications; and
+
+c. indicate the Licensed Material is licensed under this
+Public License, and include the text of, or the URI or
+hyperlink to, this Public License.
+
+2. You may satisfy the conditions in Section 3(a)(1) in any
+reasonable manner based on the medium, means, and context in
+which You Share the Licensed Material. For example, it may be
+reasonable to satisfy the conditions by providing a URI or
+hyperlink to a resource that includes the required
+information.
+3. If requested by the Licensor, You must remove any of the
+information required by Section 3(a)(1)(A) to the extent
+reasonably practicable.
+
+b. ShareAlike.
+
+In addition to the conditions in Section 3(a), if You Share
+Adapted Material You produce, the following conditions also apply.
+
+1. The Adapter's License You apply must be a Creative Commons
+license with the same License Elements, this version or
+later, or a BY-NC-SA Compatible License.
+
+2. You must include the text of, or the URI or hyperlink to, the
+Adapter's License You apply. You may satisfy this condition
+in any reasonable manner based on the medium, means, and
+context in which You Share Adapted Material.
+
+3. You may not offer or impose any additional or different terms
+or conditions on, or apply any Effective Technological
+Measures to, Adapted Material that restrict exercise of the
+rights granted under the Adapter's License You apply.
+
+
+Section 4 -- Sui Generis Database Rights.
+
+Where the Licensed Rights include Sui Generis Database Rights that
+apply to Your use of the Licensed Material:
+
+a. for the avoidance of doubt, Section 2(a)(1) grants You the right
+to extract, reuse, reproduce, and Share all or a substantial
+portion of the contents of the database for NonCommercial purposes
+only;
+
+b. if You include all or a substantial portion of the database
+contents in a database in which You have Sui Generis Database
+Rights, then the database in which You have Sui Generis Database
+Rights (but not its individual contents) is Adapted Material,
+including for purposes of Section 3(b); and
+
+c. You must comply with the conditions in Section 3(a) if You Share
+all or a substantial portion of the contents of the database.
+
+For the avoidance of doubt, this Section 4 supplements and does not
+replace Your obligations under this Public License where the Licensed
+Rights include other Copyright and Similar Rights.
+
+
+Section 5 -- Disclaimer of Warranties and Limitation of Liability.
+
+a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE
+EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS
+AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF
+ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,
+IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,
+WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR
+PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,
+ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT
+KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT
+ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.
+
+b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE
+TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,
+NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,
+INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,
+COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR
+USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN
+ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR
+DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR
+IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.
+
+c. The disclaimer of warranties and limitation of liability provided
+above shall be interpreted in a manner that, to the extent
+possible, most closely approximates an absolute disclaimer and
+waiver of all liability.
+
+
+Section 6 -- Term and Termination.
+
+a. This Public License applies for the term of the Copyright and
+Similar Rights licensed here. However, if You fail to comply with
+this Public License, then Your rights under this Public License
+terminate automatically.
+
+b. Where Your right to use the Licensed Material has terminated under
+Section 6(a), it reinstates:
+
+1. automatically as of the date the violation is cured, provided
+it is cured within 30 days of Your discovery of the
+violation; or
+
+2. upon express reinstatement by the Licensor.
+
+For the avoidance of doubt, this Section 6(b) does not affect any
+right the Licensor may have to seek remedies for Your violations
+of this Public License.
+
+c. For the avoidance of doubt, the Licensor may also offer the
+Licensed Material under separate terms or conditions or stop
+distributing the Licensed Material at any time; however, doing so
+will not terminate this Public License.
+
+d. Sections 1, 5, 6, 7, and 8 survive termination of this Public
+License.
+
+
+Section 7 -- Other Terms and Conditions.
+
+a. The Licensor shall not be bound by any additional or different
+terms or conditions communicated by You unless expressly agreed.
+
+b. Any arrangements, understandings, or agreements regarding the
+Licensed Material not stated herein are separate from and
+independent of the terms and conditions of this Public License.
+
+
+Section 8 -- Interpretation.
+
+a. For the avoidance of doubt, this Public License does not, and
+shall not be interpreted to, reduce, limit, restrict, or impose
+conditions on any use of the Licensed Material that could lawfully
+be made without permission under this Public License.
+
+b. To the extent possible, if any provision of this Public License is
+deemed unenforceable, it shall be automatically reformed to the
+minimum extent necessary to make it enforceable. If the provision
+cannot be reformed, it shall be severed from this Public License
+without affecting the enforceability of the remaining terms and
+conditions.
+
+c. No term or condition of this Public License will be waived and no
+failure to comply consented to unless expressly agreed to by the
+Licensor.
+
+d. Nothing in this Public License constitutes or may be interpreted
+as a limitation upon, or waiver of, any privileges and immunities
+that apply to the Licensor or You, including from the legal
+processes of any jurisdiction or authority.
+
+=======================================================================
+
+Creative Commons is not a party to its public
+licenses. Notwithstanding, Creative Commons may elect to apply one of
+its public licenses to material it publishes and in those instances
+will be considered the “Licensor.” The text of the Creative Commons
+public licenses is dedicated to the public domain under the CC0 Public
+Domain Dedication. Except for the limited purpose of indicating that
+material is shared under a Creative Commons public license or as
+otherwise permitted by the Creative Commons policies published at
+creativecommons.org/policies, Creative Commons does not authorize the
+use of the trademark "Creative Commons" or any other trademark or logo
+of Creative Commons without its prior written consent including,
+without limitation, in connection with any unauthorized modifications
+to any of its public licenses or any other arrangements,
+understandings, or agreements concerning use of licensed material. For
+the avoidance of doubt, this paragraph does not form part of the
+public licenses.
+
+Creative Commons may be contacted at creativecommons.org.

README.md

CHANGED

@@ -1,12 +1,14 @@
 ---
 title: Word As Image
-emoji:
-colorFrom:
-colorTo:
+emoji: 🚀
+colorFrom: purple
+colorTo: pink
 sdk: gradio
-sdk_version: 3.
+sdk_version: 3.21.0
+python_version: 3.8.15
 app_file: app.py
 pinned: false
+license: cc-by-sa-4.0
 ---
 
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

app.py

ADDED

@@ -0,0 +1,357 @@

|


| 1 |

+

import gradio as gr

|

| 2 |

+

import os

|

| 3 |

+

import argparse

|

| 4 |

+

from easydict import EasyDict as edict

|

| 5 |

+

import yaml

|

| 6 |

+

import os.path as osp

|

| 7 |

+

import random

|

| 8 |

+

import numpy.random as npr

|

| 9 |

+

import sys

|

| 10 |

+

|

| 11 |

+

sys.path.append('/home/user/app/code')

|

| 12 |

+

|

| 13 |

+

# set up diffvg

|

| 14 |

+

os.system('git clone https://github.com/BachiLi/diffvg.git')

|

| 15 |

+

os.chdir('diffvg')

|

| 16 |

+

os.system('git submodule update --init --recursive')

|

| 17 |

+

os.system('python setup.py install --user')

|

| 18 |

+

sys.path.append("/home/user/.local/lib/python3.8/site-packages/diffvg-0.0.1-py3.8-linux-x86_64.egg")

|

| 19 |

+

|

| 20 |

+

os.chdir('/home/user/app')

|

| 21 |

+

|

| 22 |

+

import torch

|

| 23 |

+

from diffusers import StableDiffusionPipeline

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

|

| 27 |

+

|

| 28 |

+

model = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5",

|

| 29 |

+

torch_dtype=torch.float16, use_auth_token=os.environ['HF_TOKEN']).to(device)

|

| 30 |

+

|

| 31 |

+

from typing import Mapping

|

| 32 |

+

from tqdm import tqdm

|

| 33 |

+

import torch

|

| 34 |

+

from torch.optim.lr_scheduler import LambdaLR

|

| 35 |

+

import pydiffvg

|

| 36 |

+

import save_svg

|

| 37 |

+

from losses import SDSLoss, ToneLoss, ConformalLoss

|

| 38 |

+

from utils import (

|

| 39 |

+

edict_2_dict,

|

| 40 |

+

update,

|

| 41 |

+

check_and_create_dir,

|

| 42 |

+

get_data_augs,

|

| 43 |

+

save_image,

|

| 44 |

+

preprocess,

|

| 45 |

+

learning_rate_decay,

|

| 46 |

+

combine_word)

|

| 47 |

+

import warnings

|

| 48 |

+

|

| 49 |

+

TITLE="""<h1 style="font-size: 42px;" align="center">Word-As-Image for Semantic Typography</h1>"""

|

| 50 |

+

DESCRIPTION="""This is a demo for [Word-As-Image for Semantic Typography](https://wordasimage.github.io/Word-As-Image-Page/). By using Word-as-Image, a visual representation of the meaning of the word is created while maintaining legibility of the text and font style.

|

| 51 |

+

Please enter a semantic concept, a word, and the letter of that word you wish to optimize. Running 500 iterations takes about 5 minutes."""

|

| 52 |

+

|

| 53 |

+

warnings.filterwarnings("ignore")

|

| 54 |

+

|

| 55 |

+

pydiffvg.set_print_timing(False)

|

| 56 |

+

gamma = 1.0

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

def set_config(semantic_concept, word, letter, font_name, num_steps):

|

| 60 |

+

|

| 61 |

+

cfg_d = edict()

|

| 62 |

+

cfg_d.config = "code/config/base.yaml"

|

| 63 |

+

cfg_d.experiment = "demo"

|

| 64 |

+

|

| 65 |

+

with open(cfg_d.config, 'r') as f:

|

| 66 |

+

cfg_full = yaml.load(f, Loader=yaml.FullLoader)

|

| 67 |

+

|

| 68 |

+

cfg_key = cfg_d.experiment

|

| 69 |

+

cfgs = [cfg_d]

|

| 70 |

+

while cfg_key:

|

| 71 |

+

cfgs.append(cfg_full[cfg_key])

|

| 72 |

+

cfg_key = cfgs[-1].get('parent_config', 'baseline')

|

| 73 |

+

|

| 74 |

+

cfg = edict()

|

| 75 |

+

for options in reversed(cfgs):

|

| 76 |

+

update(cfg, options)

|

| 77 |

+

del cfgs

|

| 78 |

+

|

| 79 |

+

cfg.semantic_concept = semantic_concept

|

| 80 |

+

cfg.word = word

|

| 81 |

+

cfg.optimized_letter = letter

|

| 82 |

+

cfg.font = font_name

|

| 83 |

+

cfg.seed = 0

|

| 84 |

+

cfg.num_iter = num_steps

|

| 85 |

+

|

| 86 |

+

if ' ' in cfg.word:

|

| 87 |

+

raise gr.Error('The word must be a single word without spaces')

|

| 88 |

+

cfg.caption = f"a {cfg.semantic_concept}. {cfg.prompt_suffix}"

|

| 89 |

+

cfg.log_dir = f"output/{cfg.experiment}_{cfg.word}"

|

| 90 |

+

if cfg.optimized_letter in cfg.word:

|

| 91 |

+

cfg.optimized_letter = cfg.optimized_letter

|

| 92 |

+

else:

|

| 93 |

+

raise gr.Error('The letter to optimize must appear in the word')

|

| 94 |

+

|

| 95 |

+

cfg.letter = f"{cfg.font}_{cfg.optimized_letter}_scaled"

|

| 96 |

+

cfg.target = f"code/data/init/{cfg.letter}"

|

| 97 |

+

|

| 98 |

+

# set experiment dir

|

| 99 |

+

signature = f"{cfg.letter}_concept_{cfg.semantic_concept}_seed_{cfg.seed}"

|

| 100 |

+

cfg.experiment_dir = \

|

| 101 |

+

osp.join(cfg.log_dir, cfg.font, signature)

|

| 102 |

+

configfile = osp.join(cfg.experiment_dir, 'config.yaml')

|

| 103 |

+

|

| 104 |

+

# create experiment dir and save config

|

| 105 |

+

check_and_create_dir(configfile)

|

| 106 |

+

with open(osp.join(configfile), 'w') as f:

|

| 107 |

+

yaml.dump(edict_2_dict(cfg), f)

|

| 108 |

+

|

| 109 |

+

if cfg.seed is not None:

|

| 110 |

+

random.seed(cfg.seed)

|

| 111 |

+

npr.seed(cfg.seed)

|

| 112 |

+

torch.manual_seed(cfg.seed)

|

| 113 |

+

torch.backends.cudnn.benchmark = False

|

| 114 |

+

else:

|

| 115 |

+

assert False

|

| 116 |

+

return cfg

|
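Review note on `set_config`: the `while cfg_key:` loop walks a chain of YAML sections linked by `parent_config`, and the sections are then merged in reverse so that the most specific one wins. A minimal sketch of that pattern with plain dictionaries follows. The `update` helper from `utils` is not shown in this part of the diff, so `deep_update` below is an assumed recursive merge, and `resolve_config`, the section contents, and the numeric values are all hypothetical.

```python
import copy


def deep_update(base, overrides):
    """Recursively merge `overrides` into `base` in place (assumed behaviour of `update`)."""
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(base.get(key), dict):
            deep_update(base[key], value)
        else:
            # Copy so that the merged result never aliases the loaded YAML data.
            base[key] = copy.deepcopy(value)
    return base


def resolve_config(cfg_full, experiment):
    """Collect sections from leaf to root, then merge them from root to leaf."""
    chain = []
    key = experiment
    while key:
        chain.append(cfg_full[key])
        # A section without an explicit parent falls back to 'baseline'.
        key = chain[-1].get("parent_config", "baseline")
    merged = {}
    for section in reversed(chain):
        deep_update(merged, section)
    return merged


# Hypothetical stand-in for the parsed contents of a config file.
cfg_full = {
    "baseline": {
        "parent_config": "",  # empty value ends the walk
        "lr": {"lr_init": 0.002, "lr_final": 0.0008},
        "num_iter": 500,
    },
    "demo": {
        "parent_config": "baseline",
        "lr": {"lr_init": 0.01},
    },
}

cfg = resolve_config(cfg_full, "demo")
print(cfg["lr"], cfg["num_iter"])
```

In this sketch, as in the loop above, the root section has to set `parent_config` to an empty value; otherwise the `'baseline'` default would send the walk back to the same section and the loop would not terminate.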

| 117 |

+

|

| 118 |

+

|

| 119 |

+

def init_shapes(svg_path, trainable: Mapping[str, bool]):

|

| 120 |

+

svg = f'{svg_path}.svg'

|

| 121 |

+

canvas_width, canvas_height, shapes_init, shape_groups_init = pydiffvg.svg_to_scene(svg)

|

| 122 |

+

|

| 123 |

+

parameters = edict()

|

| 124 |

+

|

| 125 |

+

# path points

|

| 126 |

+

if trainable.point:

|

| 127 |

+

parameters.point = []

|

| 128 |

+

for path in shapes_init:

|

| 129 |

+

path.points.requires_grad = True

|

| 130 |

+

parameters.point.append(path.points)

|

| 131 |

+

|

| 132 |

+

return shapes_init, shape_groups_init, parameters

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

def run_main_ex(semantic_concept, word, letter, font_name, num_steps):

|

| 136 |

+

return list(next(run_main_app(semantic_concept, word, letter, font_name, num_steps, 1)))

|

| 137 |

+

|

| 138 |

+

def run_main_app(semantic_concept, word, letter, font_name, num_steps, example=0):

|

| 139 |

+

|

| 140 |

+

cfg = set_config(semantic_concept, word, letter, font_name, num_steps)

|

| 141 |

+

|

| 142 |

+

pydiffvg.set_use_gpu(torch.cuda.is_available())

|

| 143 |

+

|

| 144 |

+

print("preprocessing")

|

| 145 |

+

preprocess(cfg.font, cfg.word, cfg.optimized_letter, cfg.level_of_cc)

|

| 146 |

+

filename_init = os.path.join("code/data/init/", f"{cfg.font}_{cfg.word}_scaled.svg").replace(" ", "_")

|

| 147 |

+

if not example:

|

| 148 |

+

yield gr.update(value=filename_init,visible=True),gr.update(visible=False),gr.update(visible=False)

|

| 149 |

+

|

| 150 |

+

sds_loss = SDSLoss(cfg, device, model)

|

| 151 |

+

|

| 152 |

+

h, w = cfg.render_size, cfg.render_size

|

| 153 |

+

|

| 154 |

+

data_augs = get_data_augs(cfg.cut_size)

|

| 155 |

+

|

| 156 |

+

render = pydiffvg.RenderFunction.apply

|

| 157 |

+

|

| 158 |

+

# initialize shape

|

| 159 |

+

print('initializing shape')

|

| 160 |

+

shapes, shape_groups, parameters = init_shapes(svg_path=cfg.target, trainable=cfg.trainable)

|

| 161 |

+

|

| 162 |

+

scene_args = pydiffvg.RenderFunction.serialize_scene(w, h, shapes, shape_groups)

|

| 163 |

+

img_init = render(w, h, 2, 2, 0, None, *scene_args)

|

| 164 |

+

img_init = img_init[:, :, 3:4] * img_init[:, :, :3] + \

|

| 165 |

+

torch.ones(img_init.shape[0], img_init.shape[1], 3, device=device) * (1 - img_init[:, :, 3:4])

|

| 166 |

+

img_init = img_init[:, :, :3]

|

| 167 |

+

|

| 168 |

+

tone_loss = ToneLoss(cfg)

|

| 169 |

+

tone_loss.set_image_init(img_init)

|

| 170 |

+

|

| 171 |

+

num_iter = cfg.num_iter

|

| 172 |

+

pg = [{'params': parameters["point"], 'lr': cfg.lr_base["point"]}]

|

| 173 |

+

optim = torch.optim.Adam(pg, betas=(0.9, 0.9), eps=1e-6)

|

| 174 |

+

|

| 175 |

+

conformal_loss = ConformalLoss(parameters, device, cfg.optimized_letter, shape_groups)

|

| 176 |

+

|

| 177 |

+

lr_lambda = lambda step: learning_rate_decay(step, cfg.lr.lr_init, cfg.lr.lr_final, num_iter,

|

| 178 |

+

lr_delay_steps=cfg.lr.lr_delay_steps,

|

| 179 |

+

lr_delay_mult=cfg.lr.lr_delay_mult) / cfg.lr.lr_init

|

| 180 |

+

|

| 181 |

+

scheduler = LambdaLR(optim, lr_lambda=lr_lambda, last_epoch=-1) # lr.base * lrlambda_f

|

| 182 |

+

|

| 183 |

+

print("start training")

|

| 184 |

+

# training loop

|

| 185 |

+

t_range = tqdm(range(num_iter))

|

| 186 |

+

for step in t_range:

|

| 187 |

+

optim.zero_grad()

|

| 188 |

+

|

| 189 |

+

# render image

|

| 190 |

+

scene_args = pydiffvg.RenderFunction.serialize_scene(w, h, shapes, shape_groups)

|

| 191 |

+

img = render(w, h, 2, 2, step, None, *scene_args)

|

| 192 |

+

|

| 193 |

+

# compose image with white background

|

| 194 |

+

img = img[:, :, 3:4] * img[:, :, :3] + torch.ones(img.shape[0], img.shape[1], 3, device=device) * (

|

| 195 |

+

1 - img[:, :, 3:4])

|

| 196 |

+

img = img[:, :, :3]

|

| 197 |

+

|

| 198 |

+

filename = os.path.join(

|

| 199 |

+

cfg.experiment_dir, "video-svg", f"iter{step:04d}.svg")

|

| 200 |

+

check_and_create_dir(filename)

|

| 201 |

+

save_svg.save_svg(filename, w, h, shapes, shape_groups)

|

| 202 |

+

if not example:

|

| 203 |

+

yield gr.update(visible=True),gr.update(value=filename, label=f'iters: {step} / {num_iter}', visible=True),gr.update(visible=False)

|

| 204 |

+

|

| 205 |

+

x = img.unsqueeze(0).permute(0, 3, 1, 2) # HWC -> NCHW

|

| 206 |

+

x = x.repeat(cfg.batch_size, 1, 1, 1)

|

| 207 |

+

x_aug = data_augs.forward(x)

|

| 208 |

+

|

| 209 |

+

# compute diffusion loss per pixel

|

| 210 |

+

loss = sds_loss(x_aug)

|

| 211 |

+

|

| 212 |

+

tone_loss_res = tone_loss(x, step)

|

| 213 |

+

loss = loss + tone_loss_res

|

| 214 |

+

|

| 215 |

+

loss_angles = conformal_loss()

|

| 216 |

+

loss_angles = cfg.loss.conformal.angeles_w * loss_angles

|

| 217 |

+

loss = loss + loss_angles

|

| 218 |

+

|

| 219 |

+

loss.backward()

|

| 220 |

+

optim.step()

|

| 221 |

+

scheduler.step()

|

| 222 |

+

|

| 223 |

+

|

| 224 |

+

filename = os.path.join(

|

| 225 |

+

cfg.experiment_dir, "output-svg", "output.svg")

|

| 226 |

+

check_and_create_dir(filename)

|

| 227 |

+

save_svg.save_svg(

|

| 228 |

+

filename, w, h, shapes, shape_groups)

|

| 229 |

+

|

| 230 |

+

combine_word(cfg.word, cfg.optimized_letter, cfg.font, cfg.experiment_dir)

|

| 231 |

+

|

| 232 |

+

image = os.path.join(cfg.experiment_dir,f"{cfg.font}_{cfg.word}_{cfg.optimized_letter}.svg")

|

| 233 |

+

yield gr.update(value=filename_init,visible=True),gr.update(visible=False),gr.update(value=image,visible=True)

|

| 234 |

+

|

| 235 |

+

|

| 236 |

+

with gr.Blocks() as demo:

|

| 237 |

+

|

| 238 |

+

gr.HTML(TITLE)

|

| 239 |

+

gr.Markdown(DESCRIPTION)

|

| 240 |

+

|

| 241 |

+

with gr.Row():

|

| 242 |

+

with gr.Column():

|

| 243 |

+

|

| 244 |

+

semantic_concept = gr.Text(

|

| 245 |

+

label='Semantic Concept',

|

| 246 |

+

max_lines=1,

|

| 247 |

+

placeholder=

|

| 248 |

+

'Enter a semantic concept. For example: BUNNY'

|

| 249 |

+

)

|

| 250 |

+

|

| 251 |

+

word = gr.Text(

|

| 252 |

+

label='Word',

|

| 253 |

+

max_lines=1,

|

| 254 |

+

placeholder=

|

| 255 |

+

'Enter a word. For example: BUNNY'

|

| 256 |

+

)

|

| 257 |

+

|

| 258 |

+

letter = gr.Text(

|

| 259 |

+

label='Letter',

|

| 260 |

+

max_lines=1,

|

| 261 |

+

placeholder=

|

| 262 |

+

'Choose a letter in the word to optimize. For example: Y'

|

| 263 |

+

)

|

| 264 |

+

|

| 265 |

+

num_steps = gr.Slider(label='Optimization Iterations',

|

| 266 |

+

minimum=0,

|

| 267 |

+

maximum=500,

|

| 268 |

+

step=10,

|

| 269 |

+

value=500)

|

| 270 |

+

|

| 271 |

+

font_name = gr.Text(value=None,visible=False,label="Font Name")

|

| 272 |

+

gallery = gr.Gallery(value=[(os.path.join("images","KaushanScript-Regular.png"),"KaushanScript-Regular"), (os.path.join("images","IndieFlower-Regular.png"),"IndieFlower-Regular"),(os.path.join("images","Quicksand.png"),"Quicksand"),

|

| 273 |

+

(os.path.join("images","Saira-Regular.png"),"Saira-Regular"), (os.path.join("images","LuckiestGuy-Regular.png"),"LuckiestGuy-Regular"),(os.path.join("images","DeliusUnicase-Regular.png"),"DeliusUnicase-Regular"),

|

| 274 |

+

(os.path.join("images","Noteworthy-Bold.png"),"Noteworthy-Bold"), (os.path.join("images","HobeauxRococeaux-Sherman.png"),"HobeauxRococeaux-Sherman")],label="Font Name").style(grid=4)

|

| 275 |

+

|

| 276 |

+

def on_select(evt: gr.SelectData):

|

| 277 |

+

return evt.value

|

| 278 |

+

|

| 279 |

+

gallery.select(fn=on_select, inputs=None, outputs=font_name)

|

| 280 |

+

|

| 281 |

+

run = gr.Button('Generate')

|

| 282 |

+

|

| 283 |

+

with gr.Column():

|

| 284 |

+

result0 = gr.Image(type="filepath", label="Initial Word").style(height=333)

|

| 285 |

+

result1 = gr.Image(type="filepath", label="Optimization Process").style(height=110)

|

| 286 |

+

result2 = gr.Image(type="filepath", label="Final Result",visible=False).style(height=333)

|

| 287 |

+

|

| 288 |

+

|

| 289 |

+

with gr.Row():

|

| 290 |

+

# examples

|

| 291 |

+

examples = [

|

| 292 |

+

[

|

| 293 |

+

"BUNNY",

|

| 294 |

+

"BUNNY",

|

| 295 |

+

"Y",

|

| 296 |

+

"KaushanScript-Regular",

|

| 297 |

+

500

|

| 298 |

+

],

|

| 299 |

+

[

|

| 300 |

+

"LION",

|

| 301 |

+

"LION",

|

| 302 |

+

"O",

|

| 303 |

+

"Quicksand",

|

| 304 |

+

500

|

| 305 |

+

],

|

| 306 |

+

[

|

| 307 |

+

"FROG",

|

| 308 |

+

"FROG",

|

| 309 |

+

"G",

|

| 310 |

+

"IndieFlower-Regular",

|

| 311 |

+

500

|

| 312 |

+

],

|

| 313 |

+

[

|

| 314 |

+

"CAT",

|

| 315 |

+

"CAT",

|

| 316 |

+

"C",

|

| 317 |

+

"LuckiestGuy-Regular",

|

| 318 |

+

500

|

| 319 |

+