Commit 7e8784c: upload_demo

Committed by shoubin. Parent(s): 0054d8c

This view is limited to 50 files because it contains too many changes.

- .DS_Store +0 -0

- .gitattributes +1 -0

- LICENSE.txt +14 -0

- MANIFEST.in +7 -0

- README.md +112 -13

- app.py +206 -0

- app/__init__.py +26 -0

- app/calculate_coco_features.py +87 -0

- app/caption.py +98 -0

- app/classification.py +216 -0

- app/dataset_browser.py +240 -0

- app/image_text_match.py +87 -0

- app/main.py +25 -0

- app/multimodal_search.py +230 -0

- app/multipage.py +41 -0

- app/text_localization.py +105 -0

- app/utils.py +81 -0

- app/vqa.py +63 -0

- assets/.DS_Store +0 -0

- assets/chain.png +0 -0

- assets/model.png +0 -0

- assets/teaser.png +0 -0

- docs/.DS_Store +0 -0

- docs/Makefile +20 -0

- docs/_static/.DS_Store +0 -0

- docs/_static/architecture.png +0 -0

- docs/_static/logo_final.png +0 -0

- docs/benchmark.rst +348 -0

- docs/build_docs.sh +101 -0

- docs/conf.py +56 -0

- docs/getting_started.rst +233 -0

- docs/index.rst +46 -0

- docs/intro.rst +99 -0

- docs/make.bat +35 -0

- docs/requirements.txt +7 -0

- docs/tutorial.configs.rst +172 -0

- docs/tutorial.datasets.rst +424 -0

- docs/tutorial.evaluation.rst +40 -0

- docs/tutorial.models.rst +245 -0

- docs/tutorial.processors.rst +233 -0

- docs/tutorial.rst +13 -0

- docs/tutorial.tasks.rst +184 -0

- docs/tutorial.training-example.rst +145 -0

- evaluate.py +92 -0

- lavis/.DS_Store +0 -0

- lavis/__init__.py +31 -0

- lavis/__pycache__/__init__.cpython-38.pyc +0 -0

- lavis/common/.DS_Store +0 -0

- lavis/common/__pycache__/config.cpython-38.pyc +0 -0

- lavis/common/__pycache__/dist_utils.cpython-38.pyc +0 -0

.DS_Store

ADDED

Binary file (10.2 kB).

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
 demo4.mp4 filter=lfs diff=lfs merge=lfs -text
+videos/*.mp4 filter=lfs diff=lfs merge=lfs -text
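To see which paths the `.gitattributes` patterns above would route through Git LFS, the matching can be approximated in Python. This is a rough sketch, not Git's actual matcher: it assumes that a pattern without a slash matches the file name in any directory and that a pattern with a slash matches segment by segment, and it ignores `**`, negation, and escapes. `LFS_PATTERNS`, `matches`, and `is_lfs_tracked` are illustrative names, not part of the commit.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns visible in the hunk above (the last one is added by this commit).
LFS_PATTERNS = ["*.zst", "*tfevents*", "demo4.mp4", "videos/*.mp4"]


def matches(pattern: str, path: str) -> bool:
    """Approximate gitattributes matching for simple patterns only."""
    p = PurePosixPath(path)
    if "/" not in pattern:
        # No slash: compare against the file name at any depth.
        return fnmatchcase(p.name, pattern)
    # With a slash: every path segment must match the corresponding
    # pattern segment, so "*" never crosses a directory boundary.
    pattern_parts = pattern.split("/")
    if len(pattern_parts) != len(p.parts):
        return False
    return all(fnmatchcase(part, pat) for part, pat in zip(p.parts, pattern_parts))


def is_lfs_tracked(path: str) -> bool:
    """Return True if any of the LFS patterns matches the path."""
    return any(matches(pattern, path) for pattern in LFS_PATTERNS)
```

Under these assumptions, `is_lfs_tracked("videos/demo1.mp4")` is true while `is_lfs_tracked("app.py")` is false. Running `git check-attr filter -- <path>` in the repository gives the authoritative answer.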

LICENSE.txt

ADDED

@@ -0,0 +1,14 @@
+BSD 3-Clause License
+
+Copyright (c) 2022 Salesforce, Inc.
+All rights reserved.
+
+Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:
+
+1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.
+
+2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.
+
+3. Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.
+
+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

MANIFEST.in

ADDED

@@ -0,0 +1,7 @@
+recursive-include lavis/configs *.yaml *.json
+recursive-include lavis/projects *.yaml *.json
+
+recursive-exclude lavis/datasets/download_scripts *
+recursive-exclude lavis/output *
+
+include requirements.txt

README.md

CHANGED

|

@@ -1,13 +1,112 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Self-Chained Image-Language Model for Video Localization and Question Answering

|

| 2 |

+

|

| 3 |

+

* Authors: [Shoubin Yu](https://yui010206.github.io/), [Jaemin Cho](https://j-min.io), [Prateek Yadav](https://prateek-yadav.github.io/), [Mohit Bansal](https://www.cs.unc.edu/~mbansal/)

|

| 4 |

+

* [arXiv](https://arxiv.org/abs/2305.06988)

|

| 5 |

+

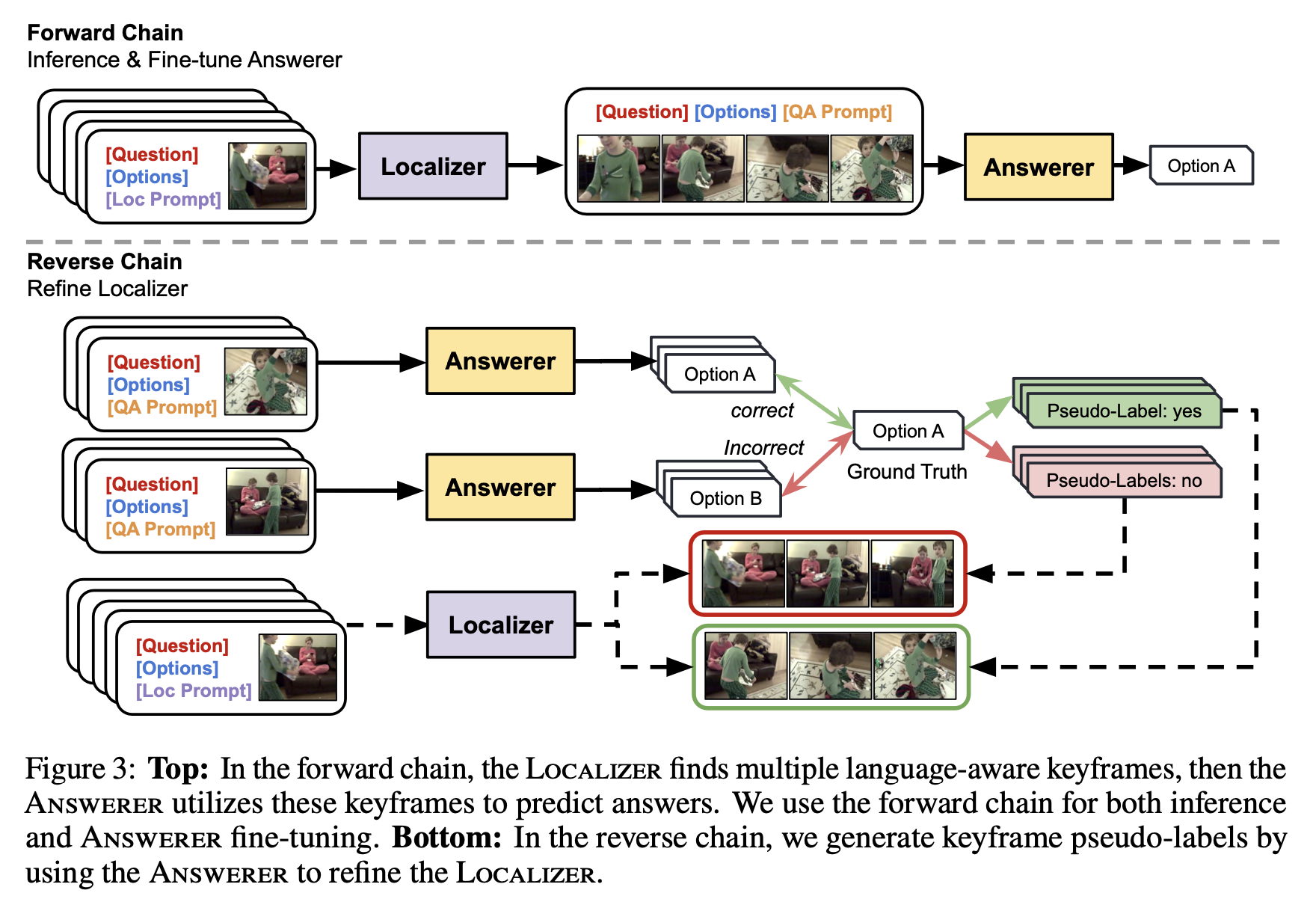

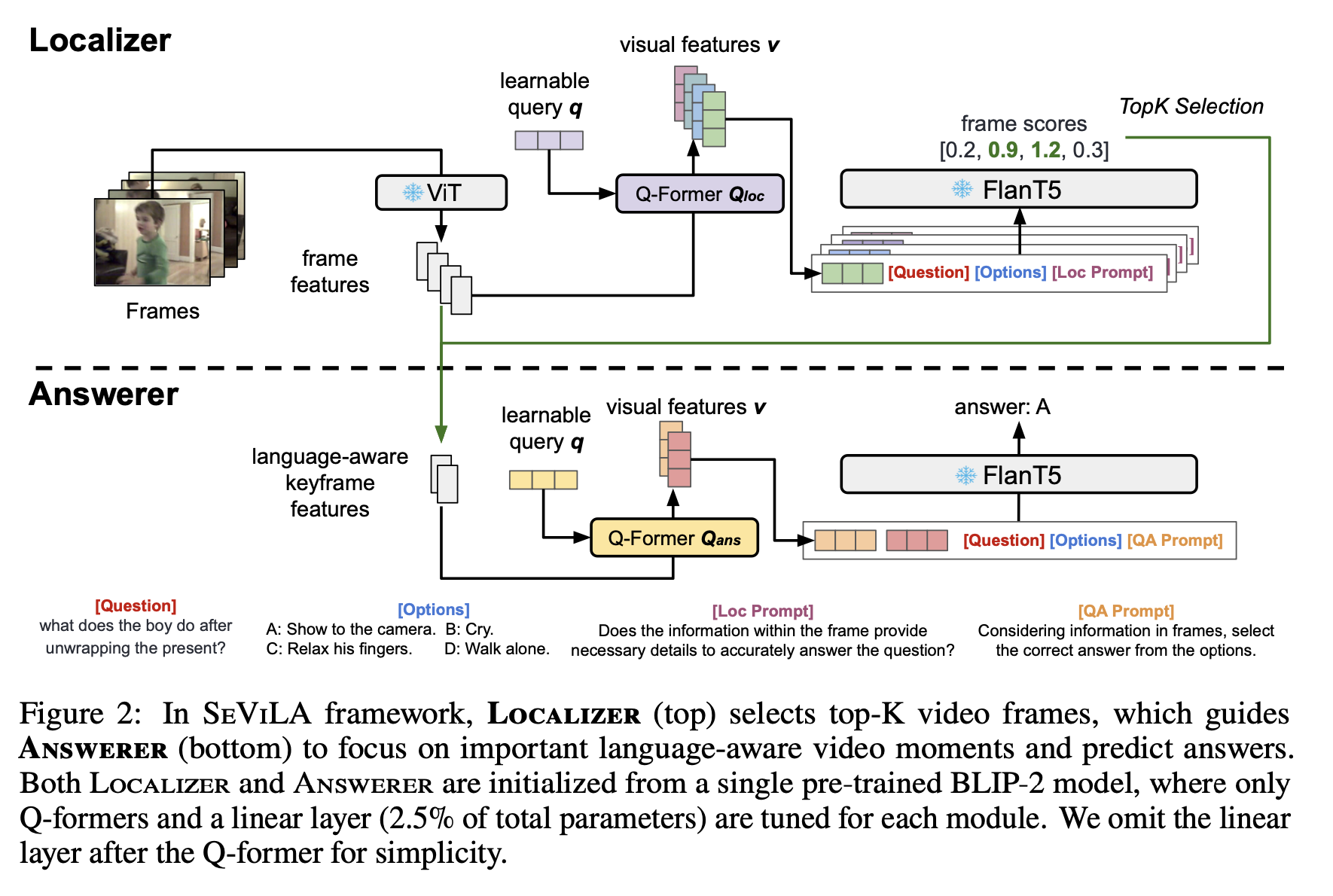

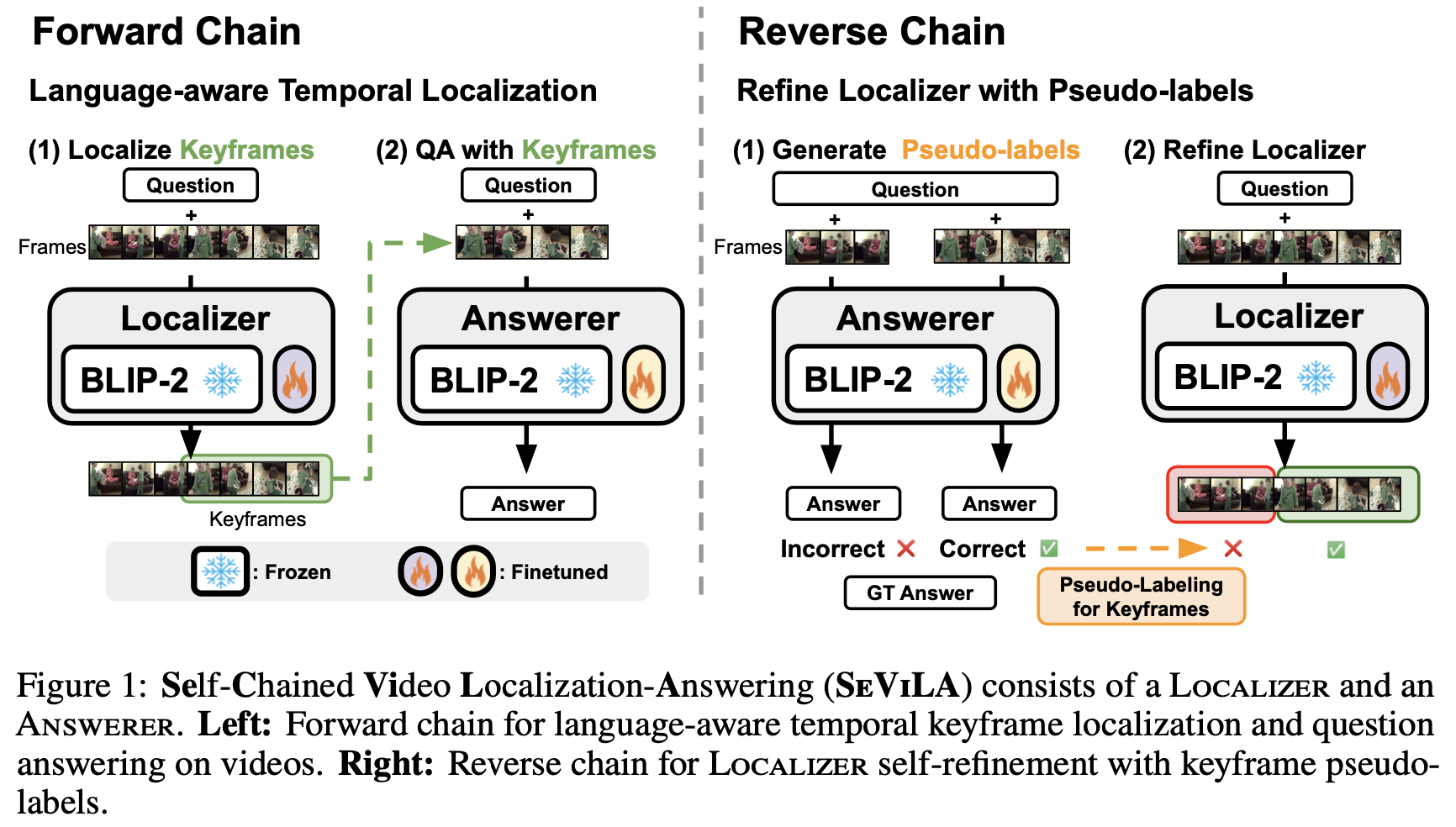

<img src="./assets/teaser.png" alt="teaser image" width="800"/>

|

| 6 |

+

|

| 7 |

+

<img src="./assets/model.png" alt="teaser image" width="800"/>

|

| 8 |

+

|

| 9 |

+

<img src="./assets/chain.png" alt="teaser image" width="800"/>

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

# Code structure

|

| 13 |

+

```bash

|

| 14 |

+

|

| 15 |

+

# Data & Data Preprocessing

|

| 16 |

+

./sevila_data

|

| 17 |

+

|

| 18 |

+

# Pretrained Checkpoints

|

| 19 |

+

./sevila_checkpoints

|

| 20 |

+

|

| 21 |

+

# SeViLA code

|

| 22 |

+

./lavis/

|

| 23 |

+

|

| 24 |

+

# running scripts for SeViLa localizer/answerer training/inference

|

| 25 |

+

./run_scripts

|

| 26 |

+

|

| 27 |

+

```

|

| 28 |

+

|

| 29 |

+

# Setup

|

| 30 |

+

|

| 31 |

+

## Install Dependencies

|

| 32 |

+

|

| 33 |

+

1. (Optional) Creating conda environment

|

| 34 |

+

|

| 35 |

+

```bash

|

| 36 |

+

conda create -n sevila python=3.8

|

| 37 |

+

conda activate sevila

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

2. build from source

|

| 41 |

+

|

| 42 |

+

```bash

|

| 43 |

+

pip install -e .

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

## Download Pretrained Models

|

| 47 |

+

We pre-train SeViLA localizer on QVHighlights and hold checkpoints via [Huggingface](https://huggingface.co/Shoubin/SeViLA/resolve/main/sevila_pretrained.pth).

|

| 48 |

+

Download checkpoints and put it under /sevila_checkpoints.

|

| 49 |

+

The checkpoints (814.55M) contains pre-trained localizer and zero-shot answerer.

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

# Dataset Preparation

|

| 54 |

+

We test our model on:

|

| 55 |

+

+ [NExT-QA](https://doc-doc.github.io/docs/nextqa.html)

|

| 56 |

+

|

| 57 |

+

+ [STAR](https://star.csail.mit.edu/)

|

| 58 |

+

|

| 59 |

+

+ [How2QA](https://value-benchmark.github.io/index.html)

|

| 60 |

+

|

| 61 |

+

+ [TVQA](https://tvqa.cs.unc.edu/)

|

| 62 |

+

|

| 63 |

+

+ [VLEP](https://value-benchmark.github.io/index.html)

|

| 64 |

+

|

| 65 |

+

+ [QVHighlights](https://github.com/jayleicn/moment_detr)

|

| 66 |

+

|

| 67 |

+

please download original data and preprocess them via our [scripts](sevila_data/) under ./sevila_data/ .

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

# Training and Inference

|

| 71 |

+

We provideo SeViLA training and inference script examples as following:

|

| 72 |

+

## 1) Localizer Pre-training

|

| 73 |

+

```bash

|

| 74 |

+

sh run_scripts/sevila/pre-train/pretrain_qvh.sh

|

| 75 |

+

```

|

| 76 |

+

|

| 77 |

+

## 2) Localizer Self-refinement

|

| 78 |

+

|

| 79 |

+

```bash

|

| 80 |

+

sh run_scripts/sevila/refinement/nextqa_sr.sh

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

## 3) Answerer Fine-tuning

|

| 84 |

+

|

| 85 |

+

```bash

|

| 86 |

+

sh run_scripts/sevila/finetune/nextqa_ft.sh

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

## 4) Inference

|

| 90 |

+

|

| 91 |

+

```bash

|

| 92 |

+

sh run_scripts/sevila/inference/nextqa_infer.sh

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

# Acknowledgments

|

| 97 |

+

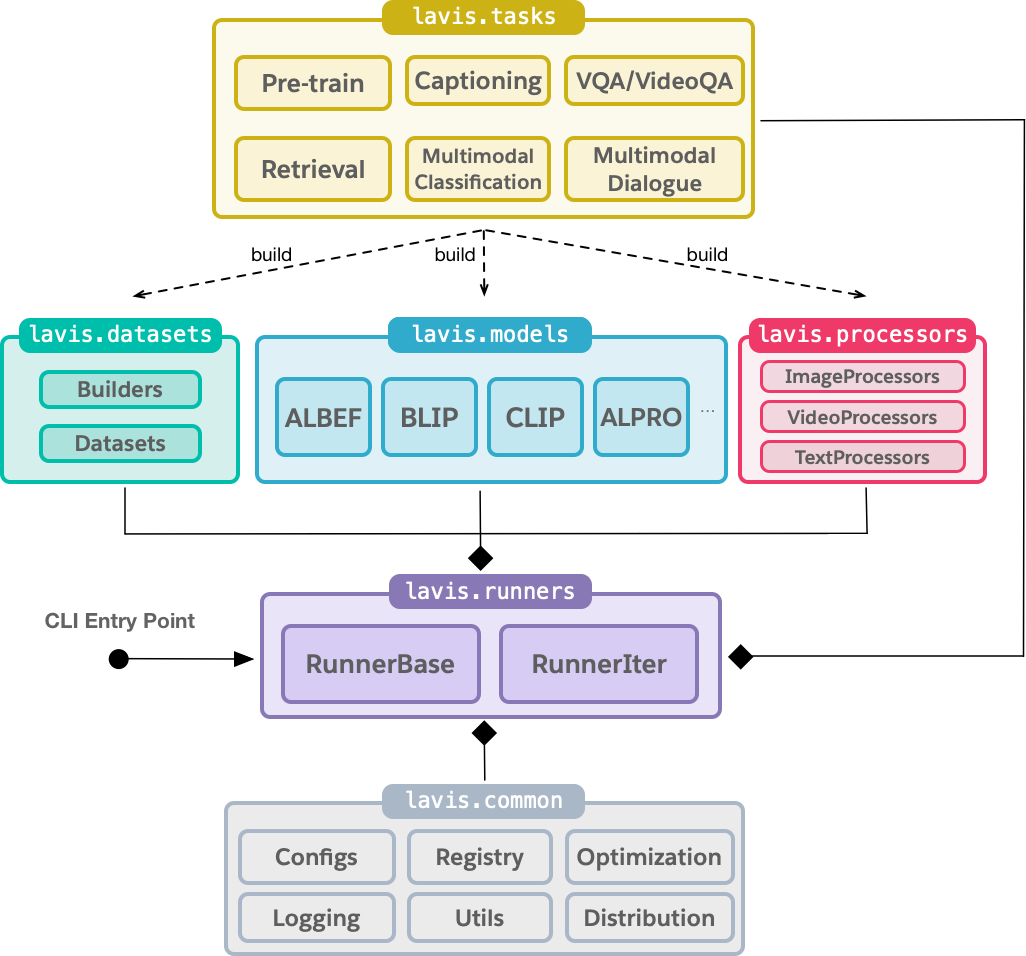

We thank the developers of [LAVIS](https://github.com/salesforce/LAVIS), [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2), [CLIP](https://github.com/openai/CLIP), [All-in-one](https://github.com/showlab/all-in-one), for their public code release.

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

# Reference

|

| 101 |

+

Please cite our paper if you use our models in your works:

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

```bibtex

|

| 105 |

+

@misc{yu2023selfchained,

|

| 106 |

+

title={Self-Chained Image-Language Model for Video Localization and Question Answering},

|

| 107 |

+

author={Shoubin Yu and Jaemin Cho and Prateek Yadav and Mohit Bansal},

|

| 108 |

+

year={2023},

|

| 109 |

+

eprint={2305.06988},

|

| 110 |

+

archivePrefix={arXiv},

|

| 111 |

+

primaryClass={cs.CV}

|

| 112 |

+

}

|
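The "Download Pretrained Models" step in the README can also be scripted. The following is a minimal sketch using only the Python standard library; the URL and the `sevila_checkpoints` directory come from the README, while `checkpoint_destination` and `download_checkpoint` are hypothetical helper names introduced here for illustration.

```python
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlretrieve

# URL taken from the README's "Download Pretrained Models" section.
CHECKPOINT_URL = (
    "https://huggingface.co/Shoubin/SeViLA/resolve/main/sevila_pretrained.pth"
)


def checkpoint_destination(url: str, root: str = "sevila_checkpoints") -> Path:
    """Map a checkpoint URL to a local path under the checkpoint directory."""
    filename = Path(urlparse(url).path).name
    return Path(root) / filename


def download_checkpoint(url: str = CHECKPOINT_URL,
                        root: str = "sevila_checkpoints") -> Path:
    """Download the checkpoint once; later calls reuse the local file."""
    dest = checkpoint_destination(url, root)
    if not dest.exists():
        dest.parent.mkdir(parents=True, exist_ok=True)
        urlretrieve(url, str(dest))  # large download (about 814.55M per the README)
    return dest
```

Calling `download_checkpoint()` from the repository root would place the file at `sevila_checkpoints/sevila_pretrained.pth`. The sketch does no integrity check or resume handling, so a partially downloaded file would need to be deleted by hand.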

app.py

ADDED

@@ -0,0 +1,206 @@
+import gradio as gr
+import os
+import torch
+from torchvision import transforms
+from lavis.processors import transforms_video
+from lavis.datasets.data_utils import load_video_demo
+from lavis.processors.blip_processors import ToUint8, ToTHWC
+from lavis.models.sevila_models.sevila import SeViLA
+from typing import Optional
+import warnings
+# model config
+img_size = 224
+num_query_token = 32
+t5_model = 'google/flan-t5-xl'
+drop_path_rate = 0
+use_grad_checkpoint = False
+vit_precision = "fp16"
+freeze_vit = True
+prompt = ''
+max_txt_len = 77
+answer_num = 5
+apply_lemmatizer = False
+task = 'freeze_loc_freeze_qa_vid'
+
+# prompt
+LOC_propmpt = 'Does the information within the frame provide the necessary details to accurately answer the given question?'
+QA_prompt = 'Considering the information presented in the frame, select the correct answer from the options.'
+
+# processors config
+mean = (0.48145466, 0.4578275, 0.40821073)
+std = (0.26862954, 0.26130258, 0.27577711)
+normalize = transforms.Normalize(mean, std)
+image_size = img_size
+transform = transforms.Compose([ToUint8(), ToTHWC(), transforms_video.ToTensorVideo(), normalize])
+
+print('model loading')
+sevila = SeViLA(
+    img_size=img_size,
+    drop_path_rate=drop_path_rate,
+    use_grad_checkpoint=use_grad_checkpoint,
+    vit_precision=vit_precision,
+    freeze_vit=freeze_vit,
+    num_query_token=num_query_token,
+    t5_model=t5_model,
+    prompt=prompt,
+    max_txt_len=max_txt_len,
+    apply_lemmatizer=apply_lemmatizer,
+    frame_num=4,
+    answer_num=answer_num,
+    task=task,
+)
+
+sevila.load_checkpoint(url_or_filename='https://huggingface.co/Shoubin/SeViLA/resolve/main/sevila_pretrained.pth')
+print('model loaded')
+
+ANS_MAPPING = {0 : 'A', 1 : 'B', 2 : 'C', 3 : 'D', 4 : 'E'}
+
+# os.mkdir('video')
+
+def sevila_demo(video,
+    question,
+    option1, option2, option3,
+    video_frame_num,
+    keyframe_num):
+
+    if torch.cuda.is_available():
+        device = 0
+    else:
+        device = 'cpu'
+
+    global sevila
+    if device == "cpu":
+        sevila = sevila.float()
+    else:
+        sevila = sevila.to(int(device))
+
+    vpath = video
+    raw_clip, indice, fps, vlen = load_video_demo(
+        video_path=vpath,
+        n_frms=int(video_frame_num),
+        height=image_size,
+        width=image_size,
+        sampling="uniform",
+        clip_proposal=None
+    )
+    clip = transform(raw_clip.permute(1,0,2,3))
+    clip = clip.float().to(device)  # device is 0 or 'cpu'; int('cpu') would raise ValueError
+    clip = clip.unsqueeze(0)
+    # check
+    if option1[-1] != '.':
+        option1 += '.'
+    if option2[-1] != '.':
+        option2 += '.'
+    if option3[-1] != '.':
+        option3 += '.'
+    option_dict = {0:option1, 1:option2, 2:option3}
+    options = 'Option A:{} Option B:{} Option C:{}'.format(option1, option2, option3)
+    text_input_qa = 'Question: ' + question + ' ' + options + ' ' + QA_prompt
+    text_input_loc = 'Question: ' + question + ' ' + options + ' ' + LOC_propmpt
+
+    out = sevila.generate_demo(clip, text_input_qa, text_input_loc, int(keyframe_num))
+    # print(out)
+    answer_id = out['output_text'][0]
+    answer = option_dict[answer_id]
+    select_index = out['frame_idx'][0]
+    # images = []
+    keyframes = []
+    timestamps =[]
+
+    # print('raw_clip', len(raw_clip))
+    # for j in range(int(video_frame_num)):
+    #     image = raw_clip[:, j, :, :].int()
+    #     image = image.permute(1, 2, 0).numpy()
+    #     images.append(image)
+
+    video_len = vlen/fps # seconds
+
+    for i in select_index:
+        image = raw_clip[:, i, :, :].int()
+        image = image.permute(1, 2, 0).numpy()
+        keyframes.append(image)
+        select_i = indice[i]
+        time = round((select_i / vlen) * video_len, 2)
+        timestamps.append(str(time)+'s')
+
+    gr.components.Gallery(keyframes)
+    #gr.components.Gallery(images)
+    timestamps_des = ''
+    for i in range(len(select_index)):
+        timestamps_des += 'Keyframe {}: {} \n'.format(str(i+1), timestamps[i])
+
+    return keyframes, timestamps_des, answer
+
+with gr.Blocks(title="SeViLA demo") as demo:
+    description = """<p style="text-align: center; font-weight: bold;">
+        <span style="font-size: 28px">Self-Chained Image-Language Model for Video Localization and Question Answering</span>
+        <br>
+        <span style="font-size: 18px" id="author-info">
+            <a href="https://yui010206.github.io/" target="_blank">Shoubin Yu</a>,
+            <a href="https://j-min.io/" target="_blank">Jaemin Cho</a>,
+            <a href="https://prateek-yadav.github.io/" target="_blank">Prateek Yadav</a>,
+            <a href="https://www.cs.unc.edu/~mbansal/" target="_blank">Mohit Bansal</a>
+        </span>
+        <br>
+        <span style="font-size: 18px" id="paper-info">
+            [<a href="https://github.com/Yui010206/SeViLA" target="_blank">GitHub</a>]
+            [<a href="https://arxiv.org/abs/2305.06988" target="_blank">Paper</a>]
+        </span>
+    </p>
+    <p>
+        To locate keyframes in a video and answer a question, please:
+        <br>
+        (1) upload your video; (2) write your question/options and set # video frame/# keyframe/running device; (3) click Locate and Answer!
+        <br>
+        Just a heads up - loading the SeViLA model can take a few minutes (typically 2-3), and running examples requires about 12GB of memory.
+        <br>
+        We've got you covered! We've provided some example videos and questions below to help you get started. Feel free to try out SeViLA with these!
+    </p>
+    """
+    gr.HTML(description)
+    with gr.Row():
+        with gr.Column(scale=1, min_width=600):
+            video = gr.Video(label='Video')
+            question = gr.Textbox(placeholder="Why did the two ladies put their hands above their eyes while staring out?", label='Question')
+            with gr.Row():
+                option1 = gr.Textbox(placeholder="practicing cheer", label='Option 1')
+                option2 = gr.Textbox(placeholder="posing for photo", label='Option 2')
+                option3 = gr.Textbox(placeholder="to see better", label='Option 3')
+                video_frame_num = gr.Textbox(placeholder=32, label='# Video Frame')
+                keyframe_num = gr.Textbox(placeholder=4, label='# Keyframe')
+                # device = gr.Textbox(placeholder=0, label='Device')
+            gen_btn = gr.Button(value='Locate and Answer!')
+        with gr.Column(scale=2, min_width=600):
+            keyframes = gr.Gallery(
+                label="Keyframes", show_label=False, elem_id="gallery"
+            ).style(columns=[4], rows=[1], object_fit="contain", height="auto")
+            #keyframes = gr.Gallery(label='Keyframes')
+            timestamps = gr.outputs.Textbox(label="Keyframe Timestamps")
+            answer = gr.outputs.Textbox(label="Output Answer")
+
+    gen_btn.click(
+        sevila_demo,
+        inputs=[video, question, option1, option2, option3, video_frame_num, keyframe_num],
+        outputs=[keyframes, timestamps, answer],
+        queue=True
+    )
+    #demo = gr.Interface(sevila_demo,
+    #    inputs=[gr.Video(), question, option1, option2, option3, video_frame_num, keyframe_num, device],
+    #    outputs=['gallery', timestamps, answer],
+    #    examples=[['videos/demo1.mp4', 'Why did the two ladies put their hands above their eyes while staring out?', 'practicing cheer.', 'play ball.', 'to see better.', 32, 4, 0],
+    #    ['videos/demo2.mp4', 'What did both of them do after completing skiing?', 'jump and pose.' , 'bend down.','raised their hands.', 32, 4, 0],
+    #    ['videos/demo3.mp4', 'What room was Wilson breaking into when House found him?', 'the kitchen.' , 'the dining room.','the bathroom.', 32, 4, 0]]
+    #    )
+    with gr.Column():
+        gr.Examples(
+            inputs=[video, question, option1, option2, option3, video_frame_num, keyframe_num],
+            outputs=[keyframes, timestamps, answer],
+            fn=sevila_demo,
+            examples=[['videos/demo1.mp4', 'Why did the two ladies put their hands above their eyes while staring out?', 'practicing cheer', 'play ball', 'to see better', 32, 4],
+                      ['videos/demo2.mp4', 'What did both of them do after completing skiing?', 'jump and pose' , 'bend down','raised their hands', 32, 4],
+                      ['videos/demo3.mp4', 'What room was Wilson breaking into when House found him?', 'the kitchen' , 'the dining room','the bathroom', 32, 4],
+                      ['videos/demo4.mp4', 'what kind of bird is it?', 'chickadee' , 'eagle','seagull', 32, 1]],
+            cache_examples=False,
+        )
+demo.queue(concurrency_count=1, api_open=False)
+demo.launch(share=False)
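Two pieces of `sevila_demo` in `app.py` can be exercised without loading the model: the assembly of the QA and localization prompts, and the conversion of selected frame indices to timestamps. The sketch below is an illustration, not the author's code. `build_prompts` and `frame_timestamps` are hypothetical helper names, and the option handling differs slightly from the demo: it strips whitespace and uses `endswith`, so an empty option no longer raises `IndexError` as `option1[-1]` would. The timestamp helper relies on the fact that `(select_i / vlen) * (vlen / fps)` simplifies to `select_i / fps`.

```python
from typing import List, Sequence, Tuple

# Prompt strings copied from app.py.
LOC_PROMPT = ("Does the information within the frame provide the necessary "
              "details to accurately answer the given question?")
QA_PROMPT = ("Considering the information presented in the frame, "
             "select the correct answer from the options.")


def build_prompts(question: str, options: Sequence[str]) -> Tuple[str, str]:
    """Return (qa_text, loc_text) in the 'Question: ... Option A:...' layout."""
    cleaned = []
    for opt in options:
        opt = opt.strip()
        if not opt.endswith("."):  # safe for empty strings, unlike opt[-1]
            opt += "."
        cleaned.append(opt)
    labels = "ABCDE"
    option_text = " ".join(
        f"Option {labels[i]}:{opt}" for i, opt in enumerate(cleaned)
    )
    base = f"Question: {question} {option_text} "
    return base + QA_PROMPT, base + LOC_PROMPT


def frame_timestamps(frame_indices: Sequence[int], fps: float) -> List[float]:
    """Convert original-video frame indices to seconds, rounded to 2 places."""
    return [round(i / fps, 2) for i in frame_indices]
```

For example, `frame_timestamps([0, 30, 75], 30.0)` gives the times in seconds for frames 0, 30, and 75 of a 30 fps video.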

app/__init__.py

ADDED

@@ -0,0 +1,26 @@
+"""
+ # Copyright (c) 2022, salesforce.com, inc.
+ # All rights reserved.
+ # SPDX-License-Identifier: BSD-3-Clause
+ # For full license text, see the LICENSE file in the repo root or https://opensource.org/licenses/BSD-3-Clause
+"""
+
+from PIL import Image
+import requests
+
+import streamlit as st
+import torch
+
+
+@st.cache()
+def load_demo_image():
+    img_url = (
+        "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
+    )
+    raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")
+    return raw_image
+
+
+device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+
+cache_root = "/export/home/.cache/lavis/"
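`app/__init__.py` hard-codes `cache_root` to a machine-specific path. One possible way to make it portable is to read it from an environment variable and fall back to the committed default. This is a sketch under assumptions: the variable name `LAVIS_CACHE_ROOT` and the helper `resolve_cache_root` are hypothetical and do not appear in the commit.

```python
import os
from pathlib import Path

# Default copied from app/__init__.py.
DEFAULT_CACHE_ROOT = "/export/home/.cache/lavis/"


def resolve_cache_root(env_var: str = "LAVIS_CACHE_ROOT") -> Path:
    """Return the cache directory, preferring the (hypothetical) env override."""
    return Path(os.environ.get(env_var, DEFAULT_CACHE_ROOT)).expanduser()
```

With this helper, `cache_root = str(resolve_cache_root())` would keep the existing behaviour when the variable is unset.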

app/calculate_coco_features.py

ADDED

@@ -0,0 +1,87 @@
+"""
+ # Copyright (c) 2022, salesforce.com, inc.
+ # All rights reserved.
+ # SPDX-License-Identifier: BSD-3-Clause
+ # For full license text, see the LICENSE file in the repo root or https://opensource.org/licenses/BSD-3-Clause
+"""
+
+from PIL import Image
+import requests
+import torch
+
+import os
+
+from lavis.common.registry import registry
+from lavis.processors import *
+from lavis.models import *
+from lavis.common.utils import build_default_model
+
+device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+
+
+def load_demo_image():
+    img_url = (
+        "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
+    )
+    raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")
+
+    return raw_image
+
+
+def read_img(filepath):
+    raw_image = Image.open(filepath).convert("RGB")
+
+    return raw_image
+
+
+# model
+model_url = "https://storage.googleapis.com/sfr-vision-language-research/BLIP/models/model_base.pth"
+feature_extractor = BlipFeatureExtractor(pretrained=model_url)
+
+feature_extractor.eval()
+feature_extractor = feature_extractor.to(device)
+
+# preprocessors
+vis_processor = BlipImageEvalProcessor(image_size=224)
+text_processor = BlipCaptionProcessor()
+
+# files to process
+# file_root = "/export/home/.cache/lavis/coco/images/val2014"
+file_root = "/export/home/.cache/lavis/coco/images/train2014"
+filepaths = os.listdir(file_root)
+
+print(len(filepaths))
+
+caption = "dummy"
+
+path2feat = dict()
+bsz = 256
+
+images_in_batch = []
+filepaths_in_batch = []
+
+for i, filename in enumerate(filepaths):
+    if i % bsz == 0 and i > 0:
+        images_in_batch = torch.cat(images_in_batch, dim=0).to(device)
+        with torch.no_grad():
+            image_features = feature_extractor(
+                images_in_batch, caption, mode="image", normalized=True
+            )[:, 0]
+
+        for filepath, image_feat in zip(filepaths_in_batch, image_features):
+            path2feat[os.path.basename(filepath)] = image_feat.detach().cpu()
+
+        images_in_batch = []
+        filepaths_in_batch = []
+
+        print(len(path2feat), image_features.shape)
+    else:
+        filepath = os.path.join(file_root, filename)
+
+        image = read_img(filepath)
+        image = vis_processor(image).unsqueeze(0)
+
+        images_in_batch.append(image)
+        filepaths_in_batch.append(filepath)
+
+torch.save(path2feat, "path2feat_coco_train2014.pth")
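Reading the batching loop in `app/calculate_coco_features.py` as written, two things stand out: a file whose index is a positive multiple of `bsz` only triggers the flush and is never loaded, and whatever remains in `images_in_batch` after the last iteration is never passed to the feature extractor. A generic chunking helper avoids both. The sketch below is an illustration with a hypothetical name (`batched`), not code from the commit.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches; the final batch may be smaller."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batch: List[T] = []
    for item in items:
        batch.append(item)  # every item is added before any flush
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the trailing partial batch
        yield batch
```

In the script, the outer loop could then iterate `for names in batched(filepaths, bsz):`, load and stack the images for `names`, and run the extractor once per batch, so that every file ends up in `path2feat`.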

app/caption.py

ADDED

|

@@ -0,0 +1,98 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

# Copyright (c) 2022, salesforce.com, inc.

|

| 3 |

+

# All rights reserved.

|

| 4 |

+

# SPDX-License-Identifier: BSD-3-Clause

|

| 5 |

+

# For full license text, see the LICENSE file in the repo root or https://opensource.org/licenses/BSD-3-Clause

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

import streamlit as st

|

| 9 |

+

from app import device, load_demo_image

|

| 10 |

+

from app.utils import load_model_cache

|

| 11 |

+

from lavis.processors import load_processor

|

| 12 |

+

from PIL import Image

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

def app():

|

| 16 |

+

# ===== layout =====

|

| 17 |

+

+    model_type = st.sidebar.selectbox("Model:", ["BLIP_base", "BLIP_large"])
+
+    sampling_method = st.sidebar.selectbox(
+        "Sampling method:", ["Beam search", "Nucleus sampling"]
+    )
+
+    st.markdown(
+        "<h1 style='text-align: center;'>Image Description Generation</h1>",
+        unsafe_allow_html=True,
+    )
+
+    instructions = """Try the provided image or upload your own:"""
+    file = st.file_uploader(instructions)
+
+    use_beam = sampling_method == "Beam search"
+
+    col1, col2 = st.columns(2)
+
+    if file:
+        raw_img = Image.open(file).convert("RGB")
+    else:
+        raw_img = load_demo_image()
+
+    col1.header("Image")
+
+    w, h = raw_img.size
+    scaling_factor = 720 / w
+    resized_image = raw_img.resize((int(w * scaling_factor), int(h * scaling_factor)))
+
+    col1.image(resized_image, use_column_width=True)
+    col2.header("Description")
+
+    cap_button = st.button("Generate")
+
+    # ==== event ====
+    vis_processor = load_processor("blip_image_eval").build(image_size=384)
+
+    if cap_button:
+        if model_type.startswith("BLIP"):
+            blip_type = model_type.split("_")[1].lower()
+            model = load_model_cache(
+                "blip_caption",
+                model_type=f"{blip_type}_coco",
+                is_eval=True,
+                device=device,
+            )
+
+        img = vis_processor(raw_img).unsqueeze(0).to(device)
+        captions = generate_caption(
+            model=model, image=img, use_nucleus_sampling=not use_beam
+        )
+
+        col2.write("\n\n".join(captions), use_column_width=True)
+
+

+def generate_caption(
+    model, image, use_nucleus_sampling=False, num_beams=3, max_length=40, min_length=5
+):
+    samples = {"image": image}
+
+    captions = []
+    if use_nucleus_sampling:
+        for _ in range(5):
+            caption = model.generate(
+                samples,
+                use_nucleus_sampling=True,
+                max_length=max_length,
+                min_length=min_length,
+                top_p=0.9,
+            )
+            captions.append(caption[0])
+    else:
+        caption = model.generate(
+            samples,
+            use_nucleus_sampling=False,
+            num_beams=num_beams,
+            max_length=max_length,
+            min_length=min_length,
+        )
+        captions.append(caption[0])
+
+    return captions
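The dispatch in `generate_caption` can be tried without loading any BLIP weights. The sketch below is a minimal illustration, not the demo's code path: `StubCaptioner` is a hypothetical stand-in (it is not part of LAVIS) that only mimics the `generate(samples, ...)` call shape used above and returns a one-element list. It shows that nucleus sampling collects five independently sampled captions, while beam search returns a single one.

```python
class StubCaptioner:
    """Hypothetical stand-in for a captioning model; not a LAVIS class."""

    def __init__(self):
        self.calls = 0

    def generate(self, samples, use_nucleus_sampling=False, **kwargs):
        # Mimic the call shape used in the diff: return a list with one caption.
        self.calls += 1
        kind = "sampled" if use_nucleus_sampling else "beam"
        return [f"{kind} caption {self.calls}"]


def generate_caption(
    model, image, use_nucleus_sampling=False, num_beams=3, max_length=40, min_length=5
):
    samples = {"image": image}
    if use_nucleus_sampling:
        # Five separate calls, each contributing its first (only) caption.
        return [
            model.generate(
                samples,
                use_nucleus_sampling=True,
                max_length=max_length,
                min_length=min_length,
                top_p=0.9,
            )[0]
            for _ in range(5)
        ]
    # Beam search: a single call, a single caption.
    caption = model.generate(
        samples,
        use_nucleus_sampling=False,
        num_beams=num_beams,
        max_length=max_length,
        min_length=min_length,
    )
    return [caption[0]]


beam_captions = generate_caption(StubCaptioner(), image=None)
sampled_captions = generate_caption(
    StubCaptioner(), image=None, use_nucleus_sampling=True
)
```

With a real model the five sampled captions would typically differ from one another, which is why the demo joins them with blank lines before writing them to the second column.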

app/classification.py

ADDED


@@ -0,0 +1,216 @@


+"""
+ # Copyright (c) 2022, salesforce.com, inc.
+ # All rights reserved.
+ # SPDX-License-Identifier: BSD-3-Clause
+ # For full license text, see the LICENSE file in the repo root or https://opensource.org/licenses/BSD-3-Clause
+"""
+
+import plotly.graph_objects as go
+import requests
+import streamlit as st
+import torch
+from lavis.models import load_model
+from lavis.processors import load_processor
+from lavis.processors.blip_processors import BlipCaptionProcessor
+from PIL import Image
+
+from app import device, load_demo_image
+from app.utils import load_blip_itm_model
+from lavis.processors.clip_processors import ClipImageEvalProcessor
+
+

+@st.cache()
+def load_demo_image(img_url=None):
+    if not img_url:
+        img_url = "https://img.atlasobscura.com/yDJ86L8Ou6aIjBsxnlAy5f164w1rjTgcHZcx2yUs4mo/rt:fit/w:1200/q:81/sm:1/scp:1/ar:1/aHR0cHM6Ly9hdGxh/cy1kZXYuczMuYW1h/em9uYXdzLmNvbS91/cGxvYWRzL3BsYWNl/X2ltYWdlcy85MDll/MDRjOS00NTJjLTQx/NzQtYTY4MS02NmQw/MzI2YWIzNjk1ZGVk/MGZhMTJiMTM5MmZi/NGFfUmVhcl92aWV3/X29mX3RoZV9NZXJs/aW9uX3N0YXR1ZV9h/dF9NZXJsaW9uX1Bh/cmssX1NpbmdhcG9y/ZSxfd2l0aF9NYXJp/bmFfQmF5X1NhbmRz/X2luX3RoZV9kaXN0/YW5jZV8tXzIwMTQw/MzA3LmpwZw.jpg"
+    raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")
+    return raw_image
+
+
+@st.cache(
+    hash_funcs={
+        torch.nn.parameter.Parameter: lambda parameter: parameter.data.detach()
+        .cpu()
+        .numpy()
+    },
+    allow_output_mutation=True,
+)
+def load_model_cache(model_type, device):
+    if model_type == "blip":
+        model = load_model(
+            "blip_feature_extractor", model_type="base", is_eval=True, device=device
+        )
+    elif model_type == "albef":
+        model = load_model(
+            "albef_feature_extractor", model_type="base", is_eval=True, device=device
+        )
+    elif model_type == "CLIP_ViT-B-32":
+        model = load_model(
+            "clip_feature_extractor", "ViT-B-32", is_eval=True, device=device
+        )
+    elif model_type == "CLIP_ViT-B-16":
+        model = load_model(
+            "clip_feature_extractor", "ViT-B-16", is_eval=True, device=device
+        )
+    elif model_type == "CLIP_ViT-L-14":
+        model = load_model(
+            "clip_feature_extractor", "ViT-L-14", is_eval=True, device=device
+        )
+
+    return model
+
+

+def app():
+    model_type = st.sidebar.selectbox(
+        "Model:",
+        ["ALBEF", "BLIP_Base", "CLIP_ViT-B-32", "CLIP_ViT-B-16", "CLIP_ViT-L-14"],
+    )
+    score_type = st.sidebar.selectbox("Score type:", ["Cosine", "Multimodal"])
+
+    # ===== layout =====
+    st.markdown(
+        "<h1 style='text-align: center;'>Zero-shot Classification</h1>",
+        unsafe_allow_html=True,
+    )
+
+    instructions = """Try the provided image or upload your own:"""
+    file = st.file_uploader(instructions)
+
+    st.header("Image")
+    if file:
+        raw_img = Image.open(file).convert("RGB")
+    else:
+        raw_img = load_demo_image()
+
+    st.image(raw_img)  # , use_column_width=True)
+
+    col1, col2 = st.columns(2)
+
+    col1.header("Categories")
+
+    cls_0 = col1.text_input("category 1", value="merlion")
+    cls_1 = col1.text_input("category 2", value="sky")
+    cls_2 = col1.text_input("category 3", value="giraffe")
+    cls_3 = col1.text_input("category 4", value="fountain")
+    cls_4 = col1.text_input("category 5", value="marina bay")
+
+    cls_names = [cls_0, cls_1, cls_2, cls_3, cls_4]
+    cls_names = [cls_nm for cls_nm in cls_names if len(cls_nm) > 0]
+
+    if len(cls_names) != len(set(cls_names)):
+        st.error("Please provide unique class names")
+        return
+
+    button = st.button("Submit")
+
+    col2.header("Prediction")
+
+    # ===== event =====
+
+    if button:
+        if model_type.startswith("BLIP"):
+            text_processor = BlipCaptionProcessor(prompt="A picture of ")
+            cls_prompt = [text_processor(cls_nm) for cls_nm in cls_names]
+
+            if score_type == "Cosine":
+                vis_processor = load_processor("blip_image_eval").build(image_size=224)
+                img = vis_processor(raw_img).unsqueeze(0).to(device)
+
+                feature_extractor = load_model_cache(model_type="blip", device=device)
+
+                sample = {"image": img, "text_input": cls_prompt}
+
+                with torch.no_grad():
+                    image_features = feature_extractor.extract_features(
+                        sample, mode="image"
+                    ).image_embeds_proj[:, 0]
+                    text_features = feature_extractor.extract_features(
+                        sample, mode="text"
+                    ).text_embeds_proj[:, 0]
+                    sims = (image_features @ text_features.t())[
+                        0
+                    ] / feature_extractor.temp
+
+            else:
+                vis_processor = load_processor("blip_image_eval").build(image_size=384)
+                img = vis_processor(raw_img).unsqueeze(0).to(device)
+
+                model = load_blip_itm_model(device)
+
+                output = model(img, cls_prompt, match_head="itm")
+                sims = output[:, 1]
+
+            sims = torch.nn.Softmax(dim=0)(sims)
+            inv_sims = [sim * 100 for sim in sims.tolist()[::-1]]
+
+        elif model_type.startswith("ALBEF"):
+            vis_processor = load_processor("blip_image_eval").build(image_size=224)
+            img = vis_processor(raw_img).unsqueeze(0).to(device)
+
+            text_processor = BlipCaptionProcessor(prompt="A picture of ")
+            cls_prompt = [text_processor(cls_nm) for cls_nm in cls_names]
+
+            feature_extractor = load_model_cache(model_type="albef", device=device)
+
+            sample = {"image": img, "text_input": cls_prompt}
+
+            with torch.no_grad():
+                image_features = feature_extractor.extract_features(
+                    sample, mode="image"
+                ).image_embeds_proj[:, 0]
+                text_features = feature_extractor.extract_features(
+                    sample, mode="text"
+                ).text_embeds_proj[:, 0]
+
+                st.write(image_features.shape)
+                st.write(text_features.shape)
+
+                sims = (image_features @ text_features.t())[0] / feature_extractor.temp
+
+            sims = torch.nn.Softmax(dim=0)(sims)
+            inv_sims = [sim * 100 for sim in sims.tolist()[::-1]]
+
+        elif model_type.startswith("CLIP"):
+            if model_type == "CLIP_ViT-B-32":
+                model = load_model_cache(model_type="CLIP_ViT-B-32", device=device)
+            elif model_type == "CLIP_ViT-B-16":
+                model = load_model_cache(model_type="CLIP_ViT-B-16", device=device)
+            elif model_type == "CLIP_ViT-L-14":
+                model = load_model_cache(model_type="CLIP_ViT-L-14", device=device)
+            else:
+                raise ValueError(f"Unknown model type {model_type}")
+
+            if score_type == "Cosine":
+                # image_preprocess = ClipImageEvalProcessor(image_size=336)
+                image_preprocess = ClipImageEvalProcessor(image_size=224)
+                img = image_preprocess(raw_img).unsqueeze(0).to(device)
+
+                sample = {"image": img, "text_input": cls_names}
+
+                with torch.no_grad():
+                    clip_features = model.extract_features(sample)
+
+                    image_features = clip_features.image_embeds_proj
+                    text_features = clip_features.text_embeds_proj
+
+                    sims = (100.0 * image_features @ text_features.T)[0].softmax(dim=-1)
+                    inv_sims = sims.tolist()[::-1]
+            else:
+                st.warning("CLIP does not support multimodal scoring.")
+                return
+
+        fig = go.Figure(
+            go.Bar(
+                x=inv_sims,
+                y=cls_names[::-1],
+                text=["{:.2f}".format(s) for s in inv_sims],
+                orientation="h",
+            )
+        )
+        fig.update_traces(
+            textfont_size=12,
+            textangle=0,
+            textposition="outside",
+            cliponaxis=False,
+        )
+        col2.plotly_chart(fig, use_container_width=True)
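The "Cosine" branches above share one scoring recipe: take the dot product of the image embedding with each class-prompt embedding, divide by a temperature, apply a softmax over the classes, and reverse the list so the first category is drawn at the top of the horizontal bar chart. The sketch below replays that arithmetic in plain Python on toy data. The vectors, the temperature value `0.07`, and the helper names `softmax` and `zero_shot_percentages` are illustrative assumptions, not values or functions taken from LAVIS; the toy vectors are unit-length so the dot product equals the cosine similarity.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_percentages(image_vec, text_vecs, temp):
    # Temperature-scaled dot products, one per class prompt.
    sims = [
        sum(i * t for i, t in zip(image_vec, text_vec)) / temp
        for text_vec in text_vecs
    ]
    # Softmax over classes, expressed as percentages.
    return [100.0 * p for p in softmax(sims)]


# Toy, unit-length embeddings (assumed for illustration only).
image_vec = [1.0, 0.0]
text_vecs = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
cls_names = ["merlion", "giraffe", "fountain"]

percentages = zero_shot_percentages(image_vec, text_vecs, temp=0.07)

# Reverse both lists together, as the demo does before plotting.
bars = list(zip(cls_names[::-1], percentages[::-1]))
```

A smaller temperature sharpens the distribution toward the best-matching class; a larger one flattens it. In the diff, the CLIP branch gets the same effect by multiplying by `100.0` instead of dividing by a temperature.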

app/dataset_browser.py

ADDED


@@ -0,0 +1,240 @@
