finalizing

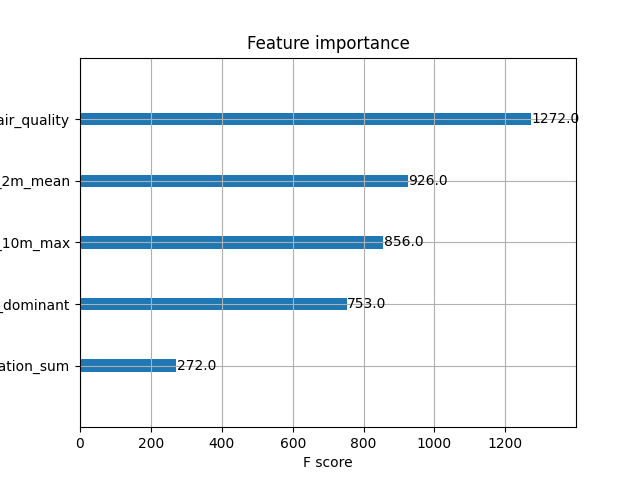

- air_quality_model/images/feature_importance.png +0 -0

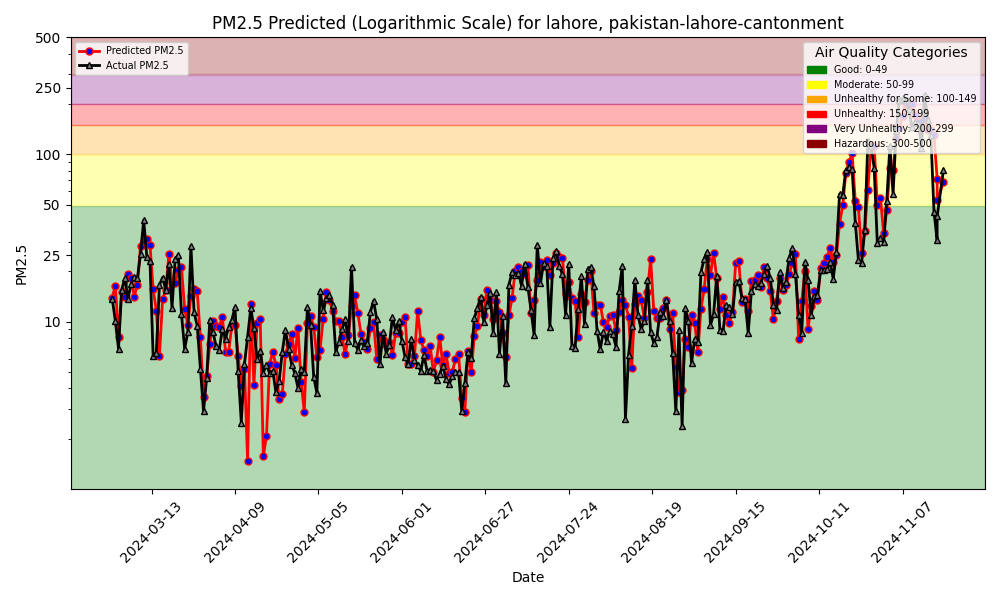

- air_quality_model/images/pm25_hindcast.png +0 -0

- air_quality_model/model.json +0 -0

- app_streamlit.py +12 -11

- backfill.ipynb +587 -0

- backfill.py +0 -62

- data/lahore.csv +0 -0

- debug.ipynb +64 -363

- functions/__pycache__/util.cpython-312.pyc +0 -0

- functions/{merge_df.py → retrieve.py} +4 -1

- functions/util.py +13 -9

- inference_pipeline.py +1 -95

- training.py +270 -0

air_quality_model/images/feature_importance.png

ADDED

|

air_quality_model/images/pm25_hindcast.png

ADDED

|

air_quality_model/model.json

ADDED

|

The diff for this file is too large to render.

|

|

|

app_streamlit.py

CHANGED

|

@@ -3,7 +3,7 @@ import pandas as pd

|

|

| 3 |

import numpy as np

|

| 4 |

import datetime

|

| 5 |

import hopsworks

|

| 6 |

-

from functions import figure,

|

| 7 |

import os

|

| 8 |

import pickle

|

| 9 |

import plotly.express as px

|

|

@@ -13,17 +13,18 @@ import os

|

|

| 13 |

|

| 14 |

|

| 15 |

# Real data

|

| 16 |

-

|

|

|

|

|

|

|

| 17 |

|

| 18 |

# Dummy data

|

| 19 |

-

size = 400

|

| 20 |

-

data = {

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

}

|

| 25 |

-

df = pd.DataFrame(data)

|

| 26 |

-

|

| 27 |

|

| 28 |

# Page configuration

|

| 29 |

|

|

@@ -42,5 +43,5 @@ st.subheader('Forecast and hindcast')

|

|

| 42 |

st.subheader('Unit: PM25 - particle matter of diameter < 2.5 micrometers')

|

| 43 |

|

| 44 |

# Plotting

|

| 45 |

-

fig = figure.plot(df)

|

| 46 |

st.plotly_chart(fig, use_container_width=True)

|

|

|

|

| 3 |

import numpy as np

|

| 4 |

import datetime

|

| 5 |

import hopsworks

|

| 6 |

+

from functions import figure, retrieve

|

| 7 |

import os

|

| 8 |

import pickle

|

| 9 |

import plotly.express as px

|

|

|

|

| 13 |

|

| 14 |

|

| 15 |

# Real data

|

| 16 |

+

today = datetime.datetime.today().strftime('%Y-%m-%d')

|

| 17 |

+

df = retrieve.get_merged_dataframe()

|

| 18 |

+

n = len(df[df['pm25'].isna()]) - 1

|

| 19 |

|

| 20 |

# Dummy data

|

| 21 |

+

# size = 400

|

| 22 |

+

# data = {

|

| 23 |

+

# 'date': pd.date_range(start='2023-01-01', periods=size, freq='D'),

|

| 24 |

+

# 'pm25': np.random.randint(50, 150, size=size),

|

| 25 |

+

# 'predicted_pm25': np.random.randint(50, 150, size=size)

|

| 26 |

+

# }

|

| 27 |

+

# df = pd.DataFrame(data)

|

|

|

|

| 28 |

|

| 29 |

# Page configuration

|

| 30 |

|

|

|

|

| 43 |

st.subheader('Unit: PM25 - particle matter of diameter < 2.5 micrometers')

|

| 44 |

|

| 45 |

# Plotting

|

| 46 |

+

fig = figure.plot(df, n=n)

|

| 47 |

st.plotly_chart(fig, use_container_width=True)

|
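
The app_streamlit.py change above derives `n` from the number of rows whose `pm25` value is missing and passes it to `figure.plot(df, n=n)`. The following is a minimal standard-library sketch of that counting step only. It assumes rows are plain dicts and that `None` stands in for NaN; both are illustrative assumptions, since the app itself works on the pandas DataFrame returned by `retrieve.get_merged_dataframe()`. The diff does not say why one is subtracted or how `figure.plot` uses `n`, so the sketch mirrors the arithmetic without interpreting it.

```python
import datetime


def count_missing_minus_one(rows):
    """Mirror n = len(df[df['pm25'].isna()]) - 1 from the diff.

    `rows` is a list of dicts with a 'pm25' key; None marks a missing value
    (a hypothetical stand-in for NaN in the real DataFrame).
    """
    missing = sum(1 for row in rows if row["pm25"] is None)
    return missing - 1


# Hypothetical data: two measured days followed by three days without pm25.
start = datetime.date(2024, 11, 18)
rows = [
    {"date": start + datetime.timedelta(days=i), "pm25": value}
    for i, value in enumerate([180.0, 195.0, None, None, None])
]

n = count_missing_minus_one(rows)

# With a plain `import datetime`, today() lives on the date/datetime classes,
# not on the module itself.
today = datetime.date.today().strftime("%Y-%m-%d")
```

With the hypothetical rows above, three values are missing, so `n` is 2.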

backfill.ipynb

ADDED

|

@@ -0,0 +1,587 @@


| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": 1,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [

|

| 8 |

+

{

|

| 9 |

+

"name": "stdout",

|

| 10 |

+

"output_type": "stream",

|

| 11 |

+

"text": [

|

| 12 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"Logged in to project, explore it here https://c.app.hopsworks.ai:443/p/1160340\n",

|

| 15 |

+

"2024-11-21 05:38:56,037 WARNING: using legacy validation callback\n",

|

| 16 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 17 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 18 |

+

"Deleted air_quality_fv/1\n",

|

| 19 |

+

"Deleted air_quality/1\n",

|

| 20 |

+

"Deleted weather/1\n",

|

| 21 |

+

"Deleted aq_predictions/1\n",

|

| 22 |

+

"Deleted model air_quality_xgboost_model/1\n",

|

| 23 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 24 |

+

"No SENSOR_LOCATION_JSON secret found\n"

|

| 25 |

+

]

|

| 26 |

+

}

|

| 27 |

+

],

|

| 28 |

+

"source": [

|

| 29 |

+

"import datetime\n",

|

| 30 |

+

"import requests\n",

|

| 31 |

+

"import pandas as pd\n",

|

| 32 |

+

"import hopsworks\n",

|

| 33 |

+

"import datetime\n",

|

| 34 |

+

"from pathlib import Path\n",

|

| 35 |

+

"from functions import util\n",

|

| 36 |

+

"import json\n",

|

| 37 |

+

"import re\n",

|

| 38 |

+

"import os\n",

|

| 39 |

+

"import warnings\n",

|

| 40 |

+

"warnings.filterwarnings(\"ignore\")\n",

|

| 41 |

+

"\n",

|

| 42 |

+

"AQI_API_KEY = os.getenv('AQI_API_KEY')\n",

|

| 43 |

+

"api_key = os.getenv('HOPSWORKS_API_KEY')\n",

|

| 44 |

+

"project_name = os.getenv('HOPSWORKS_PROJECT')\n",

|

| 45 |

+

"project = hopsworks.login(project=project_name, api_key_value=api_key)\n",

|

| 46 |

+

"util.purge_project(project)"

|

| 47 |

+

]

|

| 48 |

+

},

|

| 49 |

+

{

|

| 50 |

+

"cell_type": "code",

|

| 51 |

+

"execution_count": 2,

|

| 52 |

+

"metadata": {},

|

| 53 |

+

"outputs": [

|

| 54 |

+

{

|

| 55 |

+

"name": "stdout",

|

| 56 |

+

"output_type": "stream",

|

| 57 |

+

"text": [

|

| 58 |

+

"File successfully found at the path: data/lahore.csv\n"

|

| 59 |

+

]

|

| 60 |

+

}

|

| 61 |

+

],

|

| 62 |

+

"source": [

|

| 63 |

+

"country=\"pakistan\"\n",

|

| 64 |

+

"city = \"lahore\"\n",

|

| 65 |

+

"street = \"pakistan-lahore-cantonment\"\n",

|

| 66 |

+

"aqicn_url=\"https://api.waqi.info/feed/A74005\"\n",

|

| 67 |

+

"\n",

|

| 68 |

+

"latitude, longitude = util.get_city_coordinates(city)\n",

|

| 69 |

+

"today = datetime.date.today()\n",

|

| 70 |

+

"\n",

|

| 71 |

+

"csv_file=\"data/lahore.csv\"\n",

|

| 72 |

+

"util.check_file_path(csv_file)"

|

| 73 |

+

]

|

| 74 |

+

},

|

| 75 |

+

{

|

| 76 |

+

"cell_type": "code",

|

| 77 |

+

"execution_count": 3,

|

| 78 |

+

"metadata": {},

|

| 79 |

+

"outputs": [

|

| 80 |

+

{

|

| 81 |

+

"name": "stdout",

|

| 82 |

+

"output_type": "stream",

|

| 83 |

+

"text": [

|

| 84 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n"

|

| 85 |

+

]

|

| 86 |

+

}

|

| 87 |

+

],

|

| 88 |

+

"source": [

|

| 89 |

+

"secrets = util.secrets_api(project.name)\n",

|

| 90 |

+

"try:\n",

|

| 91 |

+

" secrets.create_secret(\"AQI_API_KEY\", AQI_API_KEY)\n",

|

| 92 |

+

"except hopsworks.RestAPIError:\n",

|

| 93 |

+

" AQI_API_KEY = secrets.get_secret(\"AQI_API_KEY\").value"

|

| 94 |

+

]

|

| 95 |

+

},

|

| 96 |

+

{

|

| 97 |

+

"cell_type": "code",

|

| 98 |

+

"execution_count": 4,

|

| 99 |

+

"metadata": {},

|

| 100 |

+

"outputs": [],

|

| 101 |

+

"source": [

|

| 102 |

+

"try:\n",

|

| 103 |

+

" aq_today_df = util.get_pm25(aqicn_url, country, city, street, today, AQI_API_KEY)\n",

|

| 104 |

+

"except hopsworks.RestAPIError:\n",

|

| 105 |

+

" print(\"It looks like the AQI_API_KEY doesn't work for your sensor. Is the API key correct? Is the sensor URL correct?\")\n"

|

| 106 |

+

]

|

| 107 |

+

},

|

| 108 |

+

{

|

| 109 |

+

"cell_type": "code",

|

| 110 |

+

"execution_count": null,

|

| 111 |

+

"metadata": {},

|

| 112 |

+

"outputs": [

|

| 113 |

+

{

|

| 114 |

+

"name": "stdout",

|

| 115 |

+

"output_type": "stream",

|

| 116 |

+

"text": [

|

| 117 |

+

"<class 'pandas.core.frame.DataFrame'>\n",

|

| 118 |

+

"RangeIndex: 1802 entries, 0 to 1801\n",

|

| 119 |

+

"Data columns (total 2 columns):\n",

|

| 120 |

+

" # Column Non-Null Count Dtype \n",

|

| 121 |

+

"--- ------ -------------- ----- \n",

|

| 122 |

+

" 0 date 1802 non-null object \n",

|

| 123 |

+

" 1 pm25 1802 non-null float32\n",

|

| 124 |

+

"dtypes: float32(1), object(1)\n",

|

| 125 |

+

"memory usage: 21.2+ KB\n"

|

| 126 |

+

]

|

| 127 |

+

},

|

| 128 |

+

{

|

| 129 |

+

"data": {

|

| 130 |

+

"text/plain": [

|

| 131 |

+

"'2019-12-09'"

|

| 132 |

+

]

|

| 133 |

+

},

|

| 134 |

+

"execution_count": 6,

|

| 135 |

+

"metadata": {},

|

| 136 |

+

"output_type": "execute_result"

|

| 137 |

+

}

|

| 138 |

+

],

|

| 139 |

+

"source": [

|

| 140 |

+

"aq_today_df.head()\n",

|

| 141 |

+

"\n",

|

| 142 |

+

"df = pd.read_csv(csv_file, parse_dates=['date'], skipinitialspace=True)\n",

|

| 143 |

+

"\n",

|

| 144 |

+

"# These commands will succeed if your CSV file didn't have a `median` or `timestamp` column\n",

|

| 145 |

+

"df = df.rename(columns={\"median\": \"pm25\"})\n",

|

| 146 |

+

"# df = df.rename(columns={\"timestamp\": \"date\"})\n",

|

| 147 |

+

"df['date'] = pd.to_datetime(df['date']).dt.date\n",

|

| 148 |

+

"\n",

|

| 149 |

+

"df_aq = df[['date', 'pm25']]\n",

|

| 150 |

+

"df_aq['pm25'] = df_aq['pm25'].astype('float32')\n",

|

| 151 |

+

"df_aq.info()\n",

|

| 152 |

+

"df_aq.dropna(inplace=True)\n",

|

| 153 |

+

"df_aq['country']=country\n",

|

| 154 |

+

"df_aq['city']=city\n",

|

| 155 |

+

"df_aq['street']=street\n",

|

| 156 |

+

"df_aq['url']=aqicn_url\n",

|

| 157 |

+

"df_aq\n",

|

| 158 |

+

"\n",

|

| 159 |

+

"df_aq =df_aq.set_index(\"date\")\n",

|

| 160 |

+

"df_aq['past_air_quality'] = df_aq['pm25'].rolling(3).mean()\n",

|

| 161 |

+

"df_aq[\"past_air_quality\"] = df_aq[\"past_air_quality\"].fillna(df_aq[\"past_air_quality\"].mean())\n",

|

| 162 |

+

"df_aq = df_aq.reset_index()\n",

|

| 163 |

+

"df_aq.date.describe()\n",

|

| 164 |

+

"\n",

|

| 165 |

+

"earliest_aq_date = pd.Series.min(df_aq['date'])\n",

|

| 166 |

+

"earliest_aq_date = earliest_aq_date.strftime('%Y-%m-%d')\n",

|

| 167 |

+

"earliest_aq_date"

|

| 168 |

+

]

|

| 169 |

+

},

|

| 170 |

+

{

|

| 171 |

+

"cell_type": "code",

|

| 172 |

+

"execution_count": null,

|

| 173 |

+

"metadata": {},

|

| 174 |

+

"outputs": [

|

| 175 |

+

{

|

| 176 |

+

"data": {

|

| 177 |

+

"text/plain": [

|

| 178 |

+

"datetime.date(2024, 11, 20)"

|

| 179 |

+

]

|

| 180 |

+

},

|

| 181 |

+

"execution_count": 8,

|

| 182 |

+

"metadata": {},

|

| 183 |

+

"output_type": "execute_result"

|

| 184 |

+

}

|

| 185 |

+

],

|

| 186 |

+

"source": [

|

| 187 |

+

"today"

|

| 188 |

+

]

|

| 189 |

+

},

|

| 190 |

+

{

|

| 191 |

+

"cell_type": "code",

|

| 192 |

+

"execution_count": null,

|

| 193 |

+

"metadata": {},

|

| 194 |

+

"outputs": [

|

| 195 |

+

{

|

| 196 |

+

"name": "stdout",

|

| 197 |

+

"output_type": "stream",

|

| 198 |

+

"text": [

|

| 199 |

+

"Coordinates 31.59929656982422°N 74.26347351074219°E\n",

|

| 200 |

+

"Elevation 215.0 m asl\n",

|

| 201 |

+

"Timezone None None\n",

|

| 202 |

+

"Timezone difference to GMT+0 0 s\n",

|

| 203 |

+

"<class 'pandas.core.frame.DataFrame'>\n",

|

| 204 |

+

"Index: 1807 entries, 0 to 1806\n",

|

| 205 |

+

"Data columns (total 6 columns):\n",

|

| 206 |

+

" # Column Non-Null Count Dtype \n",

|

| 207 |

+

"--- ------ -------------- ----- \n",

|

| 208 |

+

" 0 date 1807 non-null datetime64[ns]\n",

|

| 209 |

+

" 1 temperature_2m_mean 1807 non-null float32 \n",

|

| 210 |

+

" 2 precipitation_sum 1807 non-null float32 \n",

|

| 211 |

+

" 3 wind_speed_10m_max 1807 non-null float32 \n",

|

| 212 |

+

" 4 wind_direction_10m_dominant 1807 non-null float32 \n",

|

| 213 |

+

" 5 city 1807 non-null object \n",

|

| 214 |

+

"dtypes: datetime64[ns](1), float32(4), object(1)\n",

|

| 215 |

+

"memory usage: 70.6+ KB\n"

|

| 216 |

+

]

|

| 217 |

+

}

|

| 218 |

+

],

|

| 219 |

+

"source": [

|

| 220 |

+

"weather_df = util.get_historical_weather(city, earliest_aq_date, str(today - datetime.timedelta(days=1)), latitude, longitude)\n",

|

| 221 |

+

"# weather_df = util.get_historical_weather(city, earliest_aq_date, \"2024-11-05\", latitude, longitude)\n",

|

| 222 |

+

"weather_df.info()"

|

| 223 |

+

]

|

| 224 |

+

},

|

| 225 |

+

{

|

| 226 |

+

"cell_type": "code",

|

| 227 |

+

"execution_count": 10,

|

| 228 |

+

"metadata": {},

|

| 229 |

+

"outputs": [

|

| 230 |

+

{

|

| 231 |

+

"data": {

|

| 232 |

+

"text/plain": [

|

| 233 |

+

"{\"expectation_type\": \"expect_column_min_to_be_between\", \"kwargs\": {\"column\": \"pm25\", \"min_value\": -0.1, \"max_value\": 500.0, \"strict_min\": true}, \"meta\": {}}"

|

| 234 |

+

]

|

| 235 |

+

},

|

| 236 |

+

"execution_count": 10,

|

| 237 |

+

"metadata": {},

|

| 238 |

+

"output_type": "execute_result"

|

| 239 |

+

}

|

| 240 |

+

],

|

| 241 |

+

"source": [

|

| 242 |

+

"\n",

|

| 243 |

+

"import great_expectations as ge\n",

|

| 244 |

+

"aq_expectation_suite = ge.core.ExpectationSuite(\n",

|

| 245 |

+

" expectation_suite_name=\"aq_expectation_suite\"\n",

|

| 246 |

+

")\n",

|

| 247 |

+

"\n",

|

| 248 |

+

"aq_expectation_suite.add_expectation(\n",

|

| 249 |

+

" ge.core.ExpectationConfiguration(\n",

|

| 250 |

+

" expectation_type=\"expect_column_min_to_be_between\",\n",

|

| 251 |

+

" kwargs={\n",

|

| 252 |

+

" \"column\":\"pm25\",\n",

|

| 253 |

+

" \"min_value\":-0.1,\n",

|

| 254 |

+

" \"max_value\":500.0,\n",

|

| 255 |

+

" \"strict_min\":True\n",

|

| 256 |

+

" }\n",

|

| 257 |

+

" )\n",

|

| 258 |

+

")\n"

|

| 259 |

+

]

|

| 260 |

+

},

|

| 261 |

+

{

|

| 262 |

+

"cell_type": "code",

|

| 263 |

+

"execution_count": 11,

|

| 264 |

+

"metadata": {},

|

| 265 |

+

"outputs": [],

|

| 266 |

+

"source": [

|

| 267 |

+

"import great_expectations as ge\n",

|

| 268 |

+

"weather_expectation_suite = ge.core.ExpectationSuite(\n",

|

| 269 |

+

" expectation_suite_name=\"weather_expectation_suite\"\n",

|

| 270 |

+

")\n",

|

| 271 |

+

"\n",

|

| 272 |

+

"def expect_greater_than_zero(col):\n",

|

| 273 |

+

" weather_expectation_suite.add_expectation(\n",

|

| 274 |

+

" ge.core.ExpectationConfiguration(\n",

|

| 275 |

+

" expectation_type=\"expect_column_min_to_be_between\",\n",

|

| 276 |

+

" kwargs={\n",

|

| 277 |

+

" \"column\":col,\n",

|

| 278 |

+

" \"min_value\":-0.1,\n",

|

| 279 |

+

" \"max_value\":1000.0,\n",

|

| 280 |

+

" \"strict_min\":True\n",

|

| 281 |

+

" }\n",

|

| 282 |

+

" )\n",

|

| 283 |

+

" )\n",

|

| 284 |

+

"expect_greater_than_zero(\"precipitation_sum\")\n",

|

| 285 |

+

"expect_greater_than_zero(\"wind_speed_10m_max\")"

|

| 286 |

+

]

|

| 287 |

+

},

|

| 288 |

+

{

|

| 289 |

+

"cell_type": "code",

|

| 290 |

+

"execution_count": 12,

|

| 291 |

+

"metadata": {},

|

| 292 |

+

"outputs": [

|

| 293 |

+

{

|

| 294 |

+

"name": "stdout",

|

| 295 |

+

"output_type": "stream",

|

| 296 |

+

"text": [

|

| 297 |

+

"Connected. Call `.close()` to terminate connection gracefully.\n"

|

| 298 |

+

]

|

| 299 |

+

}

|

| 300 |

+

],

|

| 301 |

+

"source": [

|

| 302 |

+

"fs = project.get_feature_store() "

|

| 303 |

+

]

|

| 304 |

+

},

|

| 305 |

+

{

|

| 306 |

+

"cell_type": "code",

|

| 307 |

+

"execution_count": 13,

|

| 308 |

+

"metadata": {},

|

| 309 |

+

"outputs": [

|

| 310 |

+

{

|

| 311 |

+

"name": "stdout",

|

| 312 |

+

"output_type": "stream",

|

| 313 |

+

"text": [

|

| 314 |

+

"Secret created successfully, explore it at https://c.app.hopsworks.ai:443/account/secrets\n"

|

| 315 |

+

]

|

| 316 |

+

}

|

| 317 |

+

],

|

| 318 |

+

"source": [

|

| 319 |

+

"dict_obj = {\n",

|

| 320 |

+

" \"country\": country,\n",

|

| 321 |

+

" \"city\": city,\n",

|

| 322 |

+

" \"street\": street,\n",

|

| 323 |

+

" \"aqicn_url\": aqicn_url,\n",

|

| 324 |

+

" \"latitude\": latitude,\n",

|

| 325 |

+

" \"longitude\": longitude\n",

|

| 326 |

+

"}\n",

|

| 327 |

+

"\n",

|

| 328 |

+

"# Convert the dictionary to a JSON string\n",

|

| 329 |

+

"str_dict = json.dumps(dict_obj)\n",

|

| 330 |

+

"\n",

|

| 331 |

+

"try:\n",

|

| 332 |

+

" secrets.create_secret(\"SENSOR_LOCATION_JSON\", str_dict)\n",

|

| 333 |

+

"except hopsworks.RestAPIError:\n",

|

| 334 |

+

" print(\"SENSOR_LOCATION_JSON already exists. To update, delete the secret in the UI (https://c.app.hopsworks.ai/account/secrets) and re-run this cell.\")\n",

|

| 335 |

+

" existing_key = secrets.get_secret(\"SENSOR_LOCATION_JSON\").value\n",

|

| 336 |

+

" print(f\"{existing_key}\")\n"

|

| 337 |

+

]

|

| 338 |

+

},

|

| 339 |

+

{

|

| 340 |

+

"cell_type": "code",

|

| 341 |

+

"execution_count": 15,

|

| 342 |

+

"metadata": {},

|

| 343 |

+

"outputs": [],

|

| 344 |

+

"source": [

|

| 345 |

+

"air_quality_fg = fs.get_or_create_feature_group(\n",

|

| 346 |

+

" name='air_quality',\n",

|

| 347 |

+

" description='Air Quality characteristics of each day',\n",

|

| 348 |

+

" version=1,\n",

|

| 349 |

+

" primary_key=['city', 'street', 'date'],\n",

|

| 350 |

+

" event_time=\"date\",\n",

|

| 351 |

+

" expectation_suite=aq_expectation_suite\n",

|

| 352 |

+

")\n"

|

| 353 |

+

]

|

| 354 |

+

},

|

| 355 |

+

{

|

| 356 |

+

"cell_type": "code",

|

| 357 |

+

"execution_count": 16,

|

| 358 |

+

"metadata": {},

|

| 359 |

+

"outputs": [

|

| 360 |

+

{

|

| 361 |

+

"name": "stdout",

|

| 362 |

+

"output_type": "stream",

|

| 363 |

+

"text": [

|

| 364 |

+

"Feature Group created successfully, explore it at \n",

|

| 365 |

+

"https://c.app.hopsworks.ai:443/p/1160340/fs/1151043/fg/1362254\n",

|

| 366 |

+

"2024-11-21 05:44:54,527 INFO: \t1 expectation(s) included in expectation_suite.\n",

|

| 367 |

+

"Validation succeeded.\n",

|

| 368 |

+

"Validation Report saved successfully, explore a summary at https://c.app.hopsworks.ai:443/p/1160340/fs/1151043/fg/1362254\n"

|

| 369 |

+

]

|

| 370 |

+

},

|

| 371 |

+

{

|

| 372 |

+

"data": {

|

| 373 |

+

"application/vnd.jupyter.widget-view+json": {

|

| 374 |

+

"model_id": "506badbe42224a17b3ccc6d6b1ae7927",

|

| 375 |

+

"version_major": 2,

|

| 376 |

+

"version_minor": 0

|

| 377 |

+

},

|

| 378 |

+

"text/plain": [

|

| 379 |

+

"Uploading Dataframe: 0.00% | | Rows 0/1802 | Elapsed Time: 00:00 | Remaining Time: ?"

|

| 380 |

+

]

|

| 381 |

+

},

|

| 382 |

+

"metadata": {},

|

| 383 |

+

"output_type": "display_data"

|

| 384 |

+

},

|

| 385 |

+

{

|

| 386 |

+

"name": "stdout",

|

| 387 |

+

"output_type": "stream",

|

| 388 |

+

"text": [

|

| 389 |

+

"Launching job: air_quality_1_offline_fg_materialization\n",

|

| 390 |

+

"Job started successfully, you can follow the progress at \n",

|

| 391 |

+

"https://c.app.hopsworks.ai/p/1160340/jobs/named/air_quality_1_offline_fg_materialization/executions\n"

|

| 392 |

+

]

|

| 393 |

+

},

|

| 394 |

+

{

|

| 395 |

+

"data": {

|

| 396 |

+

"text/plain": [

|

| 397 |

+

"(<hsfs.core.job.Job at 0x74c9c7eb8c20>,\n",

|

| 398 |

+

" {\n",

|

| 399 |

+

" \"success\": true,\n",

|

| 400 |

+

" \"results\": [\n",

|

| 401 |

+

" {\n",

|

| 402 |

+

" \"success\": true,\n",

|

| 403 |

+

" \"expectation_config\": {\n",

|

| 404 |

+

" \"expectation_type\": \"expect_column_min_to_be_between\",\n",

|

| 405 |

+

" \"kwargs\": {\n",

|

| 406 |

+

" \"column\": \"pm25\",\n",

|

| 407 |

+

" \"min_value\": -0.1,\n",

|

| 408 |

+

" \"max_value\": 500.0,\n",

|

| 409 |

+

" \"strict_min\": true\n",

|

| 410 |

+

" },\n",

|

| 411 |

+

" \"meta\": {\n",

|

| 412 |

+

" \"expectationId\": 686087\n",

|

| 413 |

+

" }\n",

|

| 414 |

+

" },\n",

|

| 415 |

+

" \"result\": {\n",

|

| 416 |

+

" \"observed_value\": 1.9899998903274536,\n",

|

| 417 |

+

" \"element_count\": 1802,\n",

|

| 418 |

+

" \"missing_count\": null,\n",

|

| 419 |

+

" \"missing_percent\": null\n",

|

| 420 |

+

" },\n",

|

| 421 |

+

" \"meta\": {\n",

|

| 422 |

+

" \"ingestionResult\": \"INGESTED\",\n",

|

| 423 |

+

" \"validationTime\": \"2024-11-20T09:44:54.000525Z\"\n",

|

| 424 |

+

" },\n",

|

| 425 |

+

" \"exception_info\": {\n",

|

| 426 |

+

" \"raised_exception\": false,\n",

|

| 427 |

+

" \"exception_message\": null,\n",

|

| 428 |

+

" \"exception_traceback\": null\n",

|

| 429 |

+

" }\n",

|

| 430 |

+

" }\n",

|

| 431 |

+

" ],\n",

|

| 432 |

+

" \"evaluation_parameters\": {},\n",

|

| 433 |

+

" \"statistics\": {\n",

|

| 434 |

+

" \"evaluated_expectations\": 1,\n",

|

| 435 |

+

" \"successful_expectations\": 1,\n",

|

| 436 |

+

" \"unsuccessful_expectations\": 0,\n",

|

| 437 |

+

" \"success_percent\": 100.0\n",

|

| 438 |

+

" },\n",

|

| 439 |

+

" \"meta\": {\n",

|

| 440 |

+

" \"great_expectations_version\": \"0.18.12\",\n",

|

| 441 |

+

" \"expectation_suite_name\": \"aq_expectation_suite\",\n",

|

| 442 |

+

" \"run_id\": {\n",

|

| 443 |

+

" \"run_name\": null,\n",

|

| 444 |

+

" \"run_time\": \"2024-11-21T05:44:54.526004+08:00\"\n",

|

| 445 |

+

" },\n",

|

| 446 |

+

" \"batch_kwargs\": {\n",

|

| 447 |

+

" \"ge_batch_id\": \"adcf6d76-a788-11ef-a237-1091d10619ea\"\n",

|

| 448 |

+

" },\n",

|

| 449 |

+

" \"batch_markers\": {},\n",

|

| 450 |

+

" \"batch_parameters\": {},\n",

|

| 451 |

+

" \"validation_time\": \"20241120T214454.525505Z\",\n",

|

| 452 |

+

" \"expectation_suite_meta\": {\n",

|

| 453 |

+

" \"great_expectations_version\": \"0.18.12\"\n",

|

| 454 |

+

" }\n",

|

| 455 |

+

" }\n",

|

| 456 |

+

" })"

|

| 457 |

+

]

|

| 458 |

+

},

|

| 459 |

+

"execution_count": 16,

|

| 460 |

+

"metadata": {},

|

| 461 |

+

"output_type": "execute_result"

|

| 462 |

+

}

|

| 463 |

+

],

|

| 464 |

+

"source": [

|

| 465 |

+

"air_quality_fg.insert(df_aq)"

|

| 466 |

+

]

|

| 467 |

+

},

|

| 468 |

+

{

|

| 469 |

+

"cell_type": "code",

|

| 470 |

+

"execution_count": 17,

|

| 471 |

+

"metadata": {},

|

| 472 |

+

"outputs": [

|

| 473 |

+

{

|

| 474 |

+

"data": {

|

| 475 |

+

"text/plain": [

|

| 476 |

+

"<hsfs.feature_group.FeatureGroup at 0x74c9c7ed3d10>"

|

| 477 |

+

]

|

| 478 |

+

},

|

| 479 |

+

"execution_count": 17,

|

| 480 |

+

"metadata": {},

|

| 481 |

+

"output_type": "execute_result"

|

| 482 |

+

}

|

| 483 |

+

],

|

| 484 |

+

"source": [

|

| 485 |

+

"air_quality_fg.update_feature_description(\"date\", \"Date of measurement of air quality\")\n",

|

| 486 |

+

"air_quality_fg.update_feature_description(\"country\", \"Country where the air quality was measured (sometimes a city in aqicn.org)\")\n",

|

| 487 |

+

"air_quality_fg.update_feature_description(\"city\", \"City where the air quality was measured\")\n",

|

| 488 |

+

"air_quality_fg.update_feature_description(\"street\", \"Street in the city where the air quality was measured\")\n",

|

| 489 |

+

"air_quality_fg.update_feature_description(\"pm25\", \"Particles less than 2.5 micrometers in diameter (fine particles) pose health risk\")\n",

|

| 490 |

+

"air_quality_fg.update_feature_description(\"past_air_quality\", \"mean air quality of the past 3 days\")\n"

|

| 491 |

+

]

|

| 492 |

+

},

|

| 493 |

+

{

|

| 494 |

+

"cell_type": "code",

|

| 495 |

+

"execution_count": 18,

|

| 496 |

+

"metadata": {},

|

| 497 |

+

"outputs": [

|

| 498 |

+

{

|

| 499 |

+

"name": "stdout",

|

| 500 |

+

"output_type": "stream",

|

| 501 |

+

"text": [

|

| 502 |

+

"Feature Group created successfully, explore it at \n",

|

| 503 |

+

"https://c.app.hopsworks.ai:443/p/1160340/fs/1151043/fg/1362255\n",

|

| 504 |

+

"2024-11-21 05:56:51,769 INFO: \t2 expectation(s) included in expectation_suite.\n",

|

| 505 |

+

"Validation succeeded.\n",

|

| 506 |

+

"Validation Report saved successfully, explore a summary at https://c.app.hopsworks.ai:443/p/1160340/fs/1151043/fg/1362255\n"

|

| 507 |

+

]

|

| 508 |

+

},

|

| 509 |

+

{

|

| 510 |

+

"data": {

|

| 511 |

+

"application/vnd.jupyter.widget-view+json": {

|

| 512 |

+

"model_id": "455439f2dd8643b4b06da1d3851d2f8c",

|

| 513 |

+

"version_major": 2,

|

| 514 |

+

"version_minor": 0

|

| 515 |

+

},

|

| 516 |

+

"text/plain": [

|

| 517 |

+

"Uploading Dataframe: 0.00% | | Rows 0/1807 | Elapsed Time: 00:00 | Remaining Time: ?"

|

| 518 |

+

]

|

| 519 |

+

},

|

| 520 |

+

"metadata": {},

|

| 521 |

+

"output_type": "display_data"

|

| 522 |

+

},

|

| 523 |

+

{

|

| 524 |

+

"name": "stdout",

|

| 525 |

+

"output_type": "stream",

|

| 526 |

+

"text": [

|

| 527 |

+

"Launching job: weather_1_offline_fg_materialization\n",

|

| 528 |

+

"Job started successfully, you can follow the progress at \n",

|

| 529 |

+

"https://c.app.hopsworks.ai/p/1160340/jobs/named/weather_1_offline_fg_materialization/executions\n"

|

| 530 |

+

]

|

| 531 |

+

},

|

| 532 |

+

{

|

| 533 |

+

"data": {

|

| 534 |

+

"text/plain": [

|

| 535 |

+

"<hsfs.feature_group.FeatureGroup at 0x74c9c7ebaea0>"

|

| 536 |

+

]

|

| 537 |

+

},

|

| 538 |

+

"execution_count": 18,

|

| 539 |

+

"metadata": {},

|

| 540 |

+

"output_type": "execute_result"

|

| 541 |

+

}

|

| 542 |

+

],

|

| 543 |

+

"source": [

|

| 544 |

+

"weather_fg = fs.get_or_create_feature_group(\n",

|

| 545 |

+

" name='weather',\n",

|

| 546 |

+

" description='Weather characteristics of each day',\n",

|

| 547 |

+

" version=1,\n",

|

| 548 |

+

" primary_key=['city', 'date'],\n",

|

| 549 |

+

" event_time=\"date\",\n",

|

| 550 |

+

" expectation_suite=weather_expectation_suite\n",

|

| 551 |

+

") \n",

|

| 552 |

+

"\n",

|

| 553 |

+

"weather_fg.insert(weather_df)\n",

|

| 554 |

+

"\n",

|

| 555 |

+

"weather_fg.update_feature_description(\"date\", \"Date of measurement of weather\")\n",

|

| 556 |

+

"weather_fg.update_feature_description(\"city\", \"City where weather is measured/forecast for\")\n",

|

| 557 |

+

"weather_fg.update_feature_description(\"temperature_2m_mean\", \"Temperature in Celsius\")\n",

|

| 558 |

+

"weather_fg.update_feature_description(\"precipitation_sum\", \"Precipitation (rain/snow) in mm\")\n",

|

| 559 |

+

"weather_fg.update_feature_description(\"wind_speed_10m_max\", \"Wind speed at 10m above ground\")\n",

|

| 560 |

+

"weather_fg.update_feature_description(\"wind_direction_10m_dominant\", \"Dominant Wind direction over the day\")\n",

|

| 561 |

+

"\n",

|

| 562 |

+

"\n"

|

| 563 |

+

]

|

| 564 |

+

}

|

| 565 |

+

],

|

| 566 |

+

"metadata": {

|

| 567 |

+

"kernelspec": {

|

| 568 |

+

"display_name": ".venv",

|

| 569 |

+

"language": "python",

|

| 570 |

+

"name": "python3"

|

| 571 |

+

},

|

| 572 |

+

"language_info": {

|

| 573 |

+

"codemirror_mode": {

|

| 574 |

+

"name": "ipython",

|

| 575 |

+

"version": 3

|

| 576 |

+

},

|

| 577 |

+

"file_extension": ".py",

|

| 578 |

+

"mimetype": "text/x-python",

|

| 579 |

+

"name": "python",

|

| 580 |

+

"nbconvert_exporter": "python",

|

| 581 |

+

"pygments_lexer": "ipython3",

|

| 582 |

+

"version": "3.12.4"

|

| 583 |

+

}

|

| 584 |

+

},

|

| 585 |

+

"nbformat": 4,

|

| 586 |

+

"nbformat_minor": 2

|

| 587 |

+

}

|

backfill.py

DELETED

|

@@ -1,62 +0,0 @@

|

|

| 1 |

-

import datetime

|

| 2 |

-

import requests

|

| 3 |

-

import pandas as pd

|

| 4 |

-

import hopsworks

|

| 5 |

-

import datetime

|

| 6 |

-

from pathlib import Path

|

| 7 |

-

from functions import util

|

| 8 |

-

import json

|

| 9 |

-

import re

|

| 10 |

-

import os

|

| 11 |

-

import warnings

|

| 12 |

-

import pandas as pd

|

| 13 |

-

|

| 14 |

-

api_key = os.getenv('HOPSWORKS_API_KEY')

|

| 15 |

-

project_name = os.getenv('HOPSWORKS_PROJECT')

|

| 16 |

-

|

| 17 |

-

project = hopsworks.login(project=project_name, api_key_value=api_key)

|

| 18 |

-

fs = project.get_feature_store()

|

| 19 |

-

secrets = util.secrets_api(project.name)

|

| 20 |

-

|

| 21 |

-

AQI_API_KEY = secrets.get_secret("AQI_API_KEY").value

|

| 22 |

-

location_str = secrets.get_secret("SENSOR_LOCATION_JSON").value

|

| 23 |

-

location = json.loads(location_str)

|

| 24 |

-

|

| 25 |

-

country=location['country']

|

| 26 |

-

city=location['city']

|

| 27 |

-

street=location['street']

|

| 28 |

-

aqicn_url=location['aqicn_url']

|

| 29 |

-

latitude=location['latitude']

|

| 30 |

-

longitude=location['longitude']

|

| 31 |

-

|

| 32 |

-

today = datetime.date.today()

|

| 33 |

-

|

| 34 |

-

# Retrieve feature groups

|

| 35 |

-

air_quality_fg = fs.get_feature_group(

|

| 36 |

-

name='air_quality',

|

| 37 |

-

version=1,

|

| 38 |

-

)

|

| 39 |

-

weather_fg = fs.get_feature_group(

|

| 40 |

-

name='weather',

|

| 41 |

-

version=1,

|

| 42 |

-

)

|

| 43 |

-

|

| 44 |

-

aq_today_df = util.get_pm25(aqicn_url, country, city, street, today, AQI_API_KEY)

|

| 45 |

-

#aq_today_df = util.get_pm25(aqicn_url, country, city, street, "2024-11-15", AQI_API_KEY)

|

| 46 |

-

aq_today_df['date'] = pd.to_datetime(aq_today_df['date']).dt.date

|

| 47 |

-

aq_today_df

|

| 48 |

-

|

| 49 |

-

# Get weather forecast data

|

| 50 |

-

|

| 51 |

-

hourly_df = util.get_hourly_weather_forecast(city, latitude, longitude)

|

| 52 |

-

hourly_df = hourly_df.set_index('date')

|

| 53 |

-

|

| 54 |

-

# We will only make 1 daily prediction, so we will replace the hourly forecasts with a single daily forecast

|

| 55 |

-

# We only want the daily weather data, so only get weather at 12:00

|

| 56 |

-

daily_df = hourly_df.between_time('11:59', '12:01')

|

| 57 |

-

daily_df = daily_df.reset_index()

|

| 58 |

-

daily_df['date'] = pd.to_datetime(daily_df['date']).dt.date

|

| 59 |

-

daily_df['date'] = pd.to_datetime(daily_df['date'])

|

| 60 |

-

# daily_df['date'] = daily_df['date'].astype(str)

|

| 61 |

-

daily_df['city'] = city

|

| 62 |

-

daily_df

|
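Reviewer note: the deleted `backfill.py` reduced the hourly forecast to one row per day by keeping only the 12:00 observation and then resetting the timestamp to midnight. The sketch below reproduces that step on synthetic data so it can be checked in isolation. It is a minimal illustration, not the project's code: the `hourly_to_daily` helper, the `temperature_2m` column, and the generated timestamps are assumptions, and only `set_index`, `between_time`, and `reset_index` mirror the calls in the deleted file.

```python
import pandas as pd

# Synthetic stand-in for the hourly forecast: 48 hourly rows over two days.
# The column name "temperature_2m" is an assumption made for this example.
start = pd.Timestamp("2024-11-21")
hourly_df = pd.DataFrame({
    "date": [start + pd.Timedelta(hours=i) for i in range(48)],
    "temperature_2m": [float(i) for i in range(48)],
})


def hourly_to_daily(hourly: pd.DataFrame, city: str) -> pd.DataFrame:
    """Keep the 12:00 row of each day and set its timestamp to midnight.

    Hypothetical helper; it follows the same steps as the deleted script.
    """
    # between_time needs a DatetimeIndex, hence the set_index/reset_index pair.
    daily = hourly.set_index("date").between_time("11:59", "12:01").reset_index()
    # Drop the time-of-day but keep a datetime dtype (the script did this with
    # a round trip through .dt.date and pd.to_datetime).
    daily["date"] = daily["date"].dt.normalize()
    daily["city"] = city
    return daily


daily_df = hourly_to_daily(hourly_df, "lahore")
```

`dt.normalize()` is used here in place of the two-step conversion in the script; the intent is the same, a midnight timestamp that can serve as the `date` key alongside `city`.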


data/lahore.csv

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

debug.ipynb

CHANGED

|

@@ -2,7 +2,7 @@

|

|

| 2 |

"cells": [

|

| 3 |

{

|

| 4 |

"cell_type": "code",

|

| 5 |

-

"execution_count":

|

| 6 |

"metadata": {},

|

| 7 |

"outputs": [

|

| 8 |

{

|

|

@@ -10,266 +10,70 @@

|

|

| 10 |

"output_type": "stream",

|

| 11 |

"text": [

|

| 12 |

"Connection closed.\n",

|

| 13 |

-

"Connected. Call `.close()` to terminate connection gracefully.\n"

|

| 14 |

-

]

|

| 15 |

-

},

|

| 16 |

-

{

|

| 17 |

-

"name": "stdout",

|

| 18 |

-

"output_type": "stream",

|

| 19 |

-

"text": [

|

| 20 |

"\n",

|

| 21 |

"Logged in to project, explore it here https://c.app.hopsworks.ai:443/p/1160344\n",

|

| 22 |

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 23 |

-

"Connected. Call `.close()` to terminate connection gracefully.\n"

|


| 24 |

]

|

| 25 |

}

|

| 26 |

],

|

| 27 |

"source": [

|

| 28 |

-

"import

|

| 29 |

"import pandas as pd\n",

|

| 30 |

-

"

|

|

|

|

| 31 |

"import hopsworks\n",

|


| 32 |

"import json\n",

|

| 33 |

-

"from

|

| 34 |

"import os\n",

|

| 35 |

"\n",

|

| 36 |

-

"# Set up\n",

|

| 37 |

-

"\n",

|

| 38 |

-

"api_key = os.getenv('HOPSWORKS_API_KEY')\n",

|

| 39 |

-

"project_name = os.getenv('HOPSWORKS_PROJECT')\n",

|

| 40 |

-

"project = hopsworks.login(project=project_name, api_key_value=api_key)\n",

|

| 41 |

-

"fs = project.get_feature_store() \n",

|

| 42 |

-

"secrets = util.secrets_api(project.name)\n",

|

| 43 |

-

"location_str = secrets.get_secret(\"SENSOR_LOCATION_JSON\").value\n",

|

| 44 |

-

"location = json.loads(location_str)\n",

|

| 45 |

-

"country=location['country']\n",

|

| 46 |

-

"city=location['city']\n",

|

| 47 |

-

"street=location['street']\n",

|

| 48 |

-

"\n",

|

| 49 |

-

"AQI_API_KEY = secrets.get_secret(\"AQI_API_KEY\").value\n",

|

| 50 |

-

"location_str = secrets.get_secret(\"SENSOR_LOCATION_JSON\").value\n",

|

| 51 |

-

"location = json.loads(location_str)\n",

|

| 52 |

"\n",

|

| 53 |

-

"

|


| 54 |

]

|

| 55 |

},

|

| 56 |

{

|

| 57 |

"cell_type": "code",

|

| 58 |

-

"execution_count":

|

| 59 |

"metadata": {},

|

| 60 |

"outputs": [

|

| 61 |

{

|

| 62 |

"name": "stdout",

|

| 63 |

"output_type": "stream",

|

| 64 |

"text": [

|

|

|

|

| 65 |

"Connected. Call `.close()` to terminate connection gracefully.\n",

|

| 66 |

-

"

|

| 67 |

-

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

" version=1,\n",

|

| 78 |

-

")\n",

|

| 79 |

-

"\n",

|

| 80 |

-

"saved_model_dir = retrieved_model.download()\n",

|

| 81 |

-

"retrieved_xgboost_model = XGBRegressor()\n",

|

| 82 |

-

"retrieved_xgboost_model.load_model(saved_model_dir + \"/model.json\")\n",

|

| 83 |

-

"\n",

|

| 84 |

-

"### Retrieve features \n",

|

| 85 |

-

"\n",

|

| 86 |

-

"weather_fg = fs.get_feature_group(\n",

|

| 87 |

-

" name='weather',\n",

|

| 88 |

-

" version=1,\n",

|

| 89 |

-

")\n",

|

| 90 |

-

"\n",

|

| 91 |

-

"today_timestamp = pd.to_datetime(today)\n",

|

| 92 |

-

"batch_data = weather_fg.filter(weather_fg.date >= today_timestamp ).read().sort_values(by=['date'])"

|

| 93 |

-

]

|

| 94 |

-

},

|

| 95 |

-

{

|

| 96 |

-

"cell_type": "code",

|

| 97 |

-

"execution_count": 7,

|

| 98 |

-

"metadata": {},

|

| 99 |

-

"outputs": [

|

| 100 |

-

{

|

| 101 |

-

"data": {

|

| 102 |

-

"text/html": [

|

| 103 |

-

"<div>\n",

|

| 104 |

-

"<style scoped>\n",

|

| 105 |

-

" .dataframe tbody tr th:only-of-type {\n",

|

| 106 |

-

" vertical-align: middle;\n",

|

| 107 |

-

" }\n",

|

| 108 |

-

"\n",

|

| 109 |

-

" .dataframe tbody tr th {\n",

|

| 110 |

-

" vertical-align: top;\n",

|

| 111 |

-

" }\n",

|

| 112 |

-

"\n",

|

| 113 |

-

" .dataframe thead th {\n",

|

| 114 |

-

" text-align: right;\n",

|

| 115 |

-

" }\n",

|

| 116 |

-

"</style>\n",

|

| 117 |

-

"<table border=\"1\" class=\"dataframe\">\n",

|

| 118 |

-

" <thead>\n",

|

| 119 |

-

" <tr style=\"text-align: right;\">\n",

|

| 120 |

-

" <th></th>\n",

|

| 121 |

-

" <th>date</th>\n",

|

| 122 |

-

" <th>temperature_2m_mean</th>\n",

|

| 123 |

-

" <th>precipitation_sum</th>\n",

|

| 124 |

-

" <th>wind_speed_10m_max</th>\n",

|

| 125 |

-

" <th>wind_direction_10m_dominant</th>\n",

|

| 126 |

-

" <th>city</th>\n",

|

| 127 |

-

" </tr>\n",

|

| 128 |

-

" </thead>\n",

|

| 129 |

-

" <tbody>\n",

|

| 130 |

-

" <tr>\n",

|

| 131 |

-

" <th>1</th>\n",

|

| 132 |

-

" <td>2024-11-21 00:00:00+00:00</td>\n",

|

| 133 |

-

" <td>21.700001</td>\n",

|

| 134 |

-

" <td>0.0</td>\n",

|

| 135 |

-

" <td>1.138420</td>\n",

|

| 136 |

-

" <td>71.564964</td>\n",

|

| 137 |

-

" <td>lahore</td>\n",

|

| 138 |

-

" </tr>\n",

|

| 139 |

-

" <tr>\n",

|

| 140 |

-

" <th>4</th>\n",

|

| 141 |

-

" <td>2024-11-22 00:00:00+00:00</td>\n",

|

| 142 |

-

" <td>21.850000</td>\n",

|

| 143 |

-

" <td>0.0</td>\n",

|

| 144 |

-

" <td>4.610250</td>\n",

|

| 145 |

-

" <td>128.659836</td>\n",

|

| 146 |

-

" <td>lahore</td>\n",

|

| 147 |

-

" </tr>\n",

|

| 148 |

-

" <tr>\n",

|

| 149 |

-

" <th>7</th>\n",

|

| 150 |

-

" <td>2024-11-23 00:00:00+00:00</td>\n",

|

| 151 |

-

" <td>22.250000</td>\n",

|

| 152 |

-

" <td>0.0</td>\n",

|

| 153 |

-

" <td>5.091168</td>\n",

|

| 154 |

-

" <td>44.999897</td>\n",

|

| 155 |

-

" <td>lahore</td>\n",

|

| 156 |

-

" </tr>\n",

|

| 157 |

-

" <tr>\n",

|

| 158 |

-

" <th>6</th>\n",

|

| 159 |

-

" <td>2024-11-24 00:00:00+00:00</td>\n",

|

| 160 |

-

" <td>21.400000</td>\n",

|

| 161 |

-

" <td>0.0</td>\n",

|

| 162 |

-

" <td>4.334974</td>\n",

|

| 163 |

-

" <td>318.366547</td>\n",

|

| 164 |

-

" <td>lahore</td>\n",

|

| 165 |

-

" </tr>\n",

|

| 166 |

-

" <tr>\n",

|

| 167 |

-

" <th>5</th>\n",

|

| 168 |

-

" <td>2024-11-25 00:00:00+00:00</td>\n",

|

| 169 |

-

" <td>20.750000</td>\n",

|

| 170 |

-

" <td>0.0</td>\n",

|

| 171 |

-

" <td>6.439876</td>\n",

|

| 172 |

-

" <td>296.564972</td>\n",

|

| 173 |

-

" <td>lahore</td>\n",

|

| 174 |

-

" </tr>\n",

|

| 175 |

-

" <tr>\n",

|

| 176 |

-

" <th>2</th>\n",

|

| 177 |

-

" <td>2024-11-26 00:00:00+00:00</td>\n",

|

| 178 |

-

" <td>20.750000</td>\n",

|

| 179 |

-

" <td>0.0</td>\n",

|

| 180 |

-

" <td>4.680000</td>\n",

|

| 181 |

-

" <td>270.000000</td>\n",

|

| 182 |

-

" <td>lahore</td>\n",

|

| 183 |

-

" </tr>\n",

|

| 184 |

-

" <tr>\n",

|

| 185 |

-

" <th>0</th>\n",

|

| 186 |

-

" <td>2024-11-27 00:00:00+00:00</td>\n",

|

| 187 |

-

" <td>20.350000</td>\n",

|

| 188 |

-

" <td>0.0</td>\n",

|

| 189 |

-

" <td>4.104631</td>\n",

|

| 190 |

-

" <td>37.875053</td>\n",

|

| 191 |

-

" <td>lahore</td>\n",

|

| 192 |

-

" </tr>\n",

|

| 193 |

-

" <tr>\n",

|

| 194 |

-

" <th>3</th>\n",

|

| 195 |

-

" <td>2024-11-28 00:00:00+00:00</td>\n",

|

| 196 |

-

" <td>19.799999</td>\n",

|

| 197 |

-

" <td>0.0</td>\n",

|

| 198 |

-

" <td>2.189795</td>\n",

|

| 199 |

-

" <td>9.462248</td>\n",

|

| 200 |

-

" <td>lahore</td>\n",

|

| 201 |

-

" </tr>\n",

|

| 202 |

-

" </tbody>\n",

|

| 203 |

-

"</table>\n",

|

| 204 |

-

"</div>"

|

| 205 |

-

],

|

| 206 |

-

"text/plain": [

|

| 207 |

-

" date temperature_2m_mean precipitation_sum \\\n",

|

| 208 |

-

"1 2024-11-21 00:00:00+00:00 21.700001 0.0 \n",

|

| 209 |

-

"4 2024-11-22 00:00:00+00:00 21.850000 0.0 \n",

|

| 210 |

-

"7 2024-11-23 00:00:00+00:00 22.250000 0.0 \n",

|

| 211 |

-

"6 2024-11-24 00:00:00+00:00 21.400000 0.0 \n",

|

| 212 |

-

"5 2024-11-25 00:00:00+00:00 20.750000 0.0 \n",

|

| 213 |

-

"2 2024-11-26 00:00:00+00:00 20.750000 0.0 \n",

|

| 214 |

-

"0 2024-11-27 00:00:00+00:00 20.350000 0.0 \n",

|

| 215 |

-

"3 2024-11-28 00:00:00+00:00 19.799999 0.0 \n",

|

| 216 |

-

"\n",

|

| 217 |

-

" wind_speed_10m_max wind_direction_10m_dominant city \n",

|

| 218 |

-

"1 1.138420 71.564964 lahore \n",

|

| 219 |

-

"4 4.610250 128.659836 lahore \n",

|

| 220 |

-

"7 5.091168 44.999897 lahore \n",

|

| 221 |

-

"6 4.334974 318.366547 lahore \n",

|

| 222 |

-

"5 6.439876 296.564972 lahore \n",

|

| 223 |

-

"2 4.680000 270.000000 lahore \n",

|

| 224 |

-

"0 4.104631 37.875053 lahore \n",

|

| 225 |

-

"3 2.189795 9.462248 lahore "

|

| 226 |

-

]

|

| 227 |

-

},

|

| 228 |

-

"execution_count": 7,

|

| 229 |

-

"metadata": {},

|

| 230 |

-

"output_type": "execute_result"

|

| 231 |

-

}

|

| 232 |

-

],

|

| 233 |

-

"source": [

|

| 234 |

-

"batch_data"

|

| 235 |

-

]

|

| 236 |

-

},

|

| 237 |

-

{

|

| 238 |

-

"cell_type": "code",

|

| 239 |

-

"execution_count": 6,

|

| 240 |

-

"metadata": {},

|

| 241 |

-

"outputs": [

|

| 242 |

-

{

|

| 243 |

-

"ename": "ValueError",

|

| 244 |

-

"evalue": "feature_names mismatch: ['past_air_quality', 'temperature_2m_mean', 'precipitation_sum', 'wind_speed_10m_max', 'wind_direction_10m_dominant'] ['temperature_2m_mean', 'precipitation_sum', 'wind_speed_10m_max', 'wind_direction_10m_dominant']\nexpected past_air_quality in input data",

|

| 245 |

-

"output_type": "error",

|

| 246 |

-

"traceback": [

|

| 247 |

-

"\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

|

| 248 |

-

"\u001b[0;31mValueError\u001b[0m Traceback (most recent call last)",

|

| 249 |

-