Runtime error

app

This view is limited to 50 files because it contains too many changes.

- Audio_Classifier.py +26 -0

- README.md +5 -5

- helper.py +281 -0

- img/bestmodelacc.png +0 -0

- img/bestmodelcm.png +0 -0

- img/bestmodelloss.png +0 -0

- model/bestmodel.h5 +3 -0

- pages/.ipynb_checkpoints/Classify by file upload-checkpoint.py +27 -0

- pages/.ipynb_checkpoints/Classify by recordings-checkpoint.py +27 -0

- pages/Classify by file upload.py +27 -0

- pages/Classify by recordings.py +27 -0

- requirements(old).txt +14 -0

- requirements.txt +16 -0

- st_audiorec/.DS_Store +0 -0

- st_audiorec/.ipynb_checkpoints/__init__-checkpoint.py +1 -0

- st_audiorec/__init__.py +1 -0

- st_audiorec/frontend/.DS_Store +0 -0

- st_audiorec/frontend/.env +6 -0

- st_audiorec/frontend/.prettierrc +5 -0

- st_audiorec/frontend/build/.DS_Store +0 -0

- st_audiorec/frontend/build/asset-manifest.json +22 -0

- st_audiorec/frontend/build/bootstrap.min.css +0 -0

- st_audiorec/frontend/build/index.html +1 -0

- st_audiorec/frontend/build/precache-manifest.4829c060d313d0b0d13d9af3b0180289.js +26 -0

- st_audiorec/frontend/build/service-worker.js +39 -0

- st_audiorec/frontend/build/static/css/2.bfbf028b.chunk.css +2 -0

- st_audiorec/frontend/build/static/css/2.bfbf028b.chunk.css.map +1 -0

- st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js +0 -0

- st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js.LICENSE.txt +58 -0

- st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js.map +0 -0

- st_audiorec/frontend/build/static/js/main.833ba252.chunk.js +2 -0

- st_audiorec/frontend/build/static/js/main.833ba252.chunk.js.map +1 -0

- st_audiorec/frontend/build/static/js/runtime-main.11ec9aca.js +2 -0

- st_audiorec/frontend/build/static/js/runtime-main.11ec9aca.js.map +1 -0

- st_audiorec/frontend/build/styles.css +59 -0

- st_audiorec/frontend/node_modules/.DS_Store +0 -0

- st_audiorec/frontend/node_modules/.bin/acorn +1 -0

- st_audiorec/frontend/node_modules/.bin/ansi-html +1 -0

- st_audiorec/frontend/node_modules/.bin/arrow2csv +1 -0

- st_audiorec/frontend/node_modules/.bin/atob +1 -0

- st_audiorec/frontend/node_modules/.bin/autoprefixer +1 -0

- st_audiorec/frontend/node_modules/.bin/babylon +1 -0

- st_audiorec/frontend/node_modules/.bin/browserslist +1 -0

- st_audiorec/frontend/node_modules/.bin/css-blank-pseudo +1 -0

- st_audiorec/frontend/node_modules/.bin/css-has-pseudo +1 -0

- st_audiorec/frontend/node_modules/.bin/css-prefers-color-scheme +1 -0

- st_audiorec/frontend/node_modules/.bin/cssesc +1 -0

- st_audiorec/frontend/node_modules/.bin/detect +1 -0

- st_audiorec/frontend/node_modules/.bin/detect-port +1 -0

- st_audiorec/frontend/node_modules/.bin/errno +1 -0

Audio_Classifier.py

ADDED

@@ -0,0 +1,26 @@

import streamlit as st
from streamlit_chat import message
from helper import model, upload, record

title = "Emotion Audio Classifier"
st.title(title)

st.subheader('This web app lets users classify the emotion expressed in an audio file.')

def add_bg_from_url():
    st.markdown(
        f"""
        <style>
        .stApp {{
            background-image: url("https://img.freepik.com/free-vector/white-grid-line-pattern-gray-background_53876-99015.jpg?w=996&t=st=1667979021~exp=1667979621~hmac=25b2066b6c3abd08a038e86ad9579fe48db944b57f853bd8ee2a00a98f752d7b");
            background-attachment: fixed;
            background-size: cover;
        }}
        </style>
        """,
        unsafe_allow_html=True
    )

add_bg_from_url()

model()
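
`add_bg_from_url()` builds its CSS inside an f-string. The literal braces of the `.stApp { ... }` rule therefore have to be doubled (`{{` and `}}`), while `{...}` placeholders are substituted. The sketch below shows what the doubled braces render to. It assumes a hypothetical `background_css(url)` helper that returns the `<style>` block instead of calling `st.markdown` directly, and the URL is a placeholder:

```python
def background_css(url: str) -> str:
    """Hypothetical helper: return the <style> block used by add_bg_from_url()."""
    # {{ and }} are escaped braces in an f-string; {url} is substituted.
    return f"""
<style>
.stApp {{
    background-image: url("{url}");
    background-attachment: fixed;
    background-size: cover;
}}
</style>
"""


css = background_css("https://example.com/bg.jpg")
print(css)
```

In the app, the returned string would be passed to `st.markdown(css, unsafe_allow_html=True)`. Keeping the CSS in one function avoids repeating the same block in every page script.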

README.md

CHANGED

@@ -1,11 +1,11 @@
 ---
-title: Audio
-emoji:
-colorFrom:
-colorTo:
+title: Emotion Audio Classifier
+emoji: ⚡
+colorFrom: blue
+colorTo: gray
 sdk: streamlit
 sdk_version: 1.10.0
-app_file:
+app_file: Audio_Classifier.py
 pinned: false
 license: mit
 ---
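
Spaces start the Streamlit app from the file named in the README's `app_file` field. A value that does not match a committed file likely keeps the Space from starting: for example, `Audio Classifier.py` with a space, versus the committed `Audio_Classifier.py`. Below is a small pre-deploy check. It assumes the front matter uses simple `key: value` lines between `---` markers and does not use a YAML library. `read_front_matter` and `check_app_file` are hypothetical names:

```python
from pathlib import Path


def read_front_matter(readme_text: str) -> dict:
    """Parse simple `key: value` lines between the leading '---' markers."""
    lines = readme_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def check_app_file(repo_dir: str) -> bool:
    """Return True if README.md's app_file names an existing file in repo_dir."""
    repo = Path(repo_dir)
    meta = read_front_matter((repo / "README.md").read_text(encoding="utf-8"))
    app_file = meta.get("app_file", "")
    return bool(app_file) and (repo / app_file).is_file()
```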

helper.py

ADDED

|

@@ -0,0 +1,281 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_chat import message

|

| 3 |

+

from keras.models import load_model

|

| 4 |

+

import os

|

| 5 |

+

import streamlit as st

|

| 6 |

+

import numpy as np

|

| 7 |

+

import pandas as pd

|

| 8 |

+

from sklearn.preprocessing import normalize

|

| 9 |

+

import matplotlib.pyplot as plt

|

| 10 |

+

import librosa

|

| 11 |

+

import librosa.display

|

| 12 |

+

import IPython.display as ipd

|

| 13 |

+

import streamlit.components.v1 as components

|

| 14 |

+

from io import BytesIO

|

| 15 |

+

from scipy.io.wavfile import read, write

|

| 16 |

+

from googlesearch import search

|

| 17 |

+

import requests

|

| 18 |

+

from bs4 import BeautifulSoup

|

| 19 |

+

|

| 20 |

+

classifier = load_model('./model/bestmodel.h5')

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def prepare_test(file):

|

| 24 |

+

max_size=350

|

| 25 |

+

features=[]

|

| 26 |

+

data_ori, sample_rate = librosa.load(file)

|

| 27 |

+

data, _ = librosa.effects.trim(data_ori)

|

| 28 |

+

|

| 29 |

+

spec_bw = padding(librosa.feature.spectral_bandwidth(y = data, sr= sample_rate), 20, max_size).astype('float32')

|

| 30 |

+

cent = padding(librosa.feature.spectral_centroid(y = data, sr=sample_rate), 20, max_size).astype('float32')

|

| 31 |

+

mfcc = librosa.feature.mfcc(y=data, sr=sample_rate,n_mfcc=20)

|

| 32 |

+

mfccs = padding(normalize(mfcc, axis=1), 20, max_size).astype('float32')

|

| 33 |

+

rms = padding(librosa.feature.rms(y = data),20, max_size).astype('float32')

|

| 34 |

+

y = librosa.effects.harmonic(data)

|

| 35 |

+

tonnetz = padding(librosa.feature.tonnetz(y=y, sr=sample_rate,fmin=75),20, max_size).astype('float32')

|

| 36 |

+

image = padding(librosa.feature.chroma_cens(y = data, sr=sample_rate,fmin=75), 20, max_size).astype('float32')

|

| 37 |

+

|

| 38 |

+

image=np.dstack((image,spec_bw))

|

| 39 |

+

image=np.dstack((image,cent))

|

| 40 |

+

image=np.dstack((image,mfccs))

|

| 41 |

+

image=np.dstack((image,rms))

|

| 42 |

+

image=np.dstack((image,tonnetz))

|

| 43 |

+

features.append(image[np.newaxis,...])

|

| 44 |

+

output = np.concatenate(features,axis=0)

|

| 45 |

+

return output

|

| 46 |

+

|

| 47 |

+

def padding(array, xx, yy):

|

| 48 |

+

h = array.shape[0]

|

| 49 |

+

w = array.shape[1]

|

| 50 |

+

a = max((xx - h) // 2,0)

|

| 51 |

+

aa = max(0,xx - a - h)

|

| 52 |

+

b = max(0,(yy - w) // 2)

|

| 53 |

+

bb = max(yy - b - w,0)

|

| 54 |

+

return np.pad(array, pad_width=((a, aa), (b, bb)), mode='constant')

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

def model():

|

| 58 |

+

select = st.selectbox('Please select one to find out more about the model',

|

| 59 |

+

('Choose','Model Training History', 'Confusion Matrix', 'Train-Test Scores'))

|

| 60 |

+

if select == 'Model Training History':

|

| 61 |

+

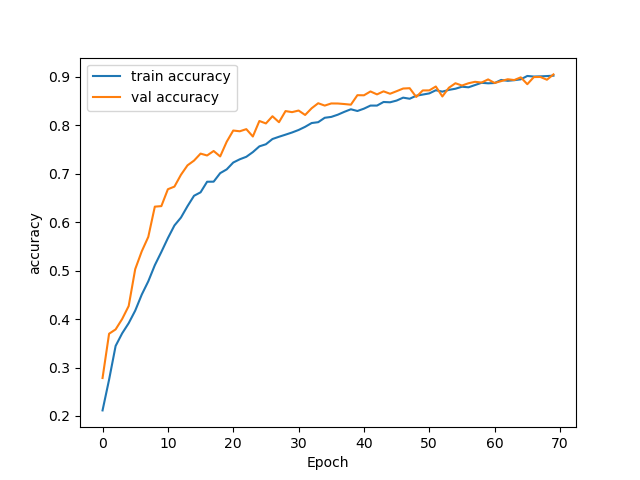

st.header('Train-Val Accuracy History')

|

| 62 |

+

st.write('Train and Validation accuracy scores are comparable this indicates that the Model is moderately trained.')

|

| 63 |

+

st.image('./img/bestmodelacc.png')

|

| 64 |

+

|

| 65 |

+

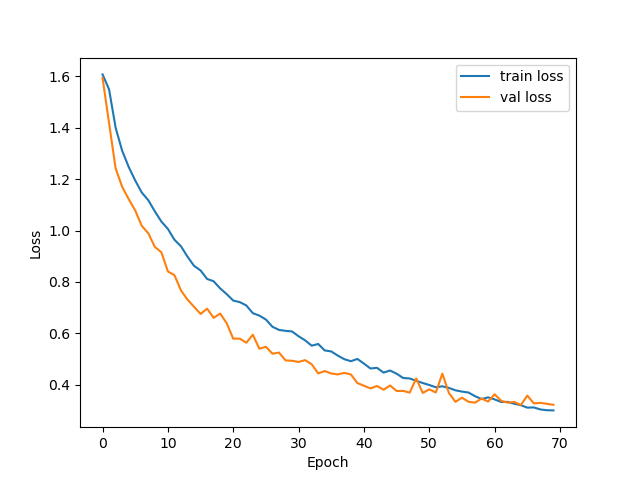

st.header('Train-Val Loss History')

|

| 66 |

+

st.write('The training was stopped with EarlyStopping() when the Validation loss score starts to saturate.')

|

| 67 |

+

st.image('./img/bestmodelloss.png')

|

| 68 |

+

|

| 69 |

+

if select == 'Confusion Matrix':

|

| 70 |

+

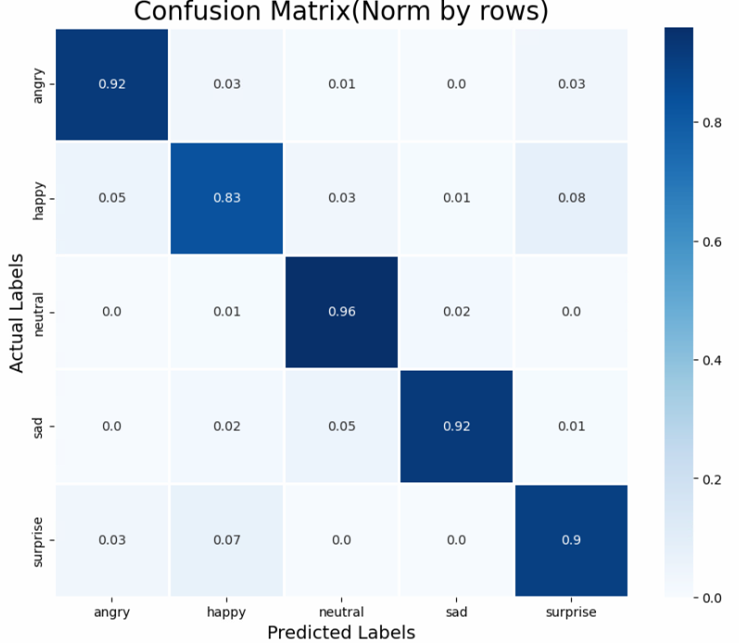

st.header('Confusion Matrix')

|

| 71 |

+

st.write('Below is the Confusion Matrix for this Model normalized by rows indicating Recall scores which are over 80%.')

|

| 72 |

+

st.image('./img/bestmodelcm.png')

|

| 73 |

+

|

| 74 |

+

if select == 'Train-Test Scores':

|

| 75 |

+

st.header('Train-Test Accuracy scores')

|

| 76 |

+

st.write('Train accuracy score is 94% and Test accuracy score is 90%. There is a 4% difference indicating that the model fitted moderately')

|

| 77 |

+

acc = [0.943, 0.908]

|

| 78 |

+

loss = [0.177, 0.304]

|

| 79 |

+

traintest_df = pd.DataFrame(list(zip(acc,loss)),columns=['Accuracy', 'loss'],index =['Train','Test'])

|

| 80 |

+

st.dataframe(traintest_df.style.format("{:.3}"))

|

| 81 |

+

|

| 82 |

+

st.header('Model Precision, Recall, F1-score & MCC')

|

| 83 |

+

st.write('Macro avg F1 score is 91%.')

|

| 84 |

+

st.write('Matthew’s correlation coefficient: 0.885')

|

| 85 |

+

prec = [0.92, 0.86, 0.91, 0.97, 0.88,0.91]

|

| 86 |

+

re = [0.92, 0.83, 0.96, 0.92, 0.90, 0.91]

|

| 87 |

+

f1 = [0.92, 0.85, 0.94, 0.94, 0.89, 0.91]

|

| 88 |

+

traintest_df2 = pd.DataFrame(list(zip(prec,re,f1)),columns=['Precision', 'Recall', 'F1-score'],index =['angry', 'happy', 'neutral', 'sad', 'surprise','macro avg'])

|

| 89 |

+

st.dataframe(traintest_df2.style.format("{:.3}"))

|

| 90 |

+

|

| 91 |

+

def plot_features(data,sample_rate):

|

| 92 |

+

fig1, ax1 = plt.subplots(figsize=(6, 2))

|

| 93 |

+

img = librosa.display.waveshow(y = data, sr=sample_rate, x_axis="time")

|

| 94 |

+

ax1.set(title = 'Sample Waveform')

|

| 95 |

+

st.pyplot(plt.gcf())

|

| 96 |

+

|

| 97 |

+

fig2, ax2 = plt.subplots(figsize=(6, 2))

|

| 98 |

+

cens = librosa.feature.chroma_cens(y = data, sr=sample_rate,fmin=75)

|

| 99 |

+

img_cens = librosa.display.specshow(cens, y_axis = 'chroma', x_axis='time', ax=ax2)

|

| 100 |

+

ax2.set(title = 'Chroma_CENS')

|

| 101 |

+

st.pyplot(plt.gcf())

|

| 102 |

+

|

| 103 |

+

fig3, ax3 = plt.subplots(figsize=(6, 2))

|

| 104 |

+

spec_bw = librosa.feature.spectral_bandwidth(y = data, sr= sample_rate)

|

| 105 |

+

cent = librosa.feature.spectral_centroid(y = data, sr=sample_rate)

|

| 106 |

+

times = librosa.times_like(spec_bw)

|

| 107 |

+

S, phase = librosa.magphase(librosa.stft(y=data))

|

| 108 |

+

librosa.display.specshow(librosa.amplitude_to_db(S, ref=np.max), y_axis='log', x_axis='time', ax=ax3)

|

| 109 |

+

ax3.fill_between(times, np.maximum(0, cent[0] - spec_bw[0]),

|

| 110 |

+

np.minimum(cent[0] + spec_bw[0], sample_rate/2),

|

| 111 |

+

alpha=0.5, label='Centroid +- bandwidth')

|

| 112 |

+

ax3.plot(times, cent[0], label='Spectral centroid', color='w')

|

| 113 |

+

ax3.legend(loc='lower right')

|

| 114 |

+

ax3.set(title='log Power spectrogram')

|

| 115 |

+

st.pyplot(plt.gcf())

|

| 116 |

+

|

| 117 |

+

fig4, ax4 = plt.subplots(figsize=(6, 2))

|

| 118 |

+

mfcc = librosa.feature.mfcc(y=data, sr=sample_rate,n_mfcc=40)

|

| 119 |

+

mfccs = normalize(mfcc, axis=1)

|

| 120 |

+

img_mfcc = librosa.display.specshow(mfccs, y_axis = 'mel', x_axis='time', ax=ax4)

|

| 121 |

+

ax4.set(title = 'Sample Mel-Frequency Cepstral Coefficients')

|

| 122 |

+

st.pyplot(plt.gcf())

|

| 123 |

+

|

| 124 |

+

fig5, ax5 = plt.subplots(figsize=(6, 2))

|

| 125 |

+

rms = librosa.feature.rms(y=data)

|

| 126 |

+

times = librosa.times_like(rms)

|

| 127 |

+

ax5.semilogy(times, rms[0], label='RMS Energy')

|

| 128 |

+

ax5.set_title(f'RMS Energy')

|

| 129 |

+

ax5.set(xticks=[])

|

| 130 |

+

ax5.legend()

|

| 131 |

+

ax5.label_outer()

|

| 132 |

+

st.pyplot(plt.gcf())

|

| 133 |

+

|

| 134 |

+

fig6, ax6= plt.subplots(figsize=(6, 2))

|

| 135 |

+

y = librosa.effects.harmonic(data)

|

| 136 |

+

tonnetz = librosa.feature.tonnetz(y=y, sr=sample_rate,fmin=75)

|

| 137 |

+

img_tonnetz = librosa.display.specshow(tonnetz,

|

| 138 |

+

y_axis='tonnetz', x_axis='time', ax=ax6)

|

| 139 |

+

ax6.set(title=f'Tonal Centroids(Tonnetz)')

|

| 140 |

+

ax6.label_outer()

|

| 141 |

+

fig6.colorbar(img_tonnetz, ax=ax6)

|

| 142 |

+

st.pyplot(plt.gcf())

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

|

| 146 |

+

def find_definition(emo):

|

| 147 |

+

word_to_search = emo

|

| 148 |

+

scrape_url = 'https://www.oxfordlearnersdictionaries.com/definition/english/' + word_to_search

|

| 149 |

+

|

| 150 |

+

headers = {"User-Agent": "mekmek"}

|

| 151 |

+

web_response = requests.get(scrape_url, headers=headers)

|

| 152 |

+

|

| 153 |

+

if web_response.status_code == 200:

|

| 154 |

+

soup = BeautifulSoup(web_response.text, 'html.parser')

|

| 155 |

+

|

| 156 |

+

try:

|

| 157 |

+

for sense in soup.find_all('li', class_='sense'):

|

| 158 |

+

definition = sense.find('span', class_='def').text

|

| 159 |

+

for example in soup.find_all('ul', class_='examples'):

|

| 160 |

+

example_1 = example.text.split('.')[0:1]

|

| 161 |

+

except AttributeError:

|

| 162 |

+

print('Word not found!!')

|

| 163 |

+

else:

|

| 164 |

+

print('Failed to get response...')

|

| 165 |

+

return definition, example_1

|

| 166 |

+

|

| 167 |

+

def get_search_results(emo):

|

| 168 |

+

results_lis =[]

|

| 169 |

+

results = search(f"Understanding {emo}", num_results=1)

|

| 170 |

+

for result in results:

|

| 171 |

+

results_lis.append(result)

|

| 172 |

+

result_1 = results_lis[0]

|

| 173 |

+

result_2 = results_lis[1]

|

| 174 |

+

result_3 = results_lis[2]

|

| 175 |

+

return result_1, result_2, result_3

|

| 176 |

+

|

| 177 |

+

def get_content(emo):

|

| 178 |

+

definition, example_1 = find_definition(emo)

|

| 179 |

+

result_1, result_2, result_3 = get_search_results(emo)

|

| 180 |

+

|

| 181 |

+

|

| 182 |

+

with st.expander(f"Word Definition of {emo.capitalize()}"):

|

| 183 |

+

st.write(definition.capitalize()+'.')

|

| 184 |

+

with st.expander(f'Example of {emo.capitalize()}'):

|

| 185 |

+

with st.container():

|

| 186 |

+

st.write(f'1) {example_1[0].capitalize()}'+'.')

|

| 187 |

+

|

| 188 |

+

with st.expander(f'The following links will help you understand more on {emo.capitalize()}'):

|

| 189 |

+

with st.container():

|

| 190 |

+

st.write(f"Check out this link ➡ {result_1}")

|

| 191 |

+

st.write(f"Check out this link ➡ {result_2}")

|

| 192 |

+

st.write(f"Check out this link ➡ {result_3}")

|

| 193 |

+

|

| 194 |

+

if emo == 'anger':

|

| 195 |

+

with st.expander(f'Video on {emo.capitalize()}'):

|

| 196 |

+

with st.container():

|

| 197 |

+

st.video('https://www.youtube.com/watch?v=weMeIh10cLs')

|

| 198 |

+

if emo in 'happy':

|

| 199 |

+

with st.expander(f'Video on {emo.capitalize()}'):

|

| 200 |

+

with st.container():

|

| 201 |

+

st.video('https://www.youtube.com/watch?v=FDF2DidUAyY')

|

| 202 |

+

if emo in 'sad':

|

| 203 |

+

with st.expander(f'Video on {emo.capitalize()}'):

|

| 204 |

+

with st.container():

|

| 205 |

+

st.video('https://www.youtube.com/watch?v=34rqQEkuhK4')

|

| 206 |

+

if emo in 'suprise':

|

| 207 |

+

with st.expander(f'Video on {emo.capitalize()}'):

|

| 208 |

+

with st.container():

|

| 209 |

+

st.video('https://www.youtube.com/watch?v=UYoBi0EssLE')

|

| 210 |

+

|

| 211 |

+

|

| 212 |

+

def upload():

|

| 213 |

+

upload_file = st.sidebar.file_uploader('Upload an audio .wav file. Currently max 8 seconds', type=".wav", accept_multiple_files = False)

|

| 214 |

+

|

| 215 |

+

if upload_file:

|

| 216 |

+

st.write('Sample Audio')

|

| 217 |

+

st.audio(upload_file, format='audio/wav')

|

| 218 |

+

|

| 219 |

+

if st.sidebar.button('Show Features'):

|

| 220 |

+

with st.spinner(f'Showing....'):

|

| 221 |

+

data_ori, sample_rate = librosa.load(upload_file)

|

| 222 |

+

data, _ = librosa.effects.trim(data_ori)

|

| 223 |

+

|

| 224 |

+

plot_features(data,sample_rate)

|

| 225 |

+

st.sidebar.success("Completed")

|

| 226 |

+

|

| 227 |

+

if st.sidebar.button('Classify'):

|

| 228 |

+

with st.spinner(f'Classifying....'):

|

| 229 |

+

test = prepare_test(upload_file)

|

| 230 |

+

pred = classifier.predict(test)

|

| 231 |

+

pred_df = pd.DataFrame(pred.T,index=['anger', 'happy','neutral','sad','suprise'],columns =['Scores'])

|

| 232 |

+

emo = pred_df[pred_df['Scores'] == pred_df.max().values[0]].index[0]

|

| 233 |

+

st.info(f'The predicted Emotion: {emo.upper()}')

|

| 234 |

+

st.sidebar.success("Classification completed")

|

| 235 |

+

|

| 236 |

+

if emo:

|

| 237 |

+

get_content(emo)

|

| 238 |

+

|

| 239 |

+

def record():

|

| 240 |

+

with st.spinner(f'Recording....'):

|

| 241 |

+

st.sidebar.write('To start press Start Recording and stop to finish recording')

|

| 242 |

+

parent_dir = os.path.dirname(os.path.abspath(__file__))

|

| 243 |

+

build_dir = os.path.join(parent_dir, "st_audiorec/frontend/build")

|

| 244 |

+

st_audiorec = components.declare_component("st_audiorec", path=build_dir)

|

| 245 |

+

val = st_audiorec()

|

| 246 |

+

|

| 247 |

+

|

| 248 |

+

if isinstance(val, dict):

|

| 249 |

+

st.sidebar.success("Audio Recorded")

|

| 250 |

+

ind, val = zip(*val['arr'].items())

|

| 251 |

+

ind = np.array(ind, dtype=int)

|

| 252 |

+

val = np.array(val)

|

| 253 |

+

sorted_ints = val[ind]

|

| 254 |

+

stream = BytesIO(b"".join([int(v).to_bytes(1, "big") for v in sorted_ints]))

|

| 255 |

+

wav_bytes = stream.read()

|

| 256 |

+

rate, data = read(BytesIO(wav_bytes))

|

| 257 |

+

reversed_data = data[::-1]

|

| 258 |

+

bytes_wav = bytes()

|

| 259 |

+

byte_io = BytesIO(bytes_wav)

|

| 260 |

+

write(byte_io, rate, reversed_data)

|

| 261 |

+

|

| 262 |

+

if st.sidebar.button('Show Features'):

|

| 263 |

+

with st.spinner(f'Showing....'):

|

| 264 |

+

data_ori, sample_rate = librosa.load(byte_io)

|

| 265 |

+

data, _ = librosa.effects.trim(data_ori)

|

| 266 |

+

|

| 267 |

+

plot_features(data,sample_rate)

|

| 268 |

+

st.sidebar.success("Completed")

|

| 269 |

+

|

| 270 |

+

if st.sidebar.button('Classify'):

|

| 271 |

+

with st.spinner(f'Classifying....'):

|

| 272 |

+

test = prepare_test(byte_io)

|

| 273 |

+

pred = classifier.predict(test)

|

| 274 |

+

pred_df = pd.DataFrame(pred.T,index=['anger', 'happy','neutral','sad','suprise'],columns =['Scores'])

|

| 275 |

+

emo = pred_df[pred_df['Scores'] == pred_df.max().values[0]].index[0]

|

| 276 |

+

st.info(f'The predicted Emotion: {emo.upper()}')

|

| 277 |

+

st.sidebar.success("Classification completed")

|

| 278 |

+

|

| 279 |

+

if emo:

|

| 280 |

+

get_content(emo)
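
`prepare_test()` sizes every feature map to `max_size=350` frames, and that limit is where the upload prompt's "max 8 seconds" comes from. The back-of-the-envelope sketch below converts between clip duration and frame count. It assumes librosa's defaults: `sr=22050` from `librosa.load`, and `hop_length=512` with centered frames, so a signal of n samples yields `1 + n // 512` frames. The CQT-based chroma and tonnetz features may differ by a frame or so. `frames_for` and `max_seconds` are hypothetical names:

```python
SR = 22050        # librosa.load default sample rate (assumed)
HOP_LENGTH = 512  # default hop length of librosa's spectral features (assumed)
MAX_FRAMES = 350  # max_size used in prepare_test()


def frames_for(seconds: float, sr: int = SR, hop: int = HOP_LENGTH) -> int:
    """Number of centered analysis frames for a clip of the given duration."""
    n_samples = int(seconds * sr)
    return 1 + n_samples // hop


def max_seconds(max_frames: int = MAX_FRAMES, sr: int = SR, hop: int = HOP_LENGTH) -> float:
    """Longest clip (in seconds) that still fits in max_frames frames."""
    # 1 + n // hop <= max_frames  <=>  n <= max_frames * hop - 1
    return (max_frames * hop - 1) / sr


print(frames_for(8.0))          # 345
print(round(max_seconds(), 2))  # 8.13
```

The model expects exactly 350 frames, so clips longer than about 8.1 s have to be cropped or rejected before prediction.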

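
The `padding()` helper centers each feature map in a fixed `(xx, yy)` array of zeros, splitting the padding as evenly as possible on both sides. The NumPy sketch below shows the same idea. It crops first, so inputs larger than the target also come out at exactly the target shape; the name `pad_or_crop` is hypothetical. It then shows the resulting shapes for a 12-row chroma map and for the 6-channel stack built in `prepare_test()`:

```python
import numpy as np


def pad_or_crop(array: np.ndarray, rows: int, cols: int) -> np.ndarray:
    """Center `array` in a zero array of shape (rows, cols), cropping any excess."""
    array = array[:rows, :cols]          # crop if larger than the target
    h, w = array.shape
    top = (rows - h) // 2                # split the padding as evenly as possible
    left = (cols - w) // 2
    return np.pad(array, ((top, rows - h - top), (left, cols - w - left)), mode="constant")


chroma = np.ones((12, 200), dtype=np.float32)   # e.g. 12 chroma bins x 200 frames
padded = pad_or_crop(chroma, 20, 350)
print(padded.shape)                             # (20, 350)

# Six (20, 350) maps stacked as channels, plus a batch axis, as in prepare_test()
stack = np.dstack([padded] * 6)[np.newaxis, ...]
print(stack.shape)                              # (1, 20, 350, 6)
```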

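
The "Train-Test Scores" view hard-codes per-class precision, recall and F1, plus a "macro avg" row. A macro average is the unweighted mean over classes, so the last row can be checked against the five class rows. The quick check below uses only the numbers listed in `model()`:

```python
from statistics import mean

# Per-class values for angry, happy, neutral, sad, surprise (from model())
precision = [0.92, 0.86, 0.91, 0.97, 0.88]
recall = [0.92, 0.83, 0.96, 0.92, 0.90]
f1 = [0.92, 0.85, 0.94, 0.94, 0.89]

# Macro average: every class counts equally, regardless of how many samples it has
macro = {name: round(mean(values), 2)
         for name, values in [("precision", precision), ("recall", recall), ("f1", f1)]}
print(macro)  # {'precision': 0.91, 'recall': 0.91, 'f1': 0.91}

# Train/test accuracy gap quoted in the same view, in percentage points
train_acc, test_acc = 0.943, 0.908
print(round((train_acc - test_acc) * 100, 1))  # 3.5
```

All three averages round to the 0.91 reported in the "macro avg" row. The train-test accuracy gap is 3.5 percentage points.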

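
`find_definition()` has to pick one `<span class="def">` from the dictionary page and cope with pages where none exists. The app uses BeautifulSoup for this. To stay dependency-free, the sketch below swaps in the standard library's `html.parser.HTMLParser` and runs on a made-up HTML snippet. The class names come from the scraper, not from any documented Oxford Learner's Dictionaries format. The sketch takes the first definition, including text of nested spans, and returns `None` instead of raising when nothing matches:

```python
from html.parser import HTMLParser
from typing import List, Optional


class FirstDefinitionParser(HTMLParser):
    """Collect the text of the first <span class="def"> element in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.definition: Optional[str] = None
        self._depth = 0                 # > 0 while inside the target span
        self._parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "span":
            return
        if self._depth:
            self._depth += 1            # a span nested inside the definition
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.definition is None and "def" in classes:
            self._depth = 1

    def handle_endtag(self, tag):
        if tag == "span" and self._depth:
            self._depth -= 1
            if self._depth == 0:
                self.definition = "".join(self._parts).strip()

    def handle_data(self, data):
        if self._depth:
            self._parts.append(data)


def first_definition(html: str) -> Optional[str]:
    """Return the first definition text, or None when the page has none."""
    parser = FirstDefinitionParser()
    parser.feed(html)
    parser.close()
    return parser.definition


sample = ('<ol><li class="sense"><span class="def">a strong feeling of '
          '<span class="x">annoyance</span></span></li>'
          '<li class="sense"><span class="def">second sense</span></li></ol>')
print(first_definition(sample))             # a strong feeling of annoyance
print(first_definition("<p>no match</p>"))  # None
```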

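
`record()` receives the recording from the `st_audiorec` component as a mapping from byte index to byte value. It rebuilds the WAV bytes from that mapping and then hands an in-memory buffer to a decoder. The sketch below reproduces that round trip with the standard library. It uses `wave` instead of `scipy.io.wavfile` and a synthetic one-second tone in place of a real recording; all names are illustrative. It shows ordering the bytes by their integer index and rewinding the buffer with `seek(0)` before reading it back:

```python
import io
import math
import struct
import wave


def make_wav(seconds: float = 1.0, rate: int = 8000, freq: float = 440.0) -> bytes:
    """Build a mono 16-bit WAV file in memory containing a sine tone."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        n = int(seconds * rate)
        w.writeframes(b"".join(
            struct.pack("<h", int(10000 * math.sin(2 * math.pi * freq * i / rate)))
            for i in range(n)))
    return buf.getvalue()


wav_bytes = make_wav()

# Simulate the component's payload {"0": byte0, "1": byte1, ...} arriving out of order
payload = {str(i): b for i, b in reversed(list(enumerate(wav_bytes)))}

# Rebuild the byte string in index order
rebuilt = bytes(payload[k] for k in sorted(payload, key=int))

# Write into a buffer, rewind, then read it back
buf = io.BytesIO()
buf.write(rebuilt)
buf.seek(0)  # without this the reader would start at the end of the buffer
with wave.open(buf, "rb") as r:
    print(r.getframerate(), r.getnframes())  # 8000 8000
```

Sorting by the integer key works whatever order the entries arrive in. Indexing the value array with the key array, as `val[ind]` does in `record()`, puts `val[ind[i]]` at position i. That equals the ordered bytes only when the keys are already ascending or form a permutation that is its own inverse, such as a full reversal.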

img/bestmodelacc.png

ADDED


img/bestmodelcm.png

ADDED
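
`model()` describes `bestmodelcm.png` as a confusion matrix normalized by rows, so its diagonal holds per-class recall. The small NumPy sketch below shows that normalization. It uses made-up counts for three classes, not the model's actual numbers:

```python
import numpy as np

# Made-up counts for three classes: rows = true class, columns = predicted class
counts = np.array([
    [45, 3, 2],
    [4, 40, 6],
    [1, 2, 47],
])

# Row normalization: divide each row by the number of true samples in that class
row_normalized = counts / counts.sum(axis=1, keepdims=True)

# The diagonal is then each class's recall (correct / all true samples of that class)
recall = np.diag(row_normalized)
print(recall.round(2).tolist())  # [0.9, 0.8, 0.94]
```

Normalizing by columns instead would put each class's precision on the diagonal.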


img/bestmodelloss.png

ADDED
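
`model()` says training was stopped with Keras's `EarlyStopping()` callback once the validation loss stopped improving. The callback's settings (monitored metric, `patience`, `min_delta`) are not part of this commit. The rule itself can be sketched in plain Python. The sketch assumes `val_loss` is monitored with a hypothetical `patience` of 3 epochs, and the loss values are made up:

```python
from typing import Sequence


def stop_epoch(val_losses: Sequence[float], patience: int = 3, min_delta: float = 0.0) -> int:
    """Return the 0-based epoch after which training would stop.

    Training stops once `patience` consecutive epochs fail to improve on the
    best validation loss by more than `min_delta`.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses) - 1


losses = [0.90, 0.62, 0.48, 0.41, 0.40, 0.42, 0.41, 0.43, 0.44]
print(stop_epoch(losses))  # 7
```

In Keras the equivalent callback would be configured with something like `EarlyStopping(monitor='val_loss', patience=3)`. The values actually used for this model are not recorded here.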


model/bestmodel.h5

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:b3a036e70d32322fee44116c4103c95c18ee4bd9219075cb8673743d290d25cf
size 29266612
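
`model/bestmodel.h5` is committed as a Git LFS pointer rather than as the weights themselves. The pointer is three `key value` lines giving the spec version, the SHA-256 object id and the size in bytes. If a checkout ever contains only this pointer, `load_model()` would be handed a small text file instead of an HDF5 file. The minimal sketch below detects that case and parses the pointer; the helper names are hypothetical:

```python
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"  # HDF5 format signature at the start of a typical .h5 file


def is_lfs_pointer(data: bytes) -> bool:
    """True if the bytes look like a Git LFS pointer rather than real content."""
    return data.startswith(b"version https://git-lfs.github.com/spec/")


def parse_lfs_pointer(text: str) -> dict:
    """Parse the `key value` lines of a Git LFS pointer file."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, _, digest = fields["oid"].partition(":")
    return {"version": fields["version"], "oid": (algo, digest), "size": int(fields["size"])}


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:b3a036e70d32322fee44116c4103c95c18ee4bd9219075cb8673743d290d25cf
size 29266612
"""

info = parse_lfs_pointer(pointer)
print(is_lfs_pointer(pointer.encode()), is_lfs_pointer(HDF5_SIGNATURE))  # True False
print(info["oid"][0], info["size"])                                      # sha256 29266612
print(round(info["size"] / 2**20, 1), "MiB")                             # 27.9 MiB
```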

pages/.ipynb_checkpoints/Classify by file upload-checkpoint.py

ADDED

@@ -0,0 +1,27 @@

import streamlit as st
from streamlit_chat import message
from helper import model, upload, record

title = "Emotion Audio Classifier with uploaded file"
st.title(title)

st.write('Click Show Features to see the extracted features used for prediction.')
st.write('Click Classify to get predictions.')

def add_bg_from_url():
    st.markdown(
        f"""
        <style>
        .stApp {{
            background-image: url("https://img.freepik.com/free-vector/white-grid-line-pattern-gray-background_53876-99015.jpg?w=996&t=st=1667979021~exp=1667979621~hmac=25b2066b6c3abd08a038e86ad9579fe48db944b57f853bd8ee2a00a98f752d7b");
            background-attachment: fixed;
            background-size: cover;
        }}
        </style>
        """,
        unsafe_allow_html=True
    )

add_bg_from_url()

upload()

pages/.ipynb_checkpoints/Classify by recordings-checkpoint.py

ADDED

@@ -0,0 +1,27 @@

import streamlit as st
from streamlit_chat import message
from helper import model, upload, record

title = "Emotion Audio Classifier with recordings"
st.title(title)

st.write('Click Show Features to see the extracted features used for prediction.')
st.write('Click Classify to get predictions.')

def add_bg_from_url():
    st.markdown(
        f"""
        <style>
        .stApp {{
            background-image: url("https://img.freepik.com/free-vector/white-grid-line-pattern-gray-background_53876-99015.jpg?w=996&t=st=1667979021~exp=1667979621~hmac=25b2066b6c3abd08a038e86ad9579fe48db944b57f853bd8ee2a00a98f752d7b");
            background-attachment: fixed;
            background-size: cover;
        }}
        </style>
        """,
        unsafe_allow_html=True
    )

add_bg_from_url()

record()

pages/Classify by file upload.py

ADDED

@@ -0,0 +1,27 @@

import streamlit as st
from streamlit_chat import message
from helper import model, upload, record

title = "Emotion Audio Classifier with uploaded file"
st.title(title)

st.write('Click Show Features to see the extracted features used for prediction.')
st.write('Click Classify to get predictions.')

def add_bg_from_url():
    st.markdown(
        f"""
        <style>
        .stApp {{
            background-image: url("https://img.freepik.com/free-vector/white-grid-line-pattern-gray-background_53876-99015.jpg?w=996&t=st=1667979021~exp=1667979621~hmac=25b2066b6c3abd08a038e86ad9579fe48db944b57f853bd8ee2a00a98f752d7b");
            background-attachment: fixed;
            background-size: cover;
        }}
        </style>
        """,
        unsafe_allow_html=True
    )

add_bg_from_url()

upload()

pages/Classify by recordings.py

ADDED

@@ -0,0 +1,27 @@

import streamlit as st
from streamlit_chat import message
from helper import model, upload, record

title = "Emotion Audio Classifier with recordings"
st.title(title)

st.write('Click Show Features to see the extracted features used for prediction.')
st.write('Click Classify to get predictions.')

def add_bg_from_url():
    st.markdown(
        f"""
        <style>
        .stApp {{
            background-image: url("https://img.freepik.com/free-vector/white-grid-line-pattern-gray-background_53876-99015.jpg?w=996&t=st=1667979021~exp=1667979621~hmac=25b2066b6c3abd08a038e86ad9579fe48db944b57f853bd8ee2a00a98f752d7b");
            background-attachment: fixed;
            background-size: cover;
        }}
        </style>
        """,
        unsafe_allow_html=True
    )

add_bg_from_url()

record()

requirements(old).txt

ADDED

@@ -0,0 +1,14 @@

googlesearch_python==1.1.0
ipython==8.6.0
tensorflow==2.10.0
keras==2.10.0
librosa==0.9.2
matplotlib==3.6.0
numpy==1.22.1
pandas==1.4.4
scikit_learn==1.1.3
soundfile==0.11.0
scipy==1.7.3
streamlit==1.14.0
streamlit_chat==0.0.2.1
helper==2.5.0

requirements.txt

ADDED

@@ -0,0 +1,16 @@

beautifulsoup4==4.9.3
googlesearch_python==1.1.0
ipython==8.6.0
keras==2.10.0
tensorflow==2.10.0
librosa==0.9.2
matplotlib==3.6.0
numpy==1.22.1
pandas==1.4.4
requests==2.25.1
scikit_learn==1.1.3
soundfile==0.11.0
scipy==1.7.3
streamlit==1.14.0
streamlit_chat==0.0.2.1

st_audiorec/.DS_Store

ADDED

Binary file (6.15 kB).

st_audiorec/.ipynb_checkpoints/__init__-checkpoint.py

ADDED

@@ -0,0 +1 @@

st_audiorec/__init__.py

ADDED

@@ -0,0 +1 @@

st_audiorec/frontend/.DS_Store

ADDED

Binary file (8.2 kB).

st_audiorec/frontend/.env

ADDED

@@ -0,0 +1,6 @@

# Run the component's dev server on :3001
# (The Streamlit dev server already runs on :3000)
PORT=3001

# Don't automatically open the web browser on `npm run start`.
BROWSER=none

st_audiorec/frontend/.prettierrc

ADDED

@@ -0,0 +1,5 @@

{
  "endOfLine": "lf",
  "semi": false,
  "trailingComma": "es5"
}

st_audiorec/frontend/build/.DS_Store

ADDED

Binary file (6.15 kB).

st_audiorec/frontend/build/asset-manifest.json

ADDED

@@ -0,0 +1,22 @@

{
  "files": {
    "main.js": "./static/js/main.833ba252.chunk.js",
    "main.js.map": "./static/js/main.833ba252.chunk.js.map",
    "runtime-main.js": "./static/js/runtime-main.11ec9aca.js",
    "runtime-main.js.map": "./static/js/runtime-main.11ec9aca.js.map",
    "static/css/2.bfbf028b.chunk.css": "./static/css/2.bfbf028b.chunk.css",
    "static/js/2.270b84d8.chunk.js": "./static/js/2.270b84d8.chunk.js",
    "static/js/2.270b84d8.chunk.js.map": "./static/js/2.270b84d8.chunk.js.map",
    "index.html": "./index.html",
    "precache-manifest.4829c060d313d0b0d13d9af3b0180289.js": "./precache-manifest.4829c060d313d0b0d13d9af3b0180289.js",
    "service-worker.js": "./service-worker.js",
    "static/css/2.bfbf028b.chunk.css.map": "./static/css/2.bfbf028b.chunk.css.map",
    "static/js/2.270b84d8.chunk.js.LICENSE.txt": "./static/js/2.270b84d8.chunk.js.LICENSE.txt"
  },
  "entrypoints": [
    "static/js/runtime-main.11ec9aca.js",
    "static/css/2.bfbf028b.chunk.css",
    "static/js/2.270b84d8.chunk.js",
    "static/js/main.833ba252.chunk.js"
  ]
}

st_audiorec/frontend/build/bootstrap.min.css

ADDED

The diff for this file is too large to render.

st_audiorec/frontend/build/index.html

ADDED

@@ -0,0 +1 @@

<!doctype html><html lang="en"><head><title>Streamlit Audio Recorder Component</title><meta charset="UTF-8"/><meta name="viewport" content="width=device-width,initial-scale=1"/><meta name="theme-color" content="#000000"/><meta name="description" content="Streamlit Audio Recorder Component"/><link rel="stylesheet" href="bootstrap.min.css"/><link rel="stylesheet" href="./styles.css"/><link href="./static/css/2.bfbf028b.chunk.css" rel="stylesheet"></head><body><noscript>You need to enable JavaScript to run this app.</noscript><div id="root"></div><script>!function(e){function t(t){for(var n,l,a=t[0],p=t[1],i=t[2],c=0,s=[];c<a.length;c++)l=a[c],Object.prototype.hasOwnProperty.call(o,l)&&o[l]&&s.push(o[l][0]),o[l]=0;for(n in p)Object.prototype.hasOwnProperty.call(p,n)&&(e[n]=p[n]);for(f&&f(t);s.length;)s.shift()();return u.push.apply(u,i||[]),r()}function r(){for(var e,t=0;t<u.length;t++){for(var r=u[t],n=!0,a=1;a<r.length;a++){var p=r[a];0!==o[p]&&(n=!1)}n&&(u.splice(t--,1),e=l(l.s=r[0]))}return e}var n={},o={1:0},u=[];function l(t){if(n[t])return n[t].exports;var r=n[t]={i:t,l:!1,exports:{}};return e[t].call(r.exports,r,r.exports,l),r.l=!0,r.exports}l.m=e,l.c=n,l.d=function(e,t,r){l.o(e,t)||Object.defineProperty(e,t,{enumerable:!0,get:r})},l.r=function(e){"undefined"!=typeof Symbol&&Symbol.toStringTag&&Object.defineProperty(e,Symbol.toStringTag,{value:"Module"}),Object.defineProperty(e,"__esModule",{value:!0})},l.t=function(e,t){if(1&t&&(e=l(e)),8&t)return e;if(4&t&&"object"==typeof e&&e&&e.__esModule)return e;var r=Object.create(null);if(l.r(r),Object.defineProperty(r,"default",{enumerable:!0,value:e}),2&t&&"string"!=typeof e)for(var n in e)l.d(r,n,function(t){return e[t]}.bind(null,n));return r},l.n=function(e){var t=e&&e.__esModule?function(){return e.default}:function(){return e};return l.d(t,"a",t),t},l.o=function(e,t){return Object.prototype.hasOwnProperty.call(e,t)},l.p="./";var a=this.webpackJsonpstreamlit_component_template=this.webpackJsonpstreamlit_component_template||[],p=a.push.bind(a);a.push=t,a=a.slice();for(var i=0;i<a.length;i++)t(a[i]);var f=p;r()}([])</script><script src="./static/js/2.270b84d8.chunk.js"></script><script src="./static/js/main.833ba252.chunk.js"></script></body></html>

st_audiorec/frontend/build/precache-manifest.4829c060d313d0b0d13d9af3b0180289.js

ADDED

@@ -0,0 +1,26 @@

self.__precacheManifest = (self.__precacheManifest || []).concat([
  {
    "revision": "de27ef444ab2ed520b64cb0c988a478a",
    "url": "./index.html"
  },
  {
    "revision": "1a47c80c81698454dced",
    "url": "./static/css/2.bfbf028b.chunk.css"
  },
  {
    "revision": "1a47c80c81698454dced",
    "url": "./static/js/2.270b84d8.chunk.js"
  },
  {
    "revision": "3fc7fb5bfeeec1534560a2c962e360a7",
    "url": "./static/js/2.270b84d8.chunk.js.LICENSE.txt"
  },
  {
    "revision": "3478f4c246f37a2cbb97",
    "url": "./static/js/main.833ba252.chunk.js"
  },
  {
    "revision": "7c26bca7e16783d14d15",
    "url": "./static/js/runtime-main.11ec9aca.js"
  }
]);

st_audiorec/frontend/build/service-worker.js

ADDED

@@ -0,0 +1,39 @@

/**
 * Welcome to your Workbox-powered service worker!
 *
 * You'll need to register this file in your web app and you should
 * disable HTTP caching for this file too.
 * See https://goo.gl/nhQhGp
 *
 * The rest of the code is auto-generated. Please don't update this file
 * directly; instead, make changes to your Workbox build configuration
 * and re-run your build process.
 * See https://goo.gl/2aRDsh
 */

importScripts("https://storage.googleapis.com/workbox-cdn/releases/4.3.1/workbox-sw.js");

importScripts(
  "./precache-manifest.4829c060d313d0b0d13d9af3b0180289.js"
);

self.addEventListener('message', (event) => {
  if (event.data && event.data.type === 'SKIP_WAITING') {
    self.skipWaiting();
  }
});

workbox.core.clientsClaim();

/**
 * The workboxSW.precacheAndRoute() method efficiently caches and responds to
 * requests for URLs in the manifest.
 * See https://goo.gl/S9QRab
 */
self.__precacheManifest = [].concat(self.__precacheManifest || []);
workbox.precaching.precacheAndRoute(self.__precacheManifest, {});

workbox.routing.registerNavigationRoute(workbox.precaching.getCacheKeyForURL("./index.html"), {

  blacklist: [/^\/_/,/\/[^/?]+\.[^/]+$/],
});
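
The header comment says this worker has to be registered by the web app, and the generated `message` listener activates a waiting worker when it receives `{ type: 'SKIP_WAITING' }`. A minimal page-side sketch of sending that message follows. `activateWaitingWorker`, `WorkerLike` and `RegistrationLike` are hypothetical names, and the two structural types stand in for the browser's `ServiceWorkerRegistration` so the snippet compiles without the DOM library.

```typescript
// Minimal structural types (assumption): a browser ServiceWorkerRegistration
// exposes a compatible `waiting` property holding a ServiceWorker or null.
interface WorkerLike {
  postMessage(message: unknown): void;
}

interface RegistrationLike {
  waiting: WorkerLike | null;
}

// Hypothetical helper: if an updated service worker is installed but waiting,
// ask it to activate now. The message shape matches the SKIP_WAITING check
// in the generated service worker's 'message' listener.
function activateWaitingWorker(registration: RegistrationLike): boolean {
  const waiting = registration.waiting;
  if (waiting === null) {
    return false; // no worker is waiting, so no message is sent
  }
  waiting.postMessage({ type: "SKIP_WAITING" });
  return true;
}
```

In a browser, the argument would typically be the registration returned by `navigator.serviceWorker.register("./service-worker.js")`; its `waiting` property is only non-null once an updated worker has finished installing and is waiting to take over.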

st_audiorec/frontend/build/static/css/2.bfbf028b.chunk.css

ADDED

@@ -0,0 +1,2 @@

._3ybTi{margin:2em;padding:.5em;border:2px solid #000;font-size:2em;text-align:center}
/*# sourceMappingURL=2.bfbf028b.chunk.css.map */

st_audiorec/frontend/build/static/css/2.bfbf028b.chunk.css.map

ADDED

@@ -0,0 +1 @@

{"version":3,"sources":["index.css"],"names":[],"mappings":"AAEA,QACE,UAAW,CACX,YAAc,CACd,qBAAsB,CACtB,aAAc,CACd,iBACF","file":"2.bfbf028b.chunk.css","sourcesContent":["/* add css module styles here (optional) */\n\n._3ybTi {\n margin: 2em;\n padding: 0.5em;\n border: 2px solid #000;\n font-size: 2em;\n text-align: center;\n}\n"]}

st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js

ADDED

The diff for this file is too large to render.

st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js.LICENSE.txt

ADDED

@@ -0,0 +1,58 @@

/*
object-assign
(c) Sindre Sorhus
@license MIT
*/

/**
 * @license
 * Copyright 2018-2021 Streamlit Inc.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

/** @license React v0.19.1
 * scheduler.production.min.js
 *
 * Copyright (c) Facebook, Inc. and its affiliates.
 *
 * This source code is licensed under the MIT license found in the
 * LICENSE file in the root directory of this source tree.
 */

/** @license React v16.13.1
 * react-is.production.min.js
 *
 * Copyright (c) Facebook, Inc. and its affiliates.
 *
 * This source code is licensed under the MIT license found in the
 * LICENSE file in the root directory of this source tree.
 */

/** @license React v16.14.0
 * react-dom.production.min.js
 *
 * Copyright (c) Facebook, Inc. and its affiliates.
 *
 * This source code is licensed under the MIT license found in the
 * LICENSE file in the root directory of this source tree.
 */

/** @license React v16.14.0
 * react.production.min.js
 *
 * Copyright (c) Facebook, Inc. and its affiliates.
 *
 * This source code is licensed under the MIT license found in the
 * LICENSE file in the root directory of this source tree.
 */

st_audiorec/frontend/build/static/js/2.270b84d8.chunk.js.map

ADDED

The diff for this file is too large to render.

st_audiorec/frontend/build/static/js/main.833ba252.chunk.js

ADDED

@@ -0,0 +1,2 @@

(this.webpackJsonpstreamlit_component_template=this.webpackJsonpstreamlit_component_template||[]).push([[0],{17:function(t,e,a){t.exports=a(28)},28:function(t,e,a){"use strict";a.r(e);var n=a(6),o=a.n(n),r=a(15),c=a.n(r),i=a(0),l=a(1),s=a(2),u=a(3),d=a(8),p=a(11),m=(a(27),function(t){Object(s.a)(a,t);var e=Object(u.a)(a);function a(){var t;Object(l.a)(this,a);for(var n=arguments.length,r=new Array(n),c=0;c<n;c++)r[c]=arguments[c];return(t=e.call.apply(e,[this].concat(r))).state={isFocused:!1,recordState:null,audioDataURL:"",reset:!1},t.render=function(){var e=t.props.theme,a={},n=t.state.recordState;if(e){var r="1px solid ".concat(t.state.isFocused?e.primaryColor:"gray");a.border=r,a.outline=r}return o.a.createElement("span",null,o.a.createElement("div",null,o.a.createElement("button",{id:"record",onClick:t.onClick_start},"Start Recording"),o.a.createElement("button",{id:"stop",onClick:t.onClick_stop},"Stop"),o.a.createElement("button",{id:"reset",onClick:t.onClick_reset},"Reset"),o.a.createElement("button",{id:"continue",onClick:t.onClick_continue},"Download"),o.a.createElement(p.b,{state:n,onStop:t.onStop_audio,type:"audio/wav",backgroundColor:"rgb(255, 255, 255)",foregroundColor:"rgb(255,76,75)",canvasWidth:450,canvasHeight:100}),o.a.createElement("audio",{id:"audio",controls:!0,src:t.state.audioDataURL})))},t.onClick_start=function(){t.setState({reset:!1,audioDataURL:"",recordState:p.a.START}),d.a.setComponentValue("")},t.onClick_stop=function(){t.setState({reset:!1,recordState:p.a.STOP})},t.onClick_reset=function(){t.setState({reset:!0,audioDataURL:"",recordState:p.a.STOP}),d.a.setComponentValue("")},t.onClick_continue=function(){if(""!==t.state.audioDataURL){var e=(new Date).toLocaleString(),a="streamlit_audio_"+(e=(e=(e=e.replace(" 
","")).replace(/_/g,"")).replace(",",""))+".wav",n=document.createElement("a");n.style.display="none",n.href=t.state.audioDataURL,n.download=a,document.body.appendChild(n),n.click()}},t.onStop_audio=function(e){!0===t.state.reset?(t.setState({audioDataURL:""}),d.a.setComponentValue("")):(t.setState({audioDataURL:e.url}),fetch(e.url).then((function(t){return t.blob()})).then((function(t){return new Response(t).arrayBuffer()})).then((function(t){d.a.setComponentValue({arr:new Uint8Array(t)})})))},t}return Object(i.a)(a)}(d.b)),f=Object(d.c)(m);d.a.setComponentReady(),d.a.setFrameHeight(),c.a.render(o.a.createElement(o.a.StrictMode,null,o.a.createElement(f,null)),document.getElementById("root"))}},[[17,1,2]]]);
//# sourceMappingURL=main.833ba252.chunk.js.map
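
In the minified `onClick_continue` handler (its readable TypeScript is embedded in the source map below), the download name is built from `new Date().toLocaleString()` followed by `replace(' ', '')` and `replace(',', '')`. With a string pattern, `String.prototype.replace` only replaces the first match, so depending on the locale the name can still contain characters such as `/`, `:` or a second space. A locale-independent alternative is sketched below with a hypothetical `recordingFilename` helper; it is not part of the component.

```typescript
// Hypothetical helper (not part of the component): builds a timestamped
// .wav filename without locale-dependent characters such as '/' or ','.
function recordingFilename(date: Date = new Date()): string {
  // toISOString() is locale-independent and always in UTC,
  // e.g. "2024-01-02T10:30:00.000Z"; ':' and '.' are replaced with '-'.
  const stamp = date.toISOString().replace(/[:.]/g, "-");
  return `streamlit_audio_${stamp}.wav`;
}
```

The trade-off is that `toISOString()` reports UTC, so the timestamp in the filename will not necessarily match the user's local clock.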

st_audiorec/frontend/build/static/js/main.833ba252.chunk.js.map

ADDED

@@ -0,0 +1 @@

{"version":3,"sources":["StreamlitAudioRecorder.tsx","index.tsx"],"names":["StAudioRec","state","isFocused","recordState","audioDataURL","reset","render","theme","props","style","borderStyling","primaryColor","border","outline","id","onClick","onClick_start","onClick_stop","onClick_reset","onClick_continue","onStop","onStop_audio","type","backgroundColor","foregroundColor","canvasWidth","canvasHeight","controls","src","setState","RecordState","START","Streamlit","setComponentValue","STOP","datetime","Date","toLocaleString","filename","replace","a","document","createElement","display","href","download","body","appendChild","click","data","url","fetch","then","ctx","blob","Response","arrayBuffer","buffer","Uint8Array","StreamlitComponentBase","withStreamlitConnection","setComponentReady","setFrameHeight","ReactDOM","StrictMode","getElementById"],"mappings":"wQAiBMA,G,kNACGC,MAAQ,CAAEC,WAAW,EAAOC,YAAa,KAAMC,aAAc,GAAIC,OAAO,G,EAExEC,OAAS,WAMd,IAAQC,EAAU,EAAKC,MAAfD,MACFE,EAA6B,GAE3BN,EAAgB,EAAKF,MAArBE,YAGR,GAAII,EAAO,CAGT,IAAMG,EAAa,oBACjB,EAAKT,MAAMC,UAAYK,EAAMI,aAAe,QAC9CF,EAAMG,OAASF,EACfD,EAAMI,QAAUH,EAGlB,OACE,8BACE,6BACE,4BAAQI,GAAG,SAASC,QAAS,EAAKC,eAAlC,mBAGA,4BAAQF,GAAG,OAAOC,QAAS,EAAKE,cAAhC,QAGA,4BAAQH,GAAG,QAAQC,QAAS,EAAKG,eAAjC,SAIA,4BAAQJ,GAAG,WAAWC,QAAS,EAAKI,kBAApC,YAIA,kBAAC,IAAD,CACElB,MAAOE,EACPiB,OAAQ,EAAKC,aACbC,KAAK,YACLC,gBAAgB,qBAChBC,gBAAgB,iBAChBC,YAAa,IACbC,aAAc,MAGhB,2BACEZ,GAAG,QACHa,UAAQ,EACRC,IAAK,EAAK3B,MAAMG,kB,EASlBY,cAAgB,WACtB,EAAKa,SAAS,CACZxB,OAAO,EACPD,aAAc,GACdD,YAAa2B,IAAYC,QAE3BC,IAAUC,kBAAkB,K,EAGtBhB,aAAe,WACrB,EAAKY,SAAS,CACZxB,OAAO,EACPF,YAAa2B,IAAYI,Q,EAIrBhB,cAAgB,WACtB,EAAKW,SAAS,CACZxB,OAAO,EACPD,aAAc,GACdD,YAAa2B,IAAYI,OAE3BF,IAAUC,kBAAkB,K,EAGtBd,iBAAmB,WACzB,GAAgC,KAA5B,EAAKlB,MAAMG,aACf,CAEE,IAAI+B,GAAW,IAAIC,MAAOC,iBAItBC,EAAW,oBADfH,GADAA,GADAA,EAAWA,EAASI,QAAQ,IAAK,KACbA,QAAQ,KAAM,KACdA,QAAQ,IAAK,KACc,OAGzCC,EAAIC,SAASC,cAAc,KACjCF,EAAE/B,MAAMkC,QAAU,OAClBH,EAAEI,KAAO,EAAK3C,MAAMG,aACpBoC,EAAEK,SAAWP,EACbG,SAASK,
KAAKC,YAAYP,GAC1BA,EAAEQ,U,EAIE3B,aAAe,SAAC4B,IACG,IAArB,EAAKhD,MAAMI,OAEb,EAAKwB,SAAS,CACZzB,aAAc,KAEhB4B,IAAUC,kBAAkB,MAE5B,EAAKJ,SAAS,CACZzB,aAAc6C,EAAKC,MAGrBC,MAAMF,EAAKC,KAAKE,MAAK,SAASC,GAC5B,OAAOA,EAAIC,UACVF,MAAK,SAASE,GAGf,OAAQ,IAAIC,SAASD,GAAOE,iBAC3BJ,MAAK,SAASK,GACfzB,IAAUC,kBAAkB,CAC1B,IAAO,IAAIyB,WAAWD,U,yBAhIPE,MA8IVC,cAAwB5D,GAIvCgC,IAAU6B,oBAIV7B,IAAU8B,iBCnKVC,IAASzD,OACP,kBAAC,IAAM0D,WAAP,KACE,kBAAC,EAAD,OAEFvB,SAASwB,eAAe,W","file":"static/js/main.833ba252.chunk.js","sourcesContent":["import {\n Streamlit,\n StreamlitComponentBase,\n withStreamlitConnection,\n} from \"streamlit-component-lib\"\nimport React, { ReactNode } from \"react\"\n\nimport AudioReactRecorder, { RecordState } from 'audio-react-recorder'\nimport 'audio-react-recorder/dist/index.css'\n\ninterface State {\n isFocused: boolean\n recordState: null\n audioDataURL: string\n reset: boolean\n}\n\nclass StAudioRec extends StreamlitComponentBase<State> {\n public state = { isFocused: false, recordState: null, audioDataURL: '', reset: false}\n\n public render = (): ReactNode => {\n // Arguments that are passed to the plugin in Python are accessible\n\n // Streamlit sends us a theme object via props that we can use to ensure\n // that our component has visuals that match the active theme in a\n // streamlit app.\n const { theme } = this.props\n const style: React.CSSProperties = {}\n\n const { recordState } = this.state\n\n // compatibility with older vers of Streamlit that don't send theme object.\n if (theme) {\n // Use the theme object to style our button border. Alternatively, the\n // theme style is defined in CSS vars.\n const borderStyling = `1px solid ${\n this.state.isFocused ? 
theme.primaryColor : \"gray\"}`\n style.border = borderStyling\n style.outline = borderStyling\n }\n\n return (\n <span>\n <div>\n <button id='record' onClick={this.onClick_start}>\n Start Recording\n </button>\n <button id='stop' onClick={this.onClick_stop}>\n Stop\n </button>\n <button id='reset' onClick={this.onClick_reset}>\n Reset\n </button>\n\n <button id='continue' onClick={this.onClick_continue}>\n Download\n </button>\n\n <AudioReactRecorder\n state={recordState}\n onStop={this.onStop_audio}\n type='audio/wav'\n backgroundColor='rgb(255, 255, 255)'\n foregroundColor='rgb(255,76,75)'\n canvasWidth={450}\n canvasHeight={100}\n />\n\n <audio\n id='audio'\n controls\n src={this.state.audioDataURL}\n />\n\n </div>\n </span>\n )\n }\n\n\n private onClick_start = () => {\n this.setState({\n reset: false,\n audioDataURL: '',\n recordState: RecordState.START\n })\n Streamlit.setComponentValue('')\n }\n\n private onClick_stop = () => {\n this.setState({\n reset: false,\n recordState: RecordState.STOP\n })\n }\n\n private onClick_reset = () => {\n this.setState({\n reset: true,\n audioDataURL: '',\n recordState: RecordState.STOP\n })\n Streamlit.setComponentValue('')\n }\n\n private onClick_continue = () => {\n if (this.state.audioDataURL !== '')\n {\n // get datetime string for filename\n let datetime = new Date().toLocaleString();\n datetime = datetime.replace(' ', '');\n datetime = datetime.replace(/_/g, '');\n datetime = datetime.replace(',', '');\n var filename = 'streamlit_audio_' + datetime + '.wav';\n\n // auromatically trigger download\n const a = document.createElement('a');\n a.style.display = 'none';\n a.href = this.state.audioDataURL;\n a.download = filename;\n document.body.appendChild(a);\n a.click();\n }\n }\n\n private onStop_audio = (data) => {\n if (this.state.reset === true)\n {\n this.setState({\n audioDataURL: ''\n })\n Streamlit.setComponentValue('')\n }else{\n this.setState({\n audioDataURL: data.url\n })\n\n 
fetch(data.url).then(function(ctx){\n return ctx.blob()\n }).then(function(blob){\n // converting blob to arrayBuffer, this process step needs to be be improved\n // this operation's time complexity scales exponentially with audio length\n return (new Response(blob)).arrayBuffer()\n }).then(function(buffer){\n Streamlit.setComponentValue({\n \"arr\": new Uint8Array(buffer)\n })\n })\n\n }\n\n\n }\n}\n\n// \"withStreamlitConnection\" is a wrapper function. It bootstraps the\n// connection between your component and the Streamlit app, and handles\n// passing arguments from Python -> Component.\n// You don't need to edit withStreamlitConnection (but you're welcome to!).\nexport default withStreamlitConnection(StAudioRec)\n\n// Tell Streamlit we're ready to start receiving data. We won't get our\n// first RENDER_EVENT until we call this function.\nStreamlit.setComponentReady()\n\n// Finally, tell Streamlit to update our initial height. We omit the\n// `height` parameter here to have it default to our scrollHeight.\nStreamlit.setFrameHeight()\n","import React from \"react\"\nimport ReactDOM from \"react-dom\"\nimport StAudioRec from \"./StreamlitAudioRecorder\"\n\nReactDOM.render(\n <React.StrictMode>\n <StAudioRec />\n </React.StrictMode>,\n document.getElementById(\"root\")\n)\n"],"sourceRoot":""}
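
`onStop_audio` fetches the recording's object URL, converts the `Blob` to an `ArrayBuffer` with `new Response(blob).arrayBuffer()`, and passes `{ arr: new Uint8Array(buffer) }` to `Streamlit.setComponentValue`. The inline comment says this step "scales exponentially with audio length"; reading a `Blob` into an `ArrayBuffer` is a single copy of its bytes, so its cost should grow roughly linearly with the recording size. The sketch below assumes a runtime where `Blob.prototype.arrayBuffer()` exists (current browsers, Node 18+), which makes the `Response` wrapper unnecessary; `blobToPayload` and `AudioPayload` are illustrative names.

```typescript
// Payload shape the component sends to Python via Streamlit.setComponentValue.
interface AudioPayload {
  arr: Uint8Array;
}

// Sketch: read the recorded Blob's bytes directly with Blob.prototype.arrayBuffer()
// instead of wrapping it in a Response. This is one pass over the data.
async function blobToPayload(blob: Blob): Promise<AudioPayload> {
  const buffer = await blob.arrayBuffer();
  return { arr: new Uint8Array(buffer) };
}
```

Inside the component, assuming the same `Streamlit` import, the promise chain could become `fetch(data.url).then((r) => r.blob()).then(blobToPayload).then((p) => Streamlit.setComponentValue(p))`.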

st_audiorec/frontend/build/static/js/runtime-main.11ec9aca.js

ADDED

@@ -0,0 +1,2 @@

!function(e){function t(t){for(var n,l,a=t[0],p=t[1],i=t[2],c=0,s=[];c<a.length;c++)l=a[c],Object.prototype.hasOwnProperty.call(o,l)&&o[l]&&s.push(o[l][0]),o[l]=0;for(n in p)Object.prototype.hasOwnProperty.call(p,n)&&(e[n]=p[n]);for(f&&f(t);s.length;)s.shift()();return u.push.apply(u,i||[]),r()}function r(){for(var e,t=0;t<u.length;t++){for(var r=u[t],n=!0,a=1;a<r.length;a++){var p=r[a];0!==o[p]&&(n=!1)}n&&(u.splice(t--,1),e=l(l.s=r[0]))}return e}var n={},o={1:0},u=[];function l(t){if(n[t])return n[t].exports;var r=n[t]={i:t,l:!1,exports:{}};return e[t].call(r.exports,r,r.exports,l),r.l=!0,r.exports}l.m=e,l.c=n,l.d=function(e,t,r){l.o(e,t)||Object.defineProperty(e,t,{enumerable:!0,get:r})},l.r=function(e){"undefined"!==typeof Symbol&&Symbol.toStringTag&&Object.defineProperty(e,Symbol.toStringTag,{value:"Module"}),Object.defineProperty(e,"__esModule",{value:!0})},l.t=function(e,t){if(1&t&&(e=l(e)),8&t)return e;if(4&t&&"object"===typeof e&&e&&e.__esModule)return e;var r=Object.create(null);if(l.r(r),Object.defineProperty(r,"default",{enumerable:!0,value:e}),2&t&&"string"!=typeof e)for(var n in e)l.d(r,n,function(t){return e[t]}.bind(null,n));return r},l.n=function(e){var t=e&&e.__esModule?function(){return e.default}:function(){return e};return l.d(t,"a",t),t},l.o=function(e,t){return Object.prototype.hasOwnProperty.call(e,t)},l.p="./";var a=this.webpackJsonpstreamlit_component_template=this.webpackJsonpstreamlit_component_template||[],p=a.push.bind(a);a.push=t,a=a.slice();for(var i=0;i<a.length;i++)t(a[i]);var f=p;r()}([]);
//# sourceMappingURL=runtime-main.11ec9aca.js.map

st_audiorec/frontend/build/static/js/runtime-main.11ec9aca.js.map

ADDED

@@ -0,0 +1 @@
