Spaces:

Running

Running

Vincentqyw

commited on

Commit

•

9223079

1

Parent(s):

71bbcb3

add: files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- .gitignore +19 -0

- .gitmodules +45 -0

- README.md +107 -12

- app.py +291 -0

- assets/demo.gif +3 -0

- assets/gui.jpg +0 -0

- datasets/.gitignore +0 -0

- datasets/lines/terrace0.JPG +0 -0

- datasets/lines/terrace1.JPG +0 -0

- datasets/sacre_coeur/README.md +3 -0

- datasets/sacre_coeur/mapping/02928139_3448003521.jpg +0 -0

- datasets/sacre_coeur/mapping/03903474_1471484089.jpg +0 -0

- datasets/sacre_coeur/mapping/10265353_3838484249.jpg +0 -0

- datasets/sacre_coeur/mapping/17295357_9106075285.jpg +0 -0

- datasets/sacre_coeur/mapping/32809961_8274055477.jpg +0 -0

- datasets/sacre_coeur/mapping/44120379_8371960244.jpg +0 -0

- datasets/sacre_coeur/mapping/51091044_3486849416.jpg +0 -0

- datasets/sacre_coeur/mapping/60584745_2207571072.jpg +0 -0

- datasets/sacre_coeur/mapping/71295362_4051449754.jpg +0 -0

- datasets/sacre_coeur/mapping/93341989_396310999.jpg +0 -0

- extra_utils/__init__.py +0 -0

- extra_utils/plotting.py +504 -0

- extra_utils/utils.py +182 -0

- extra_utils/visualize_util.py +642 -0

- hloc/__init__.py +31 -0

- hloc/extract_features.py +516 -0

- hloc/extractors/__init__.py +0 -0

- hloc/extractors/alike.py +52 -0

- hloc/extractors/cosplace.py +44 -0

- hloc/extractors/d2net.py +57 -0

- hloc/extractors/darkfeat.py +57 -0

- hloc/extractors/dedode.py +102 -0

- hloc/extractors/dir.py +76 -0

- hloc/extractors/disk.py +32 -0

- hloc/extractors/dog.py +131 -0

- hloc/extractors/example.py +58 -0

- hloc/extractors/fire.py +73 -0

- hloc/extractors/fire_local.py +90 -0

- hloc/extractors/lanet.py +53 -0

- hloc/extractors/netvlad.py +147 -0

- hloc/extractors/openibl.py +26 -0

- hloc/extractors/r2d2.py +61 -0

- hloc/extractors/rekd.py +53 -0

- hloc/extractors/superpoint.py +44 -0

- hloc/match_dense.py +384 -0

- hloc/match_features.py +389 -0

- hloc/matchers/__init__.py +3 -0

- hloc/matchers/adalam.py +69 -0

- hloc/matchers/aspanformer.py +76 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

build/

|

| 2 |

+

|

| 3 |

+

lib/

|

| 4 |

+

bin/

|

| 5 |

+

|

| 6 |

+

cmake_modules/

|

| 7 |

+

cmake-build-debug/

|

| 8 |

+

.idea/

|

| 9 |

+

.vscode/

|

| 10 |

+

*.pyc

|

| 11 |

+

flagged

|

| 12 |

+

.ipynb_checkpoints

|

| 13 |

+

__pycache__

|

| 14 |

+

Untitled*

|

| 15 |

+

experiments

|

| 16 |

+

third_party/REKD

|

| 17 |

+

Dockerfile

|

| 18 |

+

hloc/matchers/dedode.py

|

| 19 |

+

gradio_cached_examples

|

.gitmodules

ADDED

|

@@ -0,0 +1,45 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[submodule "third_party/Roma"]

|

| 2 |

+

path = third_party/Roma

|

| 3 |

+

url = https://github.com/Vincentqyw/RoMa.git

|

| 4 |

+

[submodule "third_party/SuperGluePretrainedNetwork"]

|

| 5 |

+

path = third_party/SuperGluePretrainedNetwork

|

| 6 |

+

url = https://github.com/magicleap/SuperGluePretrainedNetwork.git

|

| 7 |

+

[submodule "third_party/SOLD2"]

|

| 8 |

+

path = third_party/SOLD2

|

| 9 |

+

url = https://github.com/cvg/SOLD2.git

|

| 10 |

+

[submodule "third_party/GlueStick"]

|

| 11 |

+

path = third_party/GlueStick

|

| 12 |

+

url = https://github.com/cvg/GlueStick.git

|

| 13 |

+

[submodule "third_party/ASpanFormer"]

|

| 14 |

+

path = third_party/ASpanFormer

|

| 15 |

+

url = https://github.com/Vincentqyw/ml-aspanformer.git

|

| 16 |

+

[submodule "third_party/TopicFM"]

|

| 17 |

+

path = third_party/TopicFM

|

| 18 |

+

url = https://github.com/Vincentqyw/TopicFM.git

|

| 19 |

+

[submodule "third_party/d2net"]

|

| 20 |

+

path = third_party/d2net

|

| 21 |

+

url = https://github.com/Vincentqyw/d2-net.git

|

| 22 |

+

[submodule "third_party/r2d2"]

|

| 23 |

+

path = third_party/r2d2

|

| 24 |

+

url = https://github.com/naver/r2d2.git

|

| 25 |

+

[submodule "third_party/DKM"]

|

| 26 |

+

path = third_party/DKM

|

| 27 |

+

url = https://github.com/Vincentqyw/DKM.git

|

| 28 |

+

[submodule "third_party/ALIKE"]

|

| 29 |

+

path = third_party/ALIKE

|

| 30 |

+

url = https://github.com/Shiaoming/ALIKE.git

|

| 31 |

+

[submodule "third_party/lanet"]

|

| 32 |

+

path = third_party/lanet

|

| 33 |

+

url = https://github.com/wangch-g/lanet.git

|

| 34 |

+

[submodule "third_party/LightGlue"]

|

| 35 |

+

path = third_party/LightGlue

|

| 36 |

+

url = https://github.com/cvg/LightGlue.git

|

| 37 |

+

[submodule "third_party/SGMNet"]

|

| 38 |

+

path = third_party/SGMNet

|

| 39 |

+

url = https://github.com/vdvchen/SGMNet.git

|

| 40 |

+

[submodule "third_party/DarkFeat"]

|

| 41 |

+

path = third_party/DarkFeat

|

| 42 |

+

url = https://github.com/THU-LYJ-Lab/DarkFeat.git

|

| 43 |

+

[submodule "third_party/DeDoDe"]

|

| 44 |

+

path = third_party/DeDoDe

|

| 45 |

+

url = https://github.com/Parskatt/DeDoDe.git

|

README.md

CHANGED

|

@@ -1,12 +1,107 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[![Contributors][contributors-shield]][contributors-url]

|

| 2 |

+

[![Forks][forks-shield]][forks-url]

|

| 3 |

+

[![Stargazers][stars-shield]][stars-url]

|

| 4 |

+

[![Issues][issues-shield]][issues-url]

|

| 5 |

+

|

| 6 |

+

<p align="center">

|

| 7 |

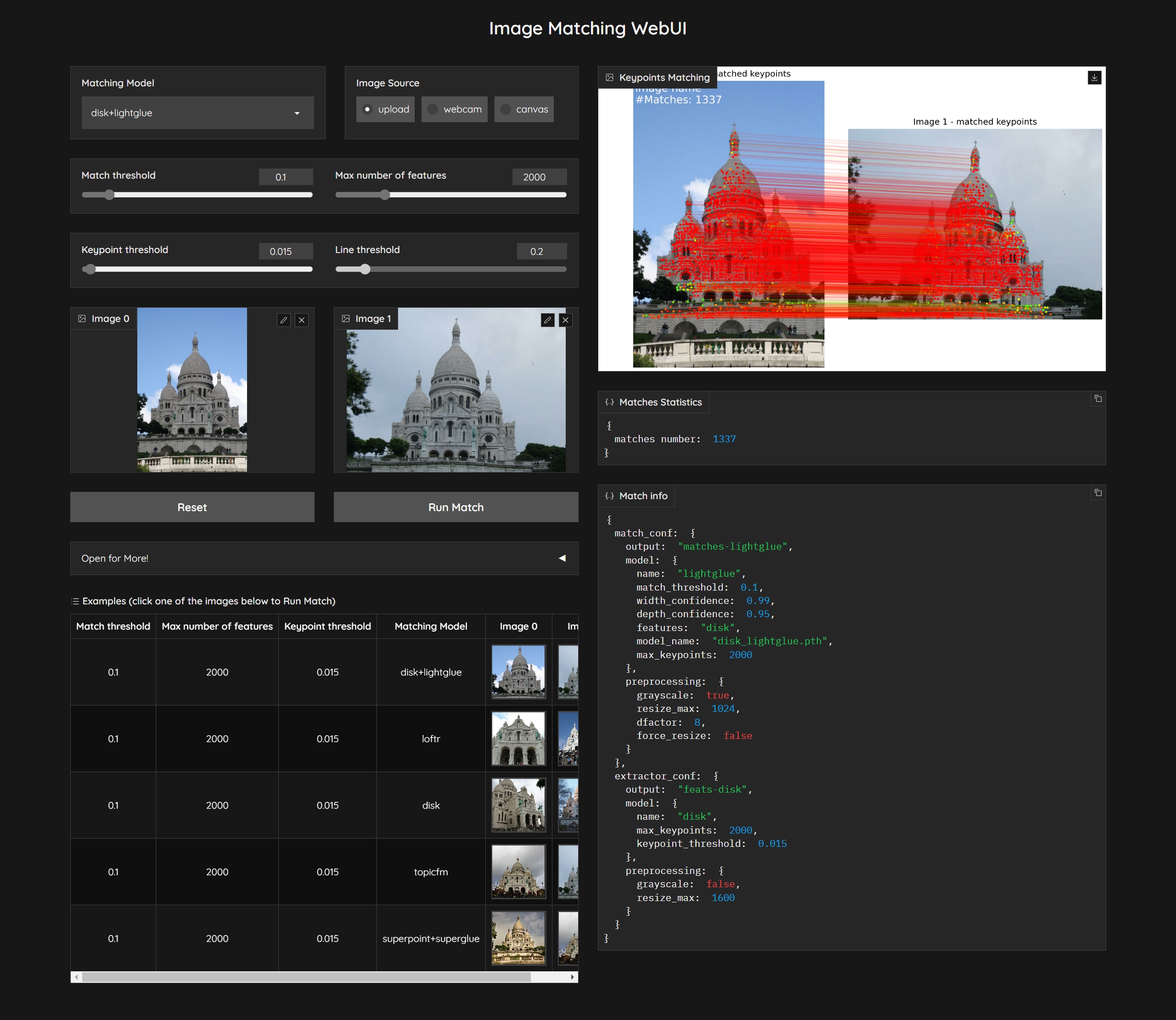

+

<h1 align="center"><br><ins>Image Matching WebUI</ins><br>find matches between 2 images</h1>

|

| 8 |

+

</p>

|

| 9 |

+

|

| 10 |

+

## Description

|

| 11 |

+

|

| 12 |

+

This simple tool efficiently matches image pairs using multiple famous image matching algorithms. The tool features a Graphical User Interface (GUI) designed using [gradio](https://gradio.app/). You can effortlessly select two images and a matching algorithm and obtain a precise matching result.

|

| 13 |

+

**Note**: the images source can be either local images or webcam images.

|

| 14 |

+

|

| 15 |

+

Here is a demo of the tool:

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

The tool currently supports various popular image matching algorithms, namely:

|

| 20 |

+

- [x] [LightGlue](https://github.com/cvg/LightGlue), ICCV 2023

|

| 21 |

+

- [x] [DeDoDe](https://github.com/Parskatt/DeDoDe), TBD

|

| 22 |

+

- [x] [DarkFeat](https://github.com/THU-LYJ-Lab/DarkFeat), AAAI 2023

|

| 23 |

+

- [ ] [ASTR](https://github.com/ASTR2023/ASTR), CVPR 2023

|

| 24 |

+

- [ ] [SEM](https://github.com/SEM2023/SEM), CVPR 2023

|

| 25 |

+

- [ ] [DeepLSD](https://github.com/cvg/DeepLSD), CVPR 2023

|

| 26 |

+

- [x] [GlueStick](https://github.com/cvg/GlueStick), ArXiv 2023

|

| 27 |

+

- [ ] [ConvMatch](https://github.com/SuhZhang/ConvMatch), AAAI 2023

|

| 28 |

+

- [x] [SOLD2](https://github.com/cvg/SOLD2), CVPR 2021

|

| 29 |

+

- [ ] [LineTR](https://github.com/yosungho/LineTR), RA-L 2021

|

| 30 |

+

- [x] [DKM](https://github.com/Parskatt/DKM), CVPR 2023

|

| 31 |

+

- [x] [RoMa](https://github.com/Vincentqyw/RoMa), Arxiv 2023

|

| 32 |

+

- [ ] [NCMNet](https://github.com/xinliu29/NCMNet), CVPR 2023

|

| 33 |

+

- [x] [TopicFM](https://github.com/Vincentqyw/TopicFM), AAAI 2023

|

| 34 |

+

- [x] [AspanFormer](https://github.com/Vincentqyw/ml-aspanformer), ECCV 2022

|

| 35 |

+

- [x] [LANet](https://github.com/wangch-g/lanet), ACCV 2022

|

| 36 |

+

- [ ] [LISRD](https://github.com/rpautrat/LISRD), ECCV 2022

|

| 37 |

+

- [ ] [REKD](https://github.com/bluedream1121/REKD), CVPR 2022

|

| 38 |

+

- [x] [ALIKE](https://github.com/Shiaoming/ALIKE), ArXiv 2022

|

| 39 |

+

- [x] [SGMNet](https://github.com/vdvchen/SGMNet), ICCV 2021

|

| 40 |

+

- [x] [SuperPoint](https://github.com/magicleap/SuperPointPretrainedNetwork), CVPRW 2018

|

| 41 |

+

- [x] [SuperGlue](https://github.com/magicleap/SuperGluePretrainedNetwork), CVPR 2020

|

| 42 |

+

- [x] [D2Net](https://github.com/Vincentqyw/d2-net), CVPR 2019

|

| 43 |

+

- [x] [R2D2](https://github.com/naver/r2d2), NeurIPS 2019

|

| 44 |

+

- [x] [DISK](https://github.com/cvlab-epfl/disk), NeurIPS 2020

|

| 45 |

+

- [ ] [Key.Net](https://github.com/axelBarroso/Key.Net), ICCV 2019

|

| 46 |

+

- [ ] [OANet](https://github.com/zjhthu/OANet), ICCV 2019

|

| 47 |

+

- [ ] [SOSNet](https://github.com/scape-research/SOSNet), CVPR 2019

|

| 48 |

+

- [x] [SIFT](https://docs.opencv.org/4.x/da/df5/tutorial_py_sift_intro.html), IJCV 2004

|

| 49 |

+

|

| 50 |

+

## How to use

|

| 51 |

+

|

| 52 |

+

### requirements

|

| 53 |

+

``` bash

|

| 54 |

+

git clone --recursive https://github.com/Vincentqyw/image-matching-webui.git

|

| 55 |

+

cd image-matching-webui

|

| 56 |

+

conda env create -f environment.yaml

|

| 57 |

+

conda activate imw

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

### run demo

|

| 61 |

+

``` bash

|

| 62 |

+

python3 ./app.py

|

| 63 |

+

```

|

| 64 |

+

then open http://localhost:7860 in your browser.

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

### Add your own feature / matcher

|

| 69 |

+

|

| 70 |

+

I provide an example to add local feature in [hloc/extractors/example.py](hloc/extractors/example.py). Then add feature settings in `confs` in file [hloc/extract_features.py](hloc/extract_features.py). Last step is adding some settings to `model_zoo` in file [extra_utils/utils.py](extra_utils/utils.py).

|

| 71 |

+

|

| 72 |

+

## Contributions welcome!

|

| 73 |

+

|

| 74 |

+

External contributions are very much welcome. Please follow the [PEP8 style guidelines](https://www.python.org/dev/peps/pep-0008/) using a linter like flake8 (reformat using command `python -m black .`). This is a non-exhaustive list of features that might be valuable additions:

|

| 75 |

+

|

| 76 |

+

- [x] add webcam support

|

| 77 |

+

- [x] add [line feature matching](https://github.com/Vincentqyw/LineSegmentsDetection) algorithms

|

| 78 |

+

- [x] example to add a new feature extractor / matcher

|

| 79 |

+

- [ ] ransac to filter outliers

|

| 80 |

+

- [ ] support export matches to colmap ([#issue 6](https://github.com/Vincentqyw/image-matching-webui/issues/6))

|

| 81 |

+

- [ ] add config file to set default parameters

|

| 82 |

+

- [ ] dynamically load models and reduce GPU overload

|

| 83 |

+

|

| 84 |

+

Adding local features / matchers as submodules is very easy. For example, to add the [GlueStick](https://github.com/cvg/GlueStick):

|

| 85 |

+

|

| 86 |

+

``` bash

|

| 87 |

+

git submodule add https://github.com/cvg/GlueStick.git third_party/GlueStick

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

If remote submodule repositories are updated, don't forget to pull submodules with `git submodule update --remote`, if you only want to update one submodule, use `git submodule update --remote third_party/GlueStick`.

|

| 91 |

+

|

| 92 |

+

## Resources

|

| 93 |

+

- [Image Matching: Local Features & Beyond](https://image-matching-workshop.github.io)

|

| 94 |

+

- [Long-term Visual Localization](https://www.visuallocalization.net)

|

| 95 |

+

|

| 96 |

+

## Acknowledgement

|

| 97 |

+

|

| 98 |

+

This code is built based on [Hierarchical-Localization](https://github.com/cvg/Hierarchical-Localization). We express our gratitude to the authors for their valuable source code.

|

| 99 |

+

|

| 100 |

+

[contributors-shield]: https://img.shields.io/github/contributors/Vincentqyw/image-matching-webui.svg?style=for-the-badge

|

| 101 |

+

[contributors-url]: https://github.com/Vincentqyw/image-matching-webui/graphs/contributors

|

| 102 |

+

[forks-shield]: https://img.shields.io/github/forks/Vincentqyw/image-matching-webui.svg?style=for-the-badge

|

| 103 |

+

[forks-url]: https://github.com/Vincentqyw/image-matching-webui/network/members

|

| 104 |

+

[stars-shield]: https://img.shields.io/github/stars/Vincentqyw/image-matching-webui.svg?style=for-the-badge

|

| 105 |

+

[stars-url]: https://github.com/Vincentqyw/image-matching-webui/stargazers

|

| 106 |

+

[issues-shield]: https://img.shields.io/github/issues/Vincentqyw/image-matching-webui.svg?style=for-the-badge

|

| 107 |

+

[issues-url]: https://github.com/Vincentqyw/image-matching-webui/issues

|

app.py

ADDED

|

@@ -0,0 +1,291 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import gradio as gr

|

| 3 |

+

|

| 4 |

+

from hloc import extract_features

|

| 5 |

+

from extra_utils.utils import (

|

| 6 |

+

matcher_zoo,

|

| 7 |

+

device,

|

| 8 |

+

match_dense,

|

| 9 |

+

match_features,

|

| 10 |

+

get_model,

|

| 11 |

+

get_feature_model,

|

| 12 |

+

display_matches

|

| 13 |

+

)

|

| 14 |

+

|

| 15 |

+

def run_matching(

|

| 16 |

+

match_threshold, extract_max_keypoints, keypoint_threshold, key, image0, image1

|

| 17 |

+

):

|

| 18 |

+

# image0 and image1 is RGB mode

|

| 19 |

+

if image0 is None or image1 is None:

|

| 20 |

+

raise gr.Error("Error: No images found! Please upload two images.")

|

| 21 |

+

|

| 22 |

+

model = matcher_zoo[key]

|

| 23 |

+

match_conf = model["config"]

|

| 24 |

+

# update match config

|

| 25 |

+

match_conf["model"]["match_threshold"] = match_threshold

|

| 26 |

+

match_conf["model"]["max_keypoints"] = extract_max_keypoints

|

| 27 |

+

|

| 28 |

+

matcher = get_model(match_conf)

|

| 29 |

+

if model["dense"]:

|

| 30 |

+

pred = match_dense.match_images(

|

| 31 |

+

matcher, image0, image1, match_conf["preprocessing"], device=device

|

| 32 |

+

)

|

| 33 |

+

del matcher

|

| 34 |

+

extract_conf = None

|

| 35 |

+

else:

|

| 36 |

+

extract_conf = model["config_feature"]

|

| 37 |

+

# update extract config

|

| 38 |

+

extract_conf["model"]["max_keypoints"] = extract_max_keypoints

|

| 39 |

+

extract_conf["model"]["keypoint_threshold"] = keypoint_threshold

|

| 40 |

+

extractor = get_feature_model(extract_conf)

|

| 41 |

+

pred0 = extract_features.extract(

|

| 42 |

+

extractor, image0, extract_conf["preprocessing"]

|

| 43 |

+

)

|

| 44 |

+

pred1 = extract_features.extract(

|

| 45 |

+

extractor, image1, extract_conf["preprocessing"]

|

| 46 |

+

)

|

| 47 |

+

pred = match_features.match_images(matcher, pred0, pred1)

|

| 48 |

+

del extractor

|

| 49 |

+

fig, num_inliers = display_matches(pred)

|

| 50 |

+

del pred

|

| 51 |

+

return (

|

| 52 |

+

fig,

|

| 53 |

+

{"matches number": num_inliers},

|

| 54 |

+

{"match_conf": match_conf, "extractor_conf": extract_conf},

|

| 55 |

+

)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def ui_change_imagebox(choice):

|

| 59 |

+

return {"value": None, "source": choice, "__type__": "update"}

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def ui_reset_state(

|

| 63 |

+

match_threshold, extract_max_keypoints, keypoint_threshold, key, image0, image1

|

| 64 |

+

):

|

| 65 |

+

match_threshold = 0.2

|

| 66 |

+

extract_max_keypoints = 1000

|

| 67 |

+

keypoint_threshold = 0.015

|

| 68 |

+

key = list(matcher_zoo.keys())[0]

|

| 69 |

+

image0 = None

|

| 70 |

+

image1 = None

|

| 71 |

+

return (

|

| 72 |

+

match_threshold,

|

| 73 |

+

extract_max_keypoints,

|

| 74 |

+

keypoint_threshold,

|

| 75 |

+

key,

|

| 76 |

+

image0,

|

| 77 |

+

image1,

|

| 78 |

+

{"value": None, "source": "upload", "__type__": "update"},

|

| 79 |

+

{"value": None, "source": "upload", "__type__": "update"},

|

| 80 |

+

"upload",

|

| 81 |

+

None,

|

| 82 |

+

{},

|

| 83 |

+

{},

|

| 84 |

+

)

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

def run(config):

|

| 88 |

+

with gr.Blocks(

|

| 89 |

+

theme=gr.themes.Monochrome(), css="footer {visibility: hidden}"

|

| 90 |

+

) as app:

|

| 91 |

+

gr.Markdown(

|

| 92 |

+

"""

|

| 93 |

+

<p align="center">

|

| 94 |

+

<h1 align="center">Image Matching WebUI</h1>

|

| 95 |

+

</p>

|

| 96 |

+

"""

|

| 97 |

+

)

|

| 98 |

+

|

| 99 |

+

with gr.Row(equal_height=False):

|

| 100 |

+

with gr.Column():

|

| 101 |

+

with gr.Row():

|

| 102 |

+

matcher_list = gr.Dropdown(

|

| 103 |

+

choices=list(matcher_zoo.keys()),

|

| 104 |

+

value="disk+lightglue",

|

| 105 |

+

label="Matching Model",

|

| 106 |

+

interactive=True,

|

| 107 |

+

)

|

| 108 |

+

match_image_src = gr.Radio(

|

| 109 |

+

["upload", "webcam", "canvas"],

|

| 110 |

+

label="Image Source",

|

| 111 |

+

value="upload",

|

| 112 |

+

)

|

| 113 |

+

|

| 114 |

+

with gr.Row():

|

| 115 |

+

match_setting_threshold = gr.Slider(

|

| 116 |

+

minimum=0.0,

|

| 117 |

+

maximum=1,

|

| 118 |

+

step=0.001,

|

| 119 |

+

label="Match threshold",

|

| 120 |

+

value=0.1,

|

| 121 |

+

)

|

| 122 |

+

match_setting_max_features = gr.Slider(

|

| 123 |

+

minimum=10,

|

| 124 |

+

maximum=10000,

|

| 125 |

+

step=10,

|

| 126 |

+

label="Max number of features",

|

| 127 |

+

value=1000,

|

| 128 |

+

)

|

| 129 |

+

# TODO: add line settings

|

| 130 |

+

with gr.Row():

|

| 131 |

+

detect_keypoints_threshold = gr.Slider(

|

| 132 |

+

minimum=0,

|

| 133 |

+

maximum=1,

|

| 134 |

+

step=0.001,

|

| 135 |

+

label="Keypoint threshold",

|

| 136 |

+

value=0.015,

|

| 137 |

+

)

|

| 138 |

+

detect_line_threshold = gr.Slider(

|

| 139 |

+

minimum=0.1,

|

| 140 |

+

maximum=1,

|

| 141 |

+

step=0.01,

|

| 142 |

+

label="Line threshold",

|

| 143 |

+

value=0.2,

|

| 144 |

+

)

|

| 145 |

+

# matcher_lists = gr.Radio(

|

| 146 |

+

# ["NN-mutual", "Dual-Softmax"],

|

| 147 |

+

# label="Matcher mode",

|

| 148 |

+

# value="NN-mutual",

|

| 149 |

+

# )

|

| 150 |

+

with gr.Row():

|

| 151 |

+

input_image0 = gr.Image(

|

| 152 |

+

label="Image 0",

|

| 153 |

+

type="numpy",

|

| 154 |

+

interactive=True,

|

| 155 |

+

image_mode="RGB",

|

| 156 |

+

)

|

| 157 |

+

input_image1 = gr.Image(

|

| 158 |

+

label="Image 1",

|

| 159 |

+

type="numpy",

|

| 160 |

+

interactive=True,

|

| 161 |

+

image_mode="RGB",

|

| 162 |

+

)

|

| 163 |

+

|

| 164 |

+

with gr.Row():

|

| 165 |

+

button_reset = gr.Button(label="Reset", value="Reset")

|

| 166 |

+

button_run = gr.Button(

|

| 167 |

+

label="Run Match", value="Run Match", variant="primary"

|

| 168 |

+

)

|

| 169 |

+

|

| 170 |

+

with gr.Accordion("Open for More!", open=False):

|

| 171 |

+

gr.Markdown(

|

| 172 |

+

f"""

|

| 173 |

+

<h3>Supported Algorithms</h3>

|

| 174 |

+

{", ".join(matcher_zoo.keys())}

|

| 175 |

+

"""

|

| 176 |

+

)

|

| 177 |

+

|

| 178 |

+

# collect inputs

|

| 179 |

+

inputs = [

|

| 180 |

+

match_setting_threshold,

|

| 181 |

+

match_setting_max_features,

|

| 182 |

+

detect_keypoints_threshold,

|

| 183 |

+

matcher_list,

|

| 184 |

+

input_image0,

|

| 185 |

+

input_image1,

|

| 186 |

+

]

|

| 187 |

+

|

| 188 |

+

# Add some examples

|

| 189 |

+

with gr.Row():

|

| 190 |

+

examples = [

|

| 191 |

+

[

|

| 192 |

+

0.1,

|

| 193 |

+

2000,

|

| 194 |

+

0.015,

|

| 195 |

+

"disk+lightglue",

|

| 196 |

+

"datasets/sacre_coeur/mapping/71295362_4051449754.jpg",

|

| 197 |

+

"datasets/sacre_coeur/mapping/93341989_396310999.jpg",

|

| 198 |

+

],

|

| 199 |

+

[

|

| 200 |

+

0.1,

|

| 201 |

+

2000,

|

| 202 |

+

0.015,

|

| 203 |

+

"loftr",

|

| 204 |

+

"datasets/sacre_coeur/mapping/03903474_1471484089.jpg",

|

| 205 |

+

"datasets/sacre_coeur/mapping/02928139_3448003521.jpg",

|

| 206 |

+

],

|

| 207 |

+

[

|

| 208 |

+

0.1,

|

| 209 |

+

2000,

|

| 210 |

+

0.015,

|

| 211 |

+

"disk",

|

| 212 |

+

"datasets/sacre_coeur/mapping/10265353_3838484249.jpg",

|

| 213 |

+

"datasets/sacre_coeur/mapping/51091044_3486849416.jpg",

|

| 214 |

+

],

|

| 215 |

+

[

|

| 216 |

+

0.1,

|

| 217 |

+

2000,

|

| 218 |

+

0.015,

|

| 219 |

+

"topicfm",

|

| 220 |

+

"datasets/sacre_coeur/mapping/44120379_8371960244.jpg",

|

| 221 |

+

"datasets/sacre_coeur/mapping/93341989_396310999.jpg",

|

| 222 |

+

],

|

| 223 |

+

[

|

| 224 |

+

0.1,

|

| 225 |

+

2000,

|

| 226 |

+

0.015,

|

| 227 |

+

"superpoint+superglue",

|

| 228 |

+

"datasets/sacre_coeur/mapping/17295357_9106075285.jpg",

|

| 229 |

+

"datasets/sacre_coeur/mapping/44120379_8371960244.jpg",

|

| 230 |

+

],

|

| 231 |

+

]

|

| 232 |

+

# Example inputs

|

| 233 |

+

gr.Examples(

|

| 234 |

+

examples=examples,

|

| 235 |

+

inputs=inputs,

|

| 236 |

+

outputs=[],

|

| 237 |

+

fn=run_matching,

|

| 238 |

+

cache_examples=False,

|

| 239 |

+

label="Examples (click one of the images below to Run Match)",

|

| 240 |

+

)

|

| 241 |

+

|

| 242 |

+

with gr.Column():

|

| 243 |

+

output_mkpts = gr.Image(label="Keypoints Matching", type="numpy")

|

| 244 |

+

matches_result_info = gr.JSON(label="Matches Statistics")

|

| 245 |

+

matcher_info = gr.JSON(label="Match info")

|

| 246 |

+

|

| 247 |

+

# callbacks

|

| 248 |

+

match_image_src.change(

|

| 249 |

+

fn=ui_change_imagebox, inputs=match_image_src, outputs=input_image0

|

| 250 |

+

)

|

| 251 |

+

match_image_src.change(

|

| 252 |

+

fn=ui_change_imagebox, inputs=match_image_src, outputs=input_image1

|

| 253 |

+

)

|

| 254 |

+

|

| 255 |

+

# collect outputs

|

| 256 |

+

outputs = [

|

| 257 |

+

output_mkpts,

|

| 258 |

+

matches_result_info,

|

| 259 |

+

matcher_info,

|

| 260 |

+

]

|

| 261 |

+

# button callbacks

|

| 262 |

+

button_run.click(fn=run_matching, inputs=inputs, outputs=outputs)

|

| 263 |

+

|

| 264 |

+

# Reset images

|

| 265 |

+

reset_outputs = [

|

| 266 |

+

match_setting_threshold,

|

| 267 |

+

match_setting_max_features,

|

| 268 |

+

detect_keypoints_threshold,

|

| 269 |

+

matcher_list,

|

| 270 |

+

input_image0,

|

| 271 |

+

input_image1,

|

| 272 |

+

input_image0,

|

| 273 |

+

input_image1,

|

| 274 |

+

match_image_src,

|

| 275 |

+

output_mkpts,

|

| 276 |

+

matches_result_info,

|

| 277 |

+

matcher_info,

|

| 278 |

+

]

|

| 279 |

+

button_reset.click(fn=ui_reset_state, inputs=inputs, outputs=reset_outputs)

|

| 280 |

+

|

| 281 |

+

app.launch(share=True)

|

| 282 |

+

|

| 283 |

+

|

| 284 |

+

if __name__ == "__main__":

|

| 285 |

+

parser = argparse.ArgumentParser()

|

| 286 |

+

parser.add_argument(

|

| 287 |

+

"--config_path", type=str, default="config.yaml", help="configuration file path"

|

| 288 |

+

)

|

| 289 |

+

args = parser.parse_args()

|

| 290 |

+

config = None

|

| 291 |

+

run(config)

|

assets/demo.gif

ADDED

|

Git LFS Details

|

assets/gui.jpg

ADDED

|

datasets/.gitignore

ADDED

|

File without changes

|

datasets/lines/terrace0.JPG

ADDED

|

|

datasets/lines/terrace1.JPG

ADDED

|

|

datasets/sacre_coeur/README.md

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Sacre Coeur demo

|

| 2 |

+

|

| 3 |

+

We provide here a subset of images depicting the Sacre Coeur. These images were obtained from the [Image Matching Challenge 2021](https://www.cs.ubc.ca/research/image-matching-challenge/2021/data/) and were originally collected by the [Yahoo Flickr Creative Commons 100M (YFCC) dataset](https://multimediacommons.wordpress.com/yfcc100m-core-dataset/).

|

datasets/sacre_coeur/mapping/02928139_3448003521.jpg

ADDED

|

datasets/sacre_coeur/mapping/03903474_1471484089.jpg

ADDED

|

datasets/sacre_coeur/mapping/10265353_3838484249.jpg

ADDED

|

datasets/sacre_coeur/mapping/17295357_9106075285.jpg

ADDED

|

datasets/sacre_coeur/mapping/32809961_8274055477.jpg

ADDED

|

datasets/sacre_coeur/mapping/44120379_8371960244.jpg

ADDED

|

datasets/sacre_coeur/mapping/51091044_3486849416.jpg

ADDED

|

datasets/sacre_coeur/mapping/60584745_2207571072.jpg

ADDED

|

datasets/sacre_coeur/mapping/71295362_4051449754.jpg

ADDED

|

datasets/sacre_coeur/mapping/93341989_396310999.jpg

ADDED

|

extra_utils/__init__.py

ADDED

|

File without changes

|

extra_utils/plotting.py

ADDED

|

@@ -0,0 +1,504 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import bisect

|

| 2 |

+

import numpy as np

|

| 3 |

+

import matplotlib.pyplot as plt

|

| 4 |

+

import matplotlib, os, cv2

|

| 5 |

+

import matplotlib.cm as cm

|

| 6 |

+

from PIL import Image

|

| 7 |

+

import torch.nn.functional as F

|

| 8 |

+

import torch

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

def _compute_conf_thresh(data):

|

| 12 |

+

dataset_name = data["dataset_name"][0].lower()

|

| 13 |

+

if dataset_name == "scannet":

|

| 14 |

+

thr = 5e-4

|

| 15 |

+

elif dataset_name == "megadepth":

|

| 16 |

+

thr = 1e-4

|

| 17 |

+

else:

|

| 18 |

+

raise ValueError(f"Unknown dataset: {dataset_name}")

|

| 19 |

+

return thr

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# --- VISUALIZATION --- #

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

def make_matching_figure(

|

| 26 |

+

img0,

|

| 27 |

+

img1,

|

| 28 |

+

mkpts0,

|

| 29 |

+

mkpts1,

|

| 30 |

+

color,

|

| 31 |

+

titles=None,

|

| 32 |

+

kpts0=None,

|

| 33 |

+

kpts1=None,

|

| 34 |

+

text=[],

|

| 35 |

+

dpi=75,

|

| 36 |

+

path=None,

|

| 37 |

+

pad=0,

|

| 38 |

+

):

|

| 39 |

+

# draw image pair

|

| 40 |

+

# assert mkpts0.shape[0] == mkpts1.shape[0], f'mkpts0: {mkpts0.shape[0]} v.s. mkpts1: {mkpts1.shape[0]}'

|

| 41 |

+

fig, axes = plt.subplots(1, 2, figsize=(10, 6), dpi=dpi)

|

| 42 |

+

axes[0].imshow(img0) # , cmap='gray')

|

| 43 |

+

axes[1].imshow(img1) # , cmap='gray')

|

| 44 |

+

for i in range(2): # clear all frames

|

| 45 |

+

axes[i].get_yaxis().set_ticks([])

|

| 46 |

+

axes[i].get_xaxis().set_ticks([])

|

| 47 |

+

for spine in axes[i].spines.values():

|

| 48 |

+

spine.set_visible(False)

|

| 49 |

+

if titles is not None:

|

| 50 |

+

axes[i].set_title(titles[i])

|

| 51 |

+

|

| 52 |

+

plt.tight_layout(pad=pad)

|

| 53 |

+

|

| 54 |

+

if kpts0 is not None:

|

| 55 |

+

assert kpts1 is not None

|

| 56 |

+

axes[0].scatter(kpts0[:, 0], kpts0[:, 1], c="w", s=5)

|

| 57 |

+

axes[1].scatter(kpts1[:, 0], kpts1[:, 1], c="w", s=5)

|

| 58 |

+

|

| 59 |

+

# draw matches

|

| 60 |

+

if mkpts0.shape[0] != 0 and mkpts1.shape[0] != 0:

|

| 61 |

+

fig.canvas.draw()

|

| 62 |

+

transFigure = fig.transFigure.inverted()

|

| 63 |

+

fkpts0 = transFigure.transform(axes[0].transData.transform(mkpts0))

|

| 64 |

+

fkpts1 = transFigure.transform(axes[1].transData.transform(mkpts1))

|

| 65 |

+

fig.lines = [

|

| 66 |

+

matplotlib.lines.Line2D(

|

| 67 |

+

(fkpts0[i, 0], fkpts1[i, 0]),

|

| 68 |

+

(fkpts0[i, 1], fkpts1[i, 1]),

|

| 69 |

+

transform=fig.transFigure,

|

| 70 |

+

c=color[i],

|

| 71 |

+

linewidth=2,

|

| 72 |

+

)

|

| 73 |

+

for i in range(len(mkpts0))

|

| 74 |

+

]

|

| 75 |

+

|

| 76 |

+

# freeze the axes to prevent the transform to change

|

| 77 |

+

axes[0].autoscale(enable=False)

|

| 78 |

+

axes[1].autoscale(enable=False)

|

| 79 |

+

|

| 80 |

+

axes[0].scatter(mkpts0[:, 0], mkpts0[:, 1], c=color[..., :3], s=4)

|

| 81 |

+

axes[1].scatter(mkpts1[:, 0], mkpts1[:, 1], c=color[..., :3], s=4)

|

| 82 |

+

|

| 83 |

+

# put txts

|

| 84 |

+

txt_color = "k" if img0[:100, :200].mean() > 200 else "w"

|

| 85 |

+

fig.text(

|

| 86 |

+

0.01,

|

| 87 |

+

0.99,

|

| 88 |

+

"\n".join(text),

|

| 89 |

+

transform=fig.axes[0].transAxes,

|

| 90 |

+

fontsize=15,

|

| 91 |

+

va="top",

|

| 92 |

+

ha="left",

|

| 93 |

+

color=txt_color,

|

| 94 |

+

)

|

| 95 |

+

|

| 96 |

+

# save or return figure

|

| 97 |

+

if path:

|

| 98 |

+

plt.savefig(str(path), bbox_inches="tight", pad_inches=0)

|

| 99 |

+

plt.close()

|

| 100 |

+

else:

|

| 101 |

+

return fig

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

def _make_evaluation_figure(data, b_id, alpha="dynamic"):

|

| 105 |

+

b_mask = data["m_bids"] == b_id

|

| 106 |

+

conf_thr = _compute_conf_thresh(data)

|

| 107 |

+

|

| 108 |

+

img0 = (data["image0"][b_id][0].cpu().numpy() * 255).round().astype(np.int32)

|

| 109 |

+

img1 = (data["image1"][b_id][0].cpu().numpy() * 255).round().astype(np.int32)

|

| 110 |

+

kpts0 = data["mkpts0_f"][b_mask].cpu().numpy()

|

| 111 |

+

kpts1 = data["mkpts1_f"][b_mask].cpu().numpy()

|

| 112 |

+

|

| 113 |

+

# for megadepth, we visualize matches on the resized image

|

| 114 |

+

if "scale0" in data:

|

| 115 |

+

kpts0 = kpts0 / data["scale0"][b_id].cpu().numpy()[[1, 0]]

|

| 116 |

+

kpts1 = kpts1 / data["scale1"][b_id].cpu().numpy()[[1, 0]]

|

| 117 |

+

|

| 118 |

+

epi_errs = data["epi_errs"][b_mask].cpu().numpy()

|

| 119 |

+

correct_mask = epi_errs < conf_thr

|

| 120 |

+

precision = np.mean(correct_mask) if len(correct_mask) > 0 else 0

|

| 121 |

+

n_correct = np.sum(correct_mask)

|

| 122 |

+

n_gt_matches = int(data["conf_matrix_gt"][b_id].sum().cpu())

|

| 123 |

+

recall = 0 if n_gt_matches == 0 else n_correct / (n_gt_matches)

|

| 124 |

+

# recall might be larger than 1, since the calculation of conf_matrix_gt

|

| 125 |

+

# uses groundtruth depths and camera poses, but epipolar distance is used here.

|

| 126 |

+

|

| 127 |

+

# matching info

|

| 128 |

+

if alpha == "dynamic":

|

| 129 |

+

alpha = dynamic_alpha(len(correct_mask))

|

| 130 |

+

color = error_colormap(epi_errs, conf_thr, alpha=alpha)

|

| 131 |

+

|

| 132 |

+

text = [

|

| 133 |

+

f"#Matches {len(kpts0)}",

|

| 134 |

+

f"Precision({conf_thr:.2e}) ({100 * precision:.1f}%): {n_correct}/{len(kpts0)}",

|

| 135 |

+

f"Recall({conf_thr:.2e}) ({100 * recall:.1f}%): {n_correct}/{n_gt_matches}",

|

| 136 |

+

]

|

| 137 |

+

|

| 138 |

+

# make the figure

|

| 139 |

+

figure = make_matching_figure(img0, img1, kpts0, kpts1, color, text=text)

|

| 140 |

+

return figure

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

def _make_confidence_figure(data, b_id):

|

| 144 |

+

# TODO: Implement confidence figure

|

| 145 |

+

raise NotImplementedError()

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

def make_matching_figures(data, config, mode="evaluation"):

|

| 149 |

+

"""Make matching figures for a batch.

|

| 150 |

+

|

| 151 |

+

Args:

|

| 152 |

+

data (Dict): a batch updated by PL_LoFTR.

|

| 153 |

+

config (Dict): matcher config

|

| 154 |

+

Returns:

|

| 155 |

+

figures (Dict[str, List[plt.figure]]

|

| 156 |

+

"""

|

| 157 |

+

assert mode in ["evaluation", "confidence"] # 'confidence'

|

| 158 |

+

figures = {mode: []}

|

| 159 |

+

for b_id in range(data["image0"].size(0)):

|

| 160 |

+

if mode == "evaluation":

|

| 161 |

+

fig = _make_evaluation_figure(

|

| 162 |

+

data, b_id, alpha=config.TRAINER.PLOT_MATCHES_ALPHA

|

| 163 |

+

)

|

| 164 |

+

elif mode == "confidence":

|

| 165 |

+

fig = _make_confidence_figure(data, b_id)

|

| 166 |

+

else:

|

| 167 |

+

raise ValueError(f"Unknown plot mode: {mode}")

|

| 168 |

+

figures[mode].append(fig)

|

| 169 |

+

return figures

|

| 170 |

+

|

| 171 |

+

|

| 172 |

+

def dynamic_alpha(

|

| 173 |

+

n_matches, milestones=[0, 300, 1000, 2000], alphas=[1.0, 0.8, 0.4, 0.2]

|

| 174 |

+

):

|

| 175 |

+

if n_matches == 0:

|

| 176 |

+

return 1.0

|

| 177 |

+

ranges = list(zip(alphas, alphas[1:] + [None]))

|

| 178 |

+

loc = bisect.bisect_right(milestones, n_matches) - 1

|

| 179 |

+

_range = ranges[loc]

|

| 180 |

+

if _range[1] is None:

|

| 181 |

+

return _range[0]

|

| 182 |

+

return _range[1] + (milestones[loc + 1] - n_matches) / (

|

| 183 |

+

milestones[loc + 1] - milestones[loc]

|

| 184 |

+

) * (_range[0] - _range[1])

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

def error_colormap(err, thr, alpha=1.0):

|

| 188 |

+

assert alpha <= 1.0 and alpha > 0, f"Invaid alpha value: {alpha}"

|

| 189 |

+

x = 1 - np.clip(err / (thr * 2), 0, 1)

|

| 190 |

+

return np.clip(

|

| 191 |

+

np.stack([2 - x * 2, x * 2, np.zeros_like(x), np.ones_like(x) * alpha], -1),

|

| 192 |

+

0,

|

| 193 |

+

1,

|

| 194 |

+

)

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

np.random.seed(1995)

|

| 198 |

+

color_map = np.arange(100)

|

| 199 |

+

np.random.shuffle(color_map)

|

| 200 |

+

|

| 201 |

+

|

| 202 |

+

def draw_topics(

|

| 203 |

+

data, img0, img1, saved_folder="viz_topics", show_n_topics=8, saved_name=None

|

| 204 |

+

):

|

| 205 |

+

|

| 206 |

+

topic0, topic1 = data["topic_matrix"]["img0"], data["topic_matrix"]["img1"]

|

| 207 |

+

hw0_c, hw1_c = data["hw0_c"], data["hw1_c"]

|

| 208 |

+

hw0_i, hw1_i = data["hw0_i"], data["hw1_i"]

|

| 209 |

+

# print(hw0_i, hw1_i)

|

| 210 |

+

scale0, scale1 = hw0_i[0] // hw0_c[0], hw1_i[0] // hw1_c[0]

|

| 211 |

+

if "scale0" in data:

|

| 212 |

+

scale0 *= data["scale0"][0]

|

| 213 |

+

else:

|

| 214 |

+

scale0 = (scale0, scale0)

|

| 215 |

+

if "scale1" in data:

|

| 216 |

+

scale1 *= data["scale1"][0]

|

| 217 |

+

else:

|

| 218 |

+

scale1 = (scale1, scale1)

|

| 219 |

+

|

| 220 |

+

n_topics = topic0.shape[-1]

|

| 221 |

+

# mask0_nonzero = topic0[0].sum(dim=-1, keepdim=True) > 0

|

| 222 |

+

# mask1_nonzero = topic1[0].sum(dim=-1, keepdim=True) > 0

|

| 223 |

+

theta0 = topic0[0].sum(dim=0)

|

| 224 |

+

theta0 /= theta0.sum().float()

|

| 225 |

+

theta1 = topic1[0].sum(dim=0)

|

| 226 |

+

theta1 /= theta1.sum().float()

|

| 227 |

+

# top_topic0 = torch.argsort(theta0, descending=True)[:show_n_topics]

|

| 228 |

+

# top_topic1 = torch.argsort(theta1, descending=True)[:show_n_topics]

|

| 229 |

+

top_topics = torch.argsort(theta0 * theta1, descending=True)[:show_n_topics]

|

| 230 |

+

# print(sum_topic0, sum_topic1)

|

| 231 |

+

|

| 232 |

+

topic0 = topic0[0].argmax(

|

| 233 |

+

dim=-1, keepdim=True

|

| 234 |

+

) # .float() / (n_topics - 1) #* 255 + 1 #

|

| 235 |

+

# topic0[~mask0_nonzero] = -1

|

| 236 |

+

topic1 = topic1[0].argmax(

|

| 237 |

+

dim=-1, keepdim=True

|

| 238 |

+

) # .float() / (n_topics - 1) #* 255 + 1

|

| 239 |

+

# topic1[~mask1_nonzero] = -1

|

| 240 |

+

label_img0, label_img1 = torch.zeros_like(topic0) - 1, torch.zeros_like(topic1) - 1

|

| 241 |

+

for i, k in enumerate(top_topics):

|

| 242 |

+

label_img0[topic0 == k] = color_map[k]

|

| 243 |

+

label_img1[topic1 == k] = color_map[k]

|

| 244 |

+

|

| 245 |

+

# print(hw0_c, scale0)

|

| 246 |

+

# print(hw1_c, scale1)

|

| 247 |

+

# map_topic0 = F.fold(label_img0.unsqueeze(0), hw0_i, kernel_size=scale0, stride=scale0)

|

| 248 |

+

map_topic0 = (

|

| 249 |

+

label_img0.float().view(hw0_c).cpu().numpy()

|

| 250 |

+

) # map_topic0.squeeze(0).squeeze(0).cpu().numpy()

|

| 251 |

+

map_topic0 = cv2.resize(

|

| 252 |

+

map_topic0, (int(hw0_c[1] * scale0[0]), int(hw0_c[0] * scale0[1]))

|

| 253 |

+

)

|

| 254 |

+

# map_topic1 = F.fold(label_img1.unsqueeze(0), hw1_i, kernel_size=scale1, stride=scale1)

|

| 255 |

+

map_topic1 = (

|

| 256 |

+

label_img1.float().view(hw1_c).cpu().numpy()

|

| 257 |

+

) # map_topic1.squeeze(0).squeeze(0).cpu().numpy()

|

| 258 |

+

map_topic1 = cv2.resize(

|

| 259 |

+

map_topic1, (int(hw1_c[1] * scale1[0]), int(hw1_c[0] * scale1[1]))

|

| 260 |

+

)

|

| 261 |

+

|

| 262 |

+

# show image0

|

| 263 |

+

if saved_name is None:

|

| 264 |

+

return map_topic0, map_topic1

|

| 265 |

+

|

| 266 |

+

if not os.path.exists(saved_folder):

|

| 267 |

+

os.makedirs(saved_folder)

|

| 268 |

+

path_saved_img0 = os.path.join(saved_folder, "{}_0.png".format(saved_name))

|

| 269 |

+

plt.imshow(img0)

|

| 270 |

+

masked_map_topic0 = np.ma.masked_where(map_topic0 < 0, map_topic0)

|

| 271 |

+

plt.imshow(

|

| 272 |

+

masked_map_topic0,

|

| 273 |

+