
Vincentqyw

commited on

Commit

·

42dde81

1

Parent(s):

e15a186

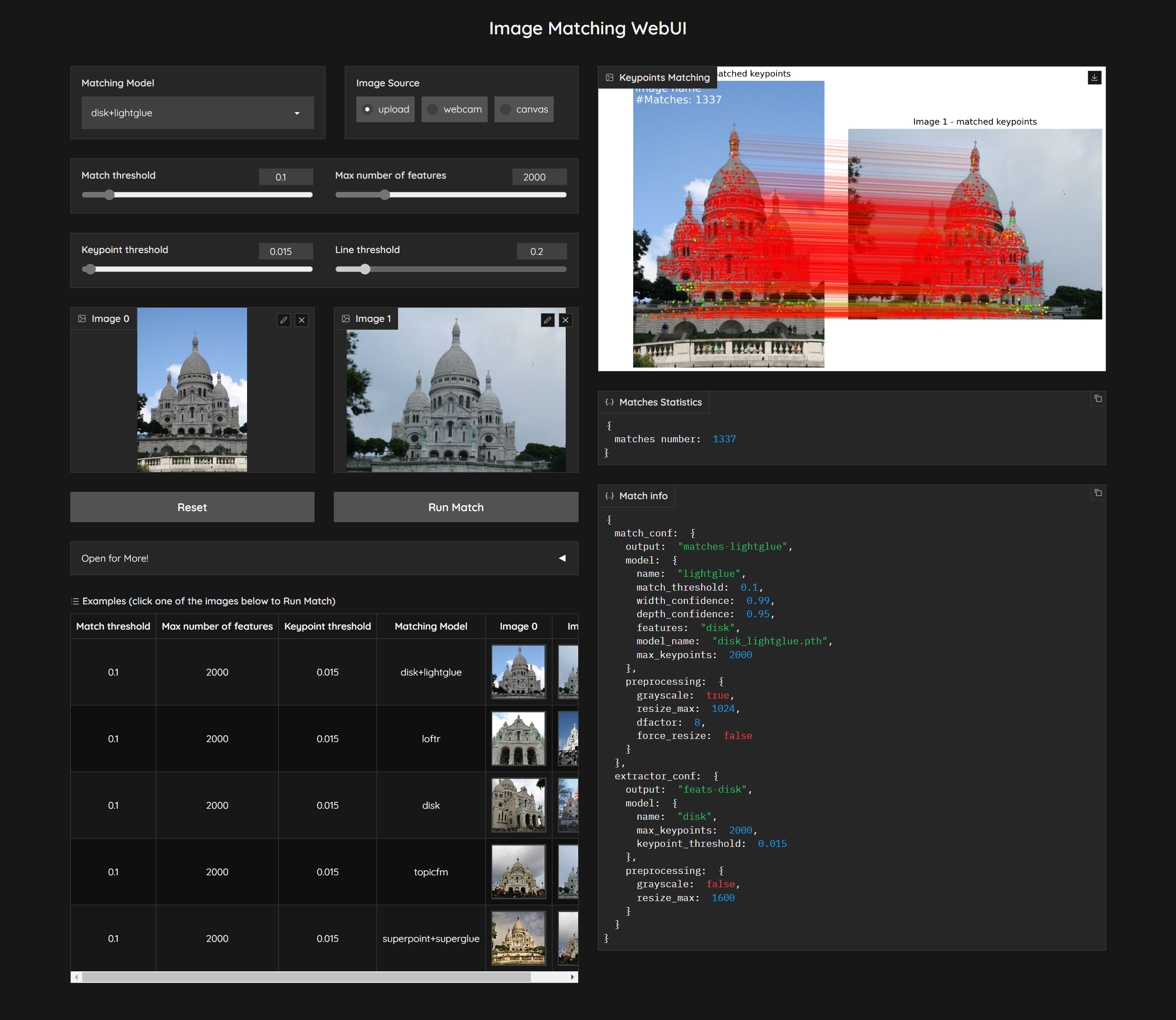

add: keypoints

Browse files- README.md +10 -3

- app.py +26 -15

- assets/gui.jpg +0 -0

- common/utils.py +63 -19

- hloc/match_dense.py +2 -2

- hloc/match_features.py +10 -6

README.md (changed)

````diff
@@ -30,7 +30,7 @@ Here is a demo of the tool:
 
 The tool currently supports various popular image matching algorithms, namely:
 - [x] [LightGlue](https://github.com/cvg/LightGlue), ICCV 2023
-- [x] [DeDoDe](https://github.com/Parskatt/DeDoDe),
+- [x] [DeDoDe](https://github.com/Parskatt/DeDoDe), ArXiv 2023
 - [x] [DarkFeat](https://github.com/THU-LYJ-Lab/DarkFeat), AAAI 2023
 - [ ] [ASTR](https://github.com/ASTR2023/ASTR), CVPR 2023
 - [ ] [SEM](https://github.com/SEM2023/SEM), CVPR 2023
@@ -61,7 +61,13 @@ The tool currently supports various popular image matching algorithms, namely:
 
 ## How to use
 
-###
+### HuggingFace
+
+Just try it on HF <a href='https://huggingface.co/spaces/Realcat/image-matching-webui'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Spaces-blue'> [](https://openxlab.org.cn/apps/detail/Realcat/image-matching-webui)
+
+or deploy it locally following the instructions below.
+
+### Requirements
 ``` bash
 git clone --recursive https://github.com/Vincentqyw/image-matching-webui.git
 cd image-matching-webui
@@ -88,7 +94,8 @@ External contributions are very much welcome. Please follow the [PEP8 style guid
 - [x] add webcam support
 - [x] add [line feature matching](https://github.com/Vincentqyw/LineSegmentsDetection) algorithms
 - [x] example to add a new feature extractor / matcher
-- [
+- [x] ransac to filter outliers
+- [ ] add [rotation images](https://github.com/pidahbus/deep-image-orientation-angle-detection) options before matching
 - [ ] support export matches to colmap ([#issue 6](https://github.com/Vincentqyw/image-matching-webui/issues/6))
 - [ ] add config file to set default parameters
 - [ ] dynamically load models and reduce GPU overload
````

app.py (changed)

````diff
@@ -28,7 +28,7 @@ def ui_reset_state(
 extract_max_keypoints,
 keypoint_threshold,
 key,
-enable_ransac=False,
+# enable_ransac=False,
 ransac_method="RANSAC",
 ransac_reproj_threshold=8,
 ransac_confidence=0.999,
@@ -41,7 +41,7 @@ def ui_reset_state(
 key = list(matcher_zoo.keys())[0]
 image0 = None
 image1 = None
-enable_ransac = False
+# enable_ransac = False
 return (
     image0,
     image1,
@@ -52,12 +52,14 @@ def ui_reset_state(
 ui_change_imagebox("upload"),
 ui_change_imagebox("upload"),
 "upload",
-None,
+None, # keypoints
+None, # raw matches
+None, # ransac matches
 {},
 {},
 None,
 {},
-False,
+# False,
 "RANSAC",
 8,
 0.999,
@@ -145,7 +147,7 @@ def run(config):
 # )
 with gr.Accordion("RANSAC Setting", open=True):
     with gr.Row(equal_height=False):
-        enable_ransac = gr.Checkbox(label="Enable RANSAC")
+        # enable_ransac = gr.Checkbox(label="Enable RANSAC")
         ransac_method = gr.Dropdown(
             choices=ransac_zoo.keys(),
             value="RANSAC",
@@ -192,7 +194,7 @@ def run(config):
 match_setting_max_features,
 detect_keypoints_threshold,
 matcher_list,
-enable_ransac,
+# enable_ransac,
 ransac_method,
 ransac_reproj_threshold,
 ransac_confidence,
@@ -223,18 +225,23 @@ def run(config):
 )
 
 with gr.Column():
-
-
+    output_keypoints = gr.Image(label="Keypoints", type="numpy")
+    output_matches_raw = gr.Image(label="Raw Matches", type="numpy")
+    output_matches_ransac = gr.Image(
+        label="Ransac Matches", type="numpy"
     )
     with gr.Accordion(
-        "Open for More: Matches Statistics", open=
+        "Open for More: Matches Statistics", open=False
     ):
         matches_result_info = gr.JSON(label="Matches Statistics")
         matcher_info = gr.JSON(label="Match info")
 
-    with gr.Accordion("Open for More:
-        output_wrapped = gr.Image(
-
+    with gr.Accordion("Open for More: Warped Image", open=False):
+        output_wrapped = gr.Image(
+            label="Wrapped Pair", type="numpy"
+        )
+    with gr.Accordion("Open for More: Geometry info", open=False):
+        geometry_result = gr.JSON(label="Reconstructed Geometry")
 
 # callbacks
 match_image_src.change(
@@ -250,7 +257,9 @@ def run(config):
 
 # collect outputs
 outputs = [
-
+    output_keypoints,
+    output_matches_raw,
+    output_matches_ransac,
     matches_result_info,
     matcher_info,
     geometry_result,
@@ -270,12 +279,14 @@ def run(config):
 input_image0,
 input_image1,
 match_image_src,
-
+output_keypoints,
+output_matches_raw,
+output_matches_ransac,
 matches_result_info,
 matcher_info,
 output_wrapped,
 geometry_result,
-enable_ransac,
+# enable_ransac,
 ransac_method,
 ransac_reproj_threshold,
 ransac_confidence,
````
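Gradio assigns the values a callback returns to its `outputs` components by position, which is why this commit edits `ui_reset_state`'s return tuple and the output lists in lockstep: three `None` placeholders for the new image panels, and the `enable_ransac` value commented out in both places. The two sequences visible in the diff line up one-to-one, assuming the list around new line 279 is the one wired to `ui_reset_state`. The `bind_outputs` helper below is hypothetical (not part of the repository or of Gradio); it is a framework-free sketch of that positional contract.

```python
from typing import Any, Dict, Sequence


def bind_outputs(names: Sequence[str], values: Sequence[Any]) -> Dict[str, Any]:
    """Pair output component names with callback return values by position."""
    if len(names) != len(values):
        raise ValueError(
            f"callback returned {len(values)} values for {len(names)} outputs"
        )
    return dict(zip(names, values))


# Tail of the reset output list in app.py after this commit (order from the diff).
RESET_OUTPUTS = [
    "match_image_src",
    "output_keypoints",
    "output_matches_raw",
    "output_matches_ransac",
    "matches_result_info",
    "matcher_info",
    "output_wrapped",
    "geometry_result",
    "ransac_method",
    "ransac_reproj_threshold",
    "ransac_confidence",
]

# Matching tail of the tuple returned by ui_reset_state after this commit.
RESET_VALUES = ("upload", None, None, None, {}, {}, None, {}, "RANSAC", 8, 0.999)

state = bind_outputs(RESET_OUTPUTS, RESET_VALUES)
print(state["output_matches_ransac"], state["ransac_method"])  # None RANSAC

# Keeping the old `False` (for the removed checkbox) would break the alignment.
try:
    bind_outputs(RESET_OUTPUTS, RESET_VALUES[:8] + (False,) + RESET_VALUES[8:])
except ValueError as err:
    print(err)  # callback returned 12 values for 11 outputs
```

A length check like this fails loudly instead of silently shifting every value into the wrong panel, which is the failure mode a one-sided edit to either list would produce.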

assets/gui.jpg (changed; binary file stored with Git LFS)

common/utils.py (changed)

````diff
@@ -8,6 +8,7 @@ import gradio as gr
 from hloc import matchers, extractors
 from hloc.utils.base_model import dynamic_load
 from hloc import match_dense, match_features, extract_features
+from hloc.utils.viz import add_text, plot_keypoints
 from .viz import draw_matches, fig2im, plot_images, plot_color_line_matches
 
 device = "cuda" if torch.cuda.is_available() else "cpu"
@@ -68,7 +69,7 @@ def gen_examples():
 match_setting_max_features,
 detect_keypoints_threshold,
 mt,
-enable_ransac,
+# enable_ransac,
 ransac_method,
 ransac_reproj_threshold,
 ransac_confidence,
@@ -105,6 +106,9 @@ def filter_matches(
 return pred
 if ransac_method not in ransac_zoo.keys():
     ransac_method = "RANSAC"
+
+if len(mkpts0) < 4:
+    return pred
 H, mask = cv2.findHomography(
     mkpts0,
     mkpts1,
@@ -236,7 +240,7 @@ def change_estimate_geom(input_image0, input_image1, matches_info, choice):
 return None, None
 
 
-def display_matches(pred: dict):
+def display_matches(pred: dict, titles=[], dpi=300):
     img0 = pred["image0_orig"]
     img1 = pred["image1_orig"]
 
@@ -255,11 +259,8 @@ def display_matches(pred: dict):
 img0,
 img1,
 mconf,
-dpi=
-titles=
-    "Image 0 - matched keypoints",
-    "Image 1 - matched keypoints",
-],
+dpi=dpi,
+titles=titles,
 )
 fig = fig_mkpts
 if "line0_orig" in pred.keys() and "line1_orig" in pred.keys():
@@ -302,7 +303,7 @@ def run_matching(
 extract_max_keypoints,
 keypoint_threshold,
 key,
-enable_ransac=False,
+# enable_ransac=False,
 ransac_method="RANSAC",
 ransac_reproj_threshold=8,
 ransac_confidence=0.999,
@@ -312,6 +313,10 @@ def run_matching(
 # image0 and image1 is RGB mode
 if image0 is None or image1 is None:
     raise gr.Error("Error: No images found! Please upload two images.")
+# init output
+output_keypoints = None
+output_matches_raw = None
+output_matches_ransac = None
 
 model = matcher_zoo[key]
 match_conf = model["config"]
@@ -341,16 +346,48 @@ def run_matching(
 pred = match_features.match_images(matcher, pred0, pred1)
 del extractor
 
-
-
-
-
-
-
-
-
+# plot images with keypoints
+titles = [
+    "Image 0 - Keypoints",
+    "Image 1 - Keypoints",
+]
+output_keypoints = plot_images([image0, image1], titles=titles, dpi=300)
+plot_keypoints([pred["keypoints0"], pred["keypoints1"]])
+text = (
+    f"# keypoints0: {len(pred['keypoints0'])} \n"
+    + f"# keypoints1: {len(pred['keypoints1'])}"
+)
+
+add_text(0, text, fs=15)
+output_keypoints = fig2im(output_keypoints)
+
+# plot images with raw matches
+titles = [
+    "Image 0 - Raw matched keypoints",
+    "Image 1 - Raw matched keypoints",
+]
+
+output_matches_raw, num_matches_raw = display_matches(pred, titles=titles)
 
-
+# if enable_ransac:
+filter_matches(
+    pred,
+    ransac_method=ransac_method,
+    ransac_reproj_threshold=ransac_reproj_threshold,
+    ransac_confidence=ransac_confidence,
+    ransac_max_iter=ransac_max_iter,
+)
+
+# plot images with ransac matches
+titles = [
+    "Image 0 - Ransac matched keypoints",
+    "Image 1 - Ransac matched keypoints",
+]
+output_matches_ransac, num_matches_ransac = display_matches(
+    pred, titles=titles
+)
+
+# plot wrapped images
 geom_info = compute_geom(pred)
 output_wrapped, _ = change_estimate_geom(
     pred["image0_orig"],
@@ -358,10 +395,17 @@ def run_matching(
     {"geom_info": geom_info},
     choice_estimate_geom,
 )
+
 del pred
+
 return (
-
-
+    output_keypoints,
+    output_matches_raw,
+    output_matches_ransac,
+    {
+        "number raw matches": num_matches_raw,
+        "number ransac matches": num_matches_ransac,
+    },
     {
         "match_conf": match_conf,
         "extractor_conf": extract_conf,
````
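The guard `if len(mkpts0) < 4: return pred` added to `filter_matches` reflects a hard minimum: a homography has eight degrees of freedom and each point correspondence fixes two, so `cv2.findHomography` needs at least four matches. The sketch below is a pure-Python stand-in for that call, not the repository's code. It solves the 4-point system directly, counts a match as an inlier when its reprojection distance is within a threshold (the role of `ransac_reproj_threshold`), and returns the inlier mask that separates the "raw" from the "RANSAC" match counts. For brevity it runs a fixed number of iterations; OpenCV additionally adapts the iteration count to the requested confidence and refines the result on the inliers.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> Optional[List[float]]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return None  # singular system: degenerate sample
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4(src: Sequence[Point], dst: Sequence[Point]) -> Optional[Matrix]:
    """Solve for H (normalised so H[2][2] == 1) from exactly 4 correspondences."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return None if h is None else [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(h: Matrix, p: Point) -> Optional[Point]:
    """Apply H to a point in homogeneous coordinates."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return None  # point maps to infinity
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def ransac_homography(mkpts0, mkpts1, reproj_threshold=8.0, max_iter=1000, seed=0):
    """Return (H, inlier_mask), or (None, None) when fewer than 4 matches exist."""
    n = len(mkpts0)
    if n < 4:  # same minimum that the commit's guard enforces
        return None, None
    rng = random.Random(seed)
    best_h, best_mask = None, [False] * n
    for _ in range(max_iter):
        idx = rng.sample(range(n), 4)
        h = homography_from_4([mkpts0[i] for i in idx], [mkpts1[i] for i in idx])
        if h is None:
            continue
        mask = []
        for p, q in zip(mkpts0, mkpts1):
            r = project(h, p)
            err2 = float("inf") if r is None else (r[0] - q[0]) ** 2 + (r[1] - q[1]) ** 2
            mask.append(err2 <= reproj_threshold ** 2)
        if sum(mask) > sum(best_mask):
            best_h, best_mask = h, mask
    return best_h, best_mask


# Synthetic matches: 7 follow a known homography, the last one is an outlier.
H_TRUE = [[1.2, 0.1, 10.0], [0.05, 0.9, -4.0], [0.001, 0.0005, 1.0]]
SRC = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0),
       (30.0, 60.0), (70.0, 20.0), (20.0, 45.0), (85.0, 70.0)]
DST = [project(H_TRUE, p) for p in SRC]
DST[-1] = (400.0, -300.0)

H, mask = ransac_homography(SRC, DST, reproj_threshold=3.0, max_iter=200)
print("raw matches:", len(mask), "RANSAC matches:", sum(mask))  # raw matches: 8 RANSAC matches: 7
```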

hloc/match_dense.py (changed)

````diff
@@ -340,8 +340,8 @@ def match_images(model, image_0, image_1, conf, device="cpu"):
 "image1": image1.squeeze().cpu().numpy(),
 "image0_orig": image_0,
 "image1_orig": image_1,
-"keypoints0":
-"keypoints1":
+"keypoints0": kpts0_origin.cpu().numpy(),
+"keypoints1": kpts1_origin.cpu().numpy(),
 "keypoints0_orig": kpts0_origin.cpu().numpy(),
 "keypoints1_orig": kpts1_origin.cpu().numpy(),
 "original_size0": np.array(image_0.shape[:2][::-1]),
````
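After this change, `match_dense.match_images` fills `keypoints0`/`keypoints1` with the same rescaled points it stores under `keypoints0_orig`/`keypoints1_orig`, which for a dense matcher are its matched points; `match_features.match_images` instead keeps all detected keypoints under `keypoints0`/`keypoints1`. The helpers below are hypothetical (not in the repository) and use plain lists in place of NumPy arrays. They sketch that dictionary contract and reproduce the overlay text that `run_matching` passes to `add_text` for the new keypoint panel.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def keypoint_overlay_text(pred: Dict[str, Sequence[Point]]) -> str:
    """Same text format run_matching builds before calling add_text(...)."""
    return (
        f"# keypoints0: {len(pred['keypoints0'])} \n"
        + f"# keypoints1: {len(pred['keypoints1'])}"
    )


def dense_pred(kpts0_origin: Sequence[Point], kpts1_origin: Sequence[Point]) -> Dict[str, List[Point]]:
    """Dense matchers have no separate detection step: keypoints == matched keypoints."""
    return {
        "keypoints0": list(kpts0_origin),
        "keypoints1": list(kpts1_origin),
        "keypoints0_orig": list(kpts0_origin),
        "keypoints1_orig": list(kpts1_origin),
    }


pred = dense_pred([(1.0, 2.0), (3.0, 4.0)], [(1.5, 2.5), (3.5, 4.5)])
print(keypoint_overlay_text(pred))
```

With a dense matcher the keypoint panel and the raw-match panel therefore report the same counts, while detector-based pipelines usually show more keypoints than matches.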

hloc/match_features.py (changed)

````diff
@@ -369,15 +369,19 @@ def match_images(model, feat0, feat1):
 # rescale the keypoints to their original size
 s0 = feat0["original_size"] / feat0["size"]
 s1 = feat1["original_size"] / feat1["size"]
-kpts0_origin = scale_keypoints(torch.from_numpy(
-kpts1_origin = scale_keypoints(torch.from_numpy(
+kpts0_origin = scale_keypoints(torch.from_numpy(kpts0 + 0.5), s0) - 0.5
+kpts1_origin = scale_keypoints(torch.from_numpy(kpts1 + 0.5), s1) - 0.5
+
+mkpts0_origin = scale_keypoints(torch.from_numpy(mkpts0 + 0.5), s0) - 0.5
+mkpts1_origin = scale_keypoints(torch.from_numpy(mkpts1 + 0.5), s1) - 0.5
+
 ret = {
     "image0_orig": feat0["image_orig"],
     "image1_orig": feat1["image_orig"],
-    "keypoints0":
-    "keypoints1":
-    "keypoints0_orig":
-    "keypoints1_orig":
+    "keypoints0": kpts0_origin.numpy(),
+    "keypoints1": kpts1_origin.numpy(),
+    "keypoints0_orig": mkpts0_origin.numpy(),
+    "keypoints1_orig": mkpts1_origin.numpy(),
     "mconf": mconfid,
 }
 del feat0, feat1, desc0, desc1, kpts0, kpts1, kpts0_origin, kpts1_origin
````
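The new lines rescale with `scale_keypoints(k + 0.5, s) - 0.5`. If integer coordinates denote pixel centres, adding 0.5 moves to a corner-origin frame where resizing is a plain multiplication, and subtracting 0.5 moves back; a naive `k * s` would map the centre of pixel 0 in an image downsampled by 2 to 0.0 instead of 0.5, the centre of the corresponding 2x2 block. The commit also separates all detected keypoints (`keypoints0`) from the matched ones (`keypoints0_orig`). The pure-Python sketch below assumes `scale_keypoints` multiplies x and y by the per-axis ratios in `s` (`original_size / size`, stored as width, height), and assumes a hypothetical hloc-style `matches0` list holding the matched index in image 1 or -1; the diff does not show how `mkpts0` is actually built.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def rescale_to_original(kpts: Sequence[Point], scale: Tuple[float, float]) -> List[Point]:
    """Half-pixel-aware rescaling: (k + 0.5) * s - 0.5 on each axis."""
    sx, sy = scale
    return [((x + 0.5) * sx - 0.5, (y + 0.5) * sy - 0.5) for x, y in kpts]


def matched_pairs(kpts0: Sequence[Point], kpts1: Sequence[Point], matches0: Sequence[int]):
    """Hypothetical: matches0[i] is the index into kpts1 matched to kpts0[i], or -1."""
    mkpts0 = [kpts0[i] for i, j in enumerate(matches0) if j >= 0]
    mkpts1 = [kpts1[j] for j in matches0 if j >= 0]
    return mkpts0, mkpts1


# An image resized from 1280x960 to 640x480 for matching gives s = (2.0, 2.0).
print(rescale_to_original([(0.0, 0.0), (10.0, 20.0)], (2.0, 2.0)))  # [(0.5, 0.5), (20.5, 40.5)]

kpts0 = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
kpts1 = [(5.0, 5.0), (6.0, 6.0)]
print(matched_pairs(kpts0, kpts1, [1, -1, 0]))  # ([(0.0, 0.0), (2.0, 2.0)], [(6.0, 6.0), (5.0, 5.0)])
```

Applying the same rescaling to both the full keypoint sets and the matched subsets keeps the keypoint panel and the match panels in the same coordinate frame as the original images.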